This topic describes the terms that are involved in data development in different services of DataWorks, including Data Integration, Data Modeling, DataStudio, DataAnalysis, and DataService Studio.

Common terms

workspace

A workspace is a basic unit used to manage tasks, members, roles, and permissions in DataWorks. The administrator of a workspace can add users to the workspace as members and assign the Workspace Administrator, Develop, O&M, Deploy, Security Administrator, or Visitor role to each member. This way, workspace members to which different roles are assigned can collaborate with each other.

We recommend that you create workspaces by department or business unit to isolate resources.

resource group

A resource group is a basic service that is provided by DataWorks. Resource groups provide computing resources for different services and features of DataWorks. Before you can perform data development operations by using DataWorks, you must have a resource group. The status of a resource group affects the running status of related features. The quota of a resource group affects the running efficiency of tasks or services.

Resource groups in DataWorks are different from the resource groups within your Alibaba Cloud account. A resource group within an Alibaba Cloud account is used to manage the resources of the account by group. This helps simplify the resource and permission management of the account. Resource groups in DataWorks are used to run tasks. You must take note of the differences between the two types of resource groups.

Unless otherwise specified, resource groups used in DataWorks refer to serverless resource groups rather than old-version resource groups. Serverless resource groups can serve general purposes.

Serverless resource groups can be used in data synchronization, data scheduling, and DataService Studio.

basic mode and standard mode

To facilitate data output for users who have different security control requirements, DataWorks provides two workspace modes: basic mode and standard mode. If you add a data source to a workspace in standard mode, two data sources are separately created in the development environment and production environment for data isolation.

computing resource

A computing resource is a resource instance that is used by the related compute engine to run data processing and analysis tasks. For example, a MaxCompute project for which a quota group is configured and a Hologres instance are computing resources.

You can associate multiple computing resources with a workspace. After the association, you can develop and schedule tasks that use these resources within the workspace.

Data Integration

data source

Data sources are used to connect different data storage services. Before you configure a synchronization task, you must define information about the data sources that you want to use in DataWorks. This way, when you configure a synchronization task, you can select the names of data sources to determine the database from which you want to read data and the database to which you want to write data. You can add multiple types of data sources to a workspace.

data synchronization

The data synchronization feature of Data Integration can be used to synchronize structured, semi-structured, and unstructured data. Structured data includes data in ApsaraDB RDS and PolarDB-X 1.0 data sources. Unstructured data includes data in Object Storage Service (OSS) and text files. Data Integration can synchronize only the data that can be abstracted to two-dimensional logical tables. Data Integration cannot synchronize fully unstructured data, such as MP3 files, in OSS. The data synchronization feature of Data Integration supports multiple synchronization methods, such as batch synchronization, real-time synchronization, and synchronization of full and incremental data in a single table or a database. In addition, the feature also supports serverless synchronization tasks. When you configure a serverless synchronization task, you do not need to configure a resource group for the task. This way, you can focus only on your business.

Data Modeling

data modeling

With the rapid development of enterprise business, the amount of business data exponentially grows, the data complexity increases, and various inconsistent data standards appear. This significantly increases the difficulty in data management. To resolve this issue, DataWorks provides Data Modeling to help structure and manage large amounts of disordered and complex data. Data Modeling helps enterprises obtain more value from business data.

reverse modeling

Reverse modeling allows you to generate models based on existing physical tables. This way, you do not need to manually create tables to generate models in Dimensional Modeling. This helps reduce the period of time that is required to generate models.

modeling workspace

If your data system involves multiple workspaces and you want to apply the same data warehouse plan to the workspaces, you can use the modeling workspace feature to share the same suit of data modeling tools among the workspaces. This allows you to plan data warehouses, perform dimensional modeling, and define metrics for the workspaces in a unified manner.

dimension table

A dimension table is used to store a dimension and the attributes of the dimension. The dimension is extracted from a data domain based on your data domain planning and may be used to analyze data in the data domain. For example, when you analyze e-commerce business data, possible dimensions include order, user, and commodity. The possible attributes of the order dimension include order ID, order creation time, buyer ID, and seller ID. The possible attributes of the user dimension include gender and birthdate. The possible attributes of the commodity dimension include commodity ID, commodity name, and commodity put-on-shelf time. You can create the following dimension tables: order dimension table, user dimension table, and commodity dimension table. The attributes of each dimension are used as the fields in the dimension table.

fact table

A fact table is used to store the actual data that reflects the situation of a business activity. The actual data may be generated in different business processes and organized and analyzed based on the planning of business processes. For example, you can create a fact table for the business process of order placing, and record the following information as fields in the fact table: order ID, order creation time, commodity ID, number of commodities, and sales amount. You can deploy the fact table in a data warehouse and perform extract, transform, load (ETL) operations to summarize and store data in the format defined in the fact table. This allows business personnel to access the data for subsequent data analysis.

aggregate table

An aggregate table is used to store the statistical data of multiple derived metrics that have the same statistical period and dimension in a data domain. An aggregate table is obtained based on the business abstraction and sorting results of business data, and can be used as a basis for subsequent business queries, online analytical processing (OLAP) analysis, and data distribution.

application table

An application table is suitable for different business scenarios. An application table is used to organize statistical data collected by atomic and derived metrics of the same statistical period, dimension, and statistic granularity. This allows you to perform subsequent business queries, OLAP analysis, and data distribution in an efficient manner.

data mart

A data mart is a data organization that is based on a business category. You can use data marts to organize data for a specific product or scenario. In most cases, a data mart belongs to an application layer and depends on the aggregate data in common layers.

data warehouse planning

A data warehouse architect or a model group member who uses DataWorks to perform data modeling can design data layers, business categories, data domains, business processes, data marts, and subject areas on the data warehouse planning page in the DataWorks console. After the design is complete, a model designer can manage the created models based on objects such as data layers, business categories, data domains, and business processes.

Data import layer: A data import layer is used to store basic raw data such as database data, logs, and messages. After raw data is processed by various ETL operations, the raw data is stored at a data import layer. You can store only operational data store (ODS) tables at a data import layer.

Common layer: A common layer is used to process and aggregate common data that is stored at a data import layer. You can create a unified metric dimension and create reusable fact data and aggregate data that are used for data analysis and collection at a common layer. You can store fact tables, dimension tables, and aggregate tables at a common layer.

Application layer: An application layer is used to store data that is processed and aggregated at a common layer. You can store statistical data that is collected in specific application scenarios or for specified products at an application layer. You can store application tables and dimension tables at an application layer.

data layer

By default, a data warehouse is divided into the following layers: ODS, dimension (DIM), data warehouse detail (DWD), data warehouse summary (DWS), and application data service (ADS). For more information, see the topics in the data warehouse layering directory.

ODS

This layer is used to receive and process raw data that needs to be stored in a data warehouse. The structure of a data table at the ODS layer is the same as the structure of a data table in which the raw data is stored. The ODS layer serves as the staging area for the data warehouse.

DWD

At this layer, data models are built based on the business activities of an enterprise. You can create a fact table that uses the highest granularity level based on the characteristics of a specific business activity. You can duplicate some key attribute fields of dimensions in fact tables and create wide tables based on the data usage habits of the enterprise. You can also reduce the association between fact tables and dimension tables to improve the usability of fact tables.

DWS

At this layer, data models are built based on specific subject objects that you want to analyze. You can create a general aggregate table based on the metric requirements of upper-layer applications and products.

ADS

This layer is used to store product-specific metric data and generate various reports.

DIM

At this layer, data models are built based on dimensions. You can store logical dimension tables and conceptual dimensions at this layer based on your business requirements. You can define dimensions, determine primary keys, add dimension attributes, and associate different dimensions for dimension tables. This ensures data consistency in data analysis and mitigates the risks of inconsistent data calculation specifications and algorithms.

subject area

A subject area is a collection of business subjects and is used to categorize data in a data mart from various analytical perspectives. You can classify business subjects into different subject areas based on your business requirements. For example, you can create a transaction subject area, a member subject area, and a commodity subject area for e-commerce data.

data domain

A data domain stores data of the same type. You can design and create data domains for your business data based on different dimensions, such as business types, data sources, and data usage. Data domains help you search for data in an efficient manner. The classification criteria of data domains vary based on data usage. For example, you can create a transaction data domain, a member data domain, and a commodity data domain for e-commerce data.

business process

A business process refers to a business activity that is performed by an enterprise in a specific data domain. A business process is a logical subject that needs to be analyzed in Data Modeling. For example, a transaction data domain can contain business processes such as adding commodities to the shopping cart, placing orders, and paying for orders.

composite metric

A composite metric is calculated based on specific derived metrics and calculation rules. A derived metric collects only statistics about a business activity within a specific period of time and cannot meet the requirements of users for calculating items such as growth rates and data value differences. For example, you cannot use a derived metric to calculate week-on-week growth rates for a business activity. To resolve this issue, DataWorks provides composite metrics that are calculated based on specific derived metrics and calculation rules. Composite metrics are fine-grained metrics that can help you collect statistics about your business in a flexible manner.

data metric

Data Modeling provides the data metric feature, which allows you to establish a unified metric system.

A metric system consists of atomic metrics, modifiers, periods, and derived metrics.

Atomic metric: An atomic metric is a measurement used for a business process. For example, you can create an atomic metric named Payment Amount for the Order Placing business process.

Modifier: A modifier limits the scope of business based on which a specific metric collects data. For example, you can create a modifier named Maternity and Infant Products to limit the statistical scope of the Payment Amount atomic metric.

Period: A period specifies the time range within which or the point in time at which a metric collects data. For example, you can create a period named Last Seven Days for the Payment Amount atomic metric.

Derived metric: A derived metric consists of an atomic metric, a period, and one or more modifiers. For example, you can create a derived metric named Payment Amount of Maternity and Infant Products in Last Seven Days.

lookup table

A lookup table defines the value range of fields that are defined by a field standard. For example, the lookup table that is referenced by a field standard named gender contains male and female.

field standard

A field standard is used to manage the values of fields that have the same meaning but different names in a centralized manner. A field standard can also be used to define the value range and measurement unit for the fields. If changes are made to a field standard, you can quickly identify and modify tables that contain fields defined by the field standard. This significantly improves data application efficiency and data accuracy.

DataStudio

node

The DataStudio service of DataWorks allows you to create various types of nodes, such as data synchronization nodes, compute engine nodes used for data cleansing, and general nodes used together with compute engine nodes to process complex logic. Compute engine nodes include ODPS SQL nodes, Hologres SQL nodes, and EMR Hive nodes. General nodes include zero load nodes that can be used to manage multiple other nodes and do-while nodes that can run node code in loops. You can combine different types of nodes in your business to meet your different data processing requirements.

workflow

The concept workflow is abstracted from business to help you manage and develop code based on your business requirements and improve task management efficiency.

Workflows help you develop and manage code based on your business requirements. A workflow provides the following features:

Allows you to develop and manage code by task type.

Supports a hierarchical directory structure. We recommend that you create a maximum of four levels of subdirectories for a workflow.

Allows you to view and optimize a workflow from the business perspective.

Allows you to deploy and perform O&M operations on tasks in a workflow as a whole.

Provides a dashboard for you to develop code with improved efficiency.

auto triggered workflow

An auto triggered workflow is a new development way that provides a visualized DAG development interface from the business perspective. You can integrate multiple types of tasks or nodes in an auto triggered workflow by performing drag operations in a visualized manner. This helps you configure dependencies for tasks or nodes in a convenient manner, facilitates data processing, improves task development efficiency, and simplifies the management of complex tasks and projects.

Notebook

The notebook feature of DataWorks provides an interactive environment and allows users to integrate code such as SQL and Python code, text, code execution results, and data visualization charts to perform intuitive data exploration, data analysis, and AI-based model development.

SQL script template

An SQL script template is a general logic chunk that is abstracted from an SQL script and can facilitate the reuse of code. SQL script templates can be used to develop only MaxCompute tasks.

Each SQL script template involves one or more source tables. You can filter source table data, join source tables, and aggregate source tables to generate a result table based on your business requirements. An SQL script template contains multiple input and output parameters.

scheduling dependency

A scheduling dependency is used to define the sequence in which tasks are run. If Node B can run only after Node A finishes running, Node A is the ancestor node of Node B, and Node B depends on Node A. In a direct acyclic graph (DAG), dependencies between nodes are represented by arrows.

data timestamp

A data timestamp refers to a date that is directly relevant to a business activity. The date reflects the actual time when business data is generated. The concept of data timestamp is crucial in offline computing scenarios. For example, in retail business, if you collect statistics on the turnover on October 10, 2024, the turnover is calculated in the early morning of October 11, 2024. In this case, the data timestamp is 20241010.

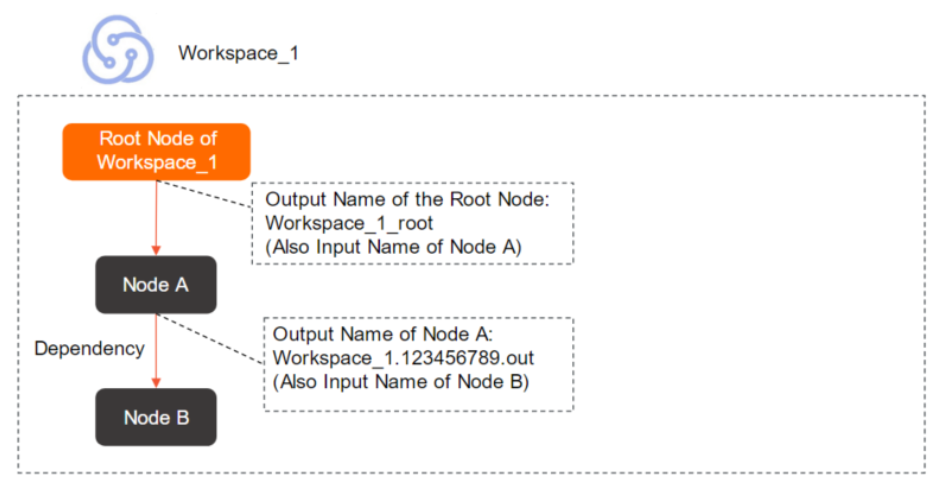

output name

An output name is the name of the output that is generated by a task. Each task has an output name. When you configure dependencies between tasks within an Alibaba Cloud account, the output name of a task is used to connect the task to its descendant tasks.

When you configure dependencies for a task, you must use the output name of the task instead of the task name or ID. After you configure the dependencies, the output name of the task serves as the input name of the descendant tasks of the task.

Note

NoteThe output name of a task distinguishes the task from other tasks within the same Alibaba Cloud account. By default, the output name of a task is in the following format:

Workspace name.Randomly generated nine-digit number.out. You can specify a custom output name for a task. You must make sure that the output name of the task is unique within your Alibaba Cloud account.output table name

We recommend that you use the name of the table generated by a task as the output table name. Proper configuration of an output table name can help check whether data is from an expected ancestor table when you configure dependencies for a descendant task. We recommend that you do not manually modify an output table name that is generated based on automatic parsing. The output table name serves only as an identifier. If you modify an output table name, the name of the table that is actually generated by executing SQL statements is not affected. The name of an actually generated table is subject to the SQL logic.

NoteAn output name must be globally unique. However, no such limit is imposed on an output table name.

scheduling parameter

A scheduling parameter is a variable that is used in code for scheduling and running a task. The value of a scheduling parameter is dynamically replaced when a task is scheduled to run. If you want to obtain information about the runtime environment, such as the date and time, during the repeated running of code, you can dynamically assign values to variables in the code based on the definition of scheduling parameters in DataWorks.

data catalog

A data catalog is a structured list or map that is used to display all data assets in an organization, such as databases, tables, and files. A data catalog records the metadata information of these data assets in DataWorks.

relationships among computing resources, data sources, and data catalogs

Computing resources, data sources, and data catalogs are independent objects but correlated with each other. They have the following relationships:

When you associate a computing resource with a workspace, the system generates a data source and a data catalog based on the computing resource.

When you add a data source to a workspace, the system generates a data catalog based on the data source.

When you create a data catalog, the system cannot generate a data source or computing resource based on the data catalog.

Operation Center

Scheduling time

The scheduling time is a point in time at which an auto triggered task is expected to run. The scheduling time can be accurate to the minute.

ImportantMultiple factors affect the running of a task. In some cases, a task may not start to run even if its scheduling time arrives. Before a task starts to run, DataWorks checks whether the following conditions are met for the task: The ancestor task of the task is run as expected, the scheduling time of the task arrives, and sufficient resources are available to run the task. If the conditions are met, the task is triggered to run.

data timestamp

A data timestamp refers to a date that is directly relevant to a business activity. The date reflects the actual time when business data is generated. The concept of data timestamp is crucial in offline computing scenarios. For example, in retail business, if you collect statistics on the turnover on October 10, 2024, the turnover is calculated in the early morning of October 11, 2024. In this case, the data timestamp is 20241010.

auto triggered task

An auto triggered task is a task that is triggered by the scheduling system based on the scheduling properties configured for the task. In the list of auto triggered tasks in Operation Center, you can perform O&M and management operations on an auto triggered task. For example, you can view the DAG of, test, backfill data for, and change the owner of the auto triggered task.

auto triggered instance

An auto triggered instance is an instance that is automatically generated for running based on the scheduling properties of an auto triggered task. For example, if an auto triggered task is configured to run once every hour, 24 instances are generated for the auto triggered task every day. One of the instances is automatically triggered to run every hour. Only instances have information such as the running status. In the list of auto triggered instances in Operation Center, you can perform O&M operations on an auto triggered instance. For example, you can stop and rerun the instance and set the status of the instance to successful.

data backfill

If you perform the data backfill operation, a data backfill instance is automatically generated for the specified task based on the selected time range. The data backfill feature is mainly used for historical data write-back and data rectification. You can use the data backfill feature to compute data for a period of time in the past or in the future to ensure data completeness and accuracy.

baseline

DataWorks allows you to associate tasks with a baseline to monitor the tasks. After you associate tasks with a baseline, the system automatically monitors the tasks based on the priority, committed completion time, and alert margin threshold of the baseline, and reports an alert if a risk that may affect the normal data output of the tasks is detected. The larger the number that indicates the priority of a baseline, the higher the priority. DataWorks preferentially allocates resources to the tasks in a baseline that has a high priority to ensure the output timeliness of the tasks. An alert margin threshold is a period of time that is reserved to handle exceptions. DataWorks calculates the alert time by subtracting an alert margin threshold from the committed completion time for a baseline. If DataWorks detects that a task in the baseline cannot generate data before the alert time, DataWorks sends an alert notification to the alert contact that you specify when you configure the baseline.

Data Governance Center

health score

A health score is a comprehensive metric that is used to evaluate the health status of data assets. Health scores range from 0 to 100. A higher score indicates healthier data assets. A health score is calculated by using the health assessment model provided by DataWorks based on governance items and reflects the data governance effectiveness of a tenant, workspace, or user. Data Governance Center provides health scores in the following dimensions: storage, computing, R&D, quality, and security. Each dimension has corresponding health score metrics, which helps users easily understand data governance effectiveness and intuitively learn about the health status of data assets.

governance item

A governance item is used by DataWorks to detect issues that need to be optimized or resolved in data assets during data governance, such as issues in the aspects of R&D specifications, data quality, security compliance, and resource utilization. Governance items are classified into mandatory governance items and optional governance items. By default, mandatory governance items are globally enabled and cannot be disabled. You can enable optional governance items based on your business requirements. For example, you can use governance items to detect the tasks that time out, nodes that fail to run over multiple consecutive times, or leaf nodes that are not accessed by users.

check item

A check item is an active governance mechanism that acts on the data production process. You can use a check item to check tasks for violations against constraints before the tasks are committed and deployed to identify potential issues such as full table scans or absent scheduling dependencies. If the content that does not meet specific requirements is detected, the system generates a check event and intercepts and handles the content. Check items can help you restrict and manage the data development process and ensure the standardization and normalization of data processing.

data governance plan

Data Asset Governance provides data governance plan templates for different scenarios, with a focus on achieving predetermined governance objectives within specific periods. A data governance plan template can be used to quickly determine highly relevant governance items and check items and identify objects that can be optimized. This helps governance owners keep a close eye on data governance effectiveness and helps the team timely realize governance objectives by performing quantitative assessments.

knowledge base

A knowledge base provides definitions of built-in check items and governance items in Data Governance Center to help data governance engineers quickly identify and understand issues that occur during data governance. The knowledge base also provides solutions to issues and operation guidance to help improve data governance efficiency.

Security Center

data permission

Security Center supports fine-grained permission requesting, request processing, and permission auditing. This allows you to manage permissions based on the principle of least privilege. In addition, Security Center allows you to view the request processing progress and follow up request processing in real time. For more information, see the topics in the Data access control directory.

data security

Security Center provides various features, such as data category and data sensitivity level, sensitive data identification, data access auditing, and data source tracking. The features can help identify data that has potential security risks at the earliest opportunity during workflow processing. This ensures data security and reliability. For more information, see Overview.

Data Quality

data quality monitoring

Data quality monitoring refers to the continuous tracking and detection of the status and changes of data objects, such as specific partitions in a partitioned table, to ensure that the data objects meet predefined quality standards. Data quality monitoring can help identify and resolve issues that may affect data quality at the earliest opportunity. You can enable scheduling events to trigger data quality monitoring in DataWorks to implement automatic quality check, and enable DataWorks to send alert notifications related to data monitoring results to the specified alert recipients.

monitoring rule

Monitoring rules serve as the specific conditions or logical criteria for evaluating whether data quality meets expectations. For example, "The age of customers cannot be less than 0" is a monitoring rule. In DataWorks, you can configure different monitoring rules based on your business requirements and apply the monitoring rules to a specific range of data. If some data does not meet monitoring rules, the system identifies the data and reports a data quality alert.

rule template

A rule template is a quality rule sample that contains predefined verification logic. You can directly use a rule template or modify the thresholds that are specified in a rule template to create monitoring rules based on your business requirements. DataWorks provides multiple types of rule templates. You can also execute custom SQL statements to create rule templates.

Built-in rule templates: You can create monitoring rules based on built-in rule templates provided by DataWorks.

Custom rule templates: If the built-in rule templates cannot meet your requirements for monitoring the quality of data specified by partition filter expressions, you can create monitoring rules based on custom rule templates. You can save frequently used custom rules as rule templates for future use.

Data Security Guard

data category and data sensitivity level

Data category and data sensitivity level: You can specify a sensitivity level for your data based on the data value, content sensitivity, impacts, and distribution scope. The data management principle and data development requirements vary based on the data sensitivity level.

sensitive data identification rule

Sensitive data identification rule: You can define the category of data and configure sensitive field types for data based on the source and usage of data. This helps you identify sensitive data in the current workspace. DataWorks provides built-in data categories and sensitive data identification rules. You can also create data categories and sensitive data identification rules based on your business requirements.

data masking rule

Data masking rule: You can configure a data masking rule for the identified sensitive data. Data masking management varies based on the data sensitivity level due to the business management and control requirements.

risk identification rule

Risk identification rule: Data Security Guard can proactively identify risky operations on data and report alerts based on intelligent analysis technologies and risk identification rules. This helps you perform more comprehensive risk management and effectively identify and prevent risks.

Data Map

metadata

Metadata describes data attributes, data structures, and other relevant information. Data attributes include the name, size, and data type. Data structures include the field, type, and length. Other relevant information includes the location, owner, output task, and access permissions.

data lineage

Data lineages are used to describe correlations among data that are formed during data processing, data forwarding, and data integration. A data lineage displays the entire process from data creation, data processing, data synchronization to data consumption, as well as the data objects that are involved in the process. In DataWorks, data lineages are displayed in a visualized manner. This can help users quickly locate issues and evaluate the objects that may be affected if users modify a table or a field. Visualized display of data lineages is crucial for maintaining a complex data processing procedure.

data album

A data album is used to organize and manage table categories from the business perspective. You can add a specific table to a data album. This way, you can search for and locate the table in an efficient manner.

DataAnalysis

SQL query

DataAnalysis allows you to execute standard SQL statements to query and analyze data in different types of data sources. For more information, see SQL query.

workbook

Workbooks are tools designed for editing and managing data online. You can import SQL query results or data in on-premises files to a workbook for further query, analysis, and visualization. You can also export or download data from a workbook or share data in a workbook with other users to meet data analysis requirements in a flexible manner. For information about how to create and manage workbooks, see Create and manage a workbook.

data insight

Data insight refers to the acquisition of profound data understanding and discoveries based on in-depth data analysis and interpretation. Data insight supports data exploration and data visualization. You can use the data insight feature to understand data distribution, create data cards, and combine data cards into a data report. In addition, data insight results can be shared by using long images. The data insight feature uses artificial intelligence (AI) technologies to help analyze data and interpret complex data for business decision-making.

DataService Studio

API

API stands for Application Programming Interface. In DataService Studio, developers can quickly encapsulate APIs based on various types of data sources. The APIs can be called in business applications, software, systems, and reporting scenarios to facilitate data retrieval and consumption.

function

A function can be used as a filter for an API. If you use a function as a pre-filter for an API, the function can be used to process the request parameters of the API. For example, the function can be used to change the value of a request parameter or assign a value to a request parameter. If you use a function as a post-filter for an API, the function can be used to perform secondary processing on the returned results of the API. For example, the function can be used to change the data structure of the returned results or add content to the returned results.

data push

The data push feature provided by DataWorks allows you to create data push tasks. You can write SQL statements for single- or multi-table queries in a data push task to define the data that you want to push and organize the data by using rich text or tables. In addition, you can configure scheduling properties for the data push task to periodically push data to destination webhook URLs.

Open Platform

OpenAPI

The OpenAPI module allows you to call DataWorks API operations to use different features of DataWorks and integrate your applications with DataWorks.

OpenEvent

The OpenEvent module allows you to subscribe to event messages. You can receive notifications about various change events in DataWorks and respond to the events based on your configurations at the earliest opportunity. For example, you can subscribe to table change events to receive notifications about the changes to core tables in real time. You can also subscribe to task change events to implement custom data monitoring of a dashboard that displays the status of real-time synchronization tasks.

Extensions

The Extensions module is a plug-in provided by DataWorks. You can use Extensions together with OpenAPI and OpenEvent to process user operations in DataWorks based on custom logic and block user operations. For example, you can develop an extension for task change control and use the extension to implement custom task deployment control.