By Jiyang Zhang (Zanye)

In order to efficiently store, retrieve, and maintain data, database systems employ various data organization structures. These structures serve specific purposes and optimizations, such as improving query speed, optimizing write performance, and reducing storage space. Common database structures include:

B-Tree is a balanced multi-way tree particularly suited for external storage, like hard disks. It optimizes read and write operations by reducing disk access frequency. B-Tree is widely used in database management systems and file systems to store indexes and data. A typical example is MySQL.

LSM Tree is a data structure designed for write-intensive scenarios, typically used in NoSQL databases. It writes data updates to in-memory structures and periodically merges them to disk, leveraging sequential write capabilities to greatly optimize write performance. The typical examples are LevelDB and HBase.

Heap Table is a simple way to organize data records. Records are inserted in no particular order, allowing data write performance to almost reach the upper limit of disk writes. However, due to its disordered nature, data query efficiency is lower than that of indexed tables. To improve query performance, Heap Table is often used in conjunction with index structures like B-Tree. A typical database example is PostgreSQL.

Skip List is a probabilistic data structure that contains "skipping" links for fast navigation. It has an average complexity of O(log n) for search, insertion, and deletion. It is also used in some versions of NoSQL databases as an efficient structure for in-memory indexes.

Each data organization structure has its advantages and application scenarios. The OLTP services of relational databases require balanced demands on concurrent reads and writes, MVCC, space utilization, efficient search and range query, optimized disk I/O, and balance. Currently, the PolarDB-X adopts a B-Tree index organization structure.

Clustered indexes, also known as primary key indexes, are commonly used in B-Tree indexes. With clustered indexes, queries can directly access all data in the corresponding data row. Notably, the storage engine of Alibaba Cloud's PolarDB for Xscale (PolarDB-X) also stores version information of data rows in the clustered index. During a query, PolarDB-X compares the query view information with the version information of row records on the clustered index to determine the version record required by the query. If the current version on the clustered index does not meet the requirement, a historical version of the corresponding record needs to be constructed through the Undo log.

Clustered indexes are very effective for primary key queries. However, if the query conditions are not determined by the primary key, the query should back to full scan. To solve this problem, non-clustered indexes are introduced. Non-clustered indexes are similar to clustered indexes, but there is an important difference:

A non-clustered index has no version information, that is, only the latest version can be found through a non-clustered index. When searching a non-clustered index, you must first check whether the version is the one required by the query. However, because there is no version information on the non-clustered index, it is necessary to further search the clustered index through the primary key information to obtain the version information.

The non-clustered index is used to find the corresponding clustered index record. This process is usually referred to as lookup.

The following table shows a typical use case for a non-clustered index.

Score table of Grade X (clustered index):

| Student ID (primary key) | Class | Score | Version number (hidden column) |

| . . . | . . . | . . . | . . . |

| 225208 | Class 3 | 100 | 7 |

| 225209 | Class 2 | 99 | 5 |

| . . . | . . . | . . . | . . . |

Class-student ID index (non-clustered index)

| Class (index column) | Student ID (primary key) |

| . . . | |

| Class 2 | 225209 |

| Class 3 | 225208 |

| . . . |

When querying all the student IDs of Class 2, we only need to search the non-clustered index. However, since it is not sure whether it is visible, we should also query the corresponding primary key record on the clustered index to determine its true version number information.

The cost of an SQL statement lookup depends on the frequency of the lookup and the overhead of the lookup itself.

The overhead of the lookup itself is produced by B-Tree search, that is, a query of non-clustered index records requires a query of the clustered index.

The frequency of lookup depends on the type of SQL statement, especially for Range queries:

SELECT sec FROM t1 WHERE sec BETWEEN ? AND ?When the PolarDB-X DN storage engine executes an SQL statement, it needs to go through the stages of table opening and B-Tree searching. The Range query will significantly increase the proportion of the lookup overhead. If each row of records needs to query the table, it will cause severe performance problems.

According to our test data, the performance difference between a full lookup and no lookup is more than 10 times.

Lookup operations also have an impact on the Buffer Pool, especially when the Buffer Pool resources are insufficient. A large number of lookup operations occupy free pages in the Buffer Pool, causing a database avalanche.

Considering the extreme case, if there is almost no modification on a table, this lookup operation to determine visibility is almost meaningless.

From the above analysis, it can be found that the lookup operation to determine visibility is costly and unnecessary in most cases. Currently, standalone databases such as MySQL introduce the minimum active transaction number to assist the visibility judgment of non-clustered indexes, which greatly reduces the lookup operations caused by visibility judgment.

However, for distributed databases such as PolarDB-X, the situation becomes more complex and this solution will no longer be applicable. In order to solve this problem, the PolarDB-X DN storage engine proposes a CSM (Commit Snapshot Manager) solution.

The following section will introduce the solution of the standalone MySQL database and extend to the CSM solution designed for distributed scenarios in PolarDB-X.

In order to solve this problem, MySQL introduces the minimum active transaction number to assist the visibility judgment of non-clustered indexes, which greatly reduces the lookup operations and the search cost.

The transaction ID number uniquely identifies a transaction. MySQL maintains a transaction ID number generator. The generator generates a globally unique transaction ID number that is monotonically increasing. When a transaction starts, it needs to obtain a transaction ID number from the transaction ID number generator as its own transaction identifier.

At the same time, MySQL maintains a global state variable: min_active_trx_id. min_active_trx_id indicates the smallest transaction ID number among all active transactions. This means that all transactions with transaction ID numbers smaller than the min_active_trx_id have been committed.

In addition, a special piece of persistent information is also maintained on each data page in a non-clustered index: the max_trx_id. max_trx_id indicates the largest transaction ID number among all transactions that have modified the data page in this data page.

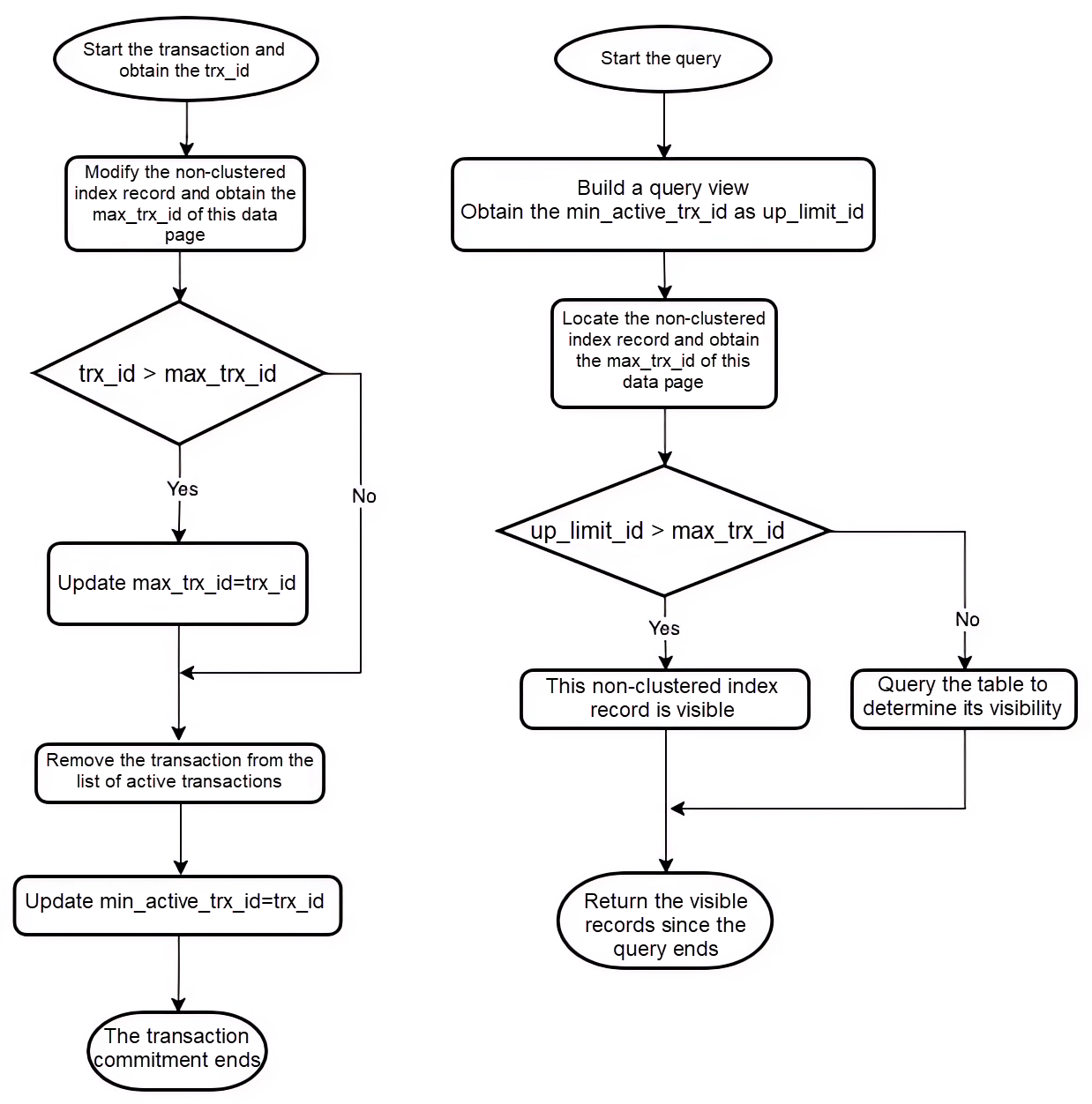

When a query is initiated, a view is built to take the min_active_trx_id of the current system as the up_limit_id of the view. When searching a non-clustered index, the visibility can be determined by comparing the up_limit_id to the max_trx_id of the data page. The following figure shows the relevant flowchart:

For distributed databases, the lookup problem is further obvious. The distributed queries of PolarDB-X use MVCC multi-version queries based on the global timestamp TSO. That is, the version number GCN of the distributed query view is specified by the TSO generated by the external global timing service, instead of being obtained from the latest status of the local trx in the database management system.

Due to network and system scheduling, when a distributed query is actually initiated, the state of the transaction system on the node may have changed many times, and the latest min_active_trx_id information of the transaction system state is no longer applicable to the building of the GCN view of this distributed query.

For example:

| Time | Distributed query | Distributed transaction A | Distributed transaction B |

| T1 | Initiate a distributed query to obtain the TSO from GMS and set GCN = 99 | ||

| T2 | Transaction (trx_id=90, GCN = 100) updates the max_trx_id = 90 of non-clustered index page_a on page_a | ||

| T3 | Transaction (trx_id=91, GCN = 101) is committed | ||

| T4 | The query obtains the view (up_limit_id=91, snapshot_GCN=99) on DN | ||

| T5 | Query the records on page_a, which are visible due to up_limit_id > max_trx_id |

For the distributed query initiated above, the record should actually be invisible to the view since (snapsho_GCN=99) < (rec_GCN=100).

The main reason for the above problem is that the commit order of all distributed transactions is not generated locally but by an external global coordinator. That is, the actual commit order of the branch transactions of multiple distributed transactions on each node may be different.

As a result, the visibility of distributed queries is much more complex than that of local standalone queries. At present, the theory of non-clustered index optimization based on standalone queries in the industry is no longer effective on distributed queries.

Therefore, how to reduce the additional lookup cost of non-clustered indexes due to searching for version information in a distributed database management system is one of the most critical issues in the design of a distributed database management system.

The reason why the optimization solution of non-clustered indexes on standalone database management systems is no longer applicable in distributed scenarios is that distributed queries require the min_active_trx_id of "that time", but the existing design of the database can only provide the min_active_trx_id of "this time".

The "moment" mentioned above is not a moment in physical time, but a logical "moment" like GCN. That is:

For the distributed query with snapshot_GCN = 99, what it requires is the min_active_trx_id corresponding to the transaction system when the DN is in GCN = 99.

An intuitive idea is that if the transaction system maintains the state information of the transaction system at all time points in the past and provides the ability to check the min_active_trx_id state at that time, it can solve the problem that non-clustered indexes need to query the table because they cannot determine the visibility in the distributed scenario.

Of course, the cost is huge. Taking 100,000 TPS as an example, at least 90 MB of data needs to be generated per minute.

The PolarDB-X storage engine uses the CSM solution to balance resource overheads and availability and maintains the approximate min_active_trx_id state at a very low cost. This solves the problem that non-clustered indexes frequently query the table in distributed scenarios. The CSM is a circular queue, and a Commit Snapshot is generated at a regular interval (for example, 1 second). More specifically:

Among them, sys_GCN is the maximum GCN number on the current node maintained by the PolarDB-X storage engine. The update timing is:

Obviously, both sys_GCN and min_active_trx_id are monotonically increasing. It means that the csm_GCN and up_limit_id are always ordered on the CSM circular queue.

When a distributed query is initiated, according to the snapshot_GCN of the view, it starts searching from the end of the CSM until it finds a CSM element whose csm_GCN is no greater than the snapshot_GCN, and takes the up_limit_id of this element as the up_limit_id of the view so that we can obtain the up_limit_id of "that time". Note that in order to ensure orderliness, the above lookup can be done by a binary lookup.

Obviously, this up_limit_id is only an approximation of the true up_limit_id of "that time". However, through the above steps, we can always obtain an approximation that is smaller than the true value. Fortunately, when we use a smaller up_limit_id for visibility judgments, it will not lead to a misjudgment.

We can see that the Commit Snapshot in the CSM also includes csm_SCN. This is because the PolarDB-X Standard Edition also supports the flashback query of AS OF syntax. You can use the flashback query to perform game rollback, business rollback, and restore deleted data. For the transaction system design of PolarDB-X DN storage engine, refer to the previous article About Database Kernel | Transaction System of PolarDB-X Storage Engine - Part1

SELECT sec FROM t1 AS OF SCN $SCN WHERE sec BETWEEN ? AND ?

SELECT sec FROM t1 AS OF TIMESTAMP $time WHERE sec BETWEEN ? AND ?The test results show that for consistent queries of distributed transactions, enabling CSM (32 KB memory resources + a small amount of computing overhead) can significantly improve the performance:

# Perform a stress test for the SQL statement to simulate the index that hits the sec column

SELECT sec FROM t1 WHERE sec BETWEEN ? AND ?| Range_size | 1 | 10 | 50 | 100 |

| CSM Off (QPS) | 82657 | 9333 | 1946 | 993 |

| CSM On (QPS) | 302332 | 105496 | 26002 | 13369 |

| Improvement Value (%) | 265% | 1030% | 1236% | 1246% |

Try out database products for free:

[Infographic] Highlights | Database New Features in June 2024

ApsaraDB - November 7, 2024

ApsaraDB - August 23, 2024

ApsaraDB - September 29, 2021

ApsaraDB - June 3, 2024

ApsaraDB - March 19, 2025

ApsaraDB - October 17, 2024

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB