By Jiyang Zhang (Cuanye)

PolarDB-X, also known as PolarDB for Xscale, is a high-performance cloud-native distributed database service developed by Alibaba Cloud. The Lizard transaction system plays a vital role as the core technology module in the storage engine of PolarDB for Xscale. This article provides a detailed explanation of the Lizard transaction system in two parts. The first part focuses on the Lizard SCN standalone transaction system, while the second part discusses the Lizard GCN distributed transaction system.

Relational databases play an essential role in supporting enterprise-level data as online storage solutions. However, with the exponential growth of data and innovative businesses, efficiently expanding online databases without data loss poses significant challenges to database architecture. Additionally, enterprises require databases that provide comprehensive transaction processing and data analysis capabilities. To address these challenges, distributed databases have emerged. Compared to traditional transactional databases, distributed databases focus on solving several core technical problems:

The development of distributed databases has gone through different stages and technological paths in terms of technology selection.

In the early stages of distributed database development, database middleware was utilized to implement data sharding and routing, enabling horizontal scalability to efficiently accommodate the rapid business growth. However, this approach deploys middleware on the application side, necessitating business awareness and coordination for any data-sharding changes. Furthermore, it does not support the ACID properties between shards. As a result, the middleware sharding solution only partially enhances data writing scalability and does not form a complete distributed database.

While the ultimate form of scalability is achieved through the scale-out solution with horizontal linear scalability, phased scale-up solutions can provide lightweight scalability to meet current requirements. Additionally, the development of cloud-native databases demands significant elasticity based on resource pooling. Cloud-native databases like PolarDB and Arora continuously pool resources across layers to realize elasticity and scalability.

Leveraging distributed computing and storage capabilities, the Share Nothing/Everything solution can scale business operations without impacting or compromising the ACID properties of standalone databases. It can also ensure continuous service availability based on distributed protocols, such as PolarDB-X.

PolarDB-X is a high-performance cloud-native distributed database service developed by Alibaba Cloud. It offers cloud-era database services with high throughput, large storage, low latency, easy scalability, and high availability. PolarDB-X includes the following features:

● Cloud-native architecture and the Share Nothing architecture provide extreme elasticity and horizontal scalability.

● Transparent distribution enables distributed database operations similar to standalone databases.

● The self-developed Lizard distributed transaction system ensures ACID compliance and global consistency.

● The independently developed distributed replication protocol X-Paxos ensures continuous business availability.

● The efficient interactive protocol X-Protocol enables pipeline processing of requests.

● The row-column hybrid storage enables HTAP (Hybrid Transactional/Analytical Processing) capabilities.

● It is fully open source and compatible with MySQL, embracing the MySQL ecosystem.

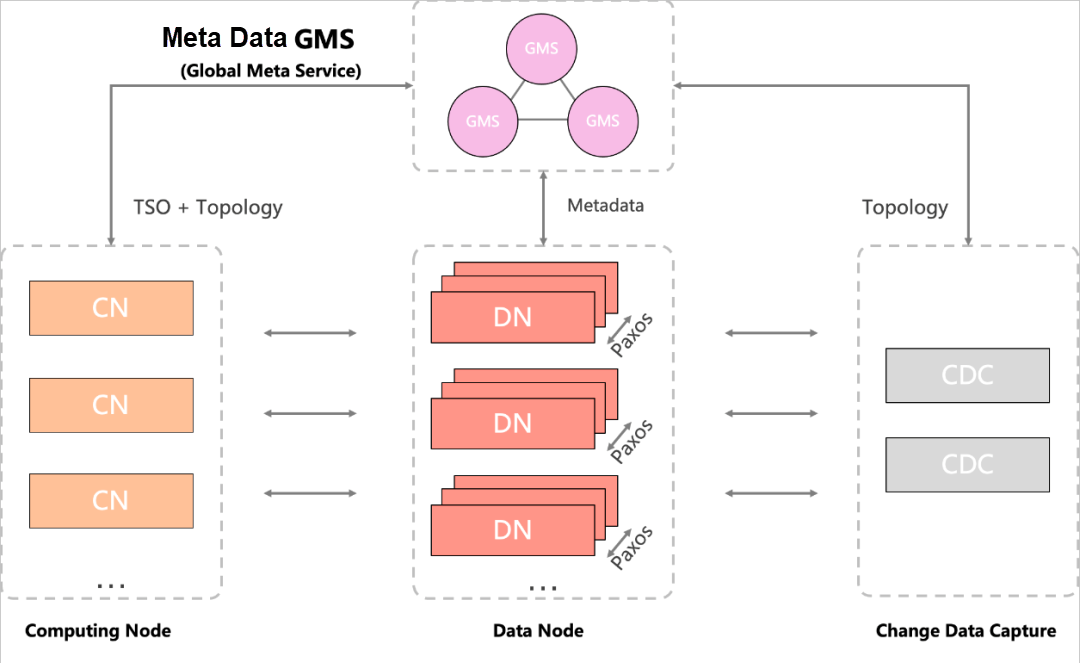

PolarDB-X employs the Timestamp Oracle (TSO) architecture for global timing service. The global timing service is provided by the cluster GMS. The CN (Computing Node) is used as the entry, providing basic data sharding and routing functions while realizing powerful execution engine capabilities. DN (Data Node) provides basic data storage services, distributed transactions, and high availability capabilities. The CDC (Change Data Capture) is used as a data flow channel to provide data ecological capabilities.

The following figure shows the architecture of PolarDB-X.

As a storage engine for DN and GMS nodes, it expands and develops a large number of core capabilities based on the MySQL open-source ecosystem to effectively support PolarDB-X products, including:

● Continuously incremental global auto-increment column

● Globally ordered TSO number sender

● Efficient and stable distributed transactions

● Secure and strict global consistency

● Continuously available distributed protocol

● Durable and reliable multi-replica storage

In data storage, the unique incrementing serial number is a common service that is often used for ordered storage of data or the sequencing of nodes or global events. The storage engine of PolarDB-X implements a sequence service, which is implemented by a logic engine.

A sequence includes a series of attributes: start value, min value, max value, increment by, cache/nocache, and cycle/nocycle. The syntax is as follows:

1. Create a SEQUENCE

CREATE SEQUENCE [IF NOT EXISTS] <Database name>.<Sequence name>

[START WITH <constant>]

[MINVALUE <constant>]

[MAXVALUE <constant>]

[INCREMENT BY <constant>]

[CACHE <constant> | NOCACHE]

[CYCLE | NOCYCLE];2. Access SEQUENCE

SELECT Nextval(seq);

SELECT Currval(seq);The underlying layer of sequence uses the InnoDB storage engine to store these attributes. Therefore, the sequence engine is defined as a logic engine that is responsible for sequence cache policy and access entries. The actual data is stored in the InnoDB table.

To ensure the uniqueness of the sequence, the sliding of the sequence window brings modification of the underlying data. This modification uses an autonomous transaction, which means that it is out of the context of the main transaction and is submitted automatically. If the main transaction is rolled back, the obtained sequence number will be discarded instead of being rolled back to ensure its uniqueness.

Two types of sequences are supported based on the type of lease. One type is a number sequence. The number window is used to achieve the best balance between the highest throughput and the lowest discardable numbers. The other type is a time sequence. The time window is used to achieve the best balance between the highest throughput and the least unavailable time.

The high availability of a sequence depends on the highly available solution of the storage engine in which the sequence is located. The modification logs of a sequence are replicated through the binary log and the X-Paxos protocol. If a switchover occurs, the sequence discards the data in one lease window to ensure uniqueness. In terms of performance, it reaches 30,000 QPS/Core, and can easily run on CPUs with hundreds of cores without performance hot spots.

In the MySQL ecosystem, the Auto Increment is widely used. In a standalone MySQL database, it can ensure that its ID generation is unique and continuous. In a distributed database, data may be distributed in different nodes. Common methods of Auto Increment are compatible, such as the segmentation method.

If the service has four shards, you can segment each shard:

With this method, the unique feature is achieved, but cannot be seen globally. It shows continuous increments and swings between shards with different numbers.

SEQUENCE implements a number generator by default. In other words, its window lease type is a number. For example, if the window is 100, its cache size is also 100. The number in the window is obtained from the memory, and when the cache is used up, it advances to the next window. In abnormal cases, a maximum of one window number can be lost to ensure its uniqueness. Therefore, the setting of cache size needs to coordinate between improving performance and losing as few window numbers as possible. Auto Increment in PolarDB-X can correspond to a sequence and use nextval to generate unique consecutive numbers for this field. These numbers are distributed to data shards in multiple nodes, and auto-increment columns are generated in the order in which they are inserted.

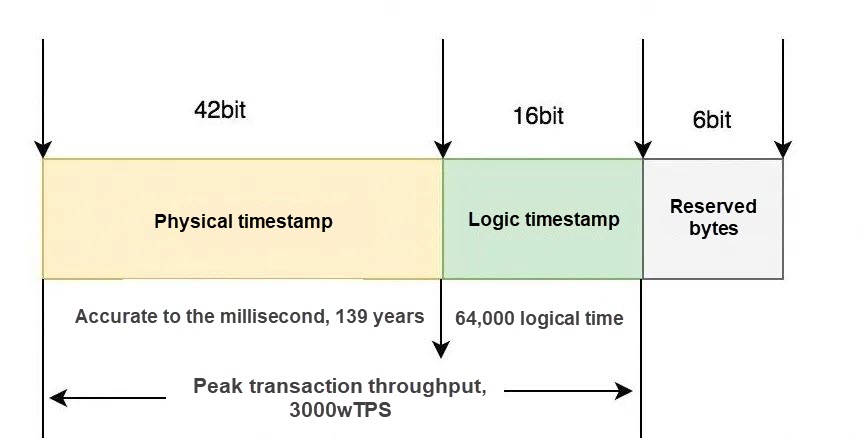

The TSO number sender is used to sequence the events of PolarDB-X and is the basis for distributed transactions and global consistency. It also needs guaranteed unique increment and achieves high throughput. In addition, TSO has a special format to present the readability time:

| Physical clock | Logical clock | Reserved bits |

| 42 bits | 16 bits | 6 bits |

The time generator implemented by sequence, or the time lease type, customizes the TSO format by using 42 bits to express millisecond-level physical time and 16 bits to express an incremental natural number. Theoretically, the second-level generator can achieve a maximum TPS throughput of 30 million, which is sufficient to support an ultra-large-scale distributed database.

The lease window is expressed by cache size. For example, cache size = 2s represents a lease window of 2 seconds, and the numbers in the 2-second window are generated from memory, and all highly available nodes are pushed up in advance until the beginning of the next 2 seconds. For this window, 2 seconds is the maximum physical waiting time available for the TSO number sender after abnormal switching to ensure uniqueness. The setting of cache size needs to coordinate between the highest throughput capacity and the least unavailable time.

The PolarDB-X storage engine must rely on a distributed transaction system to implement the ACID features of globally distributed transactions and ecosystem-wide consistency. However, the InnoDB transaction system of the MySQL community version has many disadvantages.

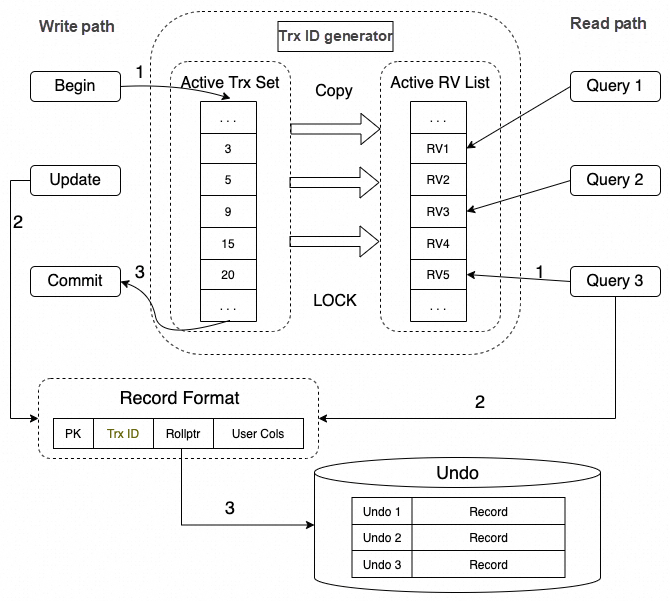

The InnoDB transaction system maintains a global active transaction in the memory structure, including the active transaction linked list, the active view (read view) linked list and other structures, and is protected by a large lock. The simplified structure of it is as follows:

On the write path:

On the read path:

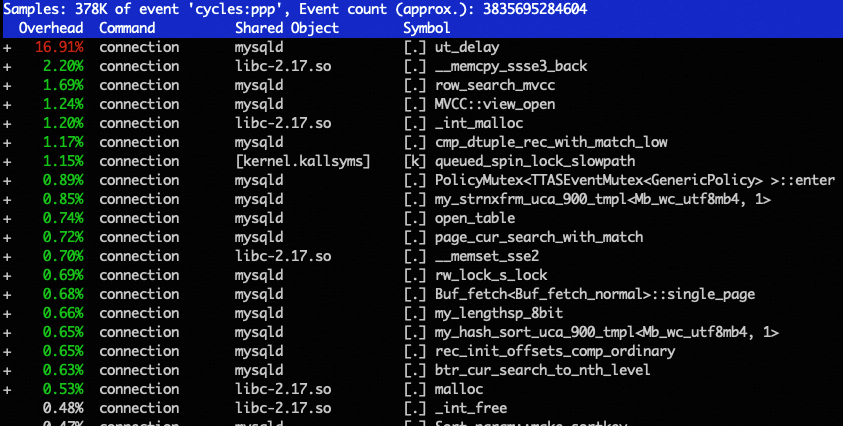

As is shown above, both the write path and the read path need to access the global structure (global active transaction array). This process needs to be completed under the protection of a large transaction lock. In the past, such designs were efficient and reliable. With the substantial enhancement of standalone CPU multi-core capability, such designs are increasingly becoming a bottleneck restricting performance improvement. Take the product of PolarDB-X DN nodes sold on the public cloud with maximum specifications(polarx.st.12xlarge.25, 90C, 720G, with maximum concurrent connections of 20,000) as an example. In the stress test Sysbench read_write scenario, nearly 17% of the time of CPU was spent on useless waiting:

In the InnoDB transaction system, the actual sequence of commits is determined internally, and the commit number generated by the InnoDB transaction system is trx->no, which is incremented internally and cannot be accessed or modified externally. When the two-phase commit protocol is used to commit distributed transactions in a distributed database cluster, the commit numbers of different shards are generated by themselves and are different from each other. The ability to unify ordering by TSO cannot be achieved.

The MVCC of the InnoDB transaction system depends on the view Read View, which is expressed by an array of active transaction IDs. It causes the following issues:

This brings major limits:

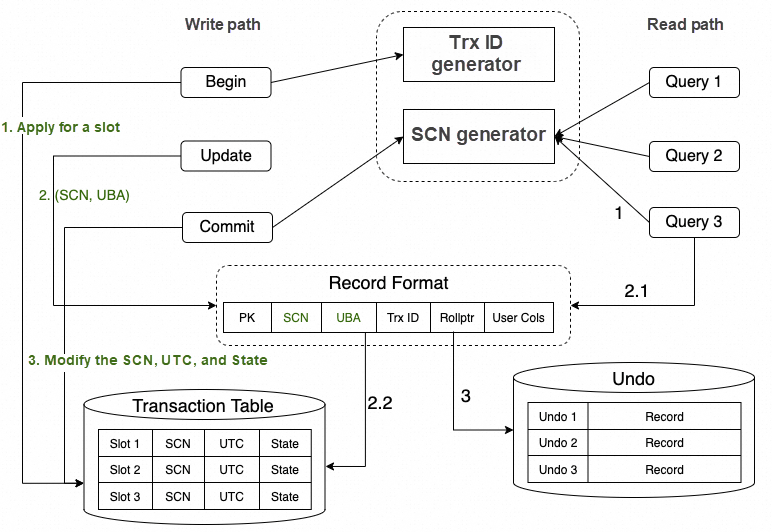

The MVCC mechanism of the relational database depends on the committed version of data to determine its visibility. Therefore, the Lizard standalone transaction system introduces the SCN (System Commit Number) to express the commit sequence of transactions and designs a transaction slot to persistently store the commit version number of transactions. The following diagram shows the system architecture:

Write transactions:

Read transactions:

Many users may have misoperations during online database operation and maintenance. For example, when updating or deleting statements, users may forget to specify the conditions or specify incorrect conditions. As a result, data is destroyed or lost due to human errors. Particularly, if the operation is related to important configuration information, it will severely affect the business operation. At this point, the DBA is often required to quickly roll back the data to restore the business.

However, the cost of a database rollback is usually high. Take a MySQL database as an example. The usual method is to take the most recent backup of a full database and replay the binary logs to a specified point in time. This process depends on the frequency of user backups and may take longer than a day. In addition, this feature relies on binary logs. If the binary logging feature is not enabled, by no means can you restore a database to a specified point in time.

The Lizard transaction system provides Native Flashback Query capabilities that allow users to retrieve data at a point in time in the past so that the data can be restored and the database can be saved.

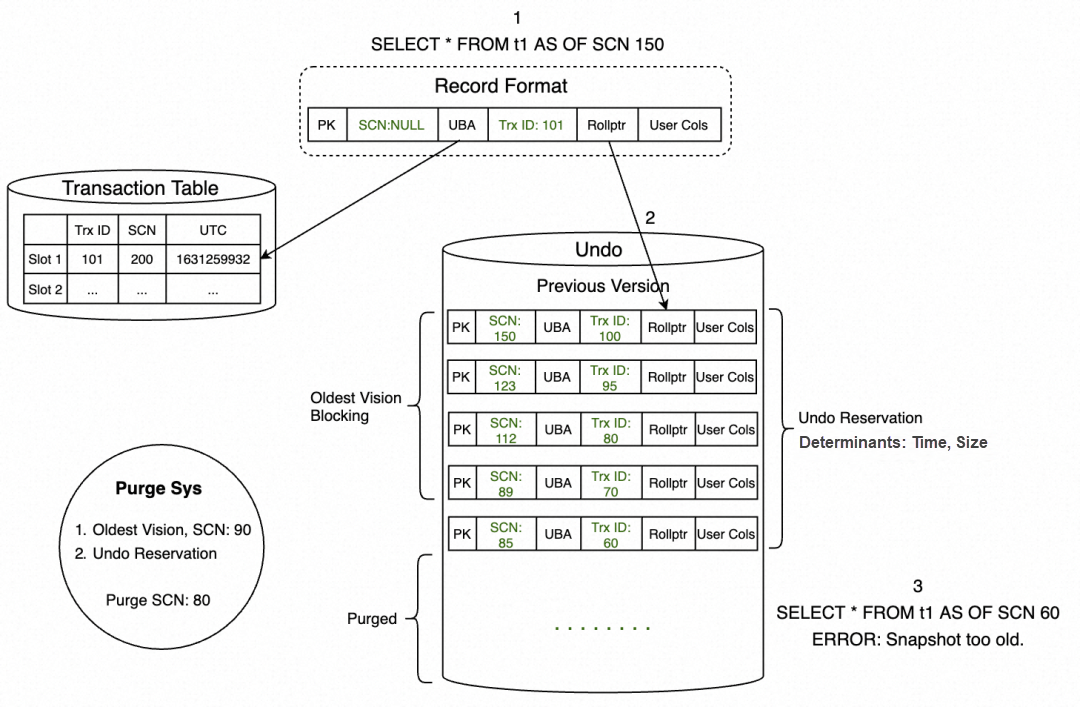

A FlashBack query is a consistent query of a database for a time point in the past. It needs the version number of the time point in the past, and the corresponding undo of the data for this version number to obtain a consistent version. To meet FlashBack queries, the Lizard SCN transaction system supports a customizable undo retention policy and a conversion mechanism between SCN and TIMESTAMP.

SELECT ... FROM tablename

AS OF [SCN | TIMESTAMP] expr;View Vision of Lizard SCN transaction system cannot recognize Timestamp as the version number for visibility comparison. Therefore, the system starts an SCN snapshot background task to record the relation between SCN and Timestamp according to the interval set by the user and saves it in the system table in MySQL library according to the retention period. The table structure is as follows:

CREATE TABLE `innodb_flashback_snapshot` (

`scn` bigint unsigned NOT NULL,

`utc` bigint unsigned NOT NULL,

`memo` text COLLATE utf8mb3_bin,

PRIMARY KEY (`scn`),

KEY `utc` (`utc`)

) /*!50100 TABLESPACE `mysql` */In the transaction system, the user's AS OF TIMESTAMP query first queries the snapshot table and finds the corresponding SCN. Then, this SCN is used as the query view to perform visibility comparison.

The Lizard SCN transaction system retains the settings of two dimensions to flexibly set the retention period of undo:

1. Time dimension:

The parameter innodb_undo_retention specifies how long undo is retained. Unit: second.

2. Space dimension:

The parameter innodb_undo_space_reserved_size specifies how much space undo reserves. Unit: MB.

The longer the time or larger the space that is reserved, the further back in time the query can retrace. However, this also results in space usage.

The core of flashback queries lies in historical versions. In the DN storage engine, the Purge system is in charge of cleaning up historical version data that is no longer needed in Undo. At present, the promotion strategy of the Purge system is to push hard and in time, resulting in the possibility of being cleaned up immediately once the historical version data is not needed.

Based on this, the Undo reservation mechanism is introduced. This mechanism blocks the progress of the Purge system, allowing the historical version of data to be retained in Undo for a long time so that users can retrieve the previous data version in case of misoperation.

However, the Undo space will expand quickly if all historical versions of the data are retained. To solve this problem, the current Undo Reservation mechanism will comprehensively consider two dimensions to block the progress of the Purge system: time and space.

Users can adjust the degree to which Undo Reservation blocks the Purge system based on actual needs. For example, users can set that historical version data can be kept as long as the space of Undo does not exceed 10 GB. In this case, if a database has a very small number of updates in a year, the Flashback Query function can even retrieve the data from a year ago.

The following figure, taking "SELECT... FROM tablename AS OF $SCN" as an example, briefly describes the operation process of this scheme.

As can be seen from the figure, the progress of the Purge system is blocked by the Undo reservation mechanism and only advances to cleaning up the historical data generated by the transactions with SCN at most 80.

Also, if a flashback query is initiated again, use SELECT * FROM t1 AS OF SCN 60 statement. If the data of the version is not found along the historical version chain, the historical data has been cleared by the Purge system and the "Snapshot too old." error is returned.

Compared with the InnoDB transaction system, the Lizard SCN transaction system brings huge advantages:

However, it also introduces some costs. The transaction commit only modifies the transaction slot, and the SCN on the row record is always NULL, so every visibility comparison needs to access the transaction slot according to the UBA address to determine the real commit version number SCN. To reduce the repeated access of the transaction slot, we have introduced Cleanout on the Lizard SCN transaction system, which is divided into two types: Commit Cleanout and Delayed Cleanout.

During the modification process of transactions, some records are collected. After the transaction is committed, part of the collected records are backfilled according to the committed SCN. Due to the need to ensure the speed of submission is not affected, only a small number of records are backfilled according to the current number of records and the load capacity of the system, and the result is quickly submitted and returned to the customer.

In the query process, after checking the transaction slot SCN according to the UBA address to determine its transaction status and commit version number, if the transaction has been committed, we can try to help clean out the row record, which is called Delayed Cleanout. In this way, the next query can directly access the row record SCN for visibility judgment and the access times to the transaction slot are reduced.

The transaction slot cannot be extended indefinitely, so the reusing solution is used to avoid space expansion. Transaction slots are continuously stored in a free_list linked list. During allocation, transaction slots in the free list are first to be obtained for reuse.

In addition, frequent accesses to the free_list linked list and extraction from it require accessing multiple data pages, which causes huge overhead. To avoid accessing multiple data pages, the transaction slot page will be put into the cache fast table first and can be directly obtained next time. In this way, the overhead caused by reading multiple data pages is greatly reduced.

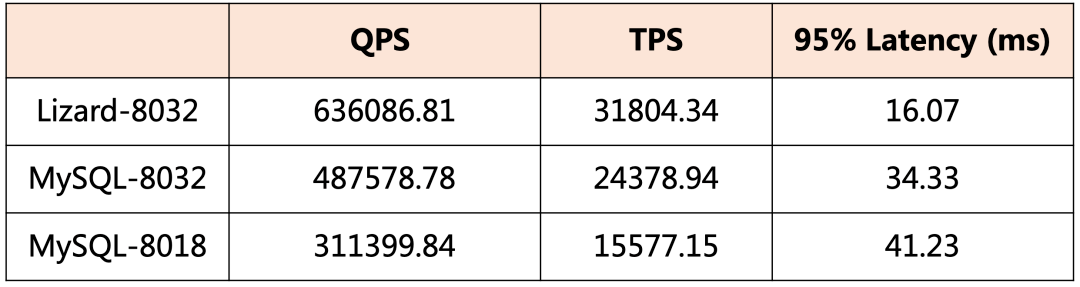

Although Cleanout brings some costs, the costs are shared in the query process, and there is no centralized hot spot competition. Therefore, the test result shows the overall throughput of the Lizard SCN transaction system is greatly improved compared with the InnoDB transaction system.

Note: The above data test environment is Intel 8269CY 104C. The data volume is 16 million, and the scenario is Sysbench Read Write 512 concurrency.

Compared with MySQL-8032, Lizard SCN presents 30% performance improvement and 53% latency reduction.

The next article will further describe the Lizard GCN distributed transaction system. Stay tuned!

[Infographic] Highlights | Database New Feature in December 2023

ApsaraDB - January 23, 2024

ApsaraDB - July 1, 2024

ApsaraDB - June 19, 2024

ApsaraDB - June 5, 2024

ApsaraDB - November 12, 2024

ApsaraDB - April 10, 2024

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB