By Yifei and Xiahua

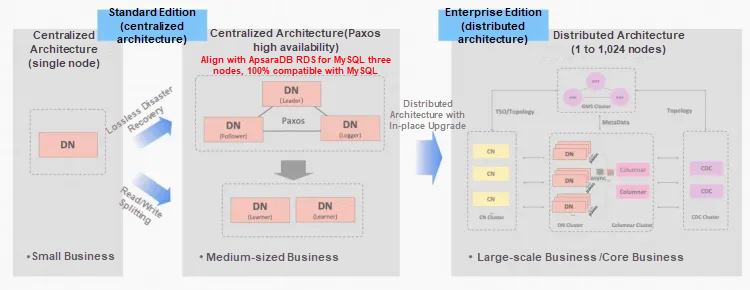

The PolarDB-X distributed database adopts an integrated centralized and distributed architecture. To flexibly handle mixed workload scenarios, the data node, which is responsible for data storage, uses multiple data structures. It utilizes a row-based storage structure to provide online transaction processing capabilities. As a 100% MySQL ecosystem-compatible database, the data node has been deeply optimized based on the InnoDB storage structure, significantly improving data access efficiency.

Real-world application workloads are diverse, and different data systems adopt data organization methods that suit their storage and retrieval characteristics. Commonly used structures include B-tree, LSM-tree, Hash Map, Heap Table, and R-tree. Among these, B-tree and their variants offer a balanced performance in efficient search, disk I/O optimization, range query support, concurrency control, and transaction safety, making them a popular choice for mainstream OLTP databases. MySQL InnoDB engine uses B+tree as its index organization structure.

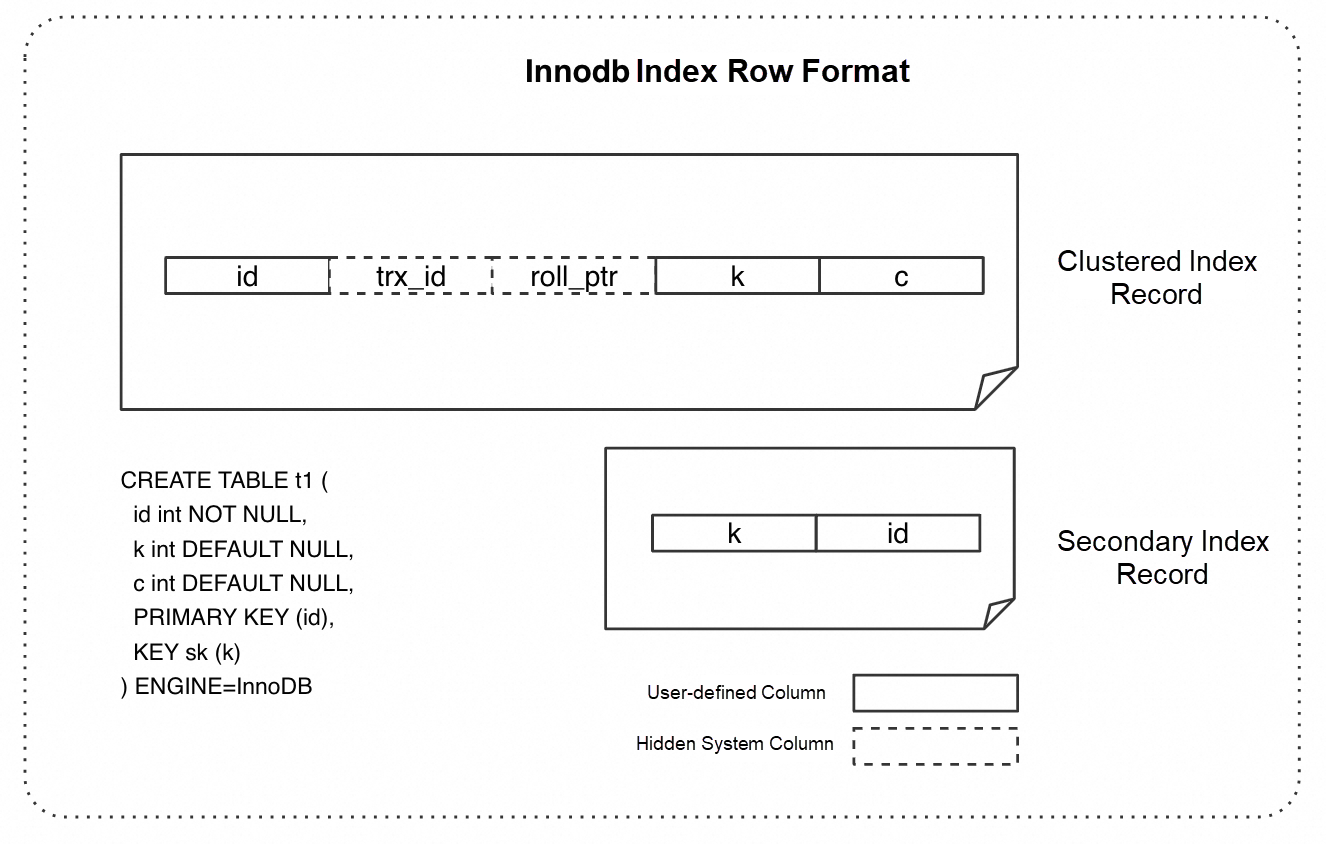

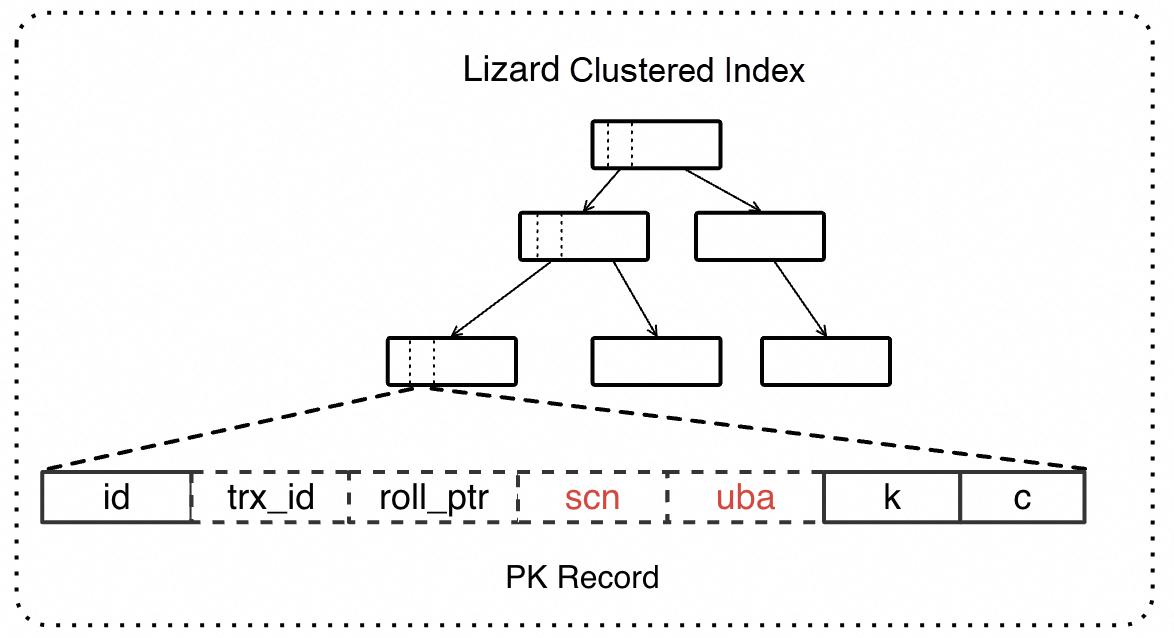

In the InnoDB storage engine, a user-defined table is actually implemented through multiple B+tree indexes. The clustered index B+tree corresponding to the primary key stores all the row data at its leaf nodes. For secondary indexes on other columns, the B+tree leaf nodes store only the corresponding secondary index keys and the primary key, as illustrated in the figure below.

Accessing InnoDB data involves two steps: locating the data via the B+tree index, and determining and constructing data visibility.

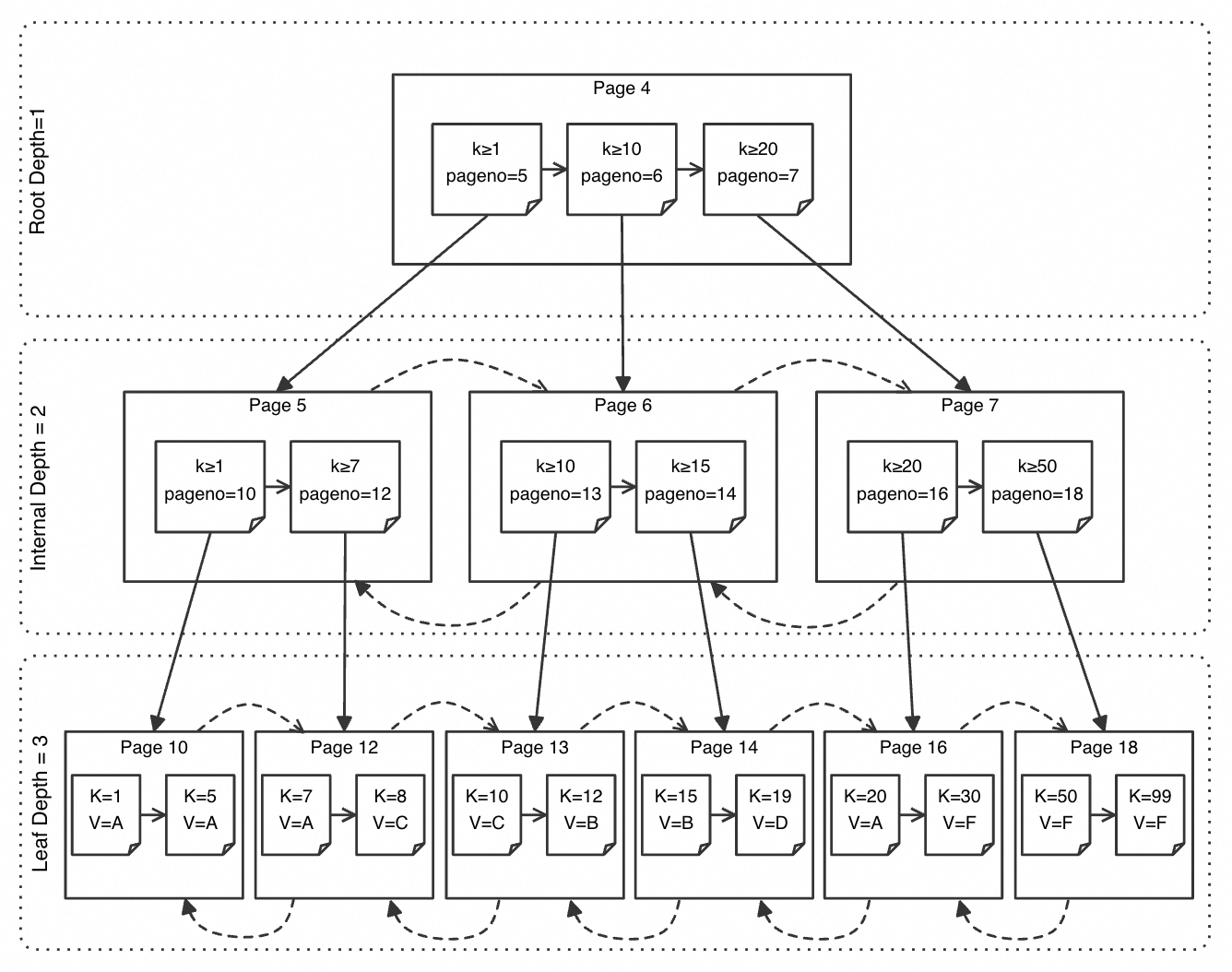

The process of locating a row record in a B+tree combines two mechanisms: tree search and page-level search.

• Tree Search: B+tree is a self-balancing multi-way tree. The search starts from the root node and proceeds downward through the tree structure, jumping from one page to another. The number of tree searches equals the height of the tree, with one page being accessed at each level.

• Page-level Search: Each page in a B+tree stores multiple key-value pairs. In leaf nodes, the records correspond to actual row data; in non-leaf nodes, the keys are consistent with those in the leaf nodes, while the values point to pages at the next level. Within non-leaf nodes, a binary search is used to find the target key, which determines the specific page to jump to the next level. Upon reaching a leaf node, another binary search is performed to accurately locate the required data.

This combination allows the B+tree to efficiently locate row records with a time complexity of O(klogn), where k represents the height of the B+tree and n represents the number of records per page. A B+tree with three or four levels is sufficient for most application scenarios. It is estimated that [1] a four-level B+tree with a branching factor of around 1000 can accommodate up to 26TB of data.

To speed up queries, an InnoDB logical table typically has one clustered index and multiple secondary indexes. Different query conditions may trigger scans on different B+tree indexes.

The clustered index is the default primary key index in InnoDB. Since the leaf nodes of a clustered index store the full row of data, the location process is complete once the leaf node is found.

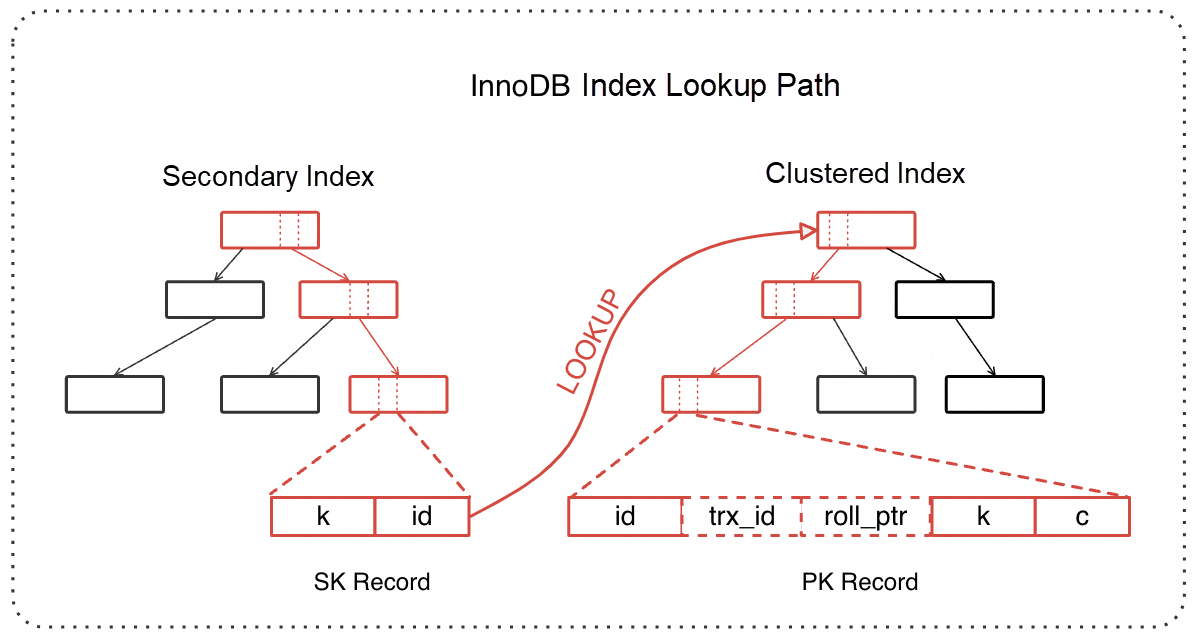

Since a secondary index does not contain the full row of data, using a secondary index for lookups might require a table scan back. Considering the table structure in the above example, for a query like select * from t1 where k > 1, choosing a secondary index avoids a full table scan. However, since the secondary index records do not have the value of the c field, after scanning to the secondary index records that satisfy k>1, it is necessary to use the pk value from the record to "go back" and scan the clustered index to retrieve the full row of data.

The scenario described above is a typical example of a secondary index lookup with a table scan back. A secondary index lookup with table scan back refers to the process where, due to the incomplete information stored in the secondary index, a scan of the secondary index B+tree is followed by a scan of the clustered index B+tree.

In the InnoDB storage engine, data location is only the first step in data access. In some scenarios, to meet the requirements of multi-version concurrency control (MVCC), it is also necessary to perform data visibility checks to ensure that the returned data complies with the transaction isolation level. The implementation of MVCC in InnoDB primarily relies on hidden system columns trx_id/roll_ptr on row records, the visibility view Read View, and the Undo log.

Each data row has two hidden columns for recording transaction information:

trx_id: The ID of the most recent transaction that modified the row.roll_ptr: A pointer to the Undo log, used to store the previous version of the row.The MVCC in InnoDB is mainly reflected in two aspects:

• Determine active transactions. The Read View is a snapshot of the currently active transactions at the time the read operation is initiated, created by copying the global active transaction array. When a transaction locates a record, it compares the trx_id of the record with its own Read View to determine if the current record is active.

• Construct historical versions. The Undo log contains the historical version data of the record. If the current version of the record is not visible, you can use the roll_ptr on the row to point to the undo log corresponding to the transaction to reconstruct the previous version of the record and implement the snapshot read mechanism.

• Cost of Data Location - Secondary Index Lookups with Table Scan Back: Since secondary indexes do not contain the full user data and the system columns required for visibility checks, lookups that require a table scan back to the clustered index make up a significant portion of the data location process. As shown by the red path in the figure below, a lookup with a table scan back involves an additional full scan of the clustered index B+tree compared with a clustered index query or a secondary index query without a table scan back. This extra PK LOOKUP path adds additional pressure on disk I/O, BufferPool free pages, and B+tree concurrency control. Especially in large-range queries, if each record requires a table scan back, the cost of these lookups can become a major expense, significantly impacting the query RT and overall throughput.

• Cost of Visibility Judgement - Read View. InnoDB visibility judgment requires accessing the global structure (global active transaction array), which needs to be completed under the protection of a large transaction lock. Additionally, the Read View of MVCC is an array without a fixed size and thus cannot be efficiently propagated. With the significant improvements in multi-core CPU capabilities, the current design is increasingly becoming a bottleneck for InnoDB transaction performance. Taking the largest available specification for a PolarDB-X DN on a public cloud (polarx.st.12xlarge.25, 90C, 720G, maximum concurrent connections 2W) as an example. In the stress test scenario of Sysbench read_write, the CPU spends nearly 17% of its time waiting unnecessarily.

To address the aforementioned issues with InnoDB, the Lizard transaction system designs a specific index structure that significantly reduces data access costs and enhances overall system performance.

To optimize the cost of visibility judgment, the Lizard transaction system introduces the scn/uba system columns in the clustered index. These columns represent the commit sequence of transactions, thereby decoupling the dependency on global structures. The Read View array is upgraded to a Vision that consists of only one SCN, making it easier to propagate. For a detailed technical explanation, please refer to Core Technology of PolarDB-X Storage Engine | Lizard Distributed Transaction System [2].

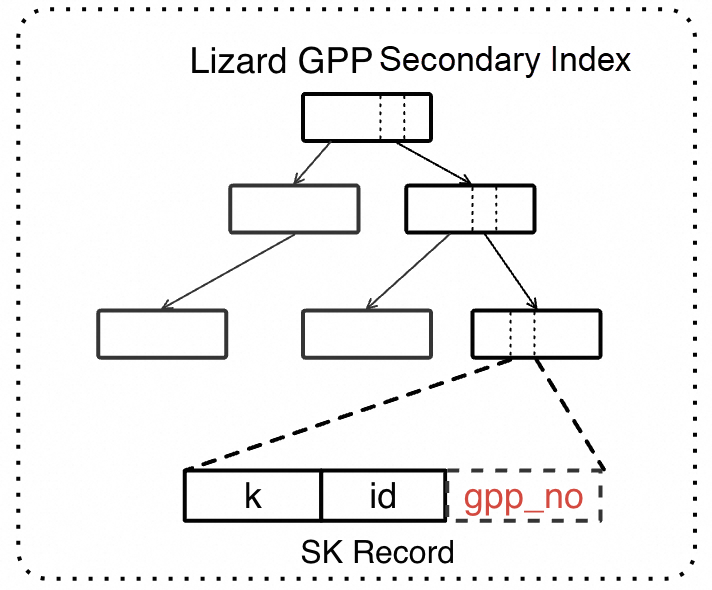

The PolarDB-X Lizard transaction engine significantly reduces the cost of secondary index lookups with table scan backs by implementing the Guess Primarykey Pageno (GPP) scheme. The format of the index rows is shown in the figure below.

To optimize the path for table scan back queries, Lizard introduces a new gpp_no system column in the secondary index. This column represents the "guess" pageno of the page where the corresponding clustered index record resides. The term "guess" indicates that gpp_no is an estimation of the primary key's location and is not guaranteed to be accurate. The following uses the insert process to explain how gpp_no is generated and recorded.

The insertion process of InnoDB follows the order of first inserting into the clustered index and then into the secondary index. When the primary key is successfully inserted, we know the exact location (pageno) of the primary key record. During the subsequent insertion into the secondary index, we use the pageno obtained from the previous step as the gpp_no in the secondary index record, which will be used for future index lookups.

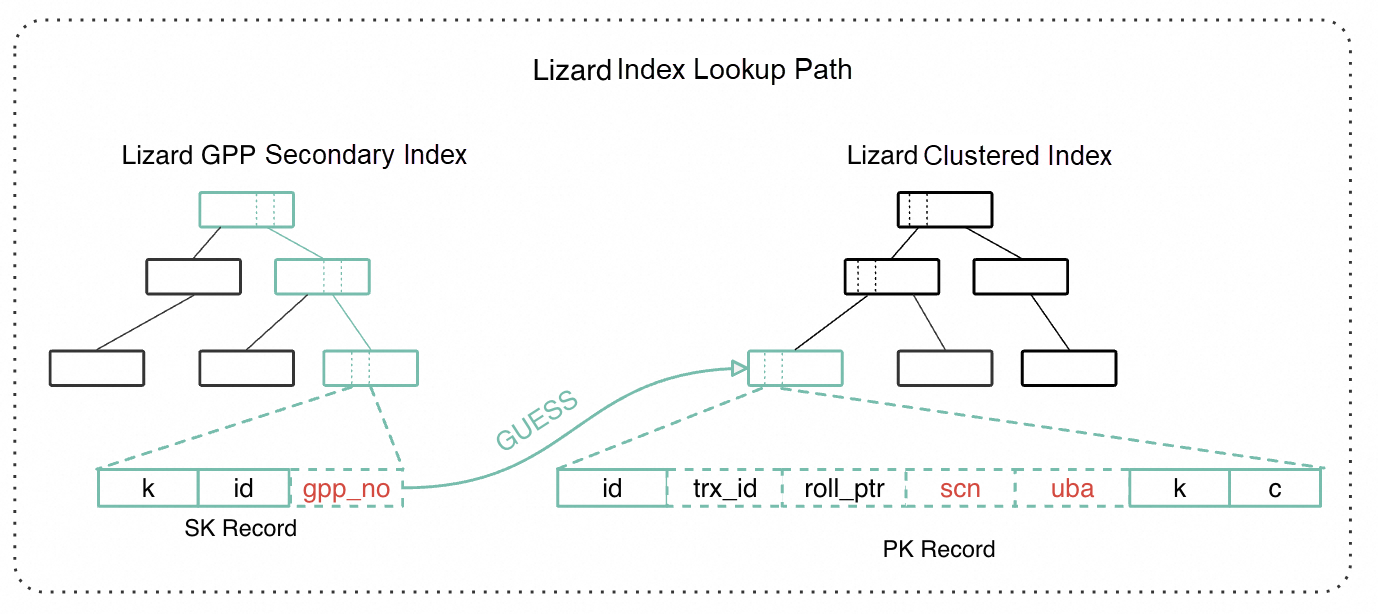

With the help of gpp_no, the GPP secondary index significantly shortens the path for index lookups. As shown by the green path in the figure below, after locating the record in the secondary index B+tree, we first obtain the page corresponding to the gpp_no and perform a binary search to try to retrieve the corresponding primary key record. If successful, this eliminates the need for a full B+tree scan of the clustered index. GPP acts as a shortcut for index lookups, intuitively reducing the response time of this step. It also reduces the pressure on the system's overall resources, such as the Buffer Pool and I/O, thereby significantly improving the overall system throughput.

• Space Cost

Lizard extends the secondary index with a hidden system column, gpp_no, which adds 4B to each secondary index record. Using a typical table structure from Sysbench testing as an example, the introduction of GPP increases the storage space of the entire table by 4.6%. Given the significant performance improvement, the space-for-time strategy is particularly suitable for secondary index lookups that require a table scan back.

• Hit Rate Cost

Since gpp_no is a "guess," there are naturally cases where gpp_no does not match the actual primary key pageno, leading to misses. This occurs after changes in the B+tree structure. When the clustered index B+tree updates data, the address change of the original record in the page will not cause GPP MISS if data changes are limited to within the leaf page. That is because the GPP extra information we record does not contain the offset of the record.

However, when data updates in the clustered index B+tree lead to a split of leaf nodes, half of the data in the original page is moved to a new page, making the gpp_no for those primary key records in the secondary index inaccurate. Similarly, during a merge of leaf nodes, the relocation of primary key records can also reduce the hit ratio.

Although there is a probability of misses, in test scenarios with random data inserts and a hit ratio of about 50%, the GPP secondary index still shows significant performance improvements, indicating that the benefit of a successful shortcut far outweighs the cost of a miss. In addition, for scenarios where the table structure is hidden primary keys or data is imported in the order of primary keys, the InnoDB B+tree insertion point can be triggered to split and optimize without relocating the data from the original leaf node to ensure that the GPP hit ratio remains high.

After a primary key record is moved to another data page due to B+tree splits or merges, its secondary index gpp_no will remain unhit. GPP implements a Cleanout mechanism for secondary index lookups to "repair" the hit ratio. During the index lookup process, if the gpp_no of the current record is incorrect, the system will re-scan the clustered index to find the correct page_no, then update the gpp_no with the accurate value so that the next index lookup can hit.

Although Cleanout introduces some overhead, it lays the foundation for a better hit ratio in subsequent lookups. Especially in read-heavy, write-light scenarios where the B+tree structure is relatively stable, the Cleanout mechanism can improve overall system throughput.

• Oracle Heap-Organized Table

Oracle's default Heap-Organized Table stores the actual data in the heap table. The B-tree index in the table does not store data, but only stores the row_id recorded in the heap table. After the B-tree scans the leaf node records, it also needs to locate the actual data in the heap table according to the row_id. Compared with Lizard GPP, its advantages are:

Its disadvantages are:

• PostgreSQL

Similar to Oracle, PostgreSQL also stores data in a heap, and all indexes are secondary indexes. Data access requires traversing both the B-tree and the heap. Its comparison with Lizard GPP is similar to that with Oracle.

• Oracle Index-Origanized Table

Oracle's IOT is similar to InnoDB indexes in design, as they both store data in the clustered index and secondary indexes require an index lookup. Similar to GPP, the row_id of the first inserted clustered index is recorded on the secondary index of the IOT. During an index lookup, the row_id is first used to find the clustered index record. If it hits, the full B-tree scan of the clustered index can be skipped. The differences from GPP are:

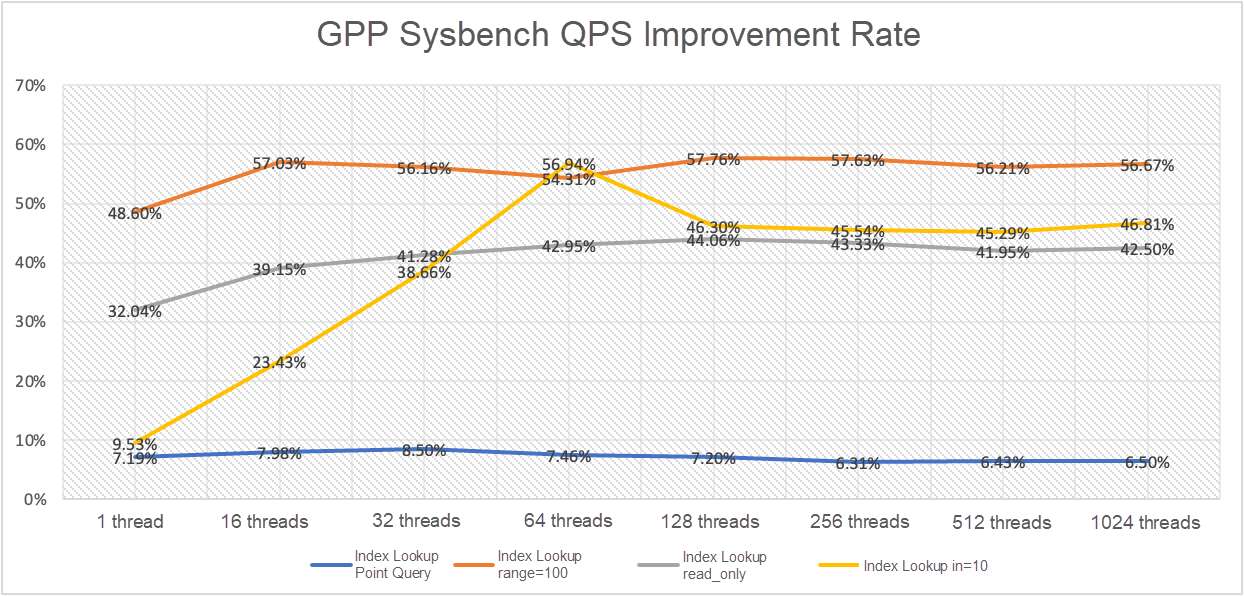

We deployed the polardbx-engine on an Intel 8269CY 104C physical machine[3]. The test data consisted of 16 Sysbench tables, each with 10 million rows. We tested both the clustered index SCN transaction system and the GPP secondary index.

• SCN MVCC Performance

| QPS | TPS | 95% Latency (ms) | |

|---|---|---|---|

| Lizard-8032 | 636086.81 | 31804.34 | 16.07 |

| MySQL-8032 | 487578.78 | 24378.94 | 34.33 |

| MySQL-8018 | 311399.84 | 15577.15 | 41.23 |

The table above shows the test results of the Lizard SCN transaction system in the Sysbench oltp_read_write scenario. Compared with MySQL-8032, the Lizard SCN transaction system showed a 30% performance improvement and a 53% reduction in latency.

• GPP Secondary Index

We created a read-only scenario for index lookups, mimicking Sysbench oltp_read_only/oltp_point_select.

The figure above shows the QPS improvement rate of GPP secondary indexes compared with polardbx-engine without GPP. It can be seen that GPP leads in all index lookup scenarios, especially in large-range queries (range=100), where the query performance improvement can reach over 50%.

Data Encryption without Business Changes? Enough with this Tool

ApsaraDB - January 23, 2024

ApsaraDB - May 16, 2025

ApsaraDB - November 26, 2025

ApsaraDB - October 17, 2024

ApsaraDB - March 17, 2025

ApsaraDB - October 17, 2024

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB