Alibaba Cloud's Tair KVCache team and Alibaba Holding's AI Engine & Tech Infra and Reliability Engineering team will open-source Tair KVCache Manager, an enterprise-level global KVCache management service. This article describes the architecture design and implementation details of the service.

With the rise of Agentic AI, the traditional single-server tiering approach centered on the inference engine can no longer meet the storage requirements of KVCache in this new era. As pooled KVCache storage goes into production in large-scale Agent inference scenarios, an enterprise-level KVCache management system is needed, with accurate capacity assessment, dynamic elastic scaling, multi-tenant isolation, high availability, and coordinated version management, to meet cost-efficiency and service-reliability requirements at PB-scale storage. To solve these problems, we designed and implemented a global KVCache management service for large-model inference. It accelerates Alibaba Group's RTP-LLM inference service, has been extended to support open-source engines such as vLLM and SGLang, and is compatible with various attention mechanisms, including Sparse Attention and Sliding Window Attention.

This article starts from the KVCache storage requirements of Agent scenarios, builds on the previous article to analyze the challenges KVCache storage faces in these scenarios, and introduces the architectural choices and implementations Tair KVCache Manager makes to address them.

This series of technical articles systematically breaks down the evolution of KVCache technology for Agent inference.

Tair KVCache, an extension of the Tair database product on Alibaba Cloud, reflects three successive shifts in the caching paradigm:

🔹 From Redis's "cache data → reduce I/O"

🔹 To GPU KVCache's "cache intermediate computation state → reduce repeated computation"

🔹 Then to Tair KVCache's "large-scale, Agent-oriented attention state management → a rebuilt cost model for large-model inference". This shows that caching is being upgraded from an auxiliary component to a core capability of the AI infrastructure layer: it makes "state" storable, shareable, and schedulable, and underpins large-scale inference in the Agent era.

The rise of Agents not only lengthens inference session contexts but also noticeably changes session patterns. These changes pose severe challenges to the traditional scheme of single-machine tiering plus affinity scheduling:

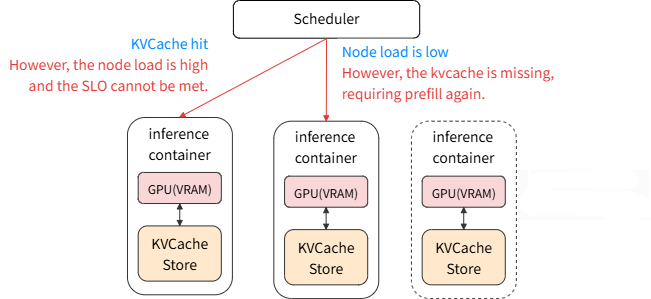

These factors make scheduling increasingly difficult in the traditional mode, and the conflict between the KVCache hit-rate target and the SLO scheduling target is growing sharper. For example, as request volume increases, the scheduler must migrate more sessions to meet SLOs, which lowers the KVCache hit rate. This in turn makes Prefill load grow nonlinearly, or even avalanche.

Although the single-server tiering solution breaks through the VRAM limit and further expands KVCache capacity, it still struggles to fully meet KVCache storage requirements in Agent scenarios. To go further, we must confront the fundamental problem of this architecture: the overly tight coupling between computing resources and KVCache storage.

Fortunately, as high-performance networking keeps evolving, network bandwidth in intelligent computing environments is growing rapidly (per-port bandwidth has jumped from 25 Gbps to 200+ Gbps), interconnection scale keeps expanding (thousands or tens of thousands of GPUs, even across availability zones), and interconnection options are becoming more diverse (mainstream storage machines are now covered by high-performance networks such as eRDMA). This makes it possible to decouple computing resources from KVCache storage.

Taking Alibaba Cloud as an example, the typical cross-machine KVCache transmission bandwidth in intelligent computing scenarios is about 20 GB/s: the EGS 8-GPU instance type has 20 GB/s of general-purpose network bandwidth, and the Lingjun 8-GPU instance type has 25 GB/s of storage bandwidth (plus 200 to 400 GB/s of ScaleOut network bandwidth). Using typical Prefill throughput for DeepSeek and Qwen3-Coder as examples, 20 GB/s is sufficient for cross-machine KVCache transmission in both cases. As high-performance networks continue to evolve, we expect KVCache transmission bandwidth to grow further and the cost of cross-machine transmission to keep falling.

| Model | Inference hardware | Prefill throughput (tokens/s) | KVCache per token | KVCache for 64K context | Per-machine Prefill KVCache write bandwidth | Time to read 64K context at 20 GB/s |
|---|---|---|---|---|---|---|
| DeepSeek-V3/R1 | H800 × 8 | 42,664 | 70,272 bytes | 4.19 GB | 2.79 GB/s | 0.21 s |
| Qwen3-Coder-480B | H20 × 8 | 15,000 | 253,952 bytes | 15.14 GB | 3.55 GB/s | 0.76 s |

(The DeepSeek Prefill throughput comes from the RTP-LLM reproduction report; DeepSeek's official disclosure gives 32.2K tokens/s. The Qwen3-Coder Prefill throughput is a rough estimate for an optimized deployment; the measured value in a single-server deployment is about 10,000 tokens/s.)
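The table's figures can be reproduced with a few lines of arithmetic. The sketch below assumes "64K" means 64,000 tokens and "GB" means 2^30 bytes, since those choices match the table's rounding. The per-token sizes are written out from our reading of the public model configs (DeepSeek-V3 MLA: 61 layers × 576 cached dims; Qwen3-Coder-480B GQA: 62 layers × 8 KV heads × 128 dims × K and V), all in 2-byte precision. The extra "re-Prefill" column is our own addition: it divides the context length by the table's average Prefill throughput, ignoring attention's superlinear cost, to compare recomputation against a remote read.

```python
# Reproduce the sizing table from per-token KVCache size and Prefill throughput.
# Assumptions: "64K" = 64,000 tokens and "GB" = 2**30 bytes.
GIB = 2**30
CONTEXT_TOKENS = 64_000
LINK_GB_PER_S = 20  # typical cross-machine KVCache bandwidth, GB/s

MODELS = {
    # DeepSeek-V3/R1 (MLA): 61 layers x (512 latent + 64 RoPE dims) x 2 bytes
    "DeepSeek-V3/R1": (61 * (512 + 64) * 2, 42_664),
    # Qwen3-Coder-480B (GQA): 62 layers x 8 KV heads x 128 dims x (K, V) x 2 bytes
    "Qwen3-Coder-480B": (62 * 8 * 128 * 2 * 2, 15_000),
}


def sizing(bytes_per_token, prefill_tps):
    """Return (write GB/s, 64K-context GB, remote read s, re-Prefill s)."""
    write_gb_s = bytes_per_token * prefill_tps / GIB
    context_gb = bytes_per_token * CONTEXT_TOKENS / GIB
    read_s = context_gb / LINK_GB_PER_S
    reprefill_s = CONTEXT_TOKENS / prefill_tps  # linear approximation
    return write_gb_s, context_gb, read_s, reprefill_s


for name, (bpt, tps) in MODELS.items():
    w, c, r, p = sizing(bpt, tps)
    print(f"{name}: {bpt} B/token, write {w:.2f} GB/s, "
          f"64K context {c:.2f} GB, read {r:.2f} s, re-Prefill {p:.2f} s")
```

For DeepSeek-V3/R1, recomputing a 64K context at the table's throughput takes roughly 1.5 s, about seven times the 0.21 s needed to pull it over a 20 GB/s link.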

As a result, external KVCache storage is moving from being bound to the inference engine toward global pooling:

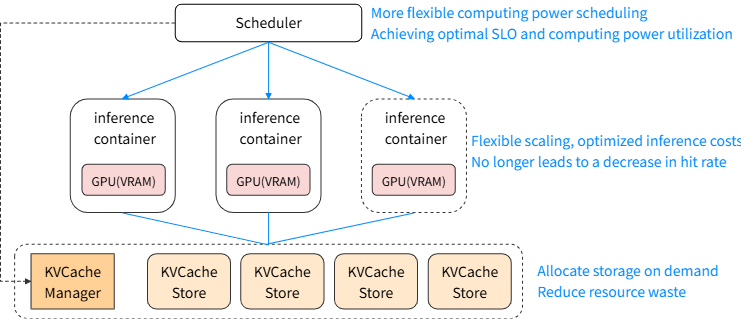

Combined with high-performance networks and inference-engine optimizations such as HiCache, global pooling greatly reduces the penalty when KVCache misses in local VRAM (pulling it from a remote node costs far less than re-running Prefill) and relaxes the affinity constraint. This makes it easier for the scheduler to achieve a high KVCache hit rate, so it can focus on compute scheduling to achieve higher compute utilization and better SLO attainment.

Moreover, once external KVCache storage is globally pooled, we can further separate it from the inference container, which brings the following benefits:

Of course, this separation does not mean abandoning the storage resources on inference host nodes. In fact, pooling these resources uniformly makes full use of them and also improves the overall stability of the storage system.

Once KVCache is globally pooled, many existing storage systems, such as file storage, KV storage, memory pools, and object storage, can serve as external KVCache storage, each with its own interface. However, quickly building global pooled KVCache storage on top of these existing interfaces runs into several problems.

Compared with traditional KV storage, LLM KVCache has distinctive business characteristics and storage patterns:

Current storage system interfaces are designed for general scenarios and erase LLM semantics and characteristics, which makes it hard for the underlying storage system to optimize for KVCache. A typical problem: to let multiple processes write at the same time, a single Block is split into multiple keys, but those keys are evicted at different times. After some of the keys are evicted, the remaining ones still occupy storage capacity but can no longer produce hits, wasting storage resources.

Some storage systems have insufficient metadata performance and struggle to complete a large number of metadata queries in a short time:

Taking block_size = 64 as an example, a 64K context requires metadata queries for 1K blocks, both to check whether they exist and to read related information. Moreover, transmitting the KVCache for 64K tokens takes less than 1 second, so the latency of these metadata queries is all the more noticeable. At the same time, the KVCache of a single block is on the order of a few MB, so 100 TB of storage means managing blocks on the order of 100 million. Most storage systems designed for HPC scenarios focus on optimizing read and write bandwidth and offer limited support for large-scale metadata queries over so many blocks.
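To make the metadata load concrete, here is a minimal sketch of how an engine might derive one lookup key per block and match a cached prefix. The chained SHA-256 over comma-joined token IDs is an illustrative assumption, not the key scheme of any particular engine or of Tair KVCM; the point is that each key depends on all preceding blocks, so a 64,000-token request yields 1,000 keys and a match stops at the first miss.

```python
import hashlib

BLOCK_SIZE = 64  # tokens per block, matching the block_size = 64 example


def block_keys(tokens, block_size=BLOCK_SIZE):
    """Return one chained hash per full block of tokens.

    Key i covers blocks 0..i, so identical token blocks that follow
    different prefixes get different keys. A trailing partial block
    is not keyed.
    """
    keys, parent = [], b""
    full = len(tokens) - len(tokens) % block_size
    for start in range(0, full, block_size):
        h = hashlib.sha256(parent)
        h.update(",".join(map(str, tokens[start:start + block_size])).encode())
        parent = h.digest()
        keys.append(parent.hex())
    return keys


def prefix_match(keys, index):
    """Count leading blocks found in `index`, stopping at the first miss."""
    matched = 0
    for key in keys:
        if key not in index:
            break
        matched += 1
    return matched


keys = block_keys(list(range(64_000)))
print(len(keys))  # metadata lookups for one 64K request: prints 1000
```

Because every key encodes its whole prefix, a metadata service can answer "how many leading blocks are cached" without the storage layer knowing anything about tokens.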

Retrofitting an existing storage system or building a new one requires a large amount of work:

High-performance distributed storage systems are inherently complex. In addition, existing workloads demand general-purpose interfaces, and storage systems must meet strict stability and reliability requirements. Whether LLM-related capabilities are added to an existing storage system or a new storage system is developed for LLM scenarios, substantial R&D resources and time are required. And if multiple storage systems are used, the work must be repeated for each of them.

Therefore, existing engineering resources should be reused as much as possible to meet the urgent storage needs of KVCache quickly. At the same time, this approach clarifies the actual requirements and load patterns for a dedicated LLM storage system, so that such a system can be designed and developed with more complete information and enough time, and can better meet KVCache requirements.

Given these problems, building a metadata management layer focused on LLM semantic adaptation and storage management is a practical and feasible path:

This layer does not replace the underlying storage. Instead, it acts as a metadata manager: upward, it exposes native interfaces that follow LLM semantics to the inference engine; downward, it maps operations to efficient calls on the underlying storage and passes refined, translated storage characteristics to it. Adding this abstraction layer balances speed of delivery and long-term evolution. In the short term, existing storage systems can be used right away to support large-capacity pooled KVCache storage. In the medium to long term, the layer clearly defines the optimization goals and interface boundaries of dedicated storage systems, enabling a smooth evolution.

With support from inference engines and improvements in KVCache storage systems, pooled KVCache has begun to move from small-scale experiments to large-scale deployment. Once PB-scale back-end storage serves KVCache for multiple Agent models, the need for various enterprise-level management capabilities arises.

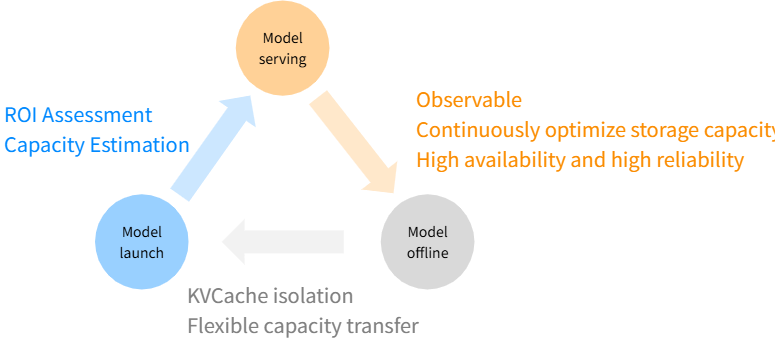

Before enabling external KVCache storage for an inference service, it is necessary to assess the KVCache capacity requirements and the benefits and ROI of external KVCache storage, and to identify the services that would gain the most among the many existing inference services.

During operation, observability is needed to detect changes in system performance promptly and to continuously optimize storage capacity configuration based on actual online demand. High availability and high reliability solutions are also needed to keep metadata service or storage system failures from causing inference service anomalies.

For important model services, sufficient KVCache resources must be strictly guaranteed. For long-tail model services, storage resources should be shared as much as possible to reduce the effort of capacity configuration.

When switching model versions, the KVCache of the old and new models must be isolated, and the KVCache capacity split between them should be adjusted flexibly as inference traffic shifts between the versions.

As deployments grow, such requirements keep emerging and place higher demands on the enterprise-level capabilities of the KVCache management system.

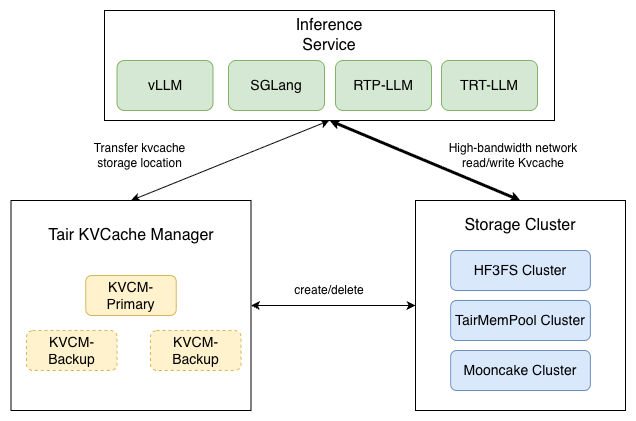

Against this background, Alibaba Cloud Tair and Alibaba Holding's AI Engine & Tech Infra and Reliability Engineering team jointly built Tair KVCache Manager (hereinafter Tair KVCM), an enterprise-level management service for large-scale KVCache. The system addresses the problems above with the following design:

The resulting implementation significantly reduces GPU compute consumption and improves the quality of online inference services while keeping costs low.

The rest of this article describes the system's basic concepts and architecture design in detail.

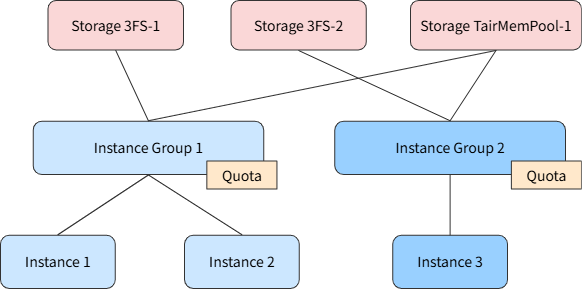

1. Storage: A Storage system

2. Instance Group

Common uses:

3. Instance: a KVCache Instance

Through this abstraction, storage, quota, and instance are decoupled, which allows flexible control of KVCache storage mode and capacity and makes it easy to manage a large number of KVCache instances uniformly. Capacity quotas are configured on the Instance Group, so storage capacity does not need to be configured for each Instance separately. This simplifies onboarding on the service side and makes it easy to transfer capacity during model version switches.
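The quota relationship can be sketched with two small classes. This is a hypothetical model, not Tair KVCM's schema: the field names (`quota_bytes`, `storages`, `used_bytes`) and the `try_reserve`/`release` methods are illustrative. It shows two Instances, for example two versions of one model, drawing on a single group quota instead of each carrying its own.

```python
from dataclasses import dataclass


@dataclass
class InstanceGroup:
    """Hypothetical group model: quota and usable storages live here."""
    name: str
    quota_bytes: int    # capacity shared by every Instance in the group
    storages: list      # names of Storage backends the group may use
    used_bytes: int = 0


@dataclass
class Instance:
    """A KVCache Instance (e.g. one model version) with no quota of its own."""
    name: str
    group: InstanceGroup

    def try_reserve(self, nbytes: int) -> bool:
        """Admit a write only if the group's shared quota still allows it."""
        g = self.group
        if g.used_bytes + nbytes > g.quota_bytes:
            return False
        g.used_bytes += nbytes
        return True

    def release(self, nbytes: int) -> None:
        """Return capacity to the group, e.g. after blocks are evicted."""
        self.group.used_bytes = max(0, self.group.used_bytes - nbytes)


group = InstanceGroup("qwen3-coder", quota_bytes=100, storages=["pool-a"])
v1, v2 = Instance("v1", group), Instance("v2", group)
```

As traffic shifts from `v1` to `v2`, blocks evicted from `v1` free group capacity that `v2` can use immediately, with no per-instance quota to reconfigure. Key-space isolation between the two versions is not modeled here.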

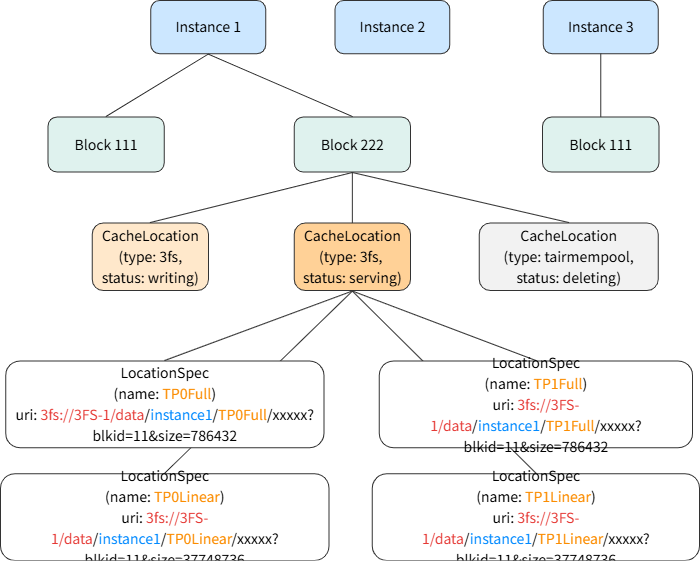

1. Block: a sequence of tokens of fixed length.

2. CacheLocation: A storage location for a single block.

Status: writing->serving->deleting

3. LocationSpec: part of the data of a single CacheLocation

Storage locations are organized in a URI-like format, but different expressions are allowed:

This abstraction not only satisfies the inference engine's KVCache query requirements (such as composite matching for hybrid attention), but also preserves LLM-specific business characteristics and semantics inside Tair KVCM: prefix relationships between blocks, the association of a block with its locations and multiple specs, and so on. Because Tair KVCM perceives and retains these characteristics, it can apply further optimizations for KVCache storage: for example, classifying requests more finely by prefix and adjusting eviction policies accordingly; using prefix dependencies to avoid storing suffixes that can never be hit; and, for linear attention, keeping only the blocks most worth retaining, which optimizes storage capacity without sacrificing hit rate.

From the perspective of the underlying storage, access becomes simpler (it need not understand inference concepts, which stay fully transparent to it), and refined, translated storage characteristics (such as lifecycle relationships between storage objects) are available, leaving ample room for further optimization and for the development of dedicated storage systems.

Tair KVCM is deployed in centralized mode. Because it manages only KVCache metadata and is written in C++, scaling up is enough to meet the needs of a single inference cluster. In addition, the Instance Group abstraction makes it easy to split groups that share the same storage across separate deployments, scaling out horizontally to serve different inference clusters. Centralized mode greatly simplifies deployment and operations. It also keeps KVCM logic out of the inference container, avoiding potential problems such as an explosion in the number of connections to the metadata storage backend (for example, Valkey).

Furthermore, by leveraging components such as the 3FS Master, Tair KVCM can interact with the inference engine and the backend storage using only TCP, without depending heavily on an RDMA environment. Where only GPU machines sit inside the RDMA environment, KVCM can be deployed on other machines outside it, which mitigates the impact of the high failure rate of GPU machines.

After obtaining the KVCache storage location from Tair KVCM, the Connector in the inference engine reads and writes the back-end storage directly. The KVCache data stream bypasses Tair KVCM entirely, which reduces KVCache read/write latency and the bandwidth required of Tair KVCM.

To facilitate isolation, Tair KVCM separates the metadata plane from the control plane. Some of the key interfaces are listed below.

● Management Interface:

● Metadata Interface:

Major metadata-related APIs:

API parameter design:

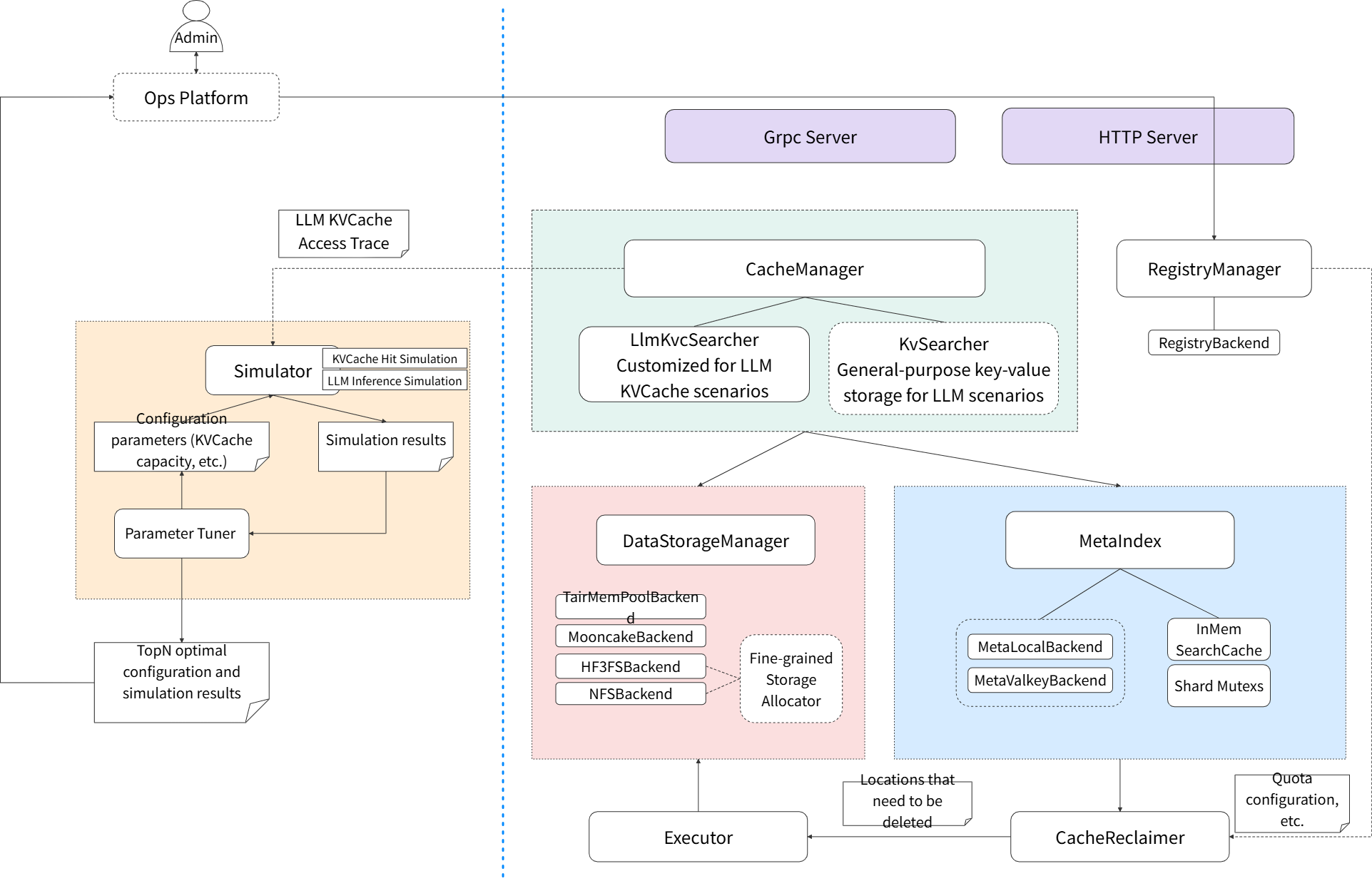

Access Layer (Server)

Read and write module (CacheManager)

1. Multiple matching modes. The inference engine can use several matching modes when reading KVCache metadata:

2. Two-phase write. When the inference engine writes KVCache, it uses the following two-phase write mechanism:

3. High-availability read and write support:
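The details of the two phases are not spelled out above, so the following is only a sketch of one plausible reading, built on the CacheLocation status flow writing->serving->deleting. Phase 1 reserves a location in the writing state and hands its URI to the engine, which writes the data to the backend itself; phase 2 flips the entry to serving so readers never see a half-written block. `LocationTable`, `start_write`, `finish_write`, `lookup`, and the `mem://pool/...` URIs are hypothetical names, and allowing a failed write to go directly from writing to deleting is our assumption.

```python
from enum import Enum


class Status(Enum):
    WRITING = "writing"
    SERVING = "serving"
    DELETING = "deleting"


# Transitions follow writing -> serving -> deleting. Assumption: a failed
# write may also go straight from writing to deleting.
ALLOWED = {
    Status.WRITING: {Status.SERVING, Status.DELETING},
    Status.SERVING: {Status.DELETING},
    Status.DELETING: set(),
}


class LocationTable:
    """Toy metadata table: block key -> (status, location URI)."""

    def __init__(self):
        self._rows = {}

    def _move(self, key, new):
        status, uri = self._rows[key]
        if new not in ALLOWED[status]:
            raise ValueError(f"{status.value} -> {new.value} not allowed")
        self._rows[key] = (new, uri)

    def start_write(self, keys, alloc_uri):
        """Phase 1: reserve locations for blocks that are not yet stored."""
        todo = {}
        for key in keys:
            if key not in self._rows:
                uri = alloc_uri(key)
                self._rows[key] = (Status.WRITING, uri)
                todo[key] = uri
        return todo  # the engine's Connector writes data to these URIs itself

    def finish_write(self, keys, ok=True):
        """Phase 2: publish successful writes or mark failed ones for deletion."""
        for key in keys:
            self._move(key, Status.SERVING if ok else Status.DELETING)

    def lookup(self, key):
        """Readers only see blocks whose data is fully written."""
        row = self._rows.get(key)
        return row[1] if row is not None and row[0] is Status.SERVING else None
```

Returning only the not-yet-stored keys from phase 1 also lets concurrent writers of a shared prefix skip blocks someone else already holds.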

Storage management (DataStorage)

1. Compatible with multiple storage systems

2. DataStorageManager for unified management of multiple storage systems

3. Other features

4. TairMemPool: TairMemPool is a high-performance KVCache memory pool solution jointly built by the Alibaba Cloud Tair team and the Server R&D Custom Computing and Chip Systems team. Through hardware-software co-optimization, it provides unified addressing and global access across the memory of multiple nodes, supports multiple media and protocols, and includes transfer optimizations dedicated to KVCache. In multi-NIC environments, network bandwidth utilization exceeds 90%. It also offers enterprise-level high-availability features, providing highly reliable storage for the Tair KVCache system.
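Since storage locations use a URI-like format, one natural way to manage several storage systems uniformly is to dispatch on the URI scheme. The sketch below assumes that design; the `mempool` and `dfs` schemes, `DictBackend`, and the method names are hypothetical. Because the KVCache data path bypasses KVCM, the manager here only resolves locations for management operations such as deletion.

```python
from urllib.parse import urlparse


class DictBackend:
    """In-memory stand-in for a real storage adapter."""

    def __init__(self):
        self.objects = {}

    def delete(self, path):
        """Remove an object; return True if it existed."""
        return self.objects.pop(path, None) is not None


class DataStorageManager:
    """Routes a URI-style location to the backend registered for its scheme."""

    def __init__(self):
        self._backends = {}

    def register(self, scheme, backend):
        self._backends[scheme] = backend

    def _resolve(self, uri):
        parsed = urlparse(uri)
        backend = self._backends.get(parsed.scheme)
        if backend is None:
            raise KeyError(f"no backend for scheme {parsed.scheme!r}")
        # netloc (e.g. a node or cluster) plus path identifies the object
        return backend, parsed.netloc + parsed.path

    def delete(self, uri):
        """Delete the object at a location, e.g. on behalf of the reclaimer."""
        backend, path = self._resolve(uri)
        return backend.delete(path)
```

Adding a storage system then means registering one more adapter, while callers keep passing opaque location strings.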

Index management (MetaIndex)

1. Use a KV system to store metadata

2. Interface Design

Supports a ReadModifyWrite interface that performs thread-safe updates with customizable modifiers.

Supports Scan and RandomSample interfaces, as well as interfaces for obtaining storage and cache capacity.

3. Other features

Uses an LRU cache as a lookup cache in front of the metadata store to reduce query I/O and latency in high-hit-rate scenarios.

Supports configurable sharded locks and batch operations for high-performance, high-concurrency service.
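A ReadModifyWrite interface combined with sharded locks can be sketched as follows. This is an in-memory stand-in, not the KVCM C++ implementation or a real KV backend such as Valkey; the class and method names are hypothetical and the LRU lookup cache is omitted for brevity. Each key hashes to a fixed shard, and the caller's modifier runs while that shard's lock is held, so concurrent updates to one key never interleave while updates to different shards proceed in parallel.

```python
import threading
from hashlib import blake2b


class ShardedMetaStore:
    """In-memory stand-in for a KV metadata backend with per-shard locks."""

    def __init__(self, num_shards=16):
        self._shards = [dict() for _ in range(num_shards)]
        self._locks = [threading.Lock() for _ in range(num_shards)]

    def _shard(self, key):
        # Stable hash, so a key always maps to the same shard and lock.
        digest = blake2b(key.encode(), digest_size=8).digest()
        return int.from_bytes(digest, "little") % len(self._shards)

    def read_modify_write(self, key, modifier):
        """Atomically apply modifier(old or None); a None result deletes the key."""
        i = self._shard(key)
        with self._locks[i]:
            new = modifier(self._shards[i].get(key))
            if new is None:
                self._shards[i].pop(key, None)
            else:
                self._shards[i][key] = new
            return new

    def batch_get(self, keys):
        """Return {key: value} for the keys that exist."""
        out = {}
        for key in keys:
            i = self._shard(key)
            with self._locks[i]:
                if key in self._shards[i]:
                    out[key] = self._shards[i][key]
        return out
```

A modifier might, for example, add a new CacheLocation to a block's entry or bump its access time; keeping that logic in a callback lets the store stay generic.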

Capacity management (Reclaimer & Executor)

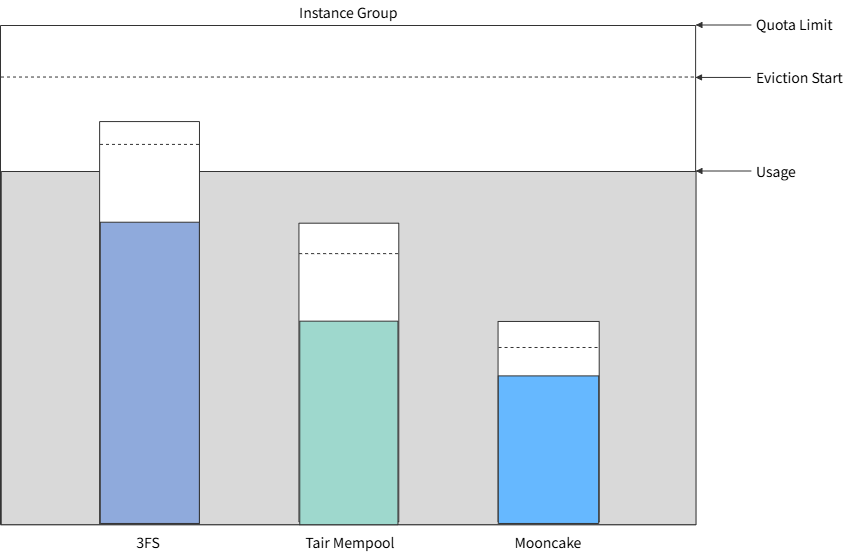

Capacity management in KVCM works along two dimensions:

● Instance Group:

Quota configuration:

Water level configuration:

● Storage back-end capacity:

In terms of engineering, KVCM executes deletions asynchronously, for example by placing deletion tasks in a background thread pool, so that deletion throughput can scale to larger deployments. It supports common eviction modes such as TTL, LRU, and LFU to fit different capacity management needs; supports updating water levels and quotas at runtime; and, together with the Optimizer module, supports dynamic tuning of eviction parameters per Instance. More precise prefix-based eviction is planned, for example evicting suffix blocks before their parent blocks, and correlated prefix/suffix eviction across Linear and Full attention under hybrid attention.
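The planned "suffix before parent" eviction can be combined with water levels as in the sketch below. Two assumptions are ours: reclamation starts when usage exceeds a high water level and stops once it falls to a low one, and LRU order is applied only among leaf blocks. Evicting leaves first matters because a parent evicted before its suffix would leave the suffix unreachable by prefix matching, occupying space without producing hits. `PrefixCache` and its fields are hypothetical; parents must be added before their children.

```python
import heapq


class PrefixCache:
    """Blocks form a prefix tree; only leaves (suffix blocks) are evictable."""

    def __init__(self, quota, high=0.9, low=0.7):
        self.quota, self.high, self.low = quota, high, low
        self.parent, self.size, self.atime, self.children = {}, {}, {}, {}
        self.used = 0

    def add(self, key, parent, size, now):
        """Register a block; its parent (if any) must already be present."""
        self.parent[key], self.size[key], self.atime[key] = parent, size, now
        self.children.setdefault(key, set())
        if parent is not None:
            self.children[parent].add(key)
        self.used += size

    def reclaim(self):
        """Evict LRU leaves once usage crosses the high water level."""
        if self.used <= self.high * self.quota:
            return []
        heap = [(self.atime[k], k) for k, ch in self.children.items() if not ch]
        heapq.heapify(heap)
        evicted = []
        while heap and self.used > self.low * self.quota:
            _, key = heapq.heappop(heap)
            parent = self.parent.pop(key)
            self.used -= self.size.pop(key)
            del self.atime[key], self.children[key]
            evicted.append(key)
            if parent is not None:
                self.children[parent].discard(key)
                if not self.children[parent]:  # parent just became a leaf
                    heapq.heappush(heap, (self.atime[parent], parent))
        return evicted
```

In a real deployment the deletion of each evicted block would be handed to background executors, as described above, rather than done inline.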

The Optimizer module is a cache-strategy optimization and analysis tool designed specifically for LLM inference systems. It efficiently simulates and replays real trace data to analyze KVCache hit rates and storage-capacity consumption patterns, and uses the results to guide the Manager's capacity management.

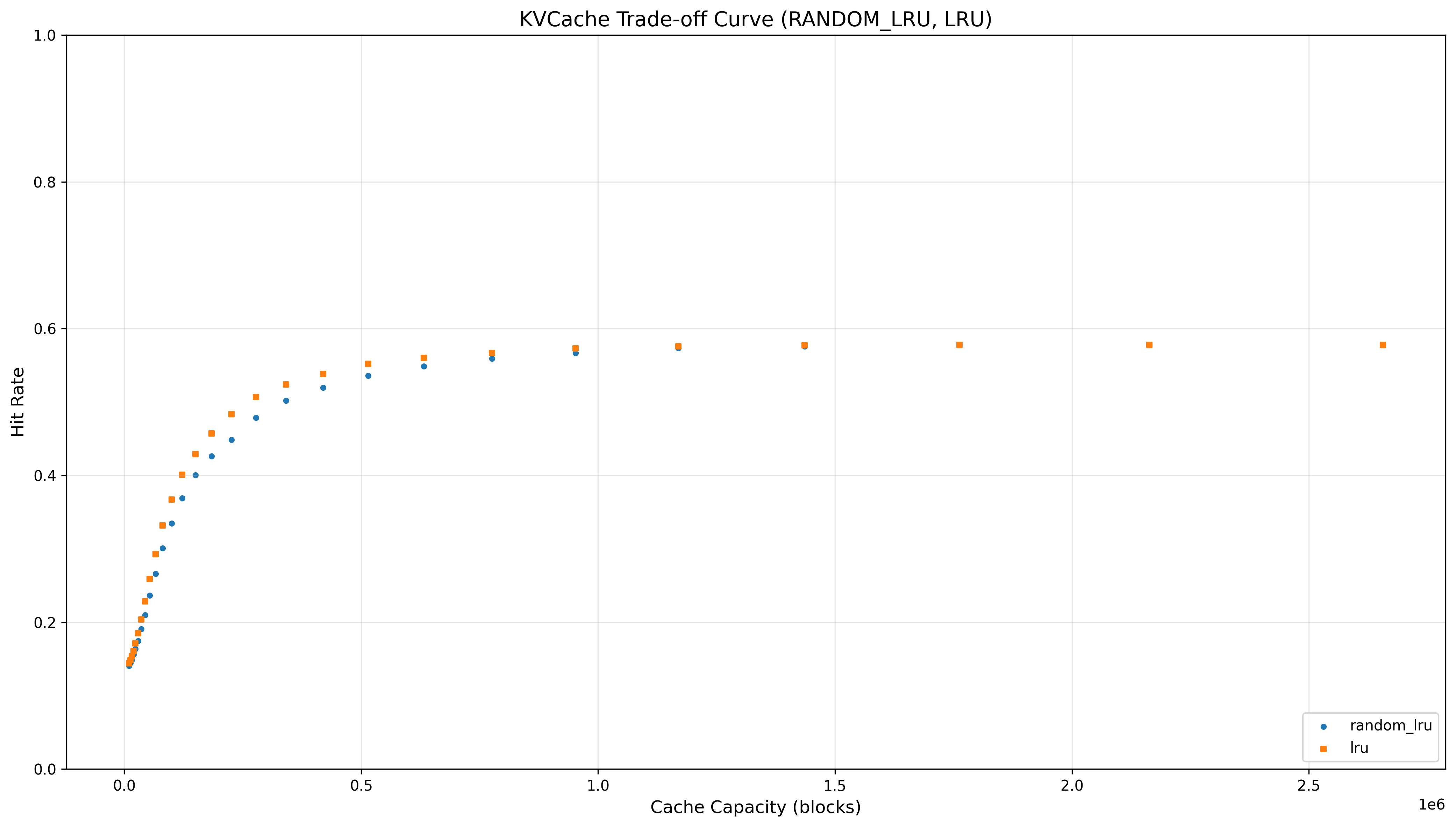

This module builds a prefix-matching index based on a Radix Tree, parses and converts real access traces in multiple formats (including Tair KVCM events), and accurately simulates read/write access patterns under different eviction policies such as LRU and Random-LRU. Beyond computing how different capacity configurations affect the cache hit rate, it analyzes in depth key metrics such as the temporal locality of accesses and the access-frequency distribution of each block. The module supports parameter sweeps and replay loops to automatically find the storage configuration with the best trade-off between storage cost and performance. It also has built-in flexible cache-tier configuration and a unified eviction-policy interface, which supports fine-grained analysis of a single cache tier and reserves extension points for the joint optimization of multi-tier cache architectures.
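The core replay loop can be illustrated with a much smaller simulator. This sketch uses a flat `OrderedDict` keyed by chained block keys instead of a Radix Tree, plain per-block LRU, and block-level hit rate; `replay` and `sweep` are hypothetical names, and the trace format (one list of block keys per request) is an assumption.

```python
from collections import OrderedDict


def replay(trace, capacity_blocks):
    """Replay requests (lists of chained block keys) through an LRU cache.

    A request hits on its longest cached prefix; all of its blocks are then
    written or refreshed (as after Prefill), evicting the least recently
    used blocks beyond capacity. Returns the block-level hit rate.
    """
    cache = OrderedDict()
    hits = total = 0
    for keys in trace:
        matched = 0
        for key in keys:
            if key not in cache:
                break
            matched += 1
        hits += matched
        total += len(keys)
        for key in keys:
            cache[key] = True
            cache.move_to_end(key)
            if len(cache) > capacity_blocks:
                cache.popitem(last=False)
    return hits / total if total else 0.0


def sweep(trace, capacities):
    """Hit rate for each candidate capacity, for picking a configuration."""
    return {cap: replay(trace, cap) for cap in capacities}


# A growing multi-turn session: each turn extends the previous context.
session = [["a", "b"], ["a", "b", "c"], ["a", "b", "c", "d"]]
print(sweep(session, [2, 10]))
```

With too little capacity, plain LRU evicts the oldest blocks, which are the shared prefix, so later turns miss entirely; this is one reason prefix-aware eviction is worth simulating alongside plain LRU.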

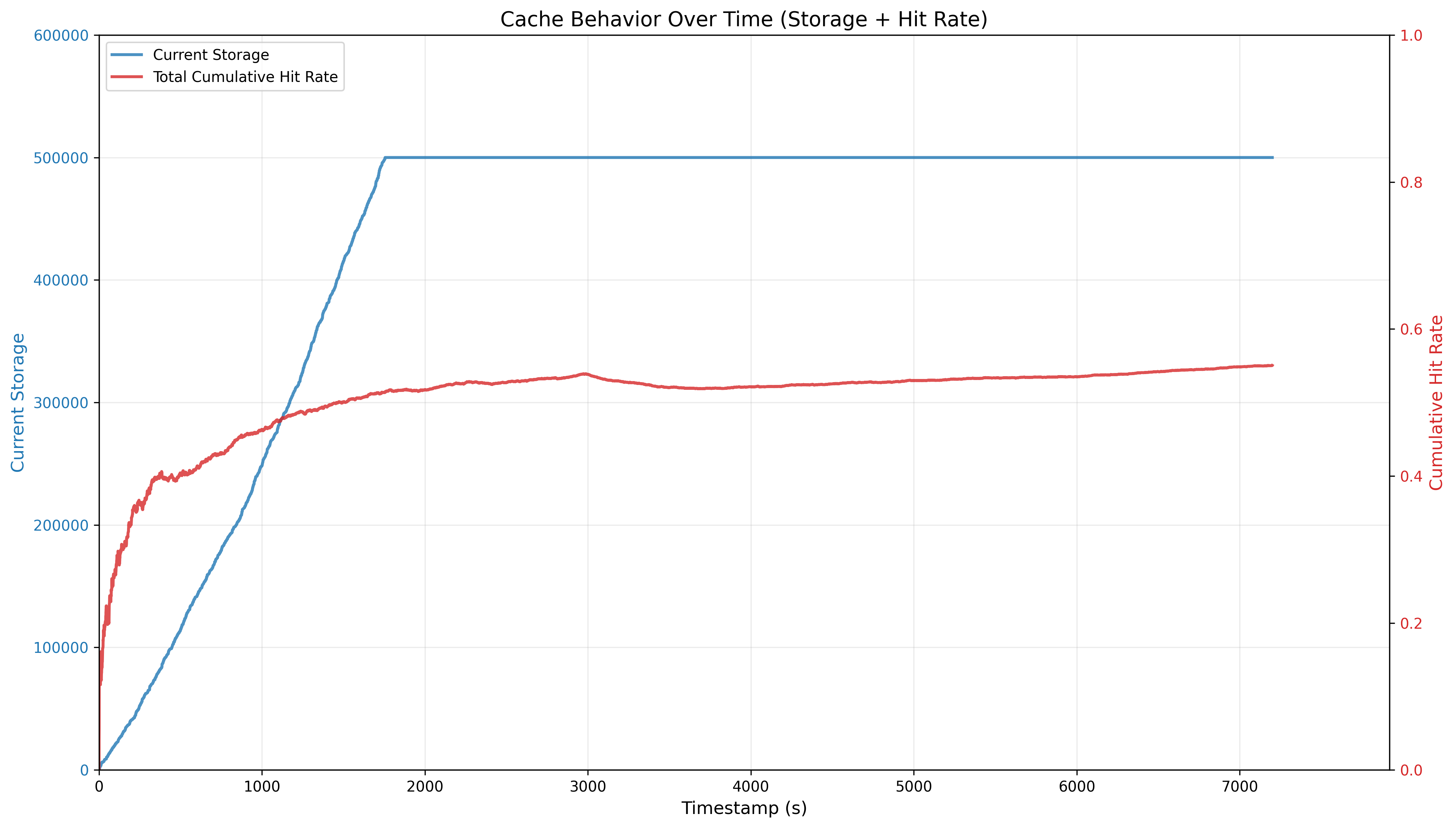

With a unified trace data structure and efficient trace processing, the Optimizer module can accurately reproduce cache behavior in real business scenarios and deliver stable, fast performance analysis. As shown in the figure, the dynamic analysis in Figure 1 tracks the joint changes in cache capacity usage and hit rate in real time; Figure 2 shows the KVCache hit rate of different eviction strategies under different cache capacity configurations, which is used to compare eviction strategies and guide the choice of the optimal capacity configuration.

Furthermore, thanks to its flexible architecture, the Optimizer module can integrate deeply with a GPU inference performance model (compute profile). Based on the cache hit rate, it can quantitatively model the reduction in Prefill computation and map the latency improvement (such as lower TTFT) and throughput improvement brought by KVCache onto GPU resource utilization. It then turns these into concrete optimization suggestions, such as how many GPU nodes can be removed and how much operating cost can be saved, providing a data-driven basis for resource scheduling and cost optimization in Agent inference systems. Details will be covered in subsequent articles.
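As a first-order illustration of mapping hit rate to GPU nodes, the sketch below assumes Prefill cost is linear in uncached tokens. That assumption ignores attention's superlinear cost, the work of attending over cached context, and KVCache load time, so it is only a rough bound and not the performance model described above. `prefill_nodes` is a hypothetical helper; the 42,664 tokens/s figure is the DeepSeek throughput from the table, and the demand value is made up for illustration.

```python
import math


def prefill_nodes(demand_tps, hit_rate, node_prefill_tps):
    """Estimate Prefill nodes needed when only uncached tokens are computed.

    demand_tps: total prompt tokens per second arriving at the service
    hit_rate: fraction of prompt tokens served from KVCache (0..1)
    node_prefill_tps: Prefill throughput of one node, in tokens/s
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be in [0, 1]")
    uncached_tps = demand_tps * (1.0 - hit_rate)
    return math.ceil(uncached_tps / node_prefill_tps)


# Hypothetical demand of 1M prompt tokens/s on 8 x H800 Prefill nodes.
for rate in (0.0, 0.6):
    print(rate, prefill_nodes(1_000_000, rate, 42_664))
```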

Support for more comprehensive LLM caching scenarios

As multimodal model capabilities improve, demand for multimodal, multi-turn sessions is also growing, so the multimodal support of the LLM KVCache interface needs further improvement.

For context-independent KVCache scenarios such as encoder cache and VLCache, a traditional KV-semantic interface will be added.

More diverse deployment environments also call for a lot of work:

1. Offline private environments: with self-owned hosts in offline environments, KVCM has optimization targets beyond hit rate, and more targeted eviction algorithms need to be designed for such scenarios.

2. Super Pod: in this type of system, the bandwidth for GPUs to access the memory pool over the ScaleUp network exceeds the bandwidth for accessing CPU memory over PCIe. It is therefore natural to pool and manage the memory used for KVCache uniformly. We will help implement KVCache storage management within super nodes to make full use of their performance advantages.

Improve support for mainstream inference engines and back-end storage

Through cooperation with inference engine communities, more inference engines will gain native support, and transmission performance will be further optimized.

Adapt to more backend storage systems to meet KVCache storage requirements in a wider range of scenarios.

Further enhance simulation and cache analysis capabilities and optimize storage algorithms

Enhance inference co-simulation capabilities, and optimize eviction algorithms to improve hit rates and reduce inference costs.

In addition, most existing tiering algorithms rely on traditional data characteristics such as hot versus cold data, which do not fully fit the data tiering needs of LLM KVCache. Data tiering algorithms that take LLM access characteristics into account and better suit this scenario need to be explored.

Continuously enhance enterprise-class capabilities

To address the pain points exposed in large-scale deployments, we will keep enhancing enterprise-level capabilities to provide more comprehensive management.
