By Mocheng

Indexes are basic components of databases. As early as the 1970s, SystemR has employed indexes to support multi-dimensional queries. In a standalone database, indexes can be divided into B-tree indexes, hash indexes, full-text indexes, and spatial indexes according to their usage and data structures. Generally, each table contains a primary key index, and any indexes other than the primary index are collectively referred to as secondary indexes.

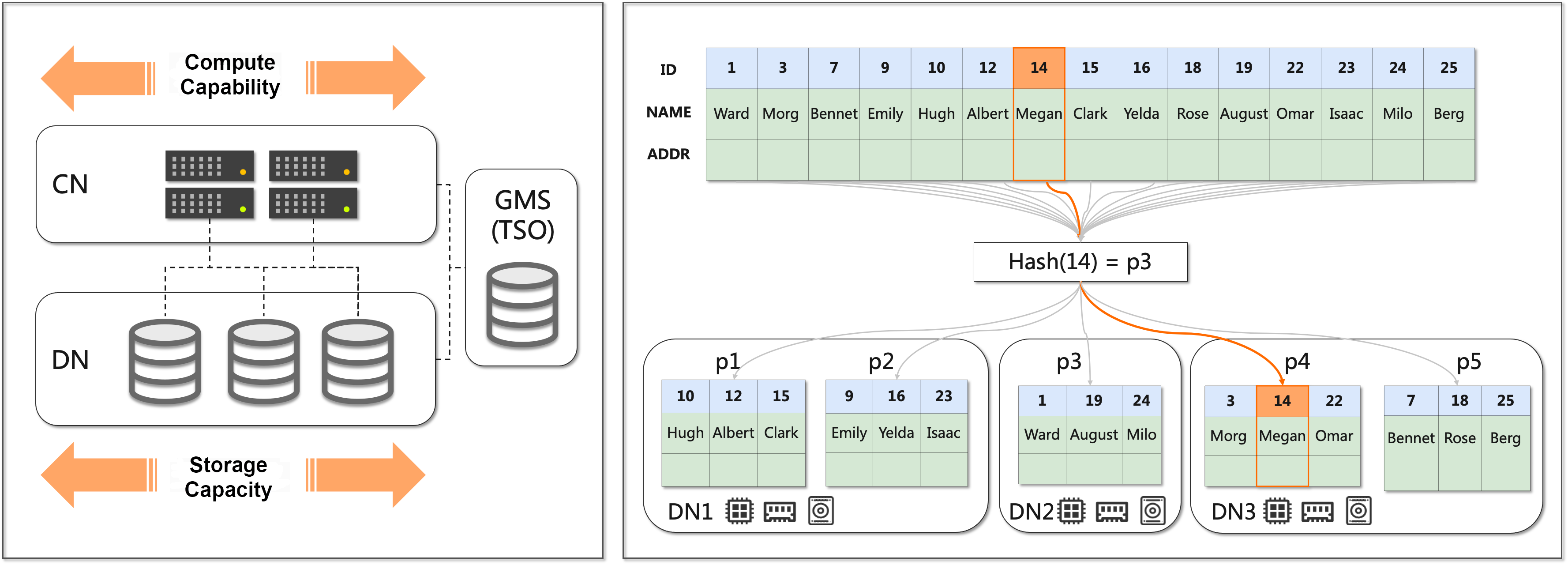

Distributed databases that adopt a shared-nothing and storage-compute separation architecture support horizontal expansion capabilities. Through data partitioning and stateless compute nodes, compute and storage resources can be independently scaled, so multiple distributed databases utilize this design, such as Spanner, CockroachDB, and YugabyteDB.

Architecture

The shared-nothing architecture introduces the concept of partitioning. Data needs to be partitioned based on a fixed partition key. As a result, queries that contain a partition key can quickly locate a specific partition, while other queries require full partition scans. This scenario is similar to queries in a standalone database, that is, queries by the primary key can quickly locate the page of the data, while queries by a non-primary key require full table scans.

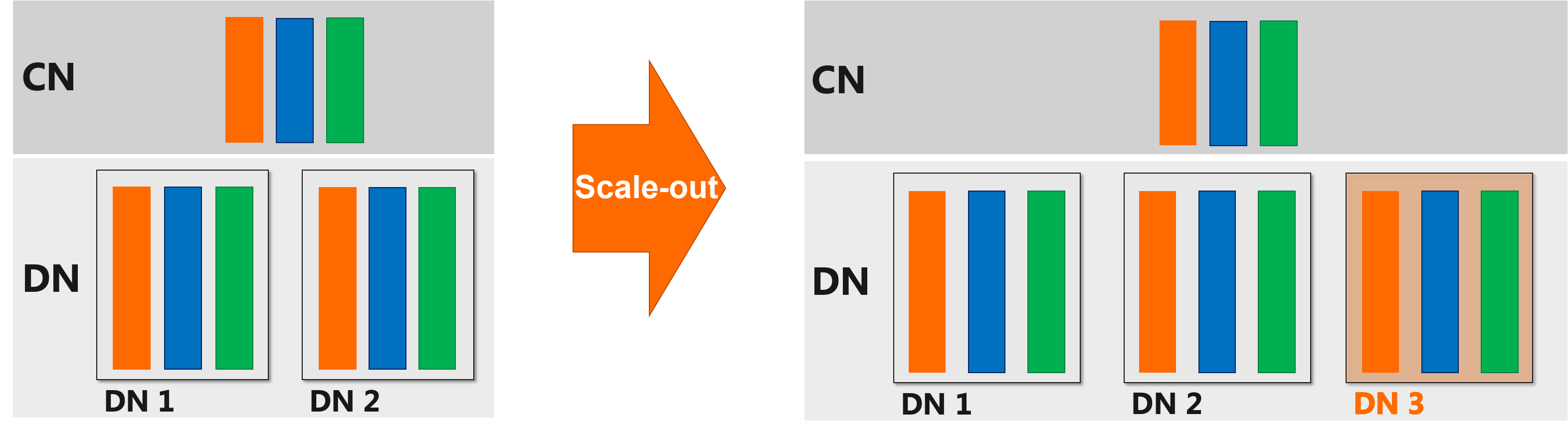

Unlike in a standalone database, scanning all partitions in a distributed database may increase the number of slow queries, reduce system throughput, and cause the system to lose the linear scaling capability. For example:

horizontal-expansion

Before scaling out: There are two data nodes (DNs) and two data partitions. Assume that a single DN can handle a physical QPS of 3 and the overall physical QPS is 6, with each query requiring a full partition scan, so the logical QPS is 6/2= 3

After scaling out: There are three data nodes and three data partitions. The overall physical QPS becomes 9, still with each query requiring a full partition scan, so the logical QPS becomes 9/3= 3. Machine costs have increased by 50%, but query performance has not been improved at all.

A standalone database uses a secondary index to avoid full table scans. Specifically, a secondary index chooses a non-primary key column as the key, and the primary key value is stored as the value (it may also be a reference to row records, but the specific implementation does not affect the overall idea). Queries with secondary indexes first locate the page via the index column of the secondary index, read the primary key values, and then return to the primary key index to query the entire row (this step is known as the index lookup). Essentially, the secondary index avoids full table scans by redundantly storing a copy of the data, which embodies the standard system optimization approach of reducing data query time at the cost of storage space.

To eliminate full partition scans in a distributed database, you can also use a similar idea of redundant index data, and the index uses a different partition key from that of the base table. During the query, locate a partition based on the partition key of the index, then search the partition key and primary key of the base table from the partition, and look up the table to obtain complete data. In this process, only a fixed number of partitions need to be scanned (for example, no more than two partitions for point queries).

Such an index that differs from partitions in the base table is referred to as a global secondary index (GSI), often simply called a global index. In contrast, an index that shares the same partition as the base table is known as a local secondary index (LSI).

As mentioned earlier, full partition scans can render a system non-scalable, so what if users could strictly ensure that all SQL statements contain the partition key?

In this case, a global index is not necessary. However, the complexity of practical scenarios makes this a rare occurrence.

More common situations are as follows:

For more scenario analysis, see Interpretation of PolarDB-X Data Distribution (3): TPC-C and Transparent Distribution. The conclusion is that in order to provide a user experience similar to a transparent distribution experience of standalone databases, global indexes are essential.

In standalone databases, indexes are very commonly used components and are highly accepted by users. If a global index can provide a similar user experience as a standalone database index, it can be considered a transparent index experience. The following are four key features that affect index use experience from the perspective of users:

|

Feature |

Indexes in MySQL |

"Opaque" global indexs |

|

Consistency |

Strong consistency |

Final consistency, weak consistency, and XX consistency |

|

Creation method |

Online DDL |

Third-party tools and subscription to BINLOG |

|

Usage |

Automatic selection by the optimizer |

Manual selection |

|

Compatibility with other DDL statements |

Supported |

Not supported |

It is not easy to meet these four features because all read, write, and schema change procedures should be designed accordingly. Related issues range from distributed transactions, CBO (Cost-Based Optimizer) index selection, and how to implement Asynchronous Online Schema Change, to how to deal with columns that include the on update current_timestamp attribute, and how affected rows are compatible with MySQL behavior. At the same time, high performance needs to be ensured.

The following section describes the technical explorations made by PolarDB-X to implement global secondary indexes that are compatible with the MySQL index experience.

For global indexes in an OLTP system, you must first ensure that the data is strongly consistent with the base table, so you need to use distributed transactions, logical multi-writes, and Asynchronous Online Schema Change (AOSC) to achieve this goal.

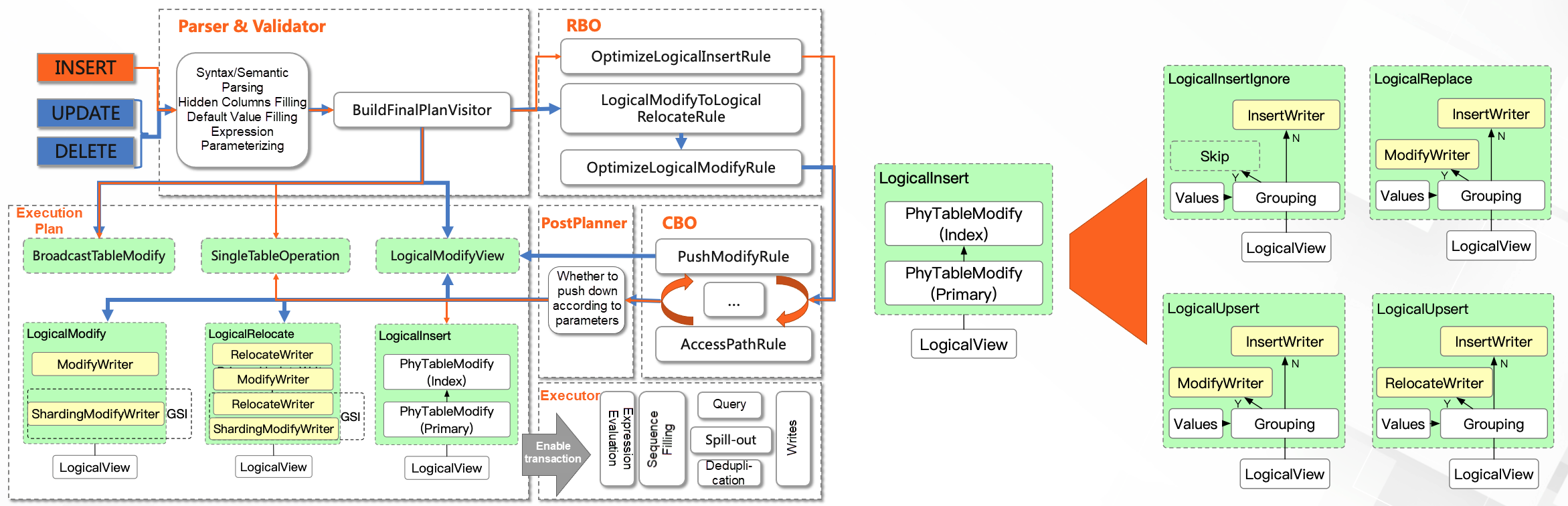

When data is written, the atomic commit of distributed transactions should be ensured because the data in the base table and GSI may be located in different partitions. In addition, due to concurrent writes, write-write conflicts must be handled. For a table without a global index, you can route the DML statement to the partition where the data resides and use the DN to implement concurrency control. However, for a table with GSI, when updating data, you must first read and lock the data to be changed, and then update the base table and indexes based on the primary key. This method of reading before writing is known as logical multi-writes.

logical_dml

It seems that reading data before data is written is not difficult to implement, which can be achieved by just SELECT + UPDATE/DELETE, but the practice is more complicated than expected.

First, the early DML implementation of PolarDB-X 1.0 (DRDS) completely relies on pushdown execution, lacking corresponding logic plans. MySQL has about 13 types of DML syntax, each of which needs to be supported, and the pushdown execution scheme is still retained for scenarios that can be pushed down.

Second, many detailed behaviors of MySQL are not described in the official documents and thus need to be adapted one by one according to the code, such as type conversion, affected_rows, and implicit default values. In addition, to support globally unique indexes, a conflict detection process needs to be added, resulting in four additional methods of executing INSERT statements. The preceding figure shows the execution process of logical multi-writes. For more information, see the source code interpretation.

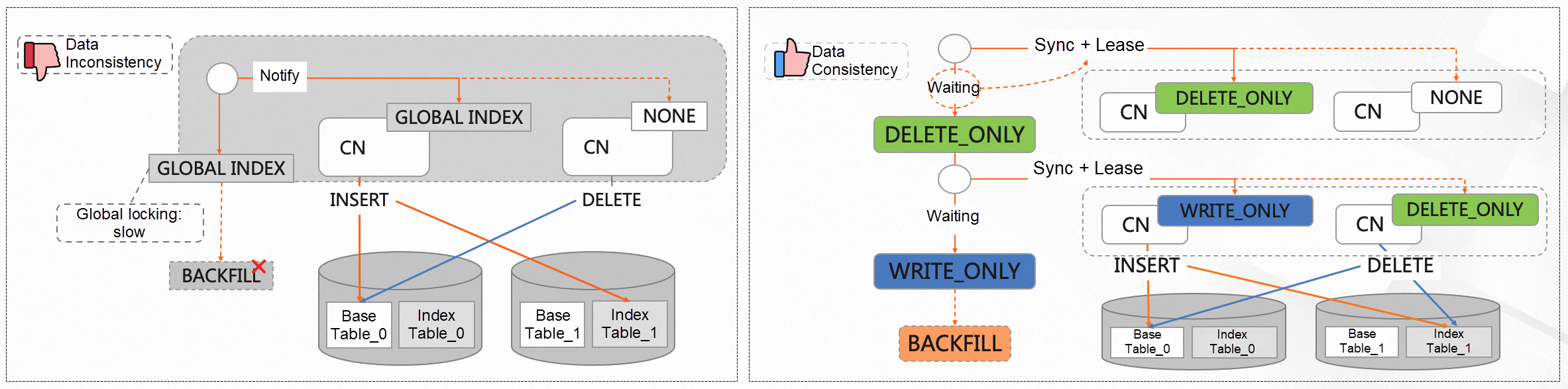

You also need to ensure data consistency when creating indexes. In distributed scenarios, as illustrated by the left figure, nodes may detect metadata at different times. As shown in the figure, a node knows that there is an index, so it inserts the index and writes to both the base table and the index table. The other node is unaware of the index, so it only deletes the content on the base table and neglects the index table, which results in an additional piece of data on the index table.

online_schema_change

To address this issue, PolarDB-X adopts a solution inspired by the scheme of Google F1, introducing several mutually compatible stages to ensure a smooth transition of metadata. During the Schema Change process, the times of metadata version switches have increased. So, we have optimized the evolution of metadata versions on a single compute node so that DDL operations will not affect read and write executions.

After introducing the above technologies, our entire DDL framework can create global indexes without blocking. MySQL has supported atomic DDL since version 8.0.

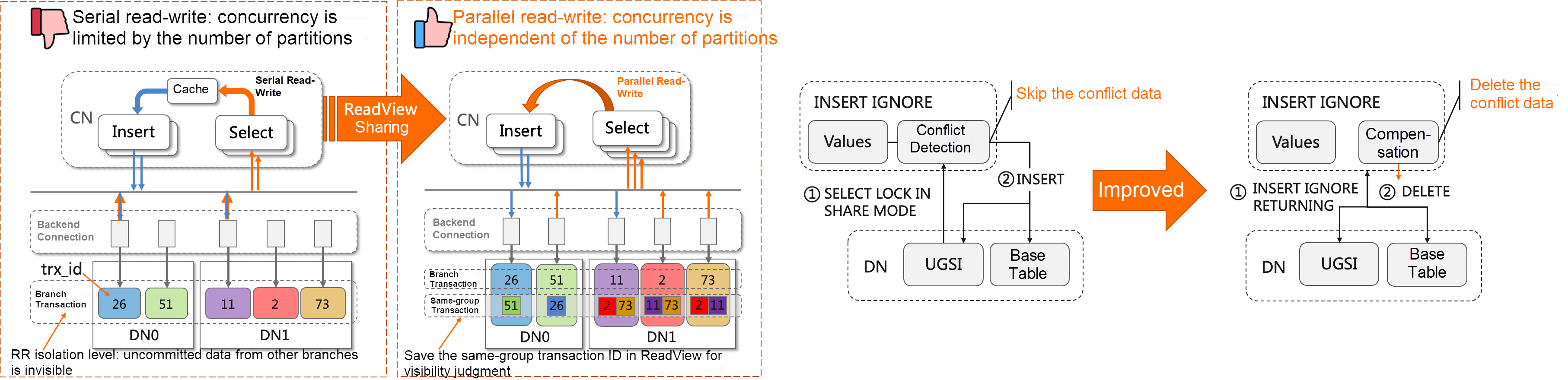

During data writing, write-write conflicts need to be handled due to concurrent writes. Similarly, during data reading, read-write conflicts need to be handled due to concurrent reads and writes. Modern databases use MVCC to resolve read-write conflicts. Before the query starts, a version number is obtained from the number issuer and used to determine whether the latest version of the data row is visible to the current transaction, so that the read data meets the specified isolation level. PolarDB-X supports TSO-based MVCC implementation, ensuring that the same snapshot is read from both the index table and the base table during the index lookup process.

The core objective of index selection is to enable users to utilize GSIs without manually specifying indexes. The solution is to automatically select an index based on CBO, which involves how the optimizer evaluates and selects execution plans that include index scans especially those on secondary indexes. Common names include IndexScan and IndexSeek, collectively referred to as IndexScan hereafter. In a standalone database, the TableScan is replaced by the IndexScan. If the index cannot cover the required columns, an index lookup is performed additionally. Optimization for IndexScans primarily involves column pruning and predicate pushdown, which employs an independent algorithm to calculate the cost of IndexScan and index lookup.

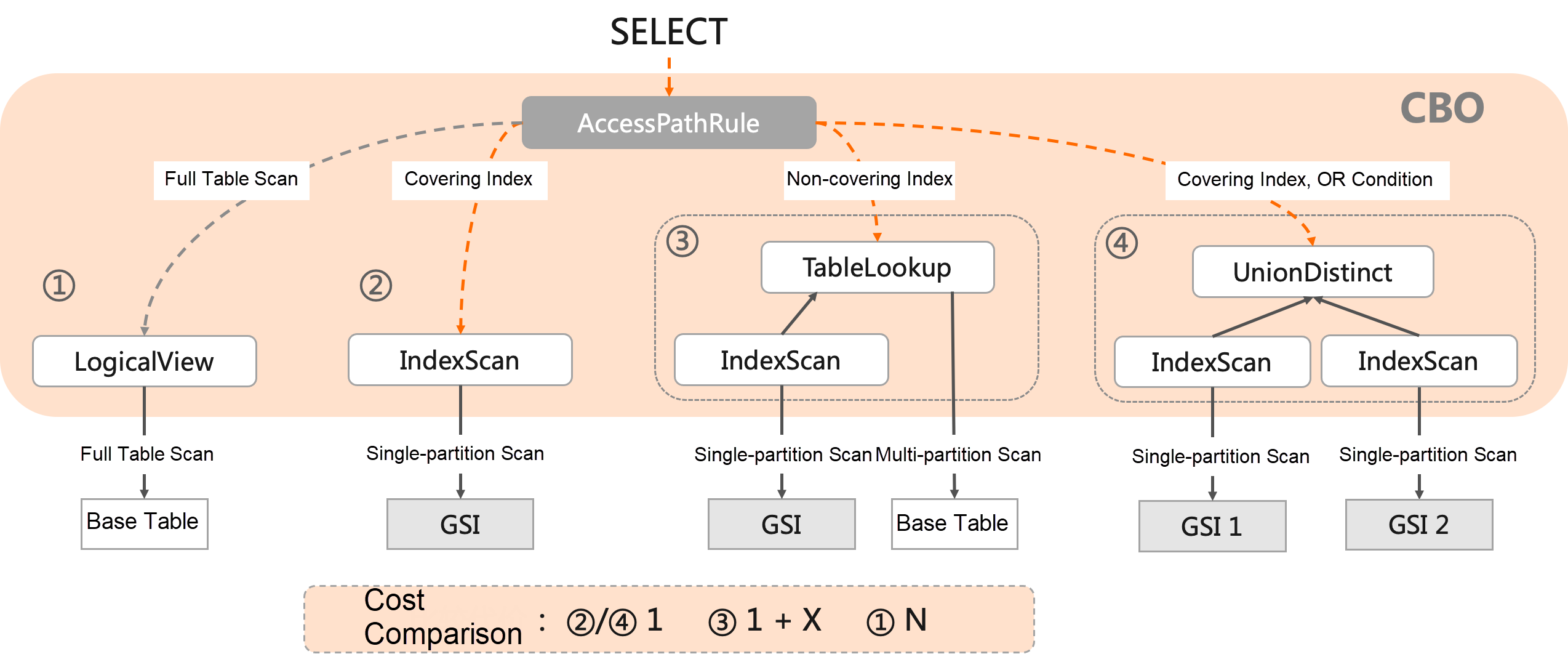

index_selection

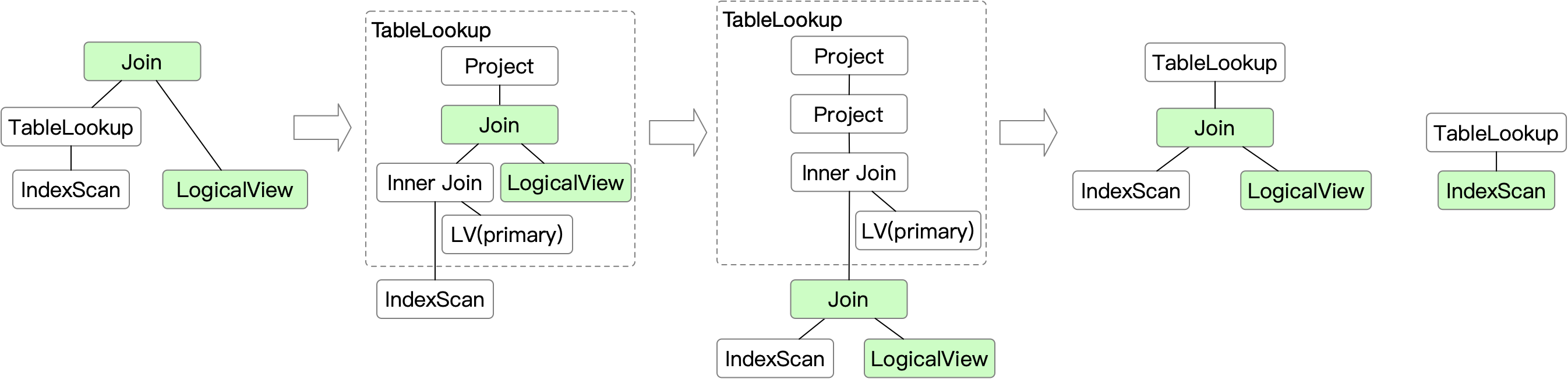

A key issue in cost evaluation is how to evaluate the cost of index lookups. A GSI itself is a logical table, and the lookup operation is equivalent to performing a Join between the index table and the base table on the primary key. Therefore, we have optimized the engineering to adapt the index lookup into a Project + Join operation, allowing us to integrate the entire cost evaluation related to the index into the cost evaluation for ordinary query plans.

index-pushdown

To incorporate plans containing IndexScan into the execution plan enumeration process, you need to adapt the index scan and lookup operators to the existing CBO framework. The specific implementation is shown in the preceding figure. AccessPathRule generates execution plans that use GSIs, and the most appropriate plan is selected by comparing costs in subsequent iterations.

Moreover, since lookups in a distributed database require network I/O, which costs more than looking up in a standalone database, PolarDB-X supports performing operations such as Join/Limit before the lookup, and pushing them together with index scans on DNs for execution. This reduces the data volume during lookups, thereby lowering network I/O.

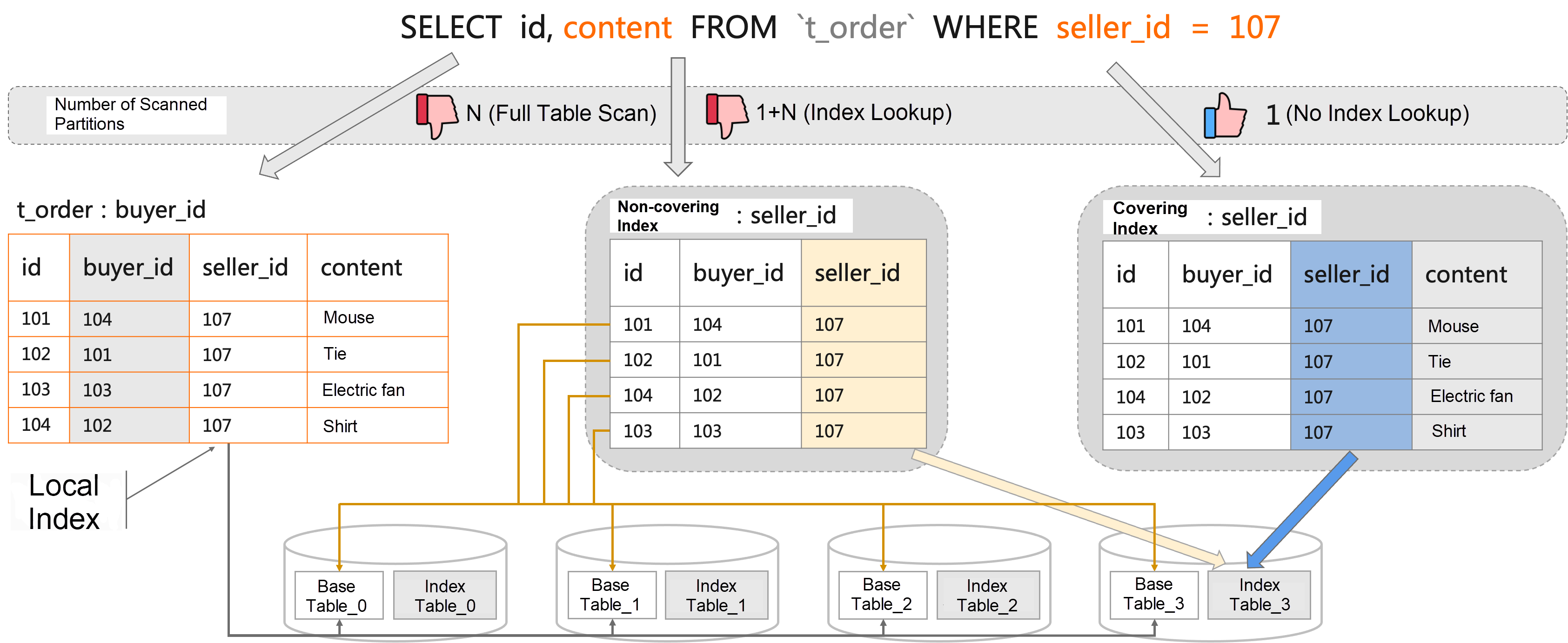

A covering index is a special index that allows users to save data from more columns in the index. The purpose is to meet the need for referenced columns required by more query statements and to minimize the need for a lookup. In standalone databases, the covering index is a common optimization method. For example, SQL Server has long supported using covering indexes to improve query performance.

covering_index

For distributed databases, lookups may also affect the horizontal scalability of the system. As shown in the preceding figure, the orders table is partitioned by buyer_id, so a full partition scan is required when you query data by seller_id. You may create a GSI on seller_id to optimize this, but since the index table typically only contains the partition key, the partition key of the base table, and the primary key, without the content column, a lookup is required. As the orders increase, the number of partitions involved in lookups also grows, eventually leading to a full partition scan. Thus, the goal of avoiding full partition scans by adding an index fails. To avoid this problem, PolarDB-X supports creating a covering index. You can use the COVERING syntax to add specified columns to a GSI, making it easier to achieve index coverage.

In addition to the column missing, the lack of historical versions may also lead to lookups. For example, MySQL only saves the transaction ID of the last write in the header of each page of the secondary index but does not save version information for secondary indexes. As a result, if historical versions need to be queried, a lookup is required. In PolarDB-X, undo-logs are recorded separately for GSIs during the writing process, so historical versions of the index can be read without additional lookups for historical version queries. Besides, PolarDB-X further supports executing Flashback Queries directly on the GSI.

Due to the additional overhead of using distributed transactions and logical multi-writes during data writes, write performance needs to be optimized to ensure system throughput. Specifically, distributed transactions use two-phase commit to ensure atomicity. Compared with standalone transactions, they add a prepare phase and the step of writing the commit-point. At the same time, TSO is used to obtain the commit timestamp, so the throughput of the TSO service may also become a bottleneck. Optimizations for distributed transactions include one-phase commit optimization, multi-partition optimization for a single machine, and TSO Grouping.

Logical multi-writes need to read data to the CN first for two reasons. First, PolarDB-X is compatible with the pessimistic transaction behavior of MySQL, where write operations use the current read. For UPDATE and DELETE statements that determine the update range based on predicates, the system first queries and locks the data to be modified to prevent transactions of different branches from reading inconsistent snapshots. Second, for INSERT statements, if there are unique constraints on the destination table, the system also needs to read the data first to perform unique constraint conflict detection.

Similar processes also exist in standalone databases. For example, when MySQL executes DML, the server layer first queries and locks the data to be modified from the innoDB, and then calls ha_innobase::write_row to write data. MySQL's unique constraint implementation also requires a unique constraint check before performing an INSERT. The difference is that the interaction between the MySQL server layer and the InnoDB layer occurs within a single machine, involving only memory and disk I/O, which has a lower cost. On the other hand, in a distributed database, the communication between CN and DN takes place through network machines, resulting in higher costs.

dml_optimize

When PolarDB-X executes DML, pushdown execution is preferred. For scenarios where the logical multi-write is required, engineering has been optimized for "querying and locking the data to be modified" and "unique constraint conflict detection".

Good compatibility with DDL is essential for transparent global indexes. Just imagine having to delete and rebuild the global index every time you modify the column type - it would be a real hassle. PolarDB-X is fully compatible with MySQL DDL statements, and GSI is automatically maintained by statements related to table, column, and partition changes. DDL execution algorithms are divided into Instant DDL and Online DDL. Instant DDL is mainly used to add columns, while Online DDL, based on AOSC, has detailed designs for different DDL statements. The following is a brief introduction to the typical implementations of ADD COLUMN and CHANGE COLUMN.

PolarDB-X supports the clustered global secondary index (Clustered GSI), which maintains the same structure as that of the base table and prevents all queries from index lookups. Therefore, when adding columns to the base table, you also need to add a column to the CSI. Generally, you can follow the AOSC process to ensure data consistency during the adding process. However, if the new column contains the ON UPDATE CURRENT_TIMESTAMP attribute, problems may occur. For example, in the following scenario, columns have been added to the physical table, but the CN is unaware of the new columns. Therefore, the DN independently fills the values in the base table and the index table, resulting in data inconsistency.

To solve this problem, we use a backfill to refresh the values of the newly added columns on the index after all CNs are aware of the metadata update, ensuring data consistency between the index and the base table.

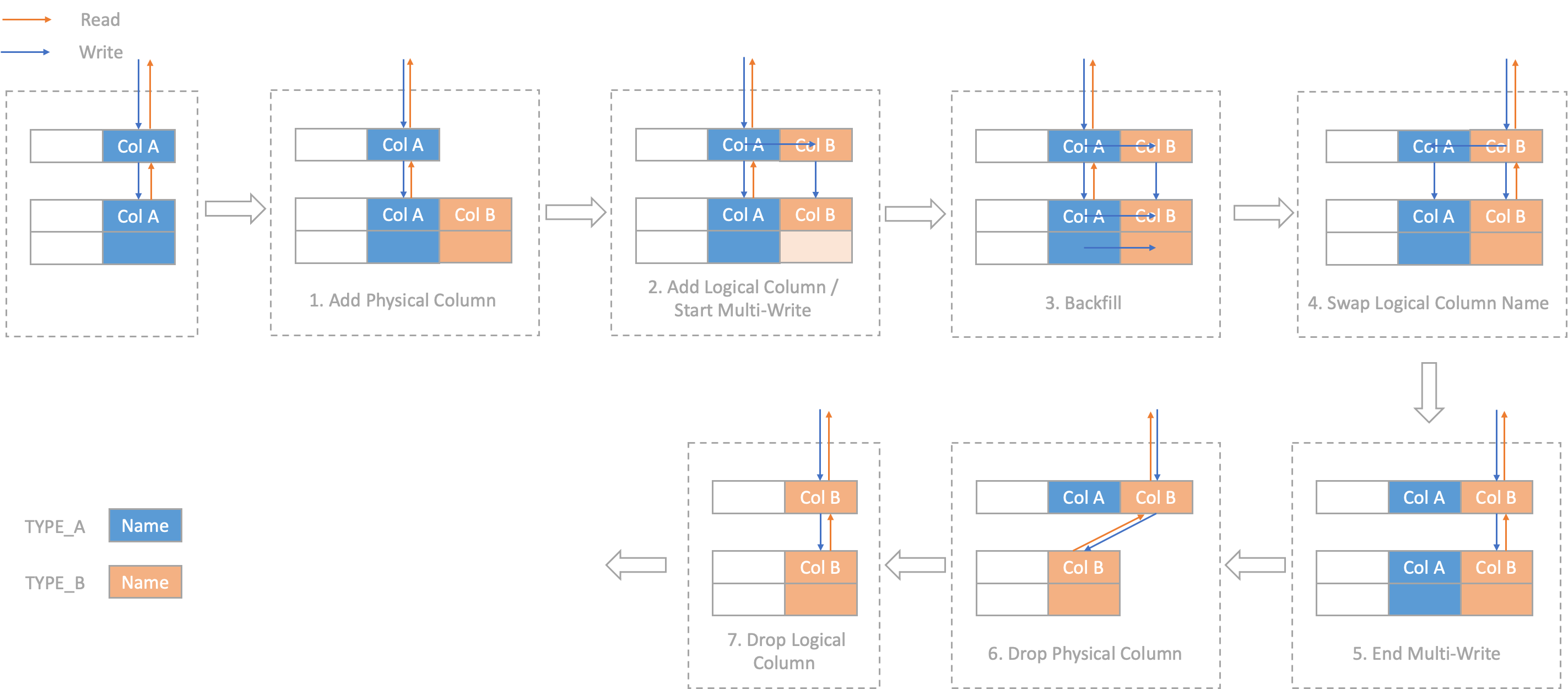

Changing the column type is one of the most complex operations in DDL and can significantly impact writes. For example, you still need to lock tables when changing the column type in MySQL 8.0. PolarDB-X supports Online Modify Column (OMC) through the add column - copy data - modify metadata mapping process combined with Instant Add Column, to enable non-locking column type changes with the GSI.

online_modify_column

The preceding figure shows the process of executing CHANGE COLUMN on a table without a GSI. It is divided into seven stages. First, add an invisible column COL_B. During writing, fill in both COL_A and COL_B with the same values. Then, backfill the existing data in the table from COL_A to COL_B. Finally, swap the metadata mappings of COL_A and COL_B, and delete COL_A to complete the column type change. The same process is also used in scenarios where a GSI exists, except that the GSI is treated the same in each step.

The underlying technology used for compatibility with DDL is similar to that used for creating GSIs (such as AOSC, data backfilling, logical multi-writes, and asynchronous DDL engine). However, in terms of its implementation, you need to consider the semantics of each DDL statement and the detailed behaviors of MySQL. For example, when backfilling data between old and new columns by using the UPDATE statement to change column types, the type conversion logic in MySQL ALTER TABLE and UPDATE commands differs. Therefore, we implement a specific logic for type conversion to mimic the behavior of ALTER TABLE within UPDATE.

In summary, it seems that the compatibility with DDL just supports certain syntax, but it actually involves a heavy workload.

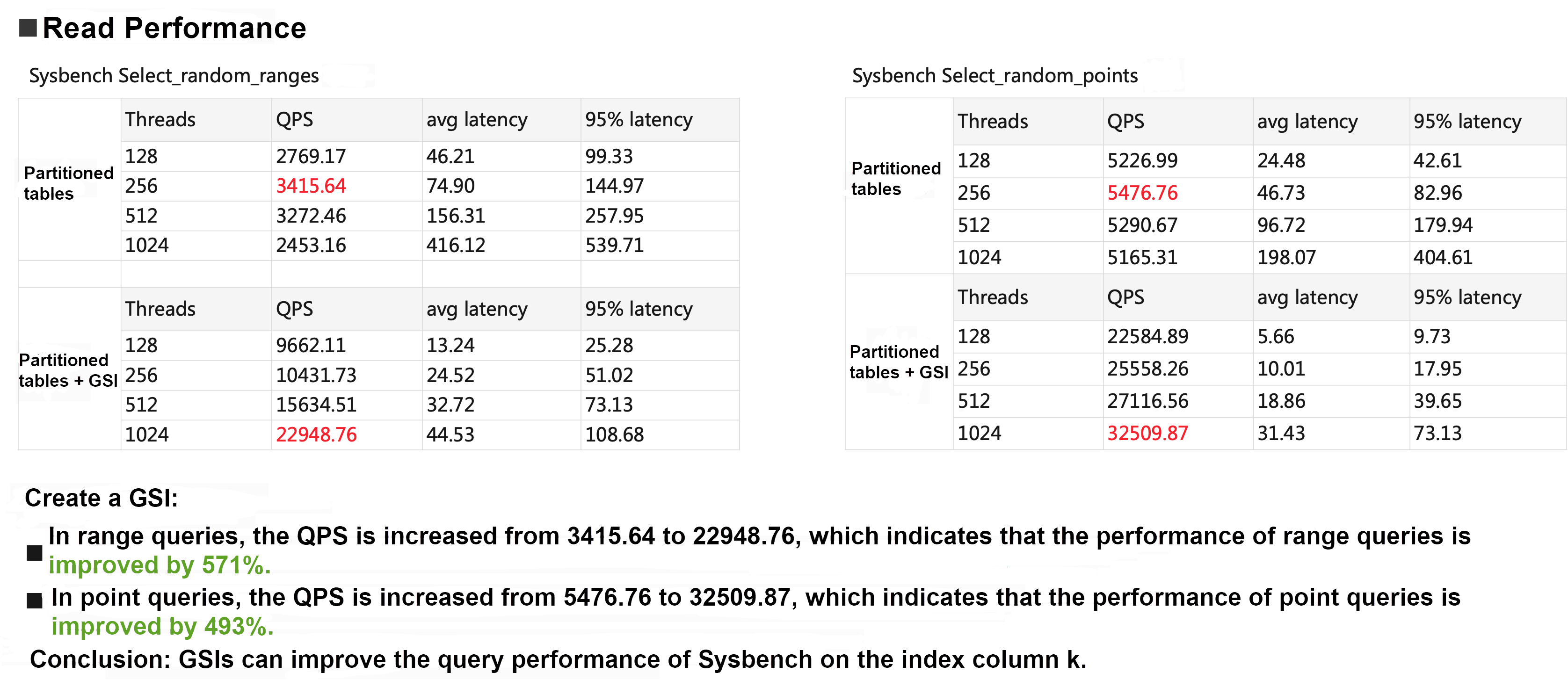

The impact of GSIs on the read and write performance is related to specific business scenarios. In essence, the write performance is reduced to improve the read performance. In the following examples, Sysbench is used to show the impact of GSIs on the read and write throughput.

sysbench_read

sysbench_write

Distributed databases based on the storage-compute separation and a shared-nothing architecture need to support global secondary indexes to eliminate full partition scans and ensure linear scalability. Standalone databases have long introduced the secondary index, which has been highly accepted by its users. A good global index experience should align with that of standalone databases. It should provide strong data consistency, and support creation via DDL statements and automatic index selection. At the same time, the index should not hinder the execution of other DDL statements.

PolarDB-X ensures strong consistency of index data through distributed transactions and logical multi-writes. It supports Online Schema Change, allowing index creation to run concurrently with writes. Covering indexes are also supported to address the full partition scan issue caused by index lookups. In addition, it carefully handles the compatibility with DDL statements involving tables with GSIs. This provides users with a transparent index use experience, lowering the use threshold of distributed databases.

Try out database products for free:

Optimizing Performance and Cost Efficiency: Advanced ApsaraDB for RDS Storage Architecture

ApsaraDB - June 19, 2024

ApsaraDB - August 13, 2024

ApsaraDB - November 7, 2024

ApsaraDB - January 23, 2024

ApsaraDB - March 26, 2025

ApsaraDB - November 12, 2024

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn More Lindorm

Lindorm

Lindorm is an elastic cloud-native database service that supports multiple data models. It is capable of processing various types of data and is compatible with multiple database engine, such as Apache HBase®, Apache Cassandra®, and OpenTSDB.

Learn MoreMore Posts by ApsaraDB