Continuing from the previous section, this article introduces the engineering practices jointly carried out by the Alibaba Cloud Tair KVCache team and the SGLang community to improve inference frameworks—enabling support for hybrid-architecture models.

With the trend toward long-context LLMs and agentic systems, Transformers face GPU memory and compute bottlenecks, while Mamba models, although efficient, are limited in precise semantic recall. Hybrid architectures combine the strengths of both, but they introduce system-level challenges: Transformer’s token-granularity caching fundamentally conflicts with Mamba’s request-granularity, in-place state updates, which breaks traditional optimizations such as prefix caching and speculative decoding. Inference frameworks therefore need an architectural redesign to handle heterogeneous state management and scheduling.

Building on the SGLang Hybrid Models work, this article analyzes in depth the design principles, implementation challenges, and system-level optimization paths of hybrid architectures, offering a practical technical solution for efficient and reliable inference with hybrid large models.

● Hybrid architecture: SGLang pioneered a dual memory-pool design that accommodates the very different memory behaviors of Transformer and Mamba layers.

● Technical solution: Using state snapshotting, it addresses Mamba’s “non-reversible” limitation, making cache reuse and speculative decoding possible.

● Optimization results: Benchmarks of Qwen3-Next on SGLang show lower time to first token (TTFT) with prefix caching and up to 324.57 tokens/s at batch size 1 with speculative decoding.

This series of technical articles systematically analyzes the evolution path of KVCache technology tailored for agent-style reasoning:

As an extension of Alibaba Cloud Database Tair's capabilities, Tair KVCache represents three paradigm shifts in caching:

🔹 From Redis's "caching data → reducing I/O"

🔹 To GPU KVCache's "caching computational intermediate states → reducing redundant computation"

🔹 To Tair KVCache's "scalable, intelligent attention state management → reshaping the cost model of large model inference"

This evolution marks a transformation of caching from a supporting component into a core capability of the AI infrastructure layer, making "state" storable, shareable, and schedulable, and establishing a scalable foundation for inference in the era of intelligent agents.

As large language model (LLM) inference services enter a new phase defined by long-context processing, multimodal interaction, and agentic behavior, the limitations of traditional architectures are becoming increasingly evident. Transformer models excel at semantic modeling thanks to their attention mechanism, but their computational cost grows quadratically with sequence length, and KV cache memory usage expands linearly. As a result, they encounter GPU memory constraints and compute bottlenecks in scenarios such as ultra-long texts and persistent conversations. Meanwhile, state space models represented by Mamba open up a new path with linear computational complexity and constant memory consumption, but their limited state capacity and irreversible context-compression mechanism make it difficult for them to support the fine-grained semantic recall required by complex reasoning tasks.

This tension has fueled the rise of hybrid architectures—interleaving Transformer full-attention layers with Mamba-style state space model layers in an attempt to strike a balance between efficiency and performance. However, deploying hybrid models is far from a simple matter of stacking modules; it conceals deeper, system-level challenges. Building on the SGLang Hybrid Models work, this article provides an in-depth analysis of the design principles, implementation challenges, and optimization paths, offering practical reference for building efficient LLM inference systems based on hybrid architectures.

State Space Models (SSMs) use recursive context-compression techniques to map a dynamically changing token sequence into a fixed-dimensional latent state. This design delivers two major breakthroughs in the computational paradigm:

1) Improved memory efficiency: during inference, the state size remains constant (O(1)), eliminating the memory bottleneck of traditional attention, whose KV cache grows linearly with sequence length (O(N)).

2) Reduced computational complexity: during autoregressive generation, total computation grows only linearly with sequence length (O(N)), compared with the quadratic complexity of attention (O(N²)), an asymptotic improvement from quadratic to linear.

| Property | Attention layers | SSM layers |
|---|---|---|
| Computational complexity | O(N²) | O(N) |
| Memory consumption | O(N) | O(1) |
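To make the memory row of the table concrete, the following toy Python sketch counts how many values each layer type keeps after N tokens. The classes, the decay factor, and the dimensions are illustrative assumptions, not SGLang code or real Qwen3-Next shapes; the only point is that the attention cache grows with N while the SSM state does not.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AttentionLayerCache:
    """Toy full-attention cache: one K and one V vector per processed token."""
    head_dim: int
    keys: List[List[float]] = field(default_factory=list)
    values: List[List[float]] = field(default_factory=list)

    def step(self, x: List[float]) -> None:
        # Every token appends a new K/V pair, so memory grows as O(N).
        self.keys.append(list(x))
        self.values.append(list(x))

    def num_elements(self) -> int:
        return 2 * len(self.keys) * self.head_dim


@dataclass
class SSMLayerState:
    """Toy SSM layer: a fixed-size state overwritten in place at every step."""
    state_dim: int
    decay: float = 0.9
    state: List[float] = field(init=False)

    def __post_init__(self) -> None:
        self.state = [0.0] * self.state_dim

    def step(self, x: List[float]) -> None:
        # S_t = decay * S_{t-1} + x_t, element-wise: memory stays O(1) in N.
        for i in range(self.state_dim):
            self.state[i] = self.decay * self.state[i] + x[i]

    def num_elements(self) -> int:
        return len(self.state)


def footprint(seq_len: int, dim: int = 8) -> Tuple[int, int]:
    """Return (attention elements, SSM elements) cached after seq_len tokens."""
    attn, ssm = AttentionLayerCache(dim), SSMLayerState(dim)
    for t in range(seq_len):
        token = [float(t)] * dim
        attn.step(token)
        ssm.step(token)
    return attn.num_elements(), ssm.num_elements()


print(footprint(10), footprint(1000))  # (160, 8) (16000, 8)
```

Growing the sequence 100x multiplies the attention footprint by 100 but leaves the SSM state unchanged, which is the O(N) vs. O(1) contrast in the table.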

However, this design comes with inherent constraints: limited state capacity and an irreversible compression mechanism. The fixed-dimensional state of an SSM is like an “information funnel”—it struggles to preserve fine-grained, sensitive features in long-range context modeling, making it significantly weaker than attention-based architectures in complex pattern matching and precise semantic recall. This drawback is especially pronounced in scenarios such as multi-hop reasoning and long-document analysis, and it has become a key obstacle to deploying purely Mamba-based models.

To overcome this limitation, hybrid architectures emerged—by designing models that interleave attention and SSM layers, deeply integrating SSMs’ linear efficiency with attention’s semantic modeling capability. Advanced models such as Qwen3-Next and Kimi-Linear adopt architectures with mixed ratios of attention and SSM layers, achieving dual gains on long-context tasks: full-attention layers preserve the ability to capture critical semantic features and efficiently maintain long-context reasoning, while SSM layers replace part of the attention computation, significantly reducing memory bandwidth pressure and inference latency, thereby improving throughput.

Because attention layers and SSM layers differ fundamentally in their computational paradigms, engineering hybrid-architecture models for production requires careful treatment of state management across heterogeneous layers, as well as system-level optimizations.

First, systems must address the challenge of coordinated resource scheduling across these two paradigms: attention layers depend on prior KV cache for computation, while SSM layers rely on a fixed-dimensional SSM state for inference. This divergence leads to different memory-management requirements: attention layers require token-granularity KV-cache management, whereas SSM layers can maintain SSM state at request granularity. This mismatch makes it difficult for inference systems to manage both KV cache and SSM state for hybrid models.

The inconsistency between attention-layer and SSM-layer state management also increases the difficulty of adapting inference optimizations. SSM layers update state in an in-place overwrite manner; this compression behavior creates an irreversible update trajectory, which conflicts with optimization strategies that require state rollback, such as prefix caching and branching speculative decoding in agentic scenarios. When the system attempts to reuse shared context across requests (e.g., system prompt templates shared by many users, or knowledge-base documents), traditional KV-cache-block–based space-sharing mechanisms fail because they cannot accommodate the in-place update characteristics of SSM state. The system must design a unified caching protocol spanning both attention KV cache and SSM state-space mechanisms. Such cross-layer state synchronization must handle not only memory-management complexity but also potential race conditions.

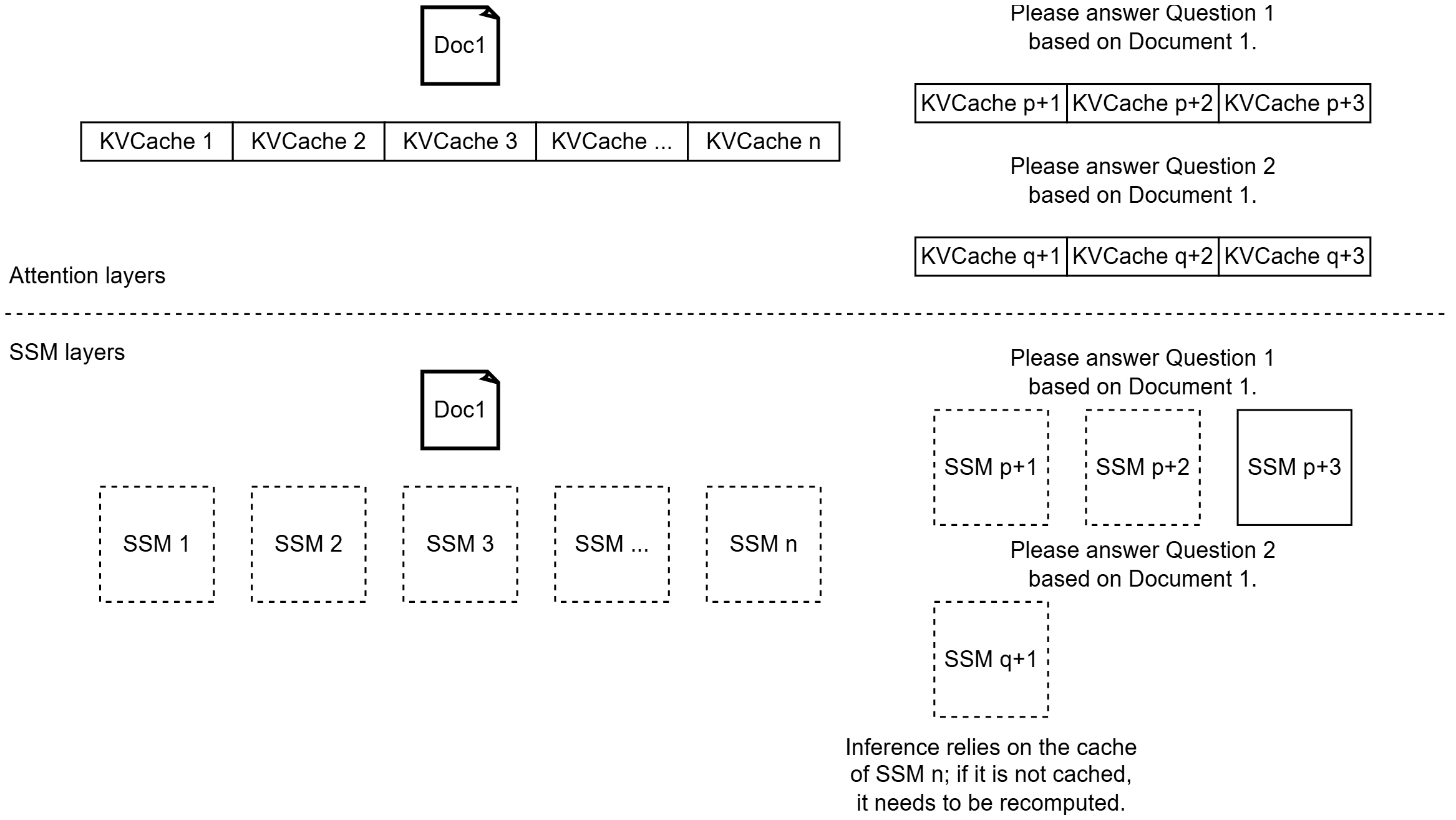

Take prefix caching as an example. Suppose we need to answer two questions about Document 1. With attention, KV cache is maintained at token granularity, so when answering Question 1 the KV cache for Document 1 is naturally computed and stored token by token; when answering Question 2, the system can directly reuse Document 1’s KV cache. With a state space model, however, the SSM state is overwritten in place. If the system does not explicitly cache the SSM state at a particular time step during inference, then after finishing Question 1 it retains only the post-answer state (e.g., $\text{SSM}_{p+3}$), while the state at the end of Document 1 (e.g., $\text{SSM}_n$) is lost. Answering Question 2 must then recompute from scratch, and prefix caching becomes ineffective.
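The failure mode above can be reproduced with a toy scalar recurrence. This sketch assumes a made-up update rule and a hypothetical `SnapshotCache` class (neither is SGLang's API); the state after Document 1 is reusable only because it was explicitly snapshotted before answering Question 1 moved the state past it.

```python
from typing import Dict, List, Tuple


def ssm_step(state: float, token: int) -> float:
    # Toy recurrence S_t = f(S_{t-1}, x_t); the caller keeps only the new value.
    return 0.5 * state + token


def run(tokens: List[int], state: float = 0.0) -> float:
    for tok in tokens:
        state = ssm_step(state, tok)
    return state


class SnapshotCache:
    """Hypothetical cache of SSM snapshots keyed by the exact prefix they summarize."""

    def __init__(self) -> None:
        self.snapshots: Dict[Tuple[int, ...], float] = {}

    def save(self, prefix: List[int], state: float) -> None:
        # A float is immutable; a real tensor state would need an explicit copy here.
        self.snapshots[tuple(prefix)] = state

    def lookup(self, tokens: List[int]) -> Tuple[int, float]:
        """Return (matched_len, state) for the longest snapshotted prefix of tokens."""
        for n in range(len(tokens), 0, -1):
            hit = self.snapshots.get(tuple(tokens[:n]))
            if hit is not None:
                return n, hit
        return 0, 0.0  # no hit: start from the initial state


doc, q1, q2 = [3, 1, 4, 1, 5], [9, 2], [6, 5]
cache = SnapshotCache()
ssm_n = run(doc)            # state at the end of Document 1
cache.save(doc, ssm_n)      # explicit snapshot of SSM_n
after_q1 = run(q1, ssm_n)   # answering Question 1 moves the state past SSM_n
matched, resumed = cache.lookup(doc + q2)
print(matched, run(q2, resumed) == run(doc + q2))  # 5 True
```

Resuming Question 2 from the snapshot gives exactly the state a full recomputation would, while only the five document tokens are skipped.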

At the distributed-deployment level, today’s mainstream prefill/decode (PD) disaggregation architectures and multi-tier KV-cache storage hierarchies have been heavily optimized around attention-based computation. KV cache is typically organized at token or page granularity and can be efficiently transferred and shared across SGLang instances or between SGLang and the underlying storage engine. This helps meet stricter user-facing SLAs and enables “store-to-save-compute” optimizations such as context reuse. A key challenge in productionizing hybrid models is extending the caching and communication mechanisms of existing distributed inference frameworks so that they keep efficient support for attention KV cache while also accommodating SSM-layer requirements: SSM state caching, cross-node transfer, and persistence.

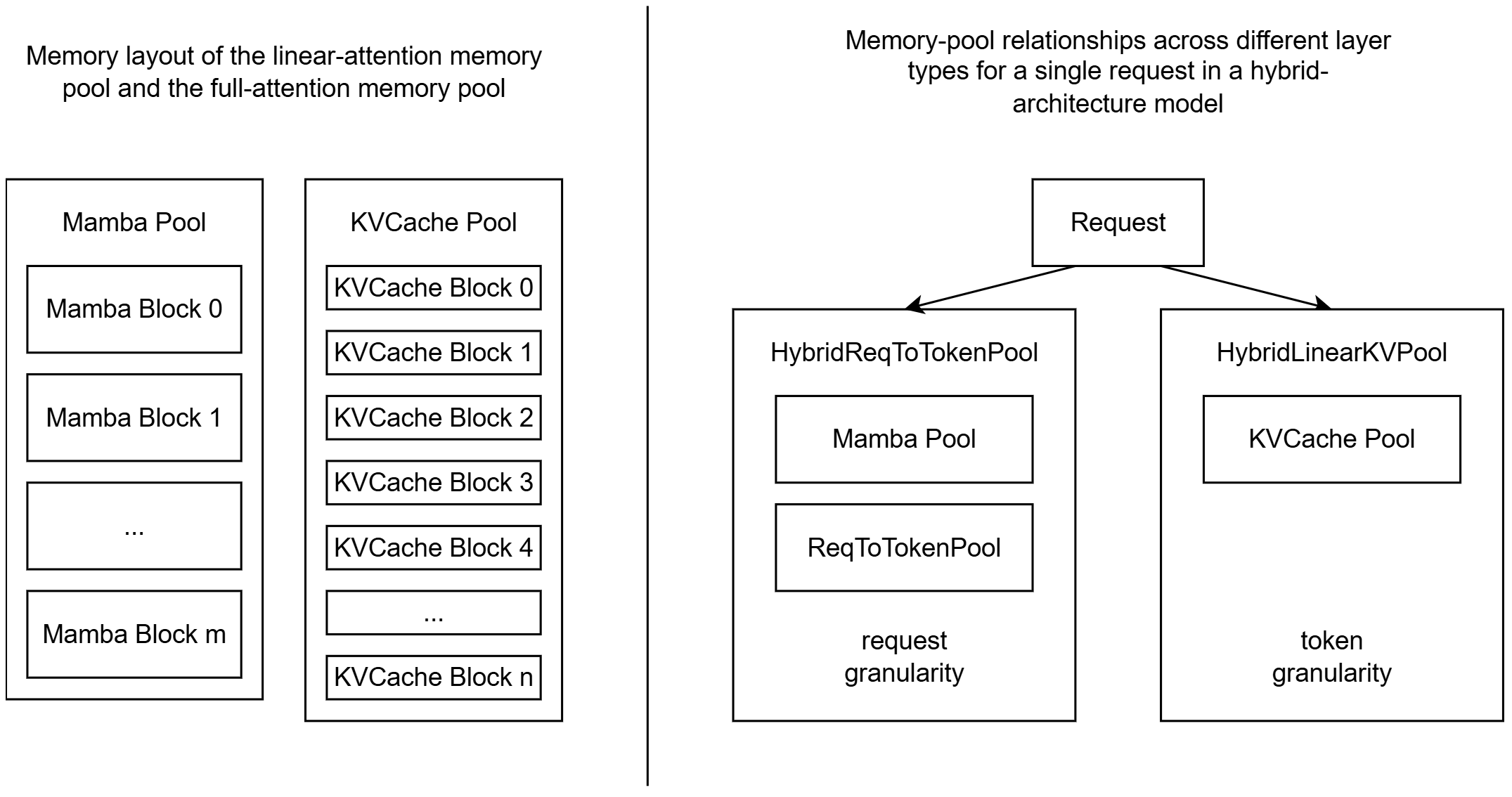

To address the unique memory-management challenges introduced by hybrid-architecture models, SGLang proposes a multi-pool memory architecture. The core idea is to carefully identify the distinct memory-behavior characteristics exhibited by different attention-related components, and then apply targeted, fine-grained memory-management strategies accordingly.

Specifically, in the SGLang framework, the KV cache generated by traditional attention layers exhibits “fine-grained growth with short-cycle fluctuations”: each newly generated token produces only a few KB of cache data, which accumulates and is released dynamically over the course of inference. In contrast, the SSM state required by the state space model mechanism introduced in hybrid architectures shows “large contiguous blocks with long holding periods”: the SSM state for a single request typically consumes several MB of storage and must be preserved intact until the request fully completes. If these two data structures with vastly different memory requirements are placed in the same memory pool, the alternating allocation units (KB-scale vs. MB-scale) would not only cause severe memory fragmentation, but also significantly increase engineering complexity and runtime overhead.

To that end, SGLang adopts a physically isolated dual-pool design, dividing total memory into two independent fixed-size regions: a Mamba state pool and an attention KV-cache pool. Their total capacities are statically configured and preallocated at service startup via the --mamba-full-memory-ratio parameter, effectively avoiding OOM risks that may be caused by runtime dynamic allocation.

The Mamba state pool is managed on a per-request basis. With the HybridReqToTokenPool data structure, the system allocates a fixed-size (typically MB-scale) contiguous memory page for each request during initialization, ties its lifetime to the request, and immediately reclaims it when the request finishes—ensuring efficient utilization of large memory blocks. The KV-cache pool, meanwhile, continues the fine-grained management strategy. Through HybridLinearKVPool, it maps attention layers to physical memory and is dedicated to supporting full-attention computation. This separated design not only avoids allocating useless KV cache for SSM layers, but also enables orthogonal management of the two memory-demand patterns, significantly improving overall memory utilization.
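As a rough illustration of the two allocation granularities, the sketch below models a per-request Mamba pool and a per-token KV pool carved from one fixed budget at startup. The class names, the `split_budget` helper, and its `mamba_fraction` argument are hypothetical stand-ins for `HybridReqToTokenPool`, `HybridLinearKVPool`, and `--mamba-full-memory-ratio`; the real flag's exact semantics may differ.

```python
from typing import Dict, List, Tuple


class MambaStatePool:
    """Per-request pool: one fixed-size slot per live request (hypothetical)."""

    def __init__(self, num_slots: int) -> None:
        self.free: List[int] = list(range(num_slots))
        self.owner: Dict[str, int] = {}

    def alloc(self, req_id: str) -> int:
        if not self.free:
            raise MemoryError("Mamba state pool exhausted")
        slot = self.free.pop()
        self.owner[req_id] = slot
        return slot

    def release(self, req_id: str) -> None:
        # The whole slot is reclaimed as soon as the request finishes.
        self.free.append(self.owner.pop(req_id))


class TokenKVPool:
    """Per-token pool: KV slots are taken and returned as sequences grow and end."""

    def __init__(self, num_slots: int) -> None:
        self.free: List[int] = list(range(num_slots))
        self.owned: Dict[str, List[int]] = {}

    def alloc_tokens(self, req_id: str, n: int) -> List[int]:
        if n > len(self.free):
            raise MemoryError("KV-cache pool exhausted")
        slots = [self.free.pop() for _ in range(n)]
        self.owned.setdefault(req_id, []).extend(slots)
        return slots

    def release(self, req_id: str) -> None:
        self.free.extend(self.owned.pop(req_id, []))


def split_budget(total_bytes: int, mamba_fraction: float, state_bytes: int,
                 kv_bytes_per_token: int) -> Tuple[MambaStatePool, TokenKVPool]:
    """Statically partition a fixed budget once at startup."""
    mamba_bytes = int(total_bytes * mamba_fraction)
    return (MambaStatePool(mamba_bytes // state_bytes),
            TokenKVPool((total_bytes - mamba_bytes) // kv_bytes_per_token))


# 64 MiB budget, a quarter for 4 MiB Mamba states, the rest for 4 KiB token entries.
mamba, kv = split_budget(64 * 2**20, 0.25, 4 * 2**20, 4 * 2**10)
print(len(mamba.free), len(kv.free))  # 4 12288
```

A request occupies exactly one Mamba slot however long it runs, while its KV footprint scales with its token count; finishing the request returns both.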

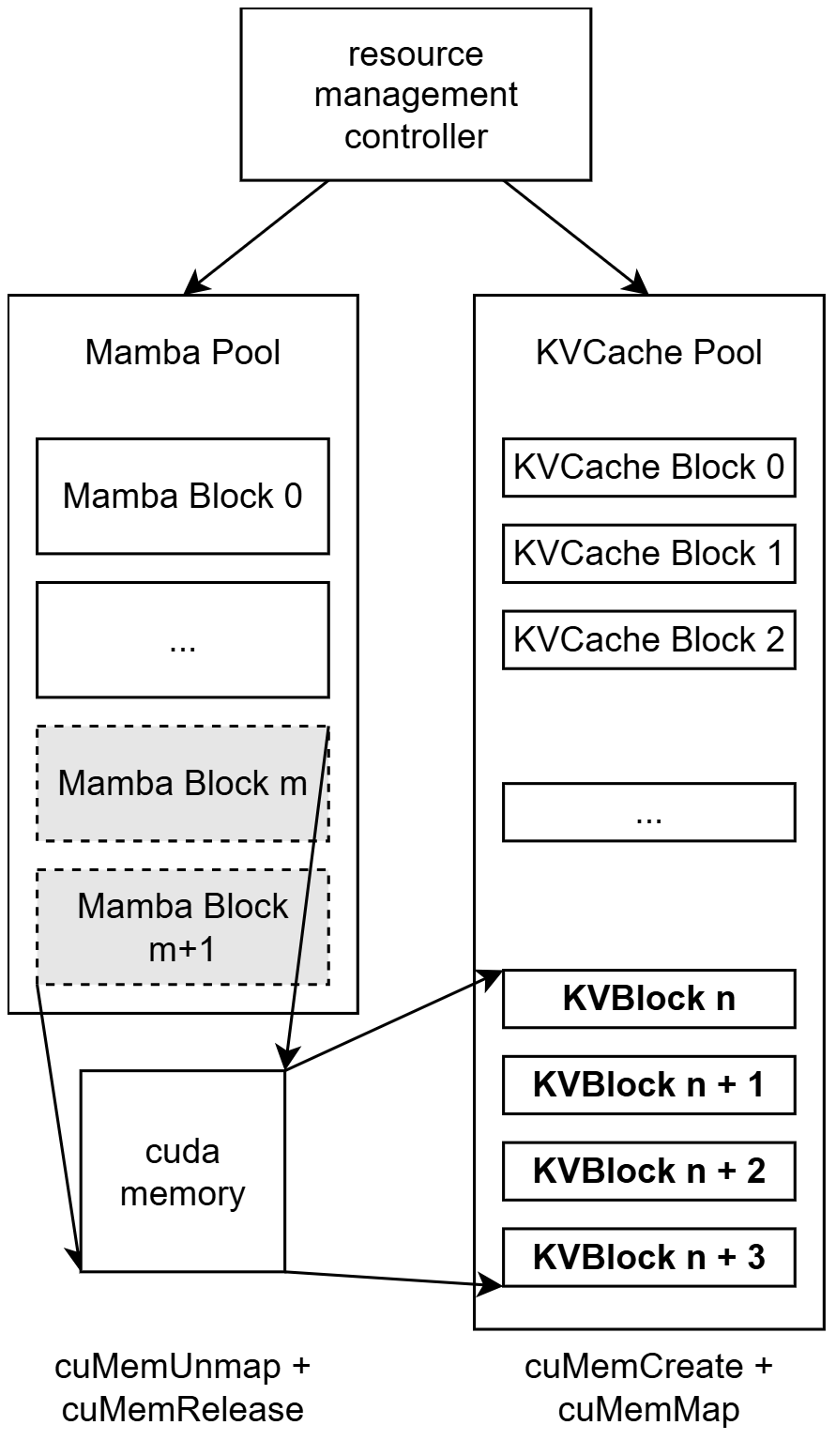

However, a fixed-ratio partitioning of pools is hard to adapt to fluctuating real-world workloads. For example, when the workload shifts from high-concurrency short-dialog tasks to low-concurrency but extremely long-context generation, the Mamba pool often becomes underutilized due to fewer requests, while the KV-cache pool can be quickly exhausted by surging long-sequence caching demand—thereby limiting batch size and creating performance bottlenecks.

To address this, SGLang introduces an elastic memory-pool mechanism on top of the multi-pool isolation architecture. While preserving the semantic isolation between Mamba state and KV cache, it enables runtime dynamic reallocation of capacity across pools. This mechanism first leverages CUDA virtual memory management at the storage-management layer: at startup, the system pre-reserves an oversized virtual address space and creates elastically resizable tensor data structures for each pool. These tensors do not immediately occupy physical GPU memory; instead, they serve as virtual placeholders. When demand for a certain type of cache increases, the control module dynamically maps physical GPU memory pages to the corresponding virtual address ranges, achieving “activate on demand” allocation. Conversely, when a pool’s utilization drops, the physical pages backing its free blocks are unmapped and released to reclaim resources. In long-text generation, for instance, when the workload shifts from short requests to long-sequence tasks, the inference batch size typically shrinks, reducing the total SSM state required by SSM layers. As Mamba-pool utilization falls, the system can automatically transfer its idle physical pages to the KV-cache pool, supporting continued expansion to longer contexts and effectively mitigating the imbalance caused by static allocation.

At the control-decision layer, the system uses a centralized scheduling module to perform intelligent and safe cross-pool resource reallocation. Each pool registers its metadata with this module during initialization. At runtime, if a pool requests expansion due to insufficient capacity, the control module evaluates the current utilization of all pools in real time and selects the most idle pool to trigger a shrink operation—i.e., releasing some of its physical GPU pages. After confirming the release succeeds, it authorizes the requester to complete the expansion. The entire process is strictly bounded by a fixed total GPU memory budget and requires neither service restarts nor global memory reallocation, avoiding OOM risks while ensuring the atomicity and safety of allocation operations.
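The expand/shrink handshake can be sketched as follows. This is a simplified model under stated assumptions: pages are only counted, not mapped (real code would go through CUDA virtual memory management), a single most-idle donor is tried, and `ElasticPool` and `MemoryController` are hypothetical names. The donor is shrunk only by pages that are currently free, and the request is refused otherwise, so the fixed budget is never exceeded and no live data is evicted.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ElasticPool:
    """A pool whose physical backing can grow or shrink at runtime (in pages)."""
    name: str
    mapped: int    # physical pages currently mapped behind the pool's virtual range
    used: int = 0  # pages currently holding live cache data

    @property
    def utilization(self) -> float:
        return self.used / self.mapped if self.mapped else 1.0

    def shrink(self, pages: int) -> int:
        # Only free pages may be unmapped; returns how many were actually released.
        released = min(pages, self.mapped - self.used)
        self.mapped -= released
        return released


class MemoryController:
    """Hypothetical central controller keeping all pools within one page budget."""

    def __init__(self, budget_pages: int) -> None:
        self.budget = budget_pages
        self.pools: Dict[str, ElasticPool] = {}

    def total_mapped(self) -> int:
        return sum(p.mapped for p in self.pools.values())

    def register(self, pool: ElasticPool) -> None:
        if self.total_mapped() + pool.mapped > self.budget:
            raise ValueError("initial mappings exceed the budget")
        self.pools[pool.name] = pool

    def request_expand(self, name: str, pages: int) -> bool:
        requester = self.pools[name]
        missing = pages - (self.budget - self.total_mapped())
        if missing > 0:
            donors = [p for p in self.pools.values() if p is not requester]
            if not donors:
                return False
            donor = min(donors, key=lambda p: p.utilization)  # most idle pool
            if donor.mapped - donor.used < missing:
                return False  # refuse instead of evicting live data; nothing changed
            donor.shrink(missing)  # release completes before the requester grows
        requester.mapped += pages
        return True


ctl = MemoryController(budget_pages=100)
mamba = ElasticPool("mamba", mapped=40, used=10)  # few long requests: mostly idle
kv = ElasticPool("kv", mapped=60, used=58)        # long contexts: nearly full
ctl.register(mamba)
ctl.register(kv)
print(ctl.request_expand("kv", 20), mamba.mapped, kv.mapped)  # True 20 80
```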

Through the combined design of “multi-pool isolation + elastic scheduling,” SGLang retains the benefits of fine-grained management tailored to different memory access patterns (large contiguous blocks vs. fine-grained dynamic allocation) while also gaining adaptability to dynamic workloads. This improves overall GPU memory utilization while maintaining system stability, providing solid support for efficient inference with larger batches or longer contexts.

In LLM inference optimization, prefix caching significantly improves throughput and efficiency by reusing the computation results of shared prefixes across different requests. However, when applied to hybrid architectures that incorporate state space models, it faces a set of challenges. Prefix caching for full-attention layers relies on token-granularity KV-cache management and can be truncated based on prefix matching. In contrast, SSM-state management in SSM layers has fundamentally different properties: the state is updated in place during inference and cannot be rolled back by simply truncating the sequence as with full-attention KV cache, making it hard to accurately reconstruct the state corresponding to an arbitrary historical prefix. Meanwhile, a single SSM state is typically MB-scale; compared with per-token KV cache, caching at token granularity would cause storage overhead to explode. More importantly, most SSM-state caches exhibit an “all-or-nothing” reuse property—an SSM-state cache can be reused only when the entire prefix used to compute it matches, and it does not support partial or incremental state reuse. These factors make it difficult to apply a traditional Radix-tree structure to such hybrid models.

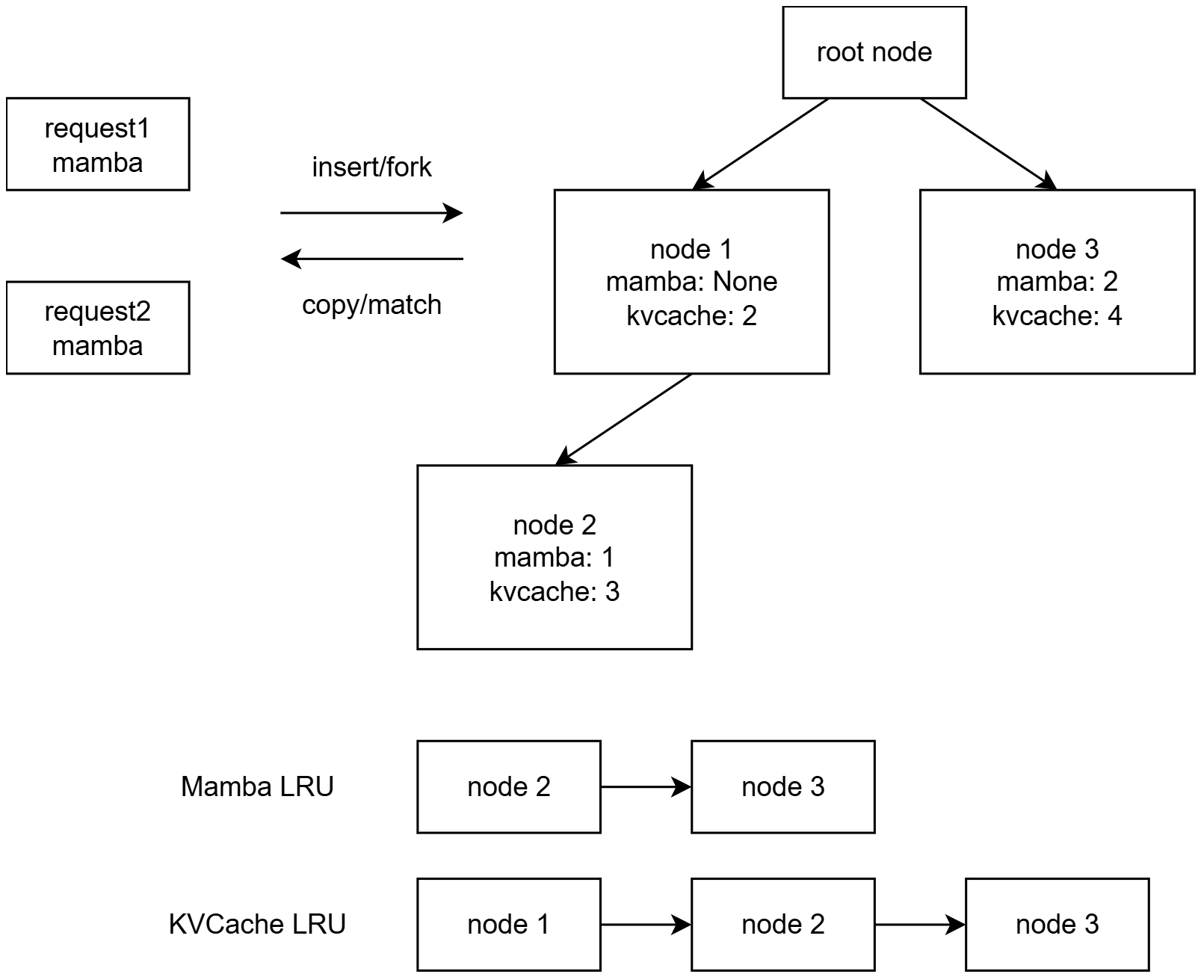

To address these challenges, SGLang introduces a new Radix tree, MambaRadixCache—a hybrid prefix-tree structure purpose-built for hybrid state-space and attention models. Without modifying existing Mamba inference kernels, this data structure enables coordinated and efficient management of both Mamba state and KV-cache entries.

During matching, the system searches the Radix tree for the node that shares the longest common prefix with the current input and has a valid cached SSM state. Since KV cache is immutable once written, the matched KV-cache blocks can be directly referenced and reused. SSM state, however, will be updated in place during subsequent inference, so the matched state must be fully copied as a snapshot for the new request. This prevents interference between concurrent requests caused by sharing the same state, ensuring isolation and inference correctness.

During insertion, after chunked prefill or token-by-token decoding, the system writes KV cache and SSM state into the Radix tree separately: for KV cache, it only needs to record the indices of the corresponding memory pages; for SSM state, it must allocate new memory pages to copy the state and associate the new page indices with the relevant tree nodes.
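The match and insert rules can be sketched with a simplified prefix tree that stores one token per node. `ToyMambaRadixCache` is a hypothetical illustration, not SGLang's `MambaRadixCache`: matching returns the longest prefix that ends at a node holding an SSM snapshot, KV indices are shared by reference, and the SSM state is deep-copied both on insert and on match.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    kv_index: int = -1                         # KV-cache slot of the token ending here
    mamba_state: Optional[List[float]] = None  # SSM snapshot for the prefix ending here
    children: Dict[int, "Node"] = field(default_factory=dict)


class ToyMambaRadixCache:
    """Token-per-node stand-in for a hybrid prefix tree (not SGLang's code)."""

    def __init__(self) -> None:
        self.root = Node()

    def match_prefix(self, tokens: List[int]) -> Tuple[List[int], Optional[List[float]]]:
        node, path_kv = self.root, []
        best_len, best_state = 0, None
        for tok in tokens:
            node = node.children.get(tok)
            if node is None:
                break
            path_kv.append(node.kv_index)
            if node.mamba_state is not None:
                best_len, best_state = len(path_kv), node.mamba_state
        if best_state is None:
            return [], None
        # KV entries are immutable once written: share indices by reference.
        # The SSM state will be updated in place: hand out a private copy.
        return path_kv[:best_len], copy.deepcopy(best_state)

    def insert(self, tokens: List[int], kv_indices: List[int], state: List[float]) -> None:
        if not tokens or len(tokens) != len(kv_indices):
            raise ValueError("need one KV index per token")
        node = self.root
        for tok, kv in zip(tokens, kv_indices):
            if tok not in node.children:
                node.children[tok] = Node(kv_index=kv)  # record the KV index only
            node = node.children[tok]
        # Copy the state into cache-owned storage; the request keeps mutating its own.
        node.mamba_state = copy.deepcopy(state)


cache = ToyMambaRadixCache()
live_state = [0.1, 0.2]
cache.insert([1, 2, 3], [10, 11, 12], live_state)
live_state[0] = 99.0  # the request keeps updating its own state in place
kv, state = cache.match_prefix([1, 2, 3, 4])
print(kv, state)  # [10, 11, 12] [0.1, 0.2]
```

Because the SSM layers cannot resume from a prefix without a snapshot, a query matching only `[1, 2]` gets nothing back until a snapshot is stored at that depth, which is the "all-or-nothing" property described earlier.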

During eviction, MambaRadixCache uses a dual-LRU-queue mechanism to track access timestamps for KV cache and SSM state independently. KV-cache eviction strictly follows a leaf-to-root, level-by-level reclamation rule to preserve the integrity of the Radix-tree topology, whereas SSM state uses a more flexible elastic eviction strategy that allows freeing memory from nodes at any level.
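The dual-LRU eviction rule can be sketched as below, assuming a hypothetical `TreeNode` with `has_kv`/`has_state` flags. KV eviction scans its queue from least to most recently used and takes only leaves, while SSM snapshots can be dropped at any depth. Evicting a node's KV also drops its snapshot, since a snapshot is unusable without the KV path leading to it; that coupling is an assumption of this sketch.

```python
from collections import OrderedDict
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(eq=False)  # identity-based hashing so nodes can key the LRU queues
class TreeNode:
    key: int
    parent: Optional["TreeNode"] = None
    children: Dict[int, "TreeNode"] = field(default_factory=dict)
    has_kv: bool = True
    has_state: bool = False


def add_child(parent: TreeNode, key: int, has_state: bool = False) -> TreeNode:
    child = TreeNode(key, parent=parent, has_state=has_state)
    parent.children[key] = child
    return child


class DualLRU:
    """Hypothetical dual-LRU policy: KV from leaves only, snapshots from any level."""

    def __init__(self) -> None:
        self.kv_lru: "OrderedDict[TreeNode, None]" = OrderedDict()
        self.state_lru: "OrderedDict[TreeNode, None]" = OrderedDict()

    def touch(self, node: TreeNode) -> None:
        # An access moves the node to the most-recently-used end of each queue it is in.
        for queue, present in ((self.kv_lru, node.has_kv), (self.state_lru, node.has_state)):
            if present:
                queue[node] = None
                queue.move_to_end(node)

    def evict_state(self) -> Optional[TreeNode]:
        # Drop the least recently used snapshot at any depth; KV stays in the tree.
        if not self.state_lru:
            return None
        node, _ = self.state_lru.popitem(last=False)
        node.has_state = False
        return node

    def evict_kv(self) -> Optional[TreeNode]:
        # Take the least recently used *leaf* so the remaining tree is still a
        # valid prefix tree; its parent may become a leaf and be evicted later.
        victim = next((n for n in self.kv_lru if not n.children), None)
        if victim is None:
            return None
        del self.kv_lru[victim]
        self.state_lru.pop(victim, None)
        victim.has_kv = victim.has_state = False
        if victim.parent is not None:
            del victim.parent.children[victim.key]
        return victim


root = TreeNode(key=-1, has_kv=False)
a = add_child(root, 1)
b = add_child(a, 2, has_state=True)
c = add_child(a, 3, has_state=True)
lru = DualLRU()
for n in (a, b, c, b):  # b is touched last, so it is the most recently used
    lru.touch(n)
```

Here `a` is the least recently used node but cannot lose its KV while it still has children, so `evict_kv` removes `c` first, and `a` becomes evictable only after both children are gone.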

With this design, MambaRadixCache enables hybrid models that combine SSM and attention layers to seamlessly integrate efficient prefix caching without modifying any low-level kernels or Mamba inference operators. It preserves the simplicity and high performance of the original computation logic while significantly reducing redundant computation and memory usage, providing critical infrastructure support for large-scale efficient inference.

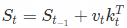

Speculative decoding is a core technique for accelerating LLM inference. In full-attention architectures, it significantly improves efficiency by generating candidate token sequences and verifying them in parallel. Applying it to state space models (SSMs), however, faces fundamental adaptation challenges. The root cause is the essential difference between SSM state updates and the KV cache in traditional attention: SSMs update in place. After each new token $x_t$ is processed, the internal state update can be abstracted as a recurrence such as $S_t = f(S_{t-1}, x_t)$, which irreversibly overwrites the previous state. While this design is efficient and elegant for sequence modeling, it prevents the system, during the verification stage of speculative decoding, from simply truncating or rolling back state as it would with KV cache. Once a candidate token is rejected, its modification to the SSM state has already taken permanent effect, and the historical state cannot be restored.


Furthermore, existing speculative decoding methods such as Eagle-Tree rely on attention-mask mechanisms that are incompatible with SSM state evolution. Eagle-Tree dynamically constructs attention masks to support multi-path parallel verification, whereas SSMs do not explicitly maintain attention relationships between tokens. Their state is globally accumulated without local mask control, so the method cannot be applied directly.

To address these challenges, SGLang proposes a new cache-isolation–based architecture: it allocates an independent Mamba cache slot for each candidate token, creating physically isolated state sandboxes. Using the three-step candidate sequence “the → air → streets” as an example, the system maintains progressive state evolution in three separate cache slots—slot 1 stores the base state after being updated by “the”, slot 2 injects “air” on top of that, and slot 3 inherits the previous state and adds “streets”. When the verifier confirms that the prefix “the streets are” is valid, the system does not need to recompute intermediate steps; it simply promotes the final state in the corresponding slot (e.g., slot 3) to become the main SSM state, achieving efficient, lossless state switching.
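The slot-per-candidate idea reduces to a few lines with a toy scalar recurrence. The function names and token ids are hypothetical, and the verifier is reduced to a given `num_accepted`; the point is that drafting never touches the committed state, and committing is a lookup rather than a recomputation.

```python
from typing import List


def ssm_step(state: float, token: int) -> float:
    # Toy recurrence S_t = f(S_{t-1}, x_t) standing in for a Mamba layer update.
    return 0.5 * state + token


def draft_into_slots(main_state: float, draft: List[int]) -> List[float]:
    """Slot i holds the state after draft[: i + 1]; main_state is never modified."""
    slots: List[float] = []
    state = main_state
    for tok in draft:
        state = ssm_step(state, tok)
        slots.append(state)  # each candidate gets its own isolated slot
    return slots


def commit(main_state: float, slots: List[float], num_accepted: int) -> float:
    """Promote the slot of the last accepted token; rejected slots are just dropped."""
    if not 0 <= num_accepted <= len(slots):
        raise ValueError("num_accepted out of range")
    return main_state if num_accepted == 0 else slots[num_accepted - 1]


main = 1.0
draft = [7, 3, 5]                  # hypothetical ids for a three-token draft
slots = draft_into_slots(main, draft)
new_main = commit(main, slots, 2)  # suppose the verifier accepts two candidates
print(new_main == ssm_step(ssm_step(main, 7), 3))  # True
```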

In more complex Top-K > 1 scenarios, where multiple candidate branches are generated at each step, the approach further introduces a parent-index precomputation mechanism. During speculative generation, the system explicitly records, for each candidate token, its parent node in the speculation tree. During verification, it follows this index back to the corresponding parent state and performs the recursive update $S_i = f(S_{\mathrm{parent}(i)}, x_i)$. This design preserves Eagle-Tree’s multi-path exploration capability while aligning it with SSM state evolution, extending efficient speculative decoding to SSM architectures and providing a practical path for real-time inference.

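For Top-K > 1 the same idea extends to a draft tree. In this sketch (hypothetical names, a toy scalar state, and the assumption that parents are listed before their children), each node's state is computed from its parent's slot via the precomputed parent index, and the accepted branch is recovered by walking parent indices back to the root.

```python
from typing import List


def ssm_step(state: float, token: int) -> float:
    # Toy recurrence S_t = f(S_{t-1}, x_t).
    return 0.5 * state + token


def tree_states(main_state: float, tokens: List[int], parents: List[int]) -> List[float]:
    """One state slot per draft-tree node: S_i = f(S_parent(i), x_i).

    parents[i] == -1 means node i hangs off the committed main state; every
    parent must appear before its children (the precomputed parent index).
    """
    states: List[float] = []
    for tok, p in zip(tokens, parents):
        if p >= len(states):
            raise ValueError("parent must precede child")
        prev = main_state if p == -1 else states[p]
        states.append(ssm_step(prev, tok))
    return states


def accepted_path(parents: List[int], last: int) -> List[int]:
    # Follow parent indices from the last accepted node back to the root.
    path: List[int] = []
    while last != -1:
        path.append(last)
        last = parents[last]
    return path[::-1]


tokens = [7, 8, 3, 4, 5]     # hypothetical tree: two first-level candidates,
parents = [-1, -1, 0, 0, 2]  # two children under node 0, one child under node 2
states = tree_states(1.0, tokens, parents)
path = accepted_path(parents, 4)  # suppose the verifier accepts the branch ending at node 4
print(path, states[path[-1]])     # [0, 2, 4] 8.375
```

The promoted state equals a sequential replay of the accepted tokens `7, 3, 5`, even though sibling branches were evaluated side by side.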

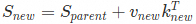

SGLang’s prefill/decode (PD) disaggregation architecture extends the transport protocol with dedicated state-transfer channels for different attention mechanisms, enabling efficient disaggregated deployment of hybrid models. Beyond standard paged KV-cache transfer, the system provides independent, parallel data paths for model-specific internal states, such as the SSM state in Mamba and the window cache in sliding-window attention, so that states other than the attention KV cache can also be transferred efficiently. This design lets the system adapt to emerging attention mechanisms without large-scale refactoring of the core scheduling and communication logic.

Take a hybrid model that includes both attention layers and SSM layers as an example. The system maintains two independent memory pools: a paged KV-cache pool for attention layers, and a Mamba state pool dedicated to storing the SSM state required by SSM layers. When a new request arrives, the system first performs prefix matching via the MambaRadixTree. On a cache hit, it copies the matched MambaState into a newly allocated Mamba state buffer for the request and continues prefill inference from that point. After prefill completes, the prefill worker transfers the final Mamba state as a contiguous memory block to the decode worker in a single atomic operation; the decode worker uses dst_state_indices to tell the prefill worker which destination slot should receive the state. Unlike paged KV cache, which supports incremental transfer, Mamba state must be transferred as a whole and cannot be sent in segments. To ensure the state is placed correctly, the decode worker preallocates the corresponding KV-cache page slots and dedicated Mamba state slots during request scheduling, so the received state is written precisely to the memory locations required by subsequent decode steps—ensuring inference continuity and correctness.
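The two transfer granularities can be contrasted in a small sketch. Buffers are plain Python lists and `TransferPlan` is hypothetical; only `dst_state_indices` mirrors the name in the text. KV pages are copied page by page (so they could be sent incrementally), while the Mamba state goes as one whole entry into the slot the decode worker preallocated.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TransferPlan:
    """Destinations chosen by the decode worker when it schedules the request."""
    dst_kv_pages: List[int]       # preallocated KV-cache pages, one per source page
    dst_state_indices: List[int]  # exactly one preallocated Mamba slot per request


def transfer_request(src_kv: List[List[float]], src_pages: List[int],
                     src_state: List[List[float]], src_state_idx: int,
                     dst_kv: List[List[float]], dst_state: List[List[float]],
                     plan: TransferPlan) -> None:
    if len(src_pages) != len(plan.dst_kv_pages):
        raise ValueError("source and destination page counts differ")
    # Paged KV cache: each page is an independent unit, so pages could be sent
    # incrementally as chunks of prefill finish.
    for s, d in zip(src_pages, plan.dst_kv_pages):
        dst_kv[d] = list(src_kv[s])
    # Mamba state: only the final post-prefill state is valid, so it is copied as
    # one whole entry; the unpacking enforces a single destination slot.
    (dst_slot,) = plan.dst_state_indices
    dst_state[dst_slot] = list(src_state[src_state_idx])


src_kv, src_state = [[1.0], [2.0], [3.0]], [[0.0, 0.0], [0.5, 0.25]]
dst_kv = [[0.0] for _ in range(4)]
dst_state = [[0.0, 0.0] for _ in range(3)]
plan = TransferPlan(dst_kv_pages=[3, 1], dst_state_indices=[2])
transfer_request(src_kv, [0, 2], src_state, 1, dst_kv, dst_state, plan)
print(dst_kv, dst_state[2])  # [[0.0], [3.0], [0.0], [1.0]] [0.5, 0.25]
```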

To integrate a new hybrid state pool into the existing PD architecture for disaggregated serving, only minimal extensions are required on top of the current implementation. First, expose the buffer pointer, total size, and per-entry length for the new state type so it can be registered with the unified transport system. Second, define the state_indices generation logic on both the prefill and decode workers, specifying the source and destination addresses of the states to be transferred. This logic must be customized to the attention mechanism—for example, full attention or sparse attention typically uses token- or block-granularity KV-cache page indices; SSM layers use a single per-request index; and sliding-window attention can be managed via window page indices. Finally, register a unique state_type identifier for the new state in the KV-cache manager, and add the corresponding read/write and transfer-handling logic in the backend transport module. The entire process is highly modular and does not require intrusive changes to the core scheduling flow.
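The three integration steps (expose the buffer pointer, total size, and entry length; define per-mechanism `state_indices`; register a unique `state_type`) could look roughly like this. Everything here is a hypothetical sketch: `TransportRegistry`, `StateBufferSpec`, the page size, the per-request page layout, and the window length are assumptions chosen to make the index arithmetic concrete, not SGLang's transport API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

PAGE_SIZE = 16          # tokens per KV page (assumed)
MAX_PAGES_PER_REQ = 64  # assumed contiguous page range per request slot
WINDOW = 32             # assumed sliding-window length in tokens


@dataclass(frozen=True)
class StateBufferSpec:
    state_type: str   # unique identifier registered with the KV-cache manager
    data_ptr: int     # base address of the buffer
    total_bytes: int  # total buffer size
    item_bytes: int   # length of one entry (one KV page or one per-request state)


IndexFn = Callable[[int, int], List[int]]  # (request_slot, seq_len) -> entry indices


def full_attn_pages(req: int, seq_len: int) -> List[int]:
    # Full attention: every page holding any of the request's tokens.
    base = req * MAX_PAGES_PER_REQ
    return [base + i for i in range((seq_len + PAGE_SIZE - 1) // PAGE_SIZE)]


def swa_pages(req: int, seq_len: int) -> List[int]:
    # Sliding window: only the pages overlapping the last WINDOW tokens.
    if seq_len == 0:
        return []
    base = req * MAX_PAGES_PER_REQ
    first = max(0, seq_len - WINDOW) // PAGE_SIZE
    last = (seq_len - 1) // PAGE_SIZE
    return [base + p for p in range(first, last + 1)]


def mamba_index(req: int, seq_len: int) -> List[int]:
    # SSM layers: a single per-request entry, independent of sequence length.
    return [req]


class TransportRegistry:
    """Hypothetical registry for the extension steps described above."""

    def __init__(self) -> None:
        self.specs: Dict[str, Tuple[StateBufferSpec, IndexFn]] = {}

    def register(self, spec: StateBufferSpec, index_fn: IndexFn) -> None:
        if spec.state_type in self.specs:
            raise ValueError(f"duplicate state_type {spec.state_type!r}")
        if spec.item_bytes <= 0 or spec.total_bytes % spec.item_bytes:
            raise ValueError("total_bytes must be a positive multiple of item_bytes")
        self.specs[spec.state_type] = (spec, index_fn)

    def byte_ranges(self, state_type: str, req: int, seq_len: int) -> List[Tuple[int, int]]:
        # Turn entry indices into (address, length) pairs for the transport backend.
        spec, index_fn = self.specs[state_type]
        return [(spec.data_ptr + i * spec.item_bytes, spec.item_bytes)
                for i in index_fn(req, seq_len)]


reg = TransportRegistry()
reg.register(StateBufferSpec("mamba", 0x1000, 8 * 4096, 4096), mamba_index)
print(reg.byte_ranges("mamba", 3, 1000))  # [(16384, 4096)]
```

Only the index function differs per mechanism; the registry and byte-range translation stay the same, which is what keeps the extension non-intrusive.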

With these mechanisms, SGLang achieves unified, efficient, and extensible management of heterogeneous model states. It is compatible not only with traditional Transformer architectures but also seamlessly supports emerging variants such as Mamba and sliding-window attention (SWA), providing a solid foundation for high-performance disaggregated inference of hybrid-architecture LLMs.

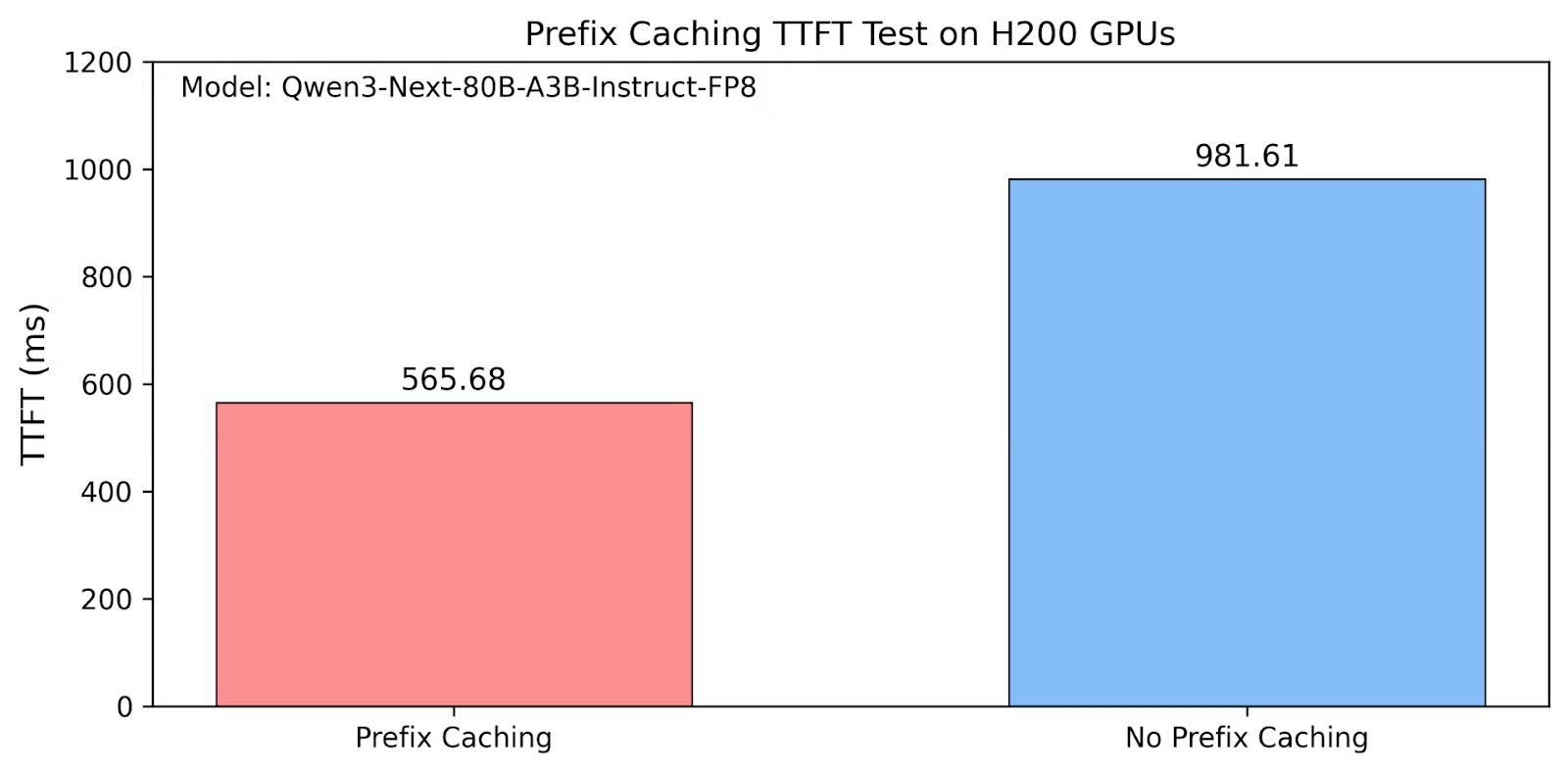

SGLang v0.5.5 was evaluated on an H200 GPU with Qwen3-Next-80B-A3B-Instruct-FP8 to validate the designs described above. As shown in the figure below, enabling prefix matching (“store-to-save-compute”) avoids recomputing matched prefixes and reduces TTFT to 57.63% of its original value.

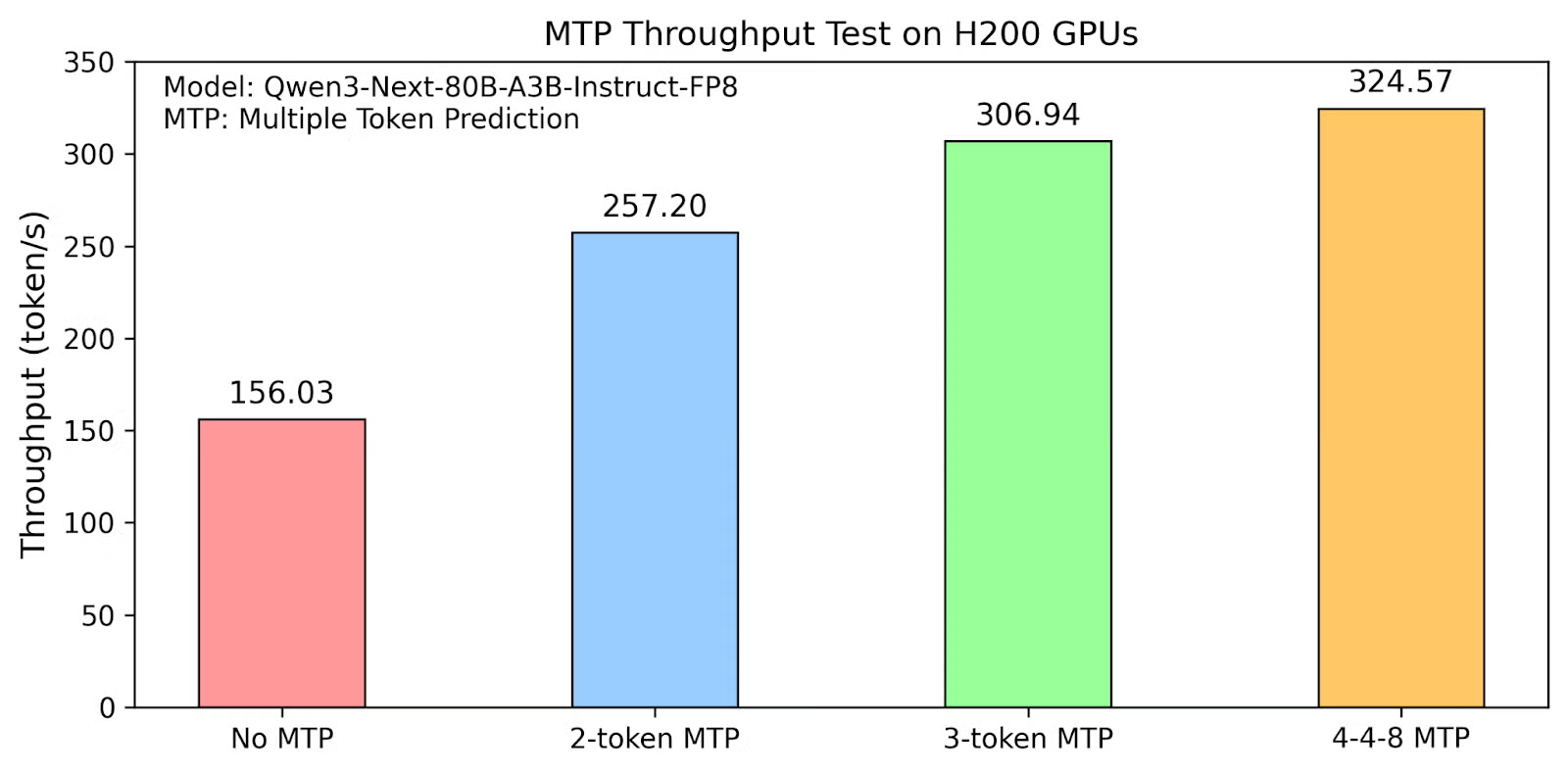

Speculative decoding was benchmarked for Qwen3-Next-80B-A3B-Instruct-FP8 with batch size = 1:

● With an MTP window of 2 tokens and top-k = 1, throughput reaches 257.20 tokens/s, with an average accepted length of 2.709 tokens.

● Expanding the MTP window to 3 tokens (still top-k = 1) increases throughput to 306.94 tokens/s, with an average accepted length of 3.413 tokens.

● Further setting the MTP window to 4 tokens, using top-k = 4 and 8 draft tokens, increases throughput to 324.57 tokens/s, with an average accepted length of 4.231 tokens.

As hybrid-architecture model inference continues to be optimized, SGLang is advancing along three major technical directions to further expand the application boundaries of hybrid models.

First, significant progress has been made in improving the generality and flexibility of caching mechanisms. The upgraded MambaRadixTree now fully supports flexible granularity configurations with page size > 1, and achieves deep compatibility with advanced mechanisms such as MTP (Multi-Token Prediction), the Overlap Scheduler, and Branching Position. This progress not only effectively addresses management overhead under ultra-long sequences, but also significantly improves memory utilization, establishing the system’s ability to efficiently adapt to diverse inference patterns.

Building on this foundation, Alibaba Cloud Tair KVCache will work closely with SGLang to drive deeper integration between the HiCache hierarchical KV caching architecture and hybrid models. This effort will involve restructuring multi-level hybrid storage, along with designing efficient storage-engine query interfaces and cache scheduling policies, with the goal of further improving cache hit rates and providing low-latency, high-throughput support for hybrid models in massive-data scenarios.

Finally, to ensure strict consistency between training and inference, ongoing work will focus on enabling bit-level deterministic inference. By eliminating numerical deviations caused by non-deterministic operations, this is expected to further improve experimental reproducibility and the reliability of production deployments, completing the closed loop from “high performance” to “high trustworthiness”.
