By Yanhua

In the database field, the term large-scale transactions specifically refers to DML operations with a long execution time and an effect on a large amount of data, which are required to be completed at one time under the ACID principle. The emergence of large-scale transactions is an extreme test for the processing capability of the database system, which is directly related to data integrity and system stability.

From the perspective of triggering, the emergence of large-scale transactions is closely related to specific application scenarios. For example, in data warehouses or big data processing, it is often necessary to integrate massive amounts of data, such as batch data import, complex report generation, large-scale data update or deletion, and DML update of large field columns. Each type has its specific application background.

From the perspective of the database itself, large-scale transactions can be divided into multi-row large-scale transactions and large-object large-scale transactions. The former refers to common large-scale transactions that contain modifications to many rows of data, while the latter refers to special large-scale transactions that contain large-scale object columns with a large amount of data. When handling these two types of large-scale transactions, the database needs to handle them separately to ensure less impact on other ordinary transactions.

Although large-scale transactions meet specific business requirements, they also pose significant challenges to the stability and performance of the database system:

• In terms of resource usage and performance bottlenecks, large-scale transactions not only occupy a large amount of memory and CPU resources but also cause a surge in disk I/O, which further affects the response time and throughput of the entire database. The direct manifestation is that the proportion of slow queries increases significantly when large-scale transactions are running.

• In terms of lock occupation, large-scale transactions may hold locks for a long time due to a wide range of data involved, which not only affects the normal execution of other transactions but also may lead to deadlocks, further deteriorating the system status. The direct manifestation is the lock wait timeout of the ordinary transaction execution.

• In terms of crash recovery, a large number of logs generated by large-scale transactions occupy a large amount of storage space. During system crash recovery, large-scale transaction logs significantly extend the recovery time (RTO) of the database, affecting business continuity.

• In terms of data consistency, during the execution of large-scale transactions, the probability of system failure increases synchronously with the scope of impact. Once a failure occurs, a large number of executed operations may need to be rolled back, which not only increases the complexity of data recovery but may also affect the consistency of a large amount of data, resulting in high repair costs.

Combined with the entire forwarding process of transactions in MySQL, let's take a look at how MySQL processes large-scale transactions:

• Before concurrent transactions enter the group commit process, each thread processes various SQL statements before the commit of transactions, generates binlog events, and writes them to the thread-private io_cache. If the thread encounters a large-scale transaction, such as 200M, it will exceed the default maximum memory cache of 32K for io_cache and need to be persisted in a temporary file.

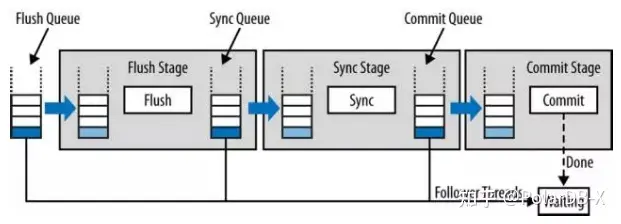

• After concurrent transactions enter the group commit process, according to the flush->sync->commit group commit process of MySQL, the commit of transactions in the same group is led by the leader thread and performed in the order of transactions in the group.

• In the flush phase, the leader thread copies the binlog events on the io_cache of each thread to the global binlog file in the order within the group (without disk flushing). When encountering a thread of a large-scale transaction, since the binlog event of the large-scale transaction is persisted to a temporary file, the content of the temporary file needs to be read from I/O and then written to the global binlog file. Compared with the memory copy of ordinary transactions, the flush of large-scale transactions here will cause the flush of the current group to take a longer time, affecting the commit of ordinary transactions in the same group.

• In the sync phase, due to the existence of large-scale transactions, the I/O duration of the sync action will also be longer.

• In the commit phase, the commit order of the engine is required to be consistent with the binlog event order by default, which in turn causes other ordinary transactions in the same group can continue to be committed only after the large-scale transaction engine is committed. The commit of ordinary transactions in the same group is affected.

• If a MySQL instance is deployed using both the primary and the secondary databases, the transaction is committed until the secondary database receives the complete transaction and responds with an ACK. Due to the existence of large-scale transactions in the group commit, the network transmission time and retransmission will be seriously increased, affecting the commit of ordinary transactions in the same group.

• In the above process, the group commit process strictly controls concurrency through locks. Only one group of transactions can be processed in each phase of flush, sync, and commit at the same time. Therefore, when a large-scale transaction affects the processing of ordinary transactions in the same group, the throughput of the entire system is also dragged down.

Faced with various challenges brought by the large-scale transaction issue, MySQL has not had a good solution. It can only try to mitigate its negative impact by adding restrictions to warn or directly interrupt long-running large-scale transactions, or adjusting parameters binlog_cache_size, sync_binlog, and net_write_timeout. However, if the business itself has to have a large-scale transaction application scenario and has high requirements for system stability and smoothness, then MySQL is unable to meet the requirements.

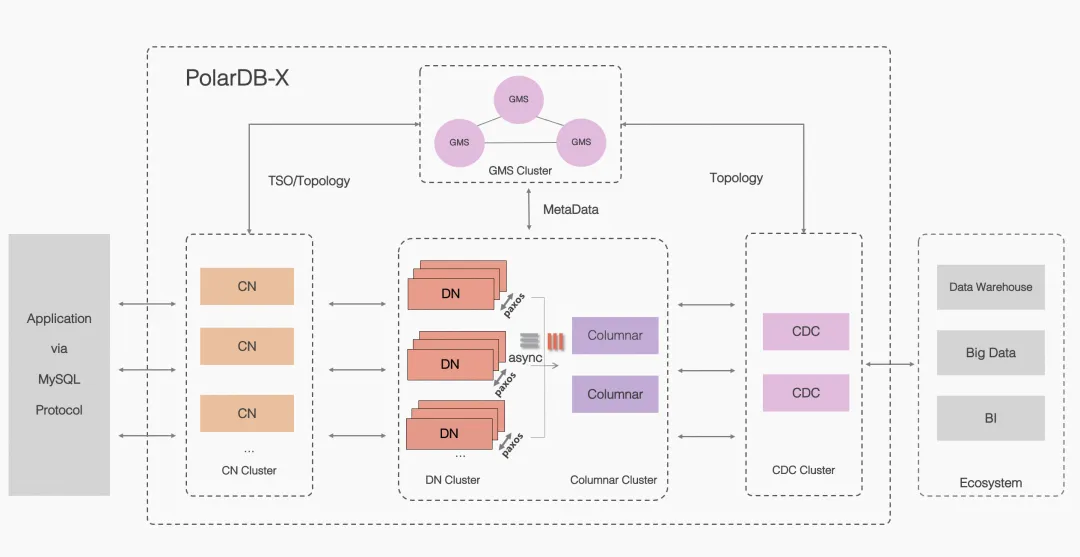

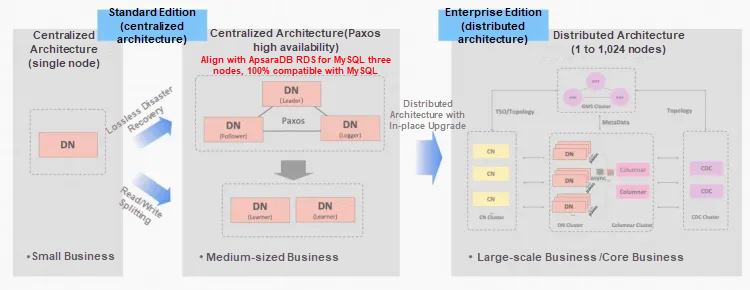

As the distributed edition of PolarDB, PolarDB-X adopts a Shared-Nothing architecture based on storage and computing separation, with capabilities such as high availability of financial-grade data, distributed scale-out, hybrid loads, low-cost storage, and high scalability. The PolarDB-X compute node (CN) adopts a stateless design and provides the capabilities of data-distributed routing execution and dynamic scheduling. The PolarDB-X storage node (DN) adopts multi-replica synchronous replication of the X-Paxos protocol to ensure strong data consistency.

To solve the issue of large-scale transactions, PolarDB-X adopts the idea of "divide and conquer": CN transparent distribution + DN large-scale transaction splitting + DN large-scale object splitting.

With the large data volume, the transaction commit on a single instance has a great impact. Then, you can first shard the data for storage and split a large-scale transaction on one instance into small transactions on multiple instances. To provide distributed databases that can be managed as easily as standalone MySQL databases, PolarDB-X CN provides easy-to-use and transparent distribution capabilities:

• By default, PolarDB-X uses a primary key for sharding. This way, you do not need to specify a "partition key" when you migrate business data to PolarDB-X.

• PolarDB-X ensures high performance and strong consistency for distributed transactions. It uses the TSO policy and distributed MVCC capabilities to ensure the isolation and consistency of distributed transactions.

• Supporting linear scaling of distributed databases, PolarDB-X uses consistent hashing for partitioning to effectively implement load balancing and reduce hotspotting. During a scale-out, PolarDB-X pushes down calculation processes and ensures data consistency. The scale-out of a PolarDB-X instance does not affect your business. PolarDB-X also supports parallel queries and throttling to ensure business continuity during scale-outs.

• PolarDB-X provides global binlogs to resolve the issues of data forwarding from compute nodes to data nodes in distributed databases. In distributed databases, if you restore nodes based on backup files that are created at different points in time, data inconsistency can occur. To resolve this issue, PolarDB-X ensures data consistency before backup and creates backup files based on the globally consistent data.

If you are interested, you can move to PolarDB-X Support for Ultra-large-scale transactions.

For large-scale transactions that cannot continue to be stored in shards on a single DN but still have a large amount of data, PolarDB-X DN provides the large-scale transaction splitting capability. To put it simply, a large-scale transaction is split into multiple small transactions while storing data on disks and network sending. The details are explained below.

• In the PolarDB-X DN group commit process based on X-Paxos multi-replica, the transaction commit process is expanded into three phases: flush -> sync+send -> wait+commit. Send indicates that the content of this group of transactions is asynchronously sent to other replicas in the background after the sync is completed, and wait indicates that each transaction in this group waits for the majority of transactions to complete synchronization before the commit starts. In large-scale transaction scenarios, if you do not enable large-scale transaction splitting, then the problem faced by MySQL cannot be solved by DN either.

• After large-scale transaction splitting is enabled, a large-scale transaction is logically split into several small transactions in the flush phase and each small transaction contains several complete events. During splitting, flush->sync->send is directly performed on each small transaction. The sync and send here will also carry along other ordinary transactions that have completed the flush action. By splitting, the jitter of large-scale transactions during flush, sync, and send actions can be smoothened as much as possible, avoiding severe jitter and network retransmission.

• Since the sync and send actions have been performed in advance in the flush phase, large-scale transactions do not need to do anything in the sync phase of the group commit.

• In PolarDB-X DN, the order in which transactions are committed in the engine is not required to be consistent with that in the binlog by default. Transactions in the same group can be committed to the engine in parallel. Therefore, in the commit phase of group commit, large-scale transactions keep the same wait state as ordinary transactions and then commit respectively so that the commit phase of large-scale transactions no longer blocks that of ordinary transactions.

• In large-scale transaction scenarios, enabling large-scale transaction splitting balances the overall transaction commit. Ordinary transactions that are committed in the same group as large-scale transactions (in the same phase or different phases) but earlier than large-scale transactions are no longer blocked by large-scale transactions in the same group. For those that are committed in the same phase as large-scale transactions but later than large-scale transactions, due to the sequence of transaction commit, they still have to wait for large-scale transactions to be committed. However, because of the more lightweight send action after splitting, the probability of network packet loss or timeout retransmission will be minimized. Compared with the case of no splitting, the commit latency of large-scale transactions will be lower, so the impact on the ordinary transactions queued behind is also much smaller.

PolarDB-X DN also provides a variety of parameters for the evaluation threshold and splitting granularity of large-scale transactions.

• consensus_large_trx, the main switch for large-scale transaction splitting, enabled by default

• consensus_max_log_size, the evaluation threshold for large-scale transactions, 20M by default

• consensus_large_trx_split_size, the splitting granularity for large-scale transactions, 2M by default

Since the theory is a bit convoluted, let's take a look at a practical example. We lower the evaluation threshold and splitting granularity of large query transactions, force ordinary transactions to be marked as large-scale transactions, split large-scale transactions, and then execute show binlog event statements to view the event composition after splitting large-scale transactions.

# When there is no large-scale transaction splitting

#consensus_large_trx=OFF

| master-bin.000001 | 456 | Consensus_log | 1 | 515 | ##CONSENSUS FLAG: 0 TERM: 19 INDEX: 40 LENGTH: 509 RESERVE: 0' |

| master-bin.000001 | 515 | Gcn | 1 | 547 | SET @@SESSION.INNODB_COMMIT_SEQ=1024 |

| master-bin.000001 | 547 | Gtid | 1 | 626 | SET @@SESSION.GTID_NEXT= '64a87b61-0eb6-11ef-b4bd-0242c0a80505:11' |

| master-bin.000001 | 626 | Query | 1 | 714 | XA START X'7878',X'',1 |

| master-bin.000001 | 714 | Table_map | 1 | 768 | table_id: 92 (d1.t1) |

| master-bin.000001 | 768 | Write_rows | 1 | 900 | table_id: 92 flags: STMT_END_F |

| master-bin.000001 | 900 | Query | 1 | 986 | XA END X'7878',X'',1 |

| master-bin.000001 | 986 | XA_prepare | 1 | 1024 | XA PREPARE X'7878',X'',1 |

# When large-scale transaction splitting is enabled

#consensus_large_trx=ON

#consensus_max_log_size=150

#consensus_large_trx_split_size=50

#consensus_large_event_split_size=150

| master-bin.000002 | 456 | Consensus_log | 1 | 515 | ##CONSENSUS FLAG: 4 TERM: 13 INDEX: 8 LENGTH: 111 RESERVE: 0' |

| master-bin.000002 | 515 | Gcn | 1 | 547 | SET @@SESSION.INNODB_COMMIT_SEQ=1024 |

| master-bin.000002 | 547 | Gtid | 1 | 626 | SET @@SESSION.GTID_NEXT= '64a87b61-0eb6-11ef-b4bd-0242c0a80505:5' |

| master-bin.000002 | 626 | Consensus_log | 1 | 685 | ##CONSENSUS FLAG: 4 TERM: 13 INDEX: 9 LENGTH: 88 RESERVE: 0' |

| master-bin.000002 | 685 | Query | 1 | 773 | XA START X'7878',X'',1 |

| master-bin.000002 | 773 | Consensus_log | 1 | 832 | ##CONSENSUS FLAG: 4 TERM: 13 INDEX: 10 LENGTH: 54 RESERVE: 0' |

| master-bin.000002 | 832 | Table_map | 1 | 886 | table_id: 92 (d1.t1) |

| master-bin.000002 | 886 | Consensus_log | 1 | 945 | ##CONSENSUS FLAG: 4 TERM: 13 INDEX: 11 LENGTH: 132 RESERVE: 0' |

| master-bin.000002 | 945 | Write_rows | 1 | 1077 | table_id: 92 flags: STMT_END_F |

| master-bin.000002 | 1077 | Consensus_log | 1 | 1136 | ##CONSENSUS FLAG: 4 TERM: 13 INDEX: 12 LENGTH: 86 RESERVE: 0' |

| master-bin.000002 | 1136 | Query | 1 | 1222 | XA END X'7878',X'',1 |

| master-bin.000002 | 1222 | Consensus_log | 1 | 1281 | ##CONSENSUS FLAG: 8 TERM: 13 INDEX: 13 LENGTH: 38 RESERVE: 0' |

| master-bin.000002 | 1281 | XA_prepare | 1 | 1319 | XA PREPARE X'7878',X'',1 |In PolarDB-X DN, a complete transaction is marked by a Consensus_log. During large-scale transaction splitting, Consensus_log also assumes the role of the split transaction. When there is no large-scale transaction splitting, the transaction has only one Consensus_log. When large-scale transaction splitting is enabled, the transaction is encapsulated into six independent transaction blocks using a new Consensus_log based on each event.

The specific difference of Consensus_log is in the FLAG field. Except for the flag of the last split large-scale transaction, which is 8 bits, the flag of the other split blocks is 4 bits. The Consensus_log flag of unsplit ordinary transactions is 0. We will not dive into the details of the code here.

For large-scale transactions that cannot continue to be stored in shards on a single DN, with the limited number of data rows modified by transactions and large-scale objects in a single row, resulting in a large amount of data modification in the total transaction, PolarDB-X DN provides the large-scale transactions splitting capability. To put it simply, a large event continues to be physically split during the original large-scale transaction splitting process. The details are explained below.

• In the flush phase of large-scale transaction splitting mentioned earlier, for a single event in a large-scale transaction that is determined to be a large event, it will continue to be physically split into multiple blocks according to the large-scale object splitting granularity, with each block being marked as a split transaction block. Since the event itself has the minimum recognition granularity, it can only be physically split here.

• To be compatible with the binlog service, the blocks split from the large event are actually only sent to each small transaction block during the send process, while the local binlog file stores the same number of transaction blocks with the last transaction block being a complete large event and the previous transaction blocks being Consensus_empty event. After the network of the secondary database receives the large event in blocks from the primary database, the event is persisted to the binlog in the same way as the primary database.

• The whole process is the same as that of other split large-scale transactions. In the splitting process, flush->sync->send is directly performed on each small transaction block. The sync and commit phases are the same. The actual effect is also the same as that of large-scale transaction splitting.

PolarDB-X DN also provides a variety of parameters for the evaluation threshold and splitting granularity of large-scale objects.

• consensus_max_log_size, the splitting threshold for large-scale objects, 20M by default

• consensus_large_event_split_size, the splitting granularity for large-scale objects, 2M by default

Taking the same transaction above as an example, we lower the evaluation threshold and granularity of large-scale object transactions, force the ordinary Write_rows event to be marked as the large event, split large-scale objects, and then execute show binlog event statements to view the event composition after splitting large-scale objects.

# When large-scale object splitting is enabled

#consensus_large_trx=ON

#consensus_max_log_size=120

#consensus_large_trx_split_size=50

#consensus_large_event_split_size=50

| master-bin.000003 | 456 | Consensus_log | 1 | 515 | ##CONSENSUS FLAG: 4 TERM: 17 INDEX: 28 LENGTH: 111 RESERVE: 0' |

| master-bin.000003 | 515 | Gcn | 1 | 547 | SET @@SESSION.INNODB_COMMIT_SEQ=1024 |

| master-bin.000003 | 547 | Gtid | 1 | 626 | SET @@SESSION.GTID_NEXT= '64a87b61-0eb6-11ef-b4bd-0242c0a80505:9' |

| master-bin.000003 | 626 | Consensus_log | 1 | 685 | ##CONSENSUS FLAG: 4 TERM: 17 INDEX: 29 LENGTH: 88 RESERVE: 0' |

| master-bin.000003 | 685 | Query | 1 | 773 | XA START X'7878',X'',1 |

| master-bin.000003 | 773 | Consensus_log | 1 | 832 | ##CONSENSUS FLAG: 4 TERM: 17 INDEX: 30 LENGTH: 54 RESERVE: 0' |

| master-bin.000003 | 832 | Table_map | 1 | 886 | table_id: 92 (d1.t1) |

| master-bin.000003 | 886 | Consensus_log | 1 | 945 | ##CONSENSUS FLAG: 164 TERM: 17 INDEX: 31 LENGTH: 23 RESERVE: 0' |

| master-bin.000003 | 945 | Consensus_empty | 1 | 968 | ##CONSENSUS EMPTY' |

| master-bin.000003 | 968 | Consensus_log | 1 | 1027 | ##CONSENSUS FLAG: 36 TERM: 17 INDEX: 32 LENGTH: 23 RESERVE: 0' |

| master-bin.000003 | 1027 | Consensus_empty | 1 | 1050 | ##CONSENSUS EMPTY' |

| master-bin.000003 | 1050 | Consensus_log | 1 | 1109 | ##CONSENSUS FLAG: 68 TERM: 17 INDEX: 33 LENGTH: 132 RESERVE: 0' |

| master-bin.000003 | 1109 | Write_rows | 1 | 1241 | table_id: 92 flags: STMT_END_F |

| master-bin.000003 | 1241 | Consensus_log | 1 | 1300 | ##CONSENSUS FLAG: 4 TERM: 17 INDEX: 34 LENGTH: 86 RESERVE: 0' |

| master-bin.000003 | 1300 | Query | 1 | 1386 | XA END X'7878',X'',1 |

| master-bin.000003 | 1386 | Consensus_log | 1 | 1445 | ##CONSENSUS FLAG: 8 TERM: 17 INDEX: 35 LENGTH: 38 RESERVE: 0' |

| master-bin.000003 | 1445 | XA_prepare | 1 | 1483 | XA PREPARE X'7878',X'',1 |It can be seen that after large-scale transaction/large-scale object splitting is enabled, in addition to the existing large-scale transaction splitting, Write_rows exceeding the threshold are also split into three blocks. The flag of the first block is 164 bits, the second block 36 bits, and the last block 68 bits. Consensus_log flag of the split blocks of other large-scale transactions is 4 or 8 bits.

Currently, PolarDB-X Standard Edition is open-source. DN is exactly the same in PolarDB Enterprise Edition and Standard Edition. Enterprise Edition refers to an enterprise-level combination of CN and DN, and Standard Edition is only a three-node high-availability deployment of DN. Here, we take the latest open-source PolarDB-X Standard Edition as an example to verify the effect of large-scale transaction splitting of PolarDB-X DN.

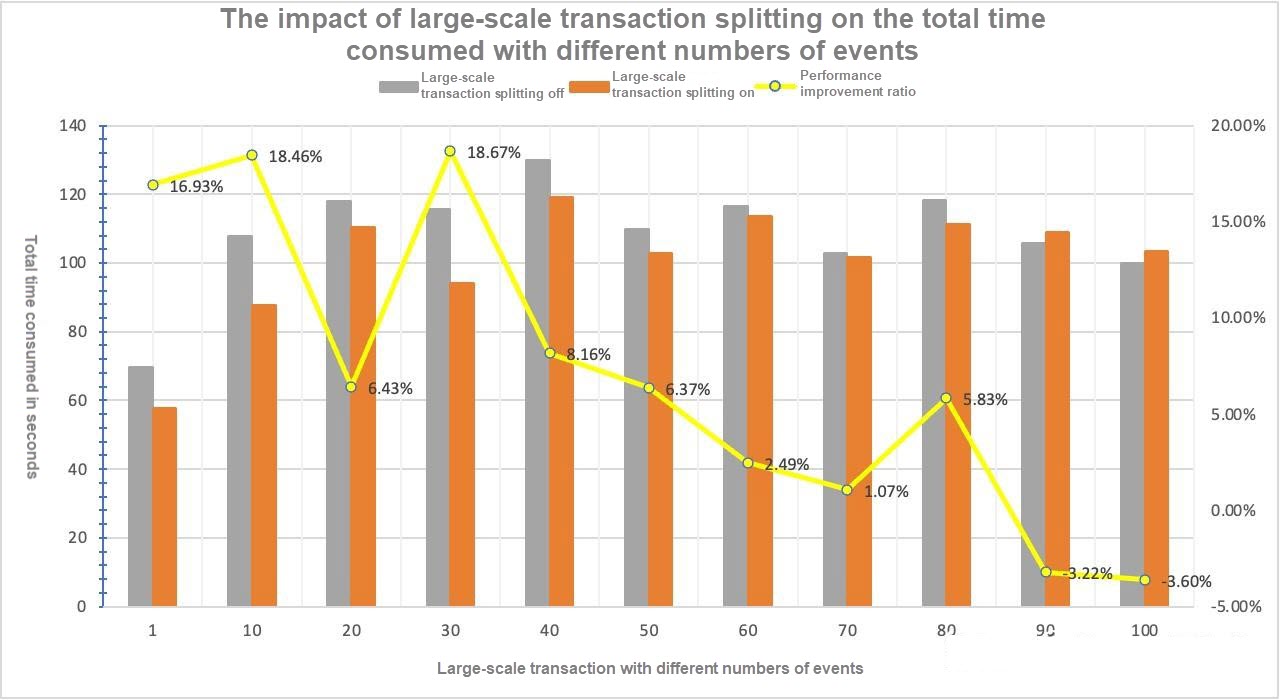

We deploy a DN Standard Edition cluster (Leader+Flollower+Logger) based on the specifications and parameters of 32C128GB on the same physical machine with 96C754GB memory. Performance testing uses the sysbench tool to test the oltp_read_write scenario performance metrics of 10 million rows of data in 20 tables.

To build a large-scale transaction scenario, we adjust the number of --index_updates, --non_index_updates, and --delete_inserts to create large-scale transactions. The size of ordinary oltp_read_write transaction events is 2,390 bytes and the size of created oltp_read_write large-scale transaction events is 40M or 200M.

The test is conducted for 50 concurrent requests with different numbers of events (1, 10, 20 ... 100) to see the impact of large-scale transaction splitting on the total time consumed. When large-scale transaction splitting is disabled, a single large-scale transaction DN of 200M cannot run (heartbeat timeout to re-elect the leader). Therefore, for scenarios with large-scale transactions disabled, parameters such as heartbeat and packet timeout need to be increased.

The test results show that for the read-and-write scenarios of completely large-scale transactions

• When large-scale transaction splitting is disabled, a single large-scale transaction cluster of 200M cannot run, and the heartbeat and packet-timeout must be adjusted. Such adjustments in actual generation will inevitably affect the stability of the cluster. However, the stability of the cluster is not affected after adopting the large-scale transaction splitting solution.

• Compared with the case where large-scale transaction splitting is not enabled, when the number of events does not exceed that of concurrency, the performance is improved significantly (6%~18%). The reason is that when the concurrency is 1, each large-scale transaction is most likely not in the same group commit, and the commit performance of a single large-scale transaction is better when split than that of no splitting. When the event exceeds the concurrency, the performance is basically not improved, because sync+send is split into multiple times with increased latency.

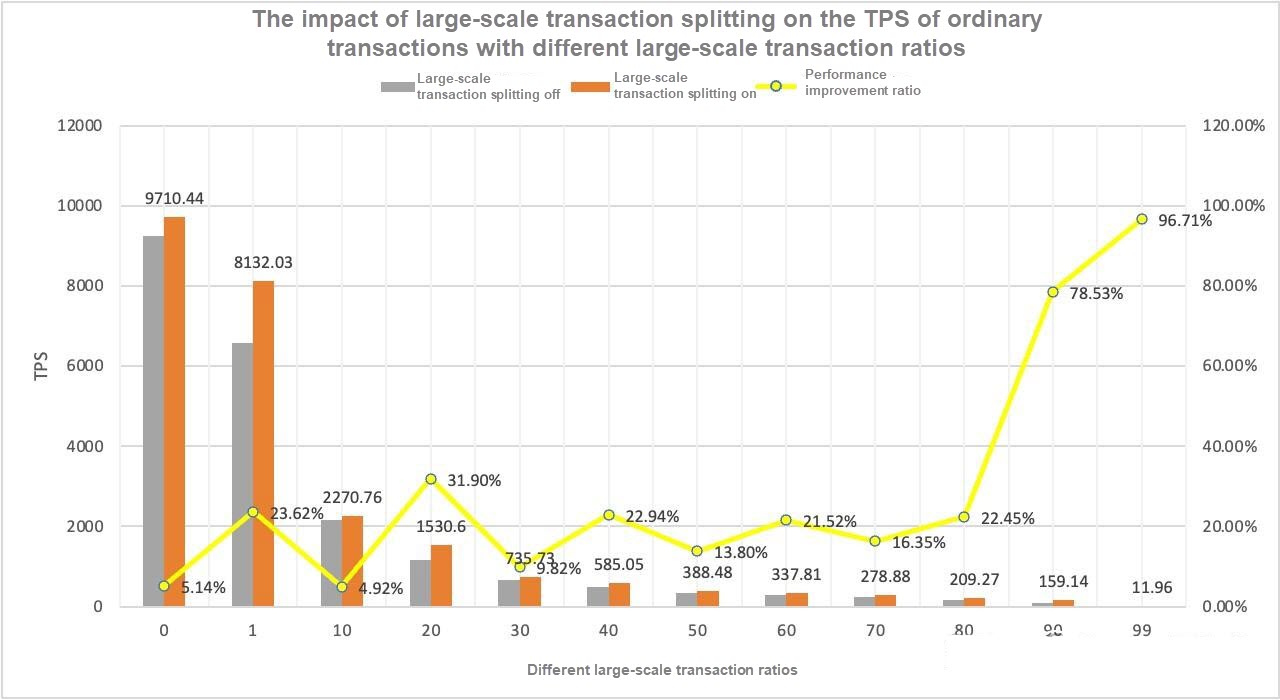

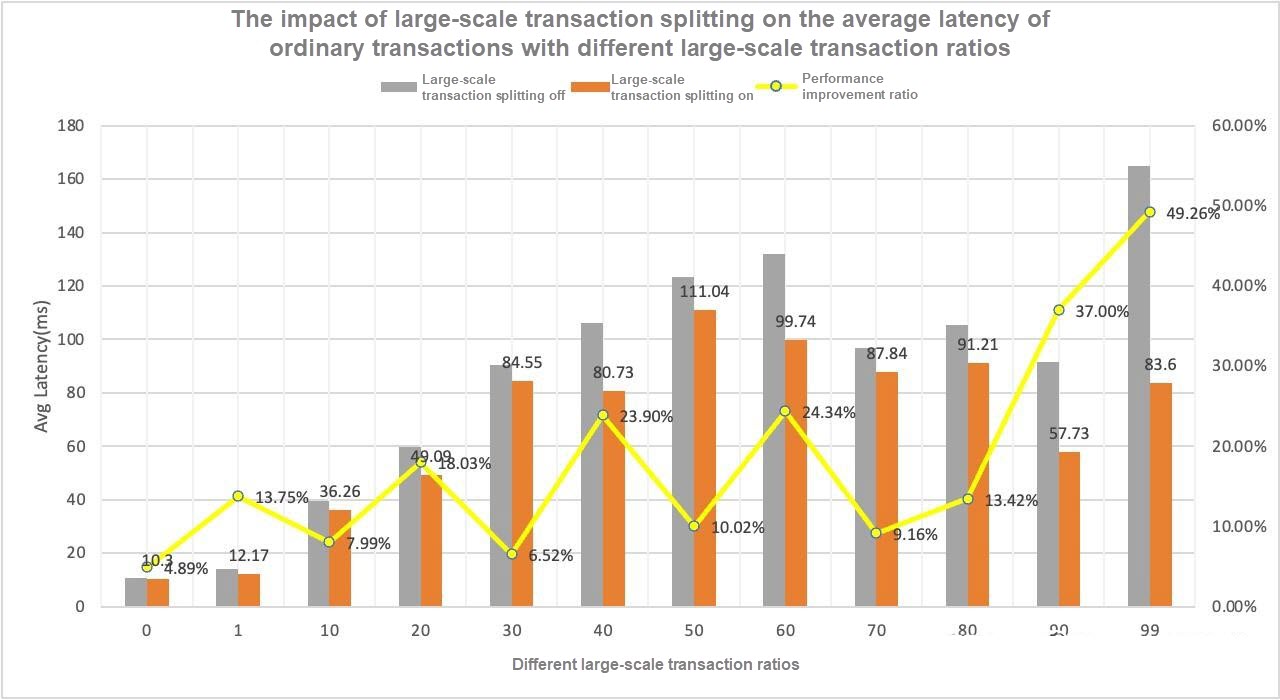

A test is conducted to see the impact of large-scale transaction splitting on the TPS/average latency of ordinary transactions with different large-scale transaction ratios. To construct the large-scale transaction ratio, we start two sysbench tasks, one of which runs a large-scale transaction with X threads and the other of which runs an ordinary transaction with 100-X threads. The proportion of large-scale transactions is controlled by adjusting X. After large-scale transaction splitting is disabled, even if the timeout parameter is adjusted, the system still cannot support the test of 200M large-scale transaction and ordinary transaction concurrency. Here, we can only continue to select 40M large-scale transactions for comparative testing.

The test results show that for the read-and-write scenarios in which large-scale transactions and ordinary transactions are mixed

• When large-scale transaction splitting is disabled, large-scale transactions of 200M cannot run concurrently with ordinary transactions. System availability is lost. After large-scale transaction splitting is enabled, system availability is not affected.

• When ordinary transactions and large-scale transactions are executed concurrently, with the increasing proportion of large-scale transaction concurrency, the TPS decreases significantly and the average latency of ordinary transactions gradually increases. When the proportion of large-scale transactions is greater than 60%, the trend is affected because the TPS of ordinary transactions is already very low, and the latency tends to be stable without comparative value any longer.

• As the proportion of large-scale transactions increases, large-scale transaction splitting can improve the TPS performance of ordinary transactions by 5% to 30%, and reduce the average latency by 5% to 35%.

PolarDB-X leverages the flexibility of the distributed architecture to distribute large-scale transactions and reduce the pressure on a single node, ensuring the stability and high performance of the overall system. CN transparent distribution allows seamless migration and scale-out without the need for users to pay attention to the details of data distribution. The transaction splitting mechanism at the DN layer can process large-scale transactions in detail, regardless of the amount of data or large-scale objects, to reduce the size of a single transaction, avoid blocking, and keep transactions smooth through logical and physical splitting. With the intelligent "divide and conquer" strategy, PolarDB-X provides advanced and practical solutions for large-scale transaction processing issues, strengthening the stability and efficiency of database processing capabilities in extreme scenarios.

Core Technology of PolarDB-X Storage Engine | Lizard Lock-free Backup

New Features You Should Not Miss in ApsaraDB RDS for PostgreSQL 17

ApsaraDB - July 1, 2024

ApsaraDB - January 23, 2024

ApsaraDB - January 17, 2025

ApsaraDB - May 16, 2025

ApsaraDB - March 26, 2025

ApsaraDB - October 24, 2024

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB