If your business needs database capabilities such as fast reads and writes, scalability, high availability, complex queries, and big data analysis, but your existing databases cannot provide them or are too costly to upgrade, you can use DataWorks Data Integration to migrate data from those databases to Tablestore tables. You can also migrate data between Tablestore tables across instances or Alibaba Cloud accounts, or export Tablestore data to Object Storage Service (OSS) or MaxCompute to back it up and use it with other services.

Use cases

DataWorks Data Integration is a stable, efficient, and scalable data synchronization platform. It is suitable for data migration and synchronization between multiple disparate data sources, such as MySQL, Oracle, MaxCompute, and Tablestore.

With DataWorks Data Integration, you can migrate database data to Tablestore, migrate Tablestore data across instances or Alibaba Cloud accounts, and migrate Tablestore data to OSS or MaxCompute.

Migrate database data to Tablestore

DataWorks provides stable and efficient data synchronization between disparate data sources. You can migrate data from various databases to Tablestore.

For information about the data sources and Reader and Writer plug-ins supported by DataWorks, see Supported data source types, Reader plug-ins, and Writer plug-ins.

Migrate or synchronize Tablestore data across instances or Alibaba Cloud accounts

Configure Tablestore-related Reader and Writer plug-ins in DataWorks to synchronize data in Tablestore data tables or time series tables. The following table describes Tablestore-related Reader and Writer plug-ins.

| Plug-in | Description |
| --- | --- |
| OTSReader | The plug-in is used to read data from Tablestore tables. Specify the range of data that you want to extract to perform incremental extraction. |
| OTSStreamReader | The plug-in is used to export data in Tablestore tables in incremental mode. |
| OTSWriter | The plug-in is used to write data to Tablestore. |
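The following Python sketch shows roughly how a Reader step and a Writer step pair up in a script-mode task that copies one Tablestore data table to another. It is an illustration only: the field names (`stepType`, `range`, `INF_MIN`, `INF_MAX`, `primaryKey`, `writeMode`) are assumptions based on the DataX-style OTS plug-in configuration, and the data source and table names are hypothetical. Check the Tablestore data source documentation for the authoritative parameters before you use any of them.

```python
import json


def build_ots_to_ots_job(src_datasource, src_table, dst_datasource, dst_table,
                         primary_key, attribute_columns):
    """Build a script-mode job description as a plain dict (assumed layout).

    primary_key: list of (name, type) tuples in primary key order.
    attribute_columns: list of (name, type) tuples.
    """
    all_columns = list(primary_key) + list(attribute_columns)
    reader = {
        "stepType": "ots",  # assumed step type for Tablestore
        "category": "reader",
        "name": "Reader",
        "parameter": {
            "datasource": src_datasource,
            "table": src_table,
            "column": [{"name": name} for name, _ in all_columns],
            # Full-table range: one bound per primary key column (assumed syntax).
            "range": {
                "begin": [{"type": "INF_MIN"} for _ in primary_key],
                "end": [{"type": "INF_MAX"} for _ in primary_key],
            },
        },
    }
    writer = {
        "stepType": "ots",
        "category": "writer",
        "name": "Writer",
        "parameter": {
            "datasource": dst_datasource,
            "table": dst_table,
            "primaryKey": [{"name": n, "type": t} for n, t in primary_key],
            "column": [{"name": n, "type": t} for n, t in attribute_columns],
            "writeMode": "UpdateRow",  # assumed write mode name
        },
    }
    return {"type": "job", "version": "2.0", "steps": [reader, writer]}


# Hypothetical data source and table names, for illustration only.
job = build_ots_to_ots_job(
    "src_ots", "orders", "dst_ots", "orders_copy",
    primary_key=[("order_id", "string"), ("seq", "int")],
    attribute_columns=[("amount", "double")],
)
print(json.dumps(job, indent=2))
```

Narrowing the `begin` and `end` bounds instead of using the full range is how a Reader would extract only part of a table, which corresponds to the range-based extraction described for OTSReader.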

Migrate Tablestore data to OSS or MaxCompute

Migrate Tablestore data to OSS or MaxCompute as needed.

MaxCompute is a fully managed data warehouse service that can process terabytes or petabytes of data at high speeds. Use MaxCompute to back up Tablestore data or migrate Tablestore data to MaxCompute and use Tablestore data in MaxCompute.

OSS is a secure, cost-effective, and highly reliable service that can store large amounts of data. Use OSS to back up Tablestore data or synchronize Tablestore data to OSS and download objects from OSS to your local devices.

Migration solutions

Use DataWorks Data Integration to migrate data between Tablestore and various data sources.

Use a data import solution to synchronize the following types of data to Tablestore: MySQL, Oracle, Kafka, HBase, and MaxCompute. You can also synchronize data across Tablestore data tables or time series tables.

Use a data export solution to synchronize data from Tablestore to MaxCompute or OSS.

Import data

The following table describes data import solutions.

| Solution | Description |
| --- | --- |
| Synchronize MySQL data to Tablestore | Migrate data from MySQL databases to Tablestore. Only Tablestore data tables are supported as the destination. During migration, the Reader script configurations of MySQL and the Writer script configurations of Tablestore are used. |
| Synchronize Oracle data to Tablestore | Migrate data from Oracle databases to Tablestore. Only Tablestore data tables are supported as the destination. During migration, the Reader script configurations of Oracle and the Writer script configurations of Tablestore are used. |
| Synchronize Kafka data to Tablestore | Migrate Kafka data to Tablestore data tables or time series tables. During migration, the Reader script configurations of Kafka and the Writer script configurations of Tablestore are used. |
| Synchronize HBase data to Tablestore | Migrate data from HBase databases to Tablestore. Only Tablestore data tables are supported as the destination. During migration, the Reader script configurations of HBase and the Writer script configurations of Tablestore are used. |
| Synchronize MaxCompute data to Tablestore | Migrate MaxCompute data to Tablestore. Only Tablestore data tables are supported as the destination. During migration, the Reader script configurations of MaxCompute and the Writer script configurations of Tablestore are used. |
| Synchronize PolarDB-X 2.0 data to Tablestore | Migrate data from PolarDB-X 2.0 to Tablestore. Only Tablestore data tables are supported as the destination. During migration, the Reader script configurations of PolarDB-X 2.0 and the Writer script configurations of Tablestore are used. |
| Synchronize data between Tablestore data tables | Migrate data from a Tablestore data table to another Tablestore data table only. During migration, the Reader script configurations and the Writer script configurations of Tablestore are used. For information about the source and destination configurations, see Tablestore data source. When you specify the Reader and Writer script configurations of Tablestore, refer to the configurations that are used to read and write data in tables in the Wide Column model. |
| Synchronize data between Tablestore time series tables | Migrate data from a Tablestore time series table to another Tablestore time series table only. During migration, the Reader script configurations and the Writer script configurations of Tablestore are used. For information about the source and destination configurations, see Tablestore data source. When you specify the Reader and Writer script configurations of Tablestore, refer to the configurations that are used to read and write data in tables in the TimeSeries model. |

Export data

The following table describes data export solutions.

| Solution | Description |
| --- | --- |
| Synchronize Tablestore data to MaxCompute | Use MaxCompute to back up Tablestore data, or migrate Tablestore data to MaxCompute and use it there. During migration, the Reader script configurations of Tablestore and the Writer script configurations of MaxCompute are used. |
| Synchronize Tablestore data to OSS | Synchronize Tablestore data to OSS to keep a backup of the data in OSS, and download the synchronized objects as needed. During migration, the Reader script configurations of Tablestore and the Writer script configurations of OSS are used. |

Prerequisites

After you determine a migration solution, make sure that the following preparations are made:

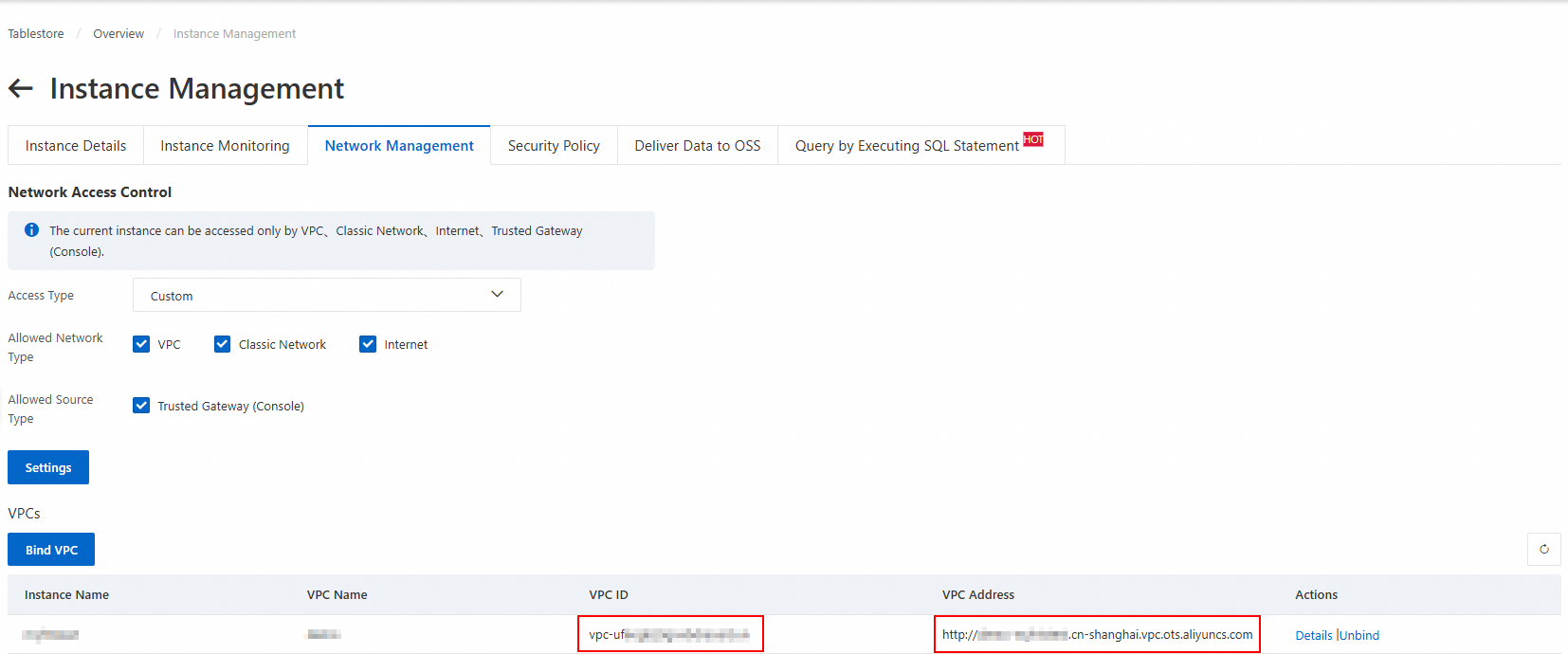

The network connection between the source and DataWorks and between the destination and DataWorks is established.

The source service is ready: the version is confirmed, an account is prepared, the required permissions are configured, and any service-specific configurations are complete. For more information, see the configuration requirements in the documentation of the source.

The destination service is activated, and the required resources are created. For more information, see the configuration requirements in the documentation of the destination.

Usage notes

If you encounter any issues, submit a ticket.

Make sure that DataWorks Data Integration supports data migration of the specific product version.

The data type of the destination must match the data type of the source. Otherwise, dirty data may be generated during migration.

After you determine the migration solution, make sure to read the limits and usage notes in the documentation of the source and destination.

Before you migrate Kafka data, you must select a Tablestore data model to store the data based on your business scenario.

Configuration process

Determine your migration solution, and then learn how to configure data migration in DataWorks Data Integration for that solution.

The following table describes the configuration steps.

| No. | Step | Description |
| --- | --- | --- |
| 1 | Create the required data sources based on the migration solution. | |
| 2 | Configure a batch synchronization task using the codeless UI | DataWorks Data Integration provides the codeless UI and step-by-step instructions to help you configure a batch synchronization task. The codeless UI is easy to use but provides only limited features. |
| 3 | Verify migration results | View the imported data in the destination based on the migration solution. |
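Verifying migration results usually means checking that each source row arrived intact. The sketch below is one way to do that once rows from both sides are available as lists of dictionaries. The helper name `compare_rows` and the sample rows are hypothetical, and how you fetch the rows (for example, through each service's own query tools) is outside the scope of this sketch.

```python
def compare_rows(source_rows, destination_rows, primary_key):
    """Compare two row sets keyed by primary key columns.

    Returns the primary keys that are missing from the destination,
    present only in the destination, or present in both with different values.
    """
    def key_of(row):
        return tuple(row[name] for name in primary_key)

    src = {key_of(row): row for row in source_rows}
    dst = {key_of(row): row for row in destination_rows}

    return {
        "missing": sorted(k for k in src if k not in dst),
        "unexpected": sorted(k for k in dst if k not in src),
        "mismatched": sorted(k for k in src if k in dst and src[k] != dst[k]),
    }


# Hypothetical sample data for illustration.
source = [
    {"id": 1, "name": "a"},
    {"id": 2, "name": "b"},
    {"id": 3, "name": "c"},
]
destination = [
    {"id": 1, "name": "a"},
    {"id": 2, "name": "B"},
    {"id": 4, "name": "d"},
]
report = compare_rows(source, destination, primary_key=["id"])
print(report)
```

For large tables, comparing a sample of rows or per-range row counts is typically more practical than a full comparison.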

Examples

Import data

Using DataWorks Data Integration, synchronize data from databases such as MySQL, Oracle, and MaxCompute to Tablestore data tables. You can also synchronize Tablestore data across accounts or instances. Examples include synchronizing data from one data table to another.

This section uses the synchronization of MaxCompute data to a Tablestore data table in the codeless UI as an example to describe the procedure.

Preparations

Step 1: Add a Tablestore data source and a MaxCompute data source

Step 2: Configure a batch synchronization task using the codeless UI

Step 3: View the synchronization result

Export data

Use DataWorks Data Integration to export Tablestore data to MaxCompute or OSS.

Synchronize Tablestore data to OSS

Billing

When you use a migration tool to access Tablestore, you are charged for data reads and writes. After the data is written, Tablestore charges storage fees based on the data volume. For more information about billing, see Billing overview.

DataWorks billing consists of software and resource fees. For more information, see Billing.

Other solutions

Download Tablestore data to a local file as needed.

You can also use migration tools such as DataX and Tunnel Service to import data.

| Migration tool | Description |
| --- | --- |
| DataX | DataX abstracts the synchronization between different data sources into a Reader plug-in that reads data from the source and a Writer plug-in that writes data to the destination. |
| Tunnel Service | Tunnel Service is an integrated service for consuming full and incremental data based on the Tablestore data API. By creating a data channel for a data table, you can easily consume historical and new data from the table. This service is suitable for data migration and synchronization when the source table is a Tablestore data table. For more information, see Synchronize data from one data table to another. |
| Data Transmission Service (DTS) | Data Transmission Service (DTS) is a real-time data streaming service provided by Alibaba Cloud. It supports data interaction between data sources such as relational databases (RDBMS), NoSQL databases, and online analytical processing (OLAP) systems. It integrates data synchronization, migration, subscription, integration, and processing to help you build a secure, scalable, and highly available data architecture. For more information, see Synchronize PolarDB-X 2.0 data to Tablestore and Migrate PolarDB-X 2.0 data to Tablestore. |

Appendix: Field type mappings

This section describes the field type mappings between common services and Tablestore. Configure field mappings based on the field type mappings.

Field type mapping between MaxCompute and Tablestore

| Field type in MaxCompute | Field type in Tablestore |
| --- | --- |
| STRING | STRING |
| BIGINT | INTEGER |
| DOUBLE | DOUBLE |
| BOOLEAN | BOOLEAN |
| BINARY | BINARY |

Field type mapping between MySQL and Tablestore

| Field type in MySQL | Field type in Tablestore |
| --- | --- |
| STRING | STRING |
| INT or INTEGER | INTEGER |
| DOUBLE, FLOAT, or DECIMAL | DOUBLE |
| BOOL or BOOLEAN | BOOLEAN |
| BINARY | BINARY |

Field type mapping between Kafka and Tablestore

| Kafka Schema Type | Field type in Tablestore |
| --- | --- |
| STRING | STRING |
| INT8, INT16, INT32, or INT64 | INTEGER |
| FLOAT32 or FLOAT64 | DOUBLE |
| BOOLEAN | BOOLEAN |
| BYTES | BINARY |
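When you prepare field mappings for many columns, it can help to encode the mappings in this appendix as a lookup table. The following Python sketch does that. The names `TYPE_MAPPINGS` and `tablestore_type` are hypothetical helpers, not part of DataWorks or Tablestore, and the sketch covers only the types listed in this appendix.

```python
# Source field type -> Tablestore field type, copied from the appendix tables.
TYPE_MAPPINGS = {
    "maxcompute": {
        "STRING": "STRING",
        "BIGINT": "INTEGER",
        "DOUBLE": "DOUBLE",
        "BOOLEAN": "BOOLEAN",
        "BINARY": "BINARY",
    },
    "mysql": {
        "STRING": "STRING",
        "INT": "INTEGER",
        "INTEGER": "INTEGER",
        "DOUBLE": "DOUBLE",
        "FLOAT": "DOUBLE",
        "DECIMAL": "DOUBLE",
        "BOOL": "BOOLEAN",
        "BOOLEAN": "BOOLEAN",
        "BINARY": "BINARY",
    },
    "kafka": {
        "STRING": "STRING",
        "INT8": "INTEGER",
        "INT16": "INTEGER",
        "INT32": "INTEGER",
        "INT64": "INTEGER",
        "FLOAT32": "DOUBLE",
        "FLOAT64": "DOUBLE",
        "BOOLEAN": "BOOLEAN",
        "BYTES": "BINARY",
    },
}


def tablestore_type(source: str, field_type: str) -> str:
    """Return the Tablestore field type for a source field type.

    Raises ValueError when the source or the field type is not listed,
    so unmapped columns are caught before a migration task runs.
    """
    mapping = TYPE_MAPPINGS.get(source.lower())
    if mapping is None:
        raise ValueError(f"no mapping table for source {source!r}")
    try:
        return mapping[field_type.upper()]
    except KeyError:
        raise ValueError(
            f"{source} type {field_type!r} has no listed Tablestore type"
        ) from None


print(tablestore_type("MySQL", "decimal"))
print(tablestore_type("Kafka", "INT64"))
```

Failing loudly on an unlisted type is a deliberate choice here: a mismatch between source and destination types may produce dirty data during migration, so it is safer to resolve the mapping by hand than to guess.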
