NimoShake, also known as DynamoShake, is a data synchronization tool developed by Alibaba Cloud. You can use this tool to migrate an Amazon DynamoDB database to ApsaraDB for MongoDB.

Prerequisites

An ApsaraDB for MongoDB instance is created. For more information, see Create a replica set instance or Create a sharded cluster instance.

Background information

This topic describes how to use NimoShake.

NimoShake is used to migrate data from an Amazon DynamoDB database. The destination must be an ApsaraDB for MongoDB instance. For more information, see NimoShake overview.

Precautions

Full data migration consumes resources on both the source and destination databases, which may increase the load on the database servers. If you migrate a large volume of data or if the server specifications are insufficient, the databases may become overloaded or unavailable. Before you migrate data, evaluate the performance of the source and destination databases. We recommend that you migrate data during off-peak hours.

Terms

- Resumable upload: In a resumable upload task, data is split into multiple chunks. When transmission is interrupted by a network failure or another cause, the task can be resumed from where it left off instead of restarting from the beginning.

Note: Resumable upload is supported in incremental migration but not in full migration. If an incremental migration task is interrupted by a disconnection and the connection is restored within a short period, the task can be resumed. In some cases, such as a prolonged disconnection or the loss of the previous checkpoint, a full migration may be triggered.

- Checkpoint: Resumable upload is based on checkpoints. By default, checkpoints are written to an ApsaraDB for MongoDB database named dynamo-shake-checkpoint. Each collection in this database records a list of checkpoints, and the status_table collection records whether the current task is in the full or incremental migration stage.
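The restart behavior in the note above can be summarized as a small decision rule. The following is a minimal, hypothetical sketch and not NimoShake's documented logic. It assumes that status_table holds the values "full" or "incremental", that each checkpoint stores a shard ID, the last applied sequence number, and an update time, and that the 24-hour retention period of DynamoDB Streams is the threshold for a disconnection that is too long to resume from.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# DynamoDB Streams keeps stream records for 24 hours. Using this as the
# resume cutoff is an assumption made for this sketch.
STREAM_RETENTION = timedelta(hours=24)


@dataclass
class Checkpoint:
    # Hypothetical checkpoint shape: one entry per stream shard.
    shard_id: str
    sequence_number: Optional[str]  # last record applied to MongoDB; None if never written
    updated_at: datetime


def next_stage(status: Optional[str], checkpoint: Optional[Checkpoint],
               now: Optional[datetime] = None) -> str:
    """Hypothetical restart rule: return "resume_incremental" or "full"."""
    now = now or datetime.now(timezone.utc)
    if status != "incremental":
        return "full"  # full migration has no resumable upload, so it starts over
    if checkpoint is None or checkpoint.sequence_number is None:
        return "full"  # the previous checkpoint was lost
    if now - checkpoint.updated_at > STREAM_RETENTION:
        return "full"  # records after the checkpoint may have expired from the stream
    return "resume_incremental"
```

Under these assumptions, an incremental task whose checkpoint was updated an hour ago resumes, while an interrupted full migration or a missing checkpoint leads back to a full migration.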

NimoShake features

NimoShake first performs a full migration and then switches to incremental migration.

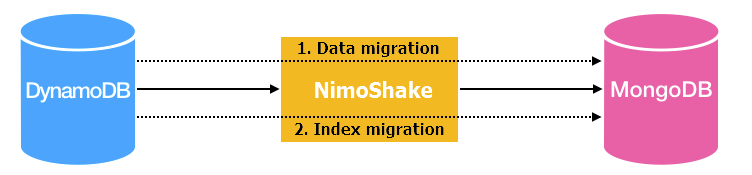

- Full migration: consists of data migration and index migration. The following figure shows the basic architecture of full migration.

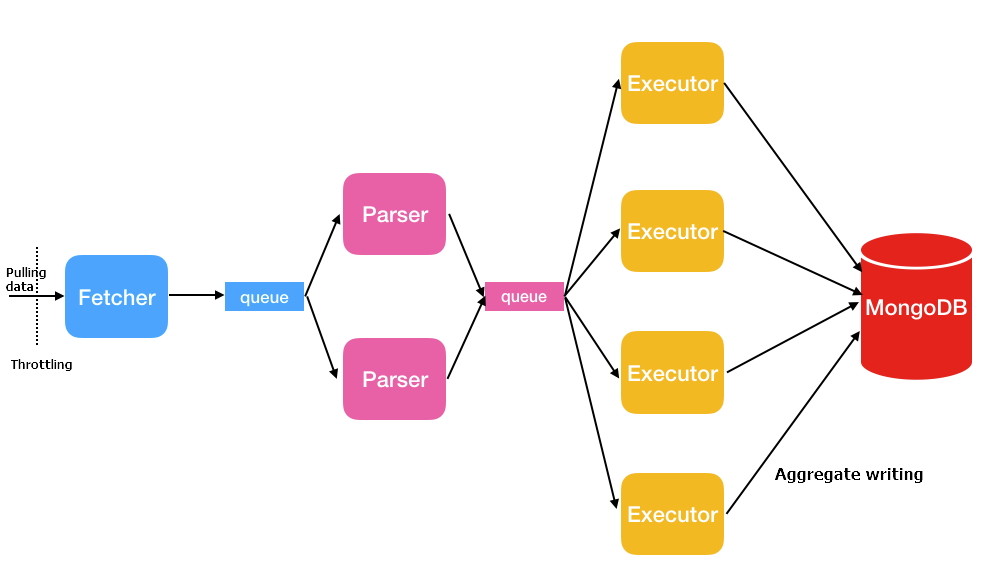

- Data migration: NimoShake uses multiple concurrent threads to pull source data, as shown in the following figure.

| Thread | Description |
| --- | --- |
| Fetcher | Calls the protocol conversion driver provided by Amazon to read data from the source table in batches and places the batches into queues until all source data has been read. Note: Only one fetcher thread is used. |
| Parser | Reads data from the queues and parses it into the BSON structure. After the data is parsed, the parser thread writes it to the queues of the executor threads as entries. Multiple parser threads can be started. The default is 2. You can set the number of parser threads with the FullDocumentParser parameter. |
| Executor | Pulls entries from the queues, aggregates them, and writes them to the ApsaraDB for MongoDB database. A single write can aggregate up to 1,024 entries and up to 16 MB of data. Multiple executor threads can be started. The default is 4. You can set the number of executor threads with the FullDocumentConcurrency parameter. |

- Index migration: NimoShake writes indexes after data migration is complete. Indexes include self-contained indexes and user-created indexes.

- Self-contained indexes: If the source table has both a partition key and a sort key, NimoShake creates a unique composite index on the two keys and writes it to the ApsaraDB for MongoDB database. NimoShake also creates a hash index on the partition key and writes it to the ApsaraDB for MongoDB database. If the table has only a partition key, NimoShake writes a hash index and a unique index to the ApsaraDB for MongoDB database.

- User-created indexes: If the source table has a user-created index, NimoShake creates a hash index based on the primary key and writes it to the ApsaraDB for MongoDB database.
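The self-contained index rules for index migration can be expressed as MongoDB index specifications. The following minimal sketch is hypothetical. The function name index_specs, the ascending key order of the composite index, and MongoDB's default index naming are assumptions, and the exact indexes NimoShake creates may differ. MongoDB does not allow a hashed index to be unique, so uniqueness on a partition-key-only table needs its own index.

```python
from typing import Dict, List, Optional, Union

IndexSpec = Dict[str, object]


def _default_name(key: Dict[str, Union[int, str]]) -> str:
    # Mirrors MongoDB's default naming, e.g. {"a": 1, "b": 1} -> "a_1_b_1".
    return "_".join(f"{field}_{direction}" for field, direction in key.items())


def index_specs(partition_key: str, sort_key: Optional[str] = None) -> List[IndexSpec]:
    """Hypothetical mapping from a DynamoDB key schema to self-contained indexes."""
    hashed = {partition_key: "hashed"}
    if sort_key is not None:
        # Partition key + sort key: unique composite index plus a hashed partition-key index.
        composite = {partition_key: 1, sort_key: 1}
        return [
            {"key": composite, "name": _default_name(composite), "unique": True},
            {"key": hashed, "name": _default_name(hashed)},
        ]
    # Partition key only: a hashed index cannot be unique, so uniqueness gets a separate index.
    unique = {partition_key: 1}
    return [
        {"key": hashed, "name": _default_name(hashed)},
        {"key": unique, "name": _default_name(unique), "unique": True},
    ]


if __name__ == "__main__":
    for spec in index_specs("user_id", "created_at"):
        print(spec)
```

Each returned dict has the shape of an entry in the indexes array of MongoDB's createIndexes command.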


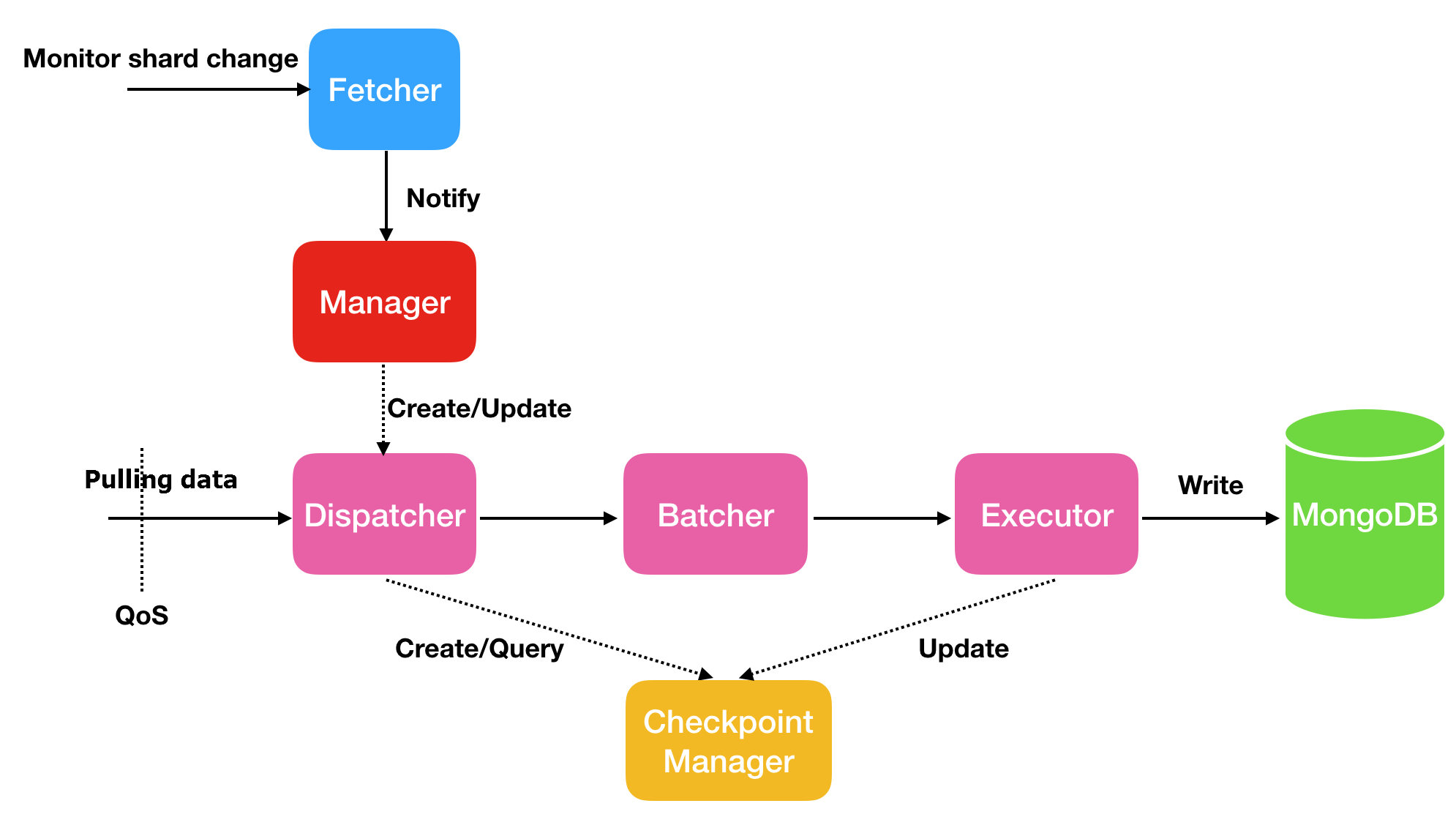
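The fetcher, parser, and executor threads used for data migration form a producer-consumer pipeline. The following minimal sketch models that pipeline with queue.Queue and threads, using the defaults given for data migration: 1 fetcher, 2 parsers, 4 executors, and batches of at most 1,024 entries and 16 MB. It is not NimoShake's code. The following are assumptions: source pages are lists of DynamoDB-style typed items, the hypothetical parse_item handles only string (S) and number (N) attributes, the length of the JSON encoding stands in for the BSON size, and write is a caller-supplied function, for example one that calls insert_many on a MongoDB collection.

```python
import json
import queue
import threading
from typing import Any, Callable, Dict, List

SENTINEL = object()  # end-of-stream marker passed through the queues


def parse_item(item: Dict[str, Dict[str, str]]) -> Dict[str, Any]:
    """Hypothetical parser for DynamoDB-style typed items; only S and N are handled."""
    doc: Dict[str, Any] = {}
    for name, typed in item.items():
        type_tag, value = next(iter(typed.items()))
        if type_tag == "N":
            try:
                doc[name] = int(value)
            except ValueError:
                doc[name] = float(value)
        else:
            doc[name] = value
    return doc


def run_full_sync(pages: List[List[dict]], write: Callable[[List[dict]], None],
                  parsers: int = 2, executors: int = 4,
                  max_entries: int = 1024, max_bytes: int = 16 * 1024 * 1024) -> None:
    raw_q: "queue.Queue[Any]" = queue.Queue(maxsize=16)    # fetcher -> parsers
    doc_q: "queue.Queue[Any]" = queue.Queue(maxsize=4096)  # parsers -> executors

    def fetcher() -> None:
        # A single fetcher reads source pages in order.
        for page in pages:
            raw_q.put(page)
        for _ in range(parsers):  # one stop marker per parser
            raw_q.put(SENTINEL)

    def parser() -> None:
        while (page := raw_q.get()) is not SENTINEL:
            for item in page:
                doc_q.put(parse_item(item))

    def executor() -> None:
        batch: List[dict] = []
        batch_bytes = 0
        while (doc := doc_q.get()) is not SENTINEL:
            size = len(json.dumps(doc).encode("utf-8"))  # stand-in for the BSON size
            # Flush before adding a document that would break either limit.
            if batch and (len(batch) >= max_entries or batch_bytes + size > max_bytes):
                write(batch)
                batch, batch_bytes = [], 0
            batch.append(doc)
            batch_bytes += size
        if batch:
            write(batch)

    fetch_thread = threading.Thread(target=fetcher)
    parser_threads = [threading.Thread(target=parser) for _ in range(parsers)]
    executor_threads = [threading.Thread(target=executor) for _ in range(executors)]
    for t in [fetch_thread, *parser_threads, *executor_threads]:
        t.start()
    fetch_thread.join()
    for t in parser_threads:
        t.join()
    for _ in range(executors):  # every document is queued; tell executors to stop
        doc_q.put(SENTINEL)
    for t in executor_threads:
        t.join()
```

Because several executors call write concurrently, the write function must be thread-safe. The bounded queues keep a fast fetcher from loading the whole source table into memory.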

- Incremental migration: NimoShake migrates data but does not migrate indexes generated during this stage. The following figure shows the basic architecture of incremental migration.

| Thread | Description |
| --- | --- |
| Fetcher | Detects changes to the shards in a stream. |
| Manager | Sends messages or creates dispatchers to process messages. Each shard corresponds to one dispatcher. |
| Dispatcher | Pulls incremental data from the source. During resumable upload, data is pulled from the last checkpoint instead of from the beginning. |
| Batcher | Parses and encapsulates the incremental data pulled by the dispatcher thread. |
| Executor | Writes the encapsulated data to the ApsaraDB for MongoDB database and updates the checkpoint. |
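The incremental threads can be sketched as an in-memory model. A manager starts one dispatcher per shard, each dispatcher skips records at or before its checkpoint, and the executor writes a batch and only then advances the checkpoint. This is a hypothetical sketch, not NimoShake's implementation. A dict stands in for the MongoDB collection, shards are lists of already-fetched stream records, and the record fields seq, eventName, key, and new_image are simplified assumptions. INSERT, MODIFY, and REMOVE are the event names that DynamoDB Streams uses. Dispatchers run one after another here, whereas the table above describes them as separate threads.

```python
from typing import Any, Dict, List, Optional

Record = Dict[str, Any]  # simplified stream record (hypothetical shape)


def apply_batch(collection: Dict[str, dict], batch: List[Record]) -> None:
    """Executor step: apply one batch of stream records to the target (a dict here)."""
    for rec in batch:
        key = str(rec["key"])
        if rec["eventName"] == "REMOVE":
            collection.pop(key, None)
        else:  # INSERT or MODIFY: upsert the new image of the item
            collection[key] = dict(rec["new_image"])


def dispatch_shard(shard_id: str, records: List[Record], collection: Dict[str, dict],
                   checkpoints: Dict[str, str], batch_size: int = 100) -> None:
    """Dispatcher and batcher for one shard: resume after the stored checkpoint."""
    last: Optional[str] = checkpoints.get(shard_id)
    pending = [r for r in records if last is None or int(r["seq"]) > int(last)]
    for start in range(0, len(pending), batch_size):
        batch = pending[start:start + batch_size]
        apply_batch(collection, batch)
        # Advance the checkpoint only after the batch has been written.
        checkpoints[shard_id] = str(batch[-1]["seq"])


def run_incremental(shards: Dict[str, List[Record]], collection: Dict[str, dict],
                    checkpoints: Dict[str, str]) -> None:
    """Manager: one dispatcher per shard (run sequentially in this sketch)."""
    for shard_id, records in shards.items():
        dispatch_shard(shard_id, records, collection, checkpoints)
```

Updating the checkpoint after the write means that a crash between the two steps replays the last batch on restart. In this sketch that is harmless because upserts and deletes by key are idempotent.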

Migrate an Amazon DynamoDB database to ApsaraDB for MongoDB

This section uses Ubuntu as an example to describe how to use NimoShake to migrate an Amazon DynamoDB database to ApsaraDB for MongoDB.
