By Dongdao

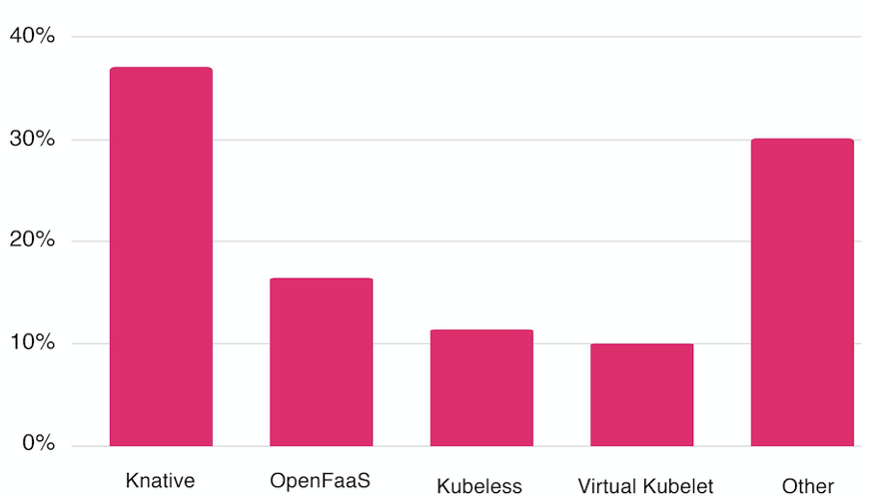

According to the Annual Survey Report released by the Cloud Native Computing Foundation (CNCF), serverless technologies have gained greater recognition in 2019. In particular, 41% of the respondents said they were already using serverless, and 20% said they planned to use serverless in the coming 12 to 18 months. Among various open-source serverless projects, Knative is the most popular. As shown in the following figure, Knative occupies 34% of the market share, far ahead of OpenFaaS, which is ranked second. Knative is the preferred choice for building a serverless platform.

The popularity of Knative cannot be separated from the container ecosystem. Unlike the OpenFaaS model, Knative does not require you to make major changes to applications. You can deploy any containerized application in Knative. In addition, Knative provides a more focused application model on top of Kubernetes, allowing applications to be automatically upgraded and traffic to be automatically split for canary release.

Before cloud computing emerged, if an enterprise needed to provide external services on the Internet, it had to lease a physical machine from an IDC and then deploy applications on the physical machine. Over more than a decade, the performance of physical machines has increased steadily following the prediction of Moore's Law. As a result, a single application cannot fully use the resources on the entire physical machine. Therefore, we need technology to improve resource utilization. In normal cases, if one application does not fully occupy a machine, you can simply deploy a few more. However, the hybrid deployment of multiple applications on the same physical machine leads to many problems. For example, the following problems may be caused:

Virtual Machines (VMs) perfectly resolve the preceding problems. The VM technology allows you to deploy multiple hosts on the same physical machine through virtualization and deploy only one application on each host. In this way, you can deploy multiple applications on one physical machine while ensuring that applications are independent of each other.

As its business continues to grow, an enterprise may need to maintain many applications. Each application requires many release, upgrade, rollback, and other operations, and these applications may need to be deployed in many regions. This makes O&M more complex, especially the maintenance of application runtime environments. Fortunately, container technology emerged to solve these problems. With lightweight isolation at the kernel level, container technology not only provides almost the same isolation experience as VMs but also introduces a major innovation - the container image. Container images facilitate the replication of application runtime environments. Developers only need to deploy application dependencies to an image. When the image runs, the built-in dependencies are directly used to provide services. This feature resolves runtime environment issues during application release, upgrade, rollback, and multi-region deployment.

After container technology was widely adopted, we found it greatly facilitated the maintenance of instance runtime environments. At this time, the coordination between multiple instances of an application and between multiple applications became the biggest issue. Therefore, Kubernetes emerged soon after container technology. Different from VM and container technologies, Kubernetes is inherently a distributed design that is oriented to the final state, rather than single-machine capabilities. Kubernetes abstracts a more developer-friendly API to allocate Infrastructure-as-a-Service (IaaS) resources. Users do not need to worry about the specific allocation details. The Kubernetes Controller automatically allocates resources and implements failover and load balancing based on the final state. This allows application developers to ignore the specific running status of instances, so long as Kubernetes can allocate resources whenever needed.
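The final-state orientation described above can be illustrated with a minimal reconciliation sketch. This is not Kubernetes controller code: the function name `reconcile` and the pod names are assumptions made for illustration. The caller declares only the desired number of instances, and the function derives the actions needed to get there.

```python
def reconcile(desired_replicas: int, running: list[str]) -> list[str]:
    """Return the actions that move the running instances toward the desired count.

    Hypothetical illustration of final-state (declarative) control; a real
    Kubernetes controller also handles scheduling, failures, and retries.
    """
    if desired_replicas < 0:
        raise ValueError("desired_replicas must not be negative")
    actions: list[str] = []
    missing = desired_replicas - len(running)
    if missing > 0:
        # Too few instances: create the missing ones.
        actions.extend("create" for _ in range(missing))
    elif missing < 0:
        # Too many instances: delete the surplus and keep the first ones.
        actions.extend(f"delete {name}" for name in running[desired_replicas:])
    return actions


if __name__ == "__main__":
    print(reconcile(3, ["pod-a"]))           # two instances are missing
    print(reconcile(1, ["pod-a", "pod-b"]))  # one instance is surplus
```

Running such a function repeatedly against the observed state is what lets developers ignore the status of individual instances: a failed instance simply shows up as a missing one in the next pass.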

In both the early physical machine mode and the current Kubernetes mode, application developers just want to run applications, not manage underlying resources. In the physical machine mode, you need exclusive physical machines. In Kubernetes mode, you neither need to know nor can predict in advance which physical machine will run your business processes. Which physical machine an application runs on is unimportant as long as the application can run. Throughout the evolution from physical machines to VMs, containers, and Kubernetes, the barrier for applications to use IaaS resources has been lowered, and the coupling between IaaS and applications has decreased. The basic platform only needs to allocate the corresponding IaaS resources to applications that need to run. Application managers are simply IaaS users, who do not need to know the details about IaaS resource allocation.

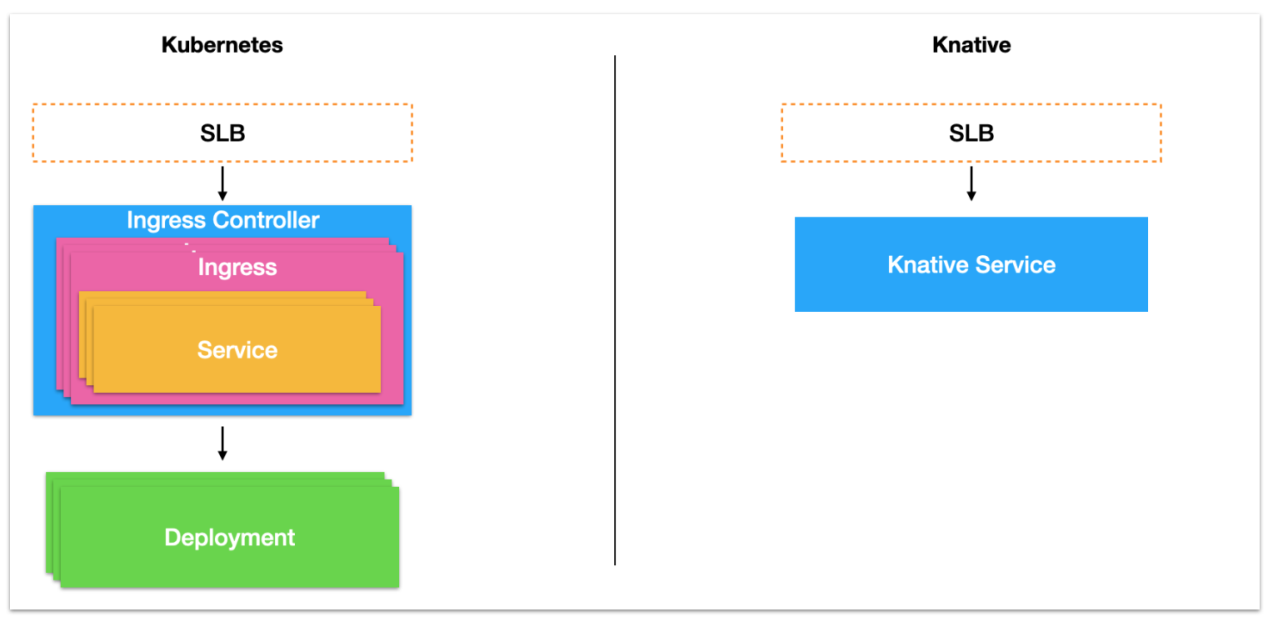

Before introducing Knative, let us use a web application as an example to learn how to distribute traffic to an application and release the application in Kubernetes mode. In the following figure, the Kubernetes mode is shown on the left side and the Knative mode is shown on the right side.

The requirements for the Kubernetes mode are as follows:

The requirements for the Knative mode are as follows:

Certainly, Knative cannot completely take over from Kubernetes. After all, Knative is built on top of the capabilities of Kubernetes. Kubernetes and Knative not only differ in the resources that users need to directly manage but also have significant conceptual differences:

Kubernetes is used to decouple IaaS from applications and reduce costs of IaaS resource allocation. In a word, Kubernetes focuses on IaaS resource orchestration. In contrast, Knative focuses on the application layer and orchestrates applications mainly through scaling.

Knative is a Kubernetes-based serverless orchestration engine designed to formulate cloud-native and cross-platform serverless orchestration standards. Knative implements serverless standards by integrating container building, auto scaling-based workload management, and the eventing model. Serving is the core module for running serverless workloads.

Kubernetes is an abstraction oriented to IaaS management. If you directly deploy applications through Kubernetes, you must maintain a large number of resources.

You can use the Knative Service alone to define the hosting of an application.

Knative routes traffic through gateways and can then split the traffic by percentage. In this way, a solid foundation is laid for basic capabilities such as auto scaling and canary release.

Serving allows you to manage multiple versions of applications. In this way, multiple versions of an application can provide online services at the same time.

You can set different traffic percentages for different versions so that features such as canary release can be implemented easily.
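As a rough illustration of percentage-based traffic splitting, the sketch below picks a revision for each request using weighted random selection. In Knative the split is configured declaratively and enforced by the gateway, so this code only imitates the effect. The function name `pick_revision` and the 90/10 split between `coffee-v1` and `coffee-v2` are assumptions chosen for the example.

```python
import random


def pick_revision(traffic: dict[str, int], rng: random.Random) -> str:
    """Pick the revision that serves one request, weighted by traffic percentage."""
    if sum(traffic.values()) != 100:
        raise ValueError("traffic percentages must add up to 100")
    names = list(traffic)
    weights = [traffic[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the run is repeatable
    split = {"coffee-v1": 90, "coffee-v2": 10}  # hypothetical canary split
    hits = {name: 0 for name in split}
    for _ in range(10_000):
        hits[pick_revision(split, rng)] += 1
    print(hits)  # roughly nine out of ten requests go to coffee-v1
```

A canary release then amounts to changing the percentages step by step, for example from 90/10 to 50/50 to 0/100, while both versions stay online.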

Scaling is the core capability of Knative and helps reduce application costs. This capability enables Knative to automatically scale out an application when traffic increases and scale in an application when traffic decreases.

Each canary version has a scaling policy. The scaling policy is associated with the traffic allocated to the corresponding version. Based on the allocated traffic, Knative makes decisions to scale in or out an application.

For more information about Knative, see the official Knative website or Guide to developing cloud-native applications by using Knative (page in Chinese).

The community version of Kubernetes requires you to purchase hosts in advance and register them as Kubernetes nodes before pods can be scheduled. However, purchasing hosts in advance makes little sense to application developers. They only want IaaS resources to be allocated to application instances when those instances need to run, and they do not want to maintain complex IaaS resources. In other words, the ideal is a Kubernetes service that is fully compatible with the community Kubernetes API, allocates resources automatically when required, and needs no manual maintenance or management of IaaS resources. This matches the resource requirements of application developers. Alibaba Cloud Serverless Kubernetes (ASK) was developed to achieve this objective.

ASK is a serverless Kubernetes cluster that allows you to deploy container applications without purchasing any nodes, maintaining nodes in the cluster, or planning the capacity of the nodes. The ASK cluster is billed in pay-as-you-go mode based on the amount of CPU and memory resources configured for the application. ASK clusters are fully compatible with Kubernetes and make it much easier to get started with Kubernetes. This allows you to focus on applications instead of underlying infrastructure management.

In other words, you can create an ASK cluster and deploy your services in it without preparing ECS resources in advance. For more information about ASK, see Overview of ASK.

According to the preceding summary of the development of serverless, the coupling between IaaS and applications is decreasing. The basic platform only needs to allocate corresponding IaaS resources to applications that need to run. Application managers are simply IaaS users, who do not need to know the details about IaaS resource allocation. ASK is a platform that allocates IaaS resources at any time when required. Knative is responsible for detecting the real-time status of an application and automatically applies for IaaS resources, that is, pods, from ASK when required. The combination of Knative and ASK brings you the ultimate serverless experience.

By default, the Knative community supports various implementations of gateways, such as Istio, Gloo, Contour, Kourier, and Ambassador. Istio is the most popular among the various implementations because Istio not only can serve as a gateway but also can serve as a service mesh. Although these gateways provide a full range of features, they were not initially designed to serve as gateways for serverless services. First, you must have a resident gateway instance. To ensure high availability, you must deploy at least two instances to back up each other. Second, the control ends of these gateways must also be resident. The fees of IaaS resources and O&M of these resident gateways are necessary business costs.

To provide users with the ultimate serverless experience, we have implemented Knative Gateway through Alibaba Cloud Server Load Balancer (SLB). Knative Gateway provides all the necessary features and supports cloud products. No resident resources are required. Therefore, both IaaS costs and O&M complexity are reduced.

Reserved instances are an exclusive feature of ASK Knative. By default, the Knative community version can scale in an application to zero when no traffic is generated. However, this causes cold start problems when Knative scales the application out from zero. Cold start problems include a long application startup time in addition to IaaS resource allocation, Kubernetes scheduling, and image retrieval problems. The application startup time may range from milliseconds to minutes and is almost uncontrollable on a general-purpose platform. Certainly, these problems exist in all serverless products. Most conventional FaaS products maintain a public IaaS pool to run different functions. To prevent the pool from being used up and minimize the cold start time, most FaaS products set limits on function execution. For example, the following limits are set:

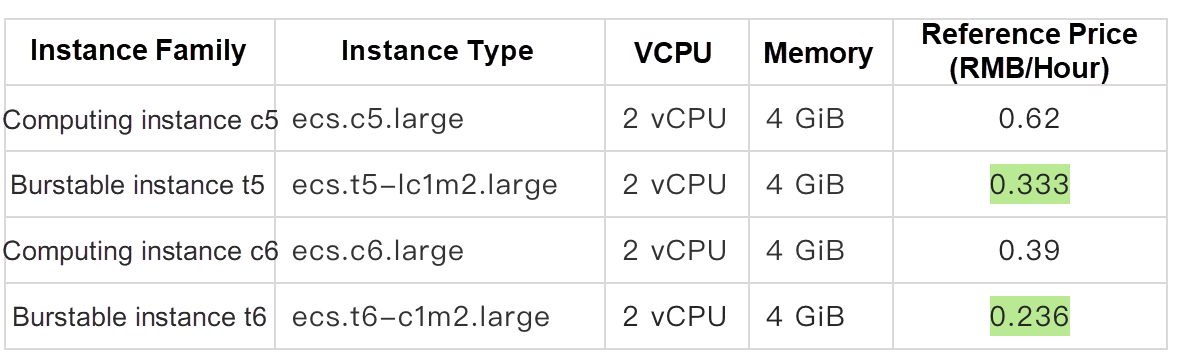

To resolve these problems, ASK Knative provides cost-effective reserved instances to balance costs and cold start problems. Alibaba Cloud provides Elastic Container Instances (ECIs) of varying types. Instances of different types provide different computing capabilities and are sold at different prices. The following table compares the prices of 2-core 4 GB computing instances and 2-core 4 GB burstable instances.

According to the preceding comparison, burstable instances are 46% cheaper than computing instances. Therefore, using burstable instances to provide services when no traffic is generated not only resolves the cold start problem but also reduces costs.

Burstable instances not only have a price advantage but also feature CPU credits that can be used to meet performance requirements during traffic spikes. Burstable instances can accumulate CPU credits continuously. When its performance cannot meet requirements under a heavy workload, a burstable instance can consume the CPU credits to seamlessly improve its computing performance without affecting the environments or applications running on your instances. The CPU credit mechanism allows you to shift remaining computing resources in off-peak hours to peak hours to ensure the overall performance of businesses. They operate on a principle similar to that used by gas-electric hybrid cars. For more information about burstable instances, see Overview of burstable instances.
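The credit mechanism can be pictured with a toy model. The sketch below is not Alibaba Cloud's actual credit accounting: the baseline of 20%, the credit cap, and the function name `simulate_credits` are all assumptions for illustration. It only shows the principle that capacity left unused during off-peak steps is banked and later spent to run above the baseline.

```python
def simulate_credits(
    loads: list[int], baseline: int = 20, max_credits: int = 100
) -> list[tuple[int, int]]:
    """Toy model of CPU credits (all numbers are illustrative assumptions).

    loads: demanded CPU per time step, in percent of a full vCPU.
    baseline: CPU percent available without spending credits.
    max_credits: cap on the credit balance.
    Returns (delivered CPU percent, credit balance) for each step.
    """
    credits = 0
    history = []
    for demand in loads:
        if demand <= baseline:
            # Off-peak: unused baseline capacity is banked as credits.
            credits = min(max_credits, credits + (baseline - demand))
            delivered = demand
        else:
            # Peak: spend banked credits to run above the baseline.
            burst = min(demand - baseline, credits)
            credits -= burst
            delivered = baseline + burst
        history.append((delivered, credits))
    return history


if __name__ == "__main__":
    # Three idle steps followed by two busy steps.
    for step in simulate_credits([0, 0, 0, 80, 80]):
        print(step)
```

In this model the first busy step is served in full from banked credits, while the second falls back to the baseline because the balance is exhausted.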

In other words, ASK Knative enables standard computing instances to be replaced with burstable instances during off-peak hours and enables burstable instances to seamlessly switch to standard computing instances when a request is received. In this way, ASK Knative helps you reduce costs during off-peak hours and improve investment efficiency because all the CPU credits accumulated during off-peak hours can be consumed during peak hours.

Using a burstable instance as the reserved instance is only the default policy. You can also specify other instance types as reserved instances. Alternatively, you can configure the system to always keep at least one standard instance, which effectively disables the reserved instance feature.

After you create an ASK cluster, you can apply for the Knative feature by joining the provided DingTalk group. Then, you can directly use the capabilities provided by Knative in the ASK cluster.

After Knative is activated, an ingress-gateway service is created in the knative-serving namespace. The service is a loadbalancer service and will automatically create an SLB instance by using the Cloud Controller Manager (CCM). In the following example, 47.102.220.35 is the public IP address of the SLB instance. You can use this public IP address to gain access to Knative.

```
# kubectl -n knative-serving get svc
NAME              TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)        AGE
ingress-gateway   LoadBalancer   172.19.8.35   47.102.220.35   80:30695/TCP   26h
```

To explain subsequent operations, let us consider a cafe. Assume that this cafe offers two types of drinks: coffee and tea. We will deploy the coffee service first and then the tea service. Then, we will demonstrate features such as version upgrade, traffic splitting for canary release, custom ingress, and auto scaling.

Save the following content to the coffee.yaml file and then deploy the service to the cluster by using kubectl.

```
# cat coffee.yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: coffee
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/target: "10"
    spec:
      containers:
        - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/helloworld-go:160e4dc8
          env:
            - name: TARGET
              value: "coffee"
```

Run kubectl apply -f coffee.yaml to deploy the coffee service. Wait a moment and then you can find that the coffee service has been deployed.

```
# kubectl get ksvc
NAME     URL                                 LATESTCREATED   LATESTREADY    READY   REASON
coffee   http://coffee.default.example.com   coffee-fh85b    coffee-fh85b   True
```

In the execution result of the preceding command, coffee.default.example.com is the unique subdomain name that Knative generates for each Knative service (ksvc). You can now run curl with the Host header set to this domain name and send the request to the public IP address of the SLB instance to access the deployed service. In the following example, Hello coffee! is the data returned by the coffee service.

```
# curl -H "Host: coffee.default.example.com" http://47.102.220.35
Hello coffee!
```

The autoscaler is a first-class object in Knative and the core capability provided by Knative to help users reduce costs. The default policy for the Knative Pod Autoscaler (KPA) can automatically adjust the number of pods based on real-time traffic requests. Let us try out the scaling capability of Knative. First, let's check the current pod information.

```
# kubectl get pod
NAME                                       READY   STATUS    RESTARTS   AGE
coffee-bwl9z-deployment-765d98766b-nvwmw   2/2     Running   0          42s
```

You can find that one pod is running. Next, prepare for a stress test. Before starting the stress test, check the configuration of the application that backs the coffee service. The configuration can be viewed in the preceding YAML file and is shown again as follows. Specifically, autoscaling.knative.dev/target: "10" sets the target number of concurrent requests per pod to 10. If the concurrent requests per pod exceed 10, Knative scales out the application by adding pods. For more information about KPA, see Autoscaler (page in Chinese).

```
# cat coffee-v2.yaml
... ...
      name: coffee-v2
      annotations:
        autoscaling.knative.dev/target: "10"
    spec:
      containers:
        - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/helloworld-go:160e4dc8
... ...
```

Now let's start the stress test to try it out. You can run the hey command to initiate a stress test request. The download links for the hey command are as follows:

```
hey -z 30s -c 90 --host "coffee.default.example.com" "http://47.100.6.39/?sleep=100"
```

The meanings of different parts of the preceding command are as follows:

- `-z 30s` specifies that the stress test lasts for 30 seconds.
- `-c 90` specifies that 90 concurrent requests are initiated in the stress test.
- `--host "coffee.default.example.com"` specifies the host to bind.
- `"http://47.100.6.39/?sleep=100"` specifies the request URL. In particular, sleep=100 indicates that the test image sleeps for 100 milliseconds, just like a real online service.

Run the preceding command to perform a stress test and observe the change in the number of pods. You can run the following command to monitor the number of pods: watch -n 1 'kubectl get pod'. In the following example, the change in the number of pods is shown on the left side and the execution process of the stress test command is shown on the right side. You can use this GIF to observe the change in pods during the stress test. When the stress test begins, Knative automatically scales out pods. Therefore, the number of pods increases. After the stress test ends, Knative detects that the traffic decreases and then automatically scales in pods. This is a fully automated scale-in and scale-out process.
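To get a feel for how many pods the stress test should produce, the relationship between concurrency and the per-pod target can be sketched as a simple ceiling division. This is a deliberately simplified estimate, not the KPA algorithm itself: the real autoscaler averages concurrency over time windows and applies further settings, so the observed pod count may differ. The function name `desired_pods` and the `min_pods` parameter are assumptions for this sketch.

```python
def desired_pods(concurrent_requests: int, target_per_pod: int, min_pods: int = 0) -> int:
    """Estimate the pod count needed to keep per-pod concurrency at the target.

    Simplified: ignores averaging windows and other autoscaler settings.
    """
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    if concurrent_requests < 0:
        raise ValueError("concurrent_requests must not be negative")
    # Ceiling division with integers, so 91 requests at target 10 need 10 pods.
    needed = (concurrent_requests + target_per_pod - 1) // target_per_pod
    return max(min_pods, needed)


if __name__ == "__main__":
    # 90 concurrent requests (hey -c 90) with a target of 10 per pod.
    print(desired_pods(90, 10))
    # No traffic: the estimate drops to zero unless a minimum is set.
    print(desired_pods(0, 10))
```

With 90 concurrent requests and a target of 10, the estimate is 9 pods, which is the order of magnitude to expect while the test is running.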

The preceding Highlights section describes how ASK Knative uses reserved instances to resolve the cold start problems and reduce costs. This section describes how to switch between reserved instances and standard instances.

After the preceding stress test ends, wait a few minutes, and then run kubectl get pod to check the number of pods. You may find that only one pod exists and the pod is named in the form of xxx-reserve-xx. In the name, reserve indicates that the instance is a reserved instance. In this case, the reserved instance is already used to provide services. When no requests are received for a long time, Knative automatically scales out reserved instances and reduces the number of standard instances to zero to reduce costs.

```
# kubectl get pod
NAME                                               READY   STATUS    RESTARTS   AGE
coffee-bwl9z-deployment-reserve-85fd89b567-vpwqc   2/2     Running   0          5m24s
```

What will happen if ingress traffic is received at this time? Let's test it out. According to the following GIF, standard instances will be automatically scaled out when ingress traffic is received. After the standard instances enter the ready state, the reserved instances will be scaled in.
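The switching behavior described above can be summarized as a small decision function. This is a hypothetical sketch of the observable behavior, not the ASK Knative controller: the names `PodPlan` and `plan_pods` and the choice of exactly one reserved pod are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodPlan:
    standard: int  # number of standard instances to run
    reserved: int  # number of reserved (burstable) instances to run


def plan_pods(wanted_standard: int, ready_standard: int) -> PodPlan:
    """Decide how many standard and reserved pods should exist.

    wanted_standard: standard pods the autoscaler wants for current traffic (0 when idle).
    ready_standard: standard pods that are already in the ready state.
    """
    if wanted_standard == 0:
        # Idle: keep one reserved instance warm instead of scaling to zero.
        return PodPlan(standard=0, reserved=1)
    # Traffic arrived: scale out standard pods, and keep the reserved pod
    # serving until at least one standard pod is ready to take over.
    reserved = 0 if ready_standard > 0 else 1
    return PodPlan(standard=wanted_standard, reserved=reserved)


if __name__ == "__main__":
    print(plan_pods(0, 0))  # idle
    print(plan_pods(3, 0))  # traffic arrived, standard pods still starting
    print(plan_pods(3, 1))  # a standard pod is ready
```

The middle state is what hides the cold start: requests are answered by the reserved instance while the standard instances are still being allocated and started.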

The default type of reserved instances is ecs.t5-lc1m2.small (1c2g). However, some applications, such as JVM applications, allocate their memory when they start. If an application requires 4 GB of memory, you may need the ecs.t5-c1m2.large (2c4g) instance type for reserved instances. Therefore, we also provide a method for specifying the type of reserved instances: add an annotation when you submit a Knative service. For example, in the annotation knative.aliyun.com/reserve-instance-eci-use-specs: ecs.t5-lc2m1.nano, ecs.t5-lc2m1.nano is the type of reserved instances. The following example uses ecs.t5-c1m2.large. Save the following content to the coffee-set-reserve.yaml file:

```
# cat coffee-set-reserve.yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: coffee
spec:
  template:
    metadata:
      annotations:
        knative.aliyun.com/reserve-instance-eci-use-specs: "ecs.t5-c1m2.large"
        autoscaling.knative.dev/target: "10"
    spec:
      containers:
        - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/helloworld-go:160e4dc8
          env:
            - name: TARGET
              value: "coffee-set-reserve"
```

Run the following command to submit the file to the Kubernetes cluster: kubectl apply -f coffee-set-reserve.yaml. Wait a while until the new version is scaled in and switched to a reserved instance. Then, check the pod list:

```
# kubectl get pod
NAME                                               READY   STATUS    RESTARTS   AGE
coffee-vvfd8-deployment-reserve-845f79b494-lkmh9   2/2     Running   0          2m37s
```

View the set-reserve pod to check the type of the reserved instance. You can find that the instance type has been set to ecs.t5-c1m2.large, with 2 CPU cores and 4 GB of memory.

```
# kubectl get pod coffee-vvfd8-deployment-reserve-845f79b494-lkmh9 -oyaml |head -20
apiVersion: v1
kind: Pod
metadata:
  annotations:
    ... ...
    k8s.aliyun.com/eci-instance-cpu: "2.000"
    k8s.aliyun.com/eci-instance-mem: "4.00"
    k8s.aliyun.com/eci-instance-spec: ecs.t5-c1m2.large
    ... ...
```

Before the upgrade, let's check the current pod information:

```
# kubectl get pod
NAME                                       READY   STATUS    RESTARTS   AGE
coffee-fh85b-deployment-8589564f7b-4lsnf   1/2     Running   0          26s
```

Now let us upgrade the coffee service. Save the following content to the coffee-v1.yaml file:

```
# cat coffee-v1.yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: coffee
spec:
  template:
    metadata:
      name: coffee-v1
      annotations:
        autoscaling.knative.dev/target: "10"
    spec:
      containers:
        - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/helloworld-go:160e4dc8
          env:
            - name: TARGET
              value: "coffee-v1"
```

The following are new in the current version:

- The revision name is set to coffee-v1. If no name is specified, the system automatically generates a name for the revision.
- The value of the TARGET environment variable now contains "v1". Therefore, according to the content returned over HTTP, you can determine that version v1 is providing the current service.

Run the following command to deploy version v1: kubectl apply -f coffee-v1.yaml. After the deployment is complete, run the following command to verify the version: curl -H "Host: coffee.default.example.com" http://47.102.220.35.

After a few seconds, you will receive the following response: Hello coffee-v1!. In this process, the service is not interrupted and you do not need to perform any manual switching. After modification, you can submit the file so that the pod is automatically switched from the old version to the new version.

```
# curl -H "Host: coffee.default.example.com" http://47.102.220.35
Hello coffee-v1!
```

Now let's check the status of pods. You can find that the pod of the earlier version is automatically replaced with the pod of the new version.

```
# kubectl get pod
NAME                                    READY   STATUS    RESTARTS   AGE
coffee-v1-deployment-5c5b59b484-z48gm   2/2     Running   0          54s
```

For more demos of complex features, see Demos (page in Chinese).

Knative is the most popular serverless orchestration framework in the Kubernetes ecosystem. The Knative community version can provide services only when a resident controller and a resident gateway are available. These resident instances not only incur IaaS costs but also make O&M more complex, increasing the difficulty in developing serverless applications. Therefore, we use ASK to fully manage Knative Serving. Knative Serving is an out-of-the-box service that allows you to save on the costs of these resident instances. We not only provide gateway capabilities through SLB products but also provide various types of reserved instances based on burstable instances. ASK allows you to greatly reduce IaaS costs and improve investment efficiency by accumulating CPU credits during off-peak hours and consuming the accumulated CPU credits during peak hours.

For more information about Knative, see the official Knative website or Guide to developing cloud-native applications by using Knative (page in Chinese).
