By Yuanyi

With the rise of AI, a wide range of AI applications is emerging. AI applications rely heavily on GPU resources, which are expensive, so reducing GPU costs has become a top priority for users. Combining AI with Serverless technology lets you use resources on demand and lower resource costs.

In cloud-native scenarios, is there an out-of-the-box, standardized, and open solution that meets these requirements? Yes. Container Service for Kubernetes (ACK) Serverless provides a Knative + KServe solution that lets you quickly deploy AI inference services and use resources on demand. When there are no requests, GPU resources automatically scale down to zero, which significantly reduces costs in AI scenarios.

ACK Serverless is a secure and reliable container service built on Alibaba Cloud's elastic computing infrastructure, fully compatible with the Kubernetes ecosystem. With ACK Serverless, you can quickly create Kubernetes container applications without the hassle of managing and maintaining Kubernetes clusters. It offers support for various GPU resource specifications and provides billing based on the actual resources consumed by your applications. For more information, see Billing of ACK Serverless clusters.

Knative is an open-source Serverless application framework built on top of Kubernetes. It provides request-based autoscaling, scale-to-zero, and canary releases. When you deploy Serverless applications with Knative, you can focus on application logic and consume resources on demand.

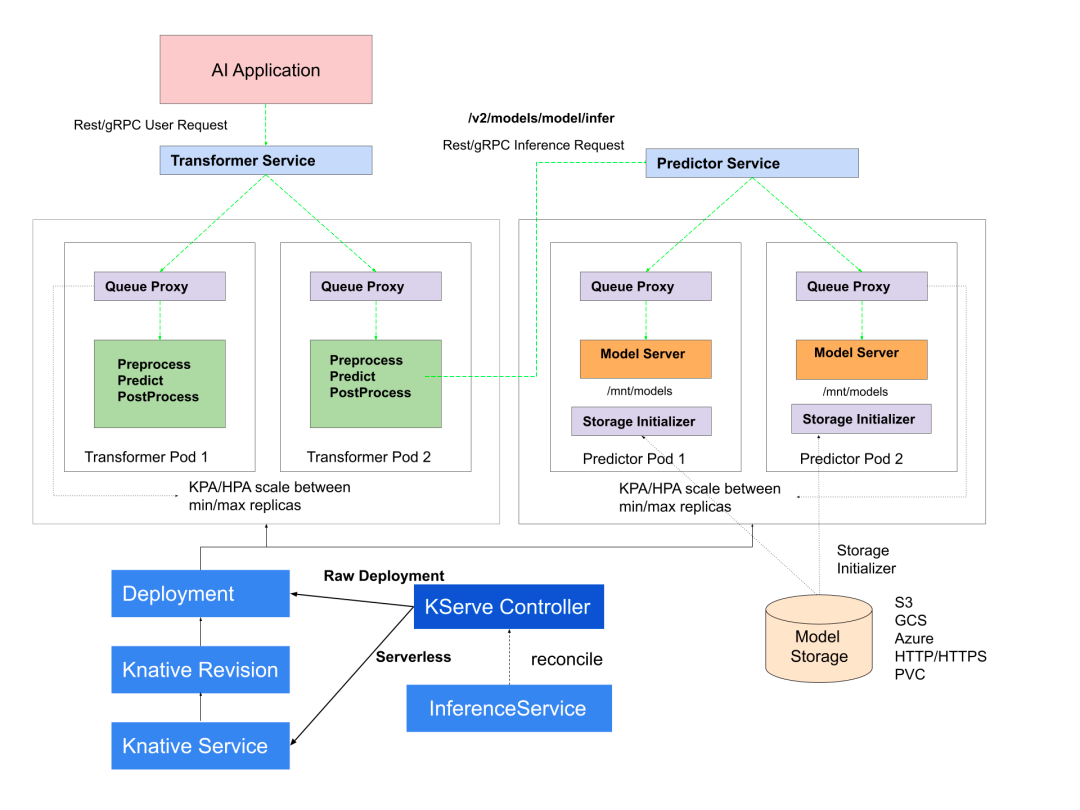
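To make request-based autoscaling more concrete, here is a simplified sketch of the arithmetic behind concurrency-based scaling, written as a bash function. It is not Knative's implementation: the real autoscaler averages metrics over stable and panic windows, applies min/max bounds and rate limits, and waits for a grace period before removing the last pod. The function name desired_pods is hypothetical, and the 70% default utilization is an assumption modeled on Knative's default target utilization.

```shell
#!/usr/bin/env bash
# Simplified model of concurrency-based autoscaling (illustrative only):
#   desired = ceil(observed_concurrency / (target * utilization))
# desired_pods is a hypothetical helper, not part of Knative.
desired_pods() {
  local observed="$1"               # in-flight requests across the service
  local target="$2"                 # target concurrent requests per pod
  local utilization_pct="${3:-70}"  # assumed share of the target to aim for
  if [ "$observed" -le 0 ]; then
    # No traffic: this sketch scales straight to zero (Knative adds a grace period).
    echo 0
    return
  fi
  # Work in hundredths so integer arithmetic can express the percentage.
  local effective=$(( target * utilization_pct ))
  # Integer ceiling division: (a + b - 1) / b.
  echo $(( (observed * 100 + effective - 1) / effective ))
}
```

With a target of 100 concurrent requests per pod, desired_pods 150 100 prints 3, desired_pods 70 100 prints 1, and desired_pods 0 100 prints 0, which is the scale-to-zero case.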

KServe provides a simple Kubernetes Custom Resource Definition (CRD) for deploying one or more trained models to model serving runtimes such as TFServing, TorchServe, Triton, and other inference servers. These runtimes provide ready-to-use model serving, and KServe also provides the basic API primitives for building custom model serving runtimes. When you deploy a model as an InferenceService on Knative, you get the following Serverless capabilities:

• Scale-to-zero

• Automatic elasticity based on Requests Per Second (RPS), concurrency, and CPU/GPU metrics

• Versioning

• Traffic management

• Security authentication

• Out-of-the-box observability
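As a sketch of how some of these capabilities are configured, the hypothetical bash helper below prints an InferenceService manifest that asks for scale-to-zero and concurrency-based autoscaling. minReplicas, maxReplicas, scaleMetric, and scaleTarget are fields of the KServe v1beta1 predictor spec; the values chosen here (at most 3 replicas, a default target of 10 concurrent requests per pod) are illustrative assumptions. minReplicas is set to 0 explicitly because the KServe API reference describes this field as defaulting to 1 in at least some releases; verify the behavior of the KServe version installed in your cluster.

```shell
#!/usr/bin/env bash
# isvc_manifest NAME STORAGE_URI [SCALE_TARGET]
# Hypothetical helper: prints an InferenceService manifest with scale-to-zero
# and concurrency-based autoscaling. Pipe the output to kubectl apply -f -.
isvc_manifest() {
  local name="$1" storage_uri="$2" target="${3:-10}"
  # Unquoted EOF so that ${name}, ${storage_uri}, and ${target} are expanded.
  cat <<EOF
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "${name}"
spec:
  predictor:
    minReplicas: 0           # allow the predictor to scale down to zero pods
    maxReplicas: 3           # illustrative upper bound
    scaleMetric: concurrency # scale on in-flight requests per pod
    scaleTarget: ${target}   # target concurrent requests per pod
    model:
      modelFormat:
        name: sklearn
      storageUri: "${storage_uri}"
EOF
}
```

For example, isvc_manifest sklearn-iris "gs://kfserving-examples/models/sklearn/1.0/model" | kubectl apply -f - would apply it with the model used later in this article.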

The KServe Controller handles the control plane of the KServe model service. It reconciles InferenceService custom resources and creates the corresponding Knative Services, which autoscale based on request traffic and scale down to zero when there is no incoming traffic.
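One way to observe this behavior is to count the predictor pods before and after traffic stops. The bash helper below is a hypothetical sketch; it assumes KServe labels the pods of an InferenceService with serving.kserve.io/inferenceservice=<name>, which is worth confirming with kubectl get pods --show-labels in your cluster.

```shell
#!/usr/bin/env bash
# isvc_pod_count NAME [NAMESPACE]
# Hypothetical helper: prints how many pods currently back an InferenceService.
# Assumes KServe labels them serving.kserve.io/inferenceservice=NAME.
isvc_pod_count() {
  local name="$1" namespace="${2:-default}" lines
  # --no-headers leaves one line per pod; errors and "No resources found" go to stderr.
  lines="$(kubectl get pods -n "$namespace" \
    -l "serving.kserve.io/inferenceservice=${name}" \
    --no-headers 2>/dev/null)"
  if [ -z "$lines" ]; then
    echo 0   # no pods, e.g. after the service has scaled to zero
  else
    printf '%s\n' "$lines" | wc -l | tr -d ' '
  fi
}
```

Run isvc_pod_count sklearn-iris right after sending the inference request later in this article, then again after the service has been idle for a while; when scale-to-zero is in effect, the second call should eventually print 0.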

In this article, we deploy an InferenceService whose predictor serves a scikit-learn model trained on the iris dataset. The dataset has three output classes: Iris Setosa (index 0), Iris Versicolour (index 1), and Iris Virginica (index 2). Finally, you send an inference request to the deployed model to predict the species of each iris sample.

Before you begin, complete the following prerequisites:

• Activate ACK Serverless [1].

• Deploy KServe [2].

Currently, Alibaba Cloud Knative supports one-click deployment of KServe and supports ASM, ALB, MSE, and Kourier as gateways. The steps below use an ALB gateway.
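Before creating the InferenceService, a quick pre-flight check can confirm that both Knative Serving and KServe are installed. The sketch below looks for the CRDs that define Knative Services (services.serving.knative.dev) and InferenceServices (inferenceservices.serving.kserve.io); check_crds is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_crds: hypothetical pre-flight check. Prints one line per CRD and
# returns non-zero if any of them is missing.
check_crds() {
  local crd missing=0
  for crd in services.serving.knative.dev inferenceservices.serving.kserve.io; do
    # kubectl get exits non-zero when the CRD does not exist.
    if kubectl get crd "$crd" >/dev/null 2>&1; then
      echo "found:   $crd"
    else
      echo "missing: $crd"
      missing=1
    fi
  done
  return "$missing"
}
```

A typical call is check_crds || echo "Deploy Knative and KServe first", which stops you before kubectl apply fails with an unknown resource kind.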

Create the InferenceService:

```shell
kubectl apply -f - <<EOF
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "sklearn-iris"
spec:
  predictor:
    model:
      modelFormat:
        name: sklearn
      storageUri: "gs://kfserving-examples/models/sklearn/1.0/model"
EOF
```

Check the service status:

```shell
kubectl get inferenceservices sklearn-iris
```

Expected output:

```
NAME           URL                                                         READY   PREV   LATEST   PREVROLLEDOUTREVISION   LATESTREADYREVISION                    AGE
sklearn-iris   http://sklearn-iris-predictor-default.default.example.com   True           100                              sklearn-iris-predictor-default-00001   51s
```

1. Obtain the service access address

```
$ kubectl get albconfig knative-internet
NAME               ALBID                    DNSNAME                                              PORT&PROTOCOL   CERTID   AGE
knative-internet   alb-hvd8nngl0lsdra15g0   alb-hvd8nngl0lsdra15g0.cn-beijing.alb.aliyuncs.com                            24m
```

2. Prepare your inference input request in a file

The iris dataset contains 150 samples, 50 from each of three iris species. Each sample has 4 features: sepal length, sepal width, petal length, and petal width.
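If you want to send your own measurements, the hypothetical bash helper below builds the same "instances" payload from comma-separated rows and rejects rows that do not have exactly four features. It only counts fields; it does not check that the values are numeric.

```shell
#!/usr/bin/env bash
# build_iris_payload ROW...
# Hypothetical helper: each ROW is "sepal_len,sepal_wid,petal_len,petal_wid".
# Prints a {"instances": [...]} request body; fails if a row lacks 4 fields.
build_iris_payload() {
  local row n rows=""
  for row in "$@"; do
    # Count comma-separated fields (values are not checked for being numeric).
    n="$(printf '%s\n' "$row" | awk -F',' '{print NF}')"
    if [ "$n" -ne 4 ]; then
      echo "expected 4 features, got $n: '$row'" >&2
      return 1
    fi
    # Append "[a, b, c, d]", separating samples with ", ".
    rows="${rows:+$rows, }[${row//,/, }]"
  done
  printf '{"instances": [%s]}\n' "$rows"
}
```

Running build_iris_payload 6.8,2.8,4.8,1.4 6.0,3.4,4.5,1.6 > ./iris-input.json writes the same two samples as the cat command that follows, on a single line.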

```shell
cat <<EOF > "./iris-input.json"
{
  "instances": [
    [6.8, 2.8, 4.8, 1.4],
    [6.0, 3.4, 4.5, 1.6]
  ]
}
EOF
```

3. Access the service

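```shell
# DNS name of the ALB instance used as the Knative gateway
INGRESS_DOMAIN=$(kubectl get albconfig knative-internet -o jsonpath='{.status.loadBalancer.dnsname}')
# Host part of the InferenceService URL, e.g. sklearn-iris-predictor-default.default.example.com
SERVICE_HOSTNAME=$(kubectl get inferenceservice sklearn-iris -o jsonpath='{.status.url}' | cut -d "/" -f 3)
# The gateway routes requests by Host header, so send it explicitly
curl -v -H "Host: ${SERVICE_HOSTNAME}" "http://${INGRESS_DOMAIN}/v1/models/sklearn-iris:predict" -d @./iris-input.json
```

Expected output: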

```
* Trying 39.104.203.214:80...
* Connected to 39.104.203.214 (39.104.203.214) port 80 (#0)
> POST /v1/models/sklearn-iris:predict HTTP/1.1
> Host: sklearn-iris-predictor-default.default.example.com
> User-Agent: curl/7.84.0
> Accept: */*
> Content-Length: 76
> Content-Type: application/x-www-form-urlencoded
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-length: 21
< content-type: application/json
< date: Wed, 21 Jun 2023 03:17:23 GMT
< server: envoy
< x-envoy-upstream-service-time: 4
<
* Connection #0 to host 39.104.203.214 left intact
{"predictions":[1,1]}
```

The response {"predictions": [1, 1]} shows that both samples sent to the inference service were assigned class index 1, which means both irises are predicted to be Iris Versicolour.
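To turn the returned indices into species names in a script, a bash sketch like the following can help. decode_iris_predictions is a hypothetical helper; it relies on simple string handling and assumes the response is a flat predictions array of integer class indices, as in the output above, rather than parsing arbitrary JSON.

```shell
#!/usr/bin/env bash
# decode_iris_predictions RESPONSE
# Hypothetical helper: maps a flat KServe V1 response such as
# {"predictions":[1,1]} to species names, one per line.
decode_iris_predictions() {
  local body="$1" list idx
  local -a items
  local -a names=("Iris Setosa" "Iris Versicolour" "Iris Virginica")
  list="${body#*\[}"    # drop everything up to and including the first '['
  list="${list%%\]*}"   # drop the first ']' and everything after it
  # Split on commas and spaces into an array of indices.
  IFS=', ' read -r -a items <<< "$list"
  for idx in "${items[@]}"; do
    if [[ "$idx" =~ ^[0-2]$ ]]; then
      echo "${names[$idx]}"
    else
      echo "unknown class index: $idx" >&2
      return 1
    fi
  done
}
```

For example, decode_iris_predictions "$(curl -s -H "Host: ${SERVICE_HOSTNAME}" "http://${INGRESS_DOMAIN}/v1/models/sklearn-iris:predict" -d @./iris-input.json)" prints Iris Versicolour twice for the response shown above.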

ACK Serverless has been upgraded to meet the new requirements arising from the rapid growth of AI and other emerging scenarios. It enables enterprises to seamlessly transition to a Serverless business architecture in a standardized, open, and flexible manner. With the combined power of ACK Serverless and KServe, you can unlock the ultimate Serverless experience in AI model inference scenarios.

[1] Activate ACK Serverless: https://www.alibabacloud.com/help/ack/serverless-kubernetes/user-guide/create-an-ask-cluster-2

[2] Deploy KServe: https://www.alibabacloud.com/help/en/ack/serverless-kubernetes/user-guide/kserve/
