By Yuanyi and Kunlun

Built on a cloud-native architecture, Shuhe Group's AI model services provide intelligent decision support for a range of business processes. However, as the business grew rapidly, Shuhe faced new challenges. The underlying application resources that run model computation could not scale machine resources with the number of requests. In addition, the growing number of online inference services made Shuhe's model services increasingly complex to manage.

To address these issues, Shuhe adopted Alibaba Cloud Serverless Kubernetes (ASK) to deploy online models without managing Kubernetes nodes. By dynamically allocating pods based on real-time traffic, Shuhe reduced resource costs by 60%. The Knative service in ASK solved the problems of gray release and multi-version coexistence for Shuhe's models. ASK's auto scaling, including scaling to zero, also reduced operating costs and significantly improved service availability.

ASK now runs more than 500 of Shuhe's AI model services. These services handle hundreds of millions of decision queries every day and have virtually unlimited horizontal scalability. They adjust their capacity automatically to meet varying business loads, which keeps operations stable. In addition, the cloud-native architecture cut the average deployment cycle from 1 day to 0.5 days. This greatly improved R&D iteration efficiency and accelerated the commercialization of applications.

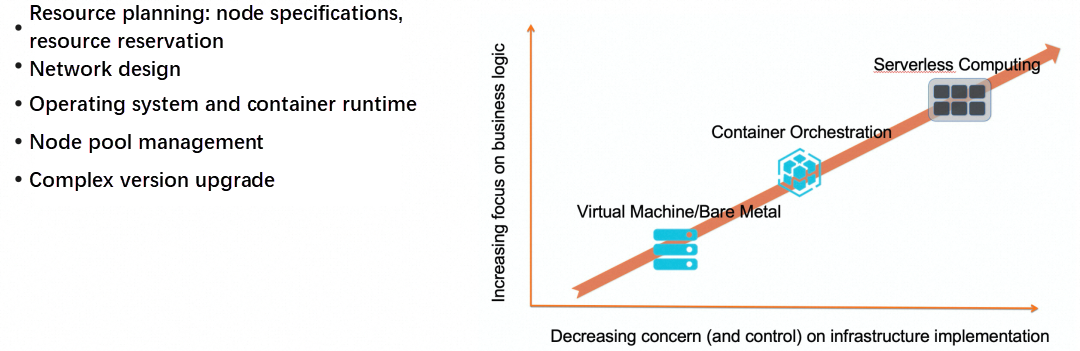

Kubernetes is an open-source container orchestration system that is widely used to develop and manage cloud-native applications. It reduces operation and maintenance costs, improves efficiency, and has fostered a rich cloud-native ecosystem. However, Kubernetes also presents users with many challenges. These include resource planning, capacity planning, node and pod affinity, container network planning, node lifecycle management, and compatibility between operating system and container runtime versions. Users would rather not deal with these complexities; they want to focus on their business logic and spend as little effort as possible on infrastructure. This is the core idea of Serverless: let developers concentrate on business logic and reduce their infrastructure concerns. We therefore simplified Kubernetes to deliver Serverless Kubernetes.

What are the advantages of Serverless Kubernetes? The main advantages are maintenance-free operation, automatic scalability, and pay-as-you-go pricing.

First, Serverless Kubernetes components are fully managed, so no manual maintenance is required, and Kubernetes can be upgraded automatically. Second, Serverless Kubernetes is highly elastic. It scales capacity within seconds according to business demand, so capacity planning happens automatically as the business grows. Finally, with Serverless Kubernetes, users pay only for the resources they actually use.
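A little arithmetic makes the pay-as-you-go point concrete. The sketch below compares two approaches. The first sizes capacity for the peak hour and keeps it running all day. The second uses elastic capacity that is billed only for the pods running in each hour. The 24-hour traffic profile and the price per pod-hour are hypothetical placeholders, not Alibaba Cloud prices or Shuhe's data. The model also ignores cold starts and billing granularity.

```python
from typing import Sequence


def fixed_capacity_cost(pods_per_hour: Sequence[int], price_per_pod_hour: float) -> float:
    """Fixed capacity must be sized for the peak hour and runs every hour."""
    return max(pods_per_hour) * len(pods_per_hour) * price_per_pod_hour


def elastic_cost(pods_per_hour: Sequence[int], price_per_pod_hour: float) -> float:
    """Elastic capacity is billed only for the pods actually running each hour
    (idealized: no cold-start overhead, no billing rounding)."""
    return sum(pods_per_hour) * price_per_pod_hour


if __name__ == "__main__":
    # Hypothetical 24-hour profile: idle at night (0 pods), daytime peak of 4 pods.
    profile = [0] * 8 + [2, 3, 4, 4, 3, 2, 2, 3, 4, 4, 3, 2] + [0] * 4
    price = 1.0  # hypothetical price per pod-hour
    fixed = fixed_capacity_cost(profile, price)
    elastic = elastic_cost(profile, price)
    print(f"fixed: {fixed:.0f}, elastic: {elastic:.0f}, saving: {1 - elastic / fixed:.0%}")
```

Under the elastic model, the idle hours in this hypothetical profile cost nothing. That is where scaling to zero pays off.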

To let more users try this best practice, we have built a hands-on ASK scenario around Stable Diffusion, a popular open-source AI project. In this scenario, users can easily deploy an AI model with enterprise-grade elasticity in a real cloud environment.

As generative AI becomes more capable, there is growing interest in using AI models to improve R&D efficiency. Stable Diffusion is a well-known project in the field of AI-generated content (AIGC). It lets users generate the scenes and images they want quickly and accurately. However, running Stable Diffusion on Kubernetes raises the following challenges:

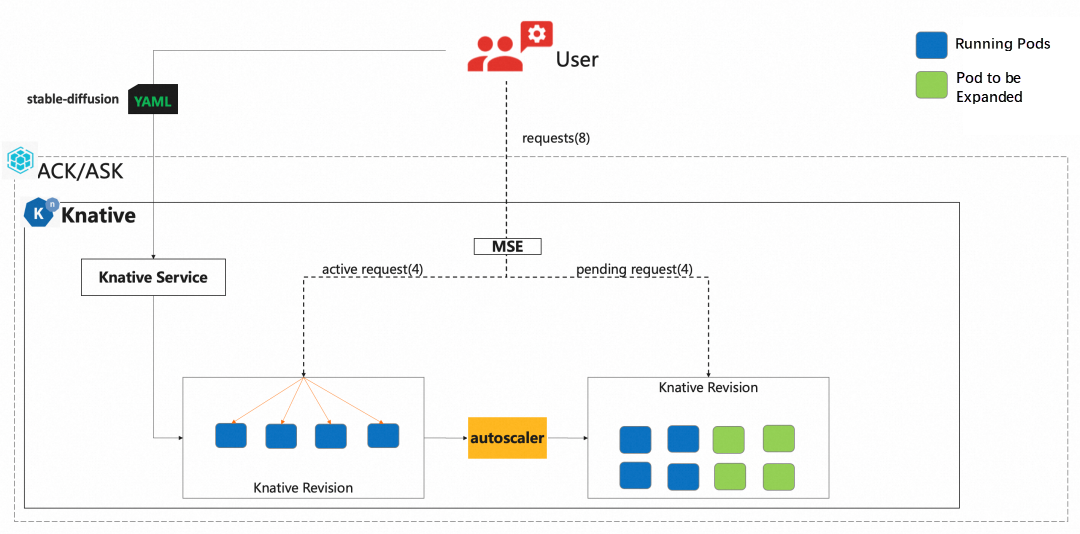

• A single pod can process only a limited number of requests at a time, and it becomes overloaded when too many requests are forwarded to it. It is therefore crucial to precisely control the number of concurrent requests that each pod processes.

• GPU resources are expensive. Users want to use them on demand and release them during off-peak hours.
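The following minimal sketch illustrates the first challenge. It models one pod that admits at most a fixed number of in-flight requests and makes the rest wait. It is a simplified stand-in and assumes behavior similar to the hard per-pod limit that Knative's queue-proxy enforces when containerConcurrency is set. ConcurrencyGate and run_burst are hypothetical names, and asyncio.sleep stands in for image generation.

```python
import asyncio


class ConcurrencyGate:
    """Admits at most `limit` in-flight requests; extra requests wait their turn.

    A simplified model of a per-pod hard concurrency limit (hypothetical class).
    """

    def __init__(self, limit: int) -> None:
        self._sem = asyncio.Semaphore(limit)
        self.in_flight = 0
        self.peak = 0  # highest number of requests processed at the same time

    async def handle(self, work_seconds: float) -> None:
        async with self._sem:
            self.in_flight += 1
            self.peak = max(self.peak, self.in_flight)
            try:
                await asyncio.sleep(work_seconds)  # stand-in for image generation
            finally:
                self.in_flight -= 1


def run_burst(limit: int, requests: int, work_seconds: float = 0.01) -> int:
    """Send `requests` simultaneous requests to one gated pod; return the peak concurrency."""
    async def burst() -> int:
        gate = ConcurrencyGate(limit)  # created inside the running event loop
        await asyncio.gather(*(gate.handle(work_seconds) for _ in range(requests)))
        return gate.peak

    return asyncio.run(burst())


if __name__ == "__main__":
    # Like containerConcurrency: 1, five simultaneous requests are processed one at a time.
    print("peak concurrent requests in the pod:", run_burst(limit=1, requests=5))
```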

To address these issues, we offer the ASK + Knative solution. It provides precise concurrency-based elasticity and scaling to zero, so that a production-ready Stable Diffusion service uses resources only on demand. Specifically, ASK implements a Knative + MSE mode that includes:

• Extending Knative's autoscaling plug-in mechanism with MSE Gateway to achieve precise concurrency-based elasticity.

• Scaling to zero and scaling automatically on demand.

• Using multi-version management and image acceleration to speed up model releases and iterations.
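The sketch below shows the arithmetic behind concurrency-based elasticity. It is a simplified model of the default Knative Pod Autoscaler rule. It assumes that the MSE-based autoscaler (the mpa class in the deployment template) computes the pod count the same way. The rule divides the observed concurrency by the per-pod target, which is containerConcurrency times the target utilization. It then rounds up and clamps the result to the scale bounds. The real autoscaler also uses averaging windows, a panic mode, and a scale-to-zero grace period, all of which this sketch omits. desired_pods is a hypothetical helper name.

```python
import math


def desired_pods(observed_concurrency: float,
                 container_concurrency: int,
                 target_utilization_pct: float = 100.0,
                 min_scale: int = 0,
                 max_scale: int = 10) -> int:
    """Concurrency-based pod count, modeled on Knative's default autoscaler.

    Each pod is targeted to carry container_concurrency * utilization requests.
    With min_scale = 0 and no in-flight requests, the result is 0 (scale to zero).
    """
    if container_concurrency <= 0:
        raise ValueError("this sketch needs a positive container_concurrency")
    per_pod_target = container_concurrency * target_utilization_pct / 100.0
    wanted = math.ceil(observed_concurrency / per_pod_target)
    return max(min_scale, min(max_scale, wanted))


if __name__ == "__main__":
    for load in (0, 1, 5, 25):
        print(load, "concurrent requests ->", desired_pods(load, container_concurrency=1), "pods")
```

With containerConcurrency set to 1 and a target utilization of 100%, five concurrent requests map to five pods. A burst of 25 is capped at the maxScale of 10, and no traffic maps to zero pods.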

Next, we will introduce how to deploy the Stable Diffusion service in ASK.

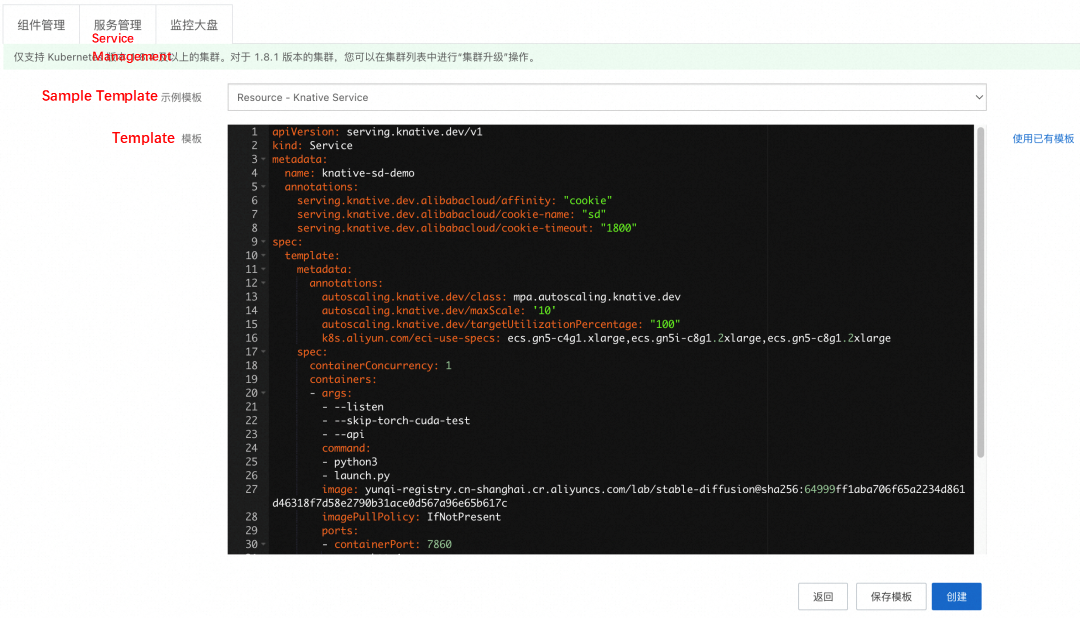

1. On the Clusters page, click the cluster knative-sd-demo to go to the Cluster Information page. In the left-side navigation pane, choose Applications > Knative.

2. On the Knative page, click the Services tab and click Create from Template.

3. From the Namespace drop-down list, select default. From the Sample Template drop-down list, select Resource-Knative Service. Paste the following YAML into the template editor, and then click Create.

By default, a service named knative-sd-demo is created.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: knative-sd-demo
  annotations:
    serving.knative.dev.alibabacloud/affinity: "cookie"
    serving.knative.dev.alibabacloud/cookie-name: "sd"
    serving.knative.dev.alibabacloud/cookie-timeout: "1800"
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/class: mpa.autoscaling.knative.dev
        autoscaling.knative.dev/maxScale: '10'
        autoscaling.knative.dev/targetUtilizationPercentage: "100"
        k8s.aliyun.com/eci-use-specs: ecs.gn5-c4g1.xlarge,ecs.gn5i-c8g1.2xlarge,ecs.gn5-c8g1.2xlarge
    spec:
      containerConcurrency: 1
      containers:
      - args:
        - --listen
        - --skip-torch-cuda-test
        - --api
        command:
        - python3
        - launch.py
        image: yunqi-registry.cn-shanghai.cr.aliyuncs.com/lab/stable-diffusion@sha256:64999ff1aba706f65a2234d861d46318f7d58e2790b31ace0d567a96e65b617c
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 7860
          name: http1
          protocol: TCP
        name: stable-diffusion
        readinessProbe:
          tcpSocket:
            port: 7860
          initialDelaySeconds: 5
          periodSeconds: 1
          failureThreshold: 3
```

Parameters:

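• serving.knative.dev.alibabacloud/affinity: enables cookie-based session persistence. The cookie-name and cookie-timeout annotations set the cookie name (sd) and its timeout in seconds (1800).

• k8s.aliyun.com/eci-use-specs: lists multiple candidate GPU instance specifications.

• containerConcurrency: sets the maximum number of concurrent requests per pod (1 in this template).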

4. On the Services tab, refresh the page. When the status of knative-sd-demo changes to Success, the Stable Diffusion service is deployed.
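Once the service is ready, it can also be called programmatically. The sketch below assumes that the image runs the AUTOMATIC1111 stable-diffusion-webui, as launch.py, port 7860, and the --api flag suggest. That API exposes POST /sdapi/v1/txt2img and returns base64-encoded images in an "images" field. GATEWAY_URL and SERVICE_HOST are hypothetical placeholders for the gateway address and service domain shown in your console. The client keeps cookies so that the session affinity cookie configured by the Service annotations is sent back on later requests.

```python
import base64
import json
import urllib.request
from http.cookiejar import CookieJar

# Hypothetical placeholders: replace with your gateway address and the
# domain that the console shows for knative-sd-demo.
GATEWAY_URL = "http://GATEWAY_IP"
SERVICE_HOST = "knative-sd-demo.default.example.com"


def make_session() -> urllib.request.OpenerDirector:
    """An opener that stores cookies, so the affinity cookie is returned on each request."""
    return urllib.request.build_opener(urllib.request.HTTPCookieProcessor(CookieJar()))


def build_txt2img_request(prompt: str, steps: int = 20) -> urllib.request.Request:
    """Build a POST to the txt2img endpoint (assumed stable-diffusion-webui API)."""
    body = json.dumps({"prompt": prompt, "steps": steps}).encode("utf-8")
    return urllib.request.Request(
        f"{GATEWAY_URL}/sdapi/v1/txt2img",
        data=body,
        # The Host header selects the Knative service at the gateway (assumption).
        headers={"Content-Type": "application/json", "Host": SERVICE_HOST},
        method="POST",
    )


def generate(session: urllib.request.OpenerDirector, prompt: str, out_path: str) -> None:
    """Send one prompt and save the first returned image (base64-encoded PNG)."""
    with session.open(build_txt2img_request(prompt), timeout=300) as resp:
        payload = json.load(resp)
    with open(out_path, "wb") as f:
        f.write(base64.b64decode(payload["images"][0]))
```

For example, calling generate(session, "cat", "cat.png") twice with the same session is expected to reach the same pod while the affinity cookie is valid (1800 seconds in the template above).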

Next, deploy portal-server. This stress-testing service displays the images that Stable Diffusion generates and initiates stress tests.

1. On the Knative page, click the Services tab and click Create from Template.

2. From the Namespace drop-down list, select default. From the Sample Template drop-down list, select Custom. Paste the following portal-server YAML into the template editor, and then click Create.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: portal-server
  name: portal-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: portal-server
  template:
    metadata:
      labels:
        app: portal-server
    spec:
      serviceAccountName: portal-server
      containers:
      - name: portal-server
        image: registry-vpc.cn-beijing.aliyuncs.com/acs/sd-yunqi-server:v1.0.2
        imagePullPolicy: IfNotPresent
        env:
        - name: MAX_CONCURRENT_REQUESTS
          value: "5"
        - name: POD_NAMESPACE
          value: "default"
        readinessProbe:
          failureThreshold: 3
          periodSeconds: 1
          successThreshold: 1
          tcpSocket:
            port: 8080
          timeoutSeconds: 1
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-address-type: internet
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-instance-charge-type: PayByCLCU
  name: portal-server
spec:
  externalTrafficPolicy: Local
  ports:
  - name: http-80
    port: 80
    protocol: TCP
    targetPort: 8080
  - name: http-8888
    port: 8888
    protocol: TCP
    targetPort: 8888
  selector:
    app: portal-server
  type: LoadBalancer
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-list-cluster-role
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list"]
- apiGroups: ["networking.k8s.io"]
  resources: ["ingresses"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: pod-list-cluster-role-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: pod-list-cluster-role
subjects:
- kind: ServiceAccount
  name: portal-server
  namespace: default
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: portal-server
  namespace: default
```

3. Choose Network > Services. On the Services page, view the portal-server, and obtain the access IP address 123.56.XX.XX.

4. Enter http://123.56.XX.XX in the browser, and then click Stable Diffusion on the page to go to the Stable Diffusion access page.

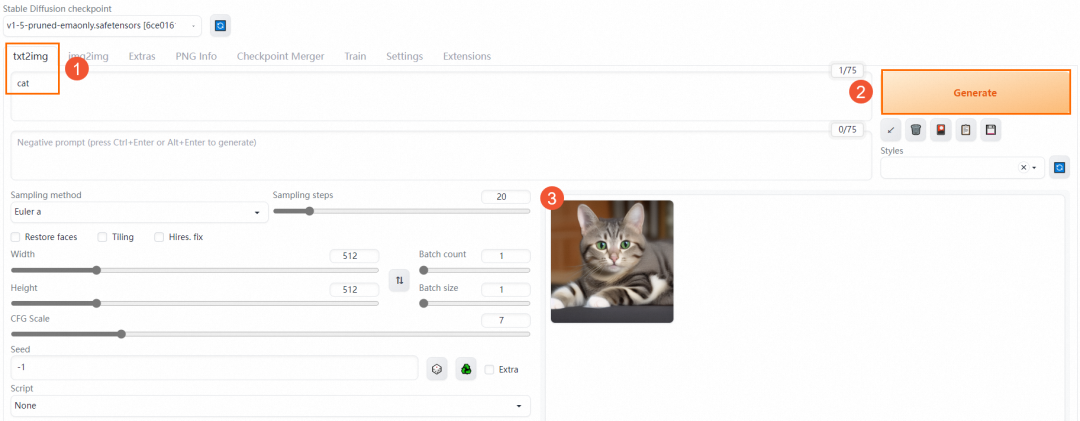

a) The Stable Diffusion access page is displayed. For example, enter "cat" in the text box and click Generate. An image matching the prompt is displayed.

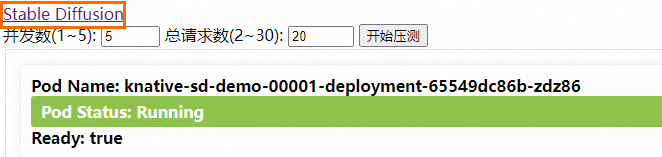

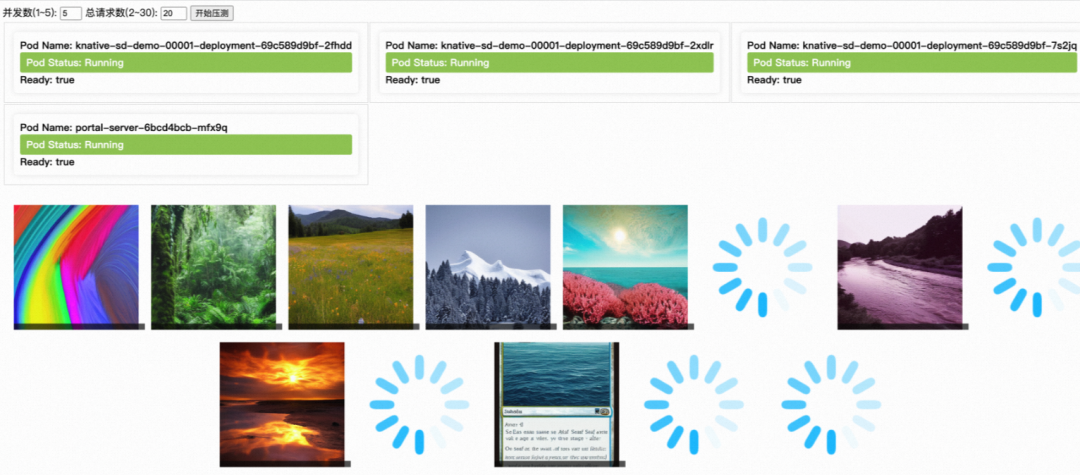

b) On the Access to Pressure Test page, set Concurrency to 5 and Total Requests to 20. Then, click Start Pressure Test to view the result.

During the stress test, five pods are created. Because containerConcurrency is set to 1, each pod handles one request at a time, so five concurrent requests require five pods. An image is generated for each request and displayed on the page when it is ready.
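The pressure test page sends a fixed total number of requests at a fixed concurrency. The sketch below is a rough thread-pool equivalent, not portal-server's actual code. The send parameter is a hypothetical hook that performs one request, for example an HTTP call to the Stable Diffusion API. With a concurrency of 5, at most five requests are in flight at once, which matches the five pods described above.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def stress_test(send: Callable[[int], None], concurrency: int, total: int) -> List[float]:
    """Issue `total` requests with at most `concurrency` in flight; return each latency in seconds.

    `send(i)` performs request number i; it is a hypothetical hook supplied by the caller.
    """
    def timed(i: int) -> float:
        start = time.perf_counter()
        send(i)
        return time.perf_counter() - start

    # max_workers bounds the number of requests running at the same time.
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed, range(total)))


if __name__ == "__main__":
    # Fake backend that "generates" an image in 50 ms; same settings as the test page.
    latencies = stress_test(lambda i: time.sleep(0.05), concurrency=5, total=20)
    print(f"{len(latencies)} requests, max latency {max(latencies):.3f}s")
```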

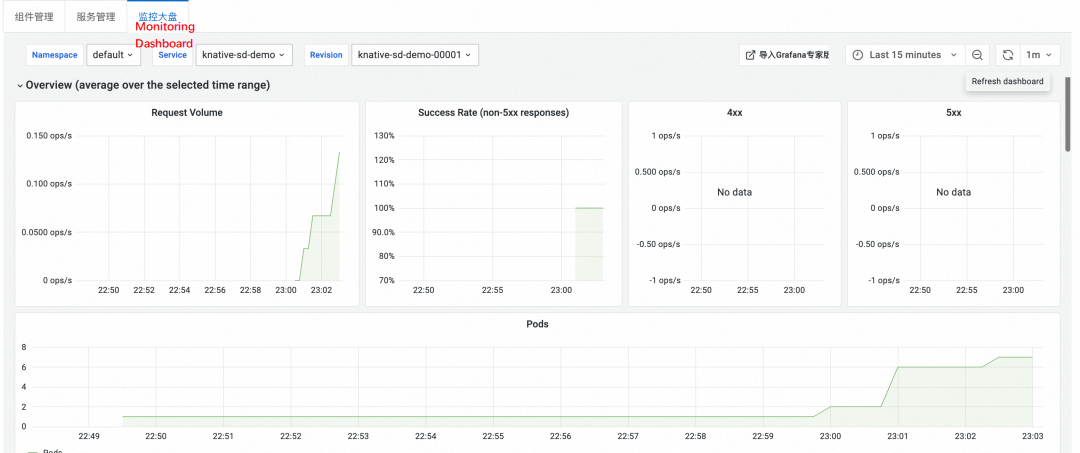

In addition, Knative provides out-of-the-box observability. On the Knative page, click the Dashboards tab to view the monitoring data of the Stable Diffusion service. The data includes request volume, request success rate, 4xx (client error) and 5xx (server error) counts, and the pod scaling trend.
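You can also follow the pod scaling trend from the command line. The sketch below assumes that kubectl is installed and configured for the cluster. It relies on the serving.knative.dev/service label that Knative applies to the pods of a service. fetch_pods and pod_phases are hypothetical helper names. Listing pods is the same permission that pod-list-cluster-role grants to portal-server.

```python
import json
import subprocess
from collections import Counter


def pod_phases(pod_list_json: str) -> Counter:
    """Count pods by status.phase in the output of `kubectl get pods -o json`."""
    items = json.loads(pod_list_json).get("items", [])
    return Counter(p.get("status", {}).get("phase", "Unknown") for p in items)


def fetch_pods(service: str = "knative-sd-demo", namespace: str = "default") -> str:
    """Run kubectl (assumed installed and pointed at the ASK cluster) and return its JSON output."""
    return subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace,
         "-l", f"serving.knative.dev/service={service}", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
```

Run print(dict(pod_phases(fetch_pods()))) every few seconds during a stress test. The number of Running pods should rise under load and fall back as the service scales in after traffic stops.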

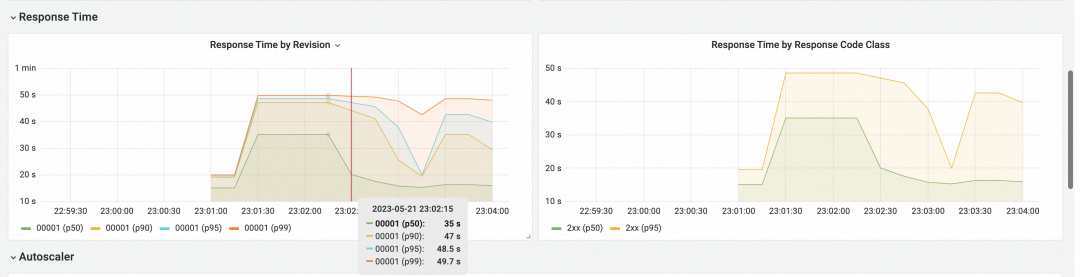

In the Response Time section, you can view the service's response latency percentiles: P50, P90, P95, and P99.
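For reference, a latency percentile such as P90 is the value at or below which 90% of response times fall. The sketch below computes P50, P90, P95, and P99 from raw samples with the nearest-rank method. The dashboard may estimate percentiles differently, for example from histogram buckets, so its numbers need not match this method exactly.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]


def latency_summary(samples: Sequence[float]) -> Dict[str, float]:
    """The four percentiles shown in the Response Time section."""
    return {f"P{p}": percentile(samples, p) for p in (50, 90, 95, 99)}


if __name__ == "__main__":
    print(latency_summary([1.8, 2.1, 2.0, 2.4, 5.3, 2.2, 1.9, 2.6, 3.1, 2.3]))
```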

Enterprise-grade AI services can be easily deployed based on ASK Knative features such as precise concurrency elasticity, scaling to 0, and multi-version management. You are welcome to give it a try.
