By Huizhi

Kubernetes natively supports some types of Persistent Volumes (PV) for storage, such as iSCSI, NFS, and CephFS (for more information, check the link). The code for these in-tree storage types lives in the Kubernetes code repository. The problem here is the strong coupling between the Kubernetes code and the third-party storage vendors' code, which creates several challenges.

The CSI standard has solved these problems by decoupling the third-party storage code from the Kubernetes code. As such, developers from a third-party storage vendor can implement the CSI without worrying about whether the container platform is Kubernetes or Swarm.

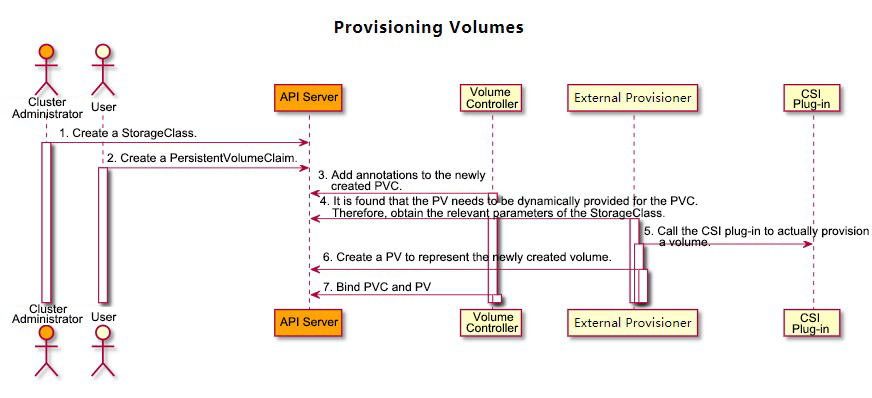

Before we get into the details of the CSI and its components, let’s first look at the CSI storage procedure in Kubernetes. The article, Get to Know Kubernetes Persistent Storage Process, describes the three stages through which a Pod goes when mounting a volume in Kubernetes: Provision and Delete, Attach and Detach, Mount/Unmount. This section describes how Kubernetes uses CSI in these three stages.

1) Cluster administrator creates a StorageClass that contains the CSI plug-in name (provisioner:pangu.csi.alibabacloud.com) and the required parameters (parameters: type = cloud_ssd). The sc.yaml file is as follows:

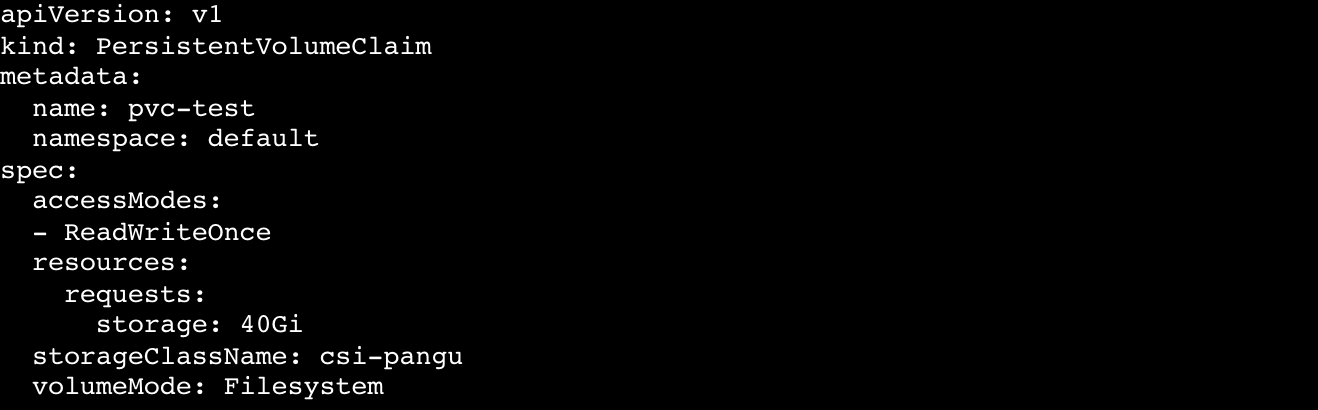

2) The user creates a PersistentVolumeClaim (PVC) that specifies the storage size and the StorageClass created above. The pvc.yaml file is as follows:

3) PersistentVolumeController finds no PV in the cluster that matches the newly created PVC, and the storage type is out-of-tree. Therefore, it adds an annotation to the PVC: volume.beta.kubernetes.io/storage-provisioner=[out-of-tree CSI plug-in name]. The plug-in name in this example is pangu.csi.alibabacloud.com.

4) External Provisioner component finds that the annotation of the PVC contains “volume.beta.kubernetes.io/storage-provisioner” and that its value is the Provisioner's own name. Then, it starts to provision a volume.

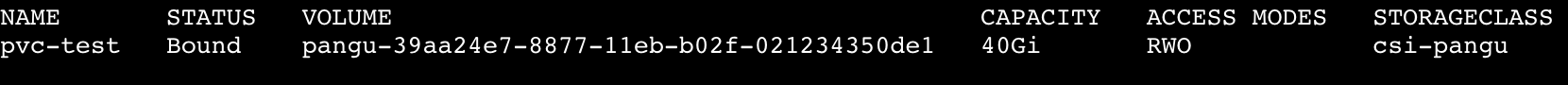

5) When the call to the external CSI plug-in returns successfully, the volume has been provisioned. The External Provisioner component then creates a PersistentVolume object in the cluster.

6) PersistentVolumeController binds the PV and the PVC.

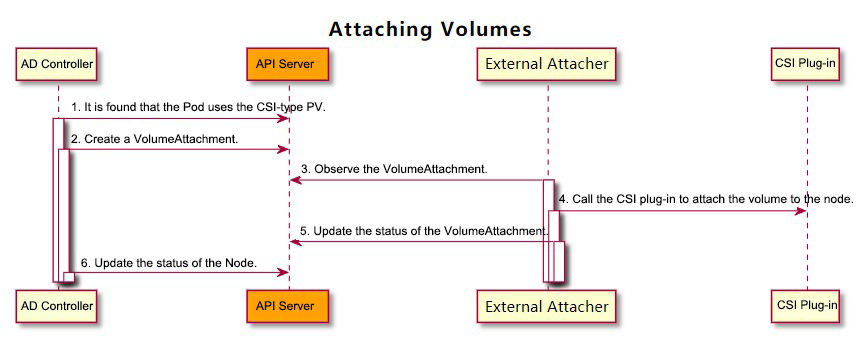

1) AttachDetachController finds that the Pod that uses the CSI-type PV is scheduled to a node. At this time, the AttachDetachController will call the Attach function of the internal in-tree CSI plug-in (csiAttacher).

2) Internal in-tree CSI plug-in (csiAttacher) creates a VolumeAttachment object in the cluster.

3) External Attacher sees the VolumeAttachment object and calls the ControllerPublish function of the external CSI plug-in to connect the volume to the corresponding node. After the external CSI plug-in attaches the volume successfully, External Attacher will update the .Status.Attached of the VolumeAttachment object to true.

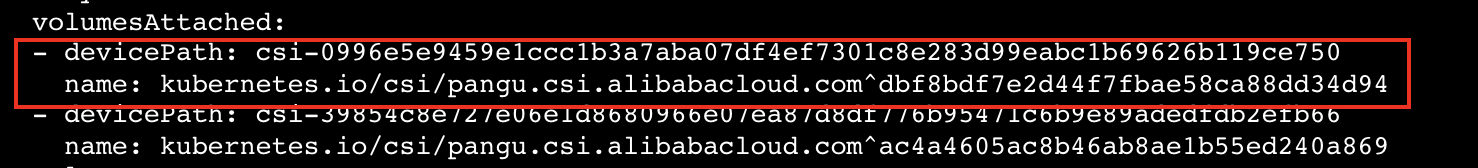

4) The internal in-tree CSI plug-in (csiAttacher) of AttachDetachController finds that the .Status.Attached of the VolumeAttachment object is set to true. Then, it updates the internal status (ActualStateOfWorld) of AttachDetachController, which will be displayed in the .Status.VolumesAttached on Node.

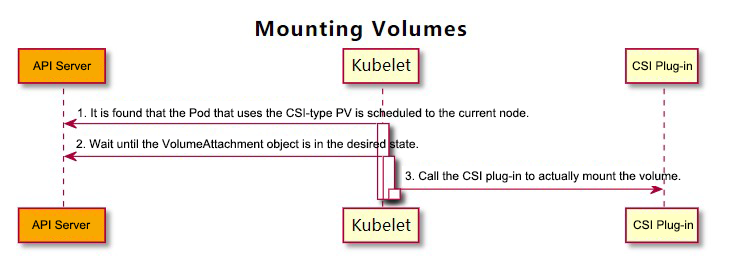

1) Volume Manager (Kubelet component) perceives that a new Pod that uses PV of CSI type is scheduled to the current node. Therefore, it calls the WaitForAttach function of the internal in-tree CSI plug-in (csiAttacher).

2) Internal in-tree CSI plug-in (csiAttacher) waits until the status of .Status.Attached of the VolumeAttachment object in the cluster is changed to true.

3) The internal in-tree CSI plug-in (csiAttacher) calls the MountDevice function, which internally calls the NodeStageVolume function of the external CSI plug-in through a UNIX domain socket. Then, the plug-in (csiAttacher) calls the SetUp function of the internal in-tree CSI plug-in (csiMountMgr). The SetUp function calls the NodePublishVolume function of the external CSI plug-in through a UNIX domain socket.

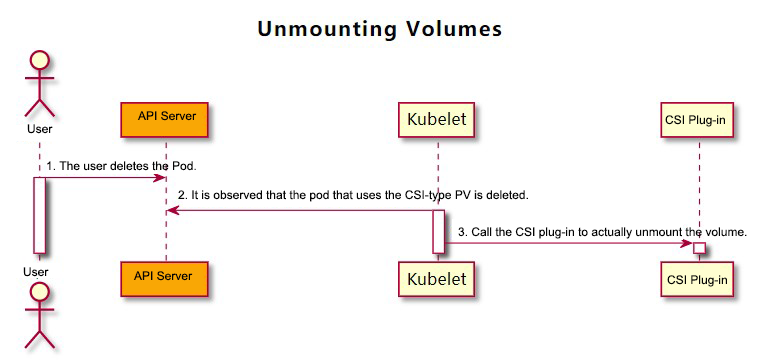

1) User deletes related Pods.

2) Volume Manager (Kubelet component) perceives that the Pod containing the CSI storage volume is deleted. Then, it calls the TearDown function of the internal in-tree CSI plug-in (csiMountMgr). The TearDown function calls the NodeUnpublishVolume function of the external CSI plug-in through a UNIX domain socket.

3) Volume Manager (Kubelet component) calls the UnmountDevice function of the internal in-tree CSI plug-in (csiAttacher). The UnmountDevice function internally calls the NodeUnstageVolume function of the external CSI plug-in through a UNIX domain socket.
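The mount and unmount paths are mirror images of each other, which a small sketch can make explicit. The code below is only an illustration: `nodePlugin`, `mountVolume`, and `unmountVolume` are hypothetical names, the plug-in is replaced by a recorder instead of a gRPC client, and it assumes that UnmountDevice maps to NodeUnstageVolume, the counterpart of NodeStageVolume.

```go
package main

import "fmt"

// nodePlugin is a hypothetical stand-in for the node service of an external
// CSI plug-in. It only records the order in which its functions are called.
type nodePlugin struct {
	calls []string
}

func (p *nodePlugin) call(name string) { p.calls = append(p.calls, name) }

// mountVolume mirrors the mount path described above:
// MountDevice -> NodeStageVolume, then SetUp -> NodePublishVolume.
func mountVolume(p *nodePlugin) {
	p.call("NodeStageVolume")
	p.call("NodePublishVolume")
}

// unmountVolume mirrors the unmount path in reverse order:
// TearDown -> NodeUnpublishVolume, then UnmountDevice -> NodeUnstageVolume.
func unmountVolume(p *nodePlugin) {
	p.call("NodeUnpublishVolume")
	p.call("NodeUnstageVolume")
}

func main() {
	p := &nodePlugin{}
	mountVolume(p)
	unmountVolume(p)
	for i, c := range p.calls {
		fmt.Println(i+1, c)
	}
}
```

Keeping the two paths symmetric is what lets a plug-in stage a volume once per node and publish it separately for each Pod.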

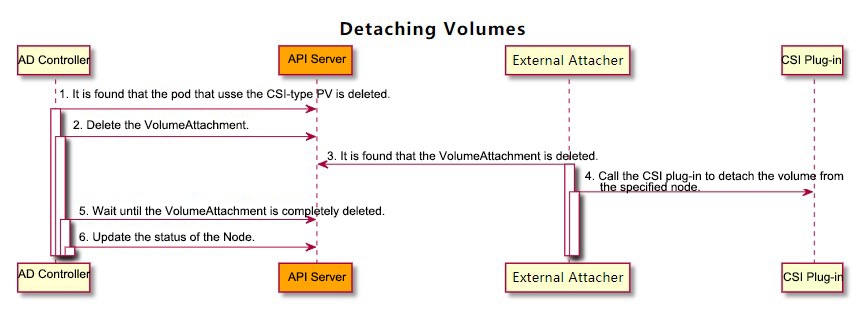

1) AttachDetachController finds that the Pod containing the CSI storage volume is deleted, and then it calls the Detach function of the internal in-tree CSI plug-in (csiAttacher).

2) csiAttacher deletes the VolumeAttachment object in the cluster, which will not be deleted immediately because of the finalizer.

3) External Attacher perceives that the DeletionTimestamp field of the VolumeAttachment object in the cluster is not empty. Then, it calls the ControllerUnpublish function of the external CSI plug-in to remove the volume from the corresponding Node.

4) After the volume is removed by the external CSI plug-in, External Attacher will remove the finalizer field of the VolumeAttachment object. At this time, the VolumeAttachment object is completely deleted.

5) The internal in-tree CSI plug-in (csiAttacher) of AttachDetachController perceives that the VolumeAttachment object has been deleted. Then, it updates the internal status of the AttachDetachController.

6) At the same time, AttachDetachController updates the Node. Now, the detached volume is no longer displayed in the .Status.VolumesAttached of the Node.
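The finalizer handshake in the detach steps can be modeled in a few lines. This is a simplified sketch, not the External Attacher's real code: `volumeAttachment`, `requestDelete`, and `reconcile` are hypothetical names, a boolean stands in for the DeletionTimestamp field, and the ControllerUnpublish call is passed in as a plain function.

```go
package main

import "fmt"

// volumeAttachment is a simplified, hypothetical model of the API object.
type volumeAttachment struct {
	finalizers        []string
	deletionRequested bool // stands in for a non-empty DeletionTimestamp
	attached          bool
}

// requestDelete models csiAttacher deleting the object. While a finalizer is
// present the object is only marked for deletion. It reports whether the
// object is actually gone.
func requestDelete(va *volumeAttachment) bool {
	va.deletionRequested = true
	return len(va.finalizers) == 0
}

// reconcile models the External Attacher: once deletion has been requested it
// detaches the volume and then removes its own finalizer. It reports whether
// the object is gone afterwards.
func reconcile(va *volumeAttachment, finalizer string, controllerUnpublish func() error) (bool, error) {
	if !va.deletionRequested {
		return false, nil
	}
	if err := controllerUnpublish(); err != nil {
		return false, err // keep the finalizer so the detach can be retried
	}
	va.attached = false
	kept := va.finalizers[:0]
	for _, f := range va.finalizers {
		if f != finalizer {
			kept = append(kept, f)
		}
	}
	va.finalizers = kept
	return len(va.finalizers) == 0, nil
}

func main() {
	const fin = "external-attacher/pangu-csi-alibabacloud-com"
	va := &volumeAttachment{finalizers: []string{fin}, attached: true}
	fmt.Println("gone after delete request:", requestDelete(va))
	gone, err := reconcile(va, fin, func() error { return nil })
	fmt.Println("gone after reconcile:", gone, "error:", err)
}
```

The point of the pattern is that the object cannot disappear before the volume has really been detached from the node.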

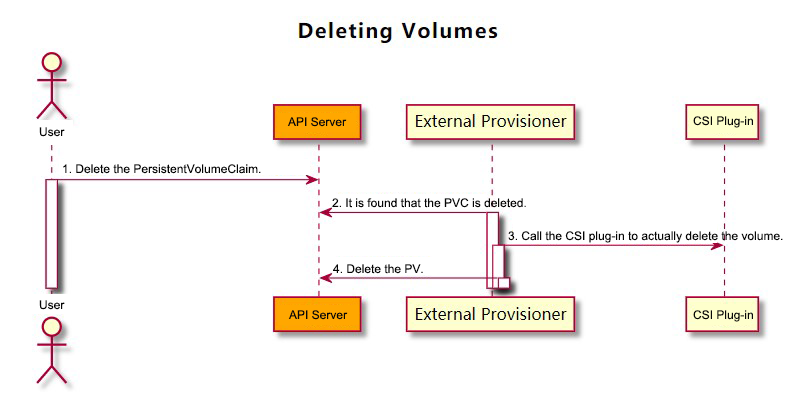

1) User deletes the related PVC.

2) External Provisioner component finds that the PVC is deleted. Then, it performs different operations based on the reclaim policy of the PV bound to that PVC:

Delete: call the DeleteVolume function of the external CSI plug-in to delete the volume. Once the volume is successfully deleted, Provisioner deletes the corresponding PV in the cluster.

To make Kubernetes adapt to the CSI standard, the community has integrated the storage logic related to Kubernetes into the CSI Sidecar component.

Node-Driver-Registrar registers the external CSI plug-in with Kubelet so that Kubelet can call external CSI plug-in functions (including NodeGetInfo, NodeStageVolume, NodePublishVolume, and NodeGetVolumeStats) through a specific UNIX domain socket.

After Node-Driver-Registrar successfully registers the external CSI plug-in to Kubelet:

External-Provisioner creates or deletes the actual volume and the PV that represents the volume.

The parameter provisioner must be specified to enable External-Provisioner. The name of the Provisioner, which corresponds to the provisioner field in the StorageClass, is specified in this parameter.

External-Provisioner will watch the PVC and PV in the cluster after being enabled.

For the PVC in the cluster:

Use the following criteria to determine whether a PVC needs to create volumes dynamically:

1) Whether the annotation of the PVC contains the “volume.beta.kubernetes.io/storage-provisioner” key (created by the PersistentVolumeController) and its value is the same as the Provisioner name.

2) If the VolumeBindingMode field of the StorageClass corresponding to the PVC is WaitForFirstConsumer, the annotation of the PVC must contain the “volume.kubernetes.io/selected-node” key (for more information, see how the scheduler processes WaitForFirstConsumer), and its value must not be empty. If the VolumeBindingMode is Immediate, the Provisioner needs to provide dynamic storage volumes immediately.
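The two criteria above can be written as a single predicate. The following is a minimal sketch under stated assumptions: `claim` is a hypothetical, trimmed-down view of a PVC together with the binding mode of its StorageClass, and `shouldProvision` is an illustrative name, not the function used by External-Provisioner.

```go
package main

import "fmt"

const (
	annStorageProvisioner = "volume.beta.kubernetes.io/storage-provisioner"
	annSelectedNode       = "volume.kubernetes.io/selected-node"
)

// claim is a hypothetical, trimmed-down view of a PVC and its StorageClass.
type claim struct {
	annotations       map[string]string
	volumeBindingMode string // "Immediate" or "WaitForFirstConsumer"
}

// shouldProvision reports whether the provisioner with the given name should
// dynamically create a volume for the claim.
func shouldProvision(c claim, provisionerName string) bool {
	// Criterion 1: the annotation set by PersistentVolumeController must name us.
	if v, ok := c.annotations[annStorageProvisioner]; !ok || v != provisionerName {
		return false
	}
	// Criterion 2: with delayed binding, wait until the scheduler picked a node.
	if c.volumeBindingMode == "WaitForFirstConsumer" {
		return c.annotations[annSelectedNode] != ""
	}
	return true
}

func main() {
	const name = "pangu.csi.alibabacloud.com"
	immediate := claim{
		annotations:       map[string]string{annStorageProvisioner: name},
		volumeBindingMode: "Immediate",
	}
	delayed := claim{
		annotations:       map[string]string{annStorageProvisioner: name},
		volumeBindingMode: "WaitForFirstConsumer",
	}
	fmt.Println(shouldProvision(immediate, name)) // provision right away
	fmt.Println(shouldProvision(delayed, name))   // no node selected yet
}
```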

For the PV in the cluster:

Use the following criteria to determine whether the PV needs to be deleted:

1) Check whether the .Status.Phase is Released.

2) Check whether the .Spec.PersistentVolumeReclaimPolicy is Delete.

3) Check whether the PV contains the annotation (pv.kubernetes.io/provisioned-by), and the value is itself.
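Written as code, the three checks become a single conjunction. This is only a sketch: `volume` is a hypothetical, flattened view of a PV and `shouldDelete` is an illustrative name. It assumes the phase value is spelled `Released`, as in the Kubernetes API.

```go
package main

import "fmt"

const annProvisionedBy = "pv.kubernetes.io/provisioned-by"

// volume is a hypothetical, flattened view of the PV fields used by the checks.
type volume struct {
	phase         string // .Status.Phase
	reclaimPolicy string // .Spec.PersistentVolumeReclaimPolicy
	annotations   map[string]string
}

// shouldDelete reports whether the provisioner with the given name is
// responsible for deleting the volume behind this PV.
func shouldDelete(v volume, provisionerName string) bool {
	return v.phase == "Released" &&
		v.reclaimPolicy == "Delete" &&
		v.annotations[annProvisionedBy] == provisionerName
}

func main() {
	const name = "pangu.csi.alibabacloud.com"
	pv := volume{
		phase:         "Released",
		reclaimPolicy: "Delete",
		annotations:   map[string]string{annProvisionedBy: name},
	}
	fmt.Println(shouldDelete(pv, name))
	pv.reclaimPolicy = "Retain"
	fmt.Println(shouldDelete(pv, name))
}
```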

External-Attacher is primarily used to attach or detach volumes.

External-Attacher internally watches the VolumeAttachment and PersistentVolume in the cluster at all times.

For VolumeAttachment:

For PersistentVolume:

External-Resizer is primarily used to resize the storage volume.

External-Resizer internally watches the PersistentVolumeClaim in the cluster.

For PersistentVolumeClaim:

Volume Manager (Kubelet component) perceives that the volume needs to be resized online. Then, it calls the NodeExpandVolume interface of the external CSI plug-in through a specific UNIX domain socket to implement file system resizing.

Livenessprobe is used to check whether the CSI plug-in is normal.

It exposes a /healthz HTTP port to serve the probe of Kubelet and internally calls the Probe interface of the external CSI plug-in through a specific UNIX domain socket.
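The shape of such a probe endpoint can be sketched with the Go standard library alone. This is not the Livenessprobe sidecar's code: `probeFunc` is a hypothetical stand-in for the gRPC Probe call over the UNIX domain socket, and `healthzHandler`, `check`, `alwaysHealthy`, `alwaysBroken`, and `defaultTimeout` are names invented for the example.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// probeFunc is a hypothetical stand-in for the Probe call to the CSI plug-in.
type probeFunc func(ctx context.Context) error

const defaultTimeout = time.Second

// healthzHandler answers 200 when the probe succeeds and 500 otherwise.
func healthzHandler(probe probeFunc, timeout time.Duration) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		ctx, cancel := context.WithTimeout(r.Context(), timeout)
		defer cancel()
		if err := probe(ctx); err != nil {
			http.Error(w, err.Error(), http.StatusInternalServerError)
			return
		}
		w.WriteHeader(http.StatusOK)
		fmt.Fprint(w, "ok")
	})
}

// Two fake plug-ins used to exercise the handler.
func alwaysHealthy(context.Context) error { return nil }
func alwaysBroken(context.Context) error  { return errors.New("plug-in not ready") }

// check sends one GET /healthz to the handler and returns the status code.
func check(h http.Handler) int {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, "/healthz", nil))
	return rec.Code
}

func main() {
	fmt.Println(check(healthzHandler(alwaysHealthy, defaultTimeout)))
	fmt.Println(check(healthzHandler(alwaysBroken, defaultTimeout)))
}
```

In a real deployment, the Kubelet liveness probe of the plug-in container would point at this HTTP endpoint.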

Third-party storage vendors must implement the following three interfaces of CSI plug-ins: IdentityServer, ControllerServer, and NodeServer.

The IdentityServer is mainly used to identify the ID information of CSI plug-ins.

    // IdentityServer is the server API for Identity service.
    type IdentityServer interface {
        // Obtain CSI plug-in information, such as name and version
        GetPluginInfo(context.Context, *GetPluginInfoRequest) (*GetPluginInfoResponse, error)
        // Obtain CSI plug-in capabilities, such as ControllerService capability
        GetPluginCapabilities(context.Context, *GetPluginCapabilitiesRequest) (*GetPluginCapabilitiesResponse, error)
        // Obtain CSI plug-in health status
        Probe(context.Context, *ProbeRequest) (*ProbeResponse, error)
    }

The ControllerServer is responsible for creating, deleting, attaching, and detaching volumes and snapshots.

    // ControllerServer is the server API for Controller service.
    type ControllerServer interface {
        // Create a volume
        CreateVolume(context.Context, *CreateVolumeRequest) (*CreateVolumeResponse, error)
        // Delete a volume
        DeleteVolume(context.Context, *DeleteVolumeRequest) (*DeleteVolumeResponse, error)
        // Attach the volume to a specific node
        ControllerPublishVolume(context.Context, *ControllerPublishVolumeRequest) (*ControllerPublishVolumeResponse, error)
        // Detach the volume from a specific node
        ControllerUnpublishVolume(context.Context, *ControllerUnpublishVolumeRequest) (*ControllerUnpublishVolumeResponse, error)
        // Verify the volume capabilities, for example, whether it supports cross-node multi-read and write
        ValidateVolumeCapabilities(context.Context, *ValidateVolumeCapabilitiesRequest) (*ValidateVolumeCapabilitiesResponse, error)
        // List the information about all storage volumes
        ListVolumes(context.Context, *ListVolumesRequest) (*ListVolumesResponse, error)
        // Get the available capacity of the storage resource pool
        GetCapacity(context.Context, *GetCapacityRequest) (*GetCapacityResponse, error)
        // Obtain ControllerServer features, such as support for snapshots
        ControllerGetCapabilities(context.Context, *ControllerGetCapabilitiesRequest) (*ControllerGetCapabilitiesResponse, error)
        // Create a snapshot
        CreateSnapshot(context.Context, *CreateSnapshotRequest) (*CreateSnapshotResponse, error)
        // Delete a snapshot
        DeleteSnapshot(context.Context, *DeleteSnapshotRequest) (*DeleteSnapshotResponse, error)
        // Obtain the information about all snapshots
        ListSnapshots(context.Context, *ListSnapshotsRequest) (*ListSnapshotsResponse, error)
        // Resize the storage volume
        ControllerExpandVolume(context.Context, *ControllerExpandVolumeRequest) (*ControllerExpandVolumeResponse, error)
    }

NodeServer takes charge of volume mounting and unmounting.

// NodeServer is the server API for Node service.

type NodeServer interface {

// Format the volume and mount it to the temporary global directory

NodeStageVolume(context.Context, *NodeStageVolumeRequest) (*NodeStageVolumeResponse, error)

// Unmount the volume from the temporary global directory

NodeUnstageVolume(context.Context, *NodeUnstageVolumeRequest) (*NodeUnstageVolumeResponse, error)

//Bind-mount the volume from the temporary directory to the target directory

NodePublishVolume(context.Context, *NodePublishVolumeRequest) (*NodePublishVolumeResponse, error)

// Unmount the volume from the target directory

NodeUnpublishVolume(context.Context, *NodeUnpublishVolumeRequest) (*NodeUnpublishVolumeResponse, error)

// Obtain the capacity information of the storage volume

NodeGetVolumeStats(context.Context, *NodeGetVolumeStatsRequest) (*NodeGetVolumeStatsResponse, error)

// Resize the storage volume

NodeExpandVolume(context.Context, *NodeExpandVolumeRequest) (*NodeExpandVolumeResponse, error)

// Obtain NodeServer features, such as retrieving the storage volume capacity information

NodeGetCapabilities(context.Context, *NodeGetCapabilitiesRequest) (*NodeGetCapabilitiesResponse, error)

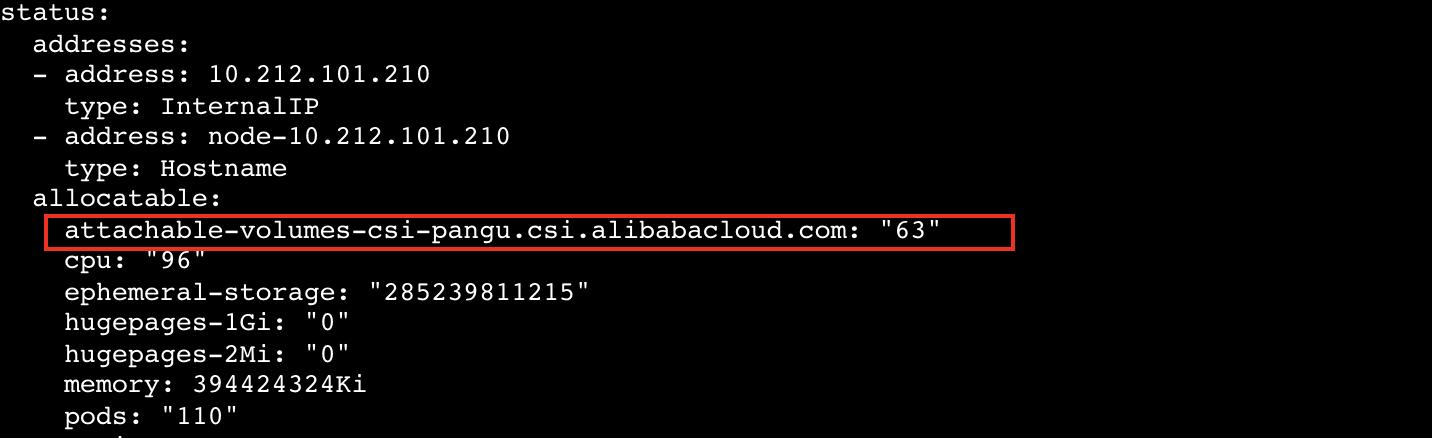

// Obtain CSI node information, such as the maximum number of supported volumes

NodeGetInfo(context.Context, *NodeGetInfoRequest) (*NodeGetInfoResponse, error)

}To be compatible with CSI standard, Kubernetes contains the following APIs:

    apiVersion: storage.k8s.io/v1beta1
    kind: CSINode
    metadata:
      name: node-10.212.101.210
    spec:
      drivers:
      - name: yodaplugin.csi.alibabacloud.com
        nodeID: node-10.212.101.210
        topologyKeys:
        - kubernetes.io/hostname
      - name: pangu.csi.alibabacloud.com
        nodeID: a5441fd9013042ee8104a674e4a9666a
        topologyKeys:
        - topology.pangu.csi.alibabacloud.com/zone

Functions:

1) Check whether the external CSI plug-in is successfully registered. After the Node-Driver-Registrar registers the plug-in with Kubelet, Kubelet will create a CSINode. Therefore, there is no need to create a CSINode explicitly.

2) Map the Node name in Kubernetes to the nodeID in the third-party storage system. Here, Kubelet will call the NodeGetInfo function of the external CSI plug-in NodeServer to obtain the nodeID.

3) Display the volume topology information. In CSINode, topologyKeys indicates the topology information of the storage node. The volume topology information helps Scheduler select a proper storage node during Pod scheduling.

    apiVersion: storage.k8s.io/v1beta1
    kind: CSIDriver
    metadata:
      name: pangu.csi.alibabacloud.com
    spec:
      # Whether the plugin supports volume attaching (VolumeAttach)
      attachRequired: true
      # Whether the CSI plug-in requires Pod information during the Mount phase
      podInfoOnMount: true
      # Specify the CSI-supported volume mode
      volumeLifecycleModes:
      - Persistent

Functions:

1) Simplify the external CSI plug-in discovery. The CSIDriver object is created by the cluster administrator, so users can learn which CSI plug-ins are in the environment through kubectl get csidriver.

2) Customize Kubernetes behaviors. For example, some external CSI plug-ins do not require the VolumeAttach operation. In this case, the .spec.attachRequired can be set to false.

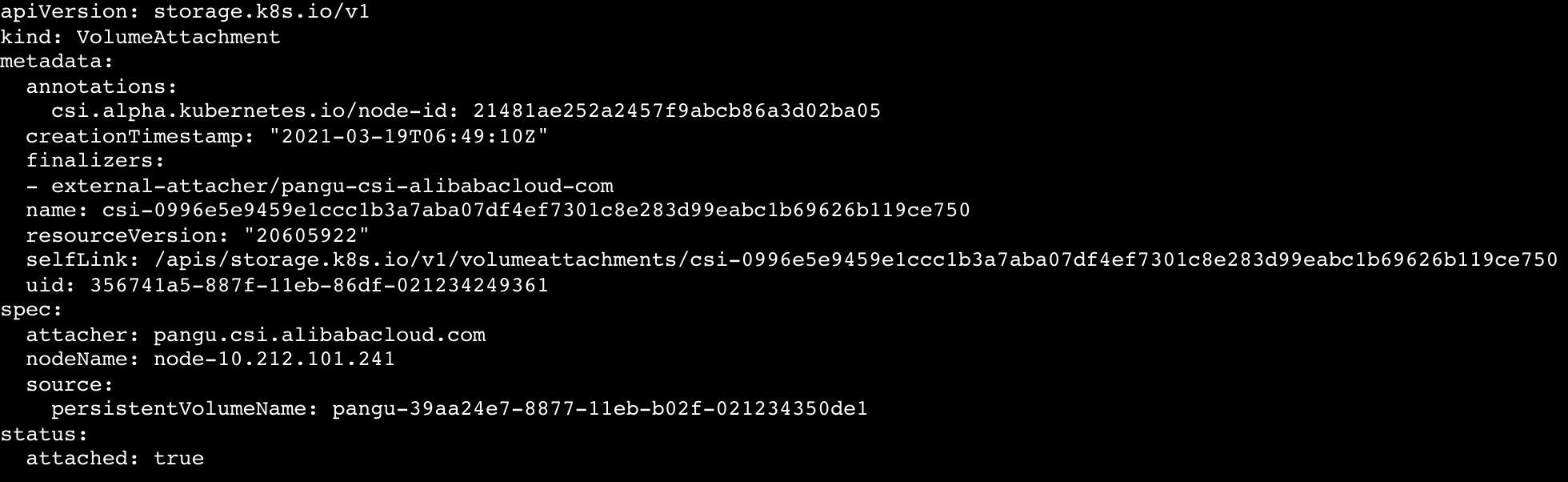

    apiVersion: storage.k8s.io/v1
    kind: VolumeAttachment
    metadata:
      annotations:
        csi.alpha.kubernetes.io/node-id: 21481ae252a2457f9abcb86a3d02ba05
      finalizers:
      - external-attacher/pangu-csi-alibabacloud-com
      name: csi-0996e5e9459e1ccc1b3a7aba07df4ef7301c8e283d99eabc1b69626b119ce750
    spec:
      attacher: pangu.csi.alibabacloud.com
      nodeName: node-10.212.101.241
      source:
        persistentVolumeName: pangu-39aa24e7-8877-11eb-b02f-021234350de1
    status:
      attached: true

Function:

The VolumeAttachment records the volume attaching, volume detaching, and node information.

The StorageClass contains the AllowedTopologies field:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: csi-pangu
    provisioner: pangu.csi.alibabacloud.com
    parameters:
      type: cloud_ssd
    volumeBindingMode: Immediate
    allowedTopologies:
    - matchLabelExpressions:
      - key: topology.pangu.csi.alibabacloud.com/zone
        values:
        - zone-1
        - zone-2

After the external CSI plug-in is deployed, each node is labeled with the [AccessibleTopology] value returned by the NodeGetInfo function. For more information, see the Node-Driver-Registrar section.
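How node labels relate to the allowedTopologies field can be illustrated with a small matcher. This is a sketch, not scheduler or provisioner code: `expression`, `term`, and `nodeAllowed` are hypothetical names, and it assumes that the expressions inside one term must all match while a node needs to satisfy only one term.

```go
package main

import "fmt"

const zoneKey = "topology.pangu.csi.alibabacloud.com/zone"

// expression and term are hypothetical, simplified mirrors of one
// matchLabelExpressions entry and one allowedTopologies entry.
type expression struct {
	key    string
	values []string
}

type term struct {
	matchLabelExpressions []expression
}

func contains(values []string, v string) bool {
	for _, x := range values {
		if x == v {
			return true
		}
	}
	return false
}

// termMatches requires every expression of the term to match the node labels.
func termMatches(labels map[string]string, t term) bool {
	if len(t.matchLabelExpressions) == 0 {
		return false
	}
	for _, e := range t.matchLabelExpressions {
		v, ok := labels[e.key]
		if !ok || !contains(e.values, v) {
			return false
		}
	}
	return true
}

// nodeAllowed reports whether a node with the given labels satisfies at least
// one term. An empty list is treated as "no restriction".
func nodeAllowed(labels map[string]string, allowed []term) bool {
	if len(allowed) == 0 {
		return true
	}
	for _, t := range allowed {
		if termMatches(labels, t) {
			return true
		}
	}
	return false
}

func main() {
	allowed := []term{{matchLabelExpressions: []expression{
		{key: zoneKey, values: []string{"zone-1", "zone-2"}},
	}}}
	fmt.Println(nodeAllowed(map[string]string{zoneKey: "zone-1"}, allowed))
	fmt.Println(nodeAllowed(map[string]string{zoneKey: "zone-3"}, allowed))
}
```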

External Provisioner sets the AccessibilityRequirements in the request parameter before calling the CreateVolume interface of the CSI plug-in:

For WaitForFirstConsumer:

For Immediate:

Based on the scheduler of community version 1.18

The scheduling process of the scheduler consists of three steps:

The Filter Phase

This phase processes the binding relationships between PVCs and PVs and dynamically allocates PVs (Dynamic Provisioning). In addition, the scheduler considers the node affinity of the PVs used by Pods during scheduling. The detailed process is as follows:

1) Skip the Pods without PVC.

2) FindPodVolumes

Obtain the boundClaims, claimsToBind, and unboundClaimsImmediate of the Pod.

If len(claimsToBind) is not 0

The Score phase is not discussed.

The Assume Phase

The scheduler will first assume the PV and PVC and then the Pod.

1) Make a deep copy of the Pod to be scheduled.

2) AssumePodVolumes (for PVCs of the WaitForFirstConsumer type)

The Bind Phase

BindPodVolumes:

Check the PV and PVC status:

Storage volume expansion was covered in the External Resizer section, so it is not repeated here. Users only need to edit the .Spec.Resources.Requests.Storage field of the PVC. Note that a volume can only be expanded, not shrunk.

If PV expansion fails, the storage value in the PVC spec cannot be changed back to its original value, because only expansion is allowed. Refer to the PVC reduction method provided on the Kubernetes official website.
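The expand-only rule amounts to a simple comparison of the current and requested sizes. The sketch below is illustrative only: `needsExpansion`, `errShrink`, and `gib` are hypothetical names, and sizes are plain byte counts instead of Kubernetes quantity values.

```go
package main

import (
	"errors"
	"fmt"
)

const gib int64 = 1 << 30

var errShrink = errors.New("shrinking a volume is not supported")

// needsExpansion compares the current and requested sizes in bytes. It returns
// an error for a shrink request, true when the volume has to grow, and false
// when the request is a no-op.
func needsExpansion(currentBytes, requestedBytes int64) (bool, error) {
	if requestedBytes < currentBytes {
		return false, errShrink
	}
	return requestedBytes > currentBytes, nil
}

func main() {
	grow, err := needsExpansion(40*gib, 60*gib)
	fmt.Println(grow, err)
	_, err = needsExpansion(40*gib, 20*gib)
	fmt.Println(err)
}
```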

Check the Node Driver Registrar section.

The storage vendor must implement the NodeGetVolumeStats interface of the CSI plug-in. Kubelet will call this function and display it on its metrics:

Secret can be added to a CSI volume to process the private data required in different processes. Currently, the following parameters are supported by StorageClass:

The Secret is passed in the parameters of the corresponding CSI interface. For example, for the CreateVolume interface, it is included in the CreateVolumeRequest.Secrets parameter.

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nginx-example
    spec:
      selector:
        matchLabels:
          app: nginx
      serviceName: "nginx"
      volumeClaimTemplates:
      - metadata:
          name: html
        spec:
          accessModes:
          - ReadWriteOnce
          volumeMode: Block
          storageClassName: csi-pangu
          resources:
            requests:
              storage: 40Gi
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeDevices:
            - devicePath: "/dev/vdb"
              name: html

A third-party storage vendor must implement the NodePublishVolume interface. Kubernetes provides a toolkit for block devices (“k8s.io/kubernetes/pkg/util/mount”). In the NodePublishVolume phase, the EnsureBlock and MountBlock functions of the toolkit can be called.

Due to space constraints, the principles are not covered in more detail here. For those who are interested, see the official introductions: Volume Snapshot and Volume Cloning.

This article first gives a general introduction to the CSI core processes. It then analyzes the CSI standard in depth from the perspectives of CSI Sidecar components, CSI interfaces, and API objects. Every CSI volume on Kubernetes goes through the processes described at the beginning of this article, so a container storage problem in an environment can usually be traced to a fault in one of them. This article lays out those processes to help programmers troubleshoot such problems.
