By Sun Zhiheng (Huizhi), Development Engineer at Alibaba Cloud

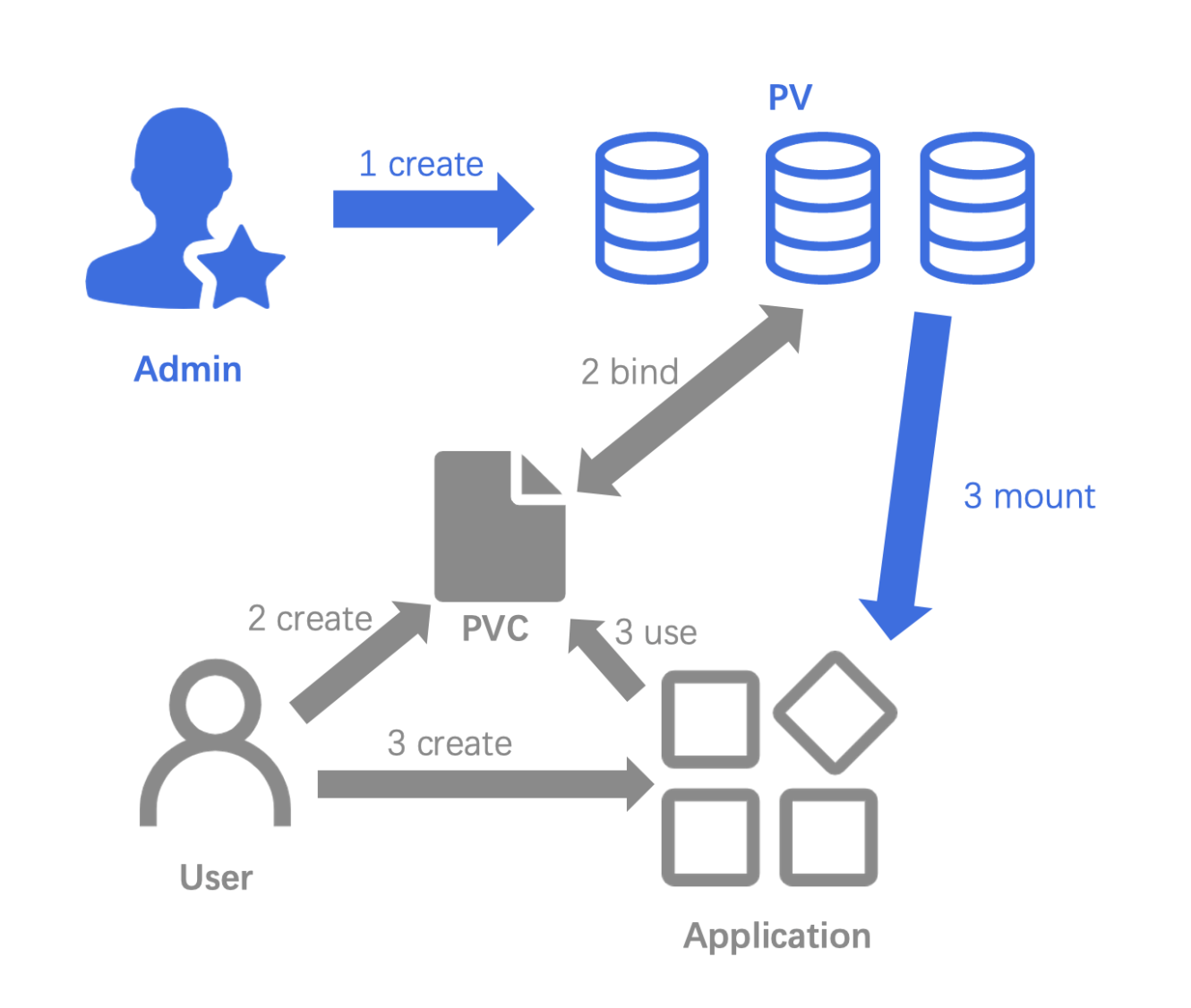

Before explaining the Kubernetes storage process, let's review the basic concepts of persistent storage in Kubernetes.

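Kubernetes introduces PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs) so that applications and developers can request storage resources without needing to know the details of the underlying storage devices. A PV can be created in one of two ways: statically, by a cluster administrator, or dynamically, on demand through a StorageClass and a provisioner.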

Let's use the shared storage of a network file system (NFS) as an example to explain the differences between the two PV creation methods.

The following figure shows the process of statically creating a PV.

Step 1) A cluster administrator creates an NFS PV. NFS is a type of in-tree storage natively supported by Kubernetes. The YAML file is as follows:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 192.168.4.1
        path: /nfs_storage

Step 2) A user creates a PVC. The YAML file is as follows:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi

Run the kubectl get pvc command to check that the PV and PVC are bound.

    [root@huizhi ~]# kubectl get pvc
    NAME      STATUS   VOLUME               CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-pvc   Bound    nfs-pv-no-affinity   10Gi       RWO                           4s

Step 3) The user creates an application and uses the PVC created in Step 2.

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-nfs
    spec:
      containers:
        - image: nginx:alpine
          imagePullPolicy: IfNotPresent
          name: nginx
          volumeMounts:
            - mountPath: /data
              name: nfs-volume
      volumes:
        - name: nfs-volume
          persistentVolumeClaim:
            claimName: nfs-pvc

The NFS remote storage is mounted to the /data directory of the NGINX container in the pod.

To dynamically create a PV, ensure that the cluster is deployed with an NFS client provisioner and the corresponding StorageClass.

Compared with static PV creation, dynamic PV creation requires no intervention from the cluster administrator. The following figure shows the process of dynamically creating a PV.

The cluster administrator only needs to ensure that the environment contains an NFS-related StorageClass.

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: nfs-sc
    provisioner: example.com/nfs
    mountOptions:
      - vers=4.1

Step 1) The user creates a PVC and sets storageClassName to the name of the NFS-related StorageClass.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: nfs
      annotations:
        # Deprecated beta annotation; keep it consistent with storageClassName.
        volume.beta.kubernetes.io/storage-class: "nfs-sc"
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Mi
      storageClassName: nfs-sc

Step 2) The NFS client provisioner in the cluster dynamically creates the corresponding PV. A PV is created in the environment and bound to the PVC.

    [root@huizhi ~]# kubectl get pv
    NAME                                       CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS   CLAIM         REASON   AGE
    pvc-dce84888-7a9d-11e6-b1ee-5254001e0c1b   10Mi       RWX           Delete          Bound    default/nfs            4s

Step 3) The user creates an application and uses the PVC created in Step 1. This step is the same as Step 3 of statically creating a PV.

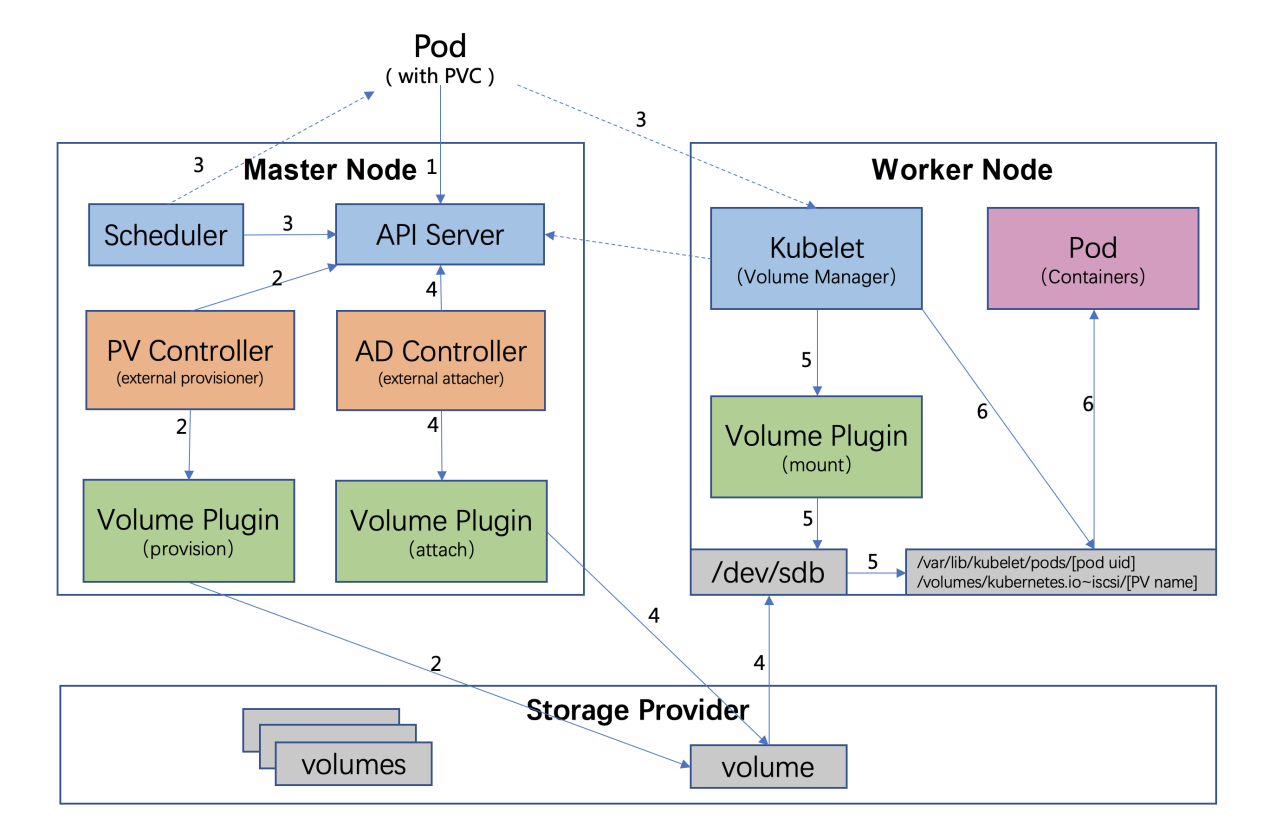

The following figure shows the process of Kubernetes persistent storage. This figure is taken from the cloud native storage courses given by Junbao.

Let's take a look at the steps involved in the process.

1) A user creates a pod that contains a PVC, which uses a dynamic PV.

2) Scheduler schedules the pod to an appropriate worker node based on the pod configuration, node status, and PV configuration.

3) PV Controller detects that the PVC used by the pod is in the Pending state and calls Volume Plugin (in-tree) to create the volume and the corresponding PV object. For out-of-tree storage, this step is implemented by External Provisioner.

4) AD Controller detects that the pod and PVC are in the 'To Be Attached' state and calls Volume Plugin to attach the storage device to the target worker node.

5) On the worker node, Volume Manager in the kubelet waits until the storage device is attached and uses Volume Plugin to mount the device to the global directory `/var/lib/kubelet/pods/[pod uid]/volumes/kubernetes.io~iscsi/[PV name]` (iscsi is used as an example).

6) The kubelet uses Docker to start the containers in the pod and uses the bind mount method to map the volume that is mounted to the global directory to the containers.

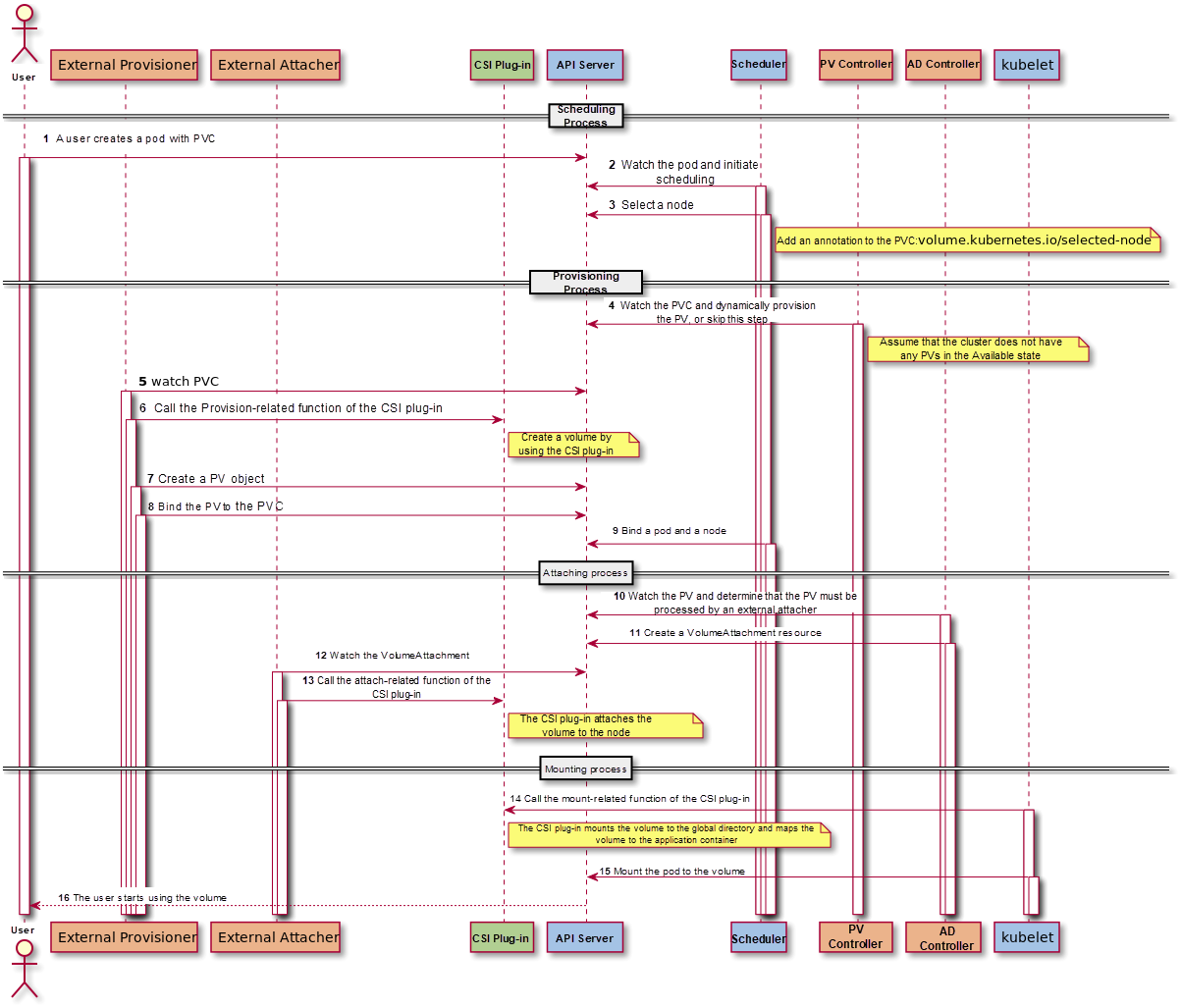

The following diagram shows a detailed process:

The persistent storage process varies slightly depending on different Kubernetes versions. This article uses Kubernetes 1.14.8 as an example.

The preceding process map shows the three stages from when a volume is created to when it is used by applications: Provision/Delete, Attach/Detach, and Mount/Unmount.
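As a rough summary of these three stages, the sketch below maps each stage to the component that drives it and the CSI calls involved for out-of-tree storage. It is an illustrative model, not Kubernetes code: the `STAGES` table and the two helper functions are hypothetical names, and `DeleteVolume` and `ControllerPublishVolume` are CSI RPC names that this article does not mention explicitly.

```python
from typing import NamedTuple


class Stage(NamedTuple):
    component: str      # component that drives the stage
    forward: tuple      # CSI RPCs called while bringing a volume up
    reverse: tuple      # CSI RPCs called while tearing a volume down


# Stages in the order a volume passes through them on its way to a pod.
STAGES = {
    "Provision/Delete": Stage(
        "PV Controller / External Provisioner",
        ("CreateVolume",),
        ("DeleteVolume",),
    ),
    "Attach/Detach": Stage(
        "AD Controller / External Attacher",
        ("ControllerPublishVolume",),
        ("ControllerUnpublishVolume",),
    ),
    "Mount/Unmount": Stage(
        "Volume Manager (kubelet)",
        ("NodeStageVolume", "NodePublishVolume"),
        ("NodeUnpublishVolume", "NodeUnstageVolume"),
    ),
}


def bring_up_order() -> list:
    """CSI calls in the order they occur when a pod starts using a new volume."""
    return [rpc for stage in STAGES.values() for rpc in stage.forward]


def tear_down_order() -> list:
    """CSI calls in the order they occur when the volume is released and deleted."""
    return [rpc for stage in reversed(list(STAGES.values())) for rpc in stage.reverse]
```

Reading the table top to bottom gives the bring-up path; reading it bottom to top gives the teardown path, which is why a storage fault can usually be narrowed down to one stage.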

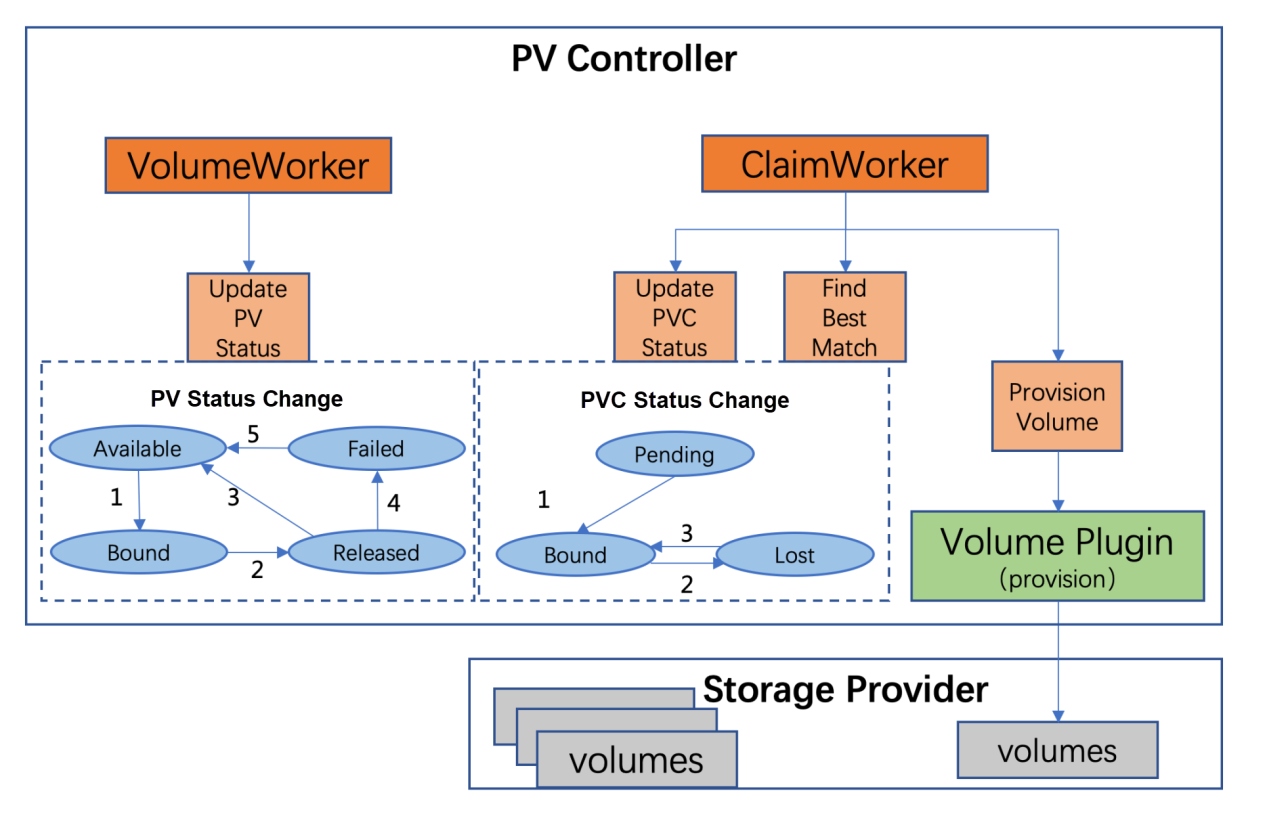

PV Controller Workers

PV Status Changes (UpdatePVStatus)

PVC Status Changes (UpdatePVCStatus)

Provisioning Process (Assuming a User Creates a PVC)

Static volume process (FindBestMatch): PV Controller selects a PV in the Available state in the environment to match to the new PVC.

PV Controller first determines whether binding must be delayed:

1. PV Controller checks whether the PVC's annotation includes volume.kubernetes.io/selected-node. If yes, the PVC has already been scheduled to a node by the scheduler (the PVC belongs to ProvisionVolume). In this case, binding is not delayed.
2. If the PVC's annotation does not include volume.kubernetes.io/selected-node and no StorageClass exists, binding is not delayed. If a StorageClass exists, PV Controller checks the VolumeBindingMode field. If it is set to WaitForFirstConsumer, binding is delayed. If it is set to Immediate, binding is not delayed.

When the selected PV is bound to the PVC, the following updates are made:

3. The annotation pv.kubernetes.io/bound-by-controller: "yes" is added to the PV.
4. The .Spec.VolumeName of the PVC is updated to the name of the PV.
5. .Status.Phase of the PVC is updated to Bound.
6. The annotations pv.kubernetes.io/bound-by-controller: "yes" and pv.kubernetes.io/bind-completed: "yes" are added to the PVC.

Dynamic volume process (ProvisionVolume): The dynamic provisioning process is initiated if no appropriate PV exists in the environment.

Before provisioning:

1) PV Controller determines whether the StorageClass used by the PVC is in-tree or out-of-tree by checking whether its provisioner has the kubernetes.io/ prefix.
2) PV Controller updates the PVC's annotation as follows: claim.Annotations["volume.beta.kubernetes.io/storage-provisioner"] = storageClass.Provisioner.

In-tree provisioning: the created PV carries the annotations "pv.kubernetes.io/bound-by-controller"="yes" and "pv.kubernetes.io/provisioned-by"=plugin.GetPluginName().

Out-of-tree provisioning:

2) External Provisioner checks whether claim.Annotations["volume.beta.kubernetes.io/storage-provisioner"] in the PVC is the same as its provisioner name. The provisioner name is set through the --provisioner parameter when External Provisioner starts.
3) If VolumeMode of the PVC is set to Block, External Provisioner checks whether it supports block devices.
4) External Provisioner calls the Provision function and calls the CreateVolume interface of the CSI storage plug-in through gRPC. External Provisioner creates a PV to represent the volume and binds the PV to the PVC.

The process of deleting volumes is the reverse of the provisioning process.
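The delayed-binding decision and the in-tree check described in this section can be sketched as two small functions. This is a simplified model written for illustration, not the PV Controller source: the function names are hypothetical, and the StorageClass is reduced to its VolumeBindingMode value, with `None` standing for "the PVC has no StorageClass".

```python
from typing import Optional

SELECTED_NODE_ANNOTATION = "volume.kubernetes.io/selected-node"
IN_TREE_PREFIX = "kubernetes.io/"


def should_delay_binding(pvc_annotations: dict, volume_binding_mode: Optional[str]) -> bool:
    """Return True if binding of the PVC should be delayed.

    volume_binding_mode is the StorageClass's VolumeBindingMode,
    or None when the PVC has no StorageClass (an assumption of this sketch).
    """
    # The scheduler has already picked a node: bind or provision right away.
    if SELECTED_NODE_ANNOTATION in pvc_annotations:
        return False
    # No StorageClass: nothing can request delayed binding.
    if volume_binding_mode is None:
        return False
    # Only WaitForFirstConsumer delays binding; Immediate does not.
    return volume_binding_mode == "WaitForFirstConsumer"


def is_in_tree_provisioner(provisioner: str) -> bool:
    """In-tree provisioners are identified by the kubernetes.io/ prefix."""
    return provisioner.startswith(IN_TREE_PREFIX)
```

For example, the `example.com/nfs` provisioner used in the StorageClass earlier in this article lacks the prefix, so it is handled by an external provisioner rather than an in-tree plug-in.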

When a user deletes a PVC, PV Controller changes PV.Status.Phase to Released.

When PV.Status.Phase is set to Released, PV Controller checks the value of Spec.PersistentVolumeReclaimPolicy. If it is set to Retain, no further action is taken. If it is set to Delete, the volume is deleted either by the in-tree volume plug-in or, for out-of-tree storage, by External Provisioner.

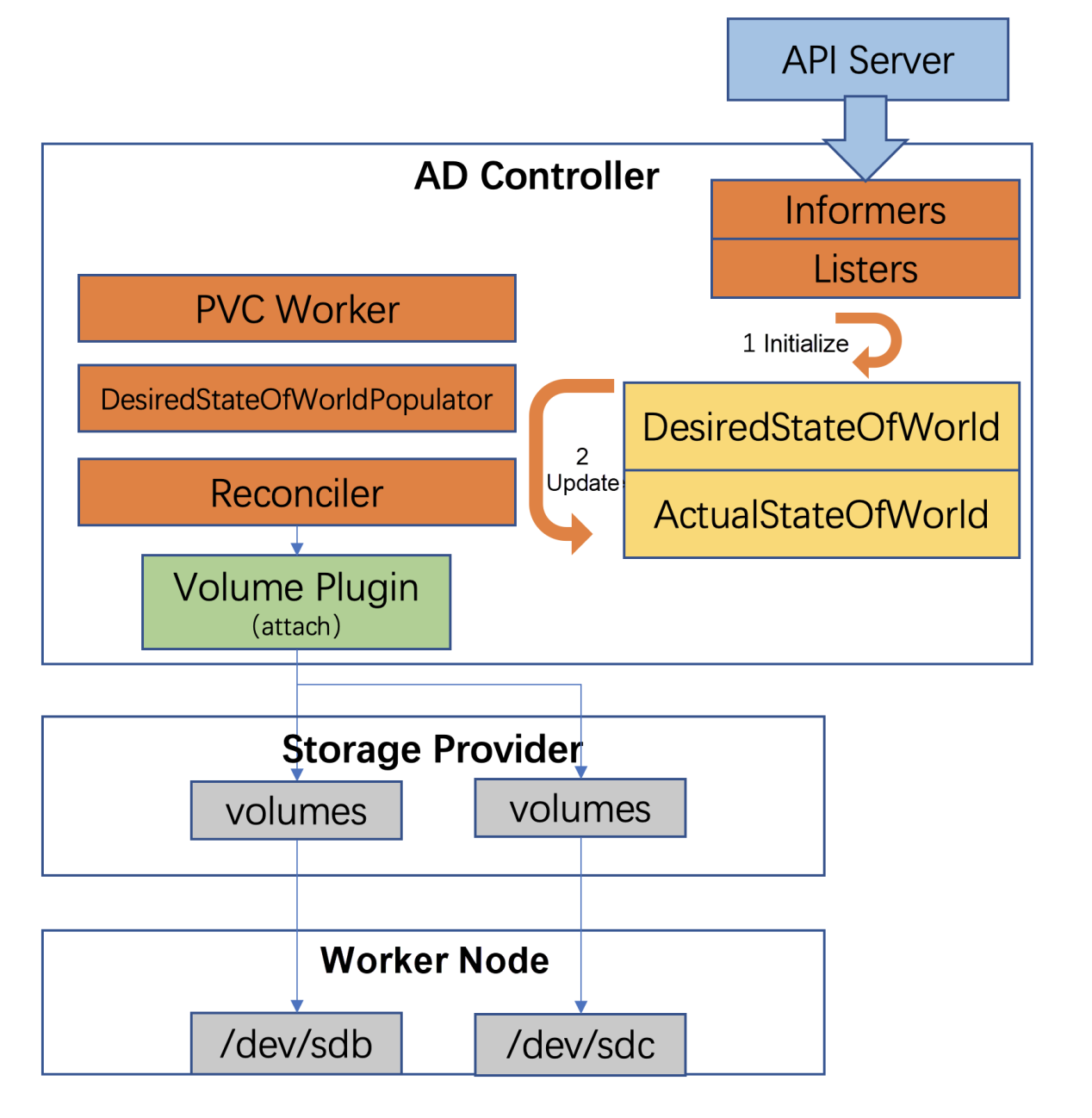

Either the kubelet or AD Controller performs the Attach and Detach operations. If --enable-controller-attach-detach is enabled in the startup parameters of the kubelet (the default), AD Controller performs these operations. Otherwise, the kubelet performs them. The following section explains the Attach and Detach operations using AD Controller as an example.

Two Core Variables of AD Controller

The Attaching Process

AD Controller initializes DSW (DesiredStateOfWorld) and ASW (ActualStateOfWorld) based on the resource information in the cluster.

AD Controller has three components that periodically update DSW and ASW.

Detaching Process

When a pod is deleted, AD Controller checks whether the node where the pod runs has the volumes.kubernetes.io/keep-terminated-pod-volumes label. If yes, no operations are performed. If no, the volume is removed from DSW.

a) In-tree Detaching:

1) AD Controller calls the NewDetacher method of the AttachableVolumePlugin interface to obtain a new detacher.
2) AD Controller calls the Detach function of the detacher to perform the Detach operation on the volume.
3) AD Controller updates ASW.

b) Out-of-tree Detaching:

1) AD Controller calls the in-tree CSIAttacher to delete the related VolumeAttachment object.
2) External Attacher watches the VolumeAttachment (VA) resource in the cluster. If a data volume needs to be detached, External Attacher calls the Detach function and calls the ControllerUnpublishVolume interface of the CSI plug-in through gRPC.
3) AD Controller updates ASW.
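The way AD Controller drives ASW toward DSW can be illustrated with a small reconciliation function. This is a minimal sketch under stated assumptions: both states are modeled as plain sets of hypothetical (volume, node) pairs, every operation is assumed to succeed, and the real controller tracks far more detail than this.

```python
def reconcile_attachments(desired: set, actual: set):
    """Compute the operations that move the actual state to the desired state.

    desired: (volume, node) pairs that should be attached (DSW).
    actual:  (volume, node) pairs that are currently attached (ASW).
    Returns the ordered operations and the new actual state.
    """
    operations = []
    # Attached but no longer desired: detach first.
    for volume, node in sorted(actual - desired):
        operations.append(("detach", volume, node))
    # Desired but not yet attached: attach.
    for volume, node in sorted(desired - actual):
        operations.append(("attach", volume, node))
    # Assumption of this sketch: all operations succeed, so ASW now equals DSW.
    return operations, set(desired)


dsw = {("pv-a", "node-1"), ("pv-b", "node-2")}
asw = {("pv-a", "node-1"), ("pv-c", "node-1")}
ops, asw = reconcile_attachments(dsw, asw)
```

Here `pv-c` is detached from `node-1` and `pv-b` is attached to `node-2`, while `pv-a` is left untouched. Running the function again with the updated ASW yields no operations, which is the steady state the periodic reconciliation aims for.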

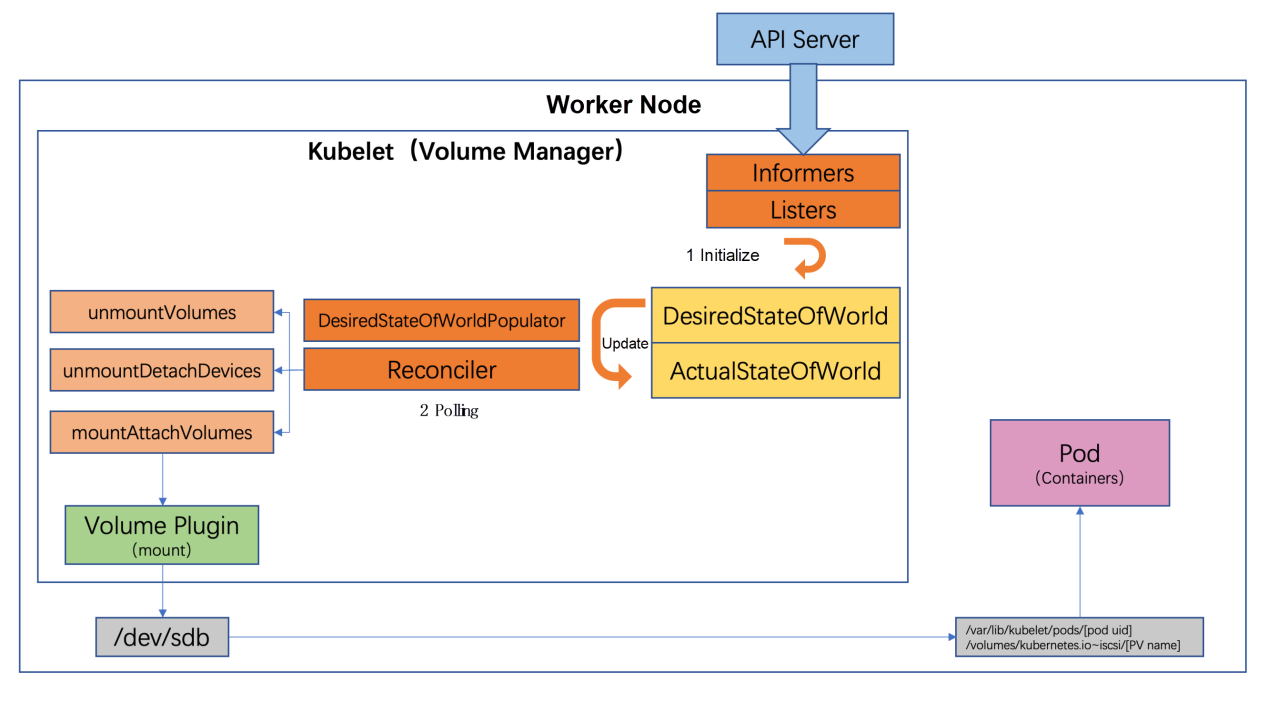

Volume Manager

It has two core variables:

The mounting and unmounting processes are as follows:

The global directory (global mount path) exists because a block device is mounted to the Linux system only once, whereas in Kubernetes a PV may be mounted to multiple pod instances on a node. A formatted block device is therefore mounted once to a temporary global directory on the node. Then, the global directory is mounted to the corresponding directory of each pod by using the bind mount technology of Linux. In the preceding process map, the global directory is /var/lib/kubelet/pods/[pod uid]/volumes/kubernetes.io~iscsi/[PV name].

VolumeManager initializes DSW and ASW based on resource information in the cluster.

VolumeManager has two components that periodically update DSW and ASW.

UnmountVolumes ensures that the volumes are unmounted after the pod is deleted. All the pods in ASW are traversed. If any pod is not in DSW (indicating this pod has been deleted), the following operations are performed (VolumeMode=FileSystem is used as an example):

1) Remove all bind-mounts by calling the TearDown interface of Unmounter, or calling the NodeUnpublishVolume interface of the CSI plug-in in out-of-tree mode.

2) Unmount volume by calling the UnmountDevice function of DeviceUnmounter, or calling the NodeUnstageVolume interface of the CSI plug-in in out-of-tree mode.

3) ASW is updated.

MountAttachVolumes ensures that the volumes to be used by the pod are successfully mounted. All the pods in DSW are traversed. If any pod is not in ASW (the directory is to be mounted and mapped to the pod), the following operations are performed (VolumeMode=FileSystem is used as an example):

1) Wait until the volume is attached to the node by External Attacher or the kubelet.

2) Mount the volume to the global directory by calling the MountDevice function of DeviceMounter or calling the NodeStageVolume interface of the CSI plug-in in out-of-tree mode.

3) Update ASW if the volume is mounted to the global directory.

4) Mount the volume to the pod through bind-mount by calling the SetUp interface of Mounter or calling the NodePublishVolume interface of the CSI plug-in in out-of-tree mode.

5) Update ASW.

UnmountDetachDevices ensures that volumes are unmounted. All UnmountedVolumes in ASW are traversed. If any UnmountedVolumes do not exist in DSW (indicating these volumes are no longer used), the following operations are performed:

1) Unmount volume by calling the UnmountDevice function of DeviceUnmounter, or calling the NodeUnstageVolume interface of the CSI plug-in in out-of-tree mode.

2) ASW is updated.
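The three operations above can be modeled as one reconciliation pass on a node. The sketch below is a simplification for illustration only: it records the out-of-tree CSI calls instead of mounting anything, omits the wait for the volume to be attached, unstages a device only in the final phase, and uses hypothetical names (`ActualState`, `reconcile_node`) that do not come from the kubelet source.

```python
from dataclasses import dataclass, field


@dataclass
class ActualState:
    published: set = field(default_factory=set)  # (pod, volume) bind mounts
    staged: set = field(default_factory=set)     # volumes mounted at the global directory


def reconcile_node(desired: set, actual: ActualState) -> list:
    """Run one pass and return the CSI calls it would issue, in order.

    desired is the DSW for this node: the (pod, volume) pairs that should be mounted.
    """
    calls = []
    # UnmountVolumes: remove bind mounts of pods that are no longer desired.
    for pod, volume in sorted(actual.published - desired):
        calls.append(("NodeUnpublishVolume", volume, pod))
        actual.published.discard((pod, volume))
    # MountAttachVolumes: stage the device once, then bind-mount it per pod.
    for pod, volume in sorted(desired - actual.published):
        if volume not in actual.staged:
            calls.append(("NodeStageVolume", volume, None))
            actual.staged.add(volume)
        calls.append(("NodePublishVolume", volume, pod))
        actual.published.add((pod, volume))
    # UnmountDetachDevices: unstage devices that no pod uses any more.
    in_use = {volume for _, volume in actual.published}
    for volume in sorted(actual.staged - in_use):
        calls.append(("NodeUnstageVolume", volume, None))
        actual.staged.discard(volume)
    return calls


state = ActualState()
first = reconcile_node({("pod-1", "pv-a"), ("pod-2", "pv-a")}, state)
second = reconcile_node({("pod-2", "pv-a")}, state)
third = reconcile_node(set(), state)
```

In this run, `pv-a` is staged once and published twice, deleting `pod-1` only removes its bind mount, and the device is unstaged only after the last pod is gone. This mirrors why the global directory exists.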

This article introduces the basics and usage of Kubernetes persistent storage and analyzes the internal storage process of Kubernetes. In Kubernetes, all storage types require the preceding processes, but the Attach and Detach operations are not performed in certain scenarios. Any storage problem in an environment can be attributed to a fault in one of these processes.

Container storage is complex, especially in private cloud environments. However, through this process, it's possible to seize more opportunities while braving more challenges. Currently, competition is fierce in the storage landscape of China's private cloud market. Our agile PaaS container team is always looking for talented professionals to join us and help build a better future.

