This article is adapted from a keynote presentation, "Apache RocketMQ EventBridge: Why Your GenAI Needs EDA?", delivered at the Community Over Code Asia 2025 conference by Shen Lin, an Apache RocketMQ PMC member and the head of Alibaba Cloud EventBridge, who specializes in EDA research.

Event-driven architecture (EDA) is event-centric and responds to changes in real time. It breaks from the passive, traditional "request-response" model, instead employing a fully automated "sense → trigger → act" flow. In AI systems, data streams, model training, inference, and external feedback can all function as "events" that trigger automated decisions and coordinated actions. EDA acts as the "nervous system" for the AI era, enabling AI to not only "think" but also "sense" and "act." It enhances a system's real-time capabilities, flexibility, and level of automation, making it a critical foundation for building intelligent systems. In short: AI gives a system its "brain," while EDA builds its "nerves."

This article explores the core values of EDA in the age of AI and the critical problems it helps solve.

The mechanism behind AI hallucinations is complex, but it can be broadly analyzed from two stages: training and inference.

• During the training stage:

• During the inference stage:

To address AI hallucinations, three primary methods are commonly used today:

• Fine-Tuning: This involves retraining a model with more specific, domain-relevant data to improve its accuracy. While effective in many scenarios, this approach is demanding: without significant investment in people and computing power, it is difficult to implement, especially in fields where knowledge changes frequently and the model must be adjusted continuously.

• Prompt Engineering: This approach involves carefully crafting detailed prompts that give the LLM the necessary context. However, designing a good prompt is a highly skilled task whose results vary from user to user, making it something of a "manual art." Because the model's weights remain unchanged, prompt optimization can suppress some hallucinations, but it only masks them rather than fundamentally solving the issue.

• Retrieval-Augmented Generation (RAG): Can we automatically generate a high-quality prompt that contains the key information an LLM needs? That is essentially what RAG does, so let's explore how it works.

Simply put, RAG works by retrieving relevant context and providing it to the LLM along with the original query. For instance, if we ask DeepSeek, "Which speakers at this Apache summit discussed the topic of RAG?", it wouldn't know the answer because it hasn't been trained on this recent data. However, if we provide it with a data package containing the speakers' presentation materials, DeepSeek gains highly relevant context, enabling it to answer accurately.

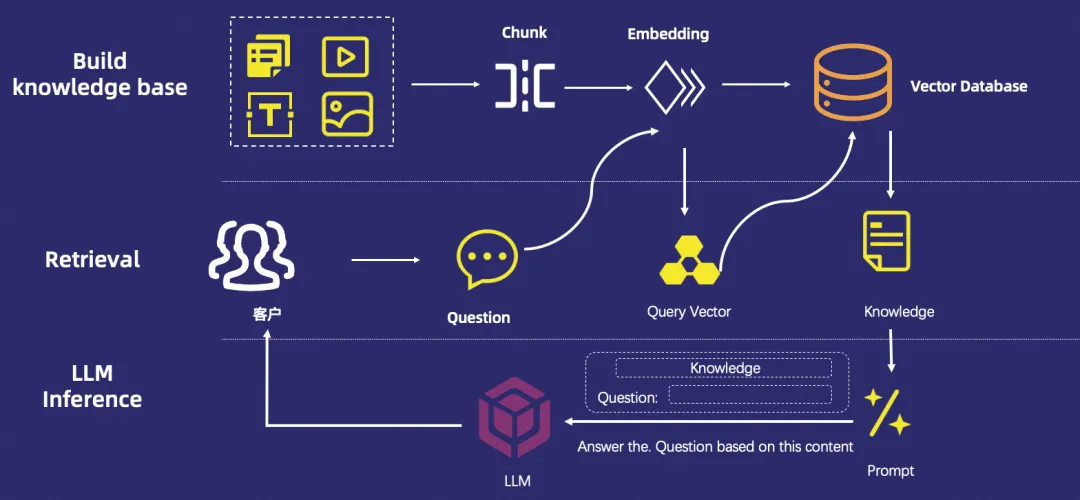

How does RAG achieve this? The process has two main stages:

• Indexing: First, the source data must be stored in advance. To quickly find data relevant to a query within a large dataset, we use vectorization (embedding), which represents an object as a set of numerical values, one per feature dimension. The closer two vectors are in this multi-dimensional space, the more similar the objects they represent. Therefore, we vectorize the data beforehand and store it in a vector database.

• Retrieval and Generation: Then, when we query the LLM, we first vectorize the question and use the resulting vector to find the most relevant source data in the vector database. Finally, this retrieved knowledge is passed to the LLM as context along with the original question, allowing it to generate a more precise answer.
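To make the two stages concrete, here is a minimal Python sketch, assuming an in-memory list in place of a real vector database and a sparse bag-of-words vector in place of a learned embedding model. The names `embed`, `InMemoryVectorStore`, and `build_prompt` are hypothetical; only the shape of the flow (vectorize ahead of time, search by cosine similarity, pass the top matches to the LLM as context) mirrors the description above.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field

Vector = dict[str, float]


def embed(text: str) -> Vector:
    """Toy embedding: a unit-length bag-of-words vector (one dimension per word).

    A real pipeline would call an embedding model; this stand-in only captures
    word overlap, which is enough to illustrate nearest-neighbour retrieval.
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; both vectors are unit length, so this is just the dot product."""
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


@dataclass
class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: (vector, original text) rows."""
    rows: list[tuple[Vector, str]] = field(default_factory=list)

    def add(self, text: str) -> None:  # indexing: vectorize ahead of time and store
        self.rows.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:  # retrieval: k nearest texts
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Generation input: retrieved context plus the original question, for the LLM."""
    context = "\n".join(f"- {chunk}" for chunk in store.search(question, k))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {question}"


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("EventBridge is a serverless event bus that routes events between services.")
    store.add("RocketMQ is a distributed messaging and streaming platform.")
    store.add("Vector databases store embeddings for similarity search.")
    print(build_prompt("What is EventBridge?", store, k=1))
```

The string returned by `build_prompt` is what would be sent to the LLM; swapping in a real embedding model and vector database changes the quality of the matches but not the structure of the flow.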

From this process, we can see two clear advantages of RAG:

• It saves costs and helps protect data privacy, because the knowledge base does not need to be used to train the large model.

• It significantly lowers the probability of AI hallucinations without relying on the "manual artistry" of prompt engineering.

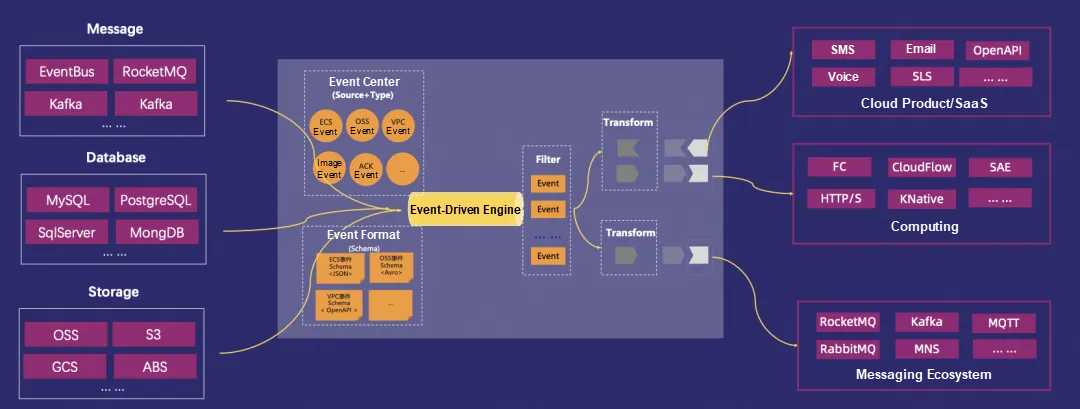

To understand why EventBridge is well-suited for RAG, let's first look at what EventBridge is.

• The EventBridge model is quite straightforward: it easily ingests external data in a standardized event format, integrates it using an event schema, and can optionally store it in an event bus. The events are then filtered or transformed before being pushed to downstream services.

• This workflow perfectly matches the three key requirements of a RAG pipeline: ingesting rich upstream data, performing custom chunking and vectorization, and persisting the results to various vector databases.
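The ingest, filter, transform, and push flow can be sketched in a few lines of Python. This is a hypothetical illustration, not the EventBridge API: the event uses CloudEvents-style fields (`id`, `source`, `type`, `data`), while the pattern syntax, the event type string, the bucket name, and the `EventBus`/`EventRule` classes are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Event = dict[str, Any]


def matches(pattern: dict[str, Any], event: dict[str, Any]) -> bool:
    """True if the event satisfies every key in the pattern.

    A list in the pattern means "any of these values"; a nested dict is matched
    recursively. This illustrates pattern filtering in general, not any product's syntax.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif isinstance(expected, list):
            if actual not in expected:
                return False
        elif actual != expected:
            return False
    return True


@dataclass
class EventRule:
    pattern: dict[str, Any]            # which events this rule accepts
    transform: Callable[[Event], Any]  # reshape the event for the target
    target: Callable[[Any], None]      # downstream service, e.g. a chunking job


@dataclass
class EventBus:
    rules: list[EventRule] = field(default_factory=list)

    def put(self, event: Event) -> int:
        """Push the event to every rule whose pattern matches; return how many fired."""
        fired = 0
        for rule in self.rules:
            if matches(rule.pattern, event):
                rule.target(rule.transform(event))
                fired += 1
        return fired


if __name__ == "__main__":
    to_vectorize: list[dict] = []
    bus = EventBus([EventRule(
        pattern={"type": ["oss:ObjectCreated:PutObject"], "data": {"bucket": ["eventbridge-docs"]}},
        transform=lambda e: {"bucket": e["data"]["bucket"], "key": e["data"]["key"]},
        target=to_vectorize.append,
    )])
    doc_event = {"id": "1", "source": "acs.oss", "type": "oss:ObjectCreated:PutObject",
                 "data": {"bucket": "eventbridge-docs", "key": "guide/overview.md"}}
    print(bus.put(doc_event), to_vectorize)
```

Keeping the filter and the transform as data attached to a rule, rather than code inside each consumer, is what lets new downstream targets (another vector database, another chunking strategy) be added without touching the upstream source.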

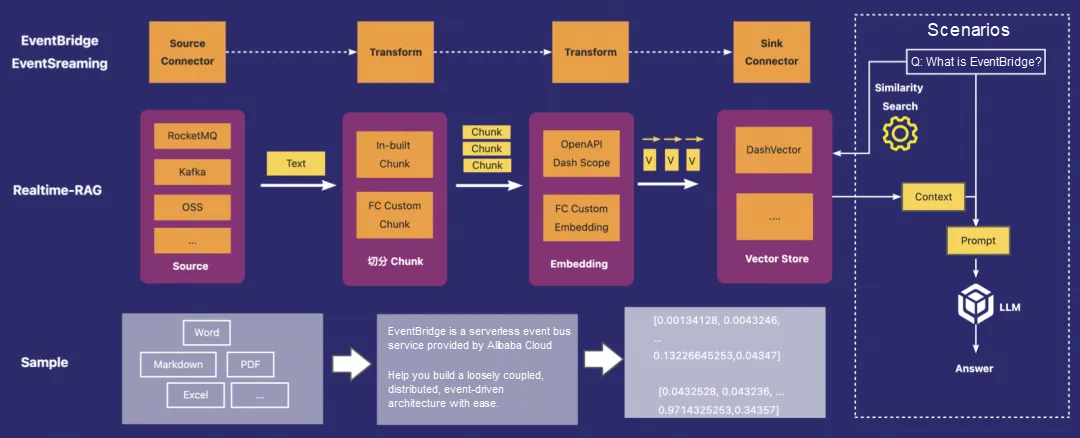

Let's take an example scenario: building an intelligent Q&A bot for EventBridge-related questions.

• First, we use an EventBridge event stream to process EventBridge documentation stored in an upstream OSS bucket. This involves chunking the documents, generating embeddings (vectorization), and storing the results in a vector database.

• Once this is done, when a user asks the bot, "What is EventBridge?", the bot first vectorizes the question, searches the vector database for the most relevant content, and passes this context to an LLM. The LLM can then provide a highly accurate answer based on the retrieved material, reducing hallucinations.
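The chunking step in this scenario might look like the following sketch. It assumes fixed-size character windows with overlap (real pipelines often split on tokens, sentences, or headings instead) and replaces the OSS download with an injected `read_object` function; `chunk_text`, `handle_object_created`, and the event fields are hypothetical. Each resulting chunk would then be embedded and written to the vector database, as in the indexing stage described earlier.

```python
from typing import Callable


def chunk_text(text: str, size: int = 500, overlap: int = 100) -> list[str]:
    """Split text into windows of `size` characters that share `overlap` characters.

    The overlap keeps a sentence that straddles a boundary retrievable from either chunk.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):  # this window already reaches the end
            break
    return chunks


def handle_object_created(event: dict, read_object: Callable[[str, str], str],
                          size: int = 500, overlap: int = 100) -> list[tuple[str, str]]:
    """Turn an object-created event into (chunk_id, chunk) pairs ready for embedding."""
    bucket, key = event["data"]["bucket"], event["data"]["key"]
    text = read_object(bucket, key)  # stand-in for downloading the document from OSS
    return [(f"{key}#{i}", chunk) for i, chunk in enumerate(chunk_text(text, size, overlap))]


if __name__ == "__main__":
    fake_oss = {("docs", "intro.md"): "EventBridge routes events. " * 40}
    pairs = handle_object_created({"data": {"bucket": "docs", "key": "intro.md"}},
                                  lambda b, k: fake_oss[(b, k)])
    print(len(pairs), pairs[0][0])
```

Deriving chunk IDs from the object key means a re-uploaded document produces the same IDs, so its old vectors can be overwritten rather than duplicated.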

Currently, the RAG-powered knowledge base feature of Alibaba Cloud's GenAI platform (Model Studio) uses EventBridge's event streaming capabilities. This has helped numerous customers reduce LLM hallucinations, with particularly significant results in specialized vertical domains. If you are interested, you can try it here: https://bailian.console.aliyun.com/?&tab=app#/knowledge-base

The second scenario we'll discuss is the inference trigger.

Today, most of our interactions with LLMs are direct conversations, such as asking a question to DeepSeek or using an intelligent chatbot. However, a more common and rapidly growing use case is programmatic LLM invocation, seen in applications like supply chain optimization and financial fraud detection.

In microservices architectures, machine-to-machine API calls vastly outnumber human-initiated ones. Similarly, we can expect the scale of programmatic LLM triggers to far surpass manual usage in the future.

How can we seize the opportunities within this trend?

In fact, our existing business systems already contain vast amounts of data ripe for AI-powered inference. For example:

• Messaging services store customer comments that need to be analyzed and tagged as positive or negative, and then scored.

• Databases contain product descriptions that could be sent to an AI for optimization suggestions.

• Object storage like OSS or S3 holds large documents for which an AI could generate 100-word summaries.

While these tasks might have required manual effort in the past, they can now be delegated to LLMs, greatly improving efficiency. EventBridge excels at enabling existing business systems to leverage AI conveniently, quickly, and cost-effectively—often without writing a single line of code.

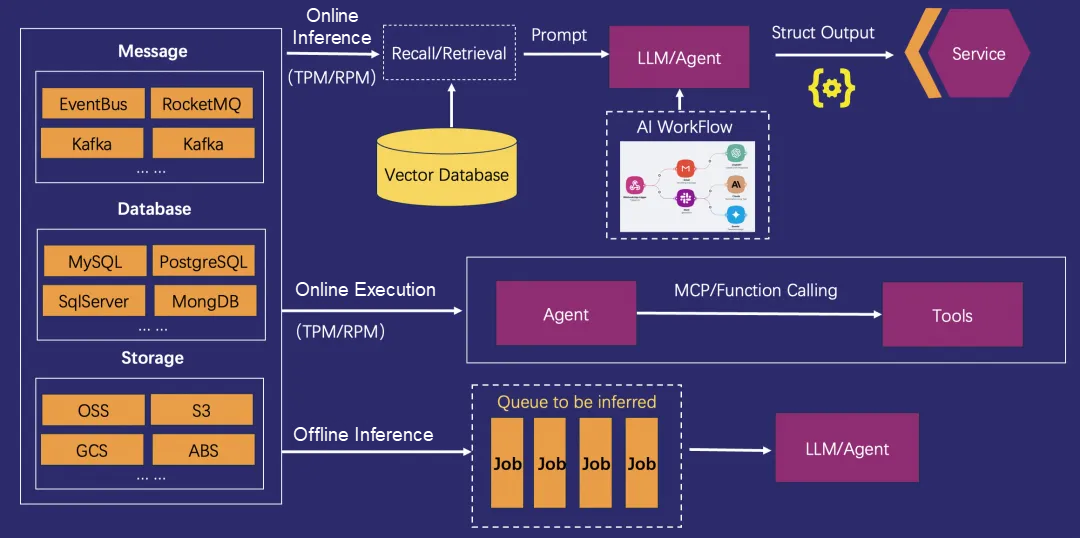

To achieve this, EventBridge offers three key tools:

• Real-time Inference with Targeted Output: EventBridge can listen for and retrieve data from databases, messaging systems, or storage services in real time. It then invokes an LLM inference service and delivers the output to a target destination. This process can be combined with RAG and may involve not just an LLM but also an Agent or an AI Workflow. Building such a pipeline by hand presents many challenges, and EventBridge helps customers solve them efficiently.

• Triggering Actions Based on Inference Results: Beyond just storing results, EventBridge can use an LLM's output to trigger a subsequent action. For example, an Agent could analyze a message and, based on the result, invoke a service to send an email.

• Asynchronous Inference for Higher Resource Utilization: For tasks that are not time-sensitive, EventBridge can facilitate offline, asynchronous inference. This allows for better scheduling of scarce GPU resources and can reduce cloud costs by at least half compared to real-time inference.
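The three tools can be sketched together in Python, using the customer-comment example from earlier. The sketch is hypothetical: `fake_sentiment_llm` is a keyword-based stand-in for a real LLM call, plain lists stand in for the result store and the email service, and `InferenceTrigger` and `AsyncBatcher` are illustrative names, not EventBridge components.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

NEGATIVE_WORDS = {"bad", "broken", "late", "refund", "terrible"}


def fake_sentiment_llm(text: str) -> dict:
    """Stand-in for an LLM inference call that tags a comment and scores it 1-5."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    hits = len(words & NEGATIVE_WORDS)
    return {"label": "negative" if hits else "positive", "score": 5 - min(hits, 4)}


@dataclass
class InferenceTrigger:
    infer: Callable[[str], dict]
    sink: list = field(default_factory=list)    # target for results, e.g. a DB table
    alerts: list = field(default_factory=list)  # follow-up actions, e.g. emails to send

    def on_event(self, event: dict) -> dict:
        result = self.infer(event["data"]["comment"])
        record = {"id": event["id"], **result}
        self.sink.append(record)                # tool 1: deliver output to a target
        if result["label"] == "negative":       # tool 2: act on the inference result
            self.alerts.append(f"email support: review comment {event['id']}")
        return record


@dataclass
class AsyncBatcher:
    """Tool 3: buffer non-urgent events and run inference in batches."""
    trigger: InferenceTrigger
    batch_size: int = 3
    pending: list = field(default_factory=list)
    batches_run: int = 0

    def submit(self, event: dict) -> None:
        self.pending.append(event)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if not self.pending:
            return
        batch, self.pending = self.pending, []
        self.batches_run += 1
        for event in batch:  # a real offline job would send the whole batch at once
            self.trigger.on_event(event)


if __name__ == "__main__":
    trigger = InferenceTrigger(fake_sentiment_llm)
    trigger.on_event({"id": "c1", "data": {"comment": "Great product, fast delivery"}})
    trigger.on_event({"id": "c2", "data": {"comment": "Arrived late and broken."}})
    print(trigger.sink, trigger.alerts)
```

Batching is where the GPU scheduling benefit would come from: a deferred batch can be run when capacity is free instead of holding resources for each event as it arrives. In this sketch the batch is simply looped over.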

The power of AI lies in its application, and EDA is perfectly suited to act as an inference trigger to unlock that value.

AI infrastructure is a very popular and broad topic. Drawing a parallel to IT infrastructure, our focus here is on AI communication.

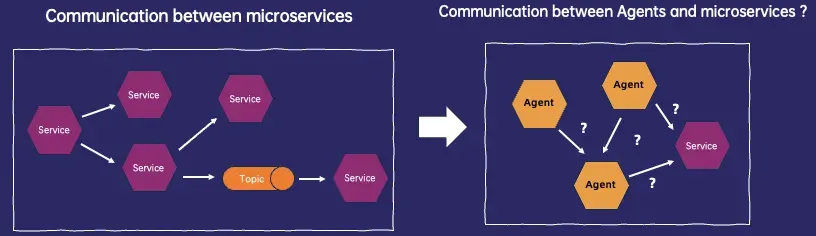

In the era of microservices, messaging systems played a crucial role in inter-service communication. In the age of AI, will they remain just as critical? And what new forms and products will emerge?

To answer this question, let's first examine how AI communication is currently handled.

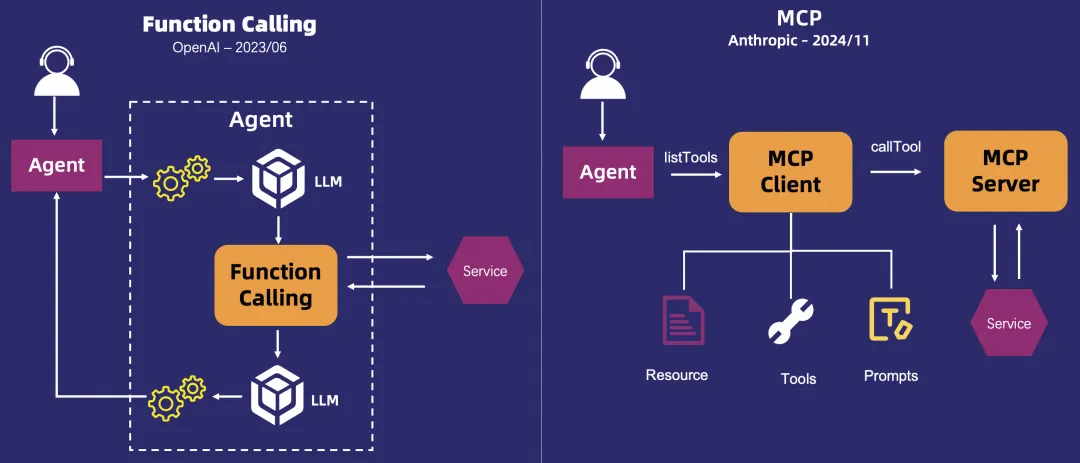

First, let's look at communication between Agents and traditional services. There are two mainstream approaches: Function Calling and MCP.

• Function Calling: Introduced by OpenAI in 2023. LLMs are text generators and cannot access external systems on their own, so models are fine-tuned to understand the definitions of external tool functions. When prompted, the LLM generates the arguments needed to invoke a tool, and the calling application executes the actual call.

• MCP (Model Context Protocol): Proposed by Anthropic in November 2024, MCP was intended to address the stateless nature of LLMs by providing a runtime context, similar to a "session mechanism." It can also be used for tool invocation. Unlike Function Calling, it does not require the LLM to be specially trained on function definitions; instead, it supplies this information through the prompt context, which makes it more model-agnostic but potentially less effective.
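The difference between the two styles can be seen in a small Python sketch of a single weather tool. The tool definition follows the shape of OpenAI function-calling `tools` entries (a JSON Schema under `parameters`), and the request follows MCP's JSON-RPC `tools/call` method. The model output is hard-coded rather than produced by a real model, and `get_weather`, `dispatch`, `mcp_tools_call`, and the returned weather data are hypothetical.

```python
import json

# Function Calling: the tool is described to the model as a JSON Schema.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def get_weather(city: str) -> dict:
    """Hypothetical local implementation; a real one would query a weather service."""
    return {"city": city, "forecast": "sunny"}


LOCAL_TOOLS = {"get_weather": get_weather}


def dispatch(tool_name: str, arguments_json: str) -> dict:
    """Execute the call the model asked for: the model only generates the
    arguments (as a JSON string); the application performs the invocation."""
    args = json.loads(arguments_json)
    return LOCAL_TOOLS[tool_name](**args)


def mcp_tools_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build the JSON-RPC 2.0 request an MCP client sends to invoke a server tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


if __name__ == "__main__":
    # Hard-coded stand-in for a model response that chose to call the tool.
    model_tool_call = {"name": "get_weather", "arguments": '{"city": "Beijing"}'}
    print(dispatch(model_tool_call["name"], model_tool_call["arguments"]))
    print(mcp_tools_call(1, "get_weather", {"city": "Beijing"}))
```

In both cases the LLM never touches the weather service directly; what differs is whether the tool description is baked into the model's training (Function Calling) or handed to it at runtime through a protocol (MCP).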

Next, let's see how Agents communicate with each other.

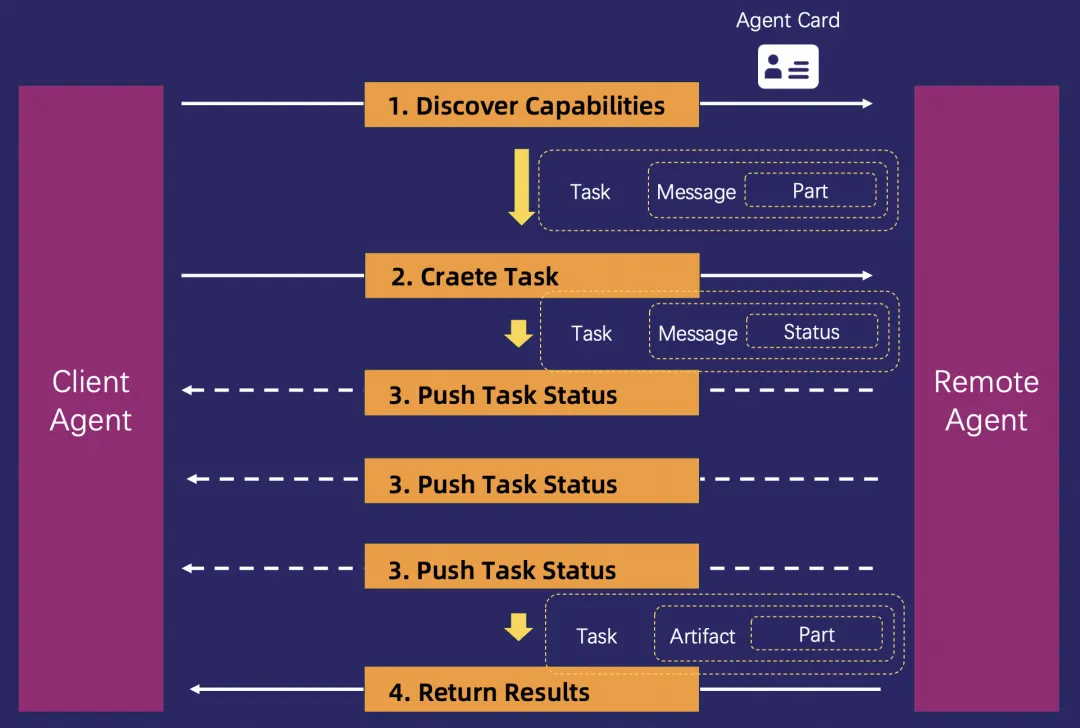

In April 2025, Google proposed the A2A (Agent-to-Agent) communication protocol, which operates in four steps:

• Step 1: A client Agent consults a remote Agent's "Agent Card" to discover its capabilities.

• Step 2: Based on the capability description, the client Agent invokes the remote Agent and creates a task for it to complete.

• Step 3: Since the task may be long-running, the A2A protocol allows the remote Agent to send continuous status updates to the client Agent via Server-Sent Events (SSE).

• Step 4: Finally, the result is returned to the client Agent, which can also be streamed.
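The four steps can be simulated in memory with the following Python sketch. It is not an A2A SDK: the Agent Card is abbreviated to a few fields, a generator stands in for the SSE stream, and `WeatherAgent`, `run_client`, and the task payloads are hypothetical. The states `submitted`, `working`, and `completed` follow the task lifecycle state names used in the A2A specification.

```python
import itertools
from typing import Iterator


class WeatherAgent:
    """In-memory stand-in for a remote Agent reachable over A2A."""

    CARD = {
        "name": "weather-agent",
        "description": "Answers weather questions",
        "skills": [{"id": "query_weather", "name": "Query weather",
                    "description": "Current weather for a city"}],
    }

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self._tasks: dict[str, dict] = {}

    def get_card(self) -> dict:  # step 1: publish capabilities
        return self.CARD

    def create_task(self, skill_id: str, payload: dict) -> str:  # step 2: accept a task
        task_id = f"task-{next(self._ids)}"
        self._tasks[task_id] = {"skill": skill_id, "payload": payload}
        return task_id

    def stream(self, task_id: str) -> Iterator[dict]:  # steps 3-4: updates, then result
        city = self._tasks[task_id]["payload"]["city"]
        yield {"task_id": task_id, "state": "submitted"}
        yield {"task_id": task_id, "state": "working"}
        yield {"task_id": task_id, "state": "completed", "result": f"{city}: sunny"}


def run_client(agent: WeatherAgent, skill_id: str, payload: dict) -> tuple[list[str], str]:
    card = agent.get_card()  # step 1: discover what the remote Agent can do
    if skill_id not in {skill["id"] for skill in card["skills"]}:
        raise LookupError(f"{card['name']} does not offer {skill_id}")
    task_id = agent.create_task(skill_id, payload)  # step 2: delegate the task
    states, result = [], ""
    for update in agent.stream(task_id):  # steps 3-4: consume updates as they arrive
        states.append(update["state"])
        result = update.get("result", result)
    return states, result


if __name__ == "__main__":
    print(run_client(WeatherAgent(), "query_weather", {"city": "Beijing"}))
```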

So what is the difference between the MCP and A2A protocols? Google describes their relationship as follows:

• A2A is like a communication language: If an Agent has limited abilities and needs help from others, the A2A protocol provides a common language for them to communicate effectively.

• MCP is like a tool's user manual: Services are like tools that enhance an Agent's capabilities. MCP acts as the instruction manual, allowing an Agent to use these services more easily to extend its own abilities.

We can compare an Agent to a person and a Service to a tool. But is the line between asking a person for help and using a tool really that clear?

The division of responsibilities between A2A and MCP is elegant in theory, but faces practical challenges. Let's look at how they each declare an Agent or Service's capabilities.

• Using an example of a "query Beijing weather" service, we find that their capability declarations are very similar.

• Furthermore, their transport-layer protocols are also similar, with both supporting SSE and JSON-RPC.

• Finally, from a developer's perspective, when an Agent needs a "query weather" capability, it doesn't care whether that capability is provided by another Agent or a Service. The Agent's primary concern is the interface definition: what capabilities are available, how to call them, and what to expect in return. The backend provider is an implementation detail.
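The similarity is easy to see side by side. Below, a "query weather" capability is declared once as an abbreviated A2A Agent Card skill and once as an MCP tool entry (`name`, `description`, `inputSchema`). The field values, the endpoint URL, and the `capabilities_from_*` helpers are hypothetical. Reduced to what a calling Agent needs in order to choose a capability, both declarations yield the same map.

```python
# Abbreviated A2A Agent Card: a remote Agent advertising a weather skill.
A2A_AGENT_CARD = {
    "name": "Weather Agent",
    "url": "https://weather-agent.example.com/a2a",  # hypothetical endpoint
    "version": "1.0.0",
    "capabilities": {"streaming": True},
    "skills": [{
        "id": "query_weather",
        "name": "Query weather",
        "description": "Get the current weather for a city, such as Beijing",
    }],
}

# MCP tool entry, as a server would list it: a Service offering the same capability.
MCP_TOOLS = [{
    "name": "query_weather",
    "description": "Get the current weather for a city, such as Beijing",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]


def capabilities_from_a2a(card: dict) -> dict[str, str]:
    """Capability name -> description, read from an Agent Card's skills."""
    return {skill["id"]: skill["description"] for skill in card["skills"]}


def capabilities_from_mcp(tools: list[dict]) -> dict[str, str]:
    """Capability name -> description, read from an MCP tool list."""
    return {tool["name"]: tool["description"] for tool in tools}


if __name__ == "__main__":
    # From the caller's point of view, the provider type is an implementation detail.
    print(capabilities_from_a2a(A2A_AGENT_CARD) == capabilities_from_mcp(MCP_TOOLS))
```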

Our first prediction is that A2A and MCP will likely merge in the future, though the exact path will depend on ecosystem adoption.

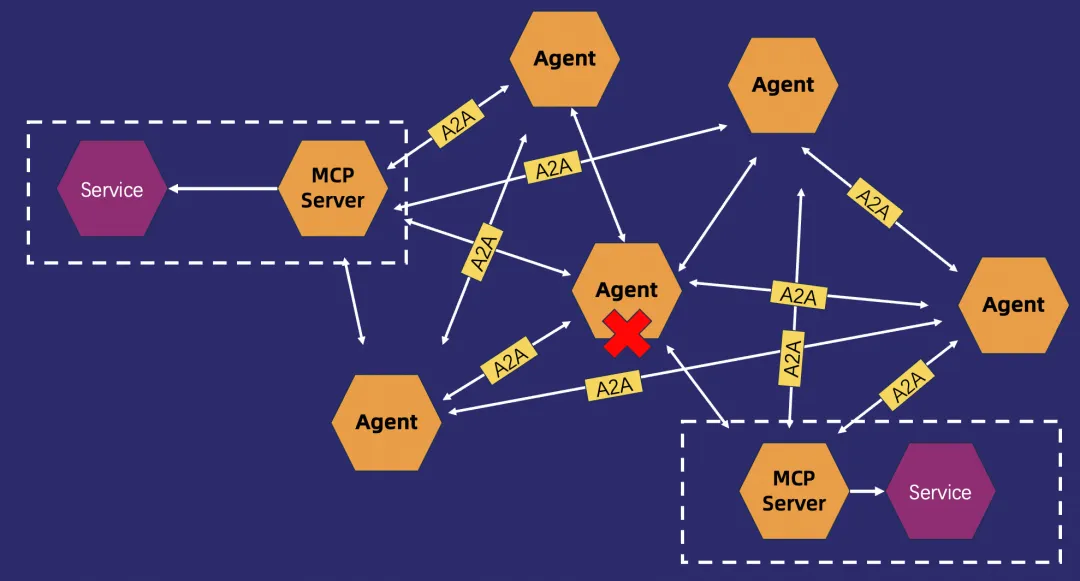

Our second prediction is that the point-to-point communication model in existing MCP and A2A protocols is insufficient. In a system with many Agents all interconnected via long-lived connections, what is the first thing that comes to mind?

Too many connections! A persistent connection between two Agents might seem manageable. But if one Agent needs to communicate with hundreds or thousands of others, the system will be flooded with a massive number of connections.

• First, the resource overhead for each Agent would be enormous.

• Second, in a mesh network, if one Agent fails while holding onto resources, the failure could cascade to other Agents or even bring down the entire system, a common problem in microservices.

• Finally, even if the system survives the failure of an Agent, can communication be tracked and resumed once it recovers? Can operations be executed idempotently to avoid risks?

This presents numerous challenges related to stability, performance, cost, and scalability—many of which have already been addressed in the world of microservices.
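A quick calculation shows how fast point-to-point links grow. In a fully connected mesh of N Agents, each Agent holds N-1 connections and the system holds N(N-1)/2 in total; if every Agent instead keeps a single connection to a central proxy, the total is N. The Python below simply evaluates both formulas.

```python
def mesh_connections(n: int) -> int:
    """Links in a full mesh: every pair of Agents is connected once."""
    return n * (n - 1) // 2


def hub_connections(n: int) -> int:
    """Links when every Agent connects only to one central proxy."""
    return n


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(f"{n:>5} agents: mesh={mesh_connections(n):>7}  hub={hub_connections(n):>5}")
```

For 1,000 Agents that is 499,500 mesh connections versus 1,000 through a proxy, and each Agent in the mesh would maintain 999 of them.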

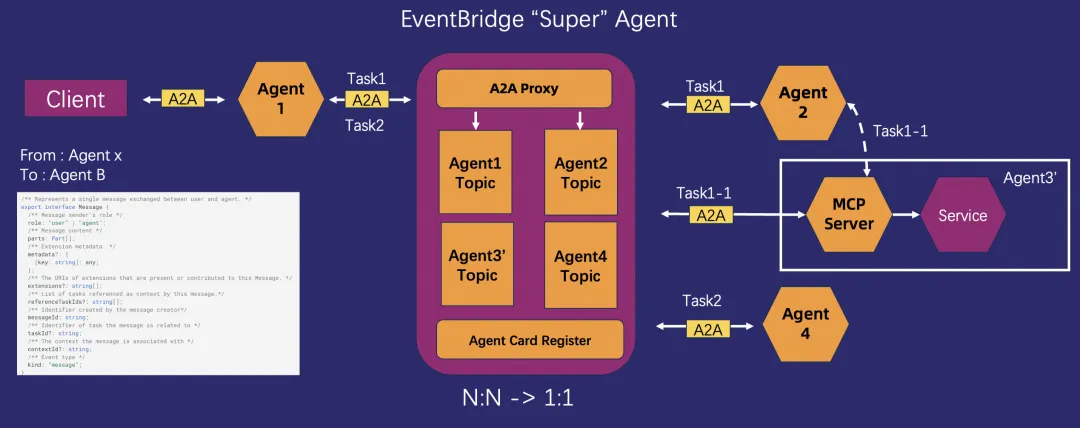

Based on these two predictions, we propose a solution from RocketMQ EventBridge: a model that introduces the role of an EventBridge Agent Proxy. We can tentatively call it a "Super Agent," though it's not a true agent itself but rather a proxy for agent capabilities.

• First, all Agents can register their capabilities—their "resumes"—with the Super Agent.

• If an Agent needs to invoke another's capability, it can look it up in the Super Agent and access it through interaction with the Super Agent.

• When an Agent needs capabilities from multiple other Agents, it interacts only with the Super Agent, which simplifies the N:N communication model to a 1:1 model.

• In addition to handling the A2A protocol, the Super Agent's proxy would also route and track the runtime status of each task. This ensures that every task is handled as expected—even during failures, restarts, or cluster scaling—and that its status is synchronized back to the originating Agent.
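The proxy role described above can be sketched as a registry plus a task tracker. In this hypothetical Python sketch, `AgentProxy` records which Agent provides each skill, callers submit work only to the proxy, every task keeps a runtime state, a resubmitted task ID returns the tracked record instead of running again (a simple form of idempotency), and failed tasks can be retried once the providing Agent recovers. It runs tasks synchronously in memory; the class and method names are illustrative, and a real proxy would also need to persist task state and deliver results asynchronously.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Handler = Callable[[dict], str]


@dataclass
class TaskRecord:
    task_id: str
    skill: str
    payload: dict
    state: str = "submitted"
    result: Optional[str] = None
    attempts: int = 0


class AgentProxy:
    def __init__(self) -> None:
        self.registry: dict[str, Handler] = {}  # skill name -> Agent that provides it
        self.tasks: dict[str, TaskRecord] = {}  # task ID -> tracked runtime status

    def register(self, skill: str, handler: Handler) -> None:
        """An Agent registers its "resume": a skill it can perform."""
        self.registry[skill] = handler

    def submit(self, task_id: str, skill: str, payload: dict) -> TaskRecord:
        """Callers talk only to the proxy, which looks up and invokes the right Agent."""
        if task_id in self.tasks:  # duplicate submission: return the existing record
            return self.tasks[task_id]
        if skill not in self.registry:
            raise LookupError(f"no Agent registered for {skill!r}")
        record = TaskRecord(task_id, skill, payload)
        self.tasks[task_id] = record
        self._run(record)
        return record

    def retry_failed(self) -> int:
        """Re-run tasks that failed, e.g. after the providing Agent restarts."""
        failed = [r for r in self.tasks.values() if r.state == "failed"]
        for record in failed:
            self._run(record)
        return len(failed)

    def _run(self, record: TaskRecord) -> None:
        record.attempts += 1
        record.state = "working"
        try:
            record.result = self.registry[record.skill](record.payload)
            record.state = "completed"
        except Exception:
            record.state = "failed"


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_weather(payload: dict) -> str:  # fails once, as if the Agent were restarting
        calls["n"] += 1
        if calls["n"] == 1:
            raise ConnectionError("agent restarting")
        return f"{payload['city']}: sunny"

    proxy = AgentProxy()
    proxy.register("query_weather", flaky_weather)
    task = proxy.submit("t-1", "query_weather", {"city": "Beijing"})
    print(task.state)   # failed on the first attempt
    proxy.retry_failed()
    print(task.state, task.result)
```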

The "Super Agent" is somewhat analogous to a microservices service registry, but with a key difference: it not only provides service discovery but also acts as a service proxy. If we think even more broadly, we can move beyond just task-level tracking to provide "user-level" context.

• Today's Agents are memoryless. If you talk to one today, it won't remember you in a few days.

• However, everyone uses tools differently. If an Agent could better understand and remember you, it could provide a more personalized service.

• As a registration and proxy center, what if the Super Agent could provide user context while proxying for Agents? The more a user interacts with Agents, the more complete their user profile becomes. In turn, this makes the Agents more indispensable, creating a positive feedback loop.

The EventBridge Agent Proxy concept is still in the theoretical exploration phase, and we welcome discussion and collaboration.

• Lewis et al., "Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks," 2020

• Anthropic, "Model Context Protocol (MCP) Specification," 2024

• Google, "A2A: Agent-to-Agent Communication Protocol," 2025

• Apache RocketMQ EventBridge official documentation: https://rocketmq.apache.org/
