By Peng Li (Yuanyi)

Traditional auto scaling based on GPU utilization cannot accurately reflect the actual load of a large model inference service: even 100% GPU utilization does not necessarily mean that the system is under high load. Knative provides the Knative Pod Autoscaler (KPA), which adjusts resource allocation based on queries per second (QPS) or requests per second (RPS), metrics that directly reflect the performance of an inference service. This article uses DeepSeek-R1 as an example to describe how to deploy an inference service in Knative.
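To make the contrast with GPU-utilization scaling concrete, the following is a minimal sketch of a request-based scaling decision. It is a simplification written for illustration, not Knative's implementation: the function name `desired_pods` and its parameters are hypothetical, and the real KPA also applies averaging windows, a panic mode, and scale-rate limits that are omitted here.

```python
import math
from typing import Optional


def desired_pods(observed_rps: float,
                 target_rps_per_pod: float,
                 min_scale: int = 1,
                 max_scale: Optional[int] = None) -> int:
    """Simplified request-based scaling decision (hypothetical helper).

    Returns enough pods so that each one handles at most
    target_rps_per_pod, clamped to the range [min_scale, max_scale].
    """
    if target_rps_per_pod <= 0:
        raise ValueError("target_rps_per_pod must be positive")
    # Round up: a fractional pod still needs a whole pod to serve the load.
    pods = math.ceil(observed_rps / target_rps_per_pod)
    # Never drop below the configured floor (min-scale is "1" in this article).
    pods = max(pods, min_scale)
    if max_scale is not None:
        pods = min(pods, max_scale)
    return pods
```

For example, at 45 requests per second with a target of 10 per pod, `desired_pods(45, 10)` asks for 5 pods, regardless of what the GPU utilization gauge reports.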

• Knative is deployed in the ACK cluster. For more information, see Deploy Knative.

• The ack-virtual-node component is deployed. This component is required when you use elastic container instances. For more information, see Deploy ack-virtual-node in the cluster.

Create a Knative Service, add the alibabacloud.com/eci=true label to the Service, and add the k8s.aliyun.com/eci-use-specs annotation to specify the type of elastic container instance to be used. Then, run the kubectl apply command to deploy the YAML file.

• Resource specification: ecs.gn7i-c8g1.2xlarge (example).

• Inference model: DeepSeek-R1-Distill-Qwen-1.5B.

Sample template:

apiVersion: serving.knative.dev/v1

kind: Service

metadata:

labels:

release: deepseek

name: deepseek

namespace: default

spec:

template:

metadata:

annotations:

k8s.aliyun.com/eci-use-specs : "ecs.gn7i-c8g1.2xlarge"

autoscaling.knative.dev/min-scale: "1"

labels:

release: deepseek

alibabacloud.com/eci: "true"

spec:

containers:

- command:

- /bin/sh

- -c

args:

- vllm serve deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B --max_model_len 2048

image: registry.cn-hangzhou.aliyuncs.com/knative-sample/vllm-openai:v0.7.1

imagePullPolicy: IfNotPresent

name: vllm-container

env:

- name: HF_HUB_ENABLE_HF_TRANSFER

value: "0"

ports:

- containerPort: 8000

readinessProbe:

httpGet:

path: /health

port: 8000

initialDelaySeconds: 60

periodSeconds: 5

resources:

limits:

nvidia.com/gpu: "1"

requests:

nvidia.com/gpu: "1"

volumeMounts:

- mountPath: /root/.cache/huggingface

name: cache-volume

- name: shm

mountPath: /dev/shm

volumes:

- name: cache-volume

emptyDir: {}

- name: shm

emptyDir:

medium: Memory

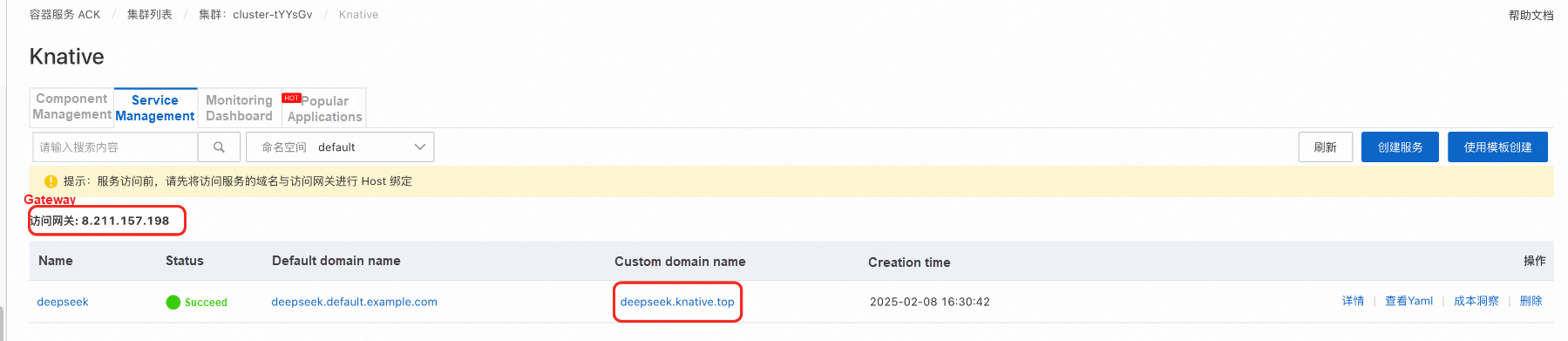

sizeLimit: 2GiAfter the deployment, on the Services tab, record the gateway IP address and the default domain name of the Service.

Verify the DeepSeek service:

curl -H "Host: deepseek.default.example.com" -H "Content-Type: application/json" http://deepseek.knative.top/v1/chat/completions -d '{"model": "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B", "messages": [{"role": "user", "content": "Introduction to DeepSeek-R1"}]}'

Response:

{"id":"chatcmpl-07d99924-b998-4f39-9ec9-01dfb4ece8a0","object":"chat.completion","created":1739003758,"model":"deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B","choices":[{"index":0,"message":{"role":"assistant","reasoning_content":null,"content":"<think>\n\n</think>\n\n DeepSeek-R1 is a large language model developed by DeepSeek to support dialogue and language understanding tasks. Here is some key information about DeepSeek-R1: \n\n1. **Architecture and Technology**: \n - **Language Processing**: As a large language model, the main functions of DeepSeek-R1 include text generation, understanding, and dialogue. \n - **Self-learning Ability**: The model is trained with a large amount of text data to improve its natural language processing capabilities. \n\n2. **Application Scenarios**: \n - **Chatbots**: The model is used to assist humans in dialogues in the manufacturing, healthcare, and education fields. \n - **Content Generation**: The model is adept at generating high-quality content for news reports and marketing materials. \n\n3. **Technical Features**: \n - **Efficient Computing**: Compared with lightweight models, DeepSeek-R1 is more efficient and suitable for real-time responses. \n - **Modular Design**: The model is based on a modular architecture for easy extension and optimization. \n\n4. **User Evaluation**: \n - **Usage Scenarios**: The model meets the needs of enterprises in specific industries. \n - **Evaluation Criteria**: It is evaluated based on technical accuracy and application scenarios. \n\n5. **Features and Benefits**: \n - **Superior Performance**: It performs well in multiple natural language processing tasks. \n - **Practicality**: It can address language processing needs in real-world workplaces. \n\n6. **Future Development**: \n - **Technical Updates**: The model is continuously optimized, with attention to more application areas, such as autonomous driving and other ethical issues. \n\n In summary, DeepSeek-R1 is a powerful and industry-specific large language model designed to serve real-world scenarios with efficient artificial intelligence.","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":null}],"usage":{"prompt_tokens":8,"total_tokens":337,"completion_tokens":329,"prompt_tokens_details":null},"prompt_logprobs":null}

Knative allows you to define a specific domain name for a Knative service.

You can use Alibaba Cloud DNS to resolve domain names to the gateway IP address.
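The curl verification can also be scripted. The following is a minimal sketch using only the Python standard library; it sends the same request body and Host header as the curl command and extracts the assistant text from the response. The helper names (`build_chat_request`, `extract_reply`, `chat`) and the default timeout are assumptions made for this example, not part of Knative or vLLM.

```python
import json
import urllib.request

MODEL = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"


def build_chat_request(base_url: str, host: str, prompt: str,
                       model: str = MODEL) -> urllib.request.Request:
    """Build the same POST request that the curl command sends."""
    payload = {"model": model,
               "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        # The Host header selects the Knative Service behind the gateway.
        headers={"Host": host, "Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(body: str) -> str:
    """Return the assistant text from a chat completion response body."""
    return json.loads(body)["choices"][0]["message"]["content"]


def chat(base_url: str, host: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt to the inference service and return the reply text."""
    request = build_chat_request(base_url, host, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(response.read().decode("utf-8"))
```

With the values from the curl command, a call would look like `chat("http://deepseek.knative.top", "deepseek.default.example.com", "Introduction to DeepSeek-R1")`. Replace the address and domain name with the values recorded on the Services tab.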

ChatGPTNextWeb allows you to deploy private ChatGPT web applications with one click. It supports DeepSeek, Claude, GPT4, and Gemini Pro models. You can use Knative to quickly deploy and access ChatGPT web applications.

apiVersion: serving.knative.dev/v1

kind: Service

metadata:

name: chatgpt-next-web

spec:

template:

spec:

containers:

- name: chatgpt-next-web

image: registry.cn-hangzhou.aliyuncs.com/knative-sample/chatgpt-next-web:v2.15.8

ports:

- containerPort: 3000

readinessProbe:

tcpSocket:

port: 3000

initialDelaySeconds: 60

periodSeconds: 5

env:

- name: HOSTNAME

value: '0.0.0.0'

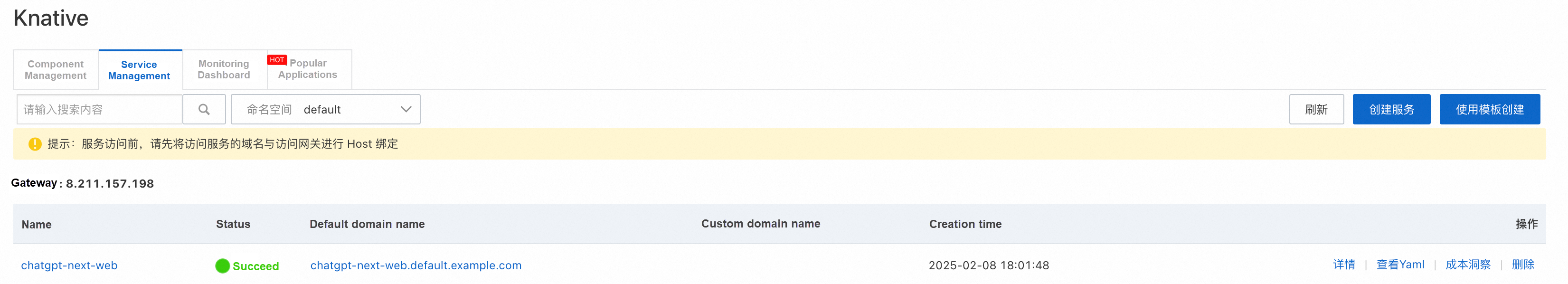

# Replace with your OpenAI API endpoint.On the Services tab, record the gateway IP address and the default domain name of the Service.

1. Add the following information to the hosts file to map the domain name of the Service to the IP address of the gateway for the chatgpt-next-web Service. Example:

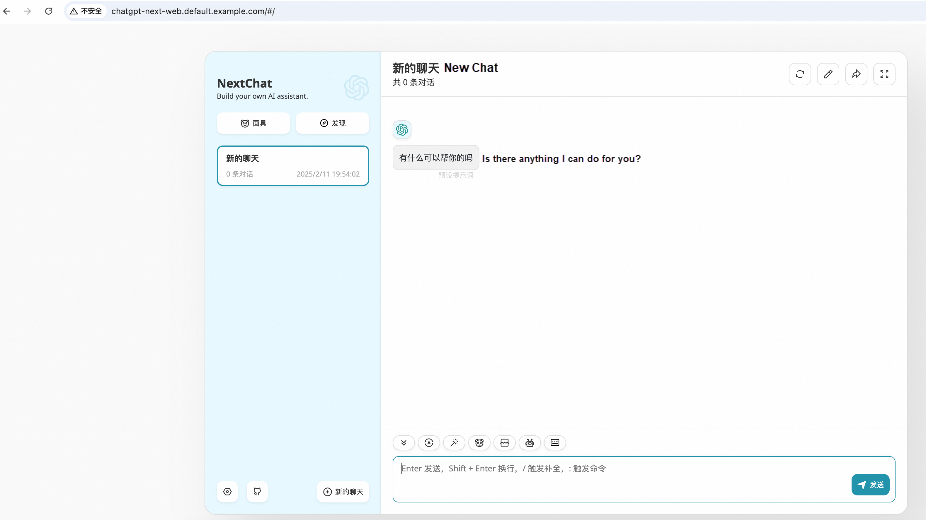

8.211.157.198 chatgpt-next-web.default.example.com # Replace the gateway IP address and domain name with the actual values. 2. After you modify the hosts file, go to the Services tab, and click the default domain name of the chatgpt-next-web Service to access the Service. As shown in the figure:

3. Configure DeepSeek.

• API endpoint:

http://deepseek.knative.top

• API Key: You can apply for an API key on the official DeepSeek platform.

https://platform.deepseek.com/api_keys

• Custom model name: deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B.

4. Check results.
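If the web application returns an error after step 3, one thing worth checking is whether the custom model name exactly matches a model that the endpoint serves. The following is a minimal sketch of such a check, assuming the vLLM server exposes the OpenAI-style `/v1/models` route at the endpoint address; the helper names are hypothetical and chosen for this example.

```python
import json
import urllib.request
from typing import List


def parse_model_ids(body: str) -> List[str]:
    """Extract model IDs from an OpenAI-style model list response body."""
    return [entry["id"] for entry in json.loads(body).get("data", [])]


def served_models(base_url: str, timeout: float = 30.0) -> List[str]:
    """Fetch the model IDs that the endpoint reports at /v1/models."""
    url = base_url.rstrip("/") + "/v1/models"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_ids(response.read().decode("utf-8"))


def model_is_served(model: str, model_ids: List[str]) -> bool:
    """Return True if the custom model name matches a served model exactly."""
    return model in model_ids
```

A call such as `model_is_served("deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B", served_models("http://deepseek.knative.top"))` would then indicate whether the name entered in the settings is one the service recognizes.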
