By Xiaobing Meng (Zhishi)

As an intelligent inference model based on deep learning, DeepSeek quickly gained popularity thanks to its strong performance in natural language processing (NLP), image recognition, and other fields. Whether in enterprise-level applications or academic research, DeepSeek has shown strong potential. However, as its application scenarios keep expanding, the computing power bottleneck of data centers has become increasingly apparent.

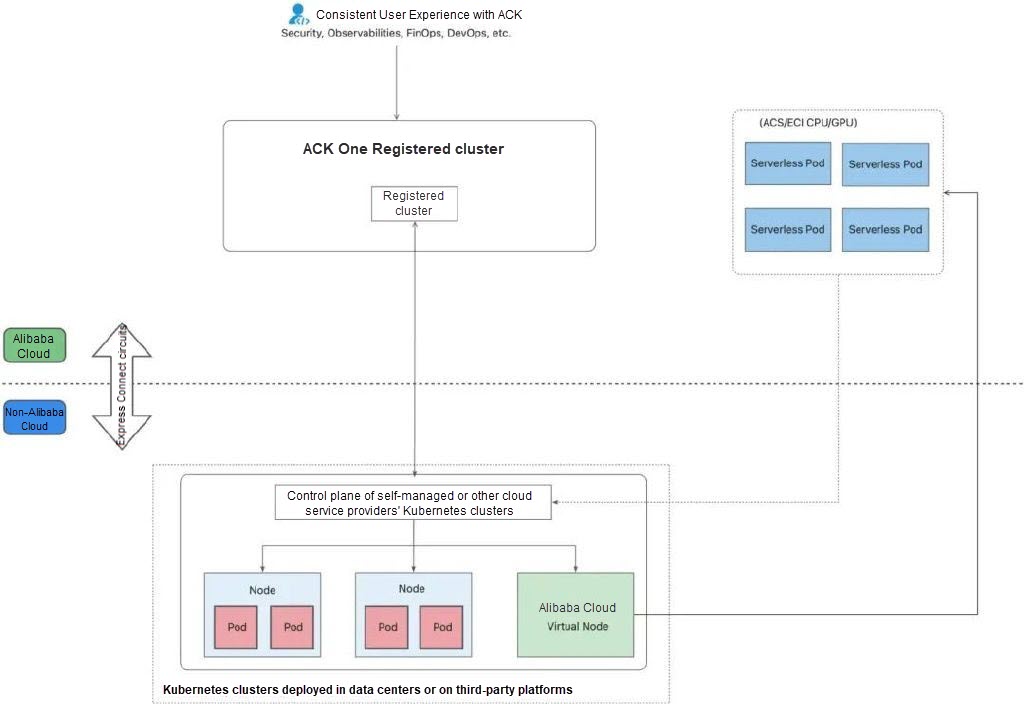

Faced with insufficient computing power, the ACK One registered cluster provided by Alibaba Cloud offers a flexible and effective solution for enterprises. By connecting the Kubernetes cluster in the on-premises data center to the ACK One registered cluster, enterprises can seamlessly expand their computing resources and fully utilize the powerful ACS GPU computing power, efficiently deploying the DeepSeek inference model.

You can register clusters deployed in data centers or on a third-party cloud to Alibaba Cloud Distributed Cloud Container Platform for Kubernetes (ACK One). This way, you can build hybrid clusters and manage clusters in a centralized manner.

Container Compute Service (ACS) is an upgrade of ACK Serverless clusters (formerly known as ASK). It is more cost-effective, easier to use, and more elastic. ACS is intended for a variety of business scenarios. It defines cost-effective serverless compute classes and compute QoS classes, allowing you to request resources on demand and pay for them on a per-second basis. You do not need to manage the O&M of clusters or nodes.

DeepSeek-R1 is the first-generation inference model provided by DeepSeek. It aims to improve the inference performance of LLMs through large-scale reinforcement learning. Statistics show that DeepSeek-R1 outperforms other closed source models in mathematical inference and programming competition. Its performance even reaches or surpasses the OpenAI-o1 series in certain sectors. DeepSeek-R1 also excels in knowledge-related sectors, such as creation, writing, and Q&A. DeepSeek also distills inference capabilities into smaller models, such as Qwen and Llama, to fine-tune their inference performance. The 14B model distilled from DeepSeek surpasses the open source QwQ-32B model. The 32B and 70B models distilled from DeepSeek also set new records. For more information about DeepSeek, see the DeepSeek AI GitHub repository.

vLLM is a high-performance and easy-to-use LLM inference service framework. vLLM supports multiple commonly used LLMs, including Qwen models. vLLM is powered by technologies such as PagedAttention optimization, continuous batching, and model quantization to greatly improve the inference efficiency of LLMs. For more information about the vLLM framework, see the vLLM GitHub repository.

• Log on to the Container Service for Kubernetes (ACK) console and activate Container Service as prompted.

• Log on to the ACS console. Follow the on-screen instructions to activate ACS.

• Create an ACK One registered cluster and connect it to a data center or a Kubernetes cluster of another cloud service provider. We recommend that you set the ACK One cluster to 1.24 or later. For more information, see Create an ACK One registered cluster and connect Cluster B to the registered cluster.

• Install and configure the Arena client. For more information, see Configure the Arena client.

• Install the ack-virtual-node component. For more information, see Use ACS computing power in ACK One registered clusters.

1. Run the following command to download the DeepSeek-R1-Distill-Qwen-7B model from ModelScope.

Note: Check whether the git-lfs plug-in is installed. If not, run yum install git-lfs or apt-get install git-lfs to install it. For more information, see Install git-lfs.

    git lfs install
    GIT_LFS_SKIP_SMUDGE=1 git clone https://www.modelscope.cn/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B.git
    cd DeepSeek-R1-Distill-Qwen-7B/
    git lfs pull

2. Create an OSS directory and upload the model files to the directory.

Note: To install and use ossutil, see Install ossutil.

    ossutil mkdir oss://<your-bucket-name>/models/DeepSeek-R1-Distill-Qwen-7B
    ossutil cp -r ./DeepSeek-R1-Distill-Qwen-7B oss://<your-bucket-name>/models/DeepSeek-R1-Distill-Qwen-7B

3. Create a PV and a PVC. Configure a persistent volume (PV) named llm-model and a persistent volume claim (PVC) named llm-model for the cluster. For more information, see Mount a statically provisioned OSS volume.

The following table describes the parameters of the PV.

| Parameter or setting | Description |
|---|---|
| PV Type | OSS |
| Name | llm-model |
| Access Certificate | Specify the AccessKey ID and the AccessKey secret used to access the OSS bucket. |
| Bucket ID | Select the OSS bucket that you created in the previous step. |
| OSS Path | Select the path of the model, such as /models/DeepSeek-R1-Distill-Qwen-7B. |

The following table describes the parameters of the PVC.

| Parameter or setting | Description |
|---|---|
| PVC Type | OSS |
| Name | llm-model |
| Allocation Mode | In this example, Existing Volumes is selected. |
| Existing Volumes | Click Existing Volumes and select the PV that you created. |

The following code block shows the YAML template:

    apiVersion: v1
    kind: Secret
    metadata:
      name: oss-secret
    stringData:
      akId: <your-oss-ak> # The AccessKey ID used to access the OSS bucket.
      akSecret: <your-oss-sk> # The AccessKey secret used to access the OSS bucket.
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: llm-model
      labels:
        alicloud-pvname: llm-model
    spec:
      capacity:
        storage: 30Gi
      accessModes:
        - ReadOnlyMany
      persistentVolumeReclaimPolicy: Retain
      csi:
        driver: ossplugin.csi.alibabacloud.com
        volumeHandle: llm-model
        nodePublishSecretRef:
          name: oss-secret
          namespace: default
        volumeAttributes:
          bucket: <your-bucket-name> # The name of the OSS bucket.
          url: <your-bucket-endpoint> # The endpoint. We recommend internal endpoints, such as oss-cn-hangzhou-internal.aliyuncs.com.
          otherOpts: "-o umask=022 -o max_stat_cache_size=0 -o allow_other"
          path: <your-model-path> # The model path, such as /models/DeepSeek-R1-Distill-Qwen-7B/ in this example.
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: llm-model
    spec:
      accessModes:
        - ReadOnlyMany
      resources:
        requests:
          storage: 30Gi
      selector:
        matchLabels:
          alicloud-pvname: llm-model

1. Run the following command to view the status of nodes in the cluster.

    kubectl get no -owide

Expected output:

    NAME                            STATUS   ROLES           AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                                              KERNEL-VERSION            CONTAINER-RUNTIME
    idc-master-0210-001             Ready    control-plane   26h   v1.28.2   192.168.8.XXX   <none>        Alibaba Cloud Linux 3.2104 U11 (OpenAnolis Edition)   5.10.134-18.al8.x86_64    containerd://1.6.32
    idc-worker-0210-001             Ready    <none>          26h   v1.28.2   192.168.8.XXX   <none>        Alibaba Cloud Linux 3.2104 U11 (OpenAnolis Edition)   5.10.134-18.al8.x86_64    containerd://1.6.32
    idc-worker-0210-002             Ready    <none>          26h   v1.28.2   192.168.8.XXX   <none>        Alibaba Cloud Linux 3.2104 U11 (OpenAnolis Edition)   5.10.134-18.al8.x86_64    containerd://1.6.32
    virtual-kubelet-cn-hangzhou-b   Ready    agent           20h   v1.28.2   10.244.11.XXX   <none>        <unknown>                                             <unknown>                 <unknown>
    virtual-kubelet-cn-hangzhou-h   Ready    agent           25h   v1.28.2   10.244.11.XXX   <none>        <unknown>                                             <unknown>                 <unknown>

The output shows that the cluster contains virtual nodes whose names start with virtual-kubelet-cn-hangzhou-.
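The check on the node list can also be scripted. The following is a minimal sketch, not part of the original procedure: `ready_virtual_nodes` is a hypothetical helper name, and it assumes that virtual node names start with `virtual-kubelet-`, as they do in the sample output.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get no -owide` output on stdin and
# prints the names of virtual nodes whose STATUS column is exactly "Ready".
# Assumption: virtual node names start with "virtual-kubelet-".
ready_virtual_nodes() {
  awk 'NR > 1 && $1 ~ /^virtual-kubelet-/ && $2 == "Ready" { print $1 }'
}

# Usage against a live cluster (requires kubectl access):
#   kubectl get no -owide | ready_virtual_nodes
```

If the helper prints nothing, no virtual node is ready yet, and it is worth confirming that the ack-virtual-node component is installed before you deploy the inference service.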

2. Run the following command to deploy the DeepSeek-R1-Distill-Qwen-7B model as an inference service by using vLLM.

Note: Suggested GPU resources: 1 GPU, 8 vCPUs, and 32 GiB of memory.

    # The three --label flags request ACS GPU computing power.
    # Replace <example-model> with the GPU instance series that you want to use.
    arena serve custom \
      --name=deepseek-r1 \
      --version=v1 \
      --gpus=1 \
      --cpu=8 \
      --memory=32Gi \
      --replicas=1 \
      --env-from-secret=akId=oss-secret \
      --env-from-secret=akSecret=oss-secret \
      --label=alibabacloud.com/acs="true" \
      --label=alibabacloud.com/compute-class=gpu \
      --label=alibabacloud.com/gpu-model-series=<example-model> \
      --restful-port=8000 \
      --readiness-probe-action="tcpSocket" \
      --readiness-probe-action-option="port: 8000" \
      --readiness-probe-option="initialDelaySeconds: 30" \
      --readiness-probe-option="periodSeconds: 30" \
      --image=registry-cn-hangzhou-vpc.ack.aliyuncs.com/ack-demo/vllm:v0.6.6 \
      --data=llm-model:/model/DeepSeek-R1-Distill-Qwen-7B \
      "vllm serve /model/DeepSeek-R1-Distill-Qwen-7B --port 8000 --trust-remote-code --served-model-name deepseek-r1 --max-model-len 32768 --gpu-memory-utilization 0.95 --enforce-eager"

Note: You must add the following labels to request ACS GPU computing power.

    --label=alibabacloud.com/acs="true"
    --label=alibabacloud.com/compute-class=gpu
    --label=alibabacloud.com/gpu-model-series=<example-model>

Expected output:

    service/deepseek-r1-v1 created
    deployment.apps/deepseek-r1-v1-custom-serving created
    INFO[0001] The Job deepseek-r1 has been submitted successfully
    INFO[0001] You can run `arena serve get deepseek-r1 --type custom-serving -n default` to check the job status

The following table describes the parameters.

| Parameter | Description |
|---|---|
| --name | The name of the inference service. |
| --version | The version of the inference service. |
| --gpus | The number of GPUs used by each inference service replica. |
| --cpu | The number of CPUs used by each inference service replica. |
| --memory | The amount of memory used by each inference service replica. |
| --replicas | The number of inference service replicas. |
| --label | Add the following labels to specify ACS GPU computing power: `--label=alibabacloud.com/acs="true"`, `--label=alibabacloud.com/compute-class=gpu`, and `--label=alibabacloud.com/gpu-model-series=<example-model>`. Note: To view the supported GPU models, submit a ticket. |
| --restful-port | The port of the inference service to be exposed. |
| --readiness-probe-action | The connection type of readiness probes. Valid values: HttpGet, Exec, gRPC, and TCPSocket. |
| --readiness-probe-action-option | The connection method of readiness probes. |
| --readiness-probe-option | The readiness probe configuration. |
| --image | The image address of the inference service. |
| --data | Mount a shared PVC to the runtime environment. The value consists of two parts separated by a colon (:). Specify the name of the PVC on the left side of the colon. To obtain the name of the PVC, run the arena data list command. This command queries the PVCs that are available for the specified cluster. Specify the path to which the PV claimed by the PVC is mounted on the right side of the colon. The inference service reads the model files from this path. |

3. Run the following command to query the details of the inference service. Wait until the service is ready.

    arena serve get deepseek-r1

Expected output:

    Name:       deepseek-r1
    Namespace:  default
    Type:       Custom
    Version:    v1
    Desired:    1
    Available:  1
    Age:        17m
    Address:    10.100.136.39
    Port:       RESTFUL:8000
    GPU:        1

    Instances:
      NAME                                            STATUS   AGE  READY  RESTARTS  GPU  NODE
      ----                                            ------   ---  -----  --------  ---  ----
      deepseek-r1-v1-custom-serving-5f59745cbd-bsrdq  Running  17m  1/1    0         1    virtual-kubelet-cn-hangzhou-b

4. Run the following command to check whether the inference service has been deployed to the virtual node.

    kubectl get po -owide | grep deepseek-r1-v1

Expected output:

    NAME                                             READY   STATUS    RESTARTS   AGE     IP              NODE                            NOMINATED NODE   READINESS GATES
    deepseek-r1-v1-custom-serving-5f59745cbd-r8drs   1/1     Running   0          3m16s   192.168.2.XXX   virtual-kubelet-cn-hangzhou-b   <none>           <none>

From the results, we can see that the business pods of the inference service are scheduled to the virtual node.
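When the service runs several replicas, reading the NODE column by eye gets tedious. The sketch below is one possible way to automate the check. It is an illustration only: `pods_off_virtual_nodes` is a hypothetical helper name, and it assumes that virtual node names start with `virtual-kubelet-`. It relies on the standard `-o custom-columns` and `--no-headers` options of kubectl so that each input line is a plain "pod node" pair.

```shell
#!/bin/sh
# Hypothetical helper: reads "POD NODE" pairs on stdin, prints every pod that
# is NOT placed on a virtual node, and returns a non-zero status if any exist.
# Assumption: virtual node names start with "virtual-kubelet-".
pods_off_virtual_nodes() {
  awk '$2 !~ /^virtual-kubelet-/ { print $1; bad = 1 } END { exit bad + 0 }'
}

# Usage against a live cluster (requires kubectl access):
#   kubectl get po -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName --no-headers \
#     | grep deepseek-r1-v1 | pods_off_virtual_nodes
```

A pod that has not been scheduled yet reports `<none>` as its node, so the helper lists it as well.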

1. Run kubectl port-forward to configure port forwarding between the inference service and the local environment.

    kubectl port-forward svc/deepseek-r1-v1 8000:8000

2. Send requests to the inference service.

    curl http://localhost:8000/v1/chat/completions -H "Content-Type: application/json" -d '{"model": "deepseek-r1", "messages": [{"role": "user", "content": "Hello, DeepSeek."}], "max_tokens": 100, "temperature": 0.7, "top_p": 0.9, "seed": 10}'

Expected output:

    {"id":"chatcmpl-cef570252f324ed2b34953b8062f793f","object":"chat.completion","created":1739245450,"model":"deepseek-r1","choices":[{"index":0,"message":{"role":"assistant","content":"Hello! I am DeepSeek-R1, an intelligent assistant independently developed by China's DeepSeek company. I am delighted to serve you! \n</think>\n\n Hello! I am DeepSeek-R1, an intelligent assistant independently developed by China's DeepSeek company. I am delighted to serve you!","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":null}],"usage":{"prompt_tokens":10,"total_tokens":68,"completion_tokens":58,"prompt_tokens_details":null},"prompt_logprobs":null}

DeepSeek-R1 outperforms other closed source models in mathematical inference and programming competition. Its performance even reaches or surpasses the OpenAI-o1 series in certain sectors. It attracted many users as soon as it was released. This article describes how to use ACS GPU computing power to deploy a production-ready DeepSeek inference service on an ACK One registered cluster. This approach addresses insufficient computing power in the data center and makes it easier for enterprises to handle complex and changing business challenges, fully unlocking the productivity potential of the cloud.
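One practical detail of the sample response shown earlier: the `content` field carries the model's reasoning first, then a `</think>` marker, then the final answer. A client that only wants the answer can drop everything up to the marker. The following is a minimal sketch under that assumption; `strip_reasoning` is a hypothetical helper name, and the usage line assumes that `jq` is installed, which the original steps do not require.

```shell
#!/bin/sh
# Hypothetical helper: reads the message content on stdin and prints only the
# text after the first "</think>" marker, skipping blank lines that directly
# follow it. If the marker is absent, the input is printed unchanged.
strip_reasoning() {
  awk '
    found { if (started || $0 !~ /^[ \t]*$/) { print; started = 1 }; next }
    {
      i = index($0, "</think>")
      if (i) {
        found = 1
        rest = substr($0, i + 8)   # 8 is the length of "</think>"
        if (rest !~ /^[ \t]*$/) { print rest; started = 1 }
      } else {
        buf = buf $0 "\n"
      }
    }
    END { if (!found) printf "%s", buf }
  '
}

# Usage (assumes the port-forward from the previous step is still running):
#   curl -s http://localhost:8000/v1/chat/completions \
#     -H "Content-Type: application/json" \
#     -d '{"model": "deepseek-r1", "messages": [{"role": "user", "content": "Hello, DeepSeek."}]}' \
#     | jq -r '.choices[0].message.content' | strip_reasoning
```

Keeping the reasoning out of the displayed answer is a presentation choice. If you need it for debugging, log the raw content before filtering.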

Visit the ACK One official website to learn more details and start your journey of intelligent scaling.
