By Jing Gu (Zibai)

This article describes how to use KServe to deploy a DeepSeek distilled model as an inference service in a Container Service for Kubernetes (ACK) cluster for production use.

DeepSeek-R1 is the first-generation reasoning model released by DeepSeek. It improves the reasoning performance of large language models (LLMs) through large-scale reinforcement learning. Benchmark results show that DeepSeek-R1 outperforms many closed-source models in mathematical reasoning and programming, and matches or surpasses the OpenAI-o1 series on certain tasks. DeepSeek-R1 also performs well on knowledge-related tasks such as content creation, writing, and Q&A. DeepSeek has also distilled the reasoning capabilities of DeepSeek-R1 into smaller models based on Qwen and Llama by fine-tuning them. The distilled 14B model outperforms the open source QwQ-32B-Preview model, and the distilled 32B and 70B models set new records among dense models on reasoning benchmarks. For more information about DeepSeek, see the DeepSeek AI GitHub repository.

KServe is an open source, cloud-native model serving platform designed to simplify deploying and running machine learning models on Kubernetes. KServe supports multiple machine learning frameworks and provides auto scaling. It lets you deploy models by using simple YAML configuration files and declarative APIs, so you can easily configure and manage model services. For more information, see KServe.
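To show what the declarative approach looks like, the following is a minimal sketch of a KServe `InferenceService` manifest for a custom vLLM container, written as a shell heredoc so you can review the file before applying it. It reuses the image, resources, and vLLM command from the Arena example later in this article. The file name `deepseek-isvc.yaml` is hypothetical, the container name `kserve-container` follows the KServe convention for custom predictors, and the `llm-model` PVC is the one created in the model preparation steps. This is an assumption-based equivalent, not necessarily the exact manifest that Arena generates.

```shell
# Sketch: write a minimal KServe InferenceService manifest for a custom vLLM container.
# Assumptions: the llm-model PVC already exists; the file name is hypothetical.
cat > deepseek-isvc.yaml <<'EOF'
apiVersion: serving.kserve.io/v1beta1
kind: InferenceService
metadata:
  name: deepseek
spec:
  predictor:
    containers:
      - name: kserve-container
        image: kube-ai-registry.cn-shanghai.cr.aliyuncs.com/kube-ai/vllm:v0.6.6
        # Run the same vLLM command as the Arena example through a shell.
        command: ["sh", "-c"]
        args:
          - >-
            vllm serve /model/DeepSeek-R1-Distill-Qwen-7B --port 8080
            --trust-remote-code --served-model-name deepseek-r1
            --max-model-len 32768 --gpu-memory-utilization 0.95 --enforce-eager
        resources:
          limits:
            cpu: "4"
            memory: 12Gi
            nvidia.com/gpu: "1"
        volumeMounts:
          - name: llm-model
            mountPath: /model/DeepSeek-R1-Distill-Qwen-7B
    volumes:
      - name: llm-model
        persistentVolumeClaim:
          claimName: llm-model
EOF
```

If you want to try it, run `kubectl apply -f deepseek-isvc.yaml` instead of (not in addition to) the Arena command, because both create an InferenceService named deepseek.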

Arena is a lightweight client for managing Kubernetes-based machine learning tasks. It streamlines data preparation, model development, model training, and model prediction throughout the machine learning lifecycle, which improves the work efficiency of data scientists. Arena is also deeply integrated with basic Alibaba Cloud services and supports GPU sharing and Cloud Parallel File System (CPFS). It can run deep learning frameworks optimized by Alibaba Cloud to make full use of the heterogeneous computing resources that Alibaba Cloud provides. For more information about Arena, see the Arena documentation.

• An ACK cluster that contains GPU-accelerated nodes is created. For more information, see Create an ACK cluster with GPU-accelerated nodes.

Select the A10 GPU model. The recommended instance type is ecs.gn7i-c8g1.2xlarge.

• The ack-kserve component is installed. For more information, see Install the ack-kserve component.

• The Arena client is installed. For more information, see Install Arena.

1. Run the following commands to download the DeepSeek-R1-Distill-Qwen-7B model from ModelScope.

```shell
# Check whether the git-lfs plug-in is installed.
# If not, run yum install git-lfs or apt install git-lfs to install it.
git lfs install

# Clone the DeepSeek-R1-Distill-Qwen-7B project without downloading the large files yet.
GIT_LFS_SKIP_SMUDGE=1 git clone https://www.modelscope.cn/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B.git

# Download the model files.
cd DeepSeek-R1-Distill-Qwen-7B/
git lfs pull
```

2. Upload the model files to your OSS bucket.
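Before you upload, it is worth confirming that `git lfs pull` actually replaced the placeholders. Because the repository is cloned with `GIT_LFS_SKIP_SMUDGE=1`, any large file that fails to download is left behind as a small Git LFS pointer file, and a bucket containing pointers instead of weights yields a model that vLLM cannot load. The following sketch relies on the fact that every Git LFS pointer file starts with the line `version https://git-lfs.github.com/spec/v1`; the function name `find_lfs_pointers` is hypothetical.

```shell
# find_lfs_pointers DIR: print every file under DIR (skipping .git) that is still a
# Git LFS pointer instead of real content; return 1 if any pointer is found.
find_lfs_pointers() {
  local dir=${1:-.}
  local pointers
  # Pointer files are only a few hundred bytes, so only small files are inspected.
  pointers=$(
    find "$dir" -name .git -prune -o -type f -size -2k -print |
      while IFS= read -r f; do
        # Every Git LFS pointer file starts with this spec line.
        if head -n 1 "$f" | grep -qxF 'version https://git-lfs.github.com/spec/v1'; then
          printf '%s\n' "$f"
        fi
      done
  )
  if [ -n "$pointers" ]; then
    printf '%s\n' "$pointers"
    return 1
  fi
  return 0
}
```

For example, from inside the model directory, `find_lfs_pointers . || echo "Some files are still LFS pointers; run git lfs pull again."` prints nothing and succeeds when all files were downloaded.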

```shell
# Return to the parent directory of the model directory.
cd ..

# Create a directory in the bucket.
ossutil mkdir oss://<your-bucket-name>/models/DeepSeek-R1-Distill-Qwen-7B

# Upload the model files.
ossutil cp -r ./DeepSeek-R1-Distill-Qwen-7B oss://<your-bucket-name>/models/DeepSeek-R1-Distill-Qwen-7B
```

3. Configure a persistent volume (PV) named llm-model and a persistent volume claim (PVC) named llm-model in the cluster where you want to deploy the inference service. For more information, see Mount a statically provisioned OSS volume.

• The following table describes the basic parameters that are used to create the PV.

| Parameter | Description |
|---|---|
| PV Type | OSS |
| Volume Name | llm-model |
| Access Certificate | The AccessKey pair used to access the OSS bucket. The AccessKey pair consists of an AccessKey ID and an AccessKey secret. |
| Bucket ID | Select the OSS bucket to which you uploaded the model in the previous step. |
| OSS Path | Select the path of the model, such as /models/DeepSeek-R1-Distill-Qwen-7B. |

• The following table describes the basic parameters that are used to create the PVC.

| Parameter | Description |
|---|---|
| PVC Type | OSS |
| Name | llm-model |
| Allocation Mode | Select Existing Volumes. |
| Existing Volumes | Click Existing Volumes and select the PV that you created. |
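If you prefer kubectl to the console, the following sketch writes an equivalent set of manifests for a statically provisioned OSS volume. It assumes the OSS CSI plug-in of ACK (driver `ossplugin.csi.alibabacloud.com`) with the AccessKey pair stored in a Secret. The Secret name `oss-secret`, the file name `llm-model.yaml`, the 30Gi capacity, and the `<...>` placeholders are assumptions to replace with your own values; check the field names against the Mount a statically provisioned OSS volume topic for your CSI version.

```shell
# Sketch: write Secret, PV, and PVC manifests for a statically provisioned OSS volume.
# Replace every <...> placeholder before applying. Names and sizes are assumptions.
cat > llm-model.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: oss-secret
  namespace: default
stringData:
  akId: <your-AccessKey-ID>
  akSecret: <your-AccessKey-secret>
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: llm-model
  labels:
    alicloud-pvname: llm-model
spec:
  capacity:
    storage: 30Gi
  accessModes:
    - ReadOnlyMany
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: ossplugin.csi.alibabacloud.com
    volumeHandle: llm-model
    nodePublishSecretRef:
      name: oss-secret
      namespace: default
    volumeAttributes:
      bucket: <your-bucket-name>
      url: <your-bucket-endpoint>   # for example, the internal endpoint of the bucket's region
      path: /models/DeepSeek-R1-Distill-Qwen-7B
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: llm-model
  namespace: default
spec:
  storageClassName: ""   # empty class: bind only to the static PV above
  accessModes:
    - ReadOnlyMany
  resources:
    requests:
      storage: 30Gi
  selector:
    matchLabels:
      alicloud-pvname: llm-model
EOF
```

After filling in the placeholders, `kubectl apply -f llm-model.yaml` creates the resources, and `kubectl get pvc llm-model` should report the claim as Bound.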

1. Run the following command to start an inference service named deepseek.

```shell
arena serve kserve \
    --name=deepseek \
    --image=kube-ai-registry.cn-shanghai.cr.aliyuncs.com/kube-ai/vllm:v0.6.6 \
    --gpus=1 \
    --cpu=4 \
    --memory=12Gi \
    --data=llm-model:/model/DeepSeek-R1-Distill-Qwen-7B \
    "vllm serve /model/DeepSeek-R1-Distill-Qwen-7B --port 8080 --trust-remote-code --served-model-name deepseek-r1 --max-model-len 32768 --gpu-memory-utilization 0.95 --enforce-eager"
```

The following table describes the parameters.

| Parameter | Required | Description |
|---|---|---|
| --name | Yes | The name of the inference service. The name must be globally unique. |
| --image | Yes | The address of the container image used by the inference service. |
| --gpus | No | The number of GPUs used by the inference service. Default value: 0. |
| --cpu | No | The number of CPU cores used by the inference service. |
| --memory | No | The amount of memory requested by the inference service. |
| --data | No | The volume to mount, in the format pvc-name:mount-path. In this example, the llm-model PVC, which contains the model files, is mounted to the /model/DeepSeek-R1-Distill-Qwen-7B directory in the pod. |
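The `--max-model-len 32768` and `--gpu-memory-utilization 0.95` options in the vLLM command decide how much of the A10's memory is left for the KV cache. As a rough estimate, the KV cache per token is 2 (key and value) × layers × KV heads × head dimension × bytes per element. The sketch below assumes the Qwen2.5 7B architecture on which this distilled model is based (28 layers, 4 KV heads, head dimension 128) and 16-bit cache entries; verify these values against `num_hidden_layers`, `num_key_value_heads`, `hidden_size`, and `num_attention_heads` in the model's `config.json`.

```shell
# Sketch: estimate the KV cache size of one full-length sequence in vLLM.
# Assumed architecture values (check config.json of your model):
layers=28          # num_hidden_layers
kv_heads=4         # num_key_value_heads (grouped-query attention)
head_dim=128       # hidden_size / num_attention_heads = 3584 / 28
dtype_bytes=2      # FP16/BF16 KV cache entries
max_model_len=32768

# Keys and values are both cached, hence the leading factor of 2.
kv_bytes_per_token=$((2 * layers * kv_heads * head_dim * dtype_bytes))
kv_mib_per_seq=$((kv_bytes_per_token * max_model_len / 1024 / 1024))

echo "KV cache per token: ${kv_bytes_per_token} bytes"
echo "KV cache for one ${max_model_len}-token sequence: ${kv_mib_per_seq} MiB"
```

Under these assumptions, one full 32768-token sequence needs 1792 MiB (1.75 GiB) of KV cache, which is small compared with the model weights, so a 24 GB A10 at 0.95 utilization can hold the weights plus the cache for a few full-length sequences.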

Expected output:

```
inferenceservice.serving.kserve.io/deepseek created
INFO[0003] The Job deepseek has been submitted successfully
INFO[0003] You can run `arena serve get deepseek --type kserve -n default` to check the job status
```

1. Run the following command to view the deployment progress of the inference service deployed by using KServe.

```shell
arena serve get deepseek
```

Expected output:

```
Name:       deepseek
Namespace:  default
Type:       KServe
Version:    1
Desired:    1
Available:  1
Age:        3m
Address:    http://deepseek-default.example.com
Port:       :80
GPU:        1

Instances:
  NAME                                 STATUS   AGE  READY  RESTARTS  GPU  NODE
  ----                                 ------   ---  -----  --------  ---  ----
  deepseek-predictor-7cd4d568fd-fznfg  Running  3m   1/1    0         1    cn-beijing.172.16.1.77
```

The preceding output indicates that the inference service has been deployed by using KServe and that the model can be accessed at http://deepseek-default.example.com.
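The address above uses the placeholder domain example.com, which is generally not resolvable through DNS, so requests are sent to the IP address of the Ingress controller with the service's host name in the `Host` header. The host name is simply the service URL with the scheme and any path removed. The following sketch does the same job as the `cut -d "/" -f 3` pipeline in the next step using only shell parameter expansion; the function name `host_from_url` is hypothetical.

```shell
# host_from_url URL: print the host[:port] part of URL, as used in the Host header.
host_from_url() {
  local url=$1
  url=${url#*://}   # remove the scheme, such as "http://"
  url=${url%%/*}    # remove the path, if any
  printf '%s\n' "$url"
}

host_from_url "http://deepseek-default.example.com"
```

Called with the address from the preceding output, it prints deepseek-default.example.com.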

2. Run the following commands to obtain the IP address of the NGINX Ingress controller and use it to access the inference service.

```shell
# Obtain the IP address of the NGINX Ingress controller.
NGINX_INGRESS_IP=$(kubectl -n kube-system get svc nginx-ingress-lb -ojsonpath='{.status.loadBalancer.ingress[0].ip}')

# Obtain the hostname of the inference service.
SERVICE_HOSTNAME=$(kubectl get inferenceservice deepseek -o jsonpath='{.status.url}' | cut -d "/" -f 3)

# Send a request to the inference service.
curl -H "Host: $SERVICE_HOSTNAME" -H "Content-Type: application/json" \
    "http://$NGINX_INGRESS_IP:80/v1/chat/completions" \
    -d '{"model": "deepseek-r1", "messages": [{"role": "user", "content": "Say this is a test!"}], "max_tokens": 512, "temperature": 0.7, "top_p": 0.9, "seed": 10}'
```

Expected output:

```json
{"id":"chatcmpl-0fe3044126252c994d470e84807d4a0a","object":"chat.completion","created":1738828016,"model":"deepseek-r1","choices":[{"index":0,"message":{"role":"assistant","content":"<think>\n\n</think>\n\nIt seems like you're testing or sharing some information. How can I assist you further? If you have any questions or need help with something, feel free to ask!","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":null}],"usage":{"prompt_tokens":9,"total_tokens":48,"completion_tokens":39,"prompt_tokens_details":null},"prompt_logprobs":null}
```

In a production environment, the observability of LLM inference services is crucial for detecting and locating faults. The vLLM framework provides many LLM inference metrics; for the full list, see the vLLM Metrics documentation. KServe also provides metrics that help you monitor the performance and health of model services. Alibaba Cloud has integrated these metrics for LLM inference services into Arena. To enable them, add the --enable-prometheus=true parameter when you submit the inference service:

arena serve kserve \

--name=deepseek \

--image=kube-ai-registry.cn-shanghai.cr.aliyuncs.com/kube-ai/vllm:v0.6.6 \

--gpus=1 \

--cpu=4 \

--memory=12Gi \

--enable-prometheus=true \

--data=llm-model:/model/DeepSeek-R1-Distill-Qwen-7B \

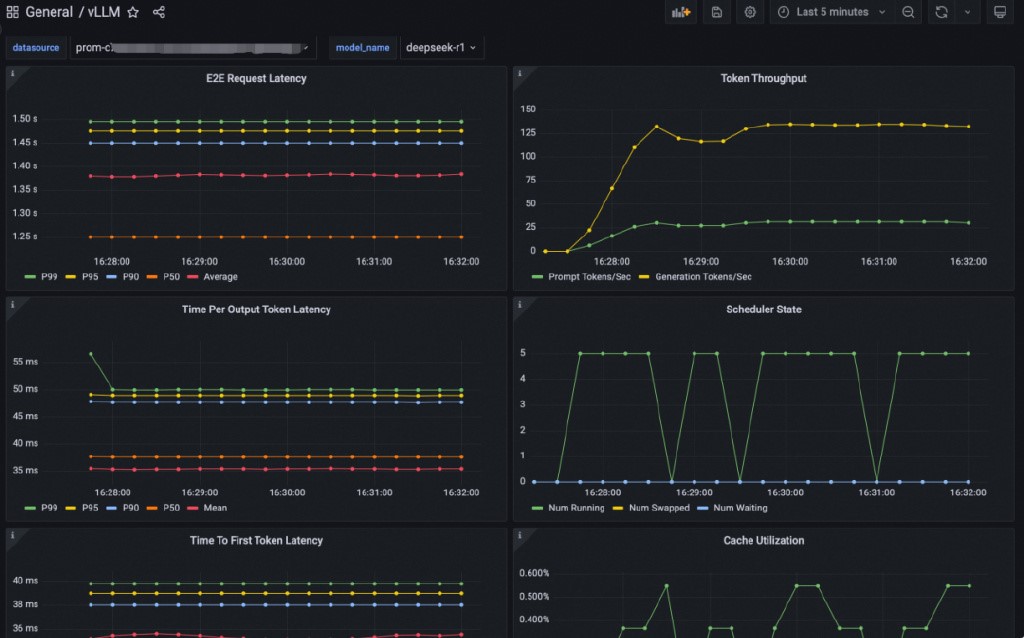

"vllm serve /model/DeepSeek-R1-Distill-Qwen-7B --port 8080 --trust-remote-code --served-model-name deepseek-r1 --max-model-len 32768 --gpu-memory-utilization 0.95 --enforce-eager"The following figure shows the configured Grafana dashboard.
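vLLM exposes its metrics in Prometheus text format on the `/metrics` path of its HTTP port (8080 in this deployment). When you only need to check one value without Grafana, for example while debugging a single pod, a small filter such as the following sketch is enough. The function name `vllm_metric` is hypothetical; `vllm:num_requests_running` and `vllm:num_requests_waiting` are metric names exported by vLLM, but check the Metrics documentation for the names in your vLLM version.

```shell
# vllm_metric NAME: read Prometheus text-format metrics on stdin and print the
# value of every sample whose metric name is exactly NAME (labels are ignored).
vllm_metric() {
  awk -v name="$1" '
    /^#/ { next }                                       # skip HELP and TYPE lines
    {
      metric = $0
      sub(/[{ ].*/, "", metric)                         # the name ends at "{" or a space
      if (metric != name) next
      rest = $0
      if (index(rest, "}") > 0) sub(/.*[}]/, "", rest)  # drop the name and labels
      else sub(/^[^ ]+/, "", rest)                      # drop a bare name
      split(rest, field, " ")
      print field[1]                                    # the value; any timestamp is ignored
    }'
}
```

For example, after `kubectl port-forward deployment/deepseek-predictor 8080:8080` (the Deployment name is inferred from the pod name shown earlier), `curl -s http://localhost:8080/metrics | vllm_metric vllm:num_requests_waiting` prints the number of queued requests.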

An inference service deployed by using KServe must handle loads that fluctuate dynamically. KServe integrates the Kubernetes-native Horizontal Pod Autoscaler (HPA) and its scaling controller to automatically scale the pods of a model based on CPU utilization, memory usage, GPU utilization, and custom performance metrics. This helps ensure the performance and stability of the service. The following command deploys the service with a scaling policy based on GPU utilization:

```shell
arena serve kserve \
    --name=deepseek \
    --image=kube-ai-registry.cn-shanghai.cr.aliyuncs.com/kube-ai/vllm:v0.6.6 \
    --gpus=1 \
    --cpu=4 \
    --memory=12Gi \
    --scale-metric=DCGM_CUSTOM_PROCESS_SM_UTIL \
    --scale-target=80 \
    --min-replicas=1 \
    --max-replicas=2 \
    --data=llm-model:/model/DeepSeek-R1-Distill-Qwen-7B \
    "vllm serve /model/DeepSeek-R1-Distill-Qwen-7B --port 8080 --trust-remote-code --served-model-name deepseek-r1 --max-model-len 32768 --gpu-memory-utilization 0.95 --enforce-eager"
```

The preceding example configures an auto scaling policy based on the GPU utilization of pods. --scale-metric sets the scaling metric to DCGM_CUSTOM_PROCESS_SM_UTIL, and --scale-target sets the threshold: when GPU utilization exceeds 80%, a scale-out is triggered. --min-replicas and --max-replicas set the minimum and maximum numbers of replicas. You can also configure other metrics based on your business requirements. For more information, see Configure an auto scaling policy for a service by using KServe.
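HPA derives the desired number of replicas from the ratio between the current metric value and the target. The following sketch reproduces the documented Kubernetes HPA rule, desiredReplicas = ceil(currentReplicas × currentMetricValue / targetValue), together with the default 10% tolerance and the --min-replicas and --max-replicas bounds, so you can predict how the 80% target above behaves. It is a simplification: the real controller also applies stabilization windows (by default, 5 minutes for scale-down) and handles missing or unready pods. The function name `desired_replicas` is hypothetical.

```shell
# desired_replicas CURRENT METRIC TARGET MIN MAX
# METRIC (average per pod) and TARGET are integer percentages.
# Sketch of the HPA rule: desired = ceil(CURRENT * METRIC / TARGET), clamped to [MIN, MAX].
desired_replicas() {
  local current=$1 metric=$2 target=$3 min=$4 max=$5
  local desired
  if [ $((100 * metric)) -ge $((90 * target)) ] &&
     [ $((100 * metric)) -le $((110 * target)) ]; then
    # |METRIC/TARGET - 1| <= 0.1: within the default tolerance, keep the current count.
    desired=$current
  else
    # Integer ceiling of CURRENT * METRIC / TARGET.
    desired=$(( (current * metric + target - 1) / target ))
  fi
  # Clamp to the --min-replicas / --max-replicas bounds.
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  printf '%s\n' "$desired"
}
```

For example, with one replica at 95% average SM utilization, `desired_replicas 1 95 80 1 2` prints 2, while at 85% the count stays at 1 because 85/80 is within the tolerance.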

As AI applications evolve, the files used by their models keep growing. Pulling these large files from storage services such as Object Storage Service (OSS) and Apsara File Storage NAS (NAS) can cause long latency and slow cold starts. You can use Fluid to accelerate model file pulling and improve the performance of inference services, especially those deployed by using KServe. Experimental results show that when the DeepSeek-R1-Distill-Qwen-7B model is loaded on an A10 GPU, using Fluid reduces the time for the LLM inference service to become ready by 50.9% compared with pulling the model from OSS over the internal network.

Application releases publish service updates to the production environment. Canary releases help keep services stable and reduce the risks of service updates. ACK supports various canary release policies; for example, you can route traffic by ratio or based on request headers. For more information, see Implement a canary release for an inference service based on the NGINX Ingress controller.
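With the NGINX Ingress controller, a traffic-ratio canary is expressed as a second Ingress for the same host that carries canary annotations and is applied on top of the primary Ingress for that host and path. The following sketch writes such an Ingress. The `nginx.ingress.kubernetes.io/canary*` annotations are standard NGINX Ingress annotations, but the Service name `deepseek-canary-predictor`, the `nginx` Ingress class, and port 80 are assumptions for a hypothetical second inference service named deepseek-canary; follow the ACK topic above for the exact setup.

```shell
# Sketch: a canary Ingress that sends 20% of the requests for the service host
# to a hypothetical second inference service. Names and port are assumptions.
cat > deepseek-canary-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: deepseek-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    # Route 20% of the traffic to the canary backend.
    nginx.ingress.kubernetes.io/canary-weight: "20"
    # Alternatively, route by header: requests with "canary: always" go to the canary.
    # nginx.ingress.kubernetes.io/canary-by-header: "canary"
spec:
  ingressClassName: nginx
  rules:
    - host: deepseek-default.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: deepseek-canary-predictor   # hypothetical Service of the new version
                port:
                  number: 80
EOF
```

Raising the canary-weight value step by step, and finally switching the primary Ingress to the new version, completes the rollout.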

The DeepSeek-R1-Distill-Qwen-7B model requires only about 14 GB of GPU memory. On a higher-specification GPU, such as an A100, you can use GPU partitioning together with the GPU sharing feature of ACK to deploy multiple inference services on the same GPU and improve GPU utilization. For more information, see Deploy inference services that share a GPU.
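The 14 GB figure follows from simple arithmetic: weight memory ≈ parameter count × bytes per parameter. The sketch below assumes about 7.6 billion parameters (the approximate size of the Qwen2.5 7B architecture that this model is based on) stored in BF16, that is, 2 bytes each; the helper name `weight_memory` is hypothetical. The KV cache and runtime overhead come on top of the weights.

```shell
# weight_memory PARAMS BYTES_PER_PARAM: print the approximate weight memory
# in GiB (1024^3 bytes) and GB (10^9 bytes).
weight_memory() {
  awk -v p="$1" -v b="$2" \
    'BEGIN { printf "%.1f GiB (%.1f GB)\n", p * b / 1073741824, p * b / 1e9 }'
}

# Assumption: about 7.6 billion parameters in BF16.
weight_memory 7600000000 2
```

This prints 14.2 GiB (15.2 GB). Note that vLLM pre-allocates the fraction of GPU memory given by --gpu-memory-utilization (0.95 in the commands in this article), so when several services share one GPU, each instance needs a lower value so that all instances fit on the GPU together.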

DeepSeek-R1 outperforms many closed-source models in mathematical reasoning and programming competitions, and even matches or surpasses the OpenAI-o1 series on certain tasks. Since its release, many users have tried it. This article describes how to use KServe to deploy a DeepSeek distilled model as an inference service in a Container Service for Kubernetes (ACK) cluster for production use. The service provides basic capabilities such as model deployment, observability, canary release, and auto scaling. ACK also provides advanced capabilities such as Fluid model acceleration and GPU sharing among inference services. You are welcome to try it.
