Jianyu Wang (Koordinator approver), Rougang Han (Koordinator approver), Ziqiu Zhu (Koordinator approver), and Zhe Zhu (Koordinator contributor)

As artificial intelligence continues to evolve, the scale and complexity of AI model training are growing exponentially. Large language models (LLMs) and distributed AI training scenarios place unprecedented demands on cluster resource scheduling. Efficient inter-pod communication, intelligent resource preemption, and unified heterogeneous device management have become critical challenges that production environments must address.

Since its official open-source release in April 2022, Koordinator has iterated through 15 major versions, consistently delivering comprehensive solutions for workload orchestration, resource scheduling, isolation, and performance optimization. The Koordinator community is grateful for the contributions from outstanding engineers at Alibaba, Ant Technology Group, Intel, XiaoHongShu, iQiyi, YouZan, and other organizations, who have provided invaluable ideas, code, and real-world scenarios.

Today, we are excited to announce the release of Koordinator v1.7.0. This version introduces groundbreaking capabilities tailored for large-scale AI training scenarios, including Network-Topology Aware Scheduling and Job-Level Preemption. Additionally, v1.7.0 enhances heterogeneous device scheduling with support for Ascend NPU and Cambricon MLU, providing end-to-end device management solutions. The release also includes comprehensive API Reference Documentation and a complete Developer Guide to improve the developer experience.

In the v1.7.0 release, 14 new developers actively contributed to the Koordinator community: @ditingdapeng, @Rouzip, @ClanEver, @zheng-weihao, @cntigers, @LennonChin, @ZhuZhezz, @dabaooline, @bobsongplus, @yccharles, @qingyuanz, @yyrdl, @hwenwur, and @hkttty2009. We sincerely thank all community members for their active participation and ongoing support!

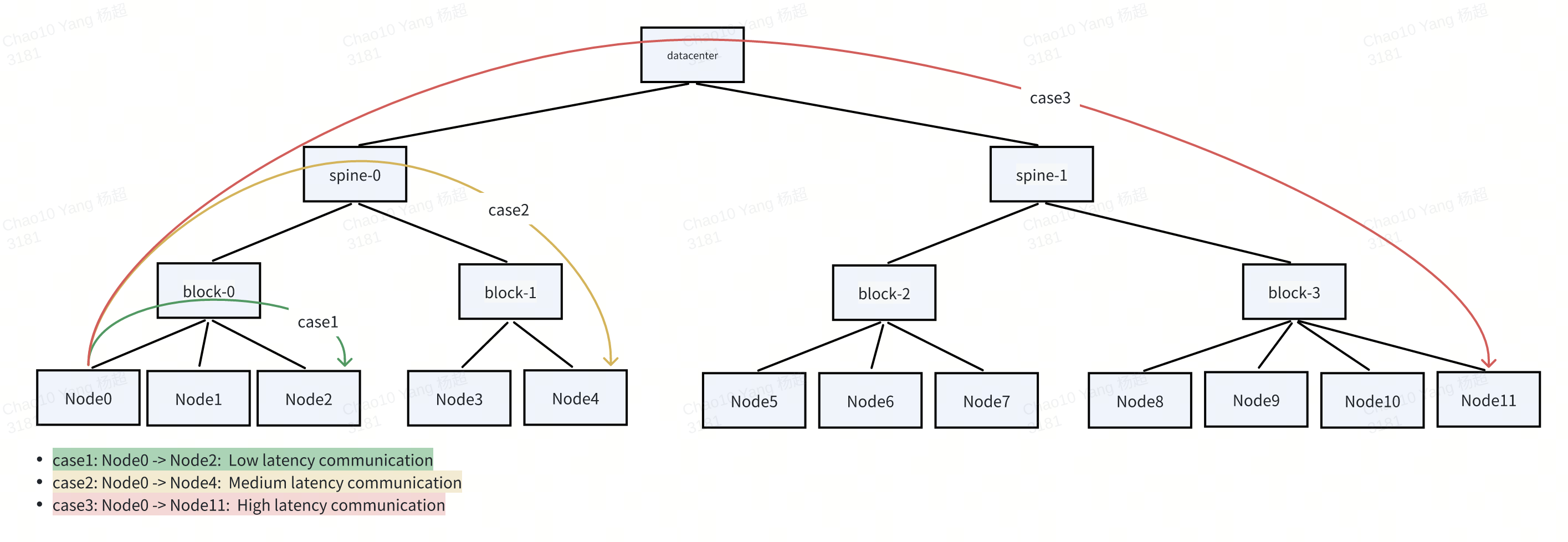

In large-scale AI training scenarios, especially for large language models (LLMs), efficient inter-pod communication is critical to training performance. Model parallelism techniques such as Tensor Parallelism (TP), Pipeline Parallelism (PP), and Data Parallelism (DP) require frequent and high-bandwidth data exchange across GPUs—often spanning multiple nodes. Under such workloads, network topology becomes a key performance bottleneck, where communication latency and bandwidth are heavily influenced by the physical network hierarchy (e.g., NVLink, block, spine).

To optimize training efficiency, Koordinator v1.7.0 provides a Network-Topology Aware Scheduling capability, which ensures:

.status.nominatedNode field to ensure consistent placement.

Administrators first label nodes with their network topology positions using tools like NVIDIA's topograph:

```yaml
apiVersion: v1
kind: Node
metadata:
  name: node-0
  labels:
    network.topology.nvidia.com/block: b1
    network.topology.nvidia.com/spine: s1
```

Then define the topology hierarchy via a ClusterNetworkTopology CR:

```yaml
apiVersion: scheduling.koordinator.sh/v1alpha1
kind: ClusterNetworkTopology
metadata:
  name: default
spec:
  networkTopologySpec:
  - labelKey:
    - network.topology.nvidia.com/spine
    topologyLayer: SpineLayer
  - labelKey:
    - network.topology.nvidia.com/block
    parentTopologyLayer: SpineLayer
    topologyLayer: BlockLayer
  - parentTopologyLayer: BlockLayer
    topologyLayer: NodeTopologyLayer
```

To leverage network topology awareness, create a PodGroup and annotate it with topology requirements:

```yaml
apiVersion: scheduling.sigs.k8s.io/v1alpha1
kind: PodGroup
metadata:
  name: training-job
  namespace: default
  annotations:
    gang.scheduling.koordinator.sh/network-topology-spec: |
      {
        "gatherStrategy": [
          {
            "layer": "BlockLayer",
            "strategy": "PreferGather"
          }
        ]
      }
spec:
  minMember: 8
  scheduleTimeoutSeconds: 300
```

When scheduling pods belonging to this PodGroup, the scheduler will attempt to place all member pods within the same BlockLayer topology domain to minimize inter-node communication latency.
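To make the gather behavior more concrete, here is a minimal Python sketch of domain selection for a gang. It is not Koordinator's scheduler code and rests on simplifying assumptions: each node carries its block and spine label values plus a hypothetical free_slots count of member pods it can still host, the layer order is taken from the ClusterNetworkTopology example, and PreferGather is modeled as "try the requested layer, then wider layers, then the whole cluster". The names Node, pick_domain, and free_slots are illustrative only.

```python
import json
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    block: str       # value of network.topology.nvidia.com/block
    spine: str       # value of network.topology.nvidia.com/spine
    free_slots: int  # member pods this node can still host (simplified)


# Narrowest-to-widest layers, following the ClusterNetworkTopology example.
LAYER_ORDER = ["BlockLayer", "SpineLayer"]


def domain_of(node, layer):
    return node.block if layer == "BlockLayer" else node.spine


def pick_domain(nodes, min_member, spec_json):
    """Choose a topology domain for a gang of min_member pods.

    PreferGather is modeled as: try the requested layer, then wider
    layers, then the whole cluster. Returns (layer, domain, node names),
    or None if the cluster cannot hold the gang at all.
    """
    preferred = json.loads(spec_json)["gatherStrategy"][0]["layer"]
    for layer in LAYER_ORDER[LAYER_ORDER.index(preferred):]:
        domains = {}
        for node in nodes:
            domains.setdefault(domain_of(node, layer), []).append(node)
        fitting = []
        for name, members in domains.items():
            capacity = sum(n.free_slots for n in members)
            if capacity >= min_member:
                fitting.append((capacity, name, members))
        if fitting:
            # Assumed tie-break: the tightest fitting domain wins, which
            # keeps larger domains free for other jobs.
            _, name, members = min(fitting, key=lambda t: (t[0], t[1]))
            return layer, name, [n.name for n in members]
    if sum(n.free_slots for n in nodes) >= min_member:
        return "Cluster", "*", [n.name for n in nodes]
    return None


if __name__ == "__main__":
    spec = '{"gatherStrategy": [{"layer": "BlockLayer", "strategy": "PreferGather"}]}'
    cluster = [
        Node("node-0", "b1", "s1", 4),
        Node("node-1", "b1", "s1", 2),
        Node("node-2", "b2", "s1", 4),
        Node("node-3", "b2", "s1", 4),
    ]
    print(pick_domain(cluster, 8, spec))  # -> ('BlockLayer', 'b2', ['node-2', 'node-3'])
```

With minMember: 8, block b1 (6 free slots) is too small but block b2 (8 slots) fits, so the whole gang stays inside one block. Raising the gang size to 10 makes every block too small, and the sketch widens to spine s1 instead of failing, which is what "prefer" rather than "require" implies here.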

For more information about Network-Topology Aware Scheduling, please see Network Topology Aware Scheduling.

In large-scale cluster environments, high-priority jobs (e.g., critical AI training tasks) often need to preempt resources from lower-priority workloads when sufficient resources are not available. However, traditional pod-level preemption in Kubernetes cannot guarantee that all member pods of a distributed job will seize resources together, leading to invalid preemption and resource waste.

To solve this, Koordinator v1.7.0 provides Job-Level Preemption, which ensures that:

nominatedNode for all members to maintain scheduling consistency.

The job-level preemption workflow consists of the following steps:

1. Unschedulable Pod Detection: When a pod cannot be scheduled, it enters the PostFilter phase.

2. Job Identification: The scheduler checks if the pod belongs to a PodGroup/GangGroup and fetches all member pods.

3. Preemption Eligibility Check: Verifies that the pod's spec.preemptionPolicy is not Never and that no terminating victims exist on the currently nominated nodes.

4. Candidate Node Selection: Finds nodes where preemption may help by simulating the removal of potential victims (lower-priority pods).

5. Job-Aware Cost Model: Selects the optimal node and minimal-cost victim set based on a job-aware cost model.

6. Preemption Execution: Deletes victims and sets status.nominatedNode for all member pods.
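The workflow above can be sketched in Python to show its key property: victims are only chosen once every member of the job has a place, so a job that cannot fully fit evicts nothing. This is a deliberately simplified model, not Koordinator's implementation. Resources are reduced to whole CPU cores and GiB, victims on each node are taken lowest-priority-first, members are placed greedily one at a time, and the job-aware cost is assumed to be the total priority of evicted pods followed by the victim count. The terminating-victim check from step 3 is omitted. Pod, Node, cheapest_victims, and preempt_for_job are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    priority: int
    cpu: int      # cores (simplified)
    mem_gi: int   # GiB (simplified)
    preemption_policy: str = "PreemptLowerPriority"


@dataclass
class Node:
    name: str
    cpu: int
    mem_gi: int
    pods: list = field(default_factory=list)


def room(node, removed, reserved):
    """Free (cpu, mem) on node after evicting `removed` pods and
    subtracting resources already reserved for earlier job members."""
    kept = [p for p in node.pods if p.name not in removed]
    rc, rm = reserved.get(node.name, (0, 0))
    return (node.cpu - sum(p.cpu for p in kept) - rc,
            node.mem_gi - sum(p.mem_gi for p in kept) - rm)


def cheapest_victims(node, member, removed, reserved):
    """Simulate evicting lower-priority pods, lowest priority first, until
    member fits. Returns the victims ([] if it already fits) or None."""
    candidates = sorted((p for p in node.pods
                         if p.priority < member.priority and p.name not in removed),
                        key=lambda p: p.priority)
    chosen = []
    for victim in [None] + candidates:
        if victim is not None:
            chosen.append(victim)
        cpu, mem = room(node, removed | {v.name for v in chosen}, reserved)
        if cpu >= member.cpu and mem >= member.mem_gi:
            return chosen
    return None


def preempt_for_job(members, nodes):
    """All-or-nothing job-level preemption sketch.

    Returns (nominations, victims), or None if any member cannot be
    placed, in which case nothing is evicted."""
    # Eligibility check (step 3): a preemptionPolicy of Never opts out.
    if any(m.preemption_policy == "Never" for m in members):
        return None
    removed, reserved, nominations = set(), {}, {}
    for member in members:
        best = None
        for node in nodes:
            victims = cheapest_victims(node, member, removed, reserved)
            if victims is None:
                continue
            # Assumed job-aware cost: total victim priority, then victim count.
            cost = (sum(v.priority for v in victims), len(victims))
            if best is None or cost < best[0]:
                best = (cost, node, victims)
        if best is None:
            return None  # one member cannot fit, so the whole job is abandoned
        _, node, victims = best
        removed |= {v.name for v in victims}
        rc, rm = reserved.get(node.name, (0, 0))
        reserved[node.name] = (rc + member.cpu, rm + member.mem_gi)
        nominations[member.name] = node.name
    return nominations, sorted(removed)


if __name__ == "__main__":
    job = [Pod("hp-worker-1", 1000000, 3, 4), Pod("hp-worker-2", 1000000, 3, 4)]
    cluster = [Node("node-a", 4, 8, [Pod("lp-a", 1000, 2, 2)]),
               Node("node-b", 4, 8, [Pod("lp-b", 1000, 2, 2)])]
    print(preempt_for_job(job, cluster))
    # -> ({'hp-worker-1': 'node-a', 'hp-worker-2': 'node-b'}, ['lp-a', 'lp-b'])
```

In the usage example, each 3-core member needs one low-priority pod evicted, and the two members land on different nodes. If lp-b instead had a higher priority than the job, the second member could not fit anywhere and the function would return None, so lp-a would not be evicted either. This is the difference from pod-level preemption, where the first member's preemption would already have happened.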

Define priority classes for preemptors and victims:

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1000000
preemptionPolicy: PreemptLowerPriority
description: "Used for critical AI training jobs that can preempt others."
---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: low-priority
value: 1000
preemptionPolicy: PreemptLowerPriority
description: "Used for non-critical jobs that can be preempted."
```

Create a high-priority gang job:

```yaml
apiVersion: scheduling.sigs.k8s.io/v1alpha1
kind: PodGroup
metadata:
  name: hp-training-job
  namespace: default
spec:
  minMember: 2
  scheduleTimeoutSeconds: 300
---
apiVersion: v1
kind: Pod
metadata:
  name: hp-worker-1
  namespace: default
  labels:
    pod-group.scheduling.sigs.k8s.io: hp-training-job
spec:
  schedulerName: koord-scheduler
  priorityClassName: high-priority
  preemptionPolicy: PreemptLowerPriority
  containers:
  - name: worker
    image: registry.k8s.io/pause:3.9  # placeholder image (assumption); Pods require an image
    resources:
      limits:
        cpu: 3
        memory: 4Gi
      requests:
        cpu: 3
        memory: 4Gi
# A second member pod (for example, hp-worker-2) with the same spec
# is needed to satisfy minMember: 2.
```

When the high-priority job cannot be scheduled, the scheduler will preempt low-priority pods across multiple nodes to make room for all member pods of the job.

For more information about Job-Level Preemption, please see Job Level Preemption.

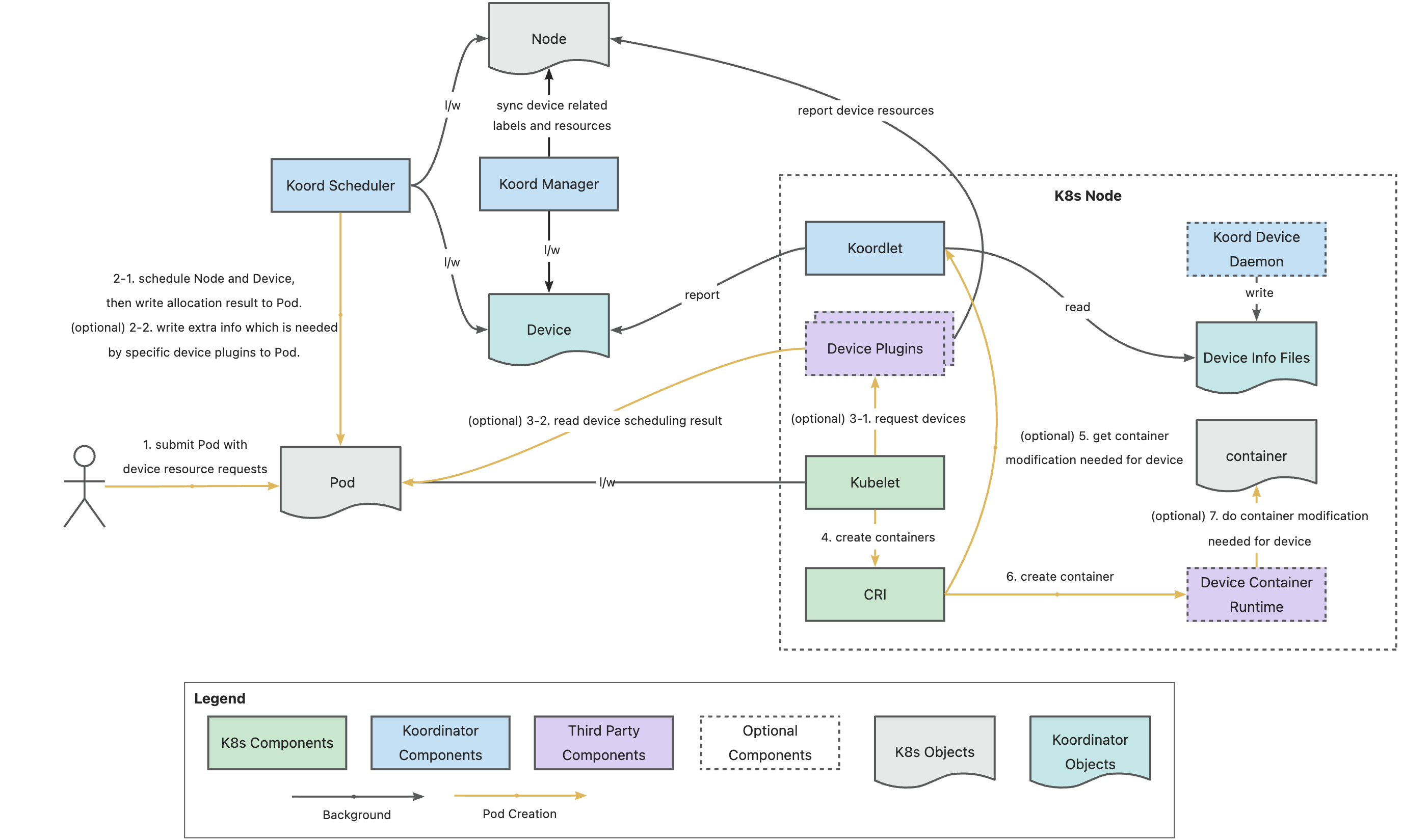

Building on the strong foundation of GPU scheduling in v1.6, Koordinator v1.7.0 extends heterogeneous device scheduling to support Ascend NPU and Cambricon MLU, providing unified device management and scheduling capabilities across multiple vendors.

Koordinator v1.7.0 supports both Ascend virtualization templates and full cards through the koord-device-daemon and koordlet components. Key features include:

Example Device CR for Ascend NPU:

```yaml
apiVersion: scheduling.koordinator.sh/v1alpha1
kind: Device
metadata:
  labels:
    node.koordinator.sh/gpu-model: Ascend-910B3
  annotations:
    scheduling.koordinator.sh/gpu-partitions: |
      {
        "4": [
          {
            "minors": [0,1,2,3],
            "gpuLinkType": "HCCS",
            "allocationScore": "1"
          }
        ]
      }
  name: node-1
spec:
  devices:
  - health: true
    id: GPU-fd971b33-4891-fd2e-ed42-ce6adf324615
    minor: 0
    resources:
      koordinator.sh/gpu-memory: 64Gi
      koordinator.sh/gpu-memory-ratio: "100"
      # Ascend-related resources
      # ...
    topology:
      busID: 0000:3b:00.0
      nodeID: 0
      pcieID: pci0000:3a
    type: gpu
```

Koordinator v1.7.0 supports Cambricon MLU cards in both full-card and virtualization (dynamic-smlu) modes. Key features include:

koordinator.sh/gpu-* resources for consistent scheduling.

Example Pod requesting a Cambricon virtual card:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-cambricon-partial
  namespace: default
spec:
  schedulerName: koord-scheduler
  containers:
  - name: demo-sleep
    image: ubuntu:18.04
    resources:
      limits:
        koordinator.sh/gpu.shared: "1"
        koordinator.sh/gpu-memory: "1Gi"
        koordinator.sh/gpu-core: "10"
        cambricon.com/mlu.smlu.vcore: "10"
        cambricon.com/mlu.smlu.vmemory: "4"
      requests:
        koordinator.sh/gpu.shared: "1"
        koordinator.sh/gpu-memory: "1Gi"
        koordinator.sh/gpu-core: "10"
        cambricon.com/mlu.smlu.vcore: "10"
        cambricon.com/mlu.smlu.vmemory: "4"
```

For more information, please see Device Scheduling - Ascend NPU and Device Scheduling - Cambricon MLU.
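The scheduling.koordinator.sh/gpu-partitions annotation in the Ascend Device CR earlier in this section declares which groups of cards (by minor number) may be allocated together for a given card count, here one HCCS-linked group of four. The Python sketch below shows one way a scheduler could consume such an annotation for a multi-card request. It is an illustration under assumptions, not Koordinator's logic: in particular, preferring the partition with the higher allocationScore is a guess about the field's meaning, and pick_partition and ASCEND_PARTITIONS are hypothetical names.

```python
import json

# Value of scheduling.koordinator.sh/gpu-partitions from the Ascend example.
ASCEND_PARTITIONS = """
{
  "4": [
    {"minors": [0, 1, 2, 3], "gpuLinkType": "HCCS", "allocationScore": "1"}
  ]
}
"""


def pick_partition(annotation, card_count, free_minors):
    """Return the minors of a declared partition for card_count cards
    whose cards are all free, or None if no declared partition fits.

    Assumption: when several partitions fit, the one with the higher
    allocationScore is preferred.
    """
    partitions = json.loads(annotation).get(str(card_count), [])
    free = set(free_minors)
    fitting = [p for p in partitions if set(p["minors"]) <= free]
    if not fitting:
        return None
    best = max(fitting, key=lambda p: int(p["allocationScore"]))
    return best["minors"]


if __name__ == "__main__":
    print(pick_partition(ASCEND_PARTITIONS, 4, range(8)))     # -> [0, 1, 2, 3]
    print(pick_partition(ASCEND_PARTITIONS, 4, [0, 1, 3, 4]))  # -> None (card 2 busy)
```

The point of restricting allocation to declared partitions is that four arbitrary free cards are not equivalent to four cards that share an HCCS link. If one card in the group is busy, the sketch refuses the request rather than handing out a poorly connected set.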

Koordinator v1.7.0 also includes the following key enhancements:

1. GPU Share with HAMi Enhancements:

hami-daemon chart (version 0.1.0) replacing manual DaemonSet deployment for easier management.

HostGPUMemoryUsage, HostCoreUtilization, vGPU_device_memory_usage_in_bytes, vGPU_device_memory_limit_in_bytes, and container-level device metrics.

2. Load-Aware Scheduling Optimization:

dominantResourceWeight for dominant resource fairness, prodUsageIncludeSys for comprehensive prod usage calculation, enableScheduleWhenNodeMetricsExpired for expired metrics handling, estimatedSecondsAfterPodScheduled and estimatedSecondsAfterInitialized for precise resource estimation timing, allowCustomizeEstimation for pod-level estimation customization, and supportedResources for extended resource type support.

3. Enhanced ElasticQuota with Quota Hook Plugin framework:

For a complete list of changes, please see v1.7.0 Release.

To improve the developer experience and facilitate community contributions, Koordinator v1.7.0 introduces comprehensive API Reference Documentation and a complete Developer Guide.

The new API Reference provides detailed documentation for:

Example from the Custom Resource Definitions documentation:

```yaml
apiVersion: scheduling.koordinator.sh/v1alpha1
kind: Device
metadata:
  name: worker01
  labels:
    node.koordinator.sh/gpu-model: NVIDIA-H20
    node.koordinator.sh/gpu-vendor: nvidia
spec:
  devices:
  - health: true
    id: GPU-a43e0de9-28a0-1e87-32f8-f5c4994b3e69
    minor: 0
    resources:
      koordinator.sh/gpu-core: "100"
      koordinator.sh/gpu-memory: 97871Mi
      koordinator.sh/gpu-memory-ratio: "100"
    topology:
      busID: 0000:0e:00.0
      nodeID: 0
      pcieID: pci0000:0b
    type: gpu
```

The Developer Guide provides comprehensive resources for contributors, including:

These resources significantly lower the barrier to entry for new contributors and enable developers to extend Koordinator's capabilities more easily.

For more information, please see API Reference and Developer Guide.
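To make the resource fields in the worker01 Device example above more concrete, the following Python sketch reads koordinator.sh/gpu-core and koordinator.sh/gpu-memory-ratio as percentages of one card (100 meaning the whole card) and koordinator.sh/gpu-memory as an absolute Kubernetes quantity. That reading is our interpretation of the example, not a quote from the API reference. The sketch converts a memory-ratio request into bytes and checks whether a shared request, such as the 10% core / 1Gi memory one from the Cambricon example, still fits on a card. parse_quantity only handles integer values with binary suffixes, and all function names are hypothetical.

```python
import re

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}

# Resources of device minor 0 in the worker01 Device example.
H20_RESOURCES = {
    "koordinator.sh/gpu-core": "100",
    "koordinator.sh/gpu-memory": "97871Mi",
    "koordinator.sh/gpu-memory-ratio": "100",
}


def parse_quantity(q):
    """Parse an integer Kubernetes quantity with an optional binary suffix
    (Ki, Mi, Gi, Ti) into bytes. Decimal suffixes and fractions are not handled."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)?", q)
    if m is None:
        raise ValueError(f"unsupported quantity: {q!r}")
    return int(m.group(1)) * BINARY_SUFFIXES.get(m.group(2), 1)


def ratio_to_bytes(resources, ratio):
    """Bytes of card memory a gpu-memory-ratio request corresponds to,
    assuming the card's full gpu-memory-ratio equals its full gpu-memory."""
    total = parse_quantity(resources["koordinator.sh/gpu-memory"])
    full_ratio = int(resources["koordinator.sh/gpu-memory-ratio"])
    return total * ratio // full_ratio


def fits_shared(resources, allocated_core, allocated_bytes, core, mem_bytes):
    """True if a shared request (gpu-core percent, memory in bytes) fits
    in what remains on the card after existing allocations."""
    core_left = int(resources["koordinator.sh/gpu-core"]) - allocated_core
    mem_left = parse_quantity(resources["koordinator.sh/gpu-memory"]) - allocated_bytes
    return core <= core_left and mem_bytes <= mem_left


if __name__ == "__main__":
    print(ratio_to_bytes(H20_RESOURCES, 50))  # -> 51312590848 (half of 97871Mi)
    print(fits_shared(H20_RESOURCES, 0, 0, 10, parse_quantity("1Gi")))   # -> True
    print(fits_shared(H20_RESOURCES, 95, 0, 10, parse_quantity("1Gi")))  # -> False
```

The last call fails because only 5% of the card's compute is left, even though plenty of memory remains: a shared request has to fit on every dimension it asks for.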

To help users quickly get started with Koordinator's colocation capabilities, v1.7.0 introduces a new best practice guide: Batch Colocation Quick Start. This guide provides step-by-step instructions for:

This guide complements the existing best practices for Spark job colocation, Hadoop YARN colocation, and fine-grained CPU orchestration, providing a comprehensive resource library for production deployments.

For more information, please see Batch Colocation Quick Start.

Koordinator is an open-source community. In v1.7.0, 14 new developers contributed to the Koordinator main repo:

@ditingdapeng made their first contribution in #2353

@Rouzip made their first contribution in #2005

@ClanEver made their first contribution in #2405

@zheng-weihao made their first contribution in #2409

@cntigers made their first contribution in #2434

@LennonChin made their first contribution in #2449

@ZhuZhezz made their first contribution in #2423

@dabaooline made their first contribution in #2483

@bobsongplus made their first contribution in #2524

@yccharles made their first contribution in #2474

@qingyuanz made their first contribution in #2584

@yyrdl made their first contribution in #2597

@hwenwur made their first contribution in #2621

@hkttty2009 made their first contribution in #2641

Thanks to long-standing contributors for their consistent efforts and to newcomers for their active contributions. We welcome more contributors to join the Koordinator community.

In upcoming releases, Koordinator plans the following work:

Task Scheduling: Discuss with upstream developers how to support Coscheduling, and find a more elegant way to solve the following problems:

We encourage user feedback on usage experiences and welcome more developers to participate in the Koordinator project, jointly driving its development!

Since it was open-sourced, Koordinator has shipped more than 15 releases, with 110+ contributors involved. The community continues to grow and improve. We thank all community members for their active participation and valuable feedback. We also want to thank the CNCF and related community members for supporting the project.

We welcome more developers and end users to join us! It is your participation and feedback that keep Koordinator improving. Whether you are a beginner or an expert in the cloud native community, we look forward to hearing your voice!
