By Wang Chen & Ma YunLei

Jiang Linquan, CIO of Alibaba Cloud, has shared that while putting large model technology into practice, he distilled a methodology called RIDE: Reorganize (restructure the organization and its production relations), Identify (identify business pain points and AI opportunities), Define (define metrics and operational systems), and Execute (drive data construction and engineering delivery). Under Execute, he stressed the importance of the evaluation system. The core reason is that the most critical difference in this wave of large models is the absence of standard paradigms for measuring data and evaluation. That gap is both what makes improving product capability difficult and what makes a strong evaluation practice a competitive moat.

In the AI field, people often talk about "taste". Here, "taste" refers to knowing how to design evaluation engineering and how to judge the outputs of agents.

In traditional software development, testing is an indispensable part of the development process. It ensures deterministic inputs and outputs as well as forward compatibility, and it is the foundation of software quality assurance. Test coverage and accuracy (the test pass rate) are the metrics for judging quality, and the pass rate is expected to stay at 100%.

Traditional software is largely deterministic. Given the same inputs, the system will always produce the same outputs. Its failure modes, that is, "bugs," are usually discrete, reproducible, and can be fixed by modifying specific lines of code.

AI applications, in essence, exhibit non-deterministic and probabilistic behavior. They display statistical, context-dependent failure modes and unpredictable emergent behaviors, meaning that for the same inputs, they may produce different outputs.

The traditional QA process, designed for predictable, rule-based systems, cannot adequately handle these data-driven, adaptive systems. Even thorough testing before release cannot guarantee that outputs remain stable once the application is live. Evaluation is therefore no longer just a pre-deployment phase; it becomes evaluation engineering, which combines an observability platform, continuous monitoring, and automated evaluation and governance delivered as a service.

The non-determinism of AI applications stems from their core technical architecture and training methods. Unlike traditional software, AI applications are essentially probabilistic systems. Their core function is to predict the next word (token) in a sequence from statistical patterns learned from massive amounts of data, rather than from true understanding. This inherent randomness is both the source of their creativity and the root of their unreliability, and it leads to "hallucination": the model generates outputs that sound reasonable but are actually incorrect or nonsensical.
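To make this randomness concrete, here is a minimal Python sketch of temperature-based sampling, one common way a model turns next-token scores into output. The vocabulary and logit values are invented for illustration and do not come from any real model.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw next-token scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature, rng):
    """Draw one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Invented scores for continuing "The capital of Australia is ..."
vocab = ["Canberra", "Sydney", "Melbourne"]
logits = [2.0, 1.5, 0.5]

print([round(p, 3) for p in softmax_with_temperature(logits, 1.0)])
rng = random.Random(42)
samples = [sample_next_token(vocab, logits, 1.0, rng) for _ in range(10)]
print(samples)  # repeated draws from the same prompt need not agree
```

At temperature 1.0 the plausible but wrong "Sydney" keeps roughly a third of the probability mass, so it will be sampled on some runs. Lowering the temperature concentrates mass on the top token, but it does not by itself make the model's top choice correct.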

The root causes of hallucination and uncertainty are complex:

Traditional automated evaluation metrics, such as BLEU (Bilingual Evaluation Understudy) for machine translation and ROUGE (Recall-Oriented Understudy for Gisting Evaluation) for text summarization, compute scores mainly from the overlap of words or phrases with a reference. This approach falls fundamentally short for modern generative AI models, whose evaluation must capture subtle differences in semantics, style, tone, and creativity. A generated text may share almost no wording with the reference answer yet be more accurate and insightful. Overlap-based metrics cannot recognize this and may even give such a text a low score.
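The toy Python sketch below illustrates the problem with simplified unigram versions of the overlap idea: clipped precision in the spirit of BLEU and recall in the spirit of ROUGE-1. It is not a full implementation of either metric (real BLEU combines several n-gram orders with a brevity penalty, and ROUGE has several variants), and the example sentences are made up.

```python
from collections import Counter

def unigram_counts(text):
    """Lowercase, split on whitespace, and count words."""
    return Counter(text.lower().split())

def clipped_overlap(candidate, reference):
    """Count shared words, clipping each word's count at its count in the other text."""
    cand, ref = unigram_counts(candidate), unigram_counts(reference)
    return sum(min(count, ref[word]) for word, count in cand.items())

def precision_1(candidate, reference):
    """BLEU-style unigram precision: matched words / candidate length (simplified)."""
    return clipped_overlap(candidate, reference) / max(len(candidate.split()), 1)

def recall_1(candidate, reference):
    """ROUGE-1-style recall: matched words / reference length (simplified)."""
    return clipped_overlap(candidate, reference) / max(len(reference.split()), 1)

reference = "the meeting was postponed because the manager was ill"
paraphrase = "since the boss was sick the session got delayed"        # same meaning, new wording
near_copy = "the meeting was postponed because the manager was away"  # one word changed, different fact

for name, text in [("paraphrase", paraphrase), ("near copy", near_copy)]:
    print(name, round(precision_1(text, reference), 3), round(recall_1(text, reference), 3))
```

The faithful paraphrase scores 3/9 on both measures, while the near copy that states a different reason scores 8/9, the opposite of what a human reader would conclude.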

On the other hand, although human evaluation is regarded as the "gold standard" for evaluation quality, its high cost, long cycles, and inherent subjectivity make it hard to keep pace with the rapid iteration of AI development. This lag in evaluation capability is a serious bottleneck, and it often leaves promising AI projects stuck in the pilot stage, unable to be effectively validated and improved.

Therefore, "fighting magic with magic" has become a new paradigm in evaluation engineering: LLM-as-a-Judge automated evaluation. A powerful large language model (usually a frontier model) acts as a judge, scoring, ranking, or selecting among the outputs of another AI model or application. This approach combines the scalability of automated evaluation with the nuance of human evaluation.
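A minimal LLM-as-a-Judge loop can be sketched in Python as follows. It assumes a hypothetical `call_llm` function that sends a prompt to the judge model and returns its text reply; the rubric, the 1 to 5 scale, and the JSON reply format are illustrative choices, not a standard. A stub judge stands in for the real model so the sketch runs offline.

```python
import json
import re
from typing import Callable

# Illustrative rubric; doubled braces survive str.format() as literal braces.
JUDGE_PROMPT = """You are an impartial judge. Rate the ANSWER to the QUESTION
on a 1-5 scale for factual accuracy and helpfulness.
Reply with JSON only: {{"score": <int 1-5>, "reason": "<one sentence>"}}

QUESTION: {question}
ANSWER: {answer}"""

def judge(question: str, answer: str, call_llm: Callable[[str], str]) -> dict:
    """Ask a judge model to score one answer and parse its verdict.

    `call_llm` is a hypothetical stand-in for whatever client sends a prompt
    to the judge model and returns its raw text reply.
    """
    raw = call_llm(JUDGE_PROMPT.format(question=question, answer=answer))
    # Judges sometimes wrap the JSON in extra prose; extract the {...} span.
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError(f"judge reply contained no JSON: {raw!r}")
    verdict = json.loads(match.group(0))
    score = int(verdict["score"])
    if not 1 <= score <= 5:
        raise ValueError(f"score out of range: {score}")
    return {"score": score, "reason": verdict.get("reason", "")}

# Stub judge used only so the sketch runs offline; replace with a real model call.
def fake_judge(prompt: str) -> str:
    return 'Verdict: {"score": 4, "reason": "Correct but omits the unit."}'

result = judge("How far is the Moon?", "About 384,400.", fake_judge)
print(result)  # {'score': 4, 'reason': 'Correct but omits the unit.'}
```

The rubric wording, the scale, and whether the judge scores one answer at a time or compares two answers side by side all affect reliability, so judge verdicts are worth spot-checking against human labels.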

In RL/RLHF scenarios, reward models (RMs) have become a mainstream automated evaluation tool. Benchmarks built specifically to evaluate reward models, such as RewardBench[1] from overseas and RM Bench[2], jointly released by Chinese universities, measure how well different RMs predict human preferences and allow them to be compared. Below, I introduce RM-Gallery, a reward model platform recently open-sourced by ModelScope. Project address:

https://github.com/modelscope/RM-Gallery/

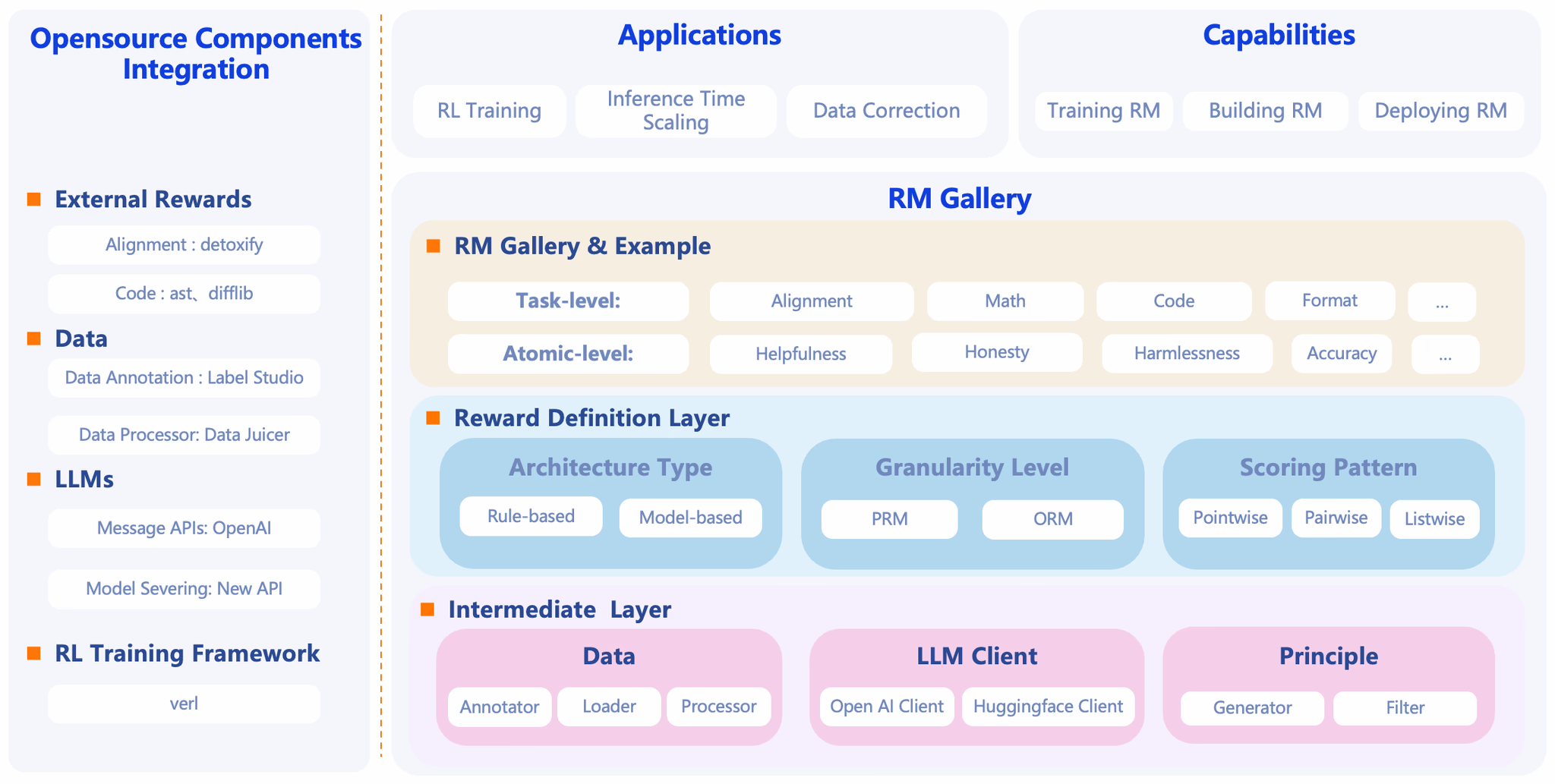

RM-Gallery is a one-stop platform that integrates reward model training, construction, and application. It supports high-throughput, fault-tolerant implementations of both task-level and atomic-level reward models, enabling end-to-end delivery of reward models.

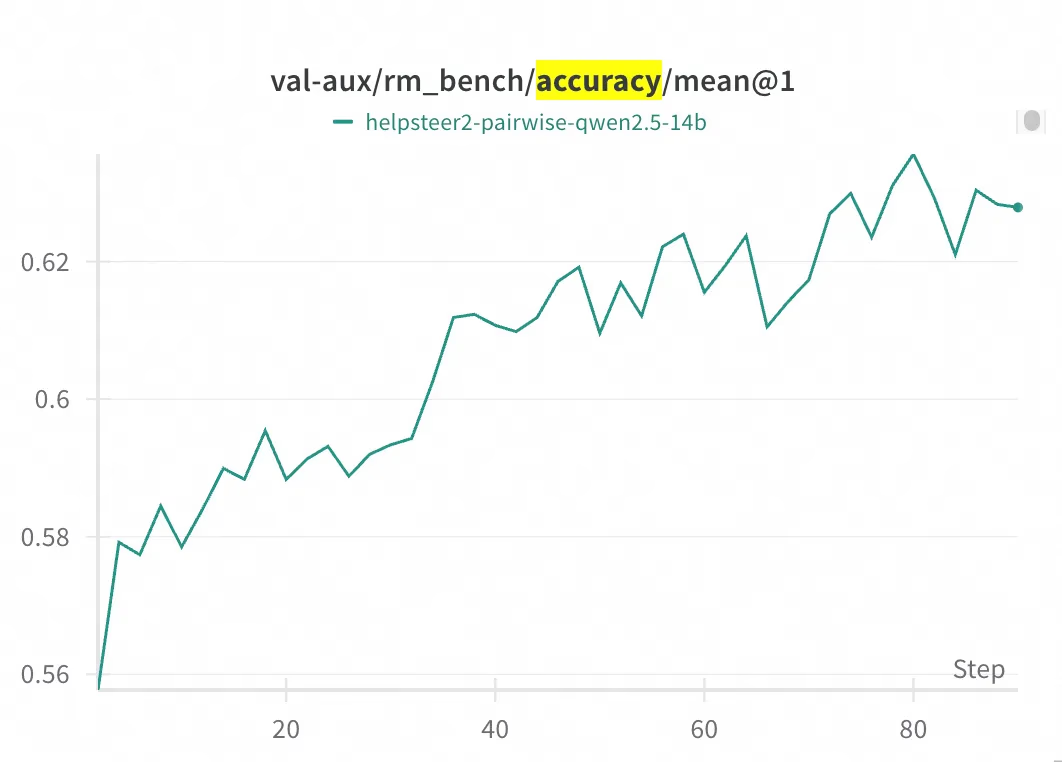

RM-Gallery provides an RL-based framework for training reasoning reward models that is compatible with mainstream frameworks (such as verl), along with examples of integrating RM-Gallery into them. On RM Bench, after 80 training steps, accuracy rose from about 55.8% for the baseline model (Qwen2.5-14B) to about 62.5%.
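Reward-model benchmarks typically report accuracy on preference pairs: for each prompt, does the RM score the human-preferred response above the rejected one? The Python sketch below shows that core computation under simplified assumptions; the actual RewardBench and RM Bench protocols add their own subsets and scoring rules. The length-based "reward model" is a deliberately naive placeholder, not anything RM-Gallery ships.

```python
from typing import Callable, List, Tuple

# Each item: (prompt, chosen_response, rejected_response); "chosen" is the human-preferred answer.
PreferencePair = Tuple[str, str, str]

def pairwise_accuracy(pairs: List[PreferencePair],
                      reward: Callable[[str, str], float]) -> float:
    """Fraction of pairs where the reward model scores the chosen response strictly higher."""
    if not pairs:
        raise ValueError("need at least one preference pair")
    correct = sum(1 for prompt, chosen, rejected in pairs
                  if reward(prompt, chosen) > reward(prompt, rejected))
    return correct / len(pairs)

# Naive placeholder "reward model" that simply prefers longer answers (a length bias).
def length_reward(prompt: str, response: str) -> float:
    return float(len(response))

pairs = [
    ("2+2?", "4", "The answer, after careful thought, is 5."),
    ("Capital of France?", "Paris is the capital of France.", "Lyon"),
    ("Is water wet?", "Yes, in everyday usage.", "No"),
]
print(pairwise_accuracy(pairs, length_reward))
```

The naive scorer gets 2 of 3 pairs right only because the preferred answer happens to be longer in two cases; it fails on the short correct answer "4". A stylistic shortcut like this is the kind of weakness that dedicated reward-model benchmarks are designed to expose.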

Several key features of RM-Gallery include:

In short, from a functional perspective, RM-Gallery turns the reward model, which assesses the quality, priority, and preference alignment of large model outputs, into trainable, reusable, and deployable infrastructure for evaluation engineering.

Of course, complete evaluation engineering requires more than a reward model. Business data, including user dialogues, feedback, and call logs, must be collected continuously to refine datasets and to train smaller models or even teacher models, forming a data flywheel.
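One small piece of such a flywheel is turning logged feedback into training data. The Python sketch below assumes a hypothetical log record with a thumbs-up/thumbs-down field and pairs liked and disliked responses to the same prompt into (prompt, chosen, rejected) triples, a layout commonly used for reward-model training. The record fields and the pairing rule are illustrative assumptions, not part of RM-Gallery.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class LoggedTurn:
    """One logged interaction; the field names are hypothetical."""
    prompt: str
    response: str
    feedback: int  # +1 thumbs-up, -1 thumbs-down, 0 no feedback

def build_preference_pairs(logs: List[LoggedTurn]) -> List[Tuple[str, str, str]]:
    """Pair every liked response with every disliked response to the same prompt."""
    liked: Dict[str, List[str]] = defaultdict(list)
    disliked: Dict[str, List[str]] = defaultdict(list)
    for turn in logs:
        if turn.feedback > 0:
            liked[turn.prompt].append(turn.response)
        elif turn.feedback < 0:
            disliked[turn.prompt].append(turn.response)
        # turns without feedback are skipped
    pairs = []
    for prompt, goods in liked.items():
        for good in goods:
            for bad in disliked.get(prompt, []):
                pairs.append((prompt, good, bad))
    return pairs

logs = [
    LoggedTurn("Reset my password", "Go to Settings > Security > Reset.", +1),
    LoggedTurn("Reset my password", "I cannot help with that.", -1),
    LoggedTurn("Reset my password", "Contact support.", 0),
    LoggedTurn("Cancel my order", "Order cancelled.", +1),
]
pairs = build_preference_pairs(logs)
print(pairs)
```

Matching on exact prompt text keeps the sketch simple; a production pipeline would more likely group by conversation context, deduplicate, and filter noisy feedback before training.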

[2] https://arxiv.org/html/2410.16184v1

If you want to learn more about Alibaba Cloud API Gateway (Higress), please click: https://higress.ai/en/
