With the popularity of large models such as DeepSeek, demand for scheduling heterogeneous devices such as GPUs, NPUs, and RDMA NICs is growing rapidly in AI and high-performance computing. How to manage and schedule these resources efficiently has become a core issue for the industry. Against this backdrop, Koordinator has responded to community demand by continuously building out its heterogeneous device scheduling capabilities. The latest release, v1.6, introduces a series of new features to help customers tackle heterogeneous resource scheduling challenges.

In v1.6, we improved device topology scheduling so that it recognizes the GPU topologies of more GPU models, significantly improving GPU interconnect performance in AI applications. We also launched end-to-end joint allocation of GPU and RDMA devices and, in cooperation with the open-source project HAMi, strong GPU isolation. These capabilities improve cross-machine interconnect efficiency for typical AI training jobs and increase the deployment density of inference jobs, which protects application performance and raises cluster resource utilization. In addition, Koordinator provides an enhanced resource plug-in that lets you configure different node-scoring strategies for different resources. This can effectively reduce the GPU fragmentation rate when GPU and CPU workloads share a cluster.

Since its official release in April 2022, Koordinator has shipped 14 major versions. Outstanding engineers from Alibaba, Ant Group, REDnote, iQIYI, Youzan, and many other companies have taken part in its development. They contributed ideas, code, and real-world application scenarios, which greatly advanced the project. In v1.6.0 in particular, 10 new developers joined the Koordinator community: @LY-today, @AdrianMachao, @TaoYang526, @dongjiang1989, @chengjoey, @JBinin, @clay-wangzhi, @ferris-cx, @nce3xin, and @lijunxin559. We thank them for their contributions, and we thank all community members for their continued effort and support!

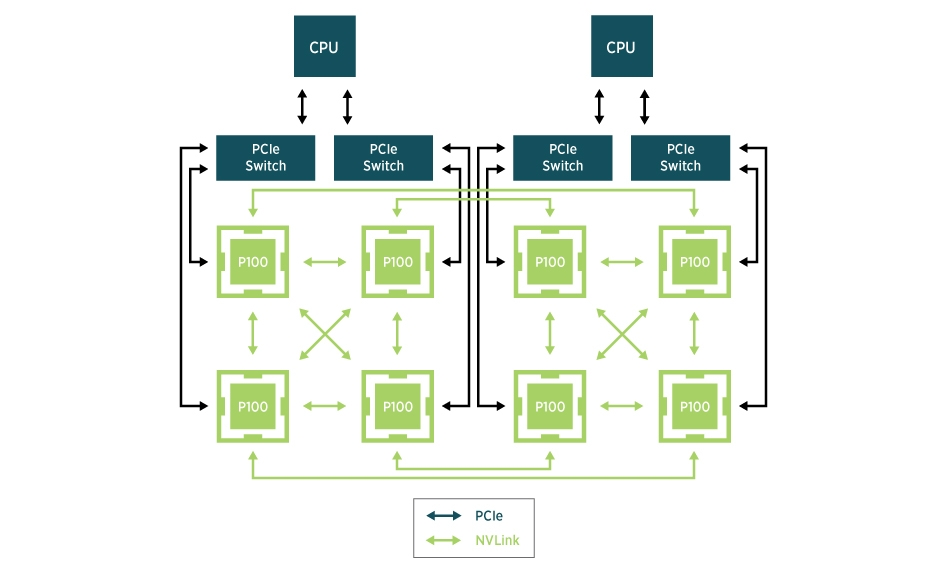

With the rapid development of fields such as deep learning and high-performance computing (HPC), GPUs have become a core resource for many compute-intensive workloads. In Kubernetes clusters, using GPUs efficiently is critical to application performance. However, GPU performance is not uniform: it depends on hardware topology and resource configuration. Example:

For the preceding device scenarios, Koordinator provides a variety of device topology scheduling APIs that let pods express their GPU topology requirements. The following examples show how to use these APIs:

1. GPU, CPU, and memory are allocated in the same NUMA Node.

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduling.koordinator.sh/numa-topology-spec: '{"numaTopologyPolicy":"Restricted", "singleNUMANodeExclusive":"Preferred"}'
spec:
  containers:
  - resources:
      limits:
        koordinator.sh/gpu: 200
        cpu: 64
        memory: 500Gi
      requests:
        koordinator.sh/gpu: 200
        cpu: 64
        memory: 500Gi
```

2. GPUs are allocated in the same PCIe.

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduling.koordinator.sh/device-allocate-hint: |-
      {
        "gpu": {
          "requiredTopologyScope": "PCIe"
        }
      }
spec:
  containers:
  - resources:
      limits:
        koordinator.sh/gpu: 200
```

3. GPUs are allocated in the same NUMA Node.

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduling.koordinator.sh/device-allocate-hint: |-
      {
        "gpu": {
          "requiredTopologyScope": "NUMANode"
        }
      }
spec:
  containers:
  - resources:
      limits:
        koordinator.sh/gpu: 400
```

4. GPUs need to be allocated based on predefined partitions.

Predefined GPU partition rules are usually determined by the GPU model or system configuration, and they may also depend on the GPU configuration of a particular node. The scheduler cannot know the specifics of the hardware model or GPU type. Instead, it relies on node-level components to report these predefined rules to the Device custom resource (CR). Sample code:

```yaml
apiVersion: scheduling.koordinator.sh/v1alpha1
kind: Device
metadata:
  annotations:
    # Keys are partition sizes (number of GPUs). Each entry is a candidate partition:
    #   minors:           which GPUs are included
    #   gpuLinkType:      GPU interconnect type
    #   ringBusBandwidth: the bottleneck bandwidth between GPUs in the Ring algorithm.
    #                     BusBandwidth can be referenced from https://github.com/NVIDIA/nccl-tests/blob/master/doc/PERFORMANCE.md
    #   allocationScore:  the overall allocation quality of the node after this partition has been assigned away
    scheduling.koordinator.sh/gpu-partitions: |
      {
        "1": [
          {
            "minors": [0],
            "gpuLinkType": "NVLink",
            "ringBusBandwidth": "400Gi",
            "allocationScore": "1"
          },
          ...
        ],
        "2": [
          ...
        ],
        "4": [
          ...
        ],
        "8": [
          ...
        ]
      }
  labels:
    # Indicates whether the partition rules must be followed.
    node.koordinator.sh/gpu-partition-policy: "Honor"
  name: node-1
```

When several partition schemes are possible, Koordinator lets users decide whether GPUs must be allocated according to the optimal partition:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hello-gpu
  annotations:
    # allocatePolicy: BestEffort | Restricted
    scheduling.koordinator.sh/gpu-partition-spec: |
      {
        "allocatePolicy": "Restricted"
      }
spec:
  containers:
  - name: main
    resources:
      limits:
        koordinator.sh/gpu: 100
```

If users do not need GPUs to be allocated according to the optimal partition, the scheduler falls back to binpacking GPUs as much as possible.
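The topology constraints in the numbered examples above can be illustrated with a small sketch. It assumes a hypothetical in-memory model of a node's GPUs. The `GPU` record with `minor`, `numa_node`, and `pcie_id` fields and the `pick_gpus` helper are illustrative names, not Koordinator APIs. The sketch shows how a `requiredTopologyScope` of `PCIe` or `NUMANode` narrows the candidates, with a simple binpack preference for the smallest group that still fits. It relies on the Koordinator convention that `koordinator.sh/gpu: 100` is one full GPU. This is not the koord-scheduler implementation, which handles many more cases.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class GPU:
    # Hypothetical per-GPU record; not the Koordinator Device CR schema.
    minor: int
    numa_node: int
    pcie_id: str
    free: bool = True


def gpus_requested(gpu_units: int) -> int:
    """koordinator.sh/gpu counts 100 units per full GPU, so 200 means 2 GPUs."""
    if gpu_units <= 0 or gpu_units % 100 != 0:
        raise ValueError("this sketch only handles whole GPUs (multiples of 100)")
    return gpu_units // 100


def pick_gpus(gpus: List[GPU], gpu_units: int, scope: str) -> Optional[List[int]]:
    """Return minors of GPUs that all share the required topology scope, or None."""
    count = gpus_requested(gpu_units)
    key = {"PCIe": lambda g: g.pcie_id, "NUMANode": lambda g: g.numa_node}[scope]

    groups = defaultdict(list)
    for g in gpus:
        if g.free:
            groups[key(g)].append(g)

    # Keep groups that can hold the request, then prefer the one with the fewest
    # free GPUs (binpack) so that larger free groups stay available.
    candidates = [grp for grp in groups.values() if len(grp) >= count]
    if not candidates:
        return None
    best = min(candidates, key=len)
    return sorted(g.minor for g in best)[:count]


# Illustrative 8-GPU node: two NUMA nodes, two PCIe switches per NUMA node; GPU 0 is busy.
node = [
    GPU(0, 0, "pcie-0", free=False), GPU(1, 0, "pcie-0"),
    GPU(2, 0, "pcie-1"), GPU(3, 0, "pcie-1"),
    GPU(4, 1, "pcie-2"), GPU(5, 1, "pcie-2"),
    GPU(6, 1, "pcie-3"), GPU(7, 1, "pcie-3"),
]
print(pick_gpus(node, 200, "PCIe"))      # [2, 3]
print(pick_gpus(node, 400, "NUMANode"))  # [4, 5, 6, 7]
```

Choosing the smallest sufficient group keeps larger free groups intact for future multi-GPU requests, which is the same intuition behind binpacking.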

For more information about topology-aware GPU scheduling, see the following documents:

Thanks to the community developer @eahydra for contributing to this feature!

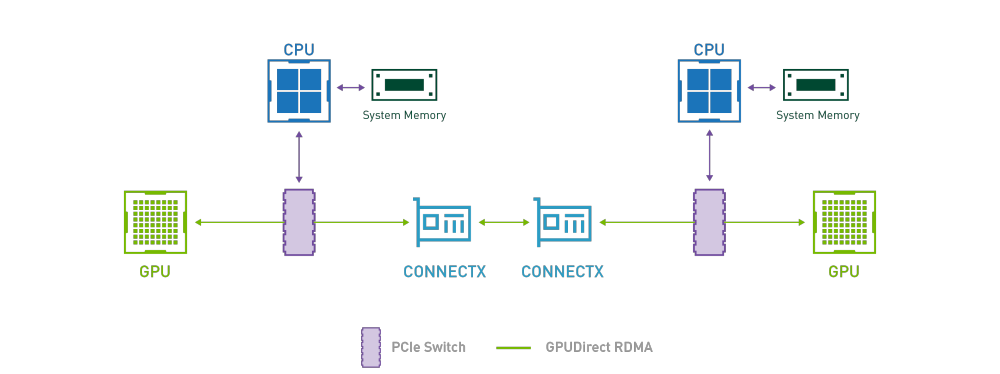

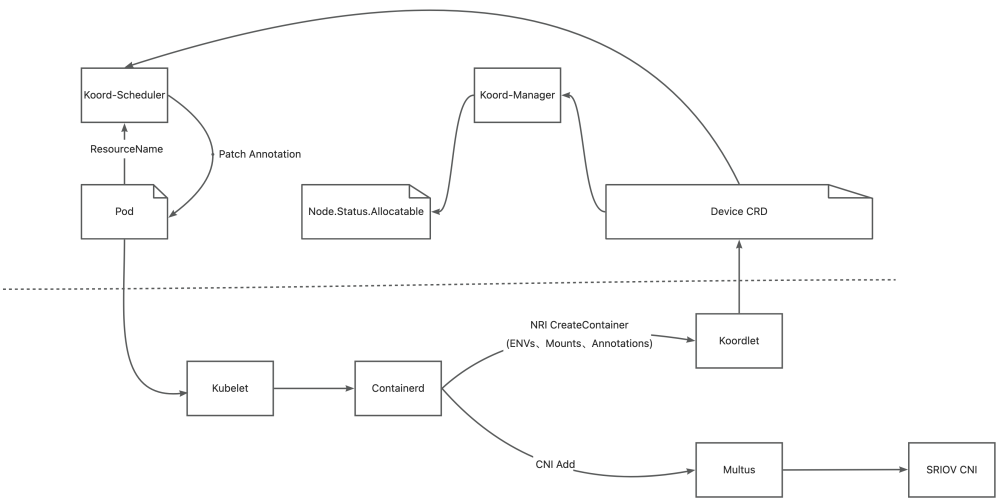

In AI model training, GPUs must communicate frequently to synchronize the weights that are updated in each training iteration. GDR (GPUDirect RDMA) improves the efficiency of data exchange among GPU devices. With GDR, GPUs on different machines can exchange data without staging it through the host CPU and memory. This greatly reduces CPU and memory overhead as well as latency. To achieve this, Koordinator v1.6.0 implements joint scheduling of GPU and RDMA devices. The overall architecture is as follows:

Because many components are involved and the environment is complex, Koordinator v1.6.0 provides best practices that show how to deploy Koordinator, Multus-CNI, and SRIOV-CNI step by step. After deploying these components, users can use the following Pod spec to ask the scheduler to jointly allocate the requested GPUs and RDMA devices:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-vf01
  namespace: kubeflow
  annotations:
    scheduling.koordinator.sh/device-joint-allocate: |-
      {
        "deviceTypes": ["gpu","rdma"]
      }
    # An empty vfSelector applies for an RDMA virtual function (VF).
    scheduling.koordinator.sh/device-allocate-hint: |-
      {
        "rdma": {
          "vfSelector": {}
        }
      }
spec:
  schedulerName: koord-scheduler
  containers:
  - name: container-vf
    resources:
      requests:
        koordinator.sh/gpu: 100
        koordinator.sh/rdma: 100
      limits:
        koordinator.sh/gpu: 100
        koordinator.sh/rdma: 100
```

To run end-to-end tests of GDR jobs with Koordinator, follow the examples in the best practices step by step. We sincerely thank the community developer @ferris-cx for his contribution to this feature!
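To show what joint allocation adds, the sketch below pairs each already-selected GPU with a free RDMA virtual function (VF) on the same PCIe switch. This assumes that GDR traffic benefits from the NIC and the GPU sharing a switch. The data model (`pcie_of_gpu`, `free_vfs`), the device names, and the `joint_allocate` helper are hypothetical. See the Koordinator best practices for how the scheduler actually pairs devices.

```python
from typing import Dict, List, Optional


def joint_allocate(
    gpu_minors: List[int],
    pcie_of_gpu: Dict[int, str],
    free_vfs: Dict[str, List[str]],
) -> Optional[Dict[int, str]]:
    """Assign one free RDMA VF on the same PCIe switch to each GPU.

    pcie_of_gpu: GPU minor -> PCIe switch ID (hypothetical model).
    free_vfs:    PCIe switch ID -> names of free VFs on that switch.
    Returns GPU minor -> VF name, or None if some GPU has no local VF.
    """
    remaining = {pcie: list(vfs) for pcie, vfs in free_vfs.items()}  # do not mutate the input
    result: Dict[int, str] = {}
    for minor in gpu_minors:
        vfs = remaining.get(pcie_of_gpu[minor], [])
        if not vfs:
            return None  # fail instead of pairing a GPU with a NIC across switches
        result[minor] = vfs.pop(0)
    return result


pcie_of_gpu = {0: "pcie-0", 1: "pcie-0", 2: "pcie-1", 3: "pcie-1"}
free_vfs = {"pcie-0": ["mlx5_0-vf0", "mlx5_0-vf1"], "pcie-1": ["mlx5_1-vf0"]}

print(joint_allocate([0, 1], pcie_of_gpu, free_vfs))  # {0: 'mlx5_0-vf0', 1: 'mlx5_0-vf1'}
print(joint_allocate([2, 3], pcie_of_gpu, free_vfs))  # None
```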

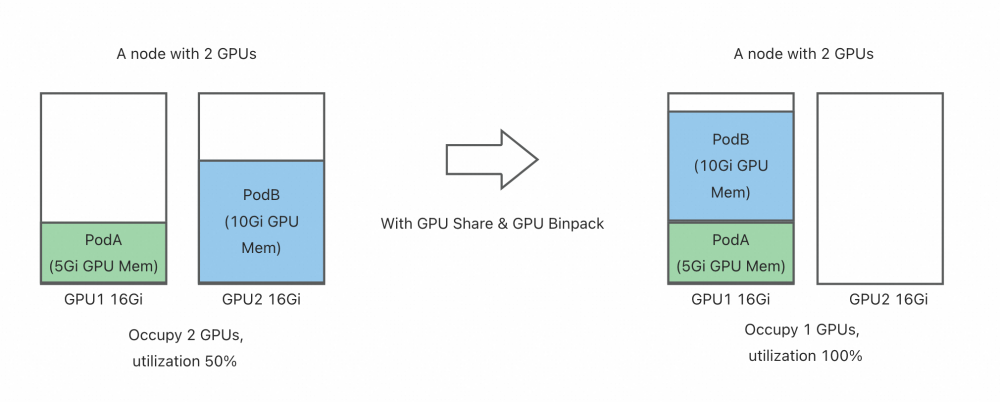

In AI applications, GPUs are indispensable for large model training and inference because they provide the computing power that compute-intensive tasks need. However, this computing power comes at a high cost. In production, a high-performance GPU card is often used to run small models or lightweight inference tasks that need only a small fraction of its resources (for example, 20% of its compute or GPU memory). This wastes valuable GPU capacity and significantly increases costs for the enterprise.

This situation is particularly common in the following scenarios:

• Online inference: Many online inference tasks have low compute requirements but strict latency requirements, so they must run on high-performance GPUs to meet real-time requirements.

• Development and test environment: When developers debug a model, they only need to use a small amount of GPU resources. However, traditional scheduling methods cause low resource utilization.

• Multi-tenant shared cluster: In a GPU cluster shared by multiple users or teams, each task exclusively occupies a GPU, resulting in uneven resource allocation and difficulty in fully utilizing hardware capabilities.

To solve this problem, Koordinator and HAMi provide GPU-sharing isolation, which allows multiple pods to share the same GPU card. This significantly improves GPU utilization, reduces costs, and meets the flexible resource requirements of different tasks. For example, in Koordinator's GPU sharing mode, users can precisely specify the share of GPU cores or the GPU memory ratio. Each task then obtains the resources it needs without interfering with the others.
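The following minimal sketch makes the `gpu-core` / `gpu-memory-ratio` accounting concrete. It assumes each card offers 100 units of each, because both values are percentages in Koordinator's GPU sharing mode. Each request is placed on the most-utilized card that can still fit it, which is one simple way to express binpacking. The `Card` type and `place_shared` helper are hypothetical, not Koordinator code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Card:
    # Hypothetical per-card bookkeeping; 100 means the whole card.
    minor: int
    used_core: int = 0
    used_mem_ratio: int = 0

    def fits(self, core: int, mem_ratio: int) -> bool:
        return self.used_core + core <= 100 and self.used_mem_ratio + mem_ratio <= 100


def place_shared(cards: List[Card], core: int, mem_ratio: int) -> Optional[int]:
    """Binpack a shared-GPU request onto the fullest card that still fits it."""
    fitting = [c for c in cards if c.fits(core, mem_ratio)]
    if not fitting:
        return None
    # Most-used card first; ties go to the lowest minor for determinism.
    best = max(fitting, key=lambda c: (c.used_core + c.used_mem_ratio, -c.minor))
    best.used_core += core
    best.used_mem_ratio += mem_ratio
    return best.minor


cards = [Card(0), Card(1)]
# Three pods, each requesting gpu-core: 50 and gpu-memory-ratio: 50.
placements = [place_shared(cards, 50, 50) for _ in range(3)]
print(placements)  # [0, 0, 1]
```

The first two pods fill card 0 before card 1 is touched. A spreading policy would instead leave both cards half used.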

HAMi is a CNCF sandbox project that provides device management middleware for Kubernetes. Its core module, HAMi-Core, provides GPU-sharing isolation by intercepting API calls between the CUDA Runtime (libcudart.so) and the CUDA Driver (libcuda.so). In v1.6.0, Koordinator uses the GPU isolation capabilities of HAMi-Core to provide an end-to-end GPU sharing solution.

You can install HAMi-Core directly on the target nodes by deploying the following DaemonSet:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: hami-core-distribute
  namespace: default
spec:
  selector:
    matchLabels:
      koord-app: hami-core-distribute
  template:
    metadata:
      labels:
        koord-app: hami-core-distribute
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node-type
                operator: In
                values:
                - "gpu"
      containers:
      - command:
        - /bin/sh
        - -c
        - |
          cp -f /k8s-vgpu/lib/nvidia/libvgpu.so /usl/local/vgpu && sleep 3600000
        image: docker.m.daocloud.io/projecthami/hami:v2.4.0
        imagePullPolicy: Always
        name: name
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: "0"
            memory: "0"
        volumeMounts:
        - mountPath: /usl/local/vgpu
          name: vgpu-hook
        - mountPath: /tmp/vgpulock
          name: vgpu-lock
      tolerations:
      - operator: Exists
      volumes:
      - hostPath:
          path: /usl/local/vgpu
          type: DirectoryOrCreate
        name: vgpu-hook
      # https://github.com/Project-HAMi/HAMi/issues/696
      - hostPath:
          path: /tmp/vgpulock
          type: DirectoryOrCreate
        name: vgpu-lock
```

GPU binpack is enabled by default in the Koordinator scheduler. Once Koordinator and HAMi-Core are installed, you can request shared GPUs and enable HAMi-Core isolation as follows:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-example
  namespace: default
  labels:
    koordinator.sh/gpu-isolation-provider: hami-core
spec:
  schedulerName: koord-scheduler
  containers:
  - command:
    - sleep
    - 365d
    image: busybox
    imagePullPolicy: IfNotPresent
    name: curlimage
    resources:
      limits:
        cpu: 40m
        memory: 40Mi
        koordinator.sh/gpu-shared: 1
        koordinator.sh/gpu-core: 50
        koordinator.sh/gpu-memory-ratio: 50
      requests:
        cpu: 40m
        memory: 40Mi
        koordinator.sh/gpu-shared: 1
        koordinator.sh/gpu-core: 50
        koordinator.sh/gpu-memory-ratio: 50
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
  restartPolicy: Always
```

For instructions on enabling the HAMi GPU-sharing isolation capability in Koordinator, see:

• Device Scheduling - GPU Share With HAMi

We sincerely thank HAMi community member @wawa0210 for contributing to this feature!

In modern Kubernetes clusters, multiple types of resources (such as CPU, memory, and GPU) are usually managed on a unified platform. However, there are often significant differences in the usage patterns and requirements of different resources, which leads to different policy requirements for resource packing and spreading.

Example:

• GPU resources: In AI model training or inference tasks, users typically want to maximize GPU utilization and reduce fragmentation. They therefore prefer to schedule GPU tasks onto nodes whose GPUs are already partly allocated (packing). This policy avoids the waste caused by spreading GPU allocations too thinly across nodes.

• CPU and memory resources: In contrast, the requirements for CPU and memory resources are more diverse. For some online services or batch processing tasks, users are more inclined to distribute the tasks across multiple nodes (spreading) to avoid resource hotspot issues on a single node, thereby improving the stability and performance of the overall cluster.

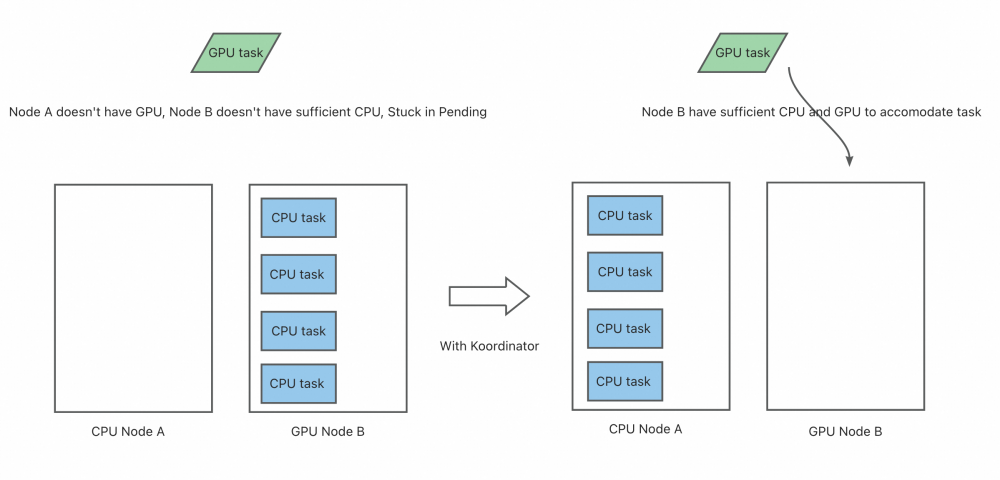

In addition, in mixed workload scenarios, the resource requirements of different tasks also affect each other. Example:

• Consider a cluster that runs GPU training tasks and CPU-intensive tasks side by side. If the CPU-intensive tasks land on GPU nodes and consume a large amount of CPU and memory, subsequent GPU tasks may fail to start because of insufficient non-GPU resources and remain Pending.

• In a multi-tenant environment, some users may only apply for CPU and memory resources, while others require GPU resources. If the scheduler fails to distinguish between these demands, it may result in resource contention and unfair resource allocation.

The Kubernetes-native NodeResourcesFit plug-in supports only a single scoring strategy for all resources. Example:

```yaml
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
- pluginConfig:
  - name: NodeResourcesFit
    args:
      apiVersion: kubescheduler.config.k8s.io/v1
      kind: NodeResourcesFitArgs
      scoringStrategy:
        type: LeastAllocated
        resources:
        - name: cpu
          weight: 1
        - name: memory
          weight: 1
        - name: nvidia.com/gpu
          weight: 1
```

However, this design does not fit some production scenarios. In AI scenarios, services that request GPUs should fill whole GPUs first to prevent GPU fragmentation. Services that request only CPU and memory should be spread out to reduce CPU hotspots. Koordinator v1.6.0 introduces the NodeResourcesFitPlus plug-in, which lets you configure a different scoring strategy for each resource. You can use the following configuration when installing the Koordinator scheduler:

```yaml
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
- pluginConfig:
  - args:
      apiVersion: kubescheduler.config.k8s.io/v1
      kind: NodeResourcesFitPlusArgs
      resources:
        nvidia.com/gpu:
          type: MostAllocated
          weight: 2
        cpu:
          type: LeastAllocated
          weight: 1
        memory:
          type: LeastAllocated
          weight: 1
    name: NodeResourcesFitPlus
  plugins:
    score:
      enabled:
      - name: NodeResourcesFitPlus
        weight: 2
  schedulerName: koord-scheduler
```

Services that request only CPU and memory should also preferably be placed on non-GPU machines. Otherwise they can consume too much CPU and memory on GPU machines, and the tasks that actually request GPUs stay Pending because of insufficient non-GPU resources. Koordinator v1.6.0 introduces the ScarceResourceAvoidance plug-in for this requirement. You can configure the scheduler as follows. This configuration declares nvidia.com/gpu as a scarce resource: if a pod does not request this scarce resource, the scheduler tries to avoid placing it on nodes that have it.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
- pluginConfig:
  - args:
      apiVersion: kubescheduler.config.k8s.io/v1
      kind: ScarceResourceAvoidanceArgs
      resources:
      - nvidia.com/gpu
    name: ScarceResourceAvoidance
  plugins:
    score:
      enabled:
      - name: NodeResourcesFitPlus
        weight: 2
      - name: ScarceResourceAvoidance
        weight: 2
      disabled:
      - name: "*"
  schedulerName: koord-scheduler
```

For more information about how to design and use differentiated scheduling policies for GPU resources, see:

Thanks to the community developer @LY-today for his contribution to this feature.
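The small scoring sketch below contrasts a single strategy with per-resource strategies. It uses the standard Kubernetes definitions scaled to a score from 0 to 100. LeastAllocated scores a resource by its free fraction after placement, and MostAllocated scores it by its used fraction. The per-resource scores are combined as a weighted average, mirroring the `resources` map in the `NodeResourcesFitPlusArgs` example above. The scarce-resource term is a deliberately simplified assumption: a node that offers a scarce resource the pod does not request scores 0, and any other node scores 100. Koordinator's actual ScarceResourceAvoidance formula may differ, and all helper names here are hypothetical.

```python
from typing import Dict, Iterable, Tuple

MAX_NODE_SCORE = 100


def resource_score(strategy: str, requested: int, capacity: int) -> int:
    """Score one resource on a node; `requested` already includes the incoming pod."""
    if capacity <= 0 or requested > capacity:
        return 0
    if strategy == "LeastAllocated":  # emptier node -> higher score (spreading)
        return (capacity - requested) * MAX_NODE_SCORE // capacity
    if strategy == "MostAllocated":  # fuller node -> higher score (packing)
        return requested * MAX_NODE_SCORE // capacity
    raise ValueError(f"unknown strategy: {strategy}")


def fit_plus_score(strategies: Dict[str, Tuple[str, int]],
                   requested: Dict[str, int],
                   capacity: Dict[str, int]) -> int:
    """Weighted average of per-resource scores, each resource with its own strategy."""
    total = weights = 0
    for name, (strategy, weight) in strategies.items():
        if capacity.get(name, 0) <= 0:
            continue  # the node does not offer this resource, so it is not scored
        total += resource_score(strategy, requested.get(name, 0), capacity[name]) * weight
        weights += weight
    return total // weights if weights else 0


def scarce_avoidance_score(scarce: Iterable[str],
                           pod_requests: Dict[str, int],
                           capacity: Dict[str, int]) -> int:
    """Simplified: 0 if the node offers a scarce resource the pod does not request."""
    for name in scarce:
        if capacity.get(name, 0) > 0 and pod_requests.get(name, 0) == 0:
            return 0
    return MAX_NODE_SCORE


# Per-resource strategies mirroring the NodeResourcesFitPlusArgs example.
strategies = {
    "nvidia.com/gpu": ("MostAllocated", 2),
    "cpu": ("LeastAllocated", 1),
    "memory": ("LeastAllocated", 1),
}
gpu_node = {"nvidia.com/gpu": 8, "cpu": 64, "memory": 256}  # memory in GiB
# Requested totals after adding a pod that asks for 2 GPUs, 8 CPUs, and 32 GiB
# to a node that already has 4 GPUs, 16 CPUs, and 64 GiB requested.
after = {"nvidia.com/gpu": 6, "cpu": 24, "memory": 96}
print(fit_plus_score(strategies, after, gpu_node))  # 68

cpu_pod = {"cpu": 4, "memory": 8}
print(scarce_avoidance_score(["nvidia.com/gpu"], cpu_pod, gpu_node))  # 0
```

With a single LeastAllocated strategy for all three resources, the scheduler would favor GPU nodes whose GPUs are emptier. That spreading behavior is what fragments GPUs. Giving the GPU its own MostAllocated strategy reverses that preference only for the GPU dimension.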

Efficient utilization of heterogeneous resources often relies on the precise alignment of tightly coupled CPU and NUMA resources. Example:

• GPU-accelerated tasks: In a server with multiple NUMA nodes, if the physical connection between GPUs and CPUs or memory crosses NUMA boundaries, data transfer latency may increase, which significantly weakens the task performance. As a result, such tasks typically require GPUs, CPUs, and memory to be allocated on the same NUMA node.

• AI inference services: Online inference tasks are sensitive to latency, so it is necessary to make sure that GPU and CPU resources are allocated as close as possible to cut the communication overhead across NUMA nodes.

• Scientific computing tasks: Some high-performance computing tasks, such as molecular dynamics (MD) simulation or weather forecasting, require high-bandwidth, low-latency memory access, so strict alignment between CPU cores and local memory is required.

These requirements apply not only to task scheduling but also to resource reservation scenarios. In a production environment, resource reservation is a vital mechanism for locking resources for critical tasks in advance to ensure their smooth operation at a future point. However, in heterogeneous resource scenarios, a simple resource reservation mechanism often fails to meet refined resource orchestration requirements. Example:

• Some tasks may need to reserve CPU and GPU resources on specific NUMA nodes to ensure optimal performance after the task is started.

• In a multi-tenant cluster, different users may need to reserve different combinations of resource types (such as GPUs + CPUs + memory) and expect these resources to be strictly aligned.

• When reserved resources are not fully used, it is challenging to allocate the remainder flexibly to other tasks without compromising the resource guarantees of the reserving tasks.

To cope with these complex scenarios, the resource reservation feature is fully enhanced in Koordinator v1.6 to provide more refined and flexible resource orchestration capabilities. Specifically, the following issues are fixed:

Changes to plug-in extension interfaces:

The following snippets provide examples on how to use new features:

1. Exact-Match Reservation

Exact matching narrows the set of reservations that a group of pods can match, which makes the allocation of reserved resources more controllable.

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    # Specify the reserved resource categories for pods. Pods can match only Reservation objects that have the same amount of reserved resources as the pod specifications under these resource categories. For example, you can specify "cpu", "memory", and "nvidia.com/gpu".
    scheduling.koordinator.sh/exact-match-reservation: '{"resourceNames":["cpu","memory","nvidia.com/gpu"]}'
```

2. Reservation-ignored

If a pod is marked to ignore reservations, it can use idle resources on the node that are reserved but not yet allocated. Combined with preemption, this further reduces resource fragmentation.

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    # Specify that the scheduling of pods can ignore the resource reservation.
    scheduling.koordinator.sh/reservation-ignored: "true"
```

3. Reservation Affinity

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    # Specify the name of the resource reservation that matches the pod.
    scheduling.koordinator.sh/reservation-affinity: '{"name":"test-reservation"}'
```

4. Reservation Taints and Tolerations

```yaml
---
apiVersion: scheduling.koordinator.sh/v1alpha1
kind: Reservation
metadata:
  name: test-reservation
spec:
  # Specify taints for reservation. The reserved resources can only be allocated to pods that tolerate the taints.
  taints:
  - effect: NoSchedule
    key: test-taint-key
    value: test-taint-value
  # ...
---
apiVersion: v1
kind: Pod
metadata:
  annotations:
    # Specify the taint tolerance of pods for resource reservation.
    scheduling.koordinator.sh/reservation-affinity: '{"tolerations":[{"key":"test-taint-key","operator":"Equal","value":"test-taint-value","effect":"NoSchedule"}]}'
```

5. Reservation Preemption

Note: Currently, you cannot use high-priority pods to preempt low-priority reservations.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1beta3
kind: KubeSchedulerConfiguration
profiles:
- pluginConfig:
  - name: Reservation
    args:
      apiVersion: kubescheduler.config.k8s.io/v1beta3
      kind: ReservationArgs
      enablePreemption: true
      # ...
  plugins:
    postFilter:
      # In the scheduler configuration, disable preemption for the DefaultPreemption plug-in and enable preemption for the Reservation plug-in.
      disabled:
      - name: DefaultPreemption
      # ...
      enabled:
      - name: Reservation
```

Heartfelt thanks to the community developer @saintube for contributing to this feature!
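The exact-match and taint rules above can be summarized in a short sketch. It parses the `exact-match-reservation` and `reservation-affinity` annotations with `json.loads`, with `resourceNames` written as a JSON array, and checks one candidate reservation. The dictionary layout of the reservation and the `matches` / `tolerates` helpers are hypothetical simplifications. Only the `Equal` toleration operator is modeled, and the real scheduler checks considerably more before a pod can use a reservation.

```python
import json
from typing import Dict, List


def tolerates(taint: Dict[str, str], tolerations: List[Dict[str, str]]) -> bool:
    """Simplified toleration check: only the Equal operator, exact key/value/effect."""
    return any(
        t.get("operator", "Equal") == "Equal"
        and t.get("key") == taint["key"]
        and t.get("value") == taint.get("value")
        and t.get("effect") == taint["effect"]
        for t in tolerations
    )


def matches(pod_annotations: Dict[str, str], pod_requests: Dict[str, int], reservation: dict) -> bool:
    """Hypothetical check of whether a pod may consume a given reservation."""
    exact = json.loads(pod_annotations.get("scheduling.koordinator.sh/exact-match-reservation", "{}"))
    affinity = json.loads(pod_annotations.get("scheduling.koordinator.sh/reservation-affinity", "{}"))

    # Exact match: each listed resource must equal the reserved amount.
    for name in exact.get("resourceNames", []):
        if pod_requests.get(name, 0) != reservation["allocatable"].get(name, 0):
            return False
    # Reservation affinity by name, when a name is given.
    if "name" in affinity and affinity["name"] != reservation["name"]:
        return False
    # Every taint on the reservation must be tolerated.
    tolerations = affinity.get("tolerations", [])
    return all(tolerates(taint, tolerations) for taint in reservation.get("taints", []))


reservation = {
    "name": "test-reservation",
    "allocatable": {"cpu": 4, "memory": 8, "nvidia.com/gpu": 1},  # units simplified to integers
    "taints": [{"key": "test-taint-key", "value": "test-taint-value", "effect": "NoSchedule"}],
}
annotations = {
    "scheduling.koordinator.sh/exact-match-reservation":
        '{"resourceNames":["cpu","memory","nvidia.com/gpu"]}',
    "scheduling.koordinator.sh/reservation-affinity":
        '{"tolerations":[{"key":"test-taint-key","operator":"Equal",'
        '"value":"test-taint-value","effect":"NoSchedule"}]}',
}
print(matches(annotations, {"cpu": 4, "memory": 8, "nvidia.com/gpu": 1}, reservation))  # True
print(matches(annotations, {"cpu": 2, "memory": 8, "nvidia.com/gpu": 1}, reservation))  # False
```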

In modern data centers, hybrid deployment has become an important way to improve resource utilization. Enterprises deploy latency-sensitive tasks (such as online services) and resource-intensive tasks (such as offline batch processing) in the same cluster, which significantly reduces hardware costs and improves resource efficiency. However, as resource utilization in hybrid clusters keeps rising, ensuring resource isolation between different types of tasks has become a key challenge.

In hybrid deployment scenarios, the core objectives of the resource isolation are:

• Guarantee the performance of high-priority tasks: For example, online services require stable CPU, memory, and I/O resources to meet low-latency requirements.

• Make full use of idle resources: Offline tasks should make full use of resources that high-priority tasks are not using, without interfering with those high-priority tasks.

• Dynamically adjust resource allocation: The resource allocation policy is adjusted in real time based on node load changes to avoid resource contention or waste.

To achieve these goals, Koordinator continues to build and improve resource isolation capabilities. In v1.6, we have carried out a series of feature optimizations and issue fixes around resource overcommitment and hybrid QoS deployment, including:

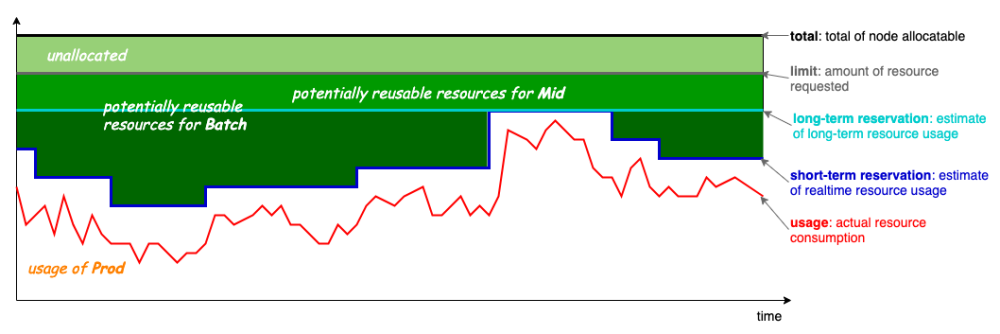

Mid resource overcommitment was introduced in Koordinator v1.3 to provide dynamic resource overcommitment based on node profiles. To keep overcommitted resources stable, however, Mid resources were derived entirely from the Prod pods already scheduled on the node. As a result, an empty node initially had no Mid resources, which made Mid resources inconvenient for some workloads. The Koordinator community also received feedback and contributions on this issue from several enterprise users.

In v1.6, Koordinator updates the overcommitment formula as follows:

```
MidAllocatable := min(ProdReclaimable, NodeAllocatable * thresholdRatio) + ProdUnallocated * unallocatedRatio
ProdReclaimable := min(max(0, ProdAllocated - ProdPeak * (1 + safeMargin)), NodeUnused)
```

The computing logic has two changes:

In addition, for hybrid QoS deployment, Koordinator v1.6 enhances pod-level QoS policy configuration. This suits scenarios such as restricting known interfering pods on hybrid deployment nodes and adjusting hybrid QoS settings during canary rollouts:

You can enable the Resctrl feature for pods through the following methods:

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    node.koordinator.sh/resctrl: '{"llc": {"schemata": {"range": [0, 30]}}, "mb": {"schemata": {"percent": 20}}}'
```

The CPU QoS configuration for pods can be enabled in the following ways:

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    koordinator.sh/cpuQOS: '{"groupIdentity": 1}'
```

We would like to express our sincere gratitude to community developers including @kangclzjc, @j4ckstraw, @lijunxin559, @tan90github, and @yangfeiyu20102011 for their contributions to the hybrid deployment features.
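The Mid overcommitment formula shown earlier in this section can be transcribed directly into Python to see how each term behaves. The function names mirror the formula's variables. The inputs are plain numbers in one unit (CPU millicores here), and the sample values and ratios are made up for illustration; they are not Koordinator defaults.

```python
def prod_reclaimable(prod_allocated: float, prod_peak: float,
                     safe_margin: float, node_unused: float) -> float:
    # ProdReclaimable := min(max(0, ProdAllocated - ProdPeak * (1 + safeMargin)), NodeUnused)
    return min(max(0.0, prod_allocated - prod_peak * (1 + safe_margin)), node_unused)


def mid_allocatable(prod_reclaimable_amount: float, node_allocatable: float,
                    threshold_ratio: float, prod_unallocated: float,
                    unallocated_ratio: float) -> float:
    # MidAllocatable := min(ProdReclaimable, NodeAllocatable * thresholdRatio)
    #                   + ProdUnallocated * unallocatedRatio
    return (min(prod_reclaimable_amount, node_allocatable * threshold_ratio)
            + prod_unallocated * unallocated_ratio)


# Illustrative CPU values in millicores on a 64-core node (made-up numbers).
NODE = 64000
THRESHOLD_RATIO, UNALLOCATED_RATIO, SAFE_MARGIN = 0.5, 0.25, 0.25

# Empty node: nothing is reclaimable, but the unallocated term still yields Mid.
empty = mid_allocatable(prod_reclaimable(0, 0, SAFE_MARGIN, NODE),
                        NODE, THRESHOLD_RATIO, NODE, UNALLOCATED_RATIO)

# Busy node: 48 cores allocated to Prod pods, Prod peak usage of 20 cores,
# 40 cores currently unused on the node, 16 cores still unallocated.
busy = mid_allocatable(prod_reclaimable(48000, 20000, SAFE_MARGIN, 40000),
                       NODE, THRESHOLD_RATIO, 16000, UNALLOCATED_RATIO)

print(empty, busy)  # 16000.0 27000.0
```

The empty-node case shows the effect of the `ProdUnallocated * unallocatedRatio` term. Without it, the same node would report no Mid resources at all.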

As cloud-native technologies mature, more and more enterprises are migrating their core business to Kubernetes, which causes explosive growth in cluster size and task count. This trend poses significant technical challenges, especially for scheduling performance and rescheduling policies:

• Scheduling performance requirements: As clusters grow, the number of tasks the scheduler must process increases dramatically, which raises the bar for scheduler performance and scalability. In a large-scale cluster, for example, making pod scheduling decisions quickly and reducing scheduling latency is a key issue.

• Rescheduling policy requirements: In a multi-tenant environment, resource competition intensifies. Frequent rescheduling may cause workloads to be repeatedly migrated between different nodes, which increases the burden on the system and affects cluster stability. In addition, how to reasonably allocate resources to avoid hotspot issues while ensuring the stable operation of production tasks has also become an important consideration in the design of rescheduling policies.

To address these challenges, Koordinator v1.6.0 comprehensively optimizes the scheduler and the rescheduler to improve scheduling performance as well as the stability and rationality of rescheduling policies. The current version includes the following scheduler performance optimizations:

With the continuous expansion of the cluster size, the stability and rationality of the rescheduling process become core concerns. Frequent evictions may cause workloads to be migrated repeatedly between nodes, increasing system burdens and posing stability risks. To this end, we have made multiple optimizations to the rescheduler in v1.6.0:

1. Optimize the LowNodeLoad plug-in:

2. Enhance MigrationController:

3. Add the global rescheduling configuration parameter MaxNoOfPodsToEvictTotal. It caps the total number of pods evicted by the entire rescheduler, which reduces the burden on the cluster and improves stability.
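A global cap such as MaxNoOfPodsToEvictTotal behaves like a counter shared by every rescheduling plug-in. The sketch below illustrates that idea with a hypothetical `EvictionLimiter` class, which is not Koordinator's code. Each plug-in asks the limiter before evicting, and once the total is reached, further evictions are refused no matter which plug-in asks. The plug-in names `plugin-a` and `plugin-b` are placeholders.

```python
import threading
from typing import List, Optional, Tuple


class EvictionLimiter:
    """Hypothetical global eviction budget shared by all rescheduling plug-ins."""

    def __init__(self, max_total: Optional[int]) -> None:
        self.max_total = max_total  # None means no global limit
        self.evicted: List[Tuple[str, str]] = []  # (plugin, pod) pairs that were allowed
        self._lock = threading.Lock()  # plug-ins may ask concurrently

    def try_evict(self, plugin: str, pod: str) -> bool:
        with self._lock:
            if self.max_total is not None and len(self.evicted) >= self.max_total:
                return False  # global budget exhausted, whichever plug-in is asking
            self.evicted.append((plugin, pod))
            return True


limiter = EvictionLimiter(max_total=3)
requests = [("plugin-a", "pod-a"), ("plugin-a", "pod-b"),
            ("plugin-b", "pod-c"), ("plugin-a", "pod-d")]
results = [limiter.try_evict(plugin, pod) for plugin, pod in requests]
print(results)  # [True, True, True, False]
```

Because the budget is global rather than per plug-in, adding more rescheduling strategies cannot multiply the number of evictions in a single round.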

We would like to thank the community developers @AdrianMachao, @songtao98, @LY-today, @zwForrest, @JBinin, @googs1025, and @bogo-y for their contributions to scheduling and rescheduling optimization.

The Koordinator community will continue to strengthen GPU resource management and scheduling. We will provide rescheduling plug-ins that further address GPU fragmentation caused by unbalanced resource allocation, and we plan to introduce more features in the next version to support more complex workload scenarios. We will also further optimize resource reservation and hybrid deployment to support finer-grained scenarios.

Currently, the community is planning the following proposals:

• Fine-grained device scheduling support Ascend NPU

• Rescheduling to address the imbalance of different types of resources on a single node

• PreAllocation: Reservation support binding to scheduled pods

The main usage issue to be addressed is as follows:

• NRI Plugin Conflict: Duplicate CPU Pinning Attempt Detected

The long-term proposal is as follows:

• Provide an Evolvable End to End Solution for Koordinator Device Management

We encourage user feedback and welcome more developers to participate in the Koordinator project to promote its development!
