
By Koordinator Team

Since its release in April 2022, Koordinator has shipped eight versions. Over more than half a year of development, the Koordinator community has attracted many outstanding engineers from a range of companies, who have contributed ideas, code, and use cases that have helped the project mature.

On November 3, at the Apsara Conference 2022 in Hangzhou, Yu Ding (Head of the Cloud-Native Application Platform of Alibaba Cloud Intelligence) announced that Koordinator 1.0 was officially released.

If you have not been following the co-location and scheduling field, you may not know much about Koordinator. With Koordinator 1.0 newly released, this article reviews the development history of the project in detail, explains its core ideas and vision, and introduces the technical concepts behind this rapidly developing cloud-native co-location system.

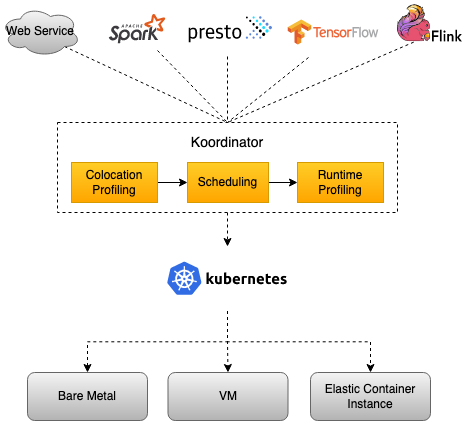

The name Koordinator comes from the word coordinator, with the initial letter changed to K as a nod to Kubernetes. The name fits the problem the project sets out to solve: coordinating and orchestrating different workloads in Kubernetes clusters so that they can run on the same cluster, and on the same node, with the best possible placement.

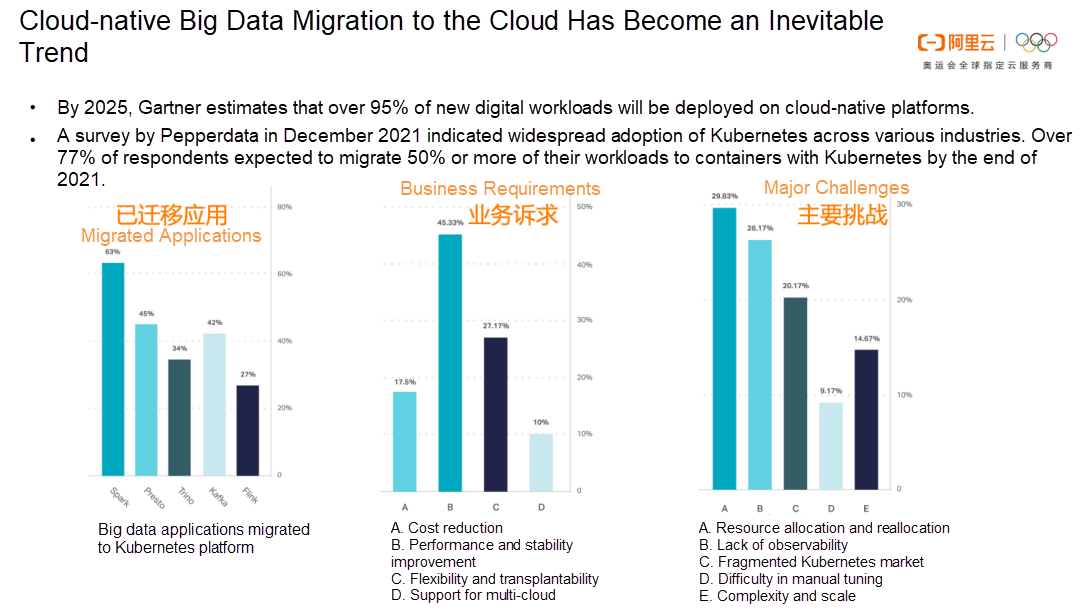

Container co-location technology originated in Google's internal Borg system, which remained something of a mystery to the industry until the relevant papers were published in 2015. Meanwhile, Hadoop-based technologies for big data task scheduling met enterprises' early demands for big data computing. Kubernetes, the cloud-native container scheduling system, was open-sourced in 2014. Drawing on Borg's design ideas, designers of the Borg system built Kubernetes around the requirements of application scheduling in the cloud era. The extensibility of Kubernetes lets it adapt to diverse workloads and helps users improve day-to-day O&M efficiency. With its vigorous growth, Kubernetes has gradually become the de facto industry standard, and more and more workloads now run on it. Big data workloads in particular have been migrating to Kubernetes over the past two years.

Alibaba started the research and development of co-location technology as early as 2016. By 2022, the internal co-location system had gone through three rounds of architecture upgrades and had been tested by Double 11 Global Shopping Festival peak traffic for many years. Alibaba has now completed cloud-native co-location across its full business, at a scale of over ten million cores, with co-located CPU utilization exceeding 50%. Co-location technology helped Alibaba significantly reduce computing costs during the 2022 Double 11 Shopping Festival. Along the way, Alibaba has also taken some detours and accumulated a great deal of practical production experience.

Alibaba officially open-sourced Koordinator in April 2022 to help enterprises avoid those detours and gain the resource-efficiency benefits of cloud-native co-location more quickly. With the help of the neutral, open-source community that Alibaba has established, enterprises can implement scheduling solutions for multiple types of co-located workloads based on standard Kubernetes, achieve a consistent cloud-native co-location architecture on and off the cloud, reduce system O&M costs, and maintain healthy and sustainable development.

Since its release, Koordinator has continuously learned from Kubernetes and other open-source predecessors in its systems and specifications, and has gradually formed a relatively complete community operation mechanism. In the community, we play down the formal position of individual members and invite everyone to take part in building the community as users and developers. Likewise, the future roadmap and development plan of Koordinator will come from the feedback, requirements, and consensus of all members and users rather than being dictated by Alibaba. Whether you are new to the scheduling field or have witnessed the evolution of co-location technology, every cloud-native enthusiast is welcome to follow and participate in the Koordinator project and help build a future-oriented open-source co-location system.

Co-location technology requires a complete, closed-loop scheduling system, but enterprises that adopt it face two major challenges:

Drawing on years of production practice at Alibaba, the Koordinator team has designed specific solutions to these two challenges. The aim is to help enterprises genuinely adopt co-location technology and make good use of Kubernetes, rather than merely to showcase technology.

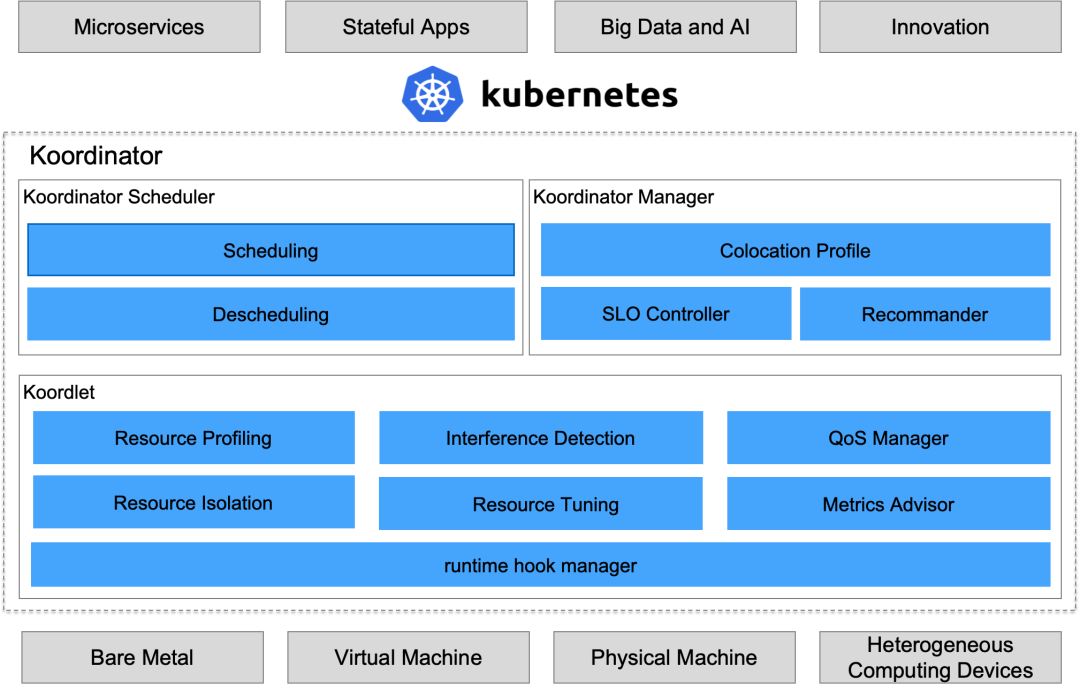

The following figure shows the overall architecture of Koordinator 1.0. Koordinator 1.0 provides complete solutions for co-located workloads orchestration, co-located resource scheduling, co-located resource isolation, and performance tuning. Koordinator 1.0 helps users improve the running performance of latency-sensitive services, mine idle node resources, and allocate them to computing tasks in need, increasing the utilization rate of global resources.

After the Double 11 Shopping Festival of 2021, Alibaba announced that the unified scheduling system was first implemented on a large scale, fully supporting Alibaba businesses in the Double 11 Shopping Festival.

Alibaba Cloud's Container and Big Data teams, Alibaba's Resource Efficiency team, and Ant Group's Container Orchestration team spent more than a year on this core Alibaba project and ultimately upgraded co-location technology into unified scheduling technology. Today, the unified scheduling system fully unifies the scheduling of Alibaba's e-commerce, search and promotion, and MaxCompute big data workloads. It unifies pod scheduling and high-performance task scheduling, provides a complete, unified resource view with coordinated scheduling, supports the co-location of various complex business forms, and increases utilization. It supports large-scale resource scheduling across dozens of data centers, millions of containers, and tens of millions of cores worldwide.

As a practitioner of cloud-native co-location technology, Alibaba is devoted to bringing the technical concept of co-location into production. Last year, Alibaba reached a co-location scale of more than ten million cores during the Double 11 Global Shopping Festival. With the help of co-location technology, it cut resource costs for the promotion by more than 50% and achieved a 100% speedup in the path for rapidly scaling resources up and down, which helped deliver a smooth user experience.

Driven by peak scenarios such as the Double 11 Shopping Festival, Alibaba's co-located scheduling technology has continued to evolve, and Alibaba has accumulated a great deal of practical production experience. Today, the system is in its third generation as a cloud-native, full-service co-location system. The architecture of Koordinator is based on Alibaba's internal ultra-large-scale production practices. Combined with the real demands of enterprise container customers, Koordinator goes further in standardization and generalization, making it the first production-ready open-source co-location system based on standard Kubernetes.

Co-location is a set of resource scheduling solutions for refined orchestration of latency-sensitive services and a hybrid deployment of big data computing workloads. The core technology is listed below:

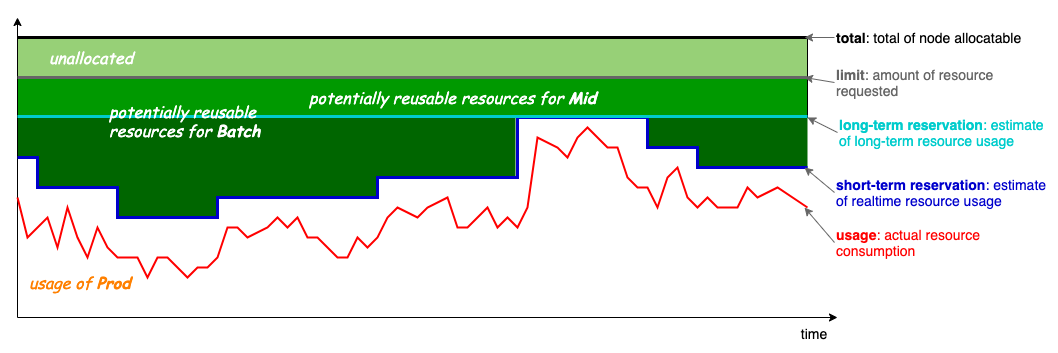

The preceding figure shows the co-located resource overcommitment model of Koordinator, which is also the most critical and core part of the co-location. The basic idea of overcommitment is to use those allocated but unused resources to run low-priority tasks. The four lines shown in the figure represent resource usage (red line), short-term reservation (blue line), long-term reservation (light blue line), and total resources (black line).
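The basic idea of overcommitment can be sketched in a few lines of Python. This is an illustrative sketch only, not Koordinator's actual formula: the function name `reclaimable_millicores`, the fixed safety margin, and the choice to treat a reservation estimate (for example, the short-term reservation line) as the ceiling of expected usage are all assumptions made for this example.

```python
def reclaimable_millicores(allocated, reserved, safety_margin_percent=10):
    """Estimate CPU (in millicores) that can be lent to low-priority tasks.

    allocated: CPU already allocated to high-priority pods.
    reserved:  estimate of what those pods will really use, e.g. a
               short-term reservation (assumption for this sketch).
    A safety margin (hypothetical default: 10% of allocated) is kept free
    so that bursts of the high-priority pods are not starved.
    """
    margin = allocated * safety_margin_percent // 100
    # Allocated but unused resources, minus the margin, never negative.
    return max(0, allocated - reserved - margin)


if __name__ == "__main__":
    # 32 cores allocated, 12 cores expected to be used in the short term.
    print(reclaimable_millicores(32000, 12000))
```

Keeping a margin is a design choice of the sketch: it trades some utilization for headroom when the usage estimate turns out to be too low.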

The resource model is sufficiently refined and flexible. It supports a wide range of online services, short-lived batch processing tasks (such as MapReduce), and real-time computing tasks. Koordinator schedules co-located resources based on this resource model and combines it with priority preemption, load awareness, interference identification, and QoS assurance technologies to build the underlying core system of co-located resource scheduling. The Koordinator community will build on this idea and continue to develop scheduling capabilities for co-location scenarios to solve the real business problems that enterprises face.

The biggest challenge for enterprises adopting co-location is getting applications to run on the co-location platform. This first step is often the biggest obstacle. Drawing on internal production experience, Koordinator designed a zero-intrusion co-located scheduling system to address this issue.

Users can introduce Koordinator to the production environment easily through efforts in these three areas. Thus, the first obstacle of applying co-location to the production environment is well addressed.

Since the release of Koordinator in April 2022, the community has been built around the scheduling of co-located resources to solve the core problems of resource orchestration and resource isolation in the co-located tasks. The Koordinator community is always built around three major capabilities during version iteration: task scheduling, differentiated service level objective (SLO), and QoS-aware scheduling capabilities.

From the outset, Koordinator has aimed to support co-located scheduling of various workloads in Kubernetes to improve their runtime efficiency and reliability, including machine learning and big data workloads with All-or-Nothing requirements. For example, when a job is submitted, multiple tasks are generated. These tasks are expected to either all be scheduled successfully or not be scheduled at all. This requirement is called All-or-Nothing, and the corresponding implementation is called Gang Scheduling (or Coscheduling). Koordinator implements Enhanced Coscheduling based on the existing Coscheduling in the community to meet the All-or-Nothing scheduling requirements:

In an enterprise, a Kubernetes cluster is often a large cluster shared by multiple products and R&D teams. A large share of its resources, including CPU, memory, and disk, is managed by the resource O&M team.

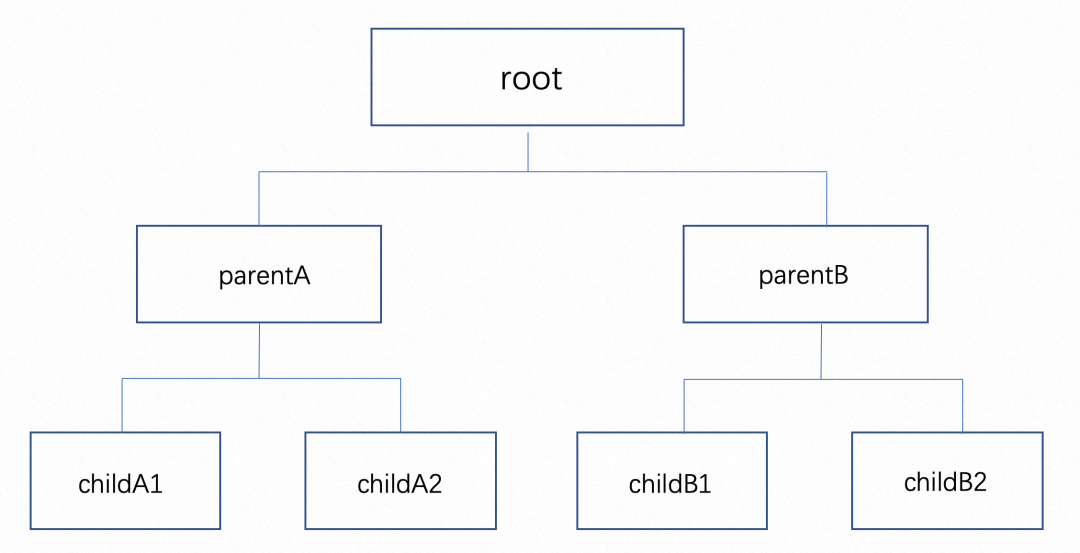

Koordinator realizes Enhanced ElasticQuota Scheduling based on the ElasticQuota CRD of the community to help users manage resource quotas, improve resource usage efficiency, reduce costs, and increase benefits. The Enhanced ElasticQuota Scheduling has the following enhancements:

Through the quota borrowing mechanism and fairness guarantee mechanism of Koordinator ElasticQuota Scheduling, Koordinator allocates idle quota to the businesses that need it most. When the owner of the quota needs it back, Koordinator ensures that the quota is available. Quotas are managed in a tree structure, which maps easily to the organizational structure of most companies and meets quota management demands both within a department and across departments.

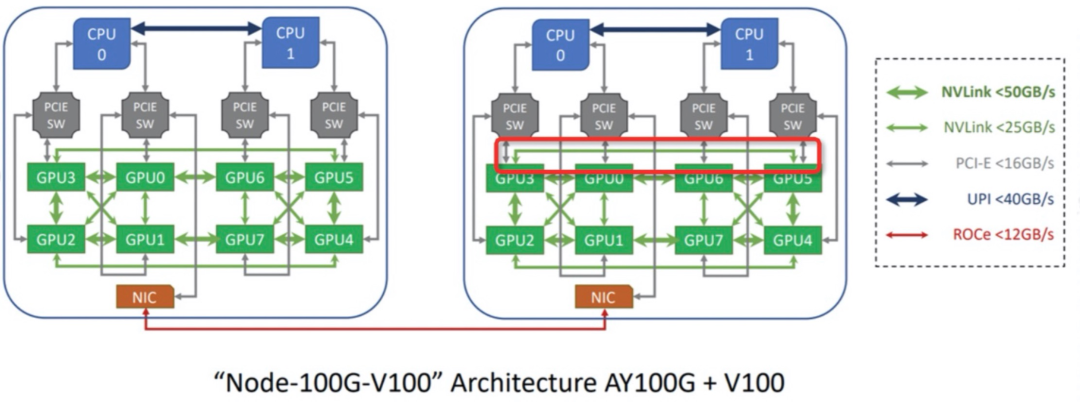

Heterogeneous resources (such as GPU, RDMA, and FPGA) are widely used in the AI field. Koordinator provides fine-grained device scheduling management mechanisms for heterogeneous resource scheduling scenarios, including:

Differentiated SLO is the core co-location capability provided by Koordinator. It ensures that pods keep running stably after resources are overcommitted. Koordinator introduces a well-designed priority and QoS mechanism, and users onboard their applications according to this best practice. Combined with a comprehensive resource isolation policy, this ultimately guarantees the quality of service of different applications.

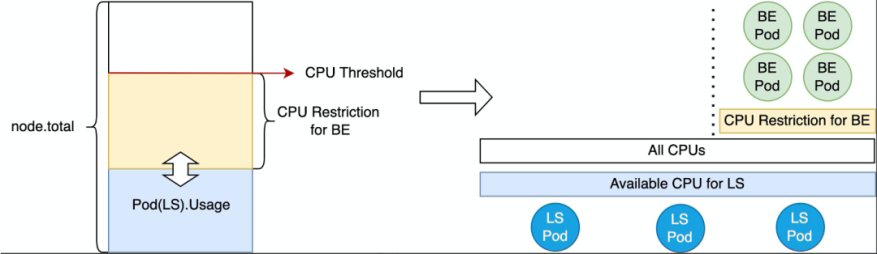

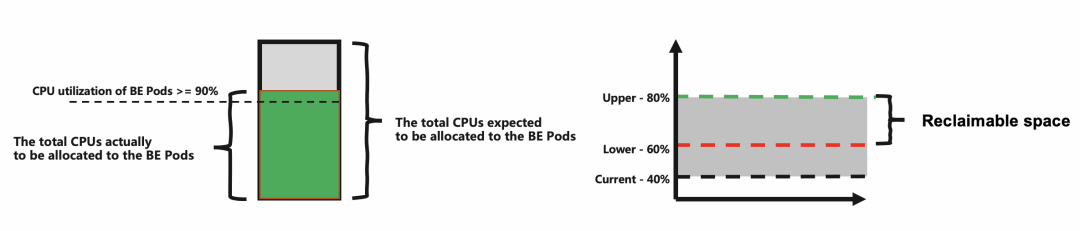

The koordlet, the node-level component of Koordinator, adjusts the CPU resource quota of BestEffort pods based on the load level of the node. This mechanism is called CPU Suppress. If the load of the online service applications on the node is low, the koordlet allocates more idle resources to the BestEffort pods. If the load of the online service applications increases, the koordlet returns the CPU resources allocated to the BestEffort pods to the online service applications.
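The behavior of CPU Suppress can be illustrated with a small Python sketch. The formula used here (a node-level utilization threshold minus what online pods and the system already use) and the default threshold of 65% are assumptions made for illustration, and `be_cpu_budget` is a hypothetical helper, not a koordlet function.

```python
def be_cpu_budget(node_capacity, online_used, system_used, threshold_percent=65):
    """Return the CPU (millicores) that BestEffort pods may use right now.

    Assumption: the node should stay below `threshold_percent` of its
    capacity, so BestEffort pods get whatever is left under that line
    after online applications and system daemons are accounted for.
    """
    ceiling = node_capacity * threshold_percent // 100
    return max(0, ceiling - online_used - system_used)


if __name__ == "__main__":
    # Online load is low: BestEffort pods receive a large budget.
    print(be_cpu_budget(16000, online_used=4000, system_used=1000))
    # Online load rises: the budget shrinks and CPU returns to online pods.
    print(be_cpu_budget(16000, online_used=9000, system_used=1000))
```

Recomputing the budget periodically from fresh node metrics gives the give-and-take behavior described above.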

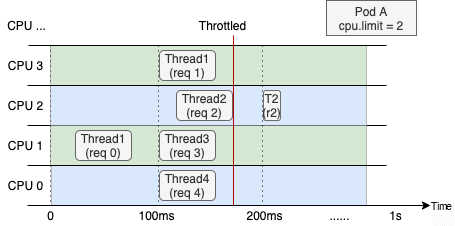

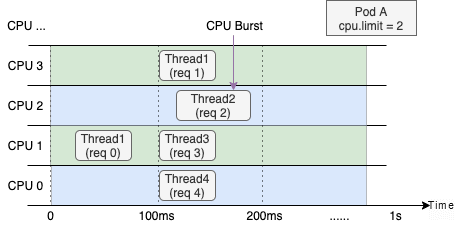

CPU Burst is an SLO-aware resource scheduling feature that users can apply to improve the performance of latency-sensitive applications. The kernel scheduler throttles a container's CPU when the container reaches the CPU limit set for it. This is called CPU throttling, and it degrades the performance of the application.

Koordinator automatically detects CPU Throttle events and automatically adjusts the CPU Limit to an appropriate value. The CPU Burst mechanism can significantly improve the performance of latency-sensitive applications.

When latency-sensitive applications serve requests, their memory usage may increase because of burst traffic. BestEffort workloads can run into similar problems, for example, when the current computing load exceeds the expected resource request or limit. These scenarios increase the overall memory usage of the node and have an unpredictable impact on its runtime stability. For example, the quality of service of latency-sensitive applications may degrade, or the applications may even become unavailable. These problems are especially challenging in co-location scenarios.

We implement an active eviction mechanism in Koordinator based on a memory safety threshold. The koordlet periodically checks whether the recent memory usage of nodes and pods exceeds the safety threshold. If it does, the koordlet evicts some BestEffort pods to free up memory. Before the eviction, pods are ordered by the specified priority: the lower the priority, the earlier the eviction. Pods with the same priority are sorted by memory usage (RSS): the higher the memory usage, the earlier the eviction.
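The eviction order described above can be sketched as follows. The `Pod` class, the function name, the 70% default threshold, and the rule of stopping as soon as usage falls back to the threshold are assumptions for this example; only the ordering (lower priority first, then higher memory usage first) comes from the description above.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    priority: int      # lower value = lower priority
    memory_usage: int  # RSS, in any consistent unit


def select_pods_to_evict(be_pods, node_used, node_capacity, threshold_percent=70):
    """Pick BestEffort pods to evict until node memory is under the threshold."""
    limit = node_capacity * threshold_percent // 100
    # Lowest priority first; within one priority, largest memory usage first.
    candidates = sorted(be_pods, key=lambda p: (p.priority, -p.memory_usage))
    victims = []
    for pod in candidates:
        if node_used <= limit:
            break  # assumption: stop once usage is back under the threshold
        victims.append(pod)
        node_used -= pod.memory_usage
    return victims


if __name__ == "__main__":
    pods = [Pod("a", 5, 20), Pod("b", 1, 10), Pod("c", 1, 30)]
    print([p.name for p in select_pods_to_evict(pods, node_used=90, node_capacity=100)])
```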

With CPU Suppress, offline tasks may be suppressed frequently when the load of online applications increases. Although this protects the runtime quality of online applications, it still affects offline tasks. Offline tasks have a low priority, but frequent suppression leaves them with unsatisfactory performance and, in extreme cases, can even affect the quality of service of online applications. For example, if an offline task is suppressed while it holds a special resource (such as a global kernel lock), frequent suppression may lead to problems such as priority inversion, which in turn affects online applications, even though this does not happen very often.

Koordinator proposes an eviction mechanism based on resource satisfaction to solve this problem. We define CPU satisfaction as the ratio of the total CPUs actually allocated to the total CPUs expected to be allocated. If the CPU satisfaction of the offline task group is lower than the threshold and the CPU utilization of the offline task group exceeds 90%, the koordlet evicts some low-priority offline tasks to release resources for higher-priority offline tasks. This mechanism better meets the resource requirements of offline tasks.
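The eviction condition can be written out as a short Python sketch. The satisfaction threshold of 0.6 is a made-up default, and computing utilization as used CPU divided by allocated CPU is an assumption; the 90% utilization bound and the definition of CPU satisfaction follow the text above.

```python
def cpu_satisfaction(allocated, expected):
    """Ratio of CPU actually allocated to CPU expected to be allocated."""
    if expected <= 0:
        return 1.0  # nothing was expected, so the group is fully satisfied
    return allocated / expected


def should_evict_offline(allocated, expected, used,
                         satisfaction_threshold=0.6,
                         utilization_threshold=0.9):
    """Decide whether low-priority offline tasks should be evicted.

    Evict only when the offline group gets too little CPU (low satisfaction)
    AND is really using what it gets (high utilization).
    """
    satisfaction = cpu_satisfaction(allocated, expected)
    utilization = used / allocated if allocated > 0 else 0.0
    return (satisfaction < satisfaction_threshold
            and utilization > utilization_threshold)


if __name__ == "__main__":
    # Expected 10 cores, got 4, and almost all of it is in use.
    print(should_evict_offline(allocated=4000, expected=10000, used=3800))
```

Requiring both conditions avoids evicting tasks that are suppressed but idle anyway.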

Different types of workloads are deployed on the same machine in a co-location scenario. These workloads frequently compete for resources in the lower-level dimensions of the hardware. Therefore, the quality of service of the workload cannot be guaranteed if the competition is intense.

Based on Resource Director Technology (RDT), Koordinator controls how much of the last-level cache (usually the L3 cache on servers) workloads with different priorities can use. RDT also controls the memory bandwidth that workloads can use through the Memory Bandwidth Allocation (MBA) feature. In this way, the L3 cache and the memory bandwidth used by workloads can be isolated, ensuring the quality of service of high-priority workloads and increasing overall resource utilization.

The differentiated SLO capability of Koordinator provides many QoS assurance capabilities on the nodes, which can solve problems of runtime quality. Koordinator Scheduler also provides enhanced scheduling capabilities in the cluster dimension to ensure pods with different priorities and of different types are assigned appropriate nodes during the scheduling phase.

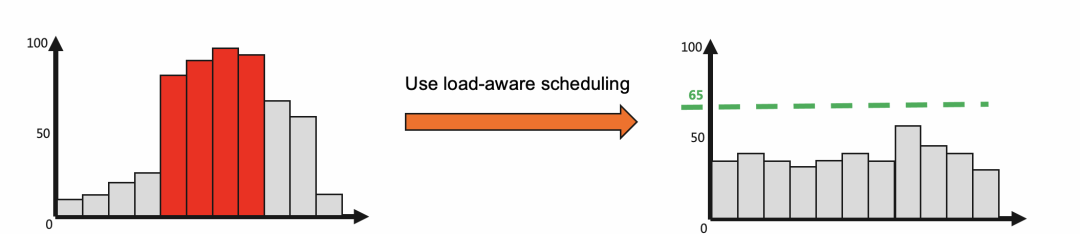

Resource overcommitment can significantly improve the resource utilization of the cluster, but it also highlights uneven resource utilization among nodes in the cluster. This phenomenon also exists in non-co-location environments. Native Kubernetes does not support a resource overcommitment mechanism, and the resource utilization of nodes is not very high, which masks the problem to a certain extent. However, the problem is exposed when resource utilization rises in co-location scenarios.

Uneven utilization shows up as imbalance between nodes and as local load hotspots, which may affect how well workloads run overall. It also shows up as serious resource contention between online applications and offline tasks on heavily loaded nodes, which degrades the runtime quality of online applications.

To solve this problem, the Koordinator scheduler provides a configurable scheduling plug-in that controls the utilization of the cluster. This scheduling capability mainly depends on the node metric data reported by the koordlet. Nodes whose loads are higher than a certain threshold are filtered out during scheduling. On the one hand, this keeps pods from landing on heavily loaded nodes where they cannot obtain good resource guarantees. On the other hand, it keeps heavily loaded nodes from deteriorating further. In the scoring phase, the plug-in prefers nodes with lower utilization. Using a time window and an estimation mechanism, the plug-in also avoids overheating a cold node by scheduling too many pods onto it in a short period.
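A minimal sketch of the filter and score steps is shown below. It is not the plug-in's real code: the function name, the 65% threshold, the linear score, and the `recently_assigned` map (standing in for the estimation of pods scheduled within the time window but not yet visible in metrics) are all assumptions made for illustration.

```python
def pick_node(reported, recently_assigned, threshold_percent=65):
    """Choose a node for a pod using load-aware filtering and scoring.

    reported:          node name -> CPU utilization (%) reported by the node agent
    recently_assigned: node name -> estimated utilization (%) of pods that were
                       just scheduled there and do not show up in metrics yet
    Returns the chosen node name, or None if every node is filtered out.
    """
    best_node, best_score = None, -1
    for node in sorted(reported):  # sorted for a deterministic tie-break
        usage = reported[node]
        if usage > threshold_percent:
            continue  # filter phase: skip nodes above the load threshold
        # Score phase: lower projected utilization gives a higher score.
        projected = usage + recently_assigned.get(node, 0)
        score = max(0, 100 - projected)
        if score > best_score:
            best_node, best_score = node, score
    return best_node


if __name__ == "__main__":
    metrics = {"node-a": 80, "node-b": 30, "node-c": 20}
    # node-c looks coldest, but it just received pods worth about 25%.
    print(pick_node(metrics, {"node-c": 25}))
```

Adding the estimate for recently assigned pods is what keeps a cold node from being picked again and again before its metrics catch up.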

As resource utilization improves, co-location enters more difficult territory, and runtime performance needs to be optimized further. Finer-grained resource orchestration helps ensure runtime quality so that co-location can push resource utilization to a higher level.

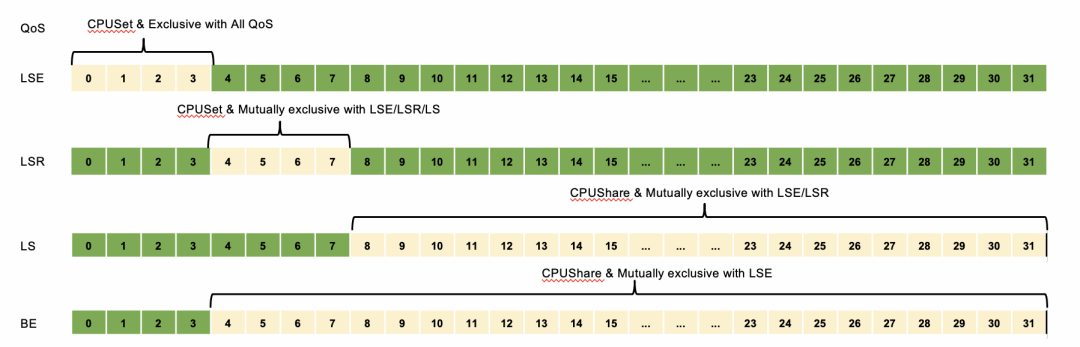

We have split the LS class of Koordinator QoS for online applications into three classes: LSE, LSR, and LS. The split classes provide stronger isolation and better runtime quality. With this split, the Koordinator QoS semantics as a whole are more precise and complete, and they remain compatible with the existing QoS semantics of Kubernetes.

Moreover, we have designed a set of rich and flexible CPU orchestration policies for Koordinator QoS, as shown in the following table:

| QoS | K8s QoS | CPU Core Binding Policy | Description |
| --- | --- | --- | --- |
| LSE | - | FullPCPUs | Meets the needs of a few latency-sensitive applications. |
| LSR | Guaranteed | FullPCPUs/SpreadByPCPUs | Meets the needs of most latency-sensitive applications. When the kubelet CPU Manager Policy is static, Guaranteed is equivalent to LSR. |
| LS | Guaranteed/Burstable | AutomaticallyResize | Meets the needs of most online applications. When the kubelet CPU Manager Policy is none, Guaranteed is equivalent to LS. |
| BE | BestEffort | BindAll | Meets the needs of most offline workloads. |

Different QoS-type workloads have different isolation:

Koordinator Scheduler allocates specific logical CPU cores to LSE pods and LSR pods and records them in the pod annotations. The koordlet on the node then applies the CPUs allocated by the scheduler to the cgroup.

The scheduler allocates CPUs according to the CPU topology. By default, it ensures that the allocated CPUs belong to the same NUMA node for better performance. Pods can be configured with different mutual exclusion policies for CPU scheduling. For example, if several core services of a system perform poorly when deployed on the same physical core but work well when separated, you can configure a mutual exclusion policy for them. The scheduler then allocates different physical cores to pods with this mutual exclusion attribute wherever possible. In the scoring phase, the scheduler selects nodes that meet the CPU scheduling requirements based on the overall situation of the cluster.

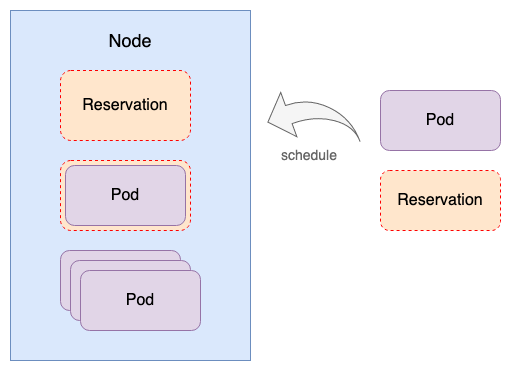

Koordinator allows you to implement atomic resource reservation without intruding into the existing Kubernetes mechanisms and code. The Koordinator Reservation API allows users to reserve resources without modifying the Pod Spec or existing workloads (such as Deployments and StatefulSets). Resource reservation plays an important role in scenarios such as capacity management, fragmentation optimization, scheduling success rate, and rescheduling:

During scheduling, the scheduler makes a comprehensive judgment based on the conditions and configurations in the cluster and then selects the most suitable node for each pod. However, as time passes and workloads change, the node that was once most suitable may no longer be a good fit. Both the differentiated SLO and the scheduler provide rich capabilities to address such problems. However, the differentiated SLO focuses on the situation of a single node and cannot perceive global changes.

From the perspective of control, we also need to make decisions based on the situation in the cluster to evict and migrate abnormal pods to more appropriate nodes, so these pods can have the opportunity to provide better services. Therefore, Koordinator Descheduler was created.

We believe that pod migration is a complex process. It involves steps such as auditing, resource allocation, and application startup, and it is intertwined with application release and upgrade, scale-in and scale-out, and resource O&M by cluster administrators. Therefore, managing the stability risks of pod migration and ensuring that application availability is not affected by it is a critical issue that must be solved.

Here are a few scenarios to help you understand better:

Koordinator defines a PodMigrationJob API based on the CRD. The descheduler or other automated self-healing components can safely migrate pods through PodMigrationJob. When the controller processes a pod migration job, it first attempts to reserve resources through the Koordinator reservation mechanism. If the reservation fails, the migration fails. If the reservation succeeds, the controller initiates an eviction and waits for the reserved resources to be consumed. The whole process is recorded in the PodMigrationJobStatus, and related events are generated.
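The reserve-then-evict sequence can be modeled as a small Python function. This is a conceptual sketch, not the controller's code: the callables `reserve`, `evict`, and `wait_until_consumed` are hypothetical stand-ins for the real cluster operations, the phase and event names are invented for the example, and failing the job when the reservation is never consumed is an assumption.

```python
def migrate_pod(pod, reserve, evict, wait_until_consumed):
    """Run one pod migration and return (phase, events).

    reserve(pod)             -> a reservation handle, or None on failure
    evict(pod)               -> evicts the original pod
    wait_until_consumed(res) -> True once a new pod has used the reservation
    """
    events = []
    reservation = reserve(pod)
    if reservation is None:
        # No resources could be reserved: fail before touching the pod.
        events.append("ReservationFailed")
        return "Failed", events
    events.append("ReservationCreated")

    # Resources are secured, so it is now safe to evict the original pod.
    evict(pod)
    events.append("PodEvicted")

    if wait_until_consumed(reservation):
        events.append("ReservationConsumed")
        return "Succeeded", events
    events.append("ReservationNotConsumed")  # assumption: treated as failure
    return "Failed", events


if __name__ == "__main__":
    phase, events = migrate_pod("pod-1", lambda p: "resv-1",
                                lambda p: None, lambda r: True)
    print(phase, events)
```

Reserving before evicting is the key ordering: the pod is only disturbed once its replacement is known to have a place to run.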

We have implemented a new Descheduler Framework in Koordinator. We consider the information below in rescheduling scenarios:

The Koordinator descheduler framework provides mechanisms for plug-in configuration management (such as enabling, disabling, and unified configuration), plug-in initialization, and plug-in execution cycle management. Moreover, the framework has a built-in controller based on the PodMigrationJob. The controller serves as an evictor plug-in that various rescheduling plug-ins can easily use to migrate pods safely.

Based on the Koordinator descheduler framework, users can implement custom rescheduling policies as plug-ins, in much the same way as they implement custom scheduling plug-ins on the Kubernetes scheduling framework. Users can also implement a controller in the form of a plug-in to support scenarios where rescheduling is triggered by events.

The Koordinator community will continue to enrich the form of co-located big data computing tasks, expand the co-location support of computing frameworks (such as Hadoop YARN), and provide more solutions for co-located tasks. This community will also continuously improve the interference detection and problem diagnosis system on the project, promote more workloads to integrate into the Koordinator ecosystem, and achieve higher resource operation efficiency.

The Koordinator community will remain neutral and work with various vendors to continuously promote the standardization of co-location capabilities. We welcome everyone to join the community and accelerate this process!
