By Hao Chen and Yingqiang Zhang

Recently, at the VLDB 2023 conference held in Vancouver, Canada, the paper "PolarDB-SCC: A Cloud-Native Database Ensuring Low Latency for Strongly Consistent Reads", written by the Alibaba Cloud ApsaraDB team, was accepted to the VLDB Industrial Track.

The paper presents PolarDB-SCC, a cloud-native database with a primary-secondary architecture that provides globally strongly consistent reads. This architecture has been in production for over a year. It is the first cloud-native primary-secondary database to achieve globally consistent reads transparently to applications, which solves the consistency issues that many customers face.

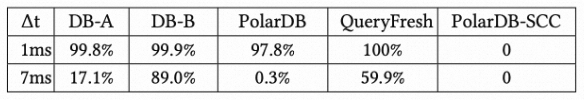

Existing cloud-native single-write-multiple-read databases built on shared storage typically ship and replay logs asynchronously. As a result, their read-only (RO) nodes can return stale data and guarantee only eventual consistency. We tested several such databases: data is first written to the read-write (RW) node, then read from an RO node shortly afterward, and we measure the proportion of stale reads on the RO node. The results, shown below, include two anonymized commercial cloud-native databases, DB-A and DB-B; "PolarDB", which denotes PolarDB without the SCC feature; and QueryFresh, an academic solution. Only PolarDB-SCC avoids stale reads completely.

Two algorithms are commonly used to provide strong consistency on RO nodes: commit-wait and read-wait. With commit-wait, the RW node cannot commit a transaction until its logs have been transmitted to and replayed on every RO node, which significantly degrades RW performance. With read-wait, an RO node that receives a request first obtains the RW node's current maximum commit timestamp and then waits until its own log playback reaches that timestamp before processing the request, which increases request latency on RO nodes.
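The read-wait algorithm can be sketched as follows. This is a minimal, hypothetical Python model, not PolarDB code: it assumes commit timestamps are monotonically increasing integers, and `fetch_rw_max_commit_ts` stands in for the round trip to the RW node. Note that every read pays for one timestamp fetch plus a possible wait on log playback, which is the latency PolarDB-SCC sets out to reduce.

```python
import threading


class ReadOnlyNode:
    """Toy RO node that replays logs in timestamp order (hypothetical sketch)."""

    def __init__(self) -> None:
        self.applied_ts = 0                  # highest commit timestamp replayed
        self._cond = threading.Condition()

    def apply_log(self, commit_ts: int) -> None:
        # Called by the log-apply thread after replaying a log record.
        with self._cond:
            self.applied_ts = max(self.applied_ts, commit_ts)
            self._cond.notify_all()

    def read_wait(self, fetch_rw_max_commit_ts, timeout: float = 1.0) -> int:
        # 1. Ask the RW node for its current maximum commit timestamp.
        target = fetch_rw_max_commit_ts()
        # 2. Block until local log playback has reached that timestamp.
        with self._cond:
            ok = self._cond.wait_for(lambda: self.applied_ts >= target, timeout)
        if not ok:
            raise TimeoutError(f"log playback did not reach {target}")
        # 3. Only now is it safe to execute the read.
        return target
```

For example, if playback is at 3 and the RW node reports 7, `read_wait` blocks until the apply thread calls `apply_log(7)`.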

To achieve global consistency with low latency, we redesigned and implemented PolarDB-SCC. Deeply integrated with RDMA (Remote Direct Memory Access), it replaces the traditional primary-secondary log replication architecture with an interactive, multi-dimensional mechanism for synchronizing information between primary and secondary nodes. Its design reduces how often RO nodes must fetch timestamps from the RW node and avoids unnecessary waits for log playback. As a result, RO nodes serve globally strongly consistent reads with minimal performance loss.

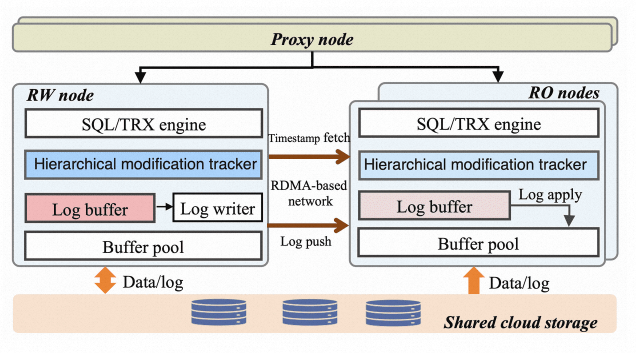

PolarDB-SCC uses a shared-storage, single-write-multiple-read architecture consisting of one read-write (RW) node and multiple read-only (RO) nodes, which can be connected to proxy nodes. A proxy performs read/write splitting: it distributes read requests across the RO nodes and forwards write requests to the RW node, balancing load transparently to the application. To transmit logs and obtain timestamps quickly, the RW and RO nodes can be connected over RDMA.
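Read/write splitting at the proxy can be illustrated with a deliberately simplified, hypothetical router. The class name, the round-robin policy, and the rules that keep `SELECT ... FOR UPDATE` and statements inside explicit transactions on the RW node are assumptions for illustration; the actual PolarDB proxy logic is not described in this article.

```python
import itertools
from typing import List


class ReadWriteSplitProxy:
    """Hypothetical read/write-splitting router (illustration only)."""

    def __init__(self, rw_node: str, ro_nodes: List[str]) -> None:
        self.rw_node = rw_node
        # Round-robin iterator over RO nodes; None if there are no RO nodes.
        self._ro_cycle = itertools.cycle(ro_nodes) if ro_nodes else None

    def route(self, sql: str, in_transaction: bool = False) -> str:
        text = sql.lstrip().upper()
        is_plain_read = text.startswith("SELECT") and "FOR UPDATE" not in text
        # Writes, locking reads, and statements in an explicit transaction go
        # to the RW node; plain reads are spread across the RO nodes.
        if is_plain_read and not in_transaction and self._ro_cycle is not None:
            return next(self._ro_cycle)
        return self.rw_node
```

Without SCC, a read routed to an RO node this way may see stale data; PolarDB-SCC's role is to make such reads strongly consistent so the routing stays transparent to the application.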

The PolarDB transaction system has been redesigned around timestamps, completely replacing MySQL's traditional transaction system, which is based on active transaction arrays. The redesign supports extension to distributed transactions, improves single-node performance, and serves as the foundation of PolarDB-SCC. The core designs of PolarDB-SCC are as follows:

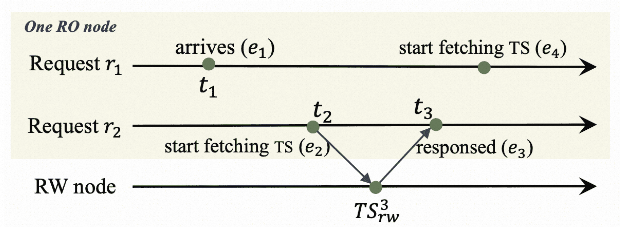

The latest modification timestamp is maintained on the RW node, so an RO node needs to obtain it from the RW node for every request it processes. Although RDMA is fast, this still causes high overhead when the RO node is heavily loaded. To reduce the cost of timestamp acquisition, we propose linear Lamport timestamps. After an RO node obtains a timestamp from the RW node, it caches the timestamp locally. Any request that arrived at the RO node before that fetch was issued can use the cached timestamp directly instead of obtaining a new one from the RW node. Under heavy load, this saves a significant share of the timestamp-fetching overhead.

The RW node maintains global, table-level, and page-level modification timestamps. When an RO node processes a request, it first obtains the global timestamp. If the global timestamp is larger than the timestamp up to which the RO node has replayed logs, the RO node does not wait immediately. Instead, it goes on to compare the timestamps of the table and pages that the request accesses, and it waits for log playback only if a page to be accessed has been modified beyond the RO node's playback timestamp. This avoids unnecessary waiting for log playback.

PolarDB-SCC uses one-sided RDMA to transmit logs from the RW node to RO nodes. This significantly speeds up log transmission and reduces the CPU overhead it causes.

When processing a read request, an RO node must obtain the latest timestamp from the RW node. If the RO node is heavily loaded, these fetches consume more network bandwidth and add latency to the RO node's requests. With linear Lamport timestamps, one timestamp can serve multiple requests under high concurrency. The main idea is that if a request finds that another request sent a timestamp fetch to the RW node after this request arrived, it can reuse the resulting timestamp instead of fetching a new one, while still guaranteeing strong consistency. The following example illustrates this.

In the accompanying figure, one RO node has two concurrent read requests, r1 and r2. Request r1 arrives at $t_1$. At $t_2$, r2 sends a request to the RW node to read its timestamp, and at $t_3$ it receives the RW timestamp $TS_3^{rw}$. Let $e_1$, $e_2$, and $e_3$ denote the events at $t_1$, $t_2$, and $t_3$, and let $\rightarrow$ mean "happens before". For r2's fetch, we have $e_2 \rightarrow TS_3^{rw} \rightarrow e_3$. Because every event on the RO node is stamped with the node's local time, the order of different events on the same RO node can be determined. If $t_1$ is earlier than $t_2$, we can conclude that $e_1 \rightarrow e_2 \rightarrow TS_3^{rw} \rightarrow e_3$. In other words, the timestamp obtained by r2 reflects all updates committed before r1 arrived, so r1 can use r2's timestamp directly instead of obtaining a new one.

Based on this principle, each time the RO node obtains a timestamp from the RW node, it stores the timestamp locally together with the local time at which the fetch was sent ($t_2$ in the example). If a request arrived earlier than that recorded time, it can use the stored timestamp directly.
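A minimal sketch of this reuse rule follows, assuming a single monotonic local clock on the RO node that is used both to stamp request arrivals and to record when each fetch is sent. `LamportTimestampCache` and `fetch_from_rw` are hypothetical names, not PolarDB APIs. For brevity, the sketch only reuses timestamps from completed fetches; a fuller version would also let requests wait for a fetch that is already in flight.

```python
import threading
import time
from typing import Callable, Optional


class LamportTimestampCache:
    """Hypothetical sketch of linear Lamport timestamp reuse on one RO node."""

    def __init__(self, fetch_from_rw: Callable[[], int],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_rw        # round trip to the RW node (assumed)
        self._clock = clock                # RO node's local monotonic clock
        self._lock = threading.Lock()
        self._cached_ts: Optional[int] = None
        self._sent_at = float("-inf")      # local time the cached fetch was sent
        self.fetches = 0                   # number of real round trips made

    def timestamp_for(self, arrival: float) -> int:
        """Return an RW timestamp that covers every commit before `arrival`."""
        with self._lock:
            # The cached fetch was sent after this request arrived, so the
            # timestamp it returned already reflects all earlier commits.
            if self._cached_ts is not None and arrival < self._sent_at:
                return self._cached_ts
        sent_at = self._clock()            # record the send time (t2) ...
        ts = self._fetch()                 # ... then perform the round trip
        with self._lock:
            self.fetches += 1
            if sent_at > self._sent_at:    # keep the most recently sent fetch
                self._cached_ts, self._sent_at = ts, sent_at
        return ts
```

Each request records `arrival` from the same clock when it reaches the RO node. Under high concurrency, all requests that arrived before the latest fetch was sent share that one round trip, which is where the savings come from.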

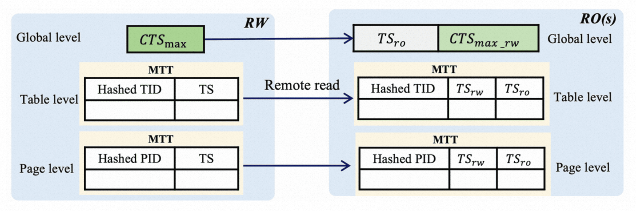

To serve a strongly consistent read, an RO node first needs to obtain the current maximum timestamp of the RW node and then wait until its own log playback reaches that timestamp before processing the request. In practice, however, the data the request needs may already be up to date on the RO node even though playback has not yet reached that timestamp, in which case the wait is unnecessary. To avoid such waits, PolarDB-SCC tracks modifications at a finer granularity. The RW node maintains three levels of modification information: the global timestamp of the latest modification, table-level timestamps, and page-level timestamps.

When processing a read request, the RO node first obtains the global timestamp from the RW node. If the global timestamp is not greater than the RO node's log playback timestamp, the request can proceed immediately. Otherwise, the RO node obtains the timestamp of the table being accessed. If the table timestamp is also greater than the playback timestamp, the RO node checks the timestamp of the page to be accessed. The RO node waits for log playback only when the page timestamp is still greater than its log playback timestamp.
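The three-level check can be written as a small decision function for a request that touches a single page. This is a hypothetical sketch: `wait_target` and the three getter callables stand in for reading the global, table-level, and page-level timestamps from the RW node, and `applied_ts` is the RO node's log playback timestamp. It returns `None` when the read can proceed immediately, or the timestamp that playback must reach first.

```python
from typing import Callable, Optional


def wait_target(applied_ts: int,
                get_global_ts: Callable[[], int],
                get_table_ts: Callable[[int], int],
                get_page_ts: Callable[[int], int],
                table_id: int,
                page_id: int) -> Optional[int]:
    """Return None if the read can run now, else the timestamp to wait for."""
    # Level 1: nothing on the RW node is newer than what this RO node replayed.
    if get_global_ts() <= applied_ts:
        return None
    # Level 2: the accessed table has not been modified beyond applied_ts.
    if get_table_ts(table_id) <= applied_ts:
        return None
    # Level 3: only the page timestamp decides whether playback must catch up.
    page_ts = get_page_ts(page_id)
    return None if page_ts <= applied_ts else page_ts
```

The coarser levels are cheap to check and often settle the question, so the finer (and more numerous) timestamps are consulted only when needed.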

The three levels of timestamps on the RW node are stored in an in-memory hash table. To reduce memory usage, multiple tables or pages may share the same slot, and a slot's timestamp can only be replaced by a larger one. An RO node may therefore obtain a timestamp larger than the true last-modification timestamp of its table or page; this can cause extra waiting but never violates consistency. The specific design is shown in the following diagram, where TID and PID denote the table ID and page ID, respectively. Once an RO node obtains one of these timestamps, it caches it locally following the linear Lamport timestamp design described earlier, so other eligible requests can reuse it.
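The memory-saving scheme, in which several IDs share one slot and a slot's timestamp only moves forward, can be modeled as below. The slot count and the use of Python's built-in `hash` are assumptions for illustration; the paper's actual hash-table layout is not reproduced here.

```python
class ModificationTable:
    """Hypothetical fixed-size timestamp table where IDs may share a slot."""

    def __init__(self, num_slots: int) -> None:
        self._slots = [0] * num_slots

    def _slot(self, obj_id: int) -> int:
        return hash(obj_id) % len(self._slots)

    def record_update(self, obj_id: int, commit_ts: int) -> None:
        # Only a larger timestamp may replace a smaller one.
        i = self._slot(obj_id)
        if commit_ts > self._slots[i]:
            self._slots[i] = commit_ts

    def latest(self, obj_id: int) -> int:
        # Upper bound on obj_id's real last-modification timestamp: it may be
        # larger (another ID shares the slot) but is never smaller.
        return self._slots[self._slot(obj_id)]
```

Because the returned value is never below the true timestamp, an RO node that waits for playback to reach it is guaranteed to see the latest version of the object; a collision only costs some extra waiting.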

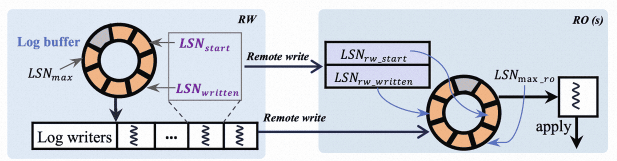

If RO nodes always read logs from shared storage, log transmission is slow. If the RW node sends logs to RO nodes over TCP/IP, it consumes CPU resources on both the RW and RO nodes and incurs relatively high latency. Therefore, in PolarDB-SCC, the RW node writes logs directly into the RO node's log buffer using one-sided RDMA. This requires no CPU resources on the RO node and has low latency.

The specific implementation is shown in the following diagram. The RO and RW nodes maintain log buffers of the same size. A background thread on the RW node writes the contents of the RW log buffer into the RO node's log buffer using RDMA. Because the RO node's CPU is not involved in log transmission, the RO node cannot determine which logs in its buffer are valid, and the RW node cannot determine which logs on the RO node have already been replayed. The log transfer protocol therefore requires additional control information to ensure that unreplayed logs are not overwritten. Due to space limitations, further details are omitted here and can be found in the paper.
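Since the control information used in the paper is not described here, the following is only one plausible scheme, written as a hypothetical Python model in which a `bytearray` stands in for the RO node's RDMA-registered log buffer. The RW side tracks how many bytes it has written, the RO side publishes how many bytes it has applied, and the RW side refuses any write that would overwrite unapplied logs. In a real deployment the RW node would have to learn the RO node's applied position over the network (for example, with a one-sided RDMA read), which this model does not simulate.

```python
class RemoteLogRing:
    """Hypothetical model of an RW-to-RO log ring written without RO CPU."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.buf = bytearray(size)   # RO-side buffer, same size as RW's buffer
        self.written = 0             # total bytes written by the RW node
        self.consumed = 0            # total bytes applied by the RO node

    def rw_write(self, data: bytes) -> bool:
        # Refuse writes that would overwrite logs the RO node has not applied.
        if self.written + len(data) - self.consumed > self.size:
            return False
        for i, b in enumerate(data):
            self.buf[(self.written + i) % self.size] = b
        self.written += len(data)    # advance only after the payload is in place
        return True

    def ro_read(self) -> bytes:
        # RO consumes everything between consumed and written, then publishes.
        n = self.written - self.consumed
        out = bytes(self.buf[(self.consumed + i) % self.size] for i in range(n))
        self.consumed += n
        return out
```

Using ever-increasing byte offsets rather than wrapped indices keeps the "full" and "empty" cases unambiguous: the ring is empty when `written == consumed` and full when `written - consumed == size`.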

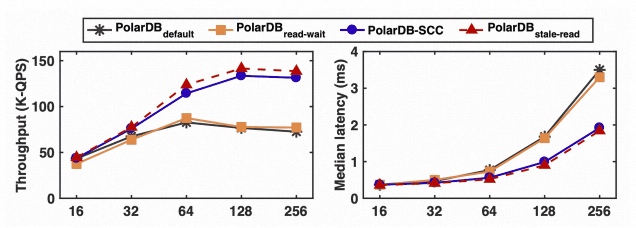

PolarDB-SCC has been officially released in PolarDB for MySQL and has been running in production for over a year, so our experiments were conducted in the Alibaba Cloud public cloud environment. Most experiments were performed on instances with 8 cores and 32 GB of memory. Because PolarDB-SCC is built on top of PolarDB for MySQL, we mainly compare it with the following PolarDB configurations:

PolarDB-default: All requests are processed on the read-write (RW) node, so the system is strongly consistent.

PolarDB-read-wait: Strongly consistent reads on the read-only (RO) nodes are achieved with a basic read-wait scheme.

PolarDB-stale-read: RO nodes process requests directly and may return stale data.

The line chart above shows the performance of each system under different loads using the SysBench read-write workload. At low load (for example, 16 or 32 threads), the systems perform similarly because none has reached its bottleneck; in this regime, processing all requests on the RW node is acceptable. As the load increases, PolarDB-default and PolarDB-read-wait improve slightly but quickly saturate, whereas PolarDB-SCC consistently performs close to PolarDB-stale-read. PolarDB-SCC therefore achieves strong consistency with minimal performance loss.

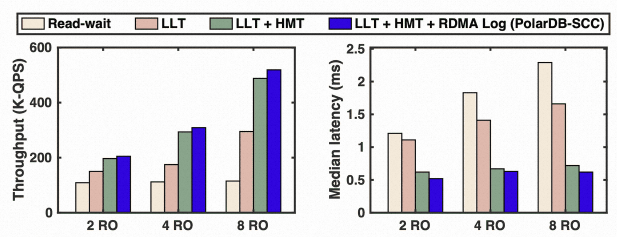

We conducted further tests to evaluate the performance improvement brought about by different designs of PolarDB-SCC. The abbreviation LLT represents linear Lamport timestamps, while HMT stands for hierarchical fine-grained modification tracking. It can be observed that each design of PolarDB-SCC contributes to the overall performance improvement. Moreover, the more RO nodes there are, the more significant the performance improvement becomes.

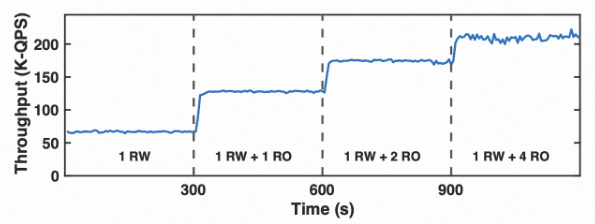

PolarDB-SCC provides a unified, strongly consistent endpoint through the proxies. Applications connect to this endpoint, and the number of RO nodes behind it can be scaled dynamically without any application changes. The serverless feature of PolarDB adjusts the number of RO nodes to match varying workloads. Combined with PolarDB-SCC, this makes the application appear to be connected to a single RW node whose resources scale dynamically, while strong consistency is preserved. We tested this scenario, as shown in the figure below. As the workload grows at 300, 600, and 900 seconds, the cluster adds RO nodes in the background, and performance rises quickly and almost linearly as nodes are added.

Achieving strong consistency on read-only nodes has long been a challenging problem in the database industry. PolarDB-SCC departs from the traditional primary-secondary replication architecture and proposes a new one. It uses several RDMA operations to rebuild data communication between primary and secondary nodes, tracks data modifications at a fine granularity, introduces a new timestamp scheme, and builds on a new timestamp-based transaction system to deliver high-performance global consistency. This feature is available on the official PolarDB website. For more information, visit:

https://www.alibabacloud.com/help/en/polardb/polardb-for-mysql/user-guide/scc
