By Qingda Hu, Haiping Zhang and Lixiang Yuan

DDL is the most resource-intensive SQL operation in a database. This article provides an overview of the experience and progress in end-to-end MDL (Metadata Lock) lock management in Alibaba Cloud PolarDB. The goal is to continuously optimize the user experience and create the best cloud-native database for users.

In daily database operations, users often feel uneasy about DDL due to concerns about its impact on business SQL. The main issue lies in the lock blocking problem caused by MDL table locks during DDL execution. Additionally, the various types of DDL make it difficult for users to understand the locking behavior and anticipate the consequences, adding complexity to the problem. With years of experience from numerous online instances, we truly understand the confusion users face when dealing with MDL lock problems.

This article summarizes the experience and progress achieved by the kernel team of Alibaba Cloud PolarDB for MySQL in end-to-end MDL lock management to continuously strive for lock-free DDL and enable users to execute DDL. Before diving into the details, let's review the main concerns users have regarding DDL locking.

Unfortunately, almost all DDL operations, whether it's the native DDL of the MySQL kernel or various third-party plugins (like gh-ost, pt-osc, and "lock-free changes" by cloud vendors), require MDL mutexes at the table level. This is because DDL aims to modify table structure or definitions, which inevitably involves modifying metadata or dictionary information. Therefore, DDL relies on MDL locks to correctly update metadata, perform file operations, and update corresponding cache information. When DDL modifies metadata, it applies MDL mutexes at the table level to block concurrent metadata query or modification operations, and then safely updates the metadata cache to ensure that all threads parse the corresponding version of table data with the correct version of metadata.

Readers familiar with MySQL may wonder why third-party plugins such as gh-ost appear to show "lock-free" performance during DDL operations. The core difference here lies in the fact that the MySQL kernel and third-party plugins use different strategies to handle the issue of "no lock".

Compared to third-party plugins, the MDL locking mechanism of the MySQL kernel is simple and straightforward. When a DDL operation requests an MDL-X (mutex), if there are uncommitted long transactions or large queries on the destination table, the DDL operation will continue to wait to acquire the MDL-X mutex. Since MDL-X locks have the highest priority, DDL will block all new transactions on the destination table while waiting for MDL-X locks. This can lead to accumulation and congestion of business connectivity, and may even cause the entire business system to fail due to an avalanche effect.

To avoid this problem, the MySQL community has developed many external tools, such as pt-osc and GitHub gh-ost. These tools implement table copying as a method, which involves creating an empty table, copying existing data through select + insert, copying incremental data through triggers or binlogs, and finally switching between the new and old tables through a rename operation. Various tools provided by cloud vendors, like DMS' lock-free changes, have similar principles to these external tools. However, this method also has some obvious disadvantages:

Unfortunately, neither method is perfect in all scenarios, whether it is a third-party plug-in or the MySQL kernel. The PolarDB for MySQL kernel team aims to address both the avalanche and hunger problems while maintaining optimal performance.

After resolving the issue of "not acquiring the lock," we also need to address the problem of "acquiring the lock." If the mutex is held for an extended period, it can lead to issues such as business accumulation and avalanche.

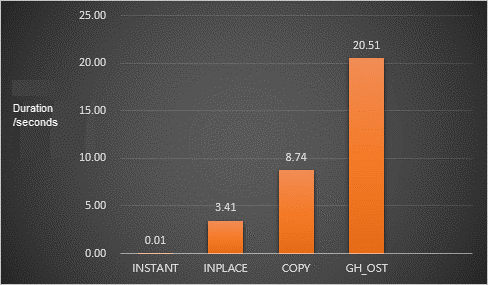

MySQL users are familiar with the three types of DDL in MySQL: INSTANT DDL, INPLACE DDL, and COPY DDL. Among them, online DDL (commonly known as "non-locking table" DDL, including INSTANT DDL and most INPLACE DDL) does not lock the table for most of the execution time, only briefly holding the MDL-X lock of the table when modifying metadata (usually within seconds). This provides a good user experience. MySQL 8.0 has already implemented online DDL capabilities for common operations such as adding indexes, adding columns within seconds, and adding or subtracting primary keys. However, COPY DDL still exists when SQL layer operations are involved. During the execution of COPY DDL, the table remains locked throughout the process (read-only, no writes). SQL layer operations include modifying the character set of a table or the type of a column. To address COPY DDL, PolarDB for MySQL extends the scope of online DDL that doesn't lock tables. For example, PolarDB for MySQL supports Instant Modify Column, allowing you to modify column types within seconds. It also supports all online DDL capabilities at the SQL layer, collectively referred to as Fast DDL.

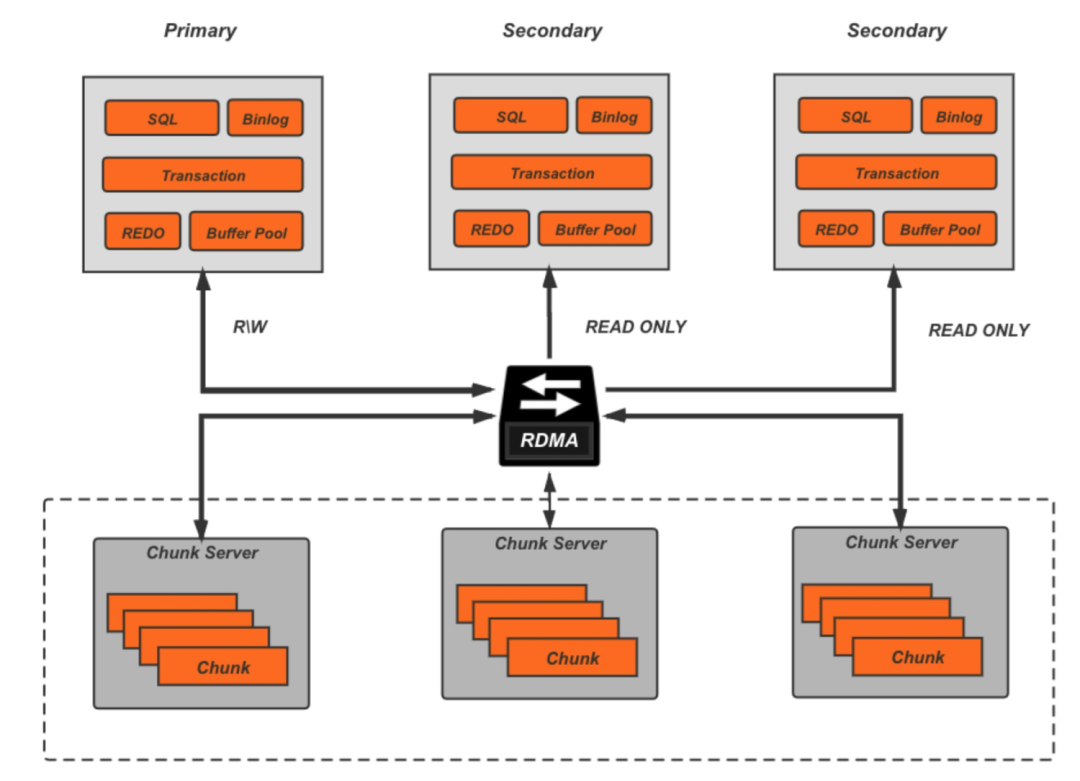

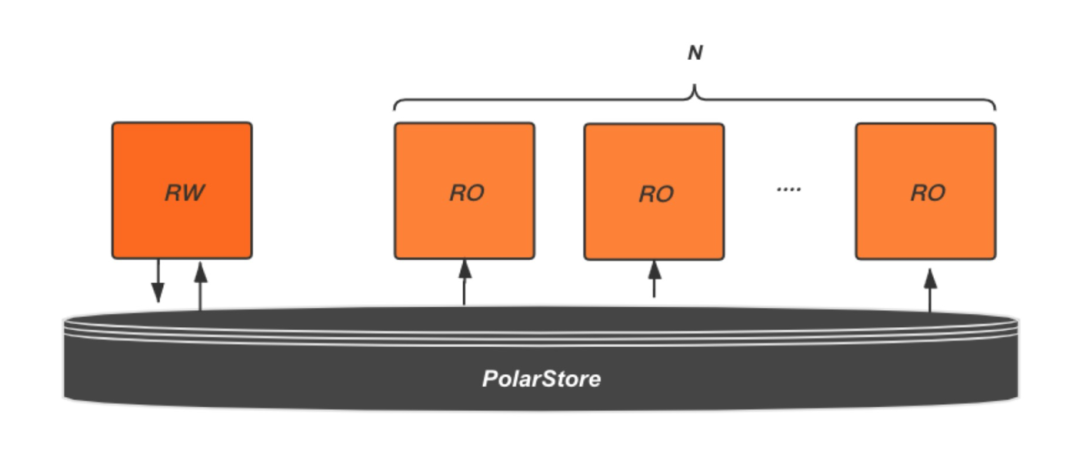

Compared to MySQL, the cluster architecture of PolarDB complicates this problem. MDL locks not only focus on a single node but also encompass locks on the synchronization links of multiple nodes and the cluster. Therefore, a cluster-level end-to-end solution is required. MySQL users are familiar with MySQL primary and secondary clusters based on binlogs. The primary and secondary nodes are logically isolated in the MySQL primary and secondary replication clusters that rely on binlogs. In other words, the MDL lock behavior of the primary node does not affect the secondary node. Therefore, MySQL only needs to consider the MDL lock of a single node. However, PolarDB for MySQL is based on a shared storage architecture. Taking a write-once-read-multiple cluster as an example, a write node and multiple read-only nodes share the same distributed storage and synchronize data among different nodes via physical replication. When a write node performs a DDL operation, multiple read-only nodes can observe real-time data during the DDL process. Hence, the MDL table lock in PolarDB is a cluster-level distributed lock, requiring consideration of lock blocking on multiple nodes.

Based on the architectural features of PolarDB and our years of online operations and maintenance experience, we have identified several objectives to achieve a DDL lock mechanism with a good user experience in the cluster dimension:

1. Solve the problem of avalanche. In the past, DDL failures caused by large transactions or queries on RW write nodes, read-only nodes, or blocking points on the RW->RO physical replication link could trigger a business avalanche. To address this issue, PolarDB for MySQL introduced the Non-Block DDL feature 1 last year, which allows new transactions to access the destination table even if the MDL-X lock cannot be obtained. This feature ensures the stability of the entire business system and has been well-received by many customers.

2. Solve the problem of hunger. In cases where the lock is not available, the Non-Block DDL feature uses methods like retry to avoid DML accumulation and avalanche. However, in scenarios with large transactions or queries, DDL may still fail to obtain the lock and continue to fail. With an increasing number of major users using PolarDB for MySQL, which includes instances with more than 10 read-only nodes, the probability of encountering large queries or transactions in the cluster dimension also increases. To address this issue, PolarDB for MySQL recently released the Preemptive DDL feature [3], which grants the highest MDL lock permission to a DDL. If conditions are met, PolarDB for MySQL will proactively kill transactions and queries that block the DDL, ensuring smooth execution.

3. Solve data consistency and real-time problems. TP databases have high requirements for transactions, and it is crucial to ensure consistency among data changes, meta information/meta information cache changes, and file operations during the DDL process. In PolarDB for MySQL, which is based on shared storage, the complexity of maintaining consistency across multiple nodes becomes even greater. Multiple nodes need to meet consistency requirements during all phases, such as normal data synchronization, database recovery, and point-in-time restoration. PolarDB for MySQL has made a series of optimizations to address these issues. However, this part of the content requires a deeper understanding of database background and code, so it will not be further discussed in this article.

4. Addressing the blocking problem of RW->RO physical replication link.Since its launch five years ago, PolarDB for MySQL has been widely used in various industries. Each industry has different requirements for DDL operations. The following are the specific requirements:

• High-frequency DDL operations, such as those in SaaS and other industries, require high-performance MDL locks. DDL operations are common and frequent. In order to prevent lock blocking from affecting data synchronization across the cluster, PolarDB needs to decouple distributed MDL locks from physical replication.

• DDL operations often come with high business pressure, especially when indexing is involved in high-pressure scenarios. In such cases, a large number of redo logs are generated. PolarDB ensures the stability and low latency of the physical replication link during the DDL process.

To address these issues, PolarDB for MySQL has optimized the entire physical replication link [4]. It utilizes asynchronous thread pools and feedback mechanisms to decouple the synchronization of MDL locks from physical replication. It also optimizes the synchronization and replication speed of redo logs during DDL [5], catering to the synchronization requirements in high-pressure DDL scenarios.

5. Continuous evolution capability: DDL & DML MVCC. As mentioned earlier, in extreme cases, users still need to manually execute Preemptive DDL to resolve the hunger problem. We have been pondering if there is a better way for users to be completely unaware of MDL locks. Readers familiar with InnoDB are likely aware of its row-level MVCC capability. Even if a transaction modifies a row of data but has not been committed, when another transaction queries the same row of data, the transaction creates the corresponding version based on its timestamp through the undo list, without waiting for the lock to be released.

Some readers may wonder why DDL does not provide a similar MVCC capability to prevent DDL and DML operations from blocking each other. The reason is that DDL operations involve changes to various information, such as file operations, table data, metadata, and table structure cache. Achieving MVCC capability for DDL and DML would require significant modifications to multiple modules and code, resulting in excessive code changes and higher stability risks. However, to meet customer demands, the PolarDB kernel team has been exploring the optimal engineering path. In the upcoming version of PolarDB 8.0.2, we will introduce the Instant Add Column feature, providing users with the MVCC capability for high-frequency DDL and DML. In the future, we will continue to support MVCC capability for other high-frequency DDL and DML operations, such as Add Index.

As mentioned before, the non-block DDL feature 1 is designed to address the business avalanche problem caused by MDL lock blocking. This feature adopts a lock acquisition logic similar to that of third-party plug-ins (gh-ost and pt-osc). When a DDL operation fails to acquire an MDL lock, the lock acquisition thread enters a short Sleep phase and then attempts to acquire the MDL lock again. This ensures that the business remains online during DDL execution. Non-block DDL has been tested in a grayscale environment and has gained popularity among many users. In the future, we will consider enabling this feature by default. Additionally, in version 2.2.15 of 8.0.2, non-block DDL is supported at the cluster level. If the primary node has acquired the MDL lock but the synchronized MDL lock on the read-only node is blocked (default blocking time is 50s, controlled by the loose_replica_lock_wait_timeout parameter), non-block DDL will attempt to acquire the lock at the cluster level to implement non-block DDL.

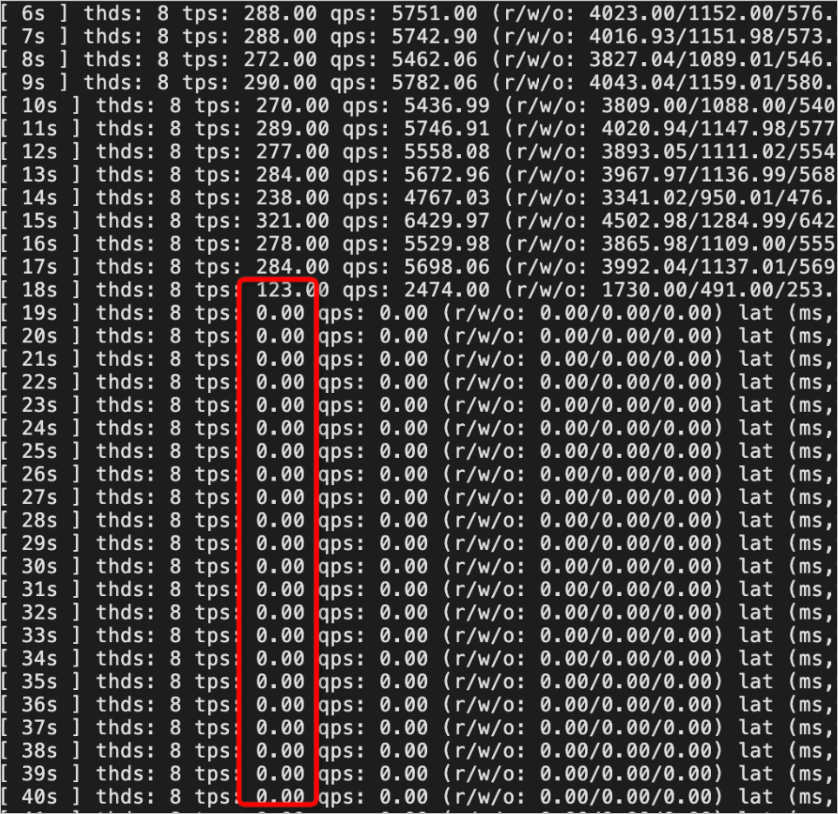

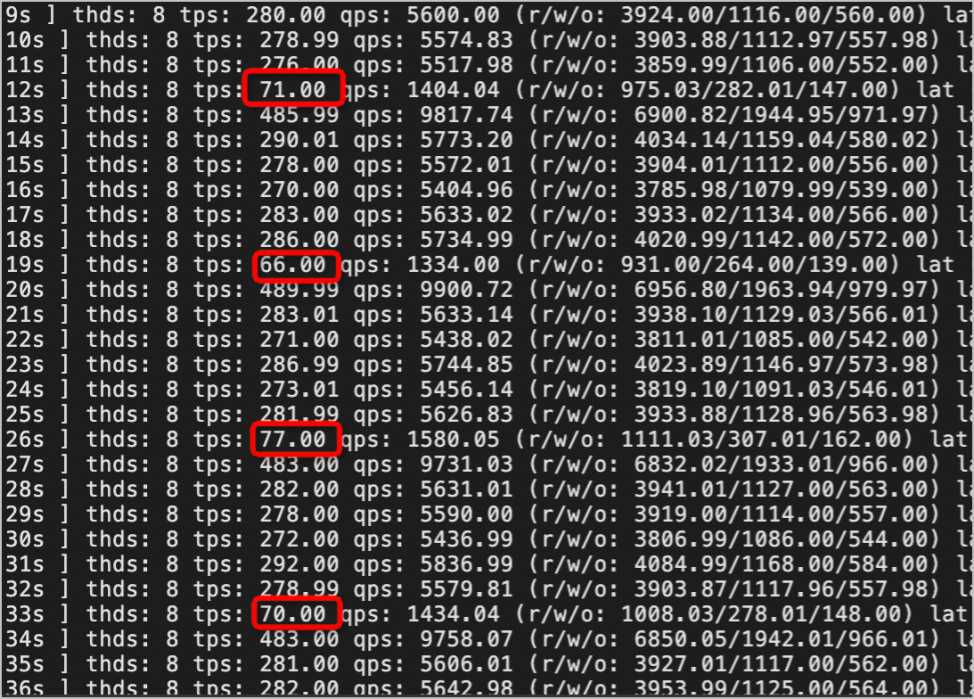

You can enable the non-block DDL feature by setting the loose_polar_nonblock_ddl_mode parameter to ON [2]. Below is a comparison of the impact on your business when enabling the non-block DDL feature versus using the native DDL feature, using sysbench to simulate your business.

1. Start a transaction on the sbtest1 table but do not commit the transaction, so that the transaction holds the MDL lock to the table.

begin;

select * from sbtest1;2. In another session, add columns to the sbtest1 table with Non-Block DDL enabled and disabled and monitor the TPS changes.

# The current DDL cannot acquire the MDL lock because the large query of current session 1 holds the MDL lock and is blocked.

alter table sbtest1 add column d int;The TPS drops to zero and the default timeout period is 31536000. The business is severely affected.

TPS declines periodically but not to zero. It slightly affects the business, and the stability of the system is ensured.

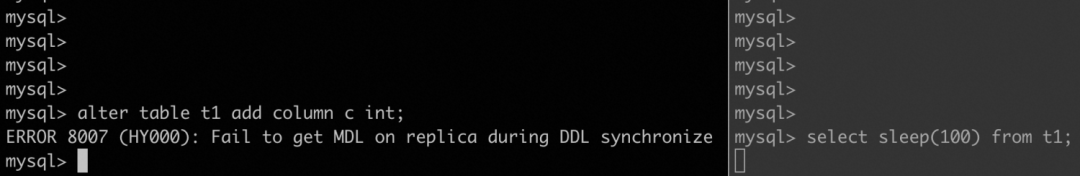

The previous non-block DDL feature resolves the business avalanche problem caused by blocking MDL locks acquired by DDL. However, if DDL fails to acquire MDL locks, it can result in frequent DDL execution failures. Currently, there are occasional DDL execution failures during online shifts due to large queries and transactions on the read-only (RO) nodes, resulting in the error message ERROR 8007 (HY000): Fail to get MDL on replica during DDL synchronize. This error is specific to the shared storage architecture of PolarDB and differs from traditional MySQL. As a result, users are often confused and unsure how to proceed. An official document [6] is available to provide solutions to such problems. According to this document, you can identify the transaction that holds the MDL lock on the read-only node and manually kill it to ensure successful synchronization of the MDL lock. However, this approach is still unclear in certain scenarios. On one hand, users have a limited time window to kill the transaction (currently, the timeout period for MDL lock synchronization is 50 seconds, which can be adjusted using loose_replica_lock_wait_timeout). On the other hand, with the growing number of PolarDB customers, there are many clusters with more than 10 read-only nodes, making manual kill operations inefficient. To address these issues, we have introduced the preemptive DDL feature.

If a read-only node performs a DDL operation on the current table through physical replication, the read-only node will attempt to obtain the MDL lock of the table. Suppose a large query or a long transaction exists on the table and the read-only node fails to obtain the MDL lock within the expected time after you enable the Preemptive DDL feature [3]. In that case, the read-only node will attempt to kill the thread that holds the MDL lock. This ensures successful synchronization of the MDL lock and solves the DDL hunger problem.

You can enable the preemptive DDL feature by setting the parameter loose_polar_support_mdl_sync_preemption to ON. The following shows the experimental effects of enabling and disabling preemptive DDL when a long transaction blocks the synchronized MDL lock of DDL on a read-only node.

1. Query the test.t1 table on a read-only node.

mysql> use test

Database changed

# Execute a large query for 100s.

mysql> select sleep(100) from t1;2. If you perform a column-adding operation on the primary node and the column is blocked, the execution fails.

mysql > alter table t1 add column c int;

ERROR 8007 (HY000): Fail to get MDL on replica during DDL synchronize

Because there is a large query on the read-only node, so synchronizing MDL lock fails, the DDL execution fails and rolls back.

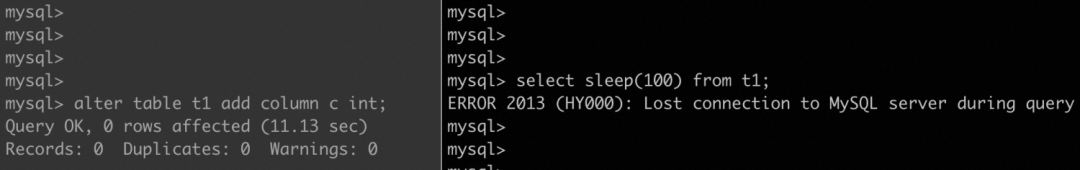

1. Query the test.t1 table on a read-only node.

mysql> use test

Database changed

# Execute a large query for 100s.

mysql> select sleep(100) from t1;2. If you perform a column-adding operation on the primary node and the column is blocked, wait for preemption to occur and the execution succeeds.

mysql> alter table t1 add column c int;

Query OK, 0 rows affected (11.13 sec)

Records: 0 Duplicates: 0 Warnings: 0

After the preemptive DDL feature is enabled, the column is added and the read-only node (shown on the right) can be seen at the same time. The large query has been disconnected.

Both Non-Block DDL and Preemptive DDL are designed to minimize the impact of locks on your DDL changes in scenarios where mutex locks exist. However, users still need to perceive the existence of MDL locks in some scenarios. For example, in extreme scenarios, users still need to manually trigger Preemptive DDL to solve the problem of DDL hunger. We have been exploring whether we can implement finer-grained concurrency control between DDL and DML, similar to InnoDB MVCC capability. However, as mentioned above, DDL is a complex operation, and its execution process involves a series of processes such as file operation, table data change, metadata change, and table cache processing. Therefore, considering the strong coupling of MySQL code, we have segmented this goal to gradually support this ability while controlling code cuts and stability risks. In the first phase, we give priority to supporting the MVCC capability of online high-frequency DDL and DML, that is, meeting the MVCC capability of Instant Add Column and DML according to the statement dimension (user documents to be released in version 8.0.2). In order to be compatible with the default performance of MySQL, we not only support the concurrency of DDL and uncommitted transactions, but also support the readview of DD, so that DML transactions that cross DDL can choose to read table structure information at the isolation level of RC or RR, thus allowing users to decide whether to use the new or old table definition.

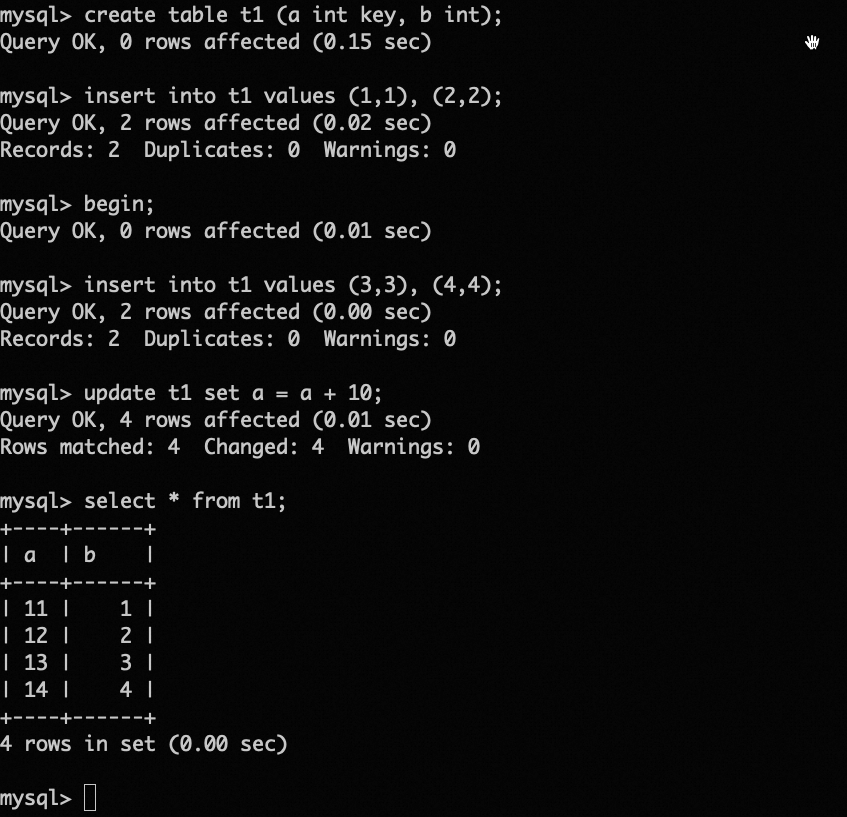

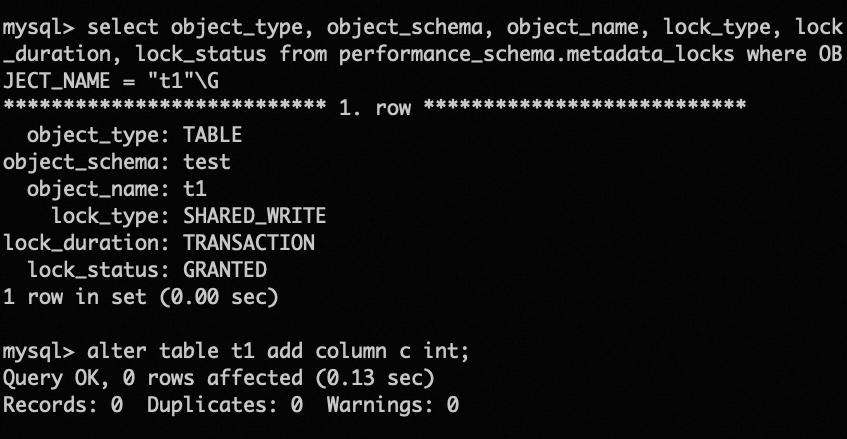

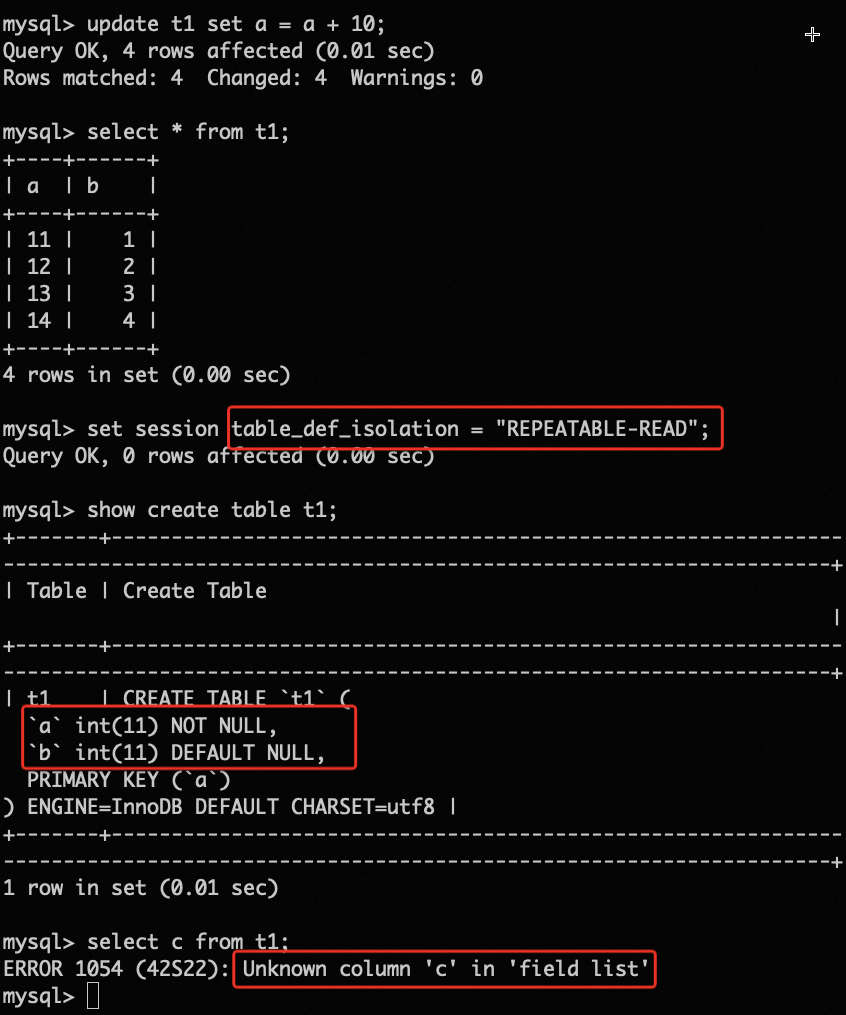

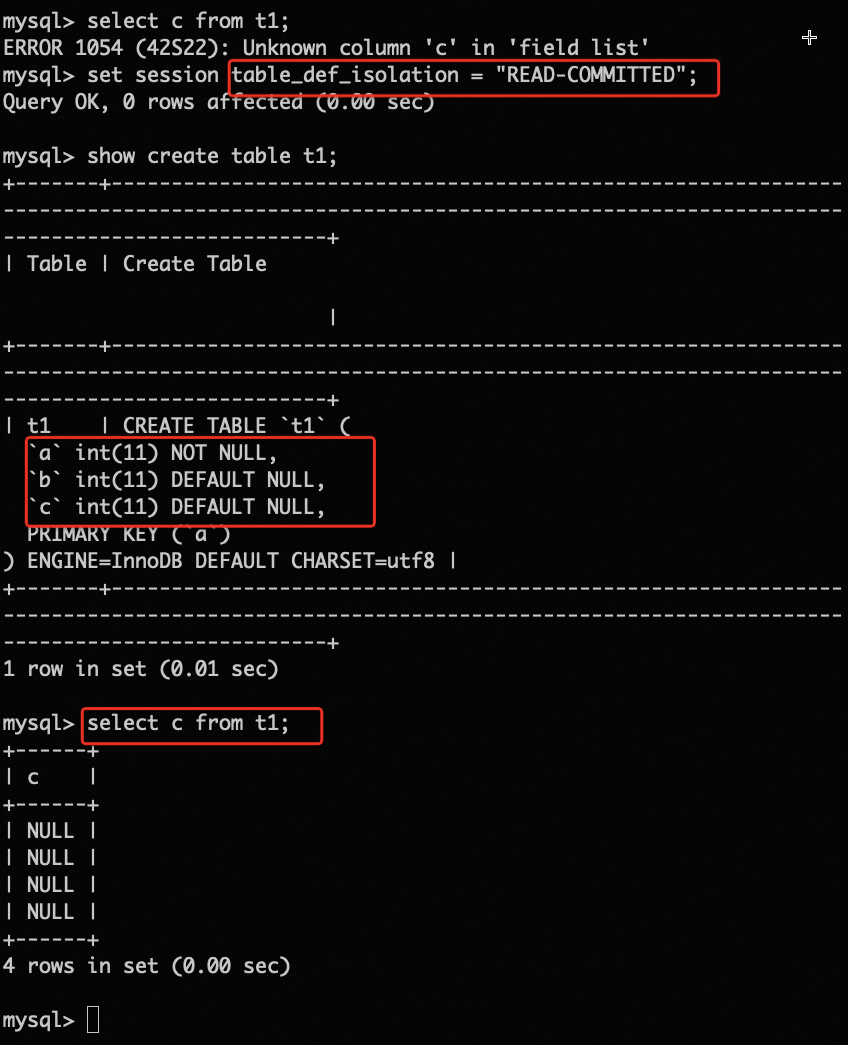

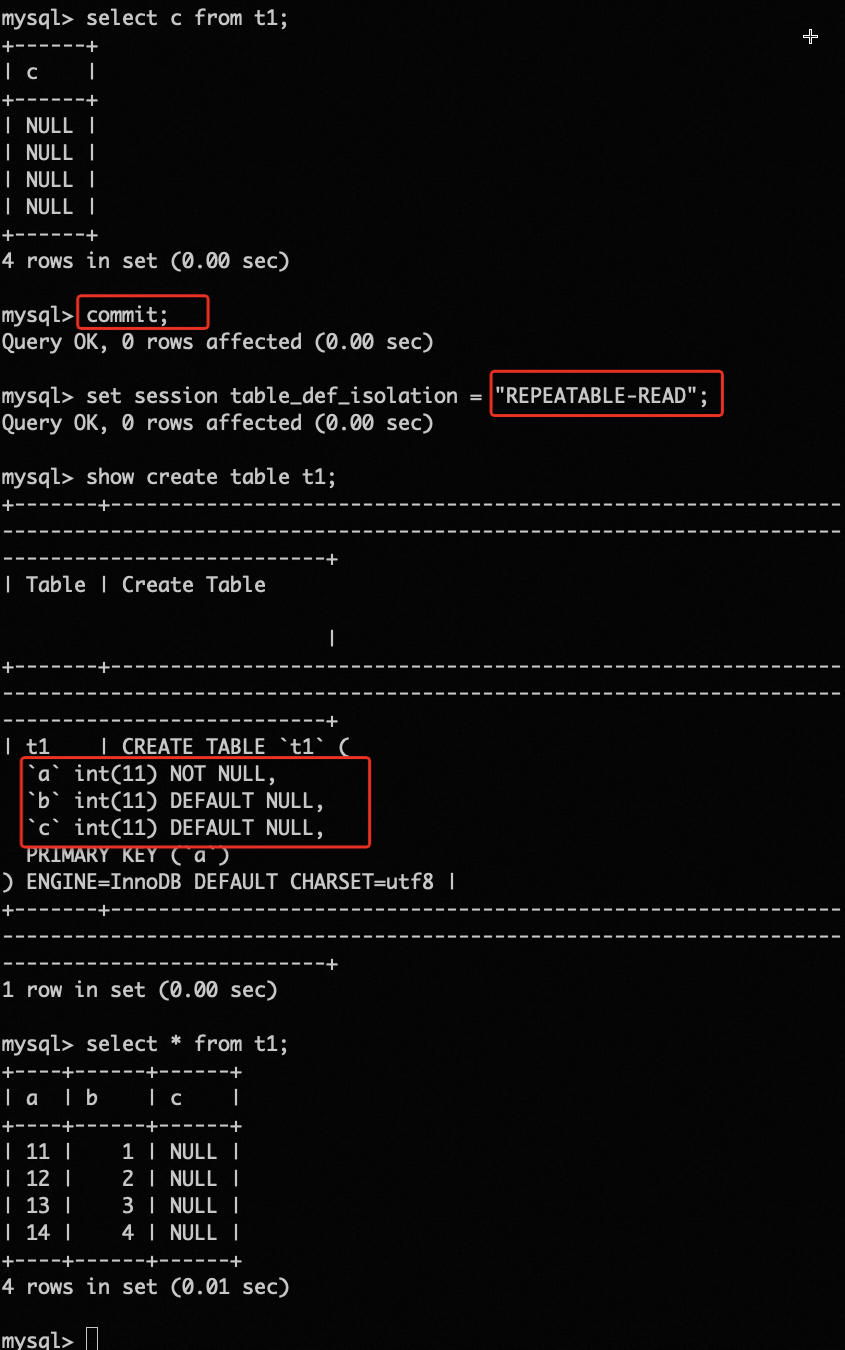

The specific effects are as follows:

Step 1: Start Session A, create a table named t1, and insert some data into it. Then, start a transaction in which insert and update data, and the transaction is not committed.

Step 2 (DDL will not be blocked by uncommitted transactions): Open a new session B and query the performance_schema. At this time, the MDL of t1 is held by an uncommitted transaction in session A. Perform a DDL operation (add column). The operation can be completed immediately without being blocked by uncommitted transactions.

Step 3 (Cross-DDL transactions can select the isolation level used to access the table): Return to the first session A and set the isolation level parameter for table access table_def_isolation to REPEATABLE-READ. Because the DDL is executed after the transaction, the new column c is not visible. The transaction will always see the same table definition as it was at the beginning of the transaction.

Set the table_def_isolation to READ-COMMITTED. Because the DDL has been committed, column c will be visible to the transaction.

After the transaction is committed, the readview of DD is released, and then only the latest table structure can be seen.

Currently, cloud-native databases, whether PolarDB or other vendor databases, provide the write-once-read-multiple capability in the form of "separation between storage and computing" and "shared storage." If you are interested in this architecture, you can read our monthly report (Interpretation of PolarDB physical replication[7] and The hot page optimization of PolarDB physical replication [8]). Readers who are interested in this type of I/O optimization for storage-computing separation scenarios can read our related paper published on VLDB last year (CloudJump: Optimizing Cloud Databases for Cloud Storages [9]).

Simply put, cloud-native databases rely on physical replication (Redo log) to synchronize data among different nodes, while metadata, table data, and file change triggered by DDL also synchronize multiple nodes along with physical replication. The three rely on distributed MDL locks to provide real-time and consistency guarantees. However, when MDL locks are coupled with physical replication, a series of problems arise, especially the consistency problem between log stream, lock synchronization, and file operation. Here, we introduce two types of problems that are closely related to users:

Stability and real-time performance of distributed MDL locks in high-frequency DDL scenarios. In particular, when the MDL lock is blocked, the normal physical log cannot be affected. To solve this problem, PolarDB has designed a new distributed MDL lock mechanism [6], which is enabled by default), which is mainly reflected in the following two aspects:

The stability and real-time performance of physical replication in high-pressure DDL scenarios. In PolarDB, data is indexed using B-tree indexes. However, slow DDL operations often require rebuilding or creating B-tree indexes. For example, creating primary keys, creating secondary indexes, or optimizing tables require B-tree indexes. As a result, a large number of physical logs are generated. These physical logs are often processed on the critical path of DDL operations, which increases the execution time of DDL operations. Additionally, physical replication technology requires read-only nodes to parse and apply the newly generated physical logs. The large number of physical logs generated by DDL operations can significantly impact the log synchronization process of read-only nodes and even render them unavailable. To address these issues, PolarDB provides the DDL physical replication optimization feature [7], which is enabled by default. This feature mainly includes the following improvements:

● Speed up DDL log writing on the primary node: The critical path for writing physical logs on the primary node and applying physical logs on read-only nodes has been optimized, reducing the execution time of creating primary key DDL on the primary node by up to 20.6%.

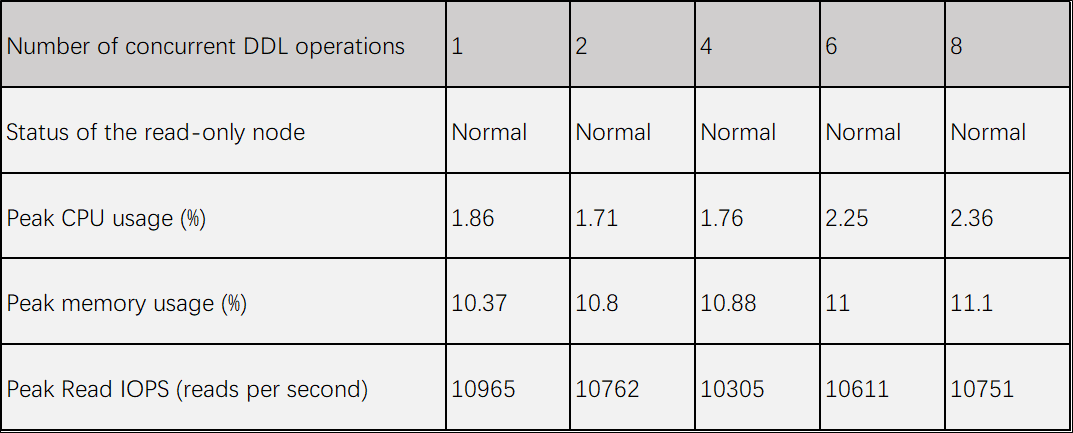

● Speeding up physical replication on read-only nodes: The replication latency of DDL parsing on read-only nodes can be reduced to as low as 0.4%, significantly reducing the hardware overhead of CPU, memory, and I/O. Test data shows that regardless of whether one or eight DDL operations are executed on the primary node, the read-only node remains stable without noticeable fluctuations.

DDL is one of the most cumbersome SQL operations in PolarDB. The ease of use of DDL is an important part of a good experience in PolarDB. This article summarizes the experience and progress of PolarDB in end-to-end MDL lock governance, leaving the simplicity for customers, and continuously optimizing the user experience.

Check out free tier & special offers of Alibaba Cloud database products: https://www.alibabacloud.com/product/databases

[1] Introduction to Non-Block DDL Monthly Report: http://mysql.taobao.org/monthly/2022/10/01/

[2] Non-block DDL User Documentation: https://www.alibabacloud.com/help/en/doc-detail/436462.html

[3] Preemptive DDL User Documentation: https://www.alibabacloud.com/help/en/doc-detail/2326304.html

[4] PolarDB Parallel Metadata Lock Synchronization: https://www.alibabacloud.com/help/en/doc-detail/200678.html

[5] DDL Physical Replication Optimization: https://www.alibabacloud.com/help/en/doc-detail/198213.html

[6] Fail to Get MDL Lock During DDL: https://www.alibabacloud.com/help/en/doc-detail/611732.html

[7] Interpretation of PolarDB Physical Replication: http://mysql.taobao.org/monthly/2018/12/05/

[8] The Hotspot Page Optimization of PolarDB Physical Replication : http://mysql.taobao.org/monthly/2021/03/04/

[9] VLDB Paper Link CloudJump: Optimizing Cloud Databases for Cloud Storages: https://www.vldb.org/pvldb/vol15/p3432-chen.pdf

Join Reorder - Core Technology of PolarDB for Xscale Optimizer

About Database Kernel | PolarDB Optimizer Query Transformation: IN-List Transformation

ApsaraDB - December 12, 2025

Alibaba Clouder - April 8, 2018

ApsaraDB - April 9, 2025

ApsaraDB - January 8, 2025

ApsaraDB - February 4, 2024

ApsaraDB - July 29, 2022

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB