By Junzhe Nie (Junzhe)

Alibaba Cloud PolarDB for MySQL is fully compatible with MySQL. It offers features like multi-master multi-write, multi-active disaster recovery, and HTAP. It provides transaction and analysis performance that is 6 times and 400 times higher than open-source databases, respectively, while reducing the Total Cost of Ownership (TCO) by 50% compared to self-built databases.

X-Engine is a low-cost and cost-effective solution provided by PolarDB. By combining LSM-tree tiered storage and standard zstd compression, X-Engine achieves a good balance between performance and cost. In a standard sysbench scenario, it reduces storage space by 60% compared to the InnoDB engine, with a slight decrease of 10 to 20% in read and write performance. However, for archival and cold data scenarios, the storage cost remains high even after compression, as distributed elastic block storage like PolarStore, designed for high-throughput and low-latency scenarios, uses high-end SSDs and expensive RDMA networks.

Object storage in the cloud is designed for scenarios with large amounts of data, low costs, and high reliability. Storage products offer similar features as listed below:

Take Alibaba Cloud Object Storage Service (OSS) as an example:

| Costs | By using PolarDB X-Engine, the storage cost of the same data can be reduced by up to 10 times. |

| Ease of use | Provides standard RESTful APIs, a wide range of SDK packages, client tools, and consoles, as well as supports stream writes and reads, and data lifecycle management. |

| Durability | This feature is designed to provide 99.9999999999% (twelve 9's) data durability and 99.995% service availability. |

| Scalability | This feature allows you to pay only for actual usage, so you can start with smaller usage and scale up based on your business requirements without affecting performance and durability. |

| Latency | Latency is related to factors such as the bucket region and the request size. Generally, the latency of a small request (for example, less than 512 KB) is within tens of milliseconds. It is higher than the enhanced SSD whose latency is only hundreds of microseconds. |

Object Storage is an object-based storage solution where each object has a unique identifier and doesn't support modifications. This is because modifying a file would result in multiple versions, requiring complex consistency management and occupying more storage space. As a result, OSS only supports overwrite operations and is not suitable for the in-place update storage structure. This leads to more severe write amplification issues and poor random update performance.

X-Engine adopts an LSM-tree structure that utilizes Copy-on-Write (CoW) to write new data to the Memtable. It then merges the old and new data in the background after flushing it to disk. Unlike the in-place update storage structure, X-Engine's LSM-tree is less impacted by the overwrite-only feature of OSS. It can naturally support updating archived data stored on OSS. Additionally, LSM-tree inherently supports tiered storage of hot and cold data, where hot data resides in upper layers and cold data in lower layers. The data volume in the bottom layer exceeds the sum of all data volumes in the upper layers. This tiered storage feature is suitable for combining various storage media, with most data stored on OSS and a smaller portion stored on higher-performance media. This approach strikes a balance between performance and cost.

Considering these factors, X-Engine aims to combine OSS to further reduce the cost of storing cold data while preserving the ecological characteristics of MySQL. The key considerations include:

Core benefits:

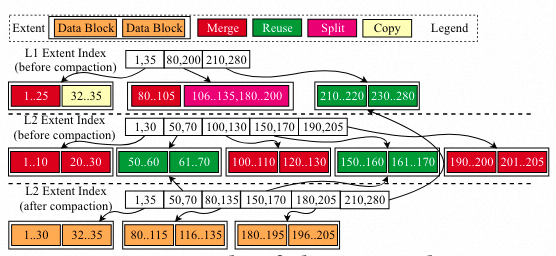

In order to minimize the number of I/Os required for merging between two adjacent levels (i.e. Leveli and Leveli+1) and reuse data as much as possible, X-Engine divides the granularity of the merge into 2 MB extent levels, which can be further refined to 16 KB data blocks. If the keys of a range involved in the compaction do not overlap with the keys of other ranges, only the corresponding metadata index of the extent needs to be updated without physically moving the data on disk. However, when X-Engine uses OSS as the underlying storage medium, the original file management method of logically dividing a physical file into multiple extents is no longer applicable due to the characteristics of OSS, which only supports append write and overwrite without offset write.

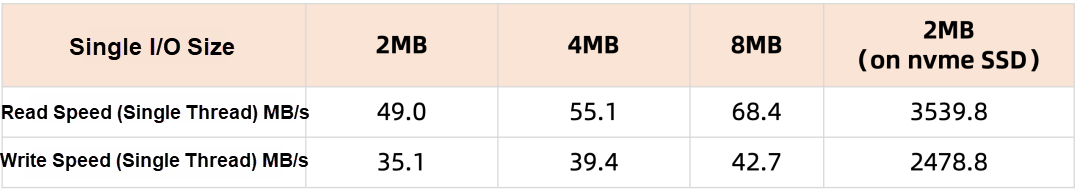

Moreover, although object storage has the advantages of ultra-low cost and ultra-high scalability, it sacrifices some read and write performance. The high network latency of Object Storage Service makes small I/O at the KB level expensive and unable to fully utilize the large bandwidth of the service. Therefore, Object Storage Service is more suitable for large I/O and frequently used for big data analysis in the lakehouse. The following table compares the read and write speeds of OSS and local NVMe SSD for different I/O sizes. It can be seen that as the I/O size increases, the average read and write speeds improve, but there is still a significant difference between OSS and local NVMe SSD. Therefore, when using OSS as the underlying storage medium, X-Engine should leverage the features of the medium as much as possible to mitigate the performance gap between OSS and the more expensive PolarStore.

In the article Disaggregating RocksDB: A Production Experience published at SIGMOD 2023, the approach Meta takes in basing RocksDB on a file system called Tectonic, which is similar to an Object Storage Service with characteristics such as high latency and append write, is similar to what we discussed in this article. As mentioned in the article, RocksDB needs to compensate for the latency difference caused by remote I/O through two aspects: optimizing Tectonic and implementing specific optimizations in RocksDB. The optimizations in Tectonic, such as caching file metadata, optimizing tail latency, and internal OSS, are not discussed in detail. The specific optimizations in RocksDB include the following:

The approach taken by X-Engine aligns with the above optimizations in terms of core ideas, such as minimizing the number of I/O operations during the reading process, optimizing multiple small I/O operations into a single large I/O operation, implementing parallel reading and read-ahead, and speeding up scanning. In the following section, I will provide a detailed description of these optimizations.

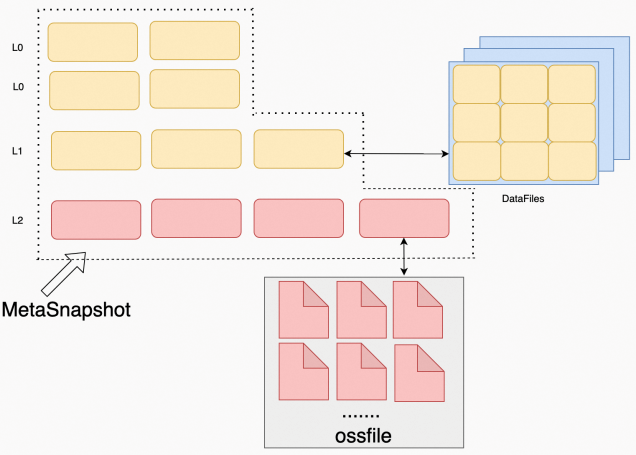

When storing data in PolarStore, each physical file is divided into extents of 2 MB in size. Each extent consists of several variable-length blocks of 16 KB in size. After storing data in OSS, each extent corresponds to an object, which is a file in OSS. When allocating an extent, the file is created and allocated by OSS. When reclaiming an extent, the file is deleted by OSS.

When a version of static data is reclaimed, the extent it contains may be released for subsequent rewriting. To reclaim the extents, metadata lock is required. If you call the OSS interface to physically delete the extent objects, it will incur network latency and hinder the flushing process. Therefore, to reclaim the space of objects in OSS, you need to mark them first. The actual deletion of physical objects is performed by background threads.

If you choose to dump a table to OSS, the data is likely to be cold data and not in the row_cache. If a cache miss occurs, a point query (primary key) requires the following operations:

The best case is when the table_reader is in the table_cache, requiring only one OSS I/O operation. The worst case is when the table_reader is not in the table_cache, requiring two I/O operations.

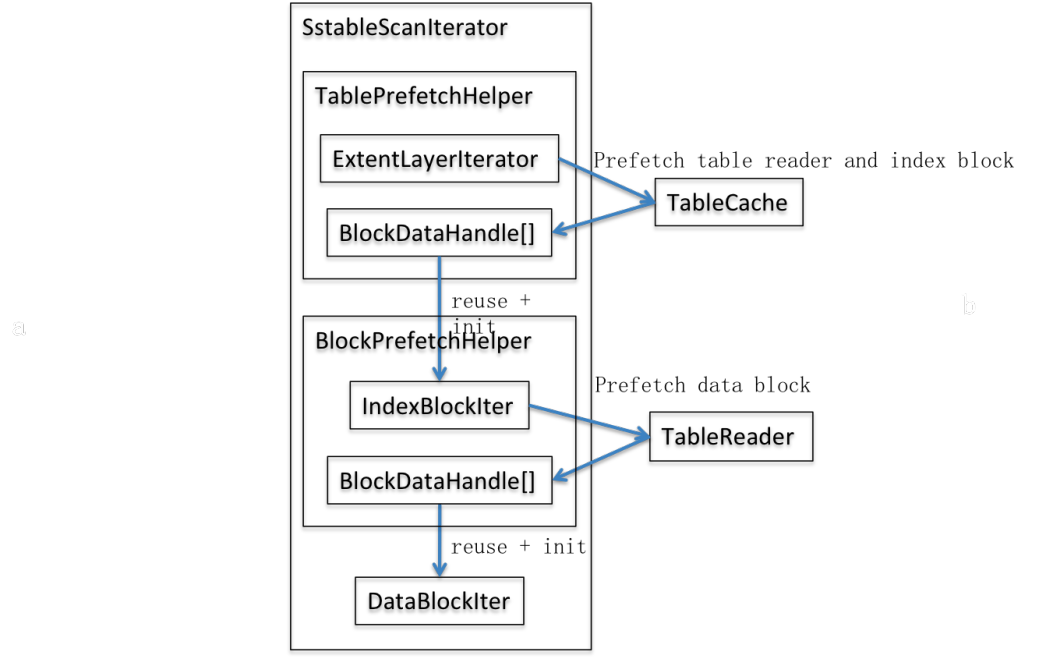

A range query requires the following operations:

In this case, if the range is large, reading data blocks sequentially will result in many small I/Os. It takes more time to read the block of an extent three times in OSS than to read the entire extent. Therefore, for data in OSS, you need to cache the entire extent at once and manage the corresponding cache to release it immediately after querying without excessively occupying memory resources. After optimization, you can use the range query to query an extent. If the table_reader is in the cache, accessing OSS only once or twice at most is sufficient, greatly improving read performance.

X-Engine's fault recovery relies on Checkpoint and Slog to recover metadata. This includes determining which extents are in use, the LSM-tree shapes of each table, and the number of extents in each level. When it comes to extents in OSS, you can mark the extents that have been used without reading any data from OSS. Therefore, storing data in OSS does not affect the rate of downtime recovery.

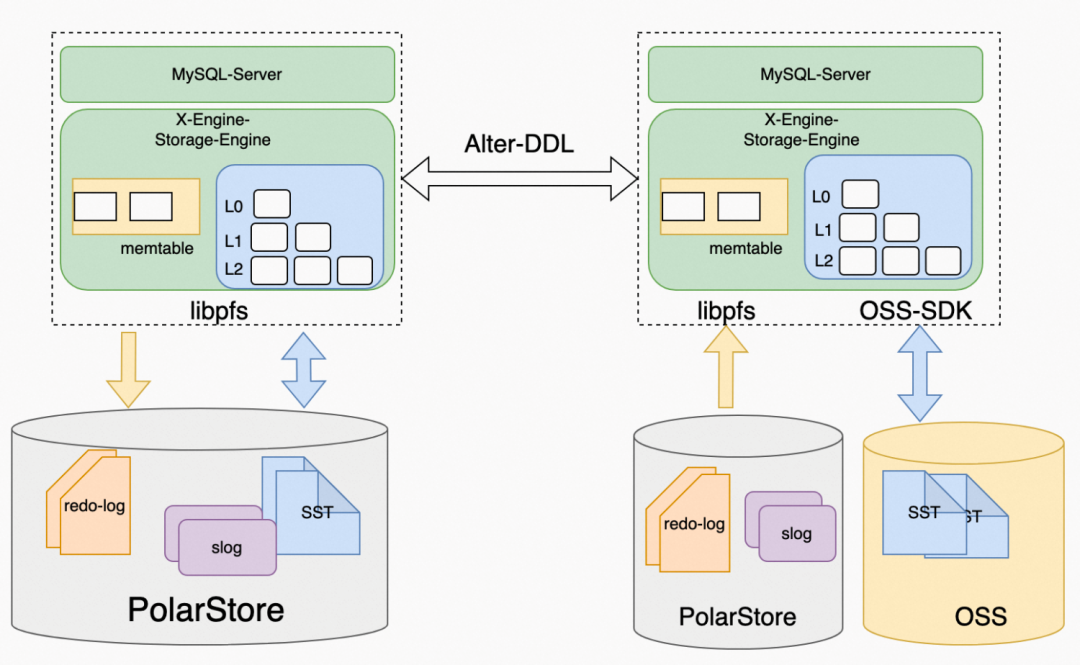

The current logic of X-Engine synchronizes incremental writes through redo-log playback and disk data changes through Slog playback. After storing data in OSS, both the RW (Read-Write) and RO (Read-Only) nodes share a copy of the data, and the high availability of the data is ensured by OSS. As for the Standby node, it has exclusive access to the data because the data on the disk and RW nodes are different. When performing a DDL operation to create an OSS table, you can synchronize the metadata attributes to Standby by synchronizing the original physical replication mechanism.

If you don't directly interact with OSS, data from Level 0 to Level 1 will still be written to PolarStore. It is only when the data is merged to Level 2 that it is written to OSS.

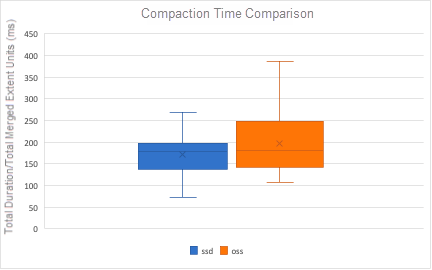

During the compaction process, extents need to be read from OSS. In X-Engine, the compaction read size in OSS has been changed from block (16 KB) to extent (2 MB). Additionally, extents of different sizes can be created on different storage media. This optimization improves the compaction rate of data in OSS. Test results have shown that data compaction in OSS is about 25% slower than on SSDs. Therefore, you need to adjust the scheduling policy based on the rate of compaction tasks on different storage media.

Scheduling Policy

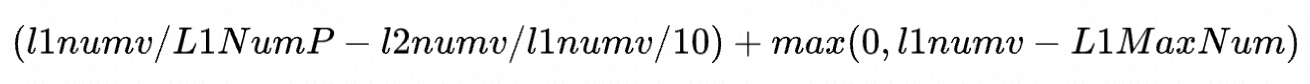

Score calculation for major compaction

Original formula:

The original formula l2numv/l1numv/10 is used as a subtractive term to reduce the frequency of large major merges. If the L2 is placed in OSS, the burden on compaction will be heavier, and the weight of this subtractive item should be larger.

The size of the compaction job generated during major compaction:

During major compaction, the extent range where L1 participates in the merge is divided first, and then the extent range where L2 participates in the merge is selected based on this range. The data of level 1 and level 2 participating in the compaction are divided into disjoint batches and added to the generated compaction task. Each compaction job corresponds to multiple compaction tasks, and each task has a maximum limit on the number of extents. The generated compaction jobs enter the queue for circular execution. This design ensures that compactions between multiple tables can be performed together, and the concurrency granularity between tables can take advantage of the background compaction threads. Therefore, different levels of compaction concurrency within a table are not supported.

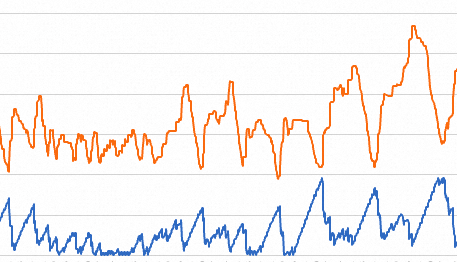

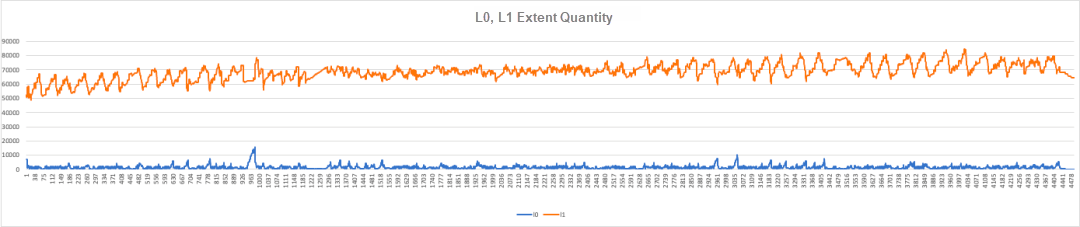

However, this design has problems in the case of continuous writing and a large amount of data in the original table. If the data volume is large, a major compaction task may need to merge tens of thousands of extents. During this period, the L0->L1 compaction is blocked, which may cause heap pressure on L0 data. As shown in the following line chart, the blue line represents the number of L0 extents, and the yellow line represents the number of L1 extents. The jitter becomes more severe as writing continues.

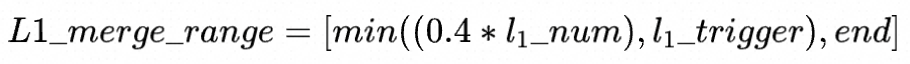

The original policy divides the L1 merge range into the extents within the following ranges. L1_extent represents the actual number of extents at L1, and L1_trigger represents the number of merged extents triggered by L1.

When there is high write pressure and the writes last for a long time (e.g., more than 24 hours), the number of 0.4 * L1_extent may be much larger than that of L1_trigger. In this case, a very large number of compaction jobs may be generated. We want to adjust the policy to reduce the range of L1_merge_range in order to decrease the number of generated compaction jobs and avoid data accumulation at L0.

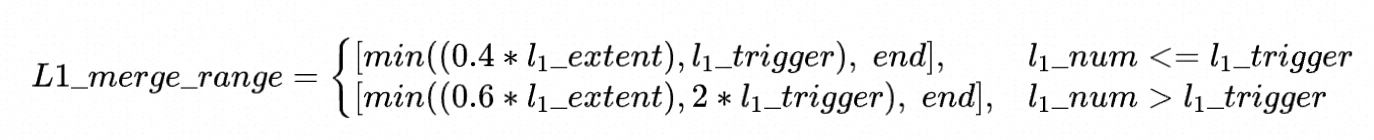

New policy:

The new policy reduces the number of L1 extents that are merged when l1_extent > l1_trigger (generally, the L2 data volume is large, and the main factor that affects the number of merged L1 extents is L2). Taking the default parameters l1_trigger = 1000, l1max = 5000, compaction_task_extents_limit = 1000 as an example, in the original policy, when L1 = 3000, the number of L1 extents participating in the merge is 2000. In addition, L2 extents participating in the merge may be split into more than a dozen tasks and circulate in the queue for more than a dozen times. In the new policy, the number of L1 extents participating in the merge is 1200. When the number of L2 is the main factor affecting the merge, the number of merges can be reduced by about 40% to avoid a long compaction time. After the modification, the number of L0 and L1 remains stable, avoiding data accumulation.

This section evaluates the performance of X-Engine on different storage media while maintaining the same configuration. The sysbench script is used to test several common scenarios, such as insertion, point query, and range query.

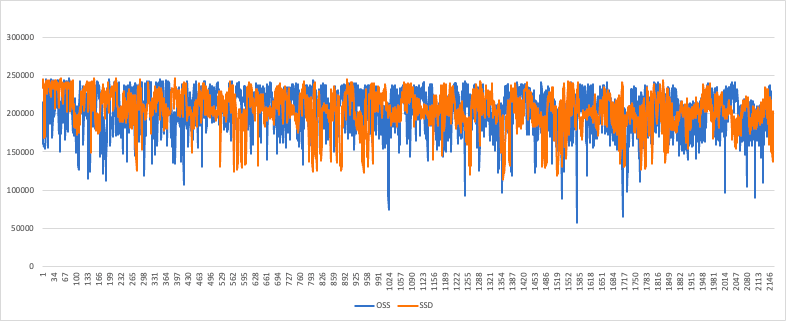

The oltp_insert.lua script is used to continuously write data for six hours. The yellow line in the graph shows the QPS (queries per second) changes over time when data is written to SSDs, while the blue line represents the QPS changes when data is written to OSS. As observed, the write performance is not affected because the foreground writes are still initially written to the PolarStore medium and then transferred to OSS through the compaction task. This result is also consistent with the findings in the article Disaggregating RocksDB: A Production Experience, published in SIGMOD 2023.

Note: In order to better compare the performance gap across different storage media, all data is stored in L2, which sacrifices the advantage of having hot data in L0 and L1, and cold data in L2. Additionally, the test sets row_cache and block_cache to small values for comparison purposes. However, in real scenarios, queries utilize cache, and the performance differences between various media are not as significant when the cache is hit.

oltp_point_select.lua

SQL: SELECT c FROM sbtest%u WHERE id=?

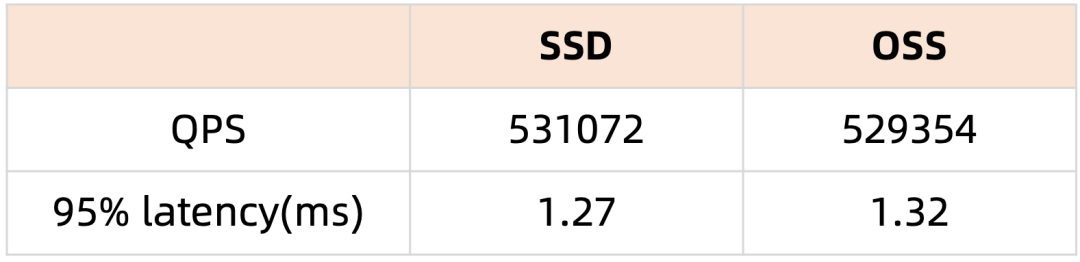

In this script, multiple point queries based on primary keys are continuously executed within a transaction. As a result, multiple queries will hit the same block. The time overhead of accessing OSS is evenly distributed, and the large number of concurrent threads in the stress test can increase the OSS bandwidth. However, there is no significant reduction in QPS and latency.

select_random_points.lua

SQL: SELECT id, k, c, pad FROM sbtest1 WHERE k IN (?, ?, ?,...?)

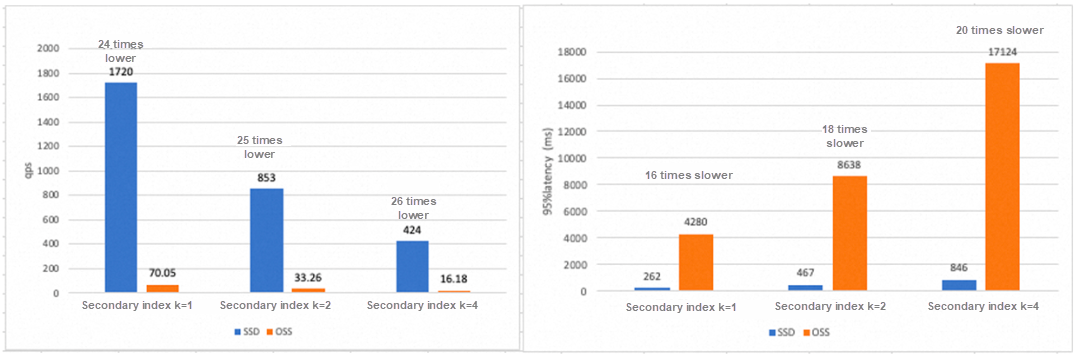

In the current data volume of SQL statements in select_random_points.lua, each k value in the IN clause corresponds to approximately 150 IDs, which are evenly distributed across each extent. In this case, the secondary indexes need to be accessed first, followed by accessing the primary indexes one by one in the table. This process involves multiple accesses to OSS. When the ratio of the query data volume to the number of OSS accesses is large, the network latency of OSS becomes the main factor affecting the query time, and the performance gap between OSS and SSD becomes more noticeable.

The following histogram compares the QPS (queries per second) when the values of ? in the IN clause are 1, 2, and 4. Each k value corresponds to approximately 150 evenly distributed IDs. As shown, as the queried data volume increases exponentially, the QPS decreases exponentially and the latency increases exponentially. This is in line with expectations. In terms of the comparison between OSS and SSD, as the queried data volume increases exponentially, the number of OSS accesses also increases. As a result, the QPS decreases from 24 times to 26 times, and the 95% latency increases from 16 times to 20 times.

select_random_ranges.lua

SQL: SELECT count(k) FROM sbtest.sbtest1 WHERE k BETWEEN ?AND ?OR BETWEEN ?AND ?...;

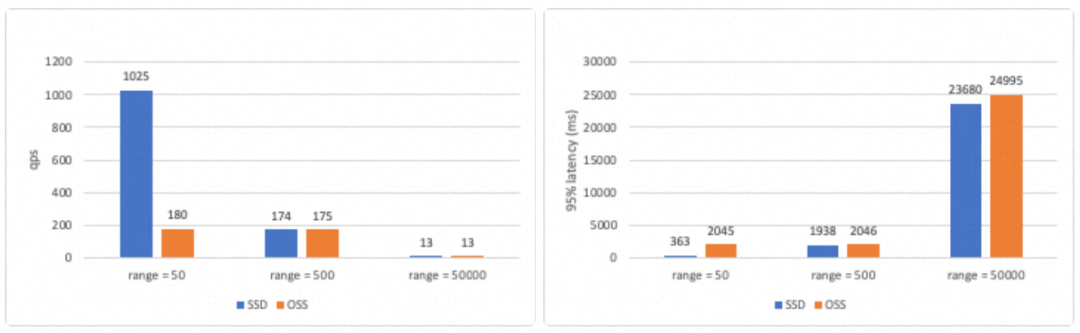

This query statement only retrieves the fields from secondary indexes and primary keys, which are part of the covering index and only require accessing the secondary index itself. When the range is 50 or 500, each range only reads 1-2 extents, and the number of times the query accesses OSS remains the same. Therefore, there is not a significant difference in performance on OSS. However, for SSDs, the number of blocks that need to be read is 10 times more, resulting in a decrease in QPS and an increase in latency. When the range is 50000, one range corresponds to approximately 20 extents, which allows for better utilization of parallel scanning and OSS bandwidth. In this case, there is no difference in QPS and latency measured on the two media.

The results indicate that when X-Engine uses OSS as the underlying storage medium, it is most suitable for scenarios involving large-scale data queries. However, it performs poorly in scenarios where there are multiple point queries and the data distribution is scattered.

Based on the test scenarios mentioned above, it can be observed that the write performance is not affected after placing L2 in OSS. In terms of read performance, there is a slight decrease in the performance of a single point query. However, since the time consumed by a single point query is very short, the slowdown is not significant. On the other hand, multiple point queries (such as Mutiget, which involves the use of secondary indexes and table-access-by-index-rowid operations) result in performance degradation due to multiple accesses to OSS. Mutiget is also a scenario where performance degradation is evident, as mentioned in the article "Disaggregating RocksDB: A Production Experience". However, enabling row_cache and block_cache can help alleviate the performance degradation. The degradation can be as high as 30% for range queries, but the performance can be further optimized later through the use of Read-ahead technology.

Overall, after the data is stored in L2, the storage cost is reduced to 1/10 of the original cost, and a moderate decrease in performance is acceptable.

Interpretation of VLDB Paper | Core Technologies for Strongly Consistent Cluster of PolarDB MySQL

AnalyticDB for MySQL: Implementing High Throughput, Exactly-Once Data Ingestion with Flink

ApsaraDB - October 21, 2020

ApsaraDB - March 3, 2020

ApsaraDB - February 19, 2020

ApsaraDB - October 20, 2020

ApsaraDB - November 17, 2020

ApsaraDB - May 14, 2020

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB