In Large Language Model (LLM) inference, KVCache is the core mechanism for improving efficiency: by caching the historical Key-Value pairs of the Transformer self-attention layers, it avoids repetitive computation, significantly reducing single-step inference overhead. However, under the emerging paradigm of "Agentic Inference"—where models need to continuously perceive the environment, make multi-turn decisions, self-reflect, and collaborate with other agents to complete complex tasks—traditional KVCache mechanisms expose three key bottlenecks:

● State Bloat: Long-context interactions cause cache memory usage to grow linearly with context length, quickly exceeding GPU memory capacity.

● Lack of Cross-Turn Persistence: Session states are difficult to maintain effectively, affecting inference continuity.

● Cache Isolation between Multi-Tasks/Multi-Agents: The lack of sharing mechanisms results in redundant computation and decision conflicts.

To tackle these challenges, the Alibaba Cloud Tair team collaborated closely with the SGLang community and the Mooncake team to develop HiCache, a next-generation cache infrastructure for agentic AI inference. It currently serves as a core component of the SGLang framework and is slated to become a key module of Alibaba Cloud Tair KVCache.

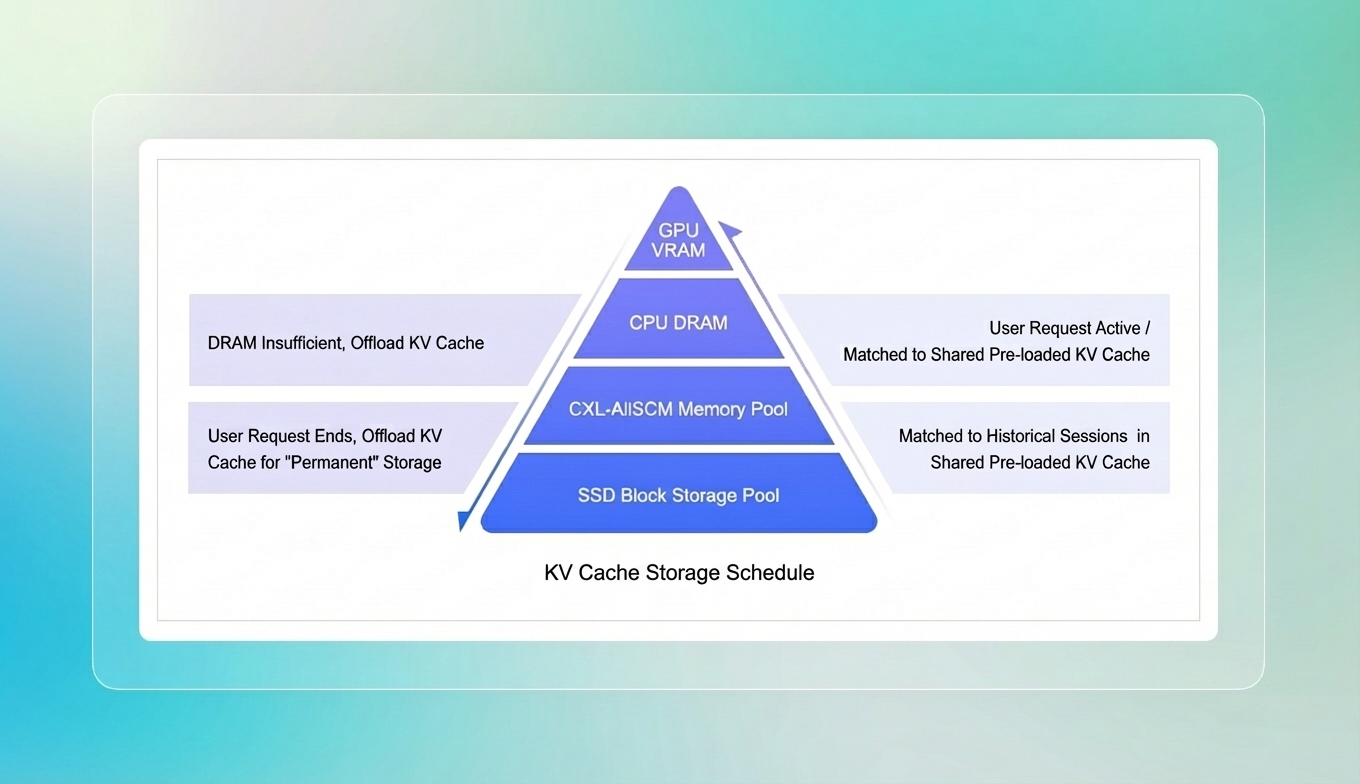

At its heart, HiCache is based on a Multi‑tier KVCache Offloading and Global Sharing architecture. This system seamlessly spans three layers: GPU memory, host (CPU) memory, and the DeepSeek 3FS distributed file system.

In a real-world production deployment, Novita AI integrated HiCache into their SGLang framework. The results were dramatic: the cache hit rate jumped from 40% to 80%, average Time to First Token (TTFT) fell by 56%, and inference queries per second (QPS) doubled.

This series of technical articles will systematically deconstruct the technological evolution of KVCache for Agentic Inference:

Tair KVCache, as an extension of Alibaba Cloud Database Tair's product capabilities, essentially represents three leaps in cache paradigms:

It marks the upgrade of cache from an auxiliary component to a core capability of the AI infrastructure layer—making "state" storable, shareable, and schedulable, supporting the scalable inference foundation of the agent era.

LLM inference is essentially an Autoregressive process: the model generates tokens one by one, and each step requires "looking back" at all previously generated contexts. This mechanism ensures semantic coherence but also brings significant computational redundancy.

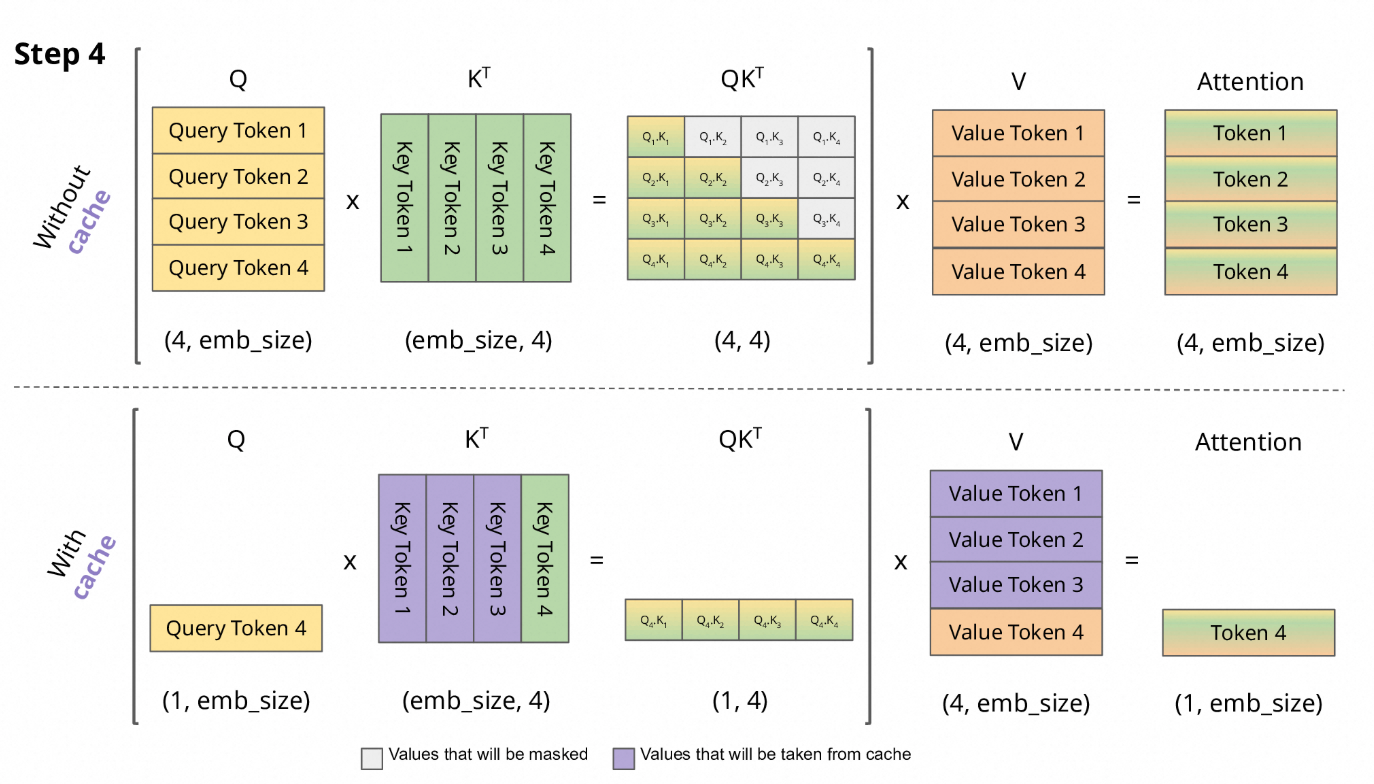

The core of the problem lies in the Attention mechanism. When generating each new token, the model needs to perform a dot product operation between the Query (Q) of the current token and the Keys (K) of all historical tokens to calculate attention weights, and then perform a weighted aggregation of the Values (V) of the historical tokens. However, the K and V corresponding to historical tokens do not change once generated—if they are recalculated for every decoding step, it will cause massive unnecessary repetitive overhead.

KVCache was born to solve this problem: it caches the K and V of each token after the first calculation and reuses them in subsequent generation steps, thereby avoiding repetitive forward propagation computation. This optimization significantly reduces inference latency and improves throughput efficiency, becoming a fundamental technology for efficient inference in modern large language models.
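The saving can be illustrated with a toy single-head decoder. This is a minimal sketch, not any engine's implementation: `project` is a hypothetical stand-in for the learned K and V projections, and each token's own vector doubles as its query. It counts how many K/V projections are performed with and without a cache and checks that both paths produce the same outputs.

```python
import math

def attend(q, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]

def project(x, factor):
    """Toy stand-in for a learned K or V projection (hypothetical)."""
    return [factor * xi for xi in x]

def decode_without_cache(tokens):
    """Recompute K and V for the whole history at every decoding step."""
    outputs, projections = [], 0
    for t in range(1, len(tokens) + 1):
        keys = [project(x, 0.5) for x in tokens[:t]]
        values = [project(x, 2.0) for x in tokens[:t]]
        projections += 2 * t
        outputs.append(attend(tokens[t - 1], keys, values))
    return outputs, projections

def decode_with_cache(tokens):
    """Compute K and V once per token, append them to the cache, and reuse them."""
    keys, values, outputs, projections = [], [], [], 0
    for x in tokens:
        keys.append(project(x, 0.5))
        values.append(project(x, 2.0))
        projections += 2
        outputs.append(attend(x, keys, values))
    return outputs, projections

tokens = [[0.1 * (i + j) for j in range(4)] for i in range(6)]
slow, slow_count = decode_without_cache(tokens)
fast, fast_count = decode_with_cache(tokens)
print(slow_count, fast_count)  # 42 projections without a cache, 12 with it
```

For six tokens the uncached path performs 42 projections against 12 for the cached path, and the gap widens quadratically as the sequence grows.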

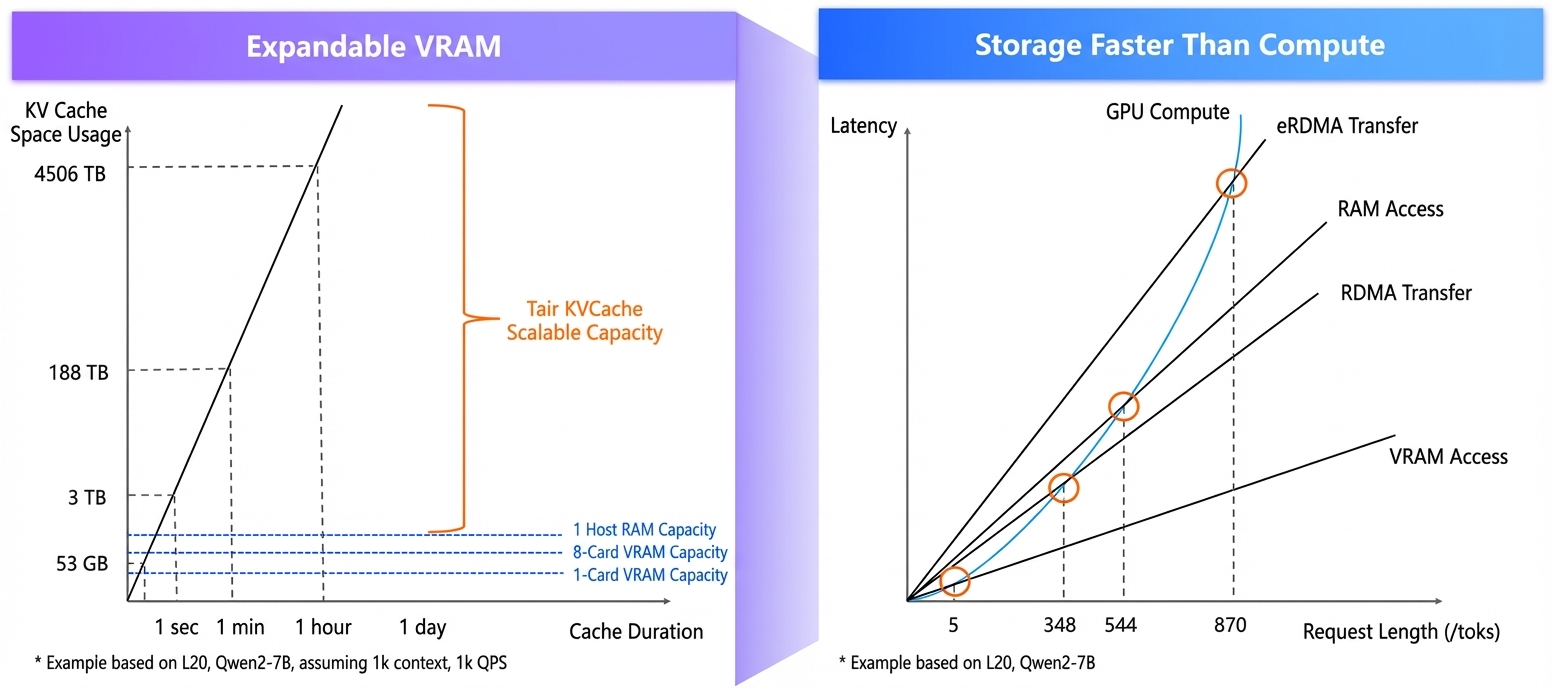

While KVCache brings performance gains, it also introduces a new bottleneck—Storage Capacity.

As shown in the data (Left Figure), taking the Qwen2-7B model as an example, in an online service scenario with thousands of QPS and an average input of 1K tokens, superimposed with requirements like multi-turn dialogue state maintenance and Prefix Caching, the total KVCache volume grows linearly with cache duration—expanding rapidly from GB levels (seconds) to PB levels (days), far exceeding the carrying capacity of local GPU memory or even host memory.
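A back-of-the-envelope calculation shows how quickly the volume adds up. The sketch below assumes the publicly documented Qwen2-7B attention configuration (28 layers, 4 KV heads with grouped-query attention, head dimension 128) and 16-bit KV values; none of these figures come from this article. It also takes 1,000 QPS as a stand-in for "thousands of QPS" and ignores both output tokens and any deduplication from prefix sharing.

```python
# Assumed Qwen2-7B attention configuration (from the public model config,
# not from this article): 28 layers, 4 KV heads (GQA), head_dim 128, 16-bit KV.
NUM_LAYERS = 28
NUM_KV_HEADS = 4
HEAD_DIM = 128
BYTES_PER_VALUE = 2

def kv_bytes_per_token():
    # The leading factor 2 covers the separate K and V tensors.
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE

def kv_bytes_generated(qps, tokens_per_request, seconds):
    """KV bytes produced by input tokens alone over a time window."""
    return kv_bytes_per_token() * tokens_per_request * qps * seconds

per_token = kv_bytes_per_token()
per_second = kv_bytes_generated(qps=1000, tokens_per_request=1024, seconds=1)
per_day = kv_bytes_generated(qps=1000, tokens_per_request=1024, seconds=86_400)

print(f"{per_token / 1024:.0f} KiB per token")    # 56 KiB per token
print(f"{per_second / 1e9:.1f} GB per second")    # 58.7 GB per second
print(f"{per_day / 1e15:.2f} PB per day")         # 5.07 PB per day
```

Under these assumptions, one second of traffic already produces tens of gigabytes of KV data and one day produces several petabytes, which matches the GB-to-PB range described above.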

The Right Figure reveals a key insight: Store-as-Compute (Trading Storage for Compute). When the sequence length exceeds a certain threshold, the end-to-end latency of loading cached KV from storage media is actually lower than re-executing Prefill computation on the GPU. This provides a solid theoretical basis for KVCache Offloading—gradually offloading low-frequency KV states from GPU memory to host memory, or even to a remote distributed storage pool via RDMA. This not only breaks through the single-machine capacity bottleneck but also ensures that load latency is lower than re-computation overhead.

This strategy of "using storage to exchange for computation" provides a scalable path for long-context, high-concurrency LLM inference services that balances throughput, latency, and cost.
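The threshold behaviour can be sketched with a deliberately simple linear cost model. Every number below is a hypothetical placeholder chosen for illustration: roughly 14 GFLOPs of prefill work per token (about 2 FLOPs per parameter for a 7B model), 200 TFLOP/s of effective GPU throughput, 57,344 bytes of KV per token, 10 GB/s of storage bandwidth, and a 5 ms fixed load latency. The model ignores the quadratic attention term, which would favor loading even more at long sequence lengths.

```python
import math

def prefill_seconds(num_tokens, flops_per_token, gpu_flops_per_s):
    """Time to recompute the KV for num_tokens on the GPU (linear model)."""
    return num_tokens * flops_per_token / gpu_flops_per_s

def load_seconds(num_tokens, kv_bytes_per_token, bandwidth_bytes_per_s, fixed_latency_s):
    """Time to fetch cached KV: a fixed latency plus a bandwidth-bound transfer."""
    return fixed_latency_s + num_tokens * kv_bytes_per_token / bandwidth_bytes_per_s

def break_even_tokens(flops_per_token, gpu_flops_per_s,
                      kv_bytes_per_token, bandwidth_bytes_per_s, fixed_latency_s):
    """Smallest sequence length at which loading is no slower than recomputing."""
    compute_per_token = flops_per_token / gpu_flops_per_s
    load_per_token = kv_bytes_per_token / bandwidth_bytes_per_s
    if compute_per_token <= load_per_token:
        return None  # under this model, loading never catches up
    return math.ceil(fixed_latency_s / (compute_per_token - load_per_token))

# Hypothetical hardware and model figures, for illustration only.
FLOPS_PER_TOKEN = 14e9
GPU_FLOPS_PER_S = 200e12
KV_BYTES_PER_TOKEN = 57_344
BANDWIDTH_BYTES_PER_S = 10e9
FIXED_LATENCY_S = 5e-3

threshold = break_even_tokens(FLOPS_PER_TOKEN, GPU_FLOPS_PER_S,
                              KV_BYTES_PER_TOKEN, BANDWIDTH_BYTES_PER_S,
                              FIXED_LATENCY_S)
print(f"loading wins from {threshold} tokens onward under these assumptions")
```

The point is the shape of the curve rather than the specific threshold: the fixed latency dominates for short sequences, and the per-token advantage of loading dominates beyond the crossover.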

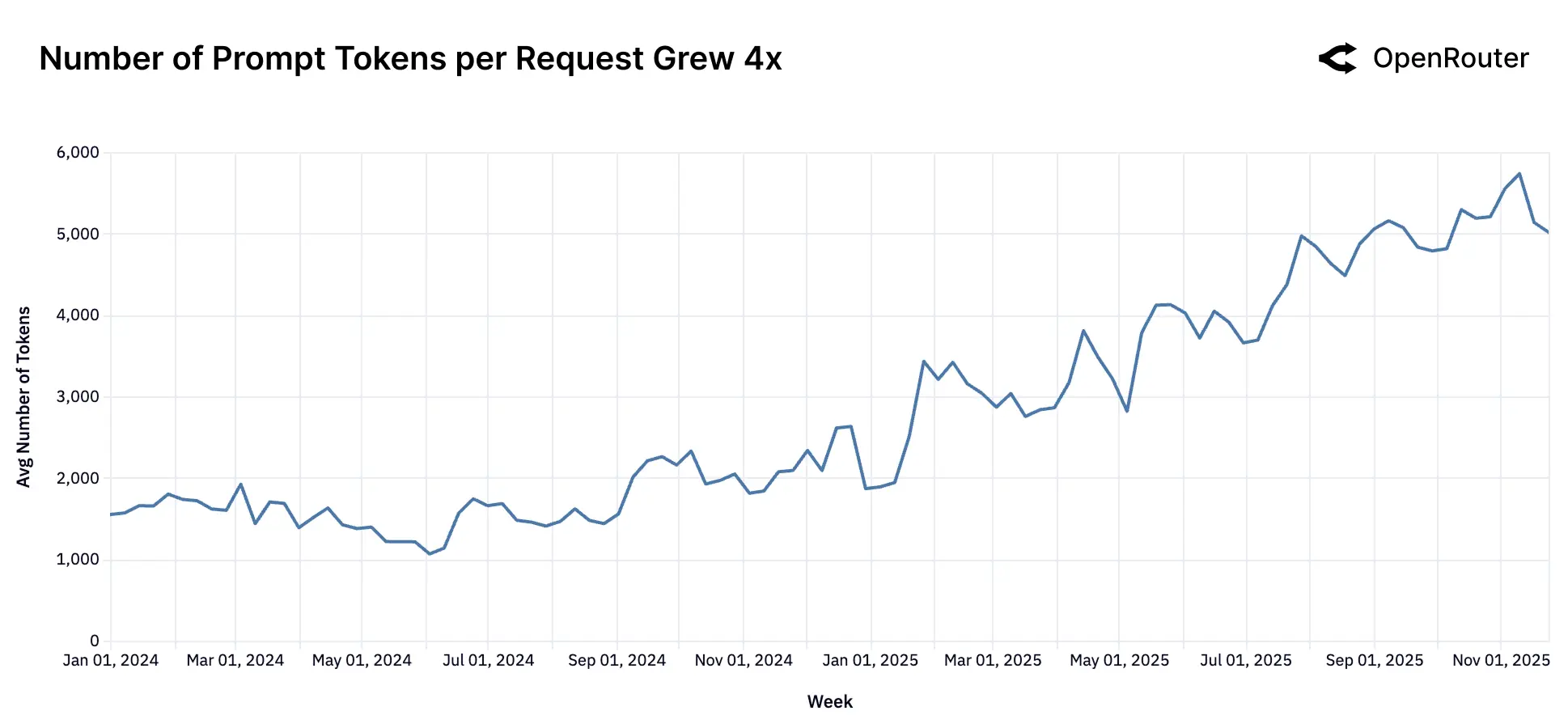

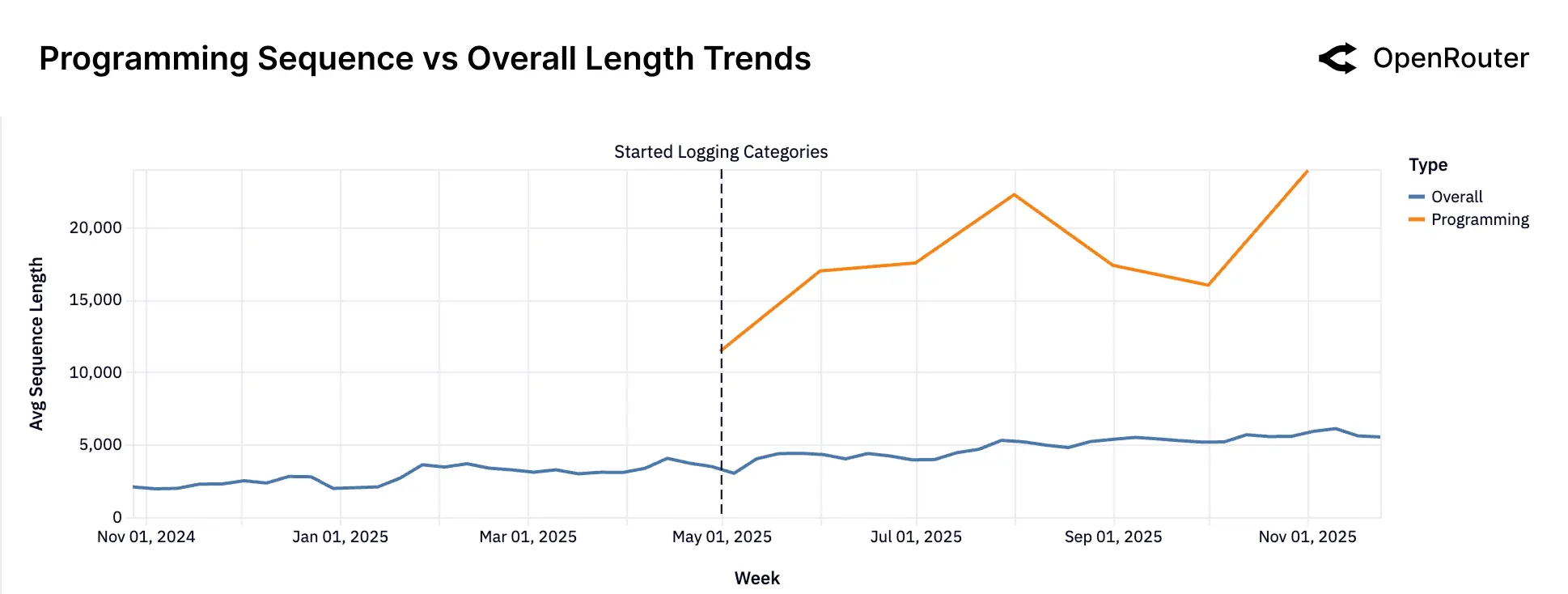

A recent report from OpenRouter points out: "Currently, the application of LLMs in actual production is undergoing a fundamental shift: from single-turn text completion to multi-step, tool-integrated, strong reasoning-driven workflows. We call this shift the rise of 'Agentic Inference'—where models are no longer just used to generate text but are deployed as 'Actors' capable of active planning, tool calling, and continuous interaction within extended contexts. Key to this is the change in sequence length distribution, and how programming application scenarios drive overall complexity."

AI Agents need to remember long-term, cross-turn, multi-task contexts, such as: complete traces of multi-turn tool calls, user historical preferences and behavior logs, multi-document collaborative analysis (e.g., contracts + financial reports + emails), and shared memory in multi-agent collaboration. This causes context length to leap from hundreds/thousands of tokens in traditional chat to tens of thousands or even millions of tokens. In this scenario, since KVCache size grows linearly with context length, inference services easily hit GPU memory capacity bottlenecks.

Programming Agent applications typically run in a "Think-Act-Observe" loop. Each round adds a small number of tokens (e.g., tool execution results), but requires retaining the full historical KV to maintain state consistency. Traditional one-time inference KVCache lifecycles are per-request, whereas in Agent inference scenarios, the KVCache lifecycle spans the entire session or even hours. This requires KVCache to be persistent and support incremental append, rather than re-computation each time.

Programming interactions are closer to the rhythm of "Human-Human" dialogue, with users having lower tolerance for latency (Target: <500 ms end-to-end). Without KVCache, generating a single token requires recalculating the entire history with $O(n^2)$ complexity; with KVCache, this drops to $O(n)$ complexity, which is crucial for ultra-long contexts. Furthermore, as context length changes with increasing turns, avoiding repetitive computation to save costs is a key focus for current applications.

An Agent instance often needs to handle multiple users/sub-tasks concurrently, and different tasks may share parts of the context (e.g., different queries from the same user sharing a profile, multiple sub-Agents sharing environment state, prompt template or system instruction reuse). This necessitates KVCache sharing/reuse mechanisms (such as prefix caching, cross-request reuse), which can drastically reduce repetitive computation and memory footprint.

Targeting the challenges of Agentic Inference (extremely long context windows, continuous interaction and streaming inference, multi-task concurrency and shared caching, and increased real-time requirements), SGLang HiCache constructs a Hierarchical KVCache management system. It combines GPU memory, host memory, local disk, and even remote distributed storage (such as 3FS) into a single cache hierarchy.

Through intelligent heat-aware scheduling and asynchronous prefetching mechanisms, HiCache retains frequently accessed "hot" data in capacity-limited GPU memory while transparently offloading "cold" data to larger-capacity lower-tier storage, loading it back to GPU memory for computation just before a request arrives. This design allows the SGLang inference system to break through the physical boundaries of single-machine hardware, expanding effective cache capacity in a nearly linear manner, and truly unleashing the potential of "Store-as-Compute."

On the other hand, achieving efficient KVCache Offloading requires a high-performance underlying storage system. 3FS (Fire-Flyer File System) is a distributed file system open-sourced by DeepSeek, designed specifically for AI large model training and inference scenarios, featuring:

● Disaggregated Storage-Compute Architecture: Decoupling computation and storage for independent scaling.

● Extreme Throughput Performance: Combining RDMA networks and NVMe SSDs, achieving 6.6 TiB/s read bandwidth in a 180-node cluster.

● Strong Consistency Guarantee: Based on the CRAQ chain replication protocol, balancing consistency and high availability.

● Flexible Access Interfaces: Providing POSIX-compatible FUSE clients and high-performance USRBIO interfaces, balancing ease of use and extreme performance.

The Tair team integrated 3FS into the SGLang HiCache system, providing a high-bandwidth, low-latency offloading channel for KVCache, while enabling global cache reuse capabilities across nodes.

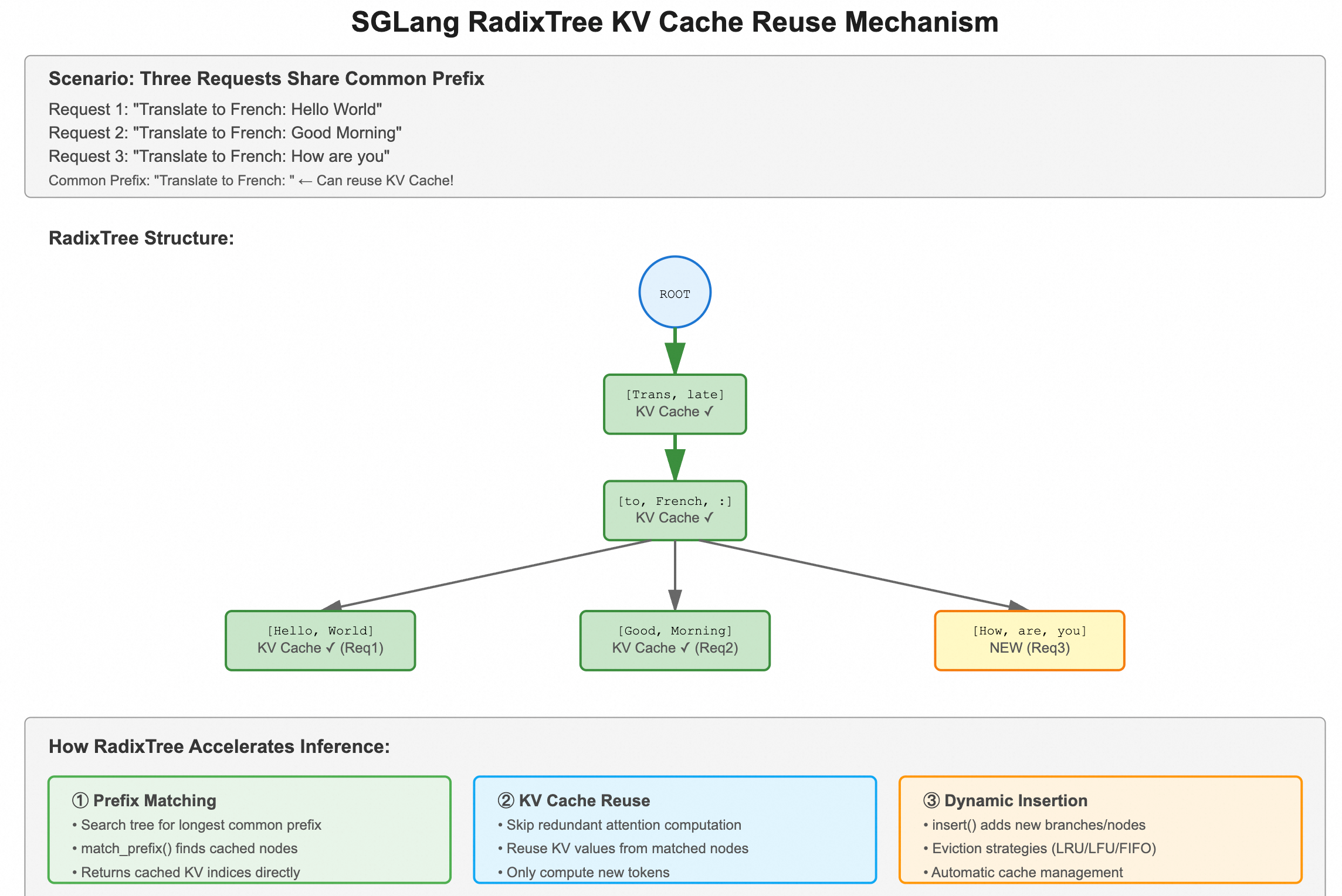

In LLM inference services, repeatedly calculating the same text prefix is a huge waste of performance.

Imagine a scenario: in Alibaba Cloud's enterprise AI assistant service, all user requests start with the same System Prompt, perhaps thousands of tokens of role settings and rule descriptions. Traditional KVCache mechanisms manage caches independently per request; even if these prefixes are identical, each request must re-execute Prefill computation, causing massive redundant overhead.

SGLang's RadixTree was born to solve this pain point. RadixTree is an efficient prefix retrieval data structure. SGLang uses it to manage and index all cached token sequences. When a new request arrives, the system searches its token sequence in the RadixTree, finds the longest common prefix with cached sequences, and directly reuses the corresponding KVCache, performing Prefill computation only on the remaining new tokens.
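The lookup described above can be sketched with a plain token-level trie. This is a simplified illustration, not SGLang's RadixTree: a real radix tree compresses runs of tokens into single edges, and the `PrefixCache` class, its method names, and the integer `kv_slot` handles are all hypothetical.

```python
class PrefixNode:
    def __init__(self):
        self.children = {}   # token id -> PrefixNode
        self.kv_slot = None  # hypothetical handle to this token's cached KV

class PrefixCache:
    """Token-level trie that maps cached token sequences to KV slots."""

    def __init__(self):
        self.root = PrefixNode()
        self.next_slot = 0

    def insert(self, tokens):
        """Record a fully computed sequence so later requests can reuse it."""
        node = self.root
        for tok in tokens:
            child = node.children.get(tok)
            if child is None:
                child = PrefixNode()
                child.kv_slot = self.next_slot
                self.next_slot += 1
                node.children[tok] = child
            node = child

    def match_prefix(self, tokens):
        """Return the KV slots for the longest cached prefix of `tokens`."""
        node, slots = self.root, []
        for tok in tokens:
            node = node.children.get(tok)
            if node is None:
                break
            slots.append(node.kv_slot)
        return slots

cache = PrefixCache()
system_prompt = [11, 12, 13, 14]
cache.insert(system_prompt + [21, 22])      # an earlier request

request = system_prompt + [31, 32, 33]      # a new request with the same prompt
reused = cache.match_prefix(request)
to_prefill = request[len(reused):]
print(len(reused), to_prefill)              # 4 [31, 32, 33]
```

Only the three new tokens need Prefill; the four system-prompt tokens are served from the cache.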

This optimization is particularly effective in the following scenarios:

● Shared System Prompts: Massive requests reuse the same System Prompt.

● Multi-turn Dialogue: Subsequent turns of the same session naturally share historical context.

● AI Coding: In code completion scenarios, multiple requests for the same file share a large amount of code context.

Through prefix reuse, RadixTree can reduce the computation volume of the SGLang Prefill stage by several times or even tens of times, significantly improving throughput and reducing Time to First Token (TTFT).

RadixTree solved the problem of "how to reuse," but not "how much can be cached": KVCache is still limited by GPU memory capacity.

With the rise of tasks like Agentic AI, long document Q&A, and repository-level code understanding, request context length continues to grow. Cache capacity directly determines the hit rate, which in turn affects system throughput and response latency. A single GPU with ~100GB of memory is stretched when facing massive concurrent long-context requests.

To this end, we designed and implemented HiRadixTree (Hierarchical RadixTree, hereinafter referred to as HiCache) in SGLang. It is a hierarchical KVCache management mechanism that extends the RadixTree, originally confined to GPU memory, into a three-tier storage architecture spanning GPU memory, CPU (host) memory, and external storage such as local disk or a remote distributed file system like 3FS.

Its core working mechanism is as follows:

● Automatic Offload: The system asynchronously offloads high-frequency KVCache to CPU memory based on access heat; subsequently, it further persists it to local disk or remote distributed storage (such as 3FS).

● Intelligent Prefetch: When a request hits remote cache, the system asynchronously prefetches the required KV data to GPU memory before actual computation, hiding I/O latency to the greatest extent.

● Heat-Aware Eviction: Combining strategies like LRU, it prioritizes retaining high-frequency "hot" data in GPU memory to ensure maximum cache hit rates.

Through this hierarchical design, a GPU with only 40GB of memory can leverage CPU memory to expand to 200GB+ of effective cache capacity, and further combine with the storage layer to support TB-level ultra-long context caching. While maintaining RadixTree's efficient prefix retrieval capability, HiRadixTree truly realizes nearly infinite KVCache capacity expansion, providing solid infrastructure support for long-context, high-concurrency LLM inference services.
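The interplay of tiers and LRU eviction can be sketched as follows. This is a simplified model under stated assumptions, not HiCache's actual policy: pages are demoted to the next tier only when evicted, promotion on a hit is synchronous, and the `TieredKVCache` class with its page-count capacities is hypothetical.

```python
from collections import OrderedDict

class TieredKVCache:
    """GPU -> host -> storage hierarchy with LRU demotion between tiers."""

    LOWER = {"gpu": "host", "host": "storage"}

    def __init__(self, gpu_pages, host_pages):
        self.capacity = {"gpu": gpu_pages, "host": host_pages}
        self.tiers = {"gpu": OrderedDict(), "host": OrderedDict(), "storage": OrderedDict()}

    def _put(self, tier, key, page):
        store = self.tiers[tier]
        store[key] = page
        store.move_to_end(key)  # mark as most recently used
        lower = self.LOWER.get(tier)
        # Storage is treated as unbounded; upper tiers demote their LRU page.
        while lower and len(store) > self.capacity[tier]:
            old_key, old_page = store.popitem(last=False)
            self._put(lower, old_key, old_page)

    def put(self, key, page):
        for store in self.tiers.values():
            store.pop(key, None)  # keep a single copy in this simplified model
        self._put("gpu", key, page)

    def get(self, key):
        """Return (page, tier it was found in) and promote the page to GPU."""
        for tier in ("gpu", "host", "storage"):
            if key in self.tiers[tier]:
                page = self.tiers[tier].pop(key)
                self._put("gpu", key, page)
                return page, tier
        return None, None

cache = TieredKVCache(gpu_pages=2, host_pages=2)
for name in "abcde":
    cache.put(name, f"page-{name}")

hit = cache.get("a")  # the oldest page has been pushed down to storage
print(hit, list(cache.tiers["gpu"]))  # ('page-a', 'storage') ['e', 'a']
```

A production system would overlap these moves with computation and could keep backup copies in lower tiers instead of moving pages, but the capacity effect is the same: the hot set stays on the GPU while the total cached volume is bounded by the lowest tier.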

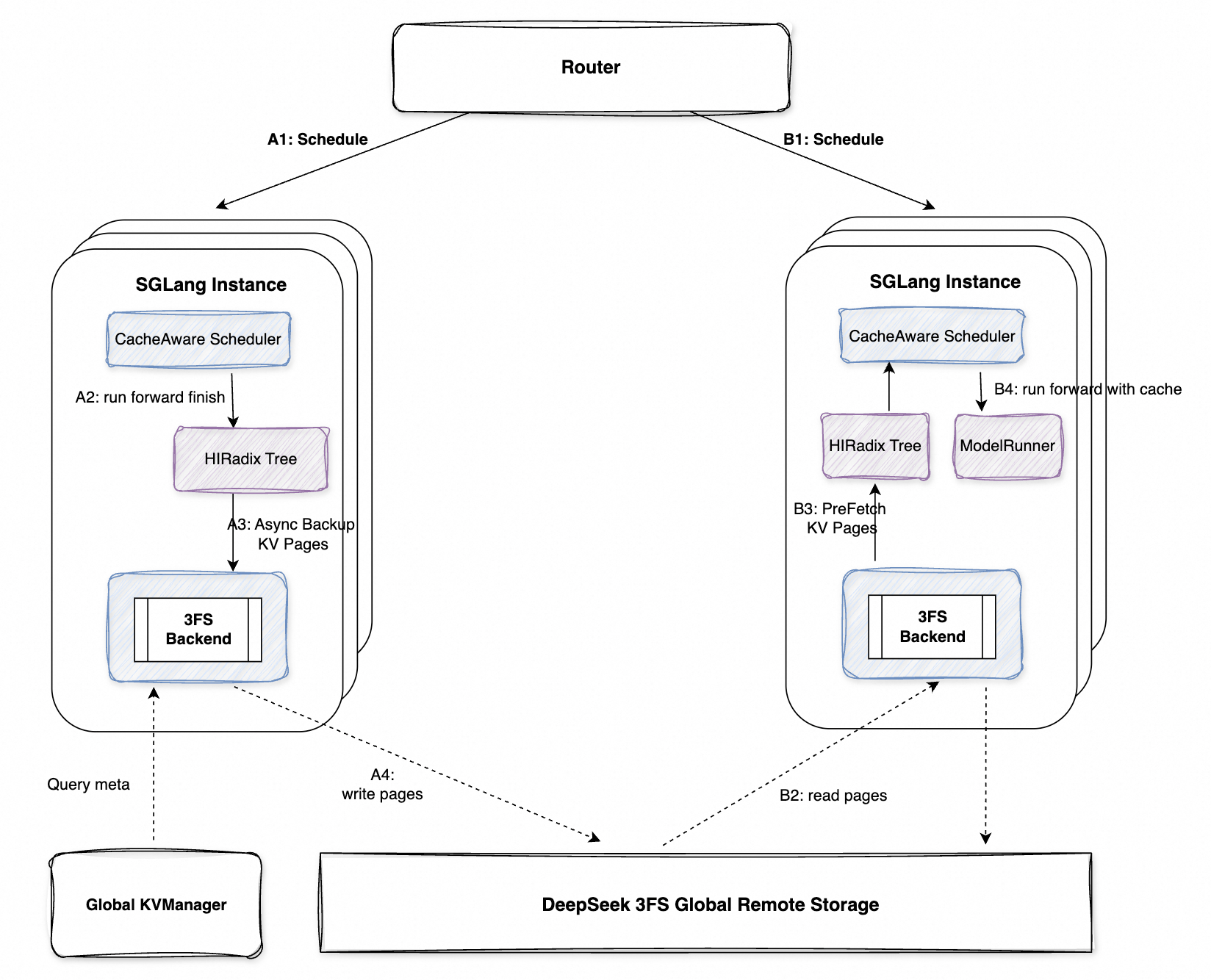

The HiCache system architecture consists of several key modules:

● HiRadixTree: A GPU/CPU dual-layer prefix cache tree structure that natively supports automatic synchronization of KVCache between GPU and CPU.

● Storage Backend: A pluggable storage backend abstraction layer. It currently integrates backends like 3FS, Mooncake, and NIXL. By encapsulating operations like batch_get / batch_set / batch_exists through a unified interface, it supports zero-copy data transmission, balancing high throughput and low latency.

● Global KVManager: Provides unified metadata management services for the distributed file system (FS). It possesses efficient metadata organization, query, and coordination capabilities, providing consistency management for global KVCache.

● 3FS Global Storage: DeepSeek's open-source high-performance distributed file system. Adopting a disaggregated storage-compute architecture and combining RDMA network optimization with NVMe SSDs, it provides TiB/s-level aggregated read bandwidth, serving as the persistent storage base for HiCache.
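A pluggable backend of this kind might be shaped like the sketch below. Only the operation names `batch_get`, `batch_set`, and `batch_exists` come from the description above; the class names, argument types, and return conventions are assumptions for illustration and do not reproduce SGLang's actual interface. The in-memory backend also copies bytes, so it does not demonstrate zero-copy transfer.

```python
from abc import ABC, abstractmethod

class StorageBackend(ABC):
    """Hypothetical abstraction over a page-granular KV store."""

    @abstractmethod
    def batch_exists(self, keys):
        """Return one bool per key."""

    @abstractmethod
    def batch_get(self, keys):
        """Return one page (bytes) per key, or None for a miss."""

    @abstractmethod
    def batch_set(self, keys, pages):
        """Store pages under keys; return one success flag per key."""

class InMemoryBackend(StorageBackend):
    """Dictionary-backed stand-in for a remote store such as 3FS."""

    def __init__(self):
        self._pages = {}

    def batch_exists(self, keys):
        return [key in self._pages for key in keys]

    def batch_get(self, keys):
        return [self._pages.get(key) for key in keys]

    def batch_set(self, keys, pages):
        for key, page in zip(keys, pages):
            self._pages[key] = bytes(page)
        return [True] * len(keys)

def count_prefix_hits(backend, page_keys):
    """Count leading pages present in storage; reuse stops at the first miss."""
    hits = 0
    for present in backend.batch_exists(page_keys):
        if not present:
            break
        hits += 1
    return hits

backend = InMemoryBackend()
backend.batch_set(["p0", "p1", "p3"], [b"k0v0", b"k1v1", b"k3v3"])
request_pages = ["p0", "p1", "p2", "p3"]
reusable = count_prefix_hits(backend, request_pages)
print(reusable)  # 2: p3 exists but cannot be reused past the missing p2
```

Batching the existence check keeps the number of round trips to the storage layer independent of the number of pages in a request.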

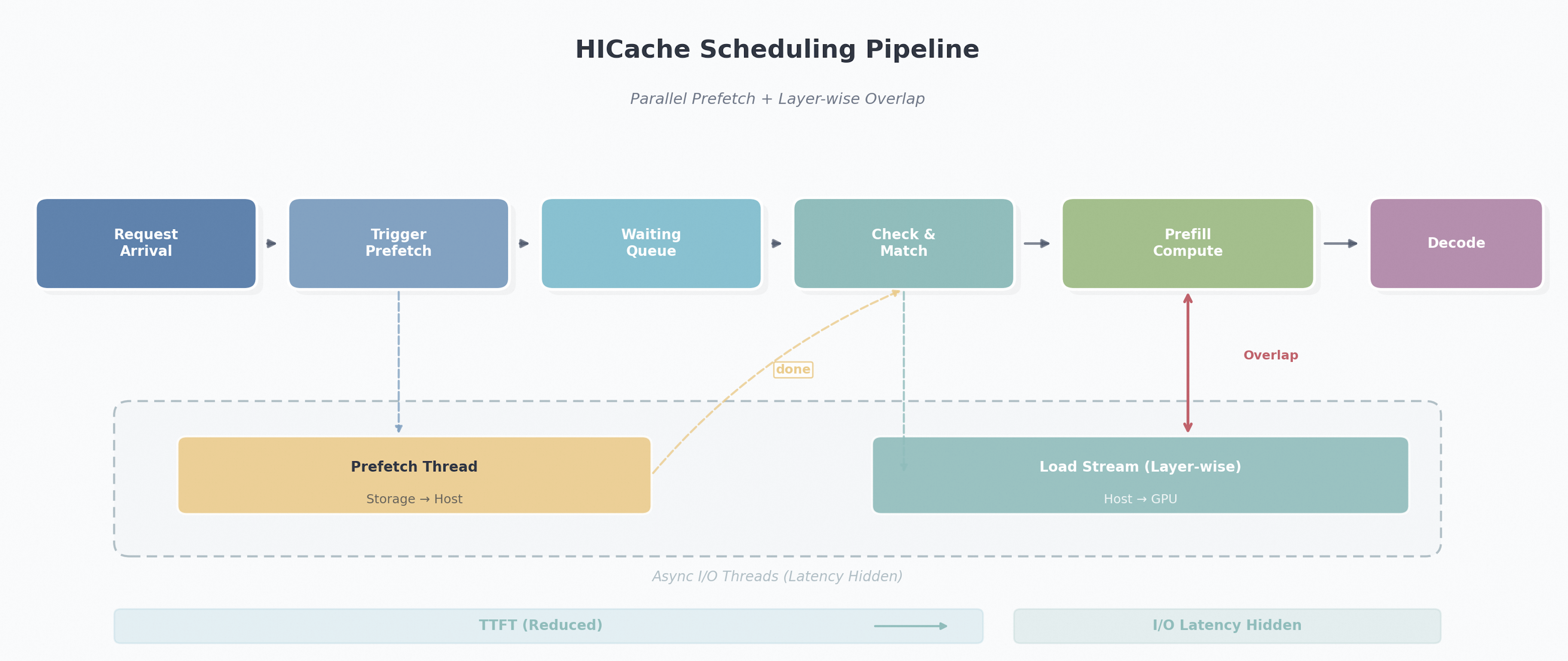

In the original scheduling mode, a request goes through the full process of "Wait -> Prefix Match -> Memory Allocation -> Prefill Computation" from enqueue to first Token generation, where KVCache only exists in GPU memory. The HiCache mode achieves two key optimizations by introducing a three-tier storage architecture and an asynchronous pipeline:

● prefetch_from_storage is triggered immediately when a request is enqueued. During the scheduling wait period, background threads asynchronously load the KV data hit in Storage to Host memory, effectively utilizing the "idle" time of queue waiting.

● When the Scheduler schedules a request, it terminates the request prefetch or skips request scheduling based on the scheduling strategy. Supported scheduling strategies include:

● When a request is scheduled for execution, Host -> GPU KV loading is performed layer by layer via an independent CUDA Stream (load_to_device_per_layer). Model forward computation for layer i can begin as soon as that layer's KV is ready, without waiting for all layers to finish loading, implementing a pipeline overlap of computation and transmission.

This design hides the originally blocking I/O overhead within scheduling waits and GPU computation. While significantly expanding effective cache capacity, it minimizes the impact on Time to First Token (TTFT).
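The layer-wise overlap can be mimicked on the CPU with two threads and one event per layer. This is only an analogy under stated assumptions: the loader thread stands in for the copy stream, `threading.Event` stands in for a per-layer CUDA event, and `run_layerwise_pipeline` is a hypothetical name rather than an SGLang function.

```python
import threading

def run_layerwise_pipeline(num_layers):
    """Overlap per-layer loading with per-layer compute; return the event log."""
    ready = [threading.Event() for _ in range(num_layers)]
    log = []
    log_lock = threading.Lock()

    def record(entry):
        with log_lock:
            log.append(entry)

    def loader():
        # Stand-in for Host -> GPU copies issued on a separate stream.
        for layer in range(num_layers):
            record(("loaded", layer))
            ready[layer].set()  # signal that this layer's KV is usable

    def compute():
        # Stand-in for the forward pass: wait only for the layer about to run.
        for layer in range(num_layers):
            ready[layer].wait()
            record(("computed", layer))

    threads = [threading.Thread(target=loader), threading.Thread(target=compute)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return log

log = run_layerwise_pipeline(4)
print(log)
```

The exact interleaving varies between runs, but layer i is never computed before it has been loaded, and computation of early layers does not wait for later layers to arrive.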

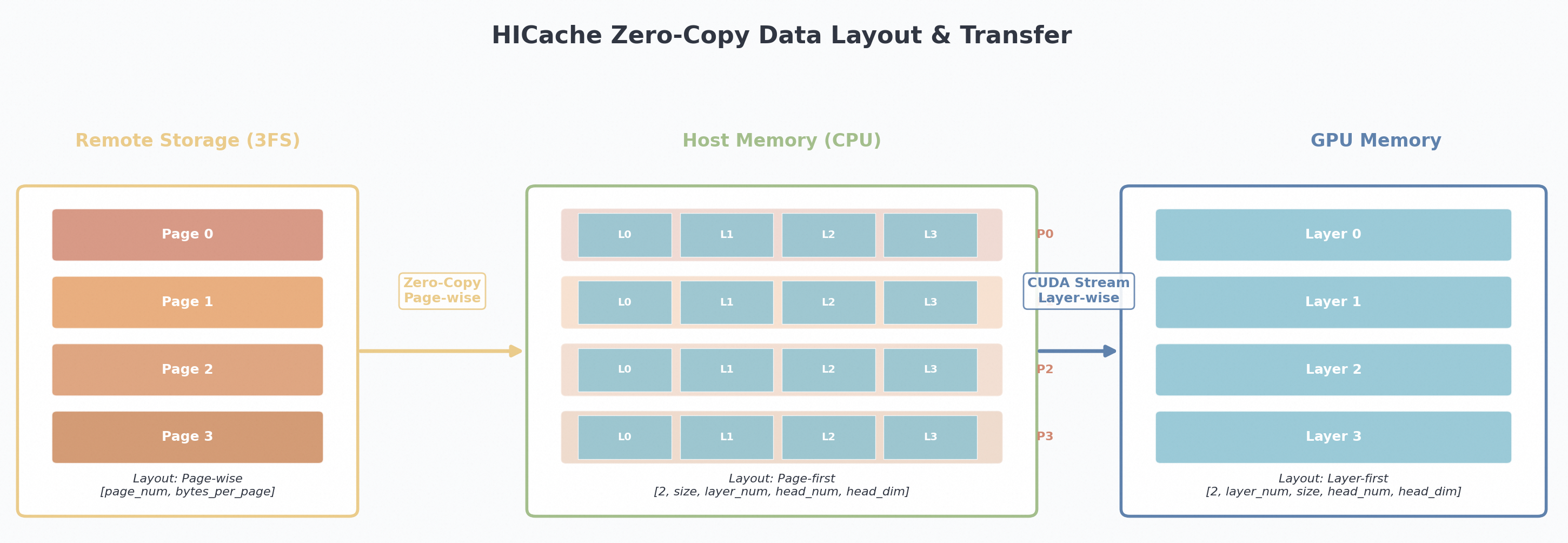

HiCache employs Zero-Copy technology to achieve efficient cross-layer transmission of KVCache.

In terms of Data Layout:

● Remote storage is organized by Page, with each Page having an independent Prefix Hash Key for retrieval.

● Host memory adopts a Page-first layout ([2, size, layer_num, head_num, head_dim]), making data of all Layers within the same Page physically contiguous.

● GPU memory adopts a Layer-first layout ([2, layer_num, size, head_num, head_dim]) to facilitate layer-wise access.

● In traditional Layer-first layouts ([2, layer_num, size, ...]), KV data of the same Page is scattered across different memory regions for each Layer. Before writing to storage, .flatten().contiguous() must be executed to copy and reorganize data into continuous blocks. However, the Page-first layout ([2, size, layer_num, ...]) places the size dimension at the front, making all Layer data within the same Page physically contiguous in memory, allowing direct writing to or reading from storage without extra data reorganization copying.
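The contiguity argument can be checked with plain offset arithmetic. The sketch below computes row-major element offsets for one page under both layouts and counts how many separate contiguous runs the page occupies. The tensor sizes are arbitrary small values chosen for illustration, and only the K half (index 0 of the leading dimension) is examined; the V half behaves identically as a second block.

```python
def flat_offset(index, shape):
    """Row-major element offset of `index` in a dense tensor of `shape`."""
    offset = 0
    for i, dim in zip(index, shape):
        offset = offset * dim + i
    return offset

def contiguous_runs(offsets):
    """Count maximal runs of consecutive offsets."""
    ordered = sorted(offsets)
    return 1 + sum(1 for a, b in zip(ordered, ordered[1:]) if b != a + 1)

def page_offsets(layout, size, layer_num, head_num, head_dim, page_tokens):
    """Offsets of the K half of one page across all layers."""
    offsets = []
    for token in page_tokens:
        for layer in range(layer_num):
            for head in range(head_num):
                for d in range(head_dim):
                    if layout == "page_first":
                        index = (0, token, layer, head, d)
                        shape = (2, size, layer_num, head_num, head_dim)
                    else:  # layer_first
                        index = (0, layer, token, head, d)
                        shape = (2, layer_num, size, head_num, head_dim)
                    offsets.append(flat_offset(index, shape))
    return offsets

dims = dict(size=16, layer_num=4, head_num=2, head_dim=8)
page = range(4, 8)  # one page covering token slots 4..7

page_first_runs = contiguous_runs(page_offsets("page_first", page_tokens=page, **dims))
layer_first_runs = contiguous_runs(page_offsets("layer_first", page_tokens=page, **dims))
print(page_first_runs, layer_first_runs)  # 1 4
```

With the page-first layout the page is a single block, so it can be handed to storage as is. With the layer-first layout it splits into one fragment per layer, which is why a gather copy is needed before writing.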

In terms of Transmission Path:

● Storage -> Host uses Page-wise granularity, writing data directly to the Host KV Pool via user-space I/O libraries like 3FS libusrbio, bypassing kernel buffers.

● Host -> GPU uses Layer-wise granularity, transmitting layer by layer via an independent CUDA Stream. This allows model forward computation for layer i to start as soon as that layer's data is ready, achieving pipeline overlap of computation and transmission. This design maximizes storage bandwidth utilization while effectively hiding data loading latency within GPU computation.

● The Page-first layout acts as a "bridge" at the Host layer, satisfying the Page continuity requirements of the storage layer while supporting the Layer access mode of the GPU layer via transposition, trading a single layout transformation for zero-copy gains on the transmission path.

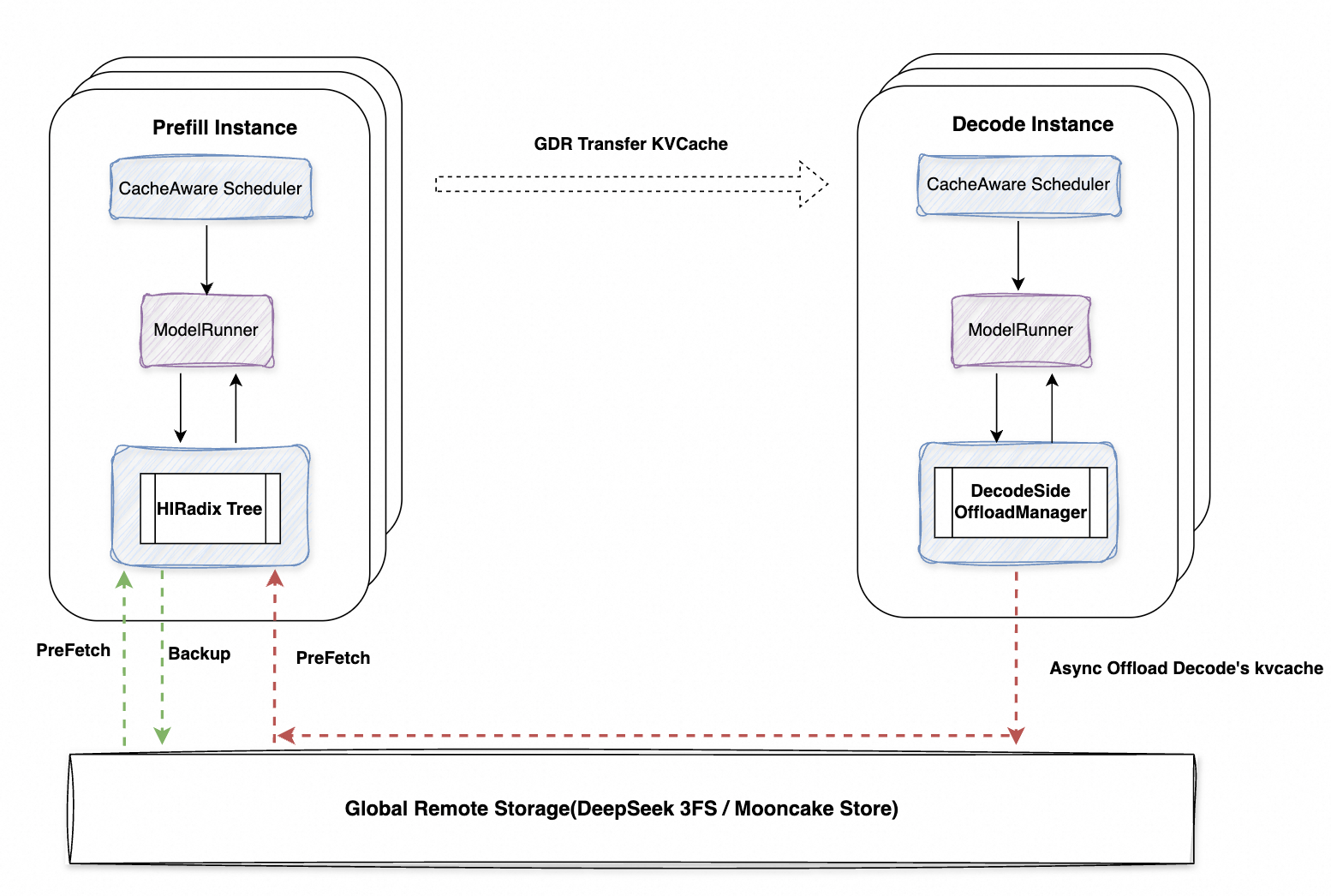

Currently, SGLang's PD (Prefill/Decode) separation architecture has been seamlessly integrated with HiCache. The full lifecycle management process of KVCache is as follows:

● High-Speed Direct Transfer: Zero-copy direct transmission of KVCache between Prefill and Decode nodes via GDR (GPU Direct RDMA) high-speed channels.

● Prefill Cross-Instance Reuse: Supports enabling HiCache on Prefill nodes, implementing asynchronous Offload & Prefetching of KVCache, and KVCache reuse across instances.

● Decode Node Lightweight Cache Control: For historical compatibility, HiCache is disabled by default on Decode nodes. A new lightweight component, Decode OffloadManager, was added specifically to handle asynchronous Offloading operations. In multi-turn dialogue scenarios, Prefill nodes can directly reuse KVCache already generated by Decode nodes, avoiding repetitive computation, thus achieving cache efficiency and performance equivalent to non-separated deployment under the PD separation architecture.

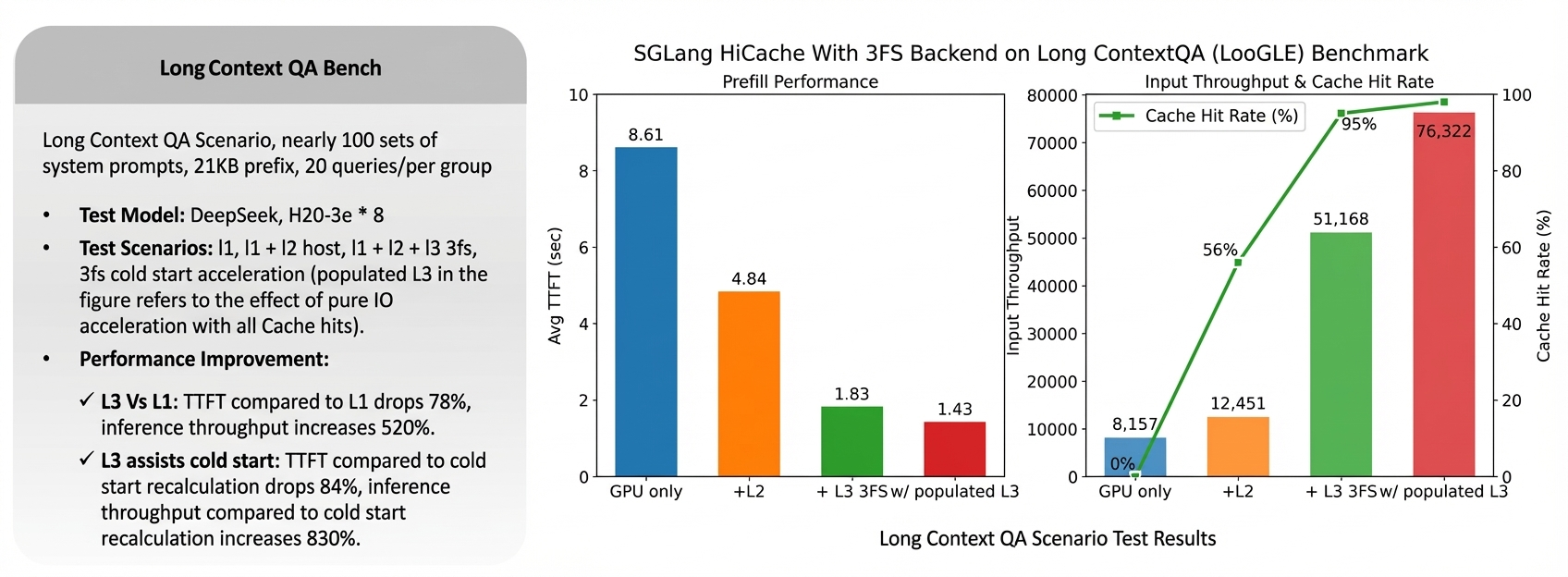

Test Benchmark: LongContext QA (LooGLE).

The SGLang HiCache project is actively under construction. Future evolution will focus on the following directions, and community co-construction is welcomed:

● Deep Integration of EPD Architecture: Support efficient transmission of Embedding Cache between Embedding Node and Prefill Node via HiCache.

● Support for Sparse Attention: Adapt to models like DeepSeek-V3.

● Support for Hybrid Models: Adapt to Hybrid Models supporting Mamba, SWA (Sliding Window Attention), etc.

● Smarter Scheduling Strategies: Dynamically regulate backup/prefetch rates based on real-time metrics like bandwidth usage and error_rate to improve cache efficiency and resource utilization.

● Comprehensive Observability System: Enrich monitoring metrics to provide more comprehensive and fine-grained performance insights, assisting in problem diagnosis and tuning.

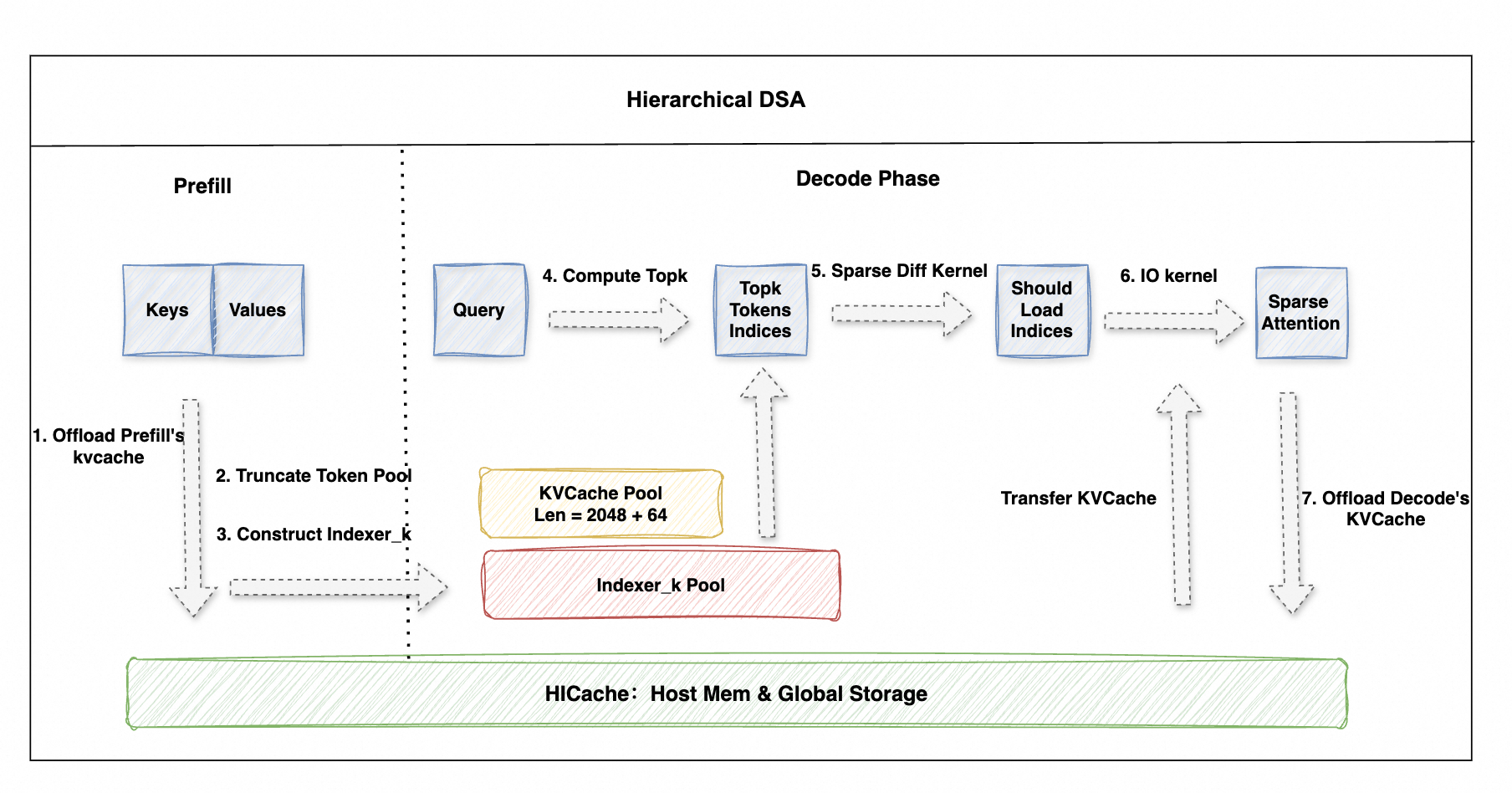

As context length continues to grow, Sparse Attention has become an important technical path for improving long-text inference efficiency—by selecting only a small number of tokens critical to the current prediction for attention calculation, it significantly reduces computational overhead with almost no loss of accuracy. DeepSeek's proposed NSA (Native Sparse Attention) is a representative work in this direction.

However, existing sparsification schemes still need to retain full KVCache in GPU memory, so the memory bottleneck in long-context scenarios persists. To this end, we are building a Hierarchical Sparse Attention framework in SGLang, combining HiCache to implement layered offloading and on-demand loading of KVCache. By retaining only the required TopK KVCache in the GPU, we can break through memory capacity limits and significantly increase the supported Batch Size and system throughput.

As a high-performance distributed file system designed specifically for AI scenarios, 3FS requires deployment and O&M that balance flexibility, ease of use, high availability, and elastic scalability.

In deployment practice, the Kubernetes Operator open-sourced by the Alibaba Cloud Server R&D Storage Team provides a complete cloud-native solution:

● Declarative Deployment & Containerized Management: Based on Kubernetes Custom Resources + Controller capabilities, implementing containerized deployment of 3FS clusters, supporting environments like self-built physical machine clusters and Alibaba Cloud ACK.

● Transparent Storage Access: Dynamically injecting FUSE client containers via a Webhook mechanism, requiring no changes to user business containers.

● Fault Self-healing & Elastic Scaling: The Operator continuously monitors component status, automatically replaces faulty replicas, and implements rolling upgrades and elastic scaling; solves Mgmtd Pod IP change issues via Headless Service + DNS resolution to ensure seamless switchover between primary and backup nodes.

● Tenant Resource Isolation: Supports deploying multiple 3FS clusters in the same Kubernetes cluster, combined with Alibaba Cloud VPC subnet partitioning and security group strategies to achieve control resource reuse and network security isolation across business scenarios.

With the accelerated adoption of Hybrid Architecture Models (Full Attention Layers + Linear Attention Layers) in long-context LLM service scenarios, SGLang significantly reduces memory footprint and computation latency while maintaining inference capability through innovative memory management and scheduling mechanisms. This design effectively resolves the conflict between the irreversibility of linear attention states and traditional optimization mechanisms. Core capabilities include:

● Layered Memory Architecture: Isolates management of KVCache (Token granularity) and SSM states (Request granularity), managing caches for different attention layers separately, supporting predefined cache pool ratios based on actual load.

● Elastic Memory Scheduling: Implements dynamic scaling of KV/SSM dual pools based on CUDA virtual memory technology to maximize resource utilization under fixed total memory.

● Hybrid Prefix Cache: Extends Radix Tree to support KV/SSM dual cache lifecycle management, achieving prefix reuse and eviction without operator modification.

● Speculative Decoding Adaptation: Compatible with acceleration schemes like EAGLE-Tree via state snapshot slot mechanisms, supporting Top-K > 1 scenarios.

● PD Architecture Extension: Adds independent state transmission channels to simplify the integration of new hybrid models.

Facing diverse inference engines and backend storage systems, Tair KVCache extracts common KVCache global management requirements and provides a unified global KVCache management system, Tair KVCache Manager:

● Provides global external KVCache management capabilities. Implements cross-machine KVCache reuse.

● Supports access for mainstream inference engines like SGLang, vLLM, RTP-LLM, and TensorRT-LLM through unified interfaces and transmission libraries.

● Supports multiple storage systems, including 3FS. By encapsulating heterogeneous storage systems through consistent storage metadata abstraction, it significantly reduces the complexity and development cost of accessing different inference engines and storage systems.

● Provides enterprise-level capabilities such as multi-tenant Quota management, high reliability, and observability.

● Addressing the difficulty of determining the benefits of global KVCache pooling for specific businesses and scenarios, KVCache Manager provides compute and cache simulation capabilities. It can calculate hit rates and compute savings based on real business Traces. It also provides configuration optimization functions to help users adjust storage configurations to achieve optimal ROI.
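The idea behind trace-based simulation can be sketched in a few lines. This is a toy model, not Tair KVCache Manager's simulator: it assumes an unbounded cache, counts hits at token granularity, and the function name `simulate_prefix_hit_rate` is hypothetical.

```python
def simulate_prefix_hit_rate(trace):
    """Replay token sequences in order and return the fraction of input
    tokens whose KV could have been served from a prefix cache."""
    seen_prefixes = set()
    hit_tokens = 0
    total_tokens = 0
    for tokens in trace:
        matched = 0
        for end in range(1, len(tokens) + 1):
            if tuple(tokens[:end]) in seen_prefixes:
                matched = end
            else:
                break  # reuse stops at the first uncached position
        hit_tokens += matched
        total_tokens += len(tokens)
        # After serving the request, all of its prefixes are cached.
        for end in range(1, len(tokens) + 1):
            seen_prefixes.add(tuple(tokens[:end]))
    return hit_tokens / total_tokens

trace = [
    [1, 2, 3, 4],          # cold start: no reuse
    [1, 2, 3, 5, 6],       # shares a 3-token prefix with the first request
    [1, 2, 3, 5, 6, 7],    # next turn of the same session: 5 tokens reused
]
rate = simulate_prefix_hit_rate(trace)
print(f"{rate:.3f}")  # 0.533
```

A realistic simulator would add capacity limits, eviction policy, and per-tier load costs, so that the estimated hit rate can be translated into compute savings for a given storage configuration.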
