By Hongqi, Alibaba Cloud Senior Technical Expert

According to the Cloud Native Computing Foundation (CNCF) definition of serverless computing, serverless architectures solve problems by using function as a service (FaaS) and backend as a service (BaaS). This definition clarifies the nature of serverless, but it has also caused confusion and debate.

To meet emerging needs and keep pace with technological developments, vendors have launched non-FaaS serverless computing services, such as Google Cloud Run, Alibaba Cloud Serverless App Engine (SAE), and Serverless Kubernetes. Built on serverless technology, these services provide auto scaling and pay-as-you-go billing, and they broaden the scenarios that serverless computing can serve.

The emergence of these services blurs the boundaries of serverless computing, and many cloud services are evolving toward a serverless form. But how can we solve business problems based on a vague concept? One design goal of serverless has remained unchanged: to let you focus on business logic, no matter what types of servers, auto scaling capabilities, or pay-as-you-go billing methods are used.

Ben Kehoe, a well-known serverless expert, describes serverless as a state of mind. Consider the following when you run your business:

When you build a serverless architecture, focus on your business logic instead of spending time choosing cutting-edge services and technologies to fix technical pain points. Once you understand your business logic, it is easier to select suitable technologies and services and to work out a practical application architecture. Going serverless allows you to solve practical problems while staying focused on your business. This means less work and fewer resources, because you hand some of the work over to others.

The following section explains how serverless architectures are applied to common scenarios. We will look at architecture design from the perspectives of computing, storage, and message transmission. We will also weigh the pros and cons of architectures in terms of maintainability, security, reliability, scalability, and costs. To make the discussion more practical, I will use specific services as examples. You can also try out other services because these architectures are universal.

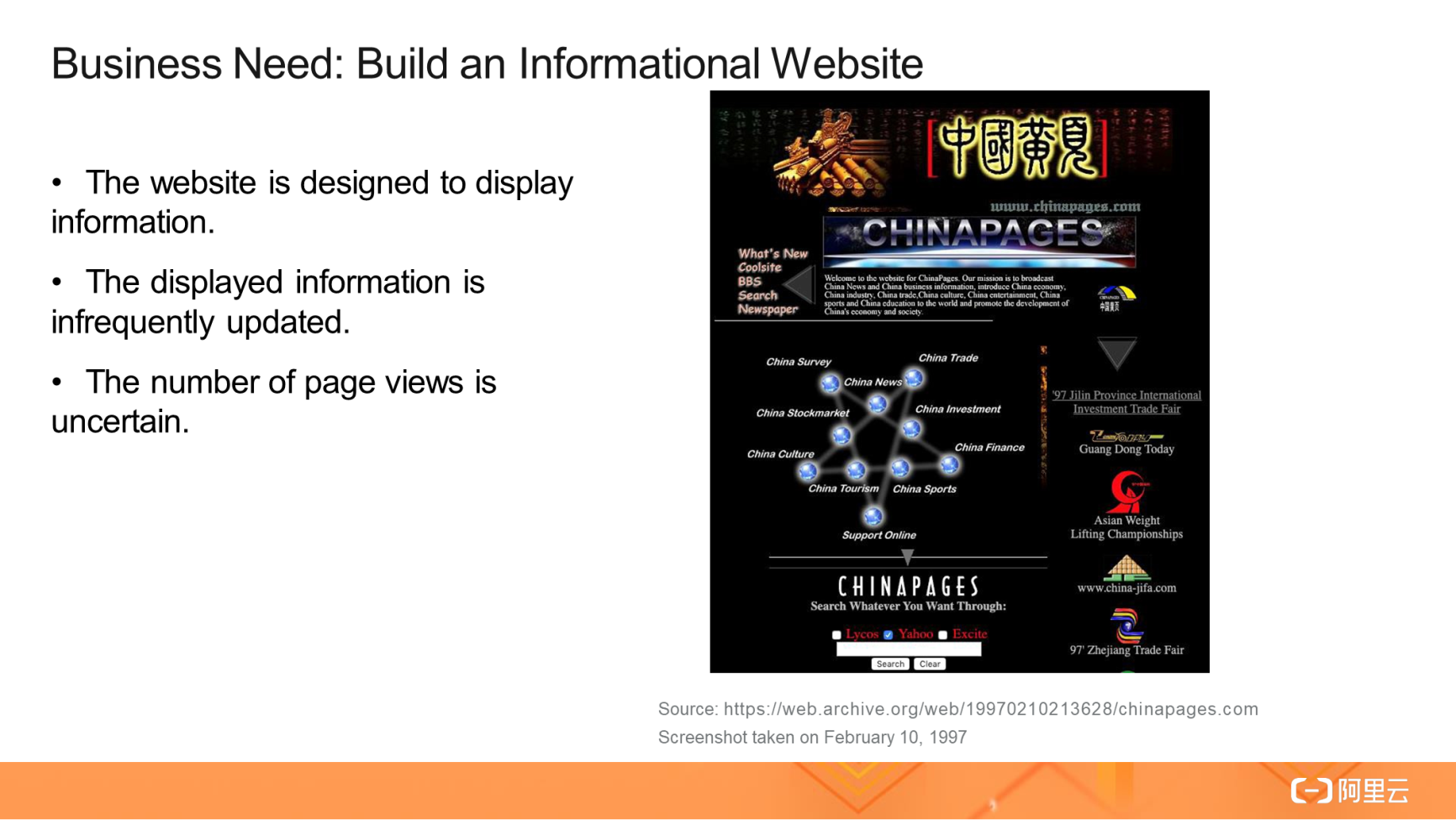

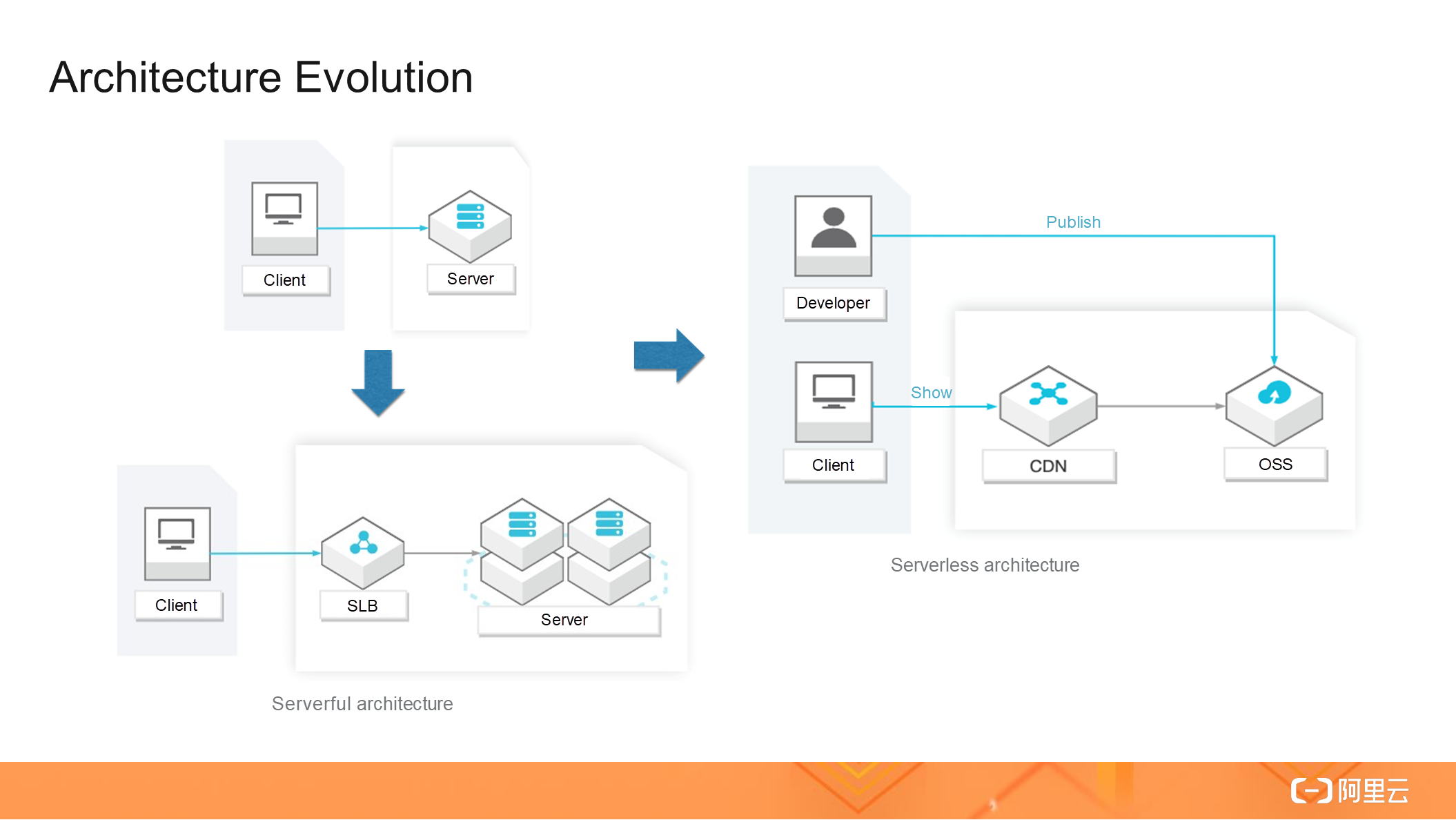

For example, assume you are about to build a simple informational website, like online yellow pages. The following three solutions are available:

Going from Solution 1 to Solution 3 takes you into the realm of serverless. In other words, you migrate your business to the cloud and then stop managing servers yourself. What changes when you go serverless? Solution 1 and Solution 2 require you to handle a series of tasks, including budgeting, scale-out, high availability, and manual monitoring. None of this is what Jack Ma wanted in the early days, when he simply set out to build an informational website to introduce China to the world. That was his business logic. So, go serverless if you just want to focus on your business. Solution 3 builds a static website based on a serverless architecture. It has the following advantages over the other two solutions:

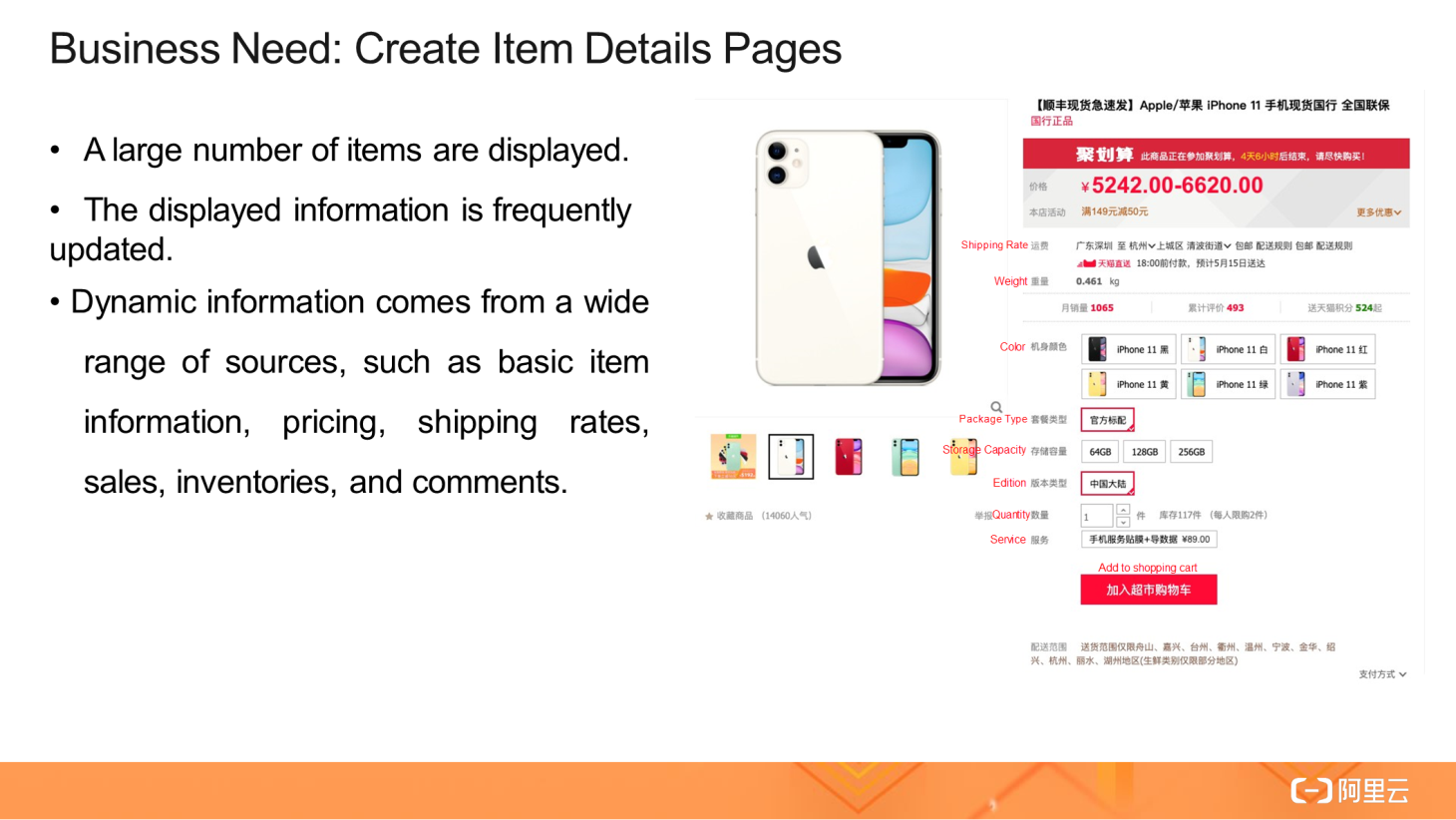

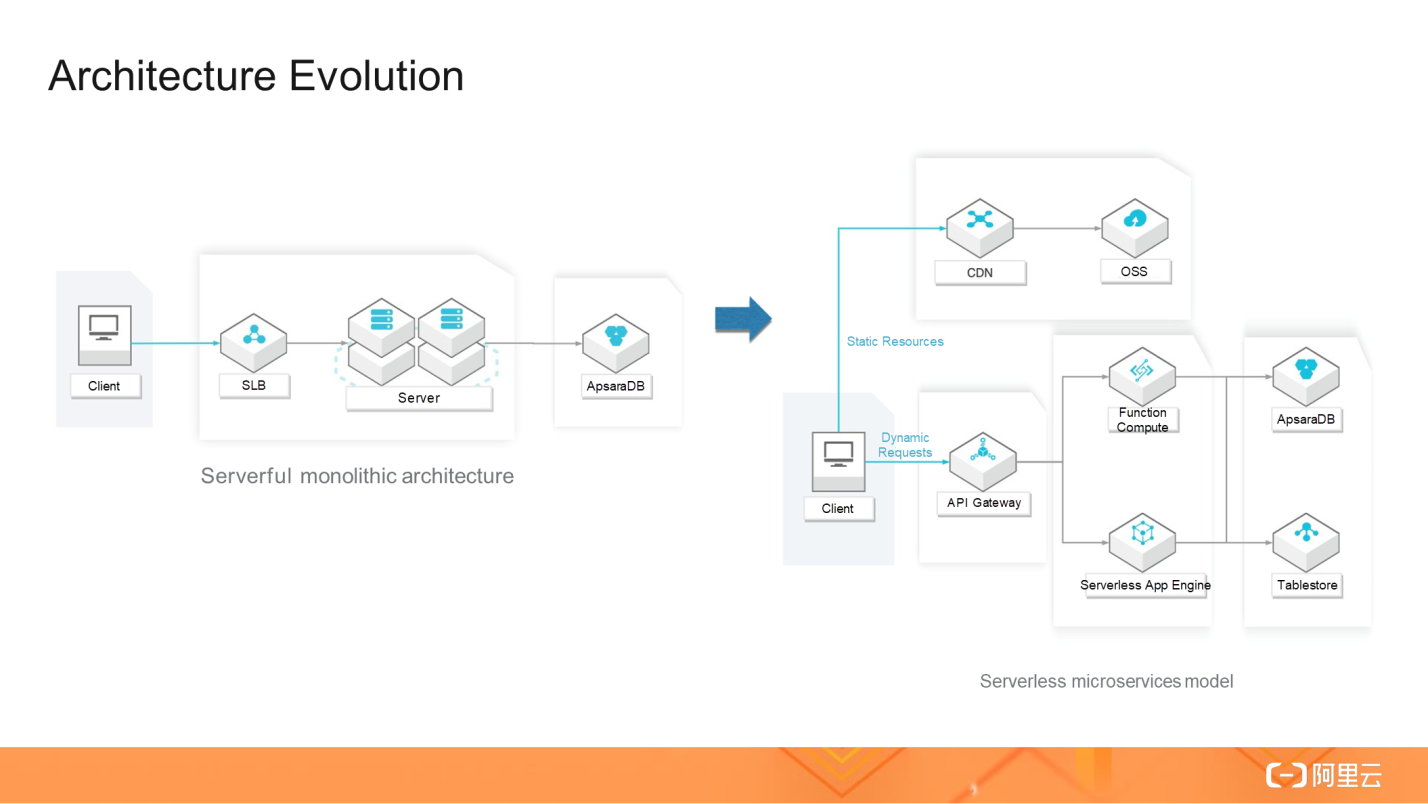

Static pages and websites suit small volumes of content that are infrequently updated. Dynamic pages and websites suit large volumes of content that are frequently updated. For example, static pages cannot manage the item information shown on the item pages of Taobao. We can enable dynamic responses to user requests by using the following solutions:

You can choose an appropriate solution for your major business problems based on the development phase and scale of your organization. Taobao's initial success had nothing to do with the technical architecture it used. No matter what architecture you use, serverless as a state of mind helps you focus on your business. For example:

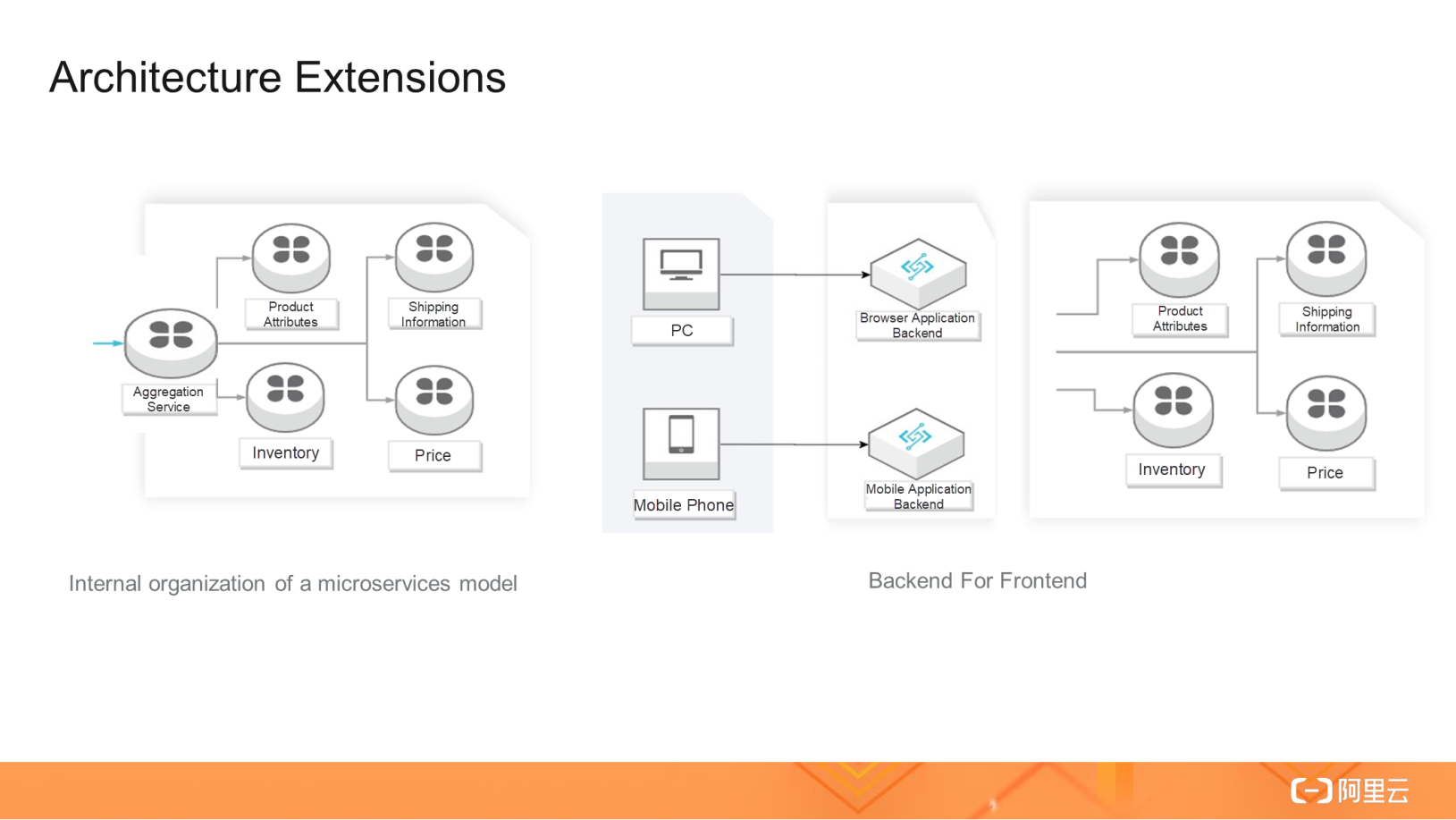

The architecture shown in the right part of the preceding figure introduces API Gateway, Function Compute, or SAE to implement a computing layer. Cloud services take over a large number of tasks, allowing you to focus on your business logic. The following figure shows the interactions between microservices in the system. An item aggregation service presents the internal microservices to external users in a unified manner. The microservices can be implemented by using SAE or functions.
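The aggregation service described above can be sketched as a single function that fans out to the internal services and merges their answers. This is a minimal illustration, not the article's implementation: the three `fetch_*` functions, the field names, and the `handler(event, context)` shape are assumptions chosen for the example, and in a real system each fetch would be a network call to a service running on SAE or as a function.

```python
import json
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for internal microservices. Each one would be an
# HTTP or RPC call in a real deployment.
def fetch_item_details(item_id):
    return {"item_id": item_id, "title": "Sample item"}


def fetch_price(item_id):
    return {"price": 19.99, "currency": "CNY"}


def fetch_inventory(item_id):
    return {"in_stock": True}


def aggregate_item(item_id):
    """Call the internal services in parallel and merge their results."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        details = pool.submit(fetch_item_details, item_id)
        price = pool.submit(fetch_price, item_id)
        inventory = pool.submit(fetch_inventory, item_id)
        return {
            **details.result(),
            "pricing": price.result(),
            "inventory": inventory.result(),
        }


def handler(event, context=None):
    """Generic FaaS-style entry point (assumed shape): JSON event in, response out."""
    request = json.loads(event)
    body = aggregate_item(request["item_id"])
    return {"statusCode": 200, "body": json.dumps(body)}
```

External clients see one endpoint and one response shape, while the internal services stay free to change independently behind it.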

The architecture can be extended to support access by different clients, as shown in the right part of the preceding figure. Different clients may need different information. For example, you can enable your website to recommend items to mobile phone users based on their locations. Can we develop a serverless architecture that benefits both mobile phone users and web browser users? The answer lies in the Backend for Frontend (BFF) architecture, which has received high praise from frontend engineers because it is built with serverless technology. Frontend engineers can write BFF code directly from the business perspective, without having to handle complex server-related details.
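As a rough sketch of the BFF idea, the two functions below shape the same backend record differently for web and mobile clients. The record fields, the `nearby_recommendations` helper, and the trimming rules are hypothetical and exist only to illustrate the pattern.

```python
# Hypothetical aggregated item record, as a shared backend might return it.
ITEM = {
    "item_id": "42",
    "title": "Sample item",
    "description": "A long description shown only on large screens.",
    "price": 19.99,
    "images": ["large-1.jpg", "large-2.jpg", "large-3.jpg"],
}


def nearby_recommendations(location):
    # Placeholder: a real implementation would query a recommendation service.
    return [f"popular near {location}"]


def web_bff(item):
    """Web browsers receive the full record."""
    return dict(item)


def mobile_bff(item, location):
    """Mobile clients receive a trimmed record plus location-based recommendations."""
    return {
        "item_id": item["item_id"],
        "title": item["title"],
        "price": item["price"],
        "images": item["images"][:1],  # one image is enough on a small screen
        "recommendations": nearby_recommendations(location),
    }
```

Each BFF is small enough to be deployed as its own function, so the frontend team that owns a client can also own the code that shapes that client's data.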

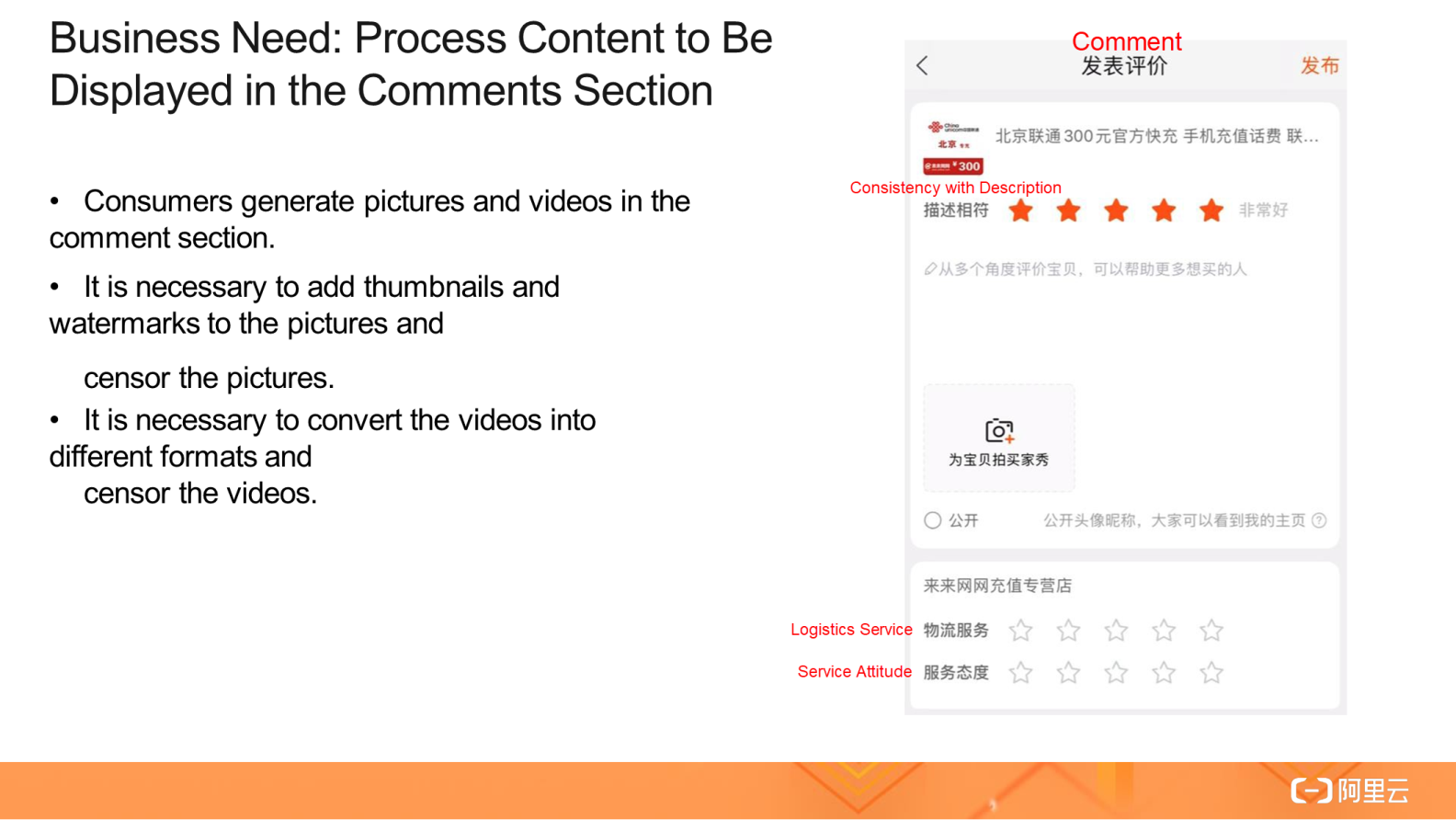

Dynamic pages are generated synchronously while a request is processed. However, some requests may take a long time to process. For example, say we need to manage the pictures and videos that users post in the comments section so that they can be displayed or played back properly on different clients. This content management covers uploading pictures, adding thumbnails and watermarks to them, and reviewing them for inappropriate content.

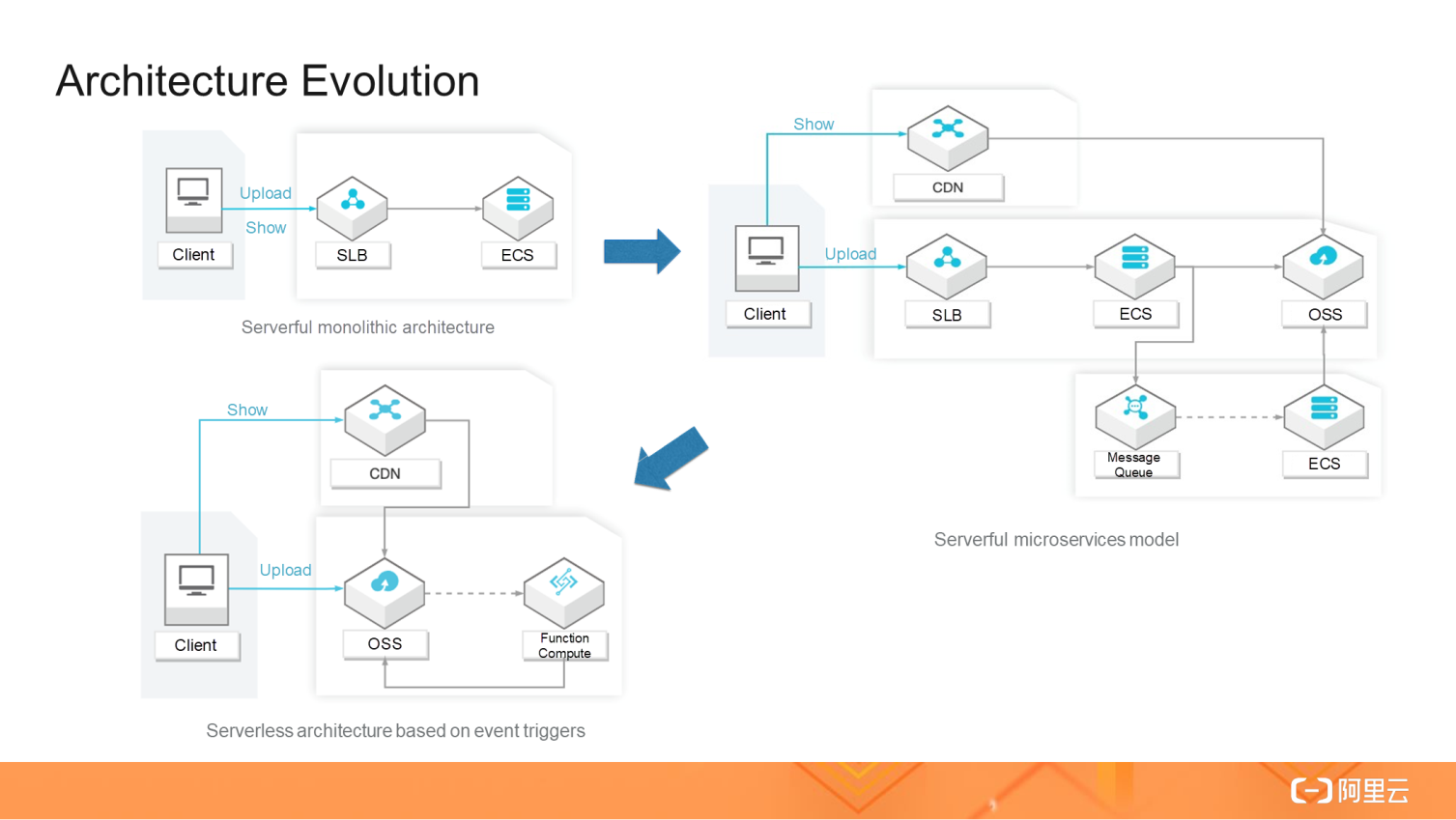

The technical architectures used to process uploaded multimedia files in real time have evolved as follows:

If you choose a serverful monolithic architecture, you have to consider the following issues:

A server-based microservices model can help you fix most of the preceding issues, but new issues emerge, for example:

A serverless architecture can fix all of the preceding issues. With serverless, a series of tasks that were originally handled by developers are transferred to services for automatic execution. These tasks include load balancing, high availability and auto scaling of servers, and message queue management. As architectures evolve, developers handle fewer tasks even as systems become more sophisticated. More effort can therefore be devoted to the business, which significantly accelerates delivery.

A serverless architecture provides the following benefits:

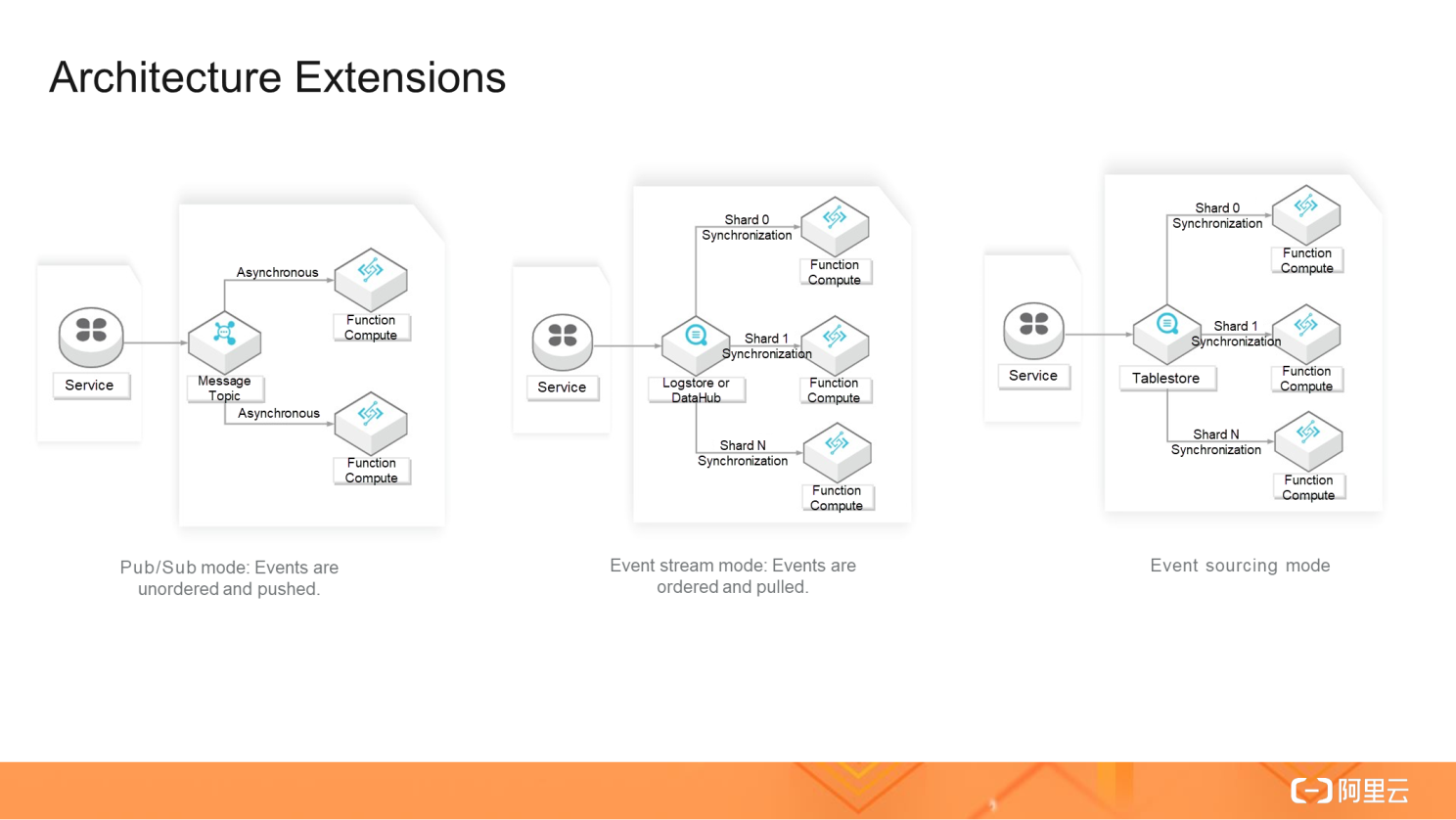

Event triggers are an important feature of FaaS. The publish/subscribe (pub/sub) event-driven model is not new. However, before serverless architectures were widely used, we had to implement event production and consumption ourselves and set up the intermediate connections that support them, just as in a server-based microservices model.

In a serverless architecture, events are sent by the producer, and you can focus on the consumer logic without having to maintain the intermediate connections. This is a major benefit of going serverless.
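To make "consumer logic only" concrete, here is a minimal sketch of a function that reacts to an upload event for the picture-processing scenario. The event layout (`records`, `object_key`) is an assumption for illustration and is not the actual format of any storage service, and the two processing steps are placeholders that only compute output paths instead of calling an image library.

```python
import json


# Placeholder processing steps. Real code would resize or stamp the image;
# here each step only derives the key where its output would be stored.
def make_thumbnail(key):
    return f"thumbnails/{key}"


def add_watermark(key):
    return f"watermarked/{key}"


def handler(event, context=None):
    """Consumer logic only: invoked once per 'object uploaded' event."""
    payload = json.loads(event)
    results = []
    for record in payload["records"]:  # hypothetical event layout
        key = record["object_key"]
        results.append({
            "source": key,
            "thumbnail": make_thumbnail(key),
            "watermarked": add_watermark(key),
        })
    return results
```

Nothing in this code polls a queue or manages a connection. Delivering the event, retrying, and scaling the number of concurrent invocations are left to the platform.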

Function Compute is also integrated with the event sources of other cloud services, allowing you to conveniently apply common patterns to your business, such as pub/sub, event streaming, and event sourcing.

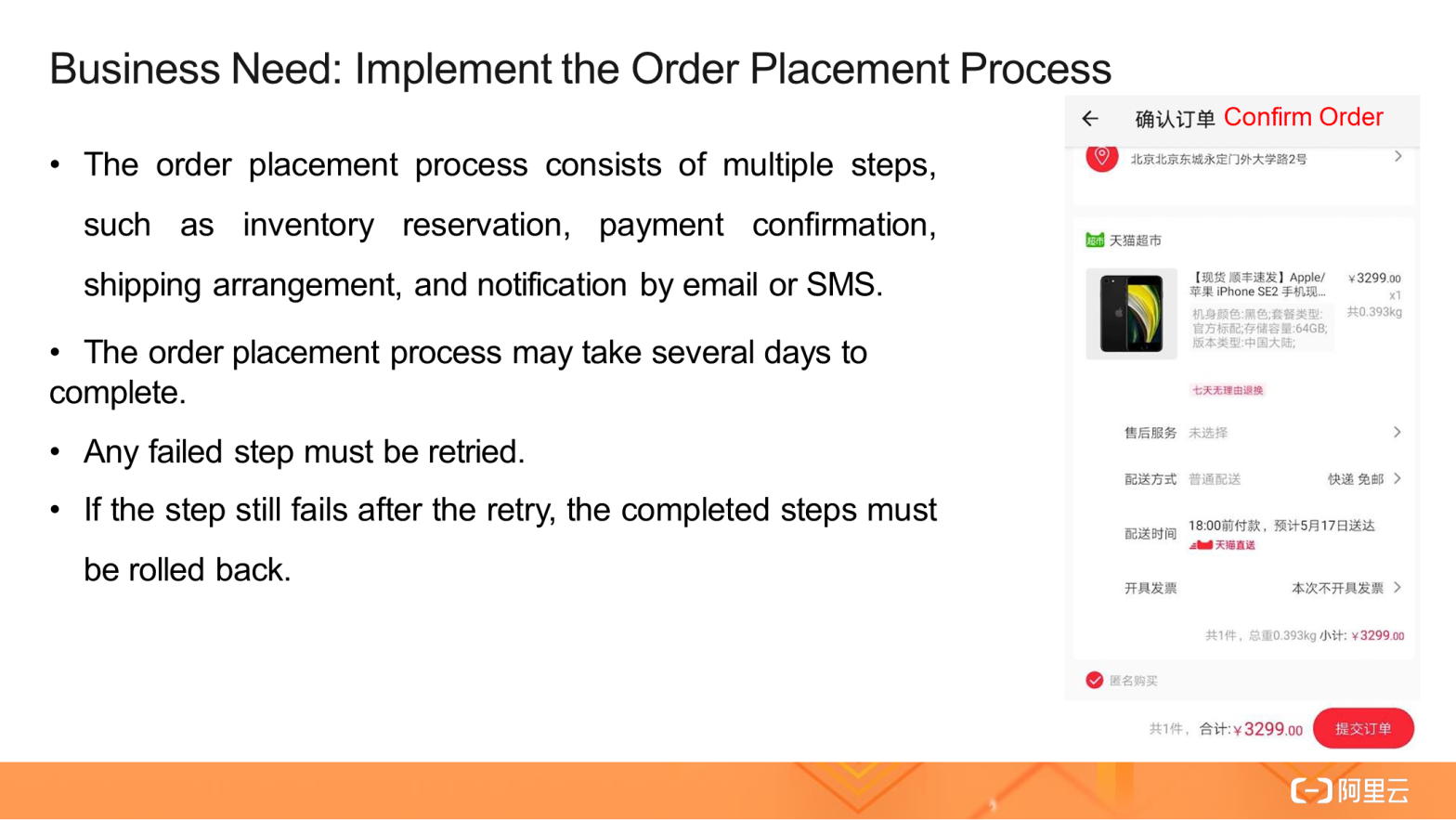

As mentioned above, an item page contains complex elements, but only read operations can be performed on the page. The aggregation service APIs are stateless and synchronous. Now, let's look at an order placement process, which is a core scenario of e-commerce.

This process involves a series of distributed writes, which are difficult to handle in a microservices model. A monolithic application handles them easily, because it uses only one database and can maintain data consistency through database transactions. In practice, however, you may have to deal with external services and need a way to ensure that each step is either completed or rolled back cleanly. A classic solution is the Saga model, which can be implemented based on two different architectures.

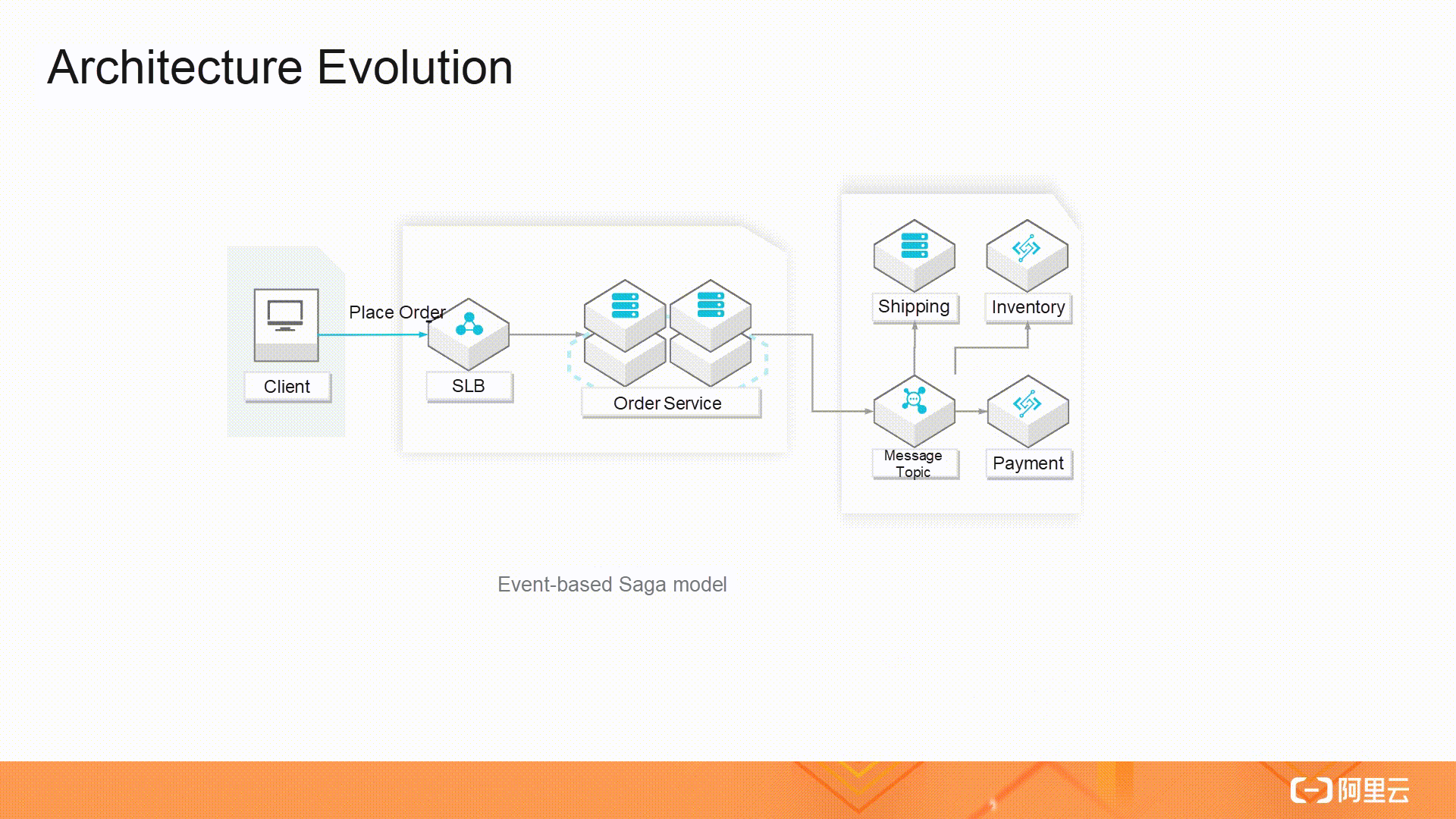

In one architecture, the progress of steps is driven by events. The relevant services, such as the inventory service, listen to events on a message bus. The listeners can run on servers or as functions. By integrating Function Compute with message topics, this architecture can do away with servers.

The modules of this architecture are loosely coupled and have clear responsibilities. However, the architecture becomes increasingly difficult to maintain as more complex steps are added, because the business logic is spread across many listeners. It becomes hard to understand the overall flow and to track the execution status.
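The event-driven variant can be sketched with a small in-memory bus. This is only an illustration under stated assumptions: `EventBus`, the topic names, and the balance check are hypothetical, and a real system would use a managed message service with functions subscribed to its topics.

```python
from collections import defaultdict


class EventBus:
    """Tiny in-memory stand-in for a message bus with topics."""

    def __init__(self):
        self.subscribers = defaultdict(list)
        self.log = []  # every published topic, in order

    def subscribe(self, topic, handler):
        self.subscribers[topic].append(handler)

    def publish(self, topic, order):
        self.log.append(topic)
        for handler in self.subscribers[topic]:
            handler(order)


def wire_services(bus):
    """Each service only knows which event it reacts to and which it emits."""

    def reserve_inventory(order):
        bus.publish("inventory_reserved", order)

    def charge_payment(order):
        if order["amount"] > order["balance"]:
            bus.publish("payment_failed", order)
        else:
            bus.publish("payment_completed", order)

    def release_inventory(order):
        # Compensating action that undoes reserve_inventory.
        bus.publish("inventory_released", order)

    bus.subscribe("order_created", reserve_inventory)
    bus.subscribe("inventory_reserved", charge_payment)
    bus.subscribe("payment_failed", release_inventory)


def place_order(order):
    bus = EventBus()
    wire_services(bus)
    bus.publish("order_created", order)
    return bus.log
```

No single function in this sketch states the whole order flow. The sequence only emerges from the subscriptions, which is why this style gets harder to follow and trace as steps are added.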

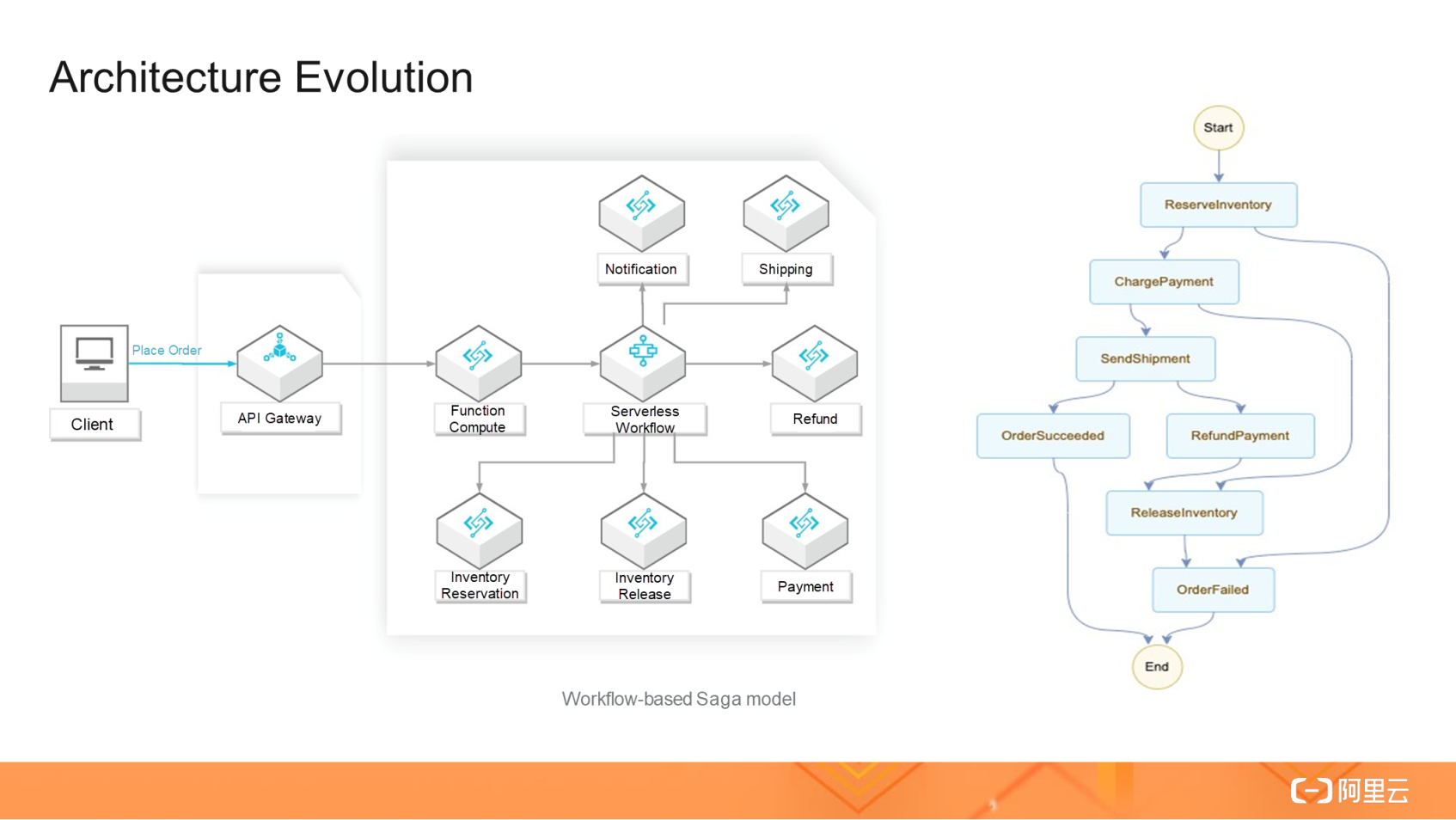

The other architecture is based on workflows. The services are all independent of each other, and information is not transmitted by using events. Instead, a centralized coordinator service schedules individual business services and maintains the business logic and states. However, the following issues arise from this centralized coordinator:

You can use cloud services, such as Alibaba Cloud Serverless Workflow, to complete the preceding tasks automatically so that you only need to focus on your business logic.
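For comparison, the coordinator-based variant can be sketched in plain Python as follows. This does not use the definition language of Serverless Workflow or any other product. The step functions, the `StepFailed` exception, and the `run_saga` helper are hypothetical names that only show what a coordinator has to do: run steps in order, remember what succeeded, and compensate in reverse order on failure.

```python
class StepFailed(Exception):
    """Raised by a step to signal that the saga must roll back."""


def run_saga(steps, order):
    """Run (name, action, compensation) steps in order; undo completed steps on failure."""
    completed = []
    history = []
    for name, action, compensate in steps:
        try:
            action(order)
        except StepFailed:
            history.append(f"{name}:failed")
            # Compensate the steps that already succeeded, newest first.
            for done_name, done_compensate in reversed(completed):
                done_compensate(order)
                history.append(f"{done_name}:compensated")
            return False, history
        completed.append((name, compensate))
        history.append(f"{name}:ok")
    return True, history


def reserve_inventory(order):
    order["reserved"] = True


def release_inventory(order):
    order["reserved"] = False


def charge_payment(order):
    if order["amount"] > order["balance"]:
        raise StepFailed("insufficient balance")
    order["paid"] = True


def refund_payment(order):
    order["paid"] = False


# The whole business flow is readable in one place.
ORDER_STEPS = [
    ("reserve_inventory", reserve_inventory, release_inventory),
    ("charge_payment", charge_payment, refund_payment),
]
```

Calling `run_saga(ORDER_STEPS, order)` returns a success flag and a step-by-step history, which is the kind of execution record a managed workflow service keeps for you, along with durability and retries that this in-process sketch does not provide.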

The related flowchart is shown in the right part of the following figure. The flowchart implements the event-driven Saga model, which significantly simplifies processes and improves observability.

As your business grows and generates more data, you can mine the value of this data. For example, you can analyze how users behave on your website and make recommendations to them accordingly. A data pipeline includes data collection, processing, and analytics. It is possible but difficult to build a data pipeline from scratch. Remember that your business here is e-commerce, not providing a data pipeline service. Once you have set a goal for your business, you will find it easier to choose how to achieve it.
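The three pipeline stages can be illustrated with a toy example. The click events, field names, and the "most viewed items" analysis are hypothetical, and a production pipeline would rely on managed collection, stream processing, and analytics services instead of in-memory generators.

```python
from collections import Counter

# Hypothetical raw click events, as a collection step might deliver them.
RAW_EVENTS = [
    {"user": "u1", "action": "view", "item": "A"},
    {"user": "u1", "action": "view", "item": "B"},
    {"user": "u2", "action": "view", "item": "A"},
    {"user": "u2", "action": "buy", "item": "A"},
    {"user": None, "action": "view", "item": "C"},  # malformed: no user
]


def collect(events):
    """Collection: yield events one by one, as if read from a log or stream."""
    yield from events


def process(events):
    """Processing: drop malformed events and keep only item views."""
    for event in events:
        if event["user"] and event["action"] == "view":
            yield event


def analyze(events, top_n=2):
    """Analytics: rank items by view count."""
    return Counter(event["item"] for event in events).most_common(top_n)


most_viewed = analyze(process(collect(RAW_EVENTS)))
```

Even this toy version shows where the effort goes: the business value is in `analyze`, while collection and cleaning are plumbing that a platform can take over.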

This article introduces common scenarios for serverless architectures and explains how they separate tasks unrelated to your business from business logic and hand those tasks over to platforms and services. Division of responsibilities and coordination are common practices, and they are more clearly defined in serverless architectures. Less is more. A serverless architecture allows you to focus on your business and the core competitiveness of your products without having to deal with servers, server loads, and other details that are unrelated to your business.
