By Fuwei (Yuge), Senior Development Engineer at Alibaba Cloud

Before getting into a detailed definition of a container, let's briefly review how an operating system (OS) manages processes. We can see processes by logging on to the OS and performing operations such as running the ps command. These processes include system services and users' application processes. Let's take a look at the characteristics of these processes.

Next, let's identify the problems that may arise from the characteristics listed above.

To address these three issues, let's look at how to provide an independent running environment for these processes.

Next, let's understand how to define a set of processes in this fashion.

A container is a set of processes that is isolated at the view level and restricted in resource utilization, with a separate file system. "View-level isolation" means that only part of the processes is visible and that these processes have independent hostnames. The restriction on resource utilization may involve the memory size and CPU count. A container is isolated from other resources of the system and has its own resource view.

A container also has a separate file system. Because the container uses the resources of the host system, this file system does not need kernel-related code or tools. It only needs to provide the binary files, configuration files, and dependencies that the container requires. The container can run as long as the file collection required for running it is available.

A container image is the collection of all files required for running a container. Generally, a Dockerfile is used to build an image. A Dockerfile provides convenient syntactic sugar for describing every build step. Each build step is performed on the existing file system and changes its content. These changes are referred to as a changeset. A complete image is obtained by sequentially applying these changesets to an empty folder. Changesets are layered and reusable, which provides the following benefits:

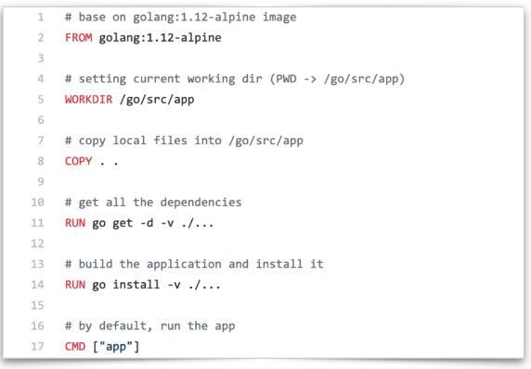

The Dockerfile in the figure describes how a Go application is built.

1) The FROM line indicates the image based on which building steps are performed. As mentioned earlier, images can be reused.

2) The WORKDIR line indicates the directory in which the subsequent building steps are performed. Its function is similar to the cd command in a shell.

3) The COPY line copies files from the host into the container image.

4) The RUN line performs the corresponding action on the file system. Running this command produces the application.

5) The CMD line specifies the default program that the image runs.
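The way build steps turn into an image can be sketched in a few lines of Python. This is only a toy model, not how Docker stores layers on disk: each changeset is a dictionary of paths, and the layer names and file paths below are hypothetical stand-ins for the results of the FROM, COPY, and RUN steps.

```python
from typing import Dict, List, Optional

# A changeset maps a path to new file content, or to None to delete the path.
Changeset = Dict[str, Optional[str]]


def apply_changesets(layers: List[Changeset]) -> Dict[str, str]:
    """Apply each changeset in order, starting from an empty file system."""
    filesystem: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                filesystem.pop(path, None)  # this layer deletes the file
            else:
                filesystem[path] = content  # this layer adds or overwrites the file
    return filesystem


# Hypothetical layers, loosely mirroring the FROM, COPY, and RUN steps.
base_layer: Changeset = {"/bin/sh": "shell binary", "/usr/local/go/bin/go": "go toolchain"}
copy_layer: Changeset = {"/src/app/main.go": "package main"}
run_layer: Changeset = {"/src/app/app": "compiled binary"}

image = apply_changesets([base_layer, copy_layer, run_layer])
print(sorted(image))
```

Because `base_layer` is an ordinary value, a second image could start from the same base and add different layers on top, which is the reuse that the FROM line relies on.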

After the Dockerfile is available, build the required application by running the docker build command. The build results are stored locally. Generally, an image is built in a packaging tool or another isolated environment.

The next question is how these images run in the production or test environment. This requires a broker or central storage, which is known as the Docker registry. The registry stores all the generated image data. To push a local image to the image repository, run the docker push command. Then, run the docker pull command in the production or test environment to download the corresponding data and run it.
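The push and pull flow can be illustrated with a small in-memory model. This is a sketch under stated assumptions, not the registry protocol: `ToyRegistry`, its methods, and the image names are hypothetical, and layers are identified by a sha256 digest of their content so that a layer already stored is not uploaded again.

```python
import hashlib
from typing import Dict, List


def digest(layer: bytes) -> str:
    """Content address of a layer, written in the style of a sha256 digest."""
    return "sha256:" + hashlib.sha256(layer).hexdigest()


class ToyRegistry:
    """Hypothetical in-memory stand-in for an image registry."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}          # digest -> layer data
        self.manifests: Dict[str, List[str]] = {}  # image name -> ordered digests

    def push(self, name: str, layers: List[bytes]) -> int:
        """Store an image and return how many layers were actually uploaded."""
        uploaded = 0
        digests = []
        for layer in layers:
            d = digest(layer)
            if d not in self.blobs:  # layers already stored are reused
                self.blobs[d] = layer
                uploaded += 1
            digests.append(d)
        self.manifests[name] = digests
        return uploaded

    def pull(self, name: str) -> List[bytes]:
        """Return the layers of an image in their original order."""
        return [self.blobs[d] for d in self.manifests[name]]


registry = ToyRegistry()
base = b"base os files"
first = registry.push("app:v1", [base, b"app build 1"])
second = registry.push("app:v2", [base, b"app build 2"])
print(first, second)  # 2 1
```

The second push uploads only one layer because the base layer is already in the registry.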

To run a container, you need to complete three steps:

An image is equivalent to a template, and a container is a running instance of an image. Therefore, an image is built once and runs everywhere.

A container is a set of processes that is isolated from the rest of the system, such as other processes, network resources, and file systems. An image is the collection of all files required by a container and is built just once for running everywhere.

A container is a set of isolated processes. When you run the docker run command, you specify an image to provide a separate file system and specify the program to run. The specified program is referred to as the initial process. The container starts when the initial process starts and exits when the initial process exits.
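The tie between a container and its initial process can be sketched with an ordinary child process. This is only an analogy: the example applies no isolation at all, and `run_until_init_exits` is a hypothetical helper that treats the "container" as running for exactly as long as the initial process is alive.

```python
import subprocess
import sys


def run_until_init_exits(init_command):
    """Start the initial process and block until it exits; return its exit code."""
    init = subprocess.Popen(init_command)
    return init.wait()  # the "container" counts as running until this returns


# Hypothetical initial process: a short Python program that exits with status 3.
exit_code = run_until_init_exits([sys.executable, "-c", "import sys; sys.exit(3)"])
print(exit_code)  # 3
```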

Therefore, the lifecycle of a container is considered to be the same as that of its initial process. A container may, of course, include more than the initial process. The initial process can spawn sub-processes, and O&M operations started with the docker exec command also run as processes in the container. When the initial process exits, all these processes exit as well to prevent resource leakage.

However, this approach causes a problem. Programs in an application are usually stateful and may generate important data. After a container exits and is deleted, its data is lost. This is unacceptable for the application, so important data generated by containers must be persisted. To meet this need, a container can persist data directly into a specified directory, which is referred to as a volume.

The most prominent feature of a volume is that its lifecycle is independent of the container. Container operations such as creation, running, stopping, and deletion do not affect the volume, because the volume is a special directory that helps persist the data in the container. After the volume is mounted to the container, the container writes data to the corresponding directory, and the exit of the container does not cause data loss. Generally, a volume is managed in two ways:
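The independence of the volume lifecycle can be illustrated with two plain directories. This is a toy simulation rather than a real mount: the directory prefixes and file names are hypothetical, one directory stands in for the container's own file system and the other for a volume that is managed separately.

```python
import shutil
import tempfile
from pathlib import Path

# Hypothetical stand-ins: one directory for the container's own file system,
# one for a volume whose lifecycle is managed separately.
container_fs = Path(tempfile.mkdtemp(prefix="container-"))
volume = Path(tempfile.mkdtemp(prefix="volume-"))

# The "container" writes scratch data to its own file system
# and important data to the mounted volume.
(container_fs / "scratch.txt").write_text("temporary")
(volume / "orders.db").write_text("important")

# Deleting the container removes its file system but leaves the volume alone.
shutil.rmtree(container_fs)

print(container_fs.exists())               # False
print((volume / "orders.db").read_text())  # important
```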

Moby is the most popular container management engine available today. The Moby daemon manages containers, images, networks, and volumes for the upper layers. The most important component on which the Moby daemon relies is containerd. The containerd component is a container runtime management engine that is independent of the Moby daemon. It manages containers and images for the upper layers.

Similar to a daemon process, containerd includes a containerd shim module at the underlying layer. This design is due to the following reasons:

This article provides a general introduction to Moby. For a detailed description, refer to subsequent articles in this series.

A virtual machine (VM) simulates hardware resources such as the CPU and memory by using the hypervisor-based virtualization technology, wherein a guest OS is built on the host. This process is referred to as the installation of a VM.

Each guest OS, such as Ubuntu, CentOS, or Windows, has an independent kernel. Applications running on these guest operating systems are independent of each other. Therefore, a VM achieves better isolation. However, implementing this isolation requires virtualizing part of the computing resources, which wastes existing computing resources. In addition, each guest OS consumes a large amount of disk space. For example, a Windows OS consumes 10 GB to 30 GB of disk space, and an Ubuntu OS consumes 5 GB to 6 GB. Furthermore, a VM starts up very slowly.

These weaknesses of VM technology drove the emergence of container technology. Containers are oriented to processes and therefore require no guest OS. A container needs only a separate file system that provides the required file collection. All isolation is at the process level. Therefore, a container starts up faster and requires less disk space than a VM. However, process-level isolation is not as strong as the isolation that VMs provide.

In a nutshell, both containers and VMs have pros and cons; currently, container technology is developing towards a stronger isolation capability.

This article gives a quick walkthrough of the following basic concepts of Kubernetes containers:
