By Zhimin Tang, Xiang Li, Fei Guo

Since 2015, Alibaba Cloud Container Service for Kubernetes (ACK) has been one of the fastest-growing services on Alibaba Cloud. Today, ACK serves many Alibaba Cloud customers and also supports Alibaba's internal infrastructure and many other Alibaba Cloud services.

As with the container services of other leading cloud vendors, reliability and availability are the top priorities for ACK. To achieve these goals, we built a cell-based, globally available platform that runs tens of thousands of Kubernetes clusters.

In this blog post, we will share the experience of managing a large number of Kubernetes clusters on a cloud infrastructure as well as explore the design of the underlying platform.

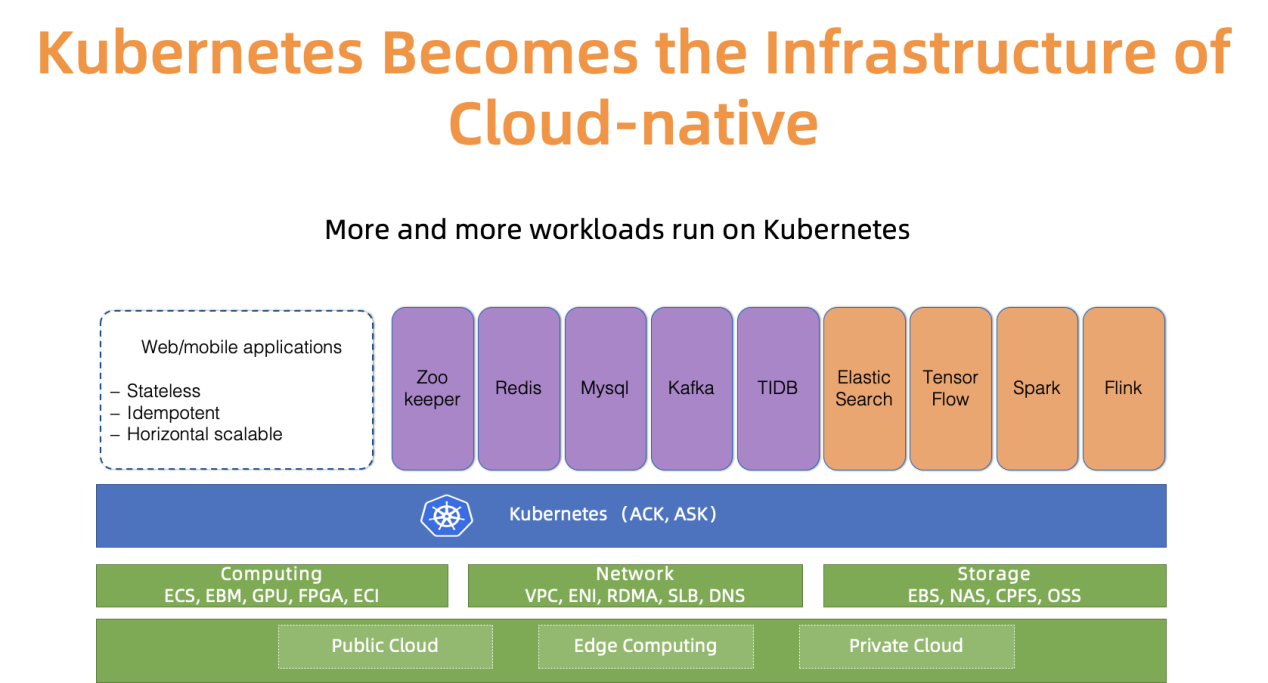

Kubernetes has become the de facto cloud native platform for running all kinds of workloads. For instance, as illustrated in Figure 1, more and more stateful and stateless applications on Alibaba Cloud, as well as application operators, now run in Kubernetes clusters. Managing Kubernetes has always been an interesting and serious topic for infrastructure engineers. When people talk about cloud providers like Alibaba Cloud, they usually have a scale problem in mind.

What does managing Kubernetes clusters at scale mean? In the past, we have presented our best practices for managing a single Kubernetes cluster with 10,000 nodes. That is certainly an interesting scaling problem, but there is another dimension of scale: the number of clusters.

Figure 1. Kubernetes ecosystem in Alibaba Cloud

We have talked to many ACK users about cluster scale. Most of them prefer to run dozens, if not hundreds, of small or medium-sized Kubernetes clusters, for good reasons: controlling the blast radius, separating clusters for different teams, and spinning up ephemeral clusters for testing. To support many customers with this usage model globally, ACK needs to manage a large number of Kubernetes clusters across more than 20 regions reliably and efficiently.

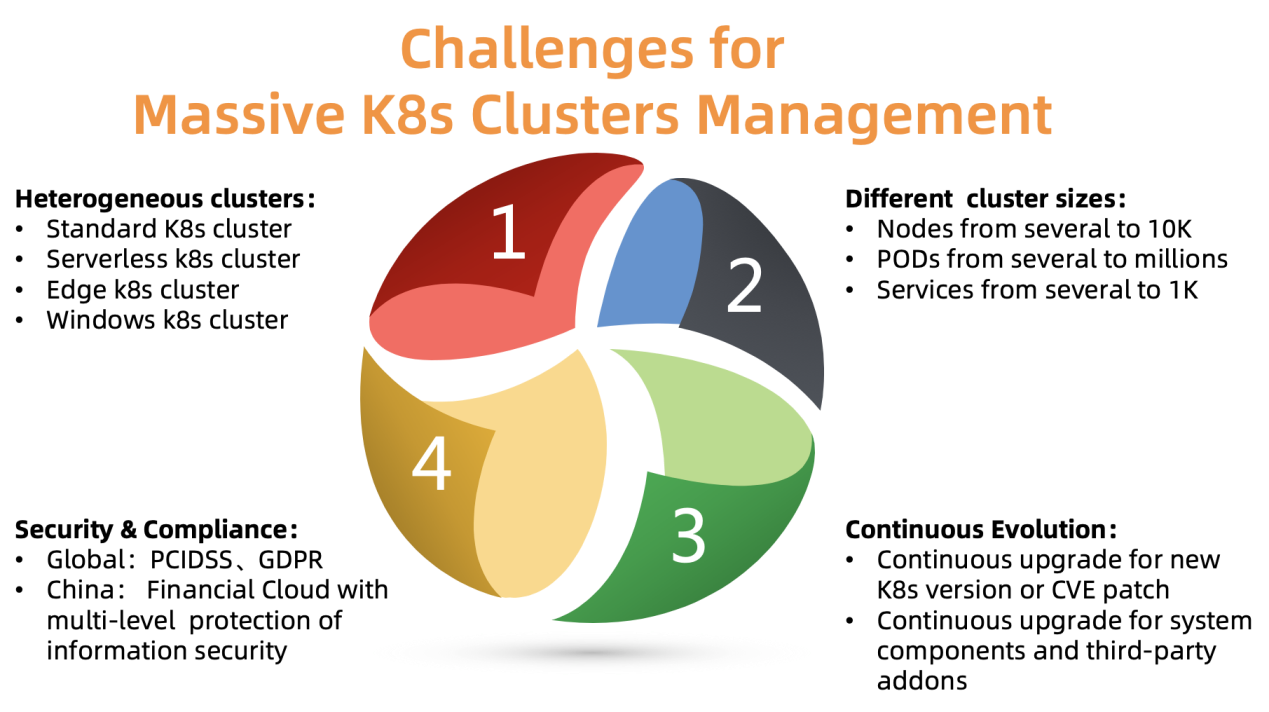

Figure 2. The challenges of managing a massive number of Kubernetes clusters

What are the major challenges of managing clusters at scale? As summarized in Figure 2, there are four major issues that we need to tackle:

1. Heterogeneous clusters

ACK needs to support different types of clusters, including standard, serverless, Edge, Windows and a few others. Different clusters require different parameters, components, and hosting models. Some of our customers need customizations to fit their use cases.

2. Different cluster sizes

Clusters vary in size, ranging from a few nodes to tens of thousands of nodes, and from several pods to thousands of pods. The resource requirements for the control planes of these clusters differ significantly. A bad resource allocation might hurt cluster performance or even cause failures.

3. Different versions

Kubernetes itself evolves very fast, with a new release every few months. Customers want to try new features safely, so they may run their test workloads against newer versions while keeping their production workloads on stable versions. To satisfy this requirement, ACK needs to continuously deliver new versions of Kubernetes to our customers while continuing to support stable versions.

4. Security compliance

The clusters are distributed across different regions, so they must meet different compliance requirements. For example, clusters in Europe need to follow the General Data Protection Regulation (GDPR) of the European Union, and the financial cloud in China needs additional levels of protection. Failing to meet these requirements is not an option, since it would introduce huge risks for our customers.

The ACK platform is designed to resolve most of the problems above, and it now manages more than 10,000 Kubernetes clusters globally in a reliable and stable fashion. Let us show how this is achieved by going through a few key ACK design principles.

Cell-based architecture, compared to a centralized architecture, is common for scaling the capacity beyond a single data center or for expanding the disaster recovery domain.

Alibaba Cloud has more than 20 regions across the world. Each region consists of multiple availability zones (AZs), and an AZ typically maps to a data center. In a large region (such as the Hangzhou region), it is quite common to have thousands of customer Kubernetes clusters managed by ACK.

ACK manages these Kubernetes clusters using Kubernetes itself, meaning that we run a meta Kubernetes cluster to manage the control planes of our customers' Kubernetes clusters. This architecture is also known as the Kube-on-Kube (KoK) architecture. KoK simplifies customer cluster management because it makes cluster rollout easy and deterministic. More importantly, we can reuse the features that native Kubernetes provides. For example, we can use Deployments to manage API servers and an etcd operator to operate multiple etcd clusters. "Dogfooding" is always fun, and it helps us improve our products and services at the same time.

Within one region, we deploy multiple meta Kubernetes clusters to support the growth of the ACK customers. We call each meta cluster a cell. To tolerate AZ failures, ACK supports multi-active deployment in one region, which means the meta cluster spreads the master components of the customer Kubernetes clusters across multiple AZs, and runs them in active-active mode. To ensure the reliability and efficiency of the master components, ACK optimizes the placement of different components to ensure API Server and etcd are deployed close to each other.
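The placement idea above can be illustrated with a minimal Python sketch. It is a toy model, not ACK's scheduler: the function names, the pod naming scheme, and the three-replica default are assumptions made for illustration. Each etcd member is paired with an API server in the same zone, and the pairs are spread round-robin across zones. The failure check relies on the general fact that etcd needs a majority of its members to stay available.

```python
from itertools import cycle


def spread_control_plane(cluster_id, zones, replicas=3):
    """Place etcd/API server pairs round-robin across zones.

    Each API server replica is put in the same zone as one etcd member,
    mirroring the "deployed close to each other" idea in the text.
    """
    zone_cycle = cycle(zones)
    placement = []
    for i in range(replicas):
        zone = next(zone_cycle)
        placement.append({"pod": f"{cluster_id}-etcd-{i}", "kind": "etcd", "zone": zone})
        placement.append({"pod": f"{cluster_id}-apiserver-{i}", "kind": "apiserver", "zone": zone})
    return placement


def survives_zone_failure(placement, failed_zone):
    """True if etcd keeps a majority and at least one API server stays up."""
    etcd = [p for p in placement if p["kind"] == "etcd"]
    alive_etcd = [p for p in etcd if p["zone"] != failed_zone]
    alive_api = [
        p for p in placement
        if p["kind"] == "apiserver" and p["zone"] != failed_zone
    ]
    return len(alive_etcd) > len(etcd) // 2 and len(alive_api) >= 1


if __name__ == "__main__":
    zones = ["az-a", "az-b", "az-c"]  # hypothetical zone names
    placement = spread_control_plane("customer-42", zones)
    for zone in zones:
        print(zone, "failure tolerated:", survives_zone_failure(placement, zone))
```

With three replicas, this toy model tolerates the loss of any single zone only when at least three zones are available; with two zones, one zone necessarily holds the etcd majority.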

This model enables us to manage Kubernetes effectively, flexibly, and reliably.

As mentioned above, the number of meta clusters in each region grows as the number of customers increases. But when should we add a new meta cluster? This is a typical capacity planning problem. In general, a new meta cluster is instantiated when the existing meta clusters run out of the required resources.

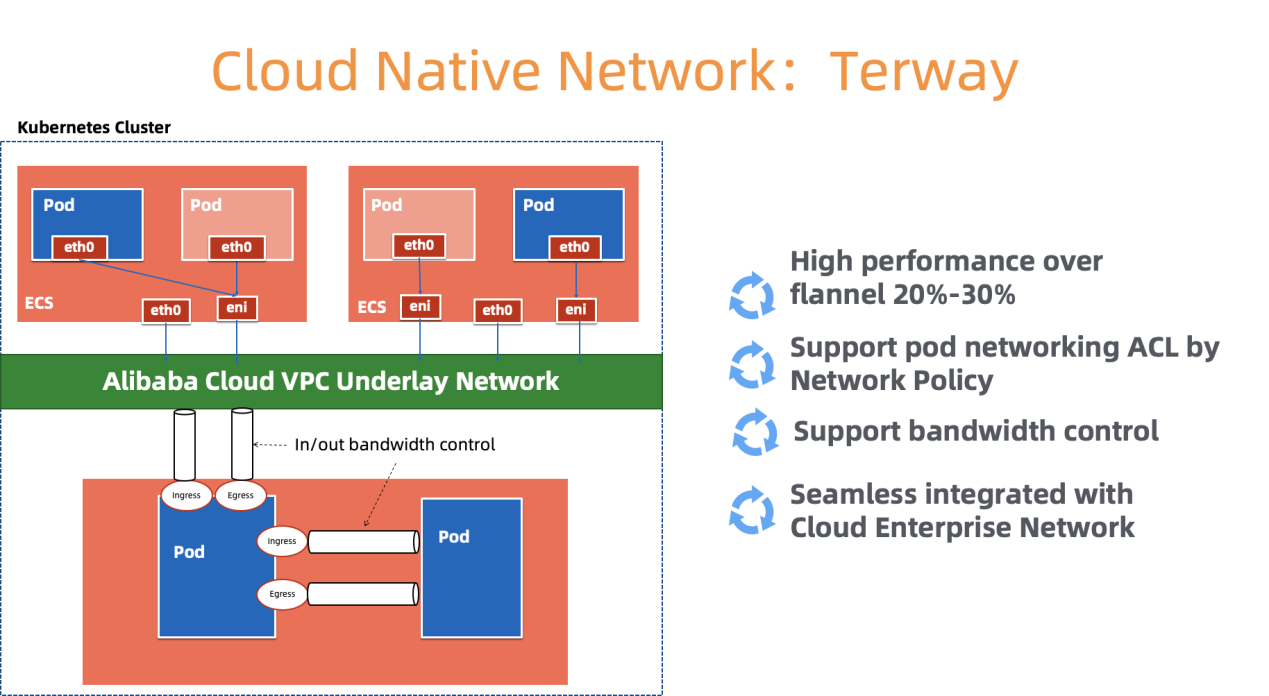

Let us take network resources as an example. In the KoK architecture, customer Kubernetes components are deployed as pods in the meta cluster. We use Terway, a high-performance container networking plugin developed by Alibaba Cloud, to manage the container network. It provides a rich set of security policies and enables us to use Alibaba Cloud Elastic Network Interfaces (ENIs) to connect to users' VPCs. To provide network resources to the nodes, pods, and services in the meta cluster efficiently, we need to allocate them carefully based on the capacity and utilization of network resources inside the meta cluster VPC. When we are about to run out of networking resources, a new cell is created.

We also consider cost factors, density requirements, resource quotas, reliability requirements, and statistical data to determine the number of customer clusters in each meta cluster and to decide when to create a new one. Note that small clusters can grow into larger ones and thus require more resources even if the number of clusters remains unchanged. We usually leave enough headroom to tolerate the growth of each cluster.
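The capacity decision described above can be sketched in a few lines of Python. This is a simplified illustration, not ACK's planner: the `CellCapacity` fields, the 30% headroom value, and the cell names are all hypothetical, and a real planner would weigh many more factors (cost, quotas, reliability). The sketch treats a cell as full once its most constrained resource leaves less than the required headroom.

```python
from dataclasses import dataclass


@dataclass
class CellCapacity:
    """Resource snapshot of one meta cluster (cell). All fields are illustrative."""
    name: str
    total_pod_ips: int
    used_pod_ips: int
    total_cpu_cores: float
    requested_cpu_cores: float

    def utilization(self) -> float:
        # The most constrained resource decides how full the cell is.
        return max(
            self.used_pod_ips / self.total_pod_ips,
            self.requested_cpu_cores / self.total_cpu_cores,
        )


def pick_cell(cells, headroom=0.3):
    """Return the least-loaded cell that still has `headroom` free, or None."""
    candidates = [c for c in cells if c.utilization() <= 1.0 - headroom]
    if not candidates:
        return None
    return min(candidates, key=lambda c: c.utilization()).name


def needs_new_cell(cells, headroom=0.3):
    """A new cell is needed when no existing cell keeps enough headroom."""
    return pick_cell(cells, headroom) is None


if __name__ == "__main__":
    cells = [
        CellCapacity("cell-1", 1000, 800, 100.0, 50.0),  # 80% of pod IPs used
        CellCapacity("cell-2", 1000, 300, 100.0, 40.0),  # 40% of CPU requested
    ]
    print("place next customer cluster in:", pick_cell(cells))
    print("create a new cell:", needs_new_cell(cells))
```

Reserving headroom rather than filling cells to capacity reflects the point that existing customer clusters keep growing even when no new clusters are added.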

Figure 3. Cloud Native network architecture of Terway

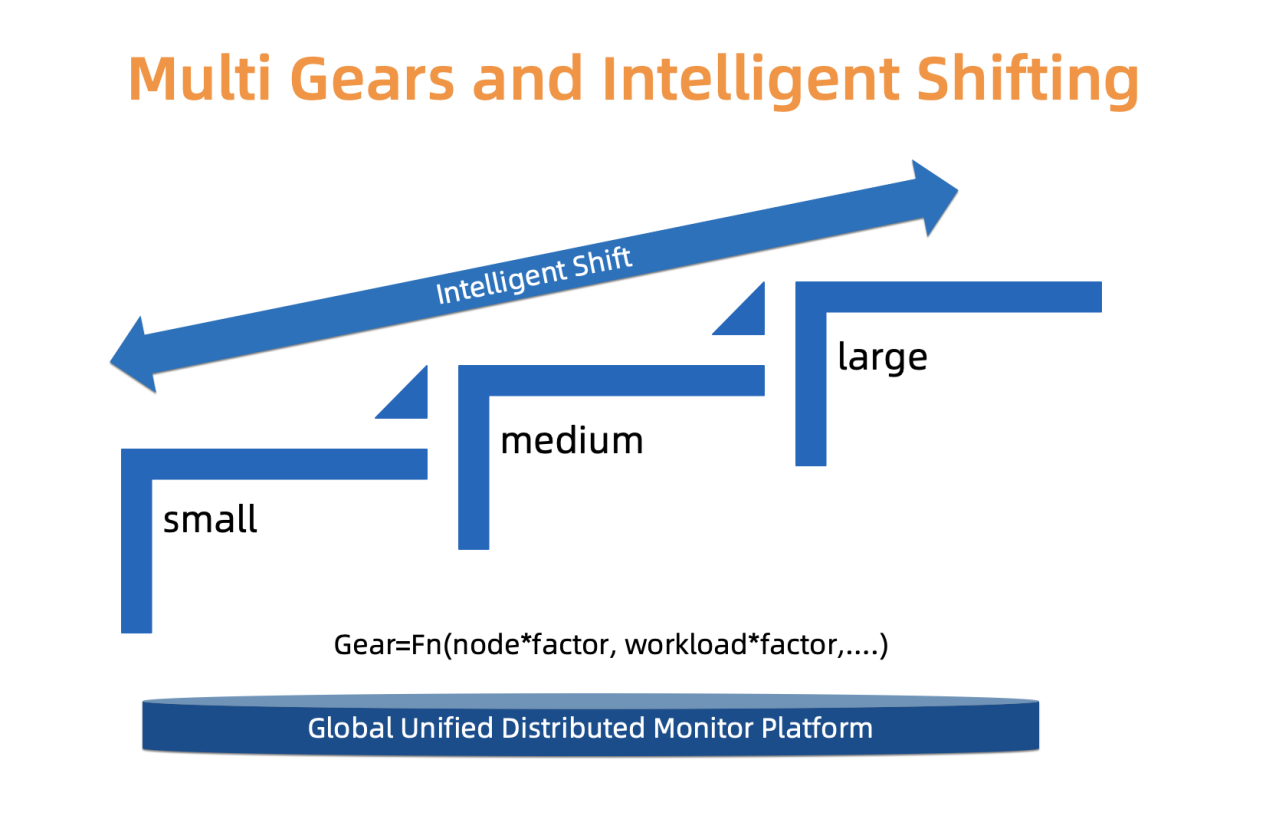

The resource requirements of the Kubernetes master components are not fixed. They depend strongly on the number of nodes and pods in the cluster, as well as on the number of custom controllers and operators that interact with the API server.

In ACK, customer Kubernetes clusters vary in size and runtime requirements, so we cannot use the same configuration to host the master components of all user clusters. If we mistakenly set a low resource request for a big customer, that customer may suffer from poor performance or even a complete outage. If we set a conservatively high resource request for all clusters, resources are wasted on small clusters.

To handle the tradeoff between reliability and cost, ACK uses a type-based approach. More specifically, we define different types of clusters: small, medium, and large. Each type has its own resource allocation profile. Each customer cluster is associated with a cluster type, which is determined by the load on the master components, the number of nodes, and some other factors. The cluster type may change over time. ACK constantly monitors the factors of interest and may promote or demote the cluster type accordingly. Once the cluster type changes, the underlying resource allocation is updated automatically with minimal interruption to the user.

We are working on both finer-grained scaling and in-place type updates to make the transition smoother and more cost-effective.
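A minimal sketch of this kind of "gear shifting" is shown below. Everything numeric in it is an assumption made for illustration: the node-count thresholds, the CPU utilization limits, and the resource profiles are hypothetical and are not ACK's actual values. The sketch promotes a cluster as soon as its size or master load calls for a bigger type, but demotes only one step at a time and only when the load is low, so the type does not flap.

```python
TYPES = ["small", "medium", "large"]

# Hypothetical resource profiles for the master components of each type.
PROFILES = {
    "small": {"apiserver_cpu": 2, "apiserver_memory_gib": 4},
    "medium": {"apiserver_cpu": 8, "apiserver_memory_gib": 16},
    "large": {"apiserver_cpu": 32, "apiserver_memory_gib": 64},
}

HOT_CPU = 0.8   # assumed: master CPU utilization above this triggers a promotion
COLD_CPU = 0.3  # assumed: demotion is allowed only below this utilization


def type_for_nodes(nodes: int) -> str:
    """Map a node count to a type using illustrative thresholds."""
    if nodes > 1000:
        return "large"
    if nodes > 100:
        return "medium"
    return "small"


def next_type(current: str, nodes: int, master_cpu_util: float) -> str:
    """Decide the cluster type for the next period."""
    wanted = TYPES.index(type_for_nodes(nodes))
    cur = TYPES.index(current)
    # Hot masters ask for one type above what the node count alone suggests.
    if master_cpu_util > HOT_CPU and wanted < len(TYPES) - 1:
        wanted += 1
    if wanted > cur:
        return TYPES[wanted]   # promote immediately
    if wanted < cur and master_cpu_util < COLD_CPU:
        return TYPES[cur - 1]  # demote cautiously, one step at a time
    return current


if __name__ == "__main__":
    new_type = next_type("small", nodes=500, master_cpu_util=0.5)
    print(new_type, PROFILES[new_type])
```

The asymmetry between promotion and demotion favors reliability over cost: an undersized control plane hurts the customer immediately, while an oversized one only wastes resources for a while.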

Figure 4. Multi gears and intelligent shifting

The previous sections described some aspects of managing a large number of Kubernetes clusters. However, there is one more challenge to solve: the evolution of the clusters.

Kubernetes is the "Linux" of the cloud native era. It keeps being updated and is becoming more and more modular. We need to continuously deliver newer versions of Kubernetes in a timely fashion, fix CVEs and upgrade existing clusters, and manage a large number of related components (CSI, CNI, Device Plugin, Scheduler Plugin, and many more) for our customers.

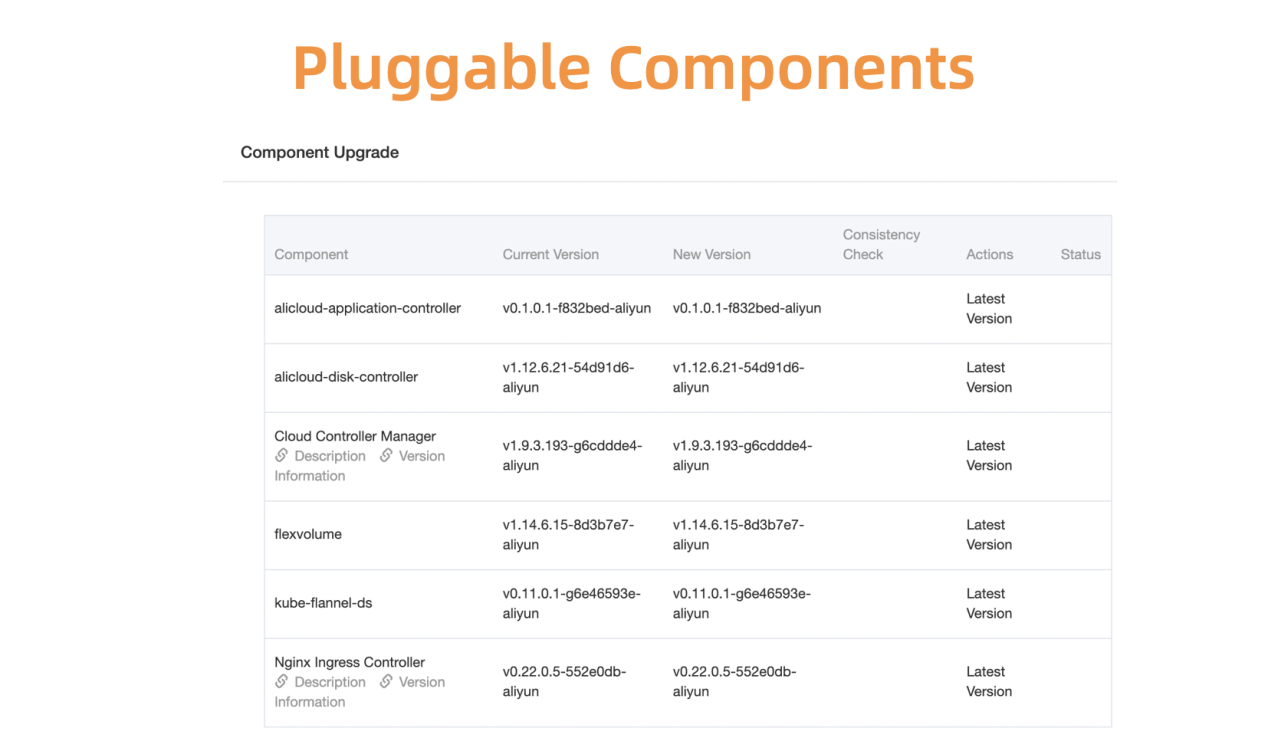

Let us take Kubernetes component management as an example. We first developed a centralized system to register and manage all these pluggable components.

Figure 5. Flexible and pluggable components

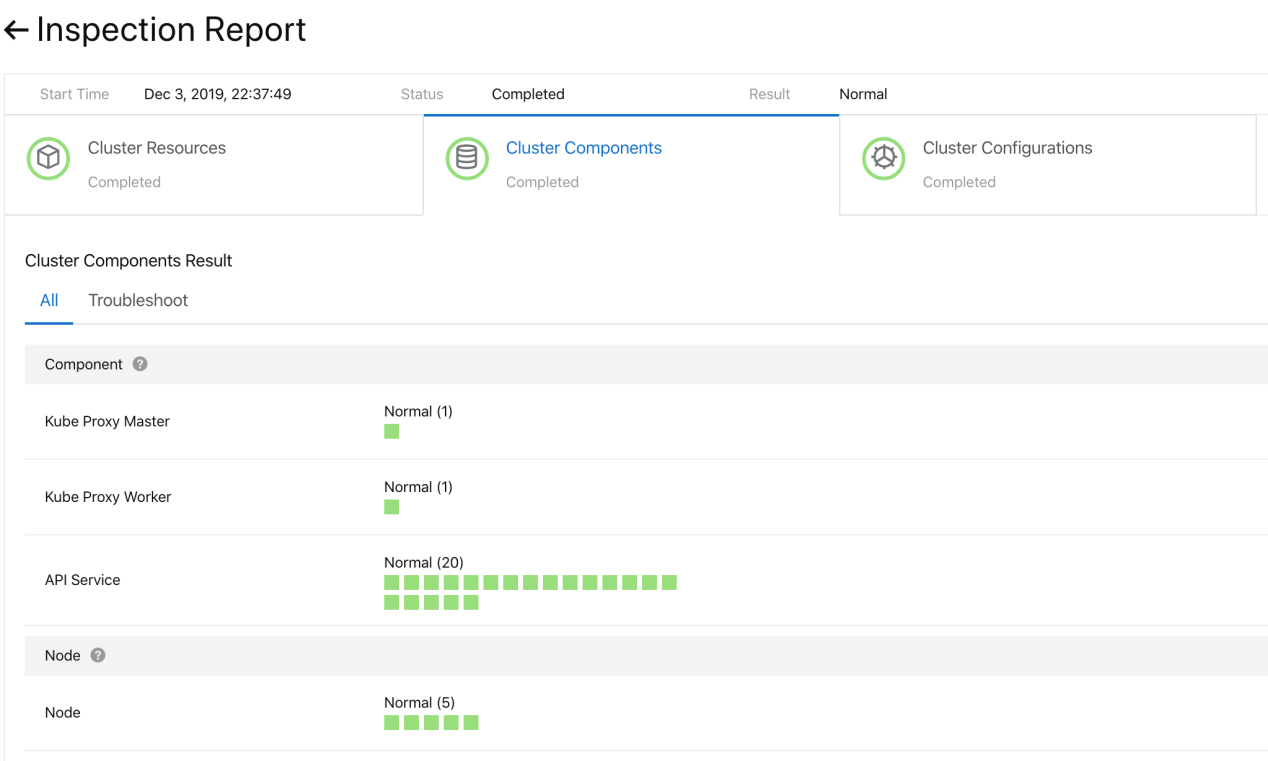

To ensure that an upgrade is successful before moving on, we developed a health checking system for the plugin components that performs both pre-upgrade and post-upgrade checks.
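The way such checks can gate a rollout is sketched below in Python. This is an illustrative model rather than ACK's health checking system: `rollout`, `RolloutResult`, and the callback signatures are hypothetical names. Clusters are upgraded in small batches; a cluster that fails its pre-upgrade check is skipped untouched, and any post-upgrade failure pauses the rollout before the next batch starts.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RolloutResult:
    upgraded: List[str] = field(default_factory=list)
    skipped: List[str] = field(default_factory=list)  # failed the pre-upgrade check
    failed: List[str] = field(default_factory=list)   # failed the post-upgrade check
    paused: bool = False


def rollout(
    clusters: List[str],
    batch_size: int,
    precheck: Callable[[str], bool],
    upgrade: Callable[[str], None],
    postcheck: Callable[[str], bool],
) -> RolloutResult:
    """Upgrade clusters batch by batch, gated by pre- and post-upgrade checks."""
    result = RolloutResult()
    for start in range(0, len(clusters), batch_size):
        batch = clusters[start:start + batch_size]
        ready = [c for c in batch if precheck(c)]
        result.skipped += [c for c in batch if c not in ready]
        for cluster in ready:
            upgrade(cluster)
        failed = [c for c in ready if not postcheck(c)]
        result.upgraded += [c for c in ready if c not in failed]
        if failed:
            # Stop here so that a bad release does not reach the remaining batches.
            result.failed = failed
            result.paused = True
            return result
    return result


if __name__ == "__main__":
    versions = {f"c{i}": "v1" for i in range(1, 6)}
    result = rollout(
        clusters=list(versions),
        batch_size=2,
        precheck=lambda c: c != "c2",   # pretend c2 is unhealthy before the upgrade
        upgrade=lambda c: versions.update({c: "v2"}),
        postcheck=lambda c: c != "c3",  # pretend c3 breaks after the upgrade
    )
    print(result)
    print(versions)
```

In this run the rollout pauses after the second batch, so the last cluster stays on its old version until an operator investigates the failure.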

Figure 6. Precheck for cluster components

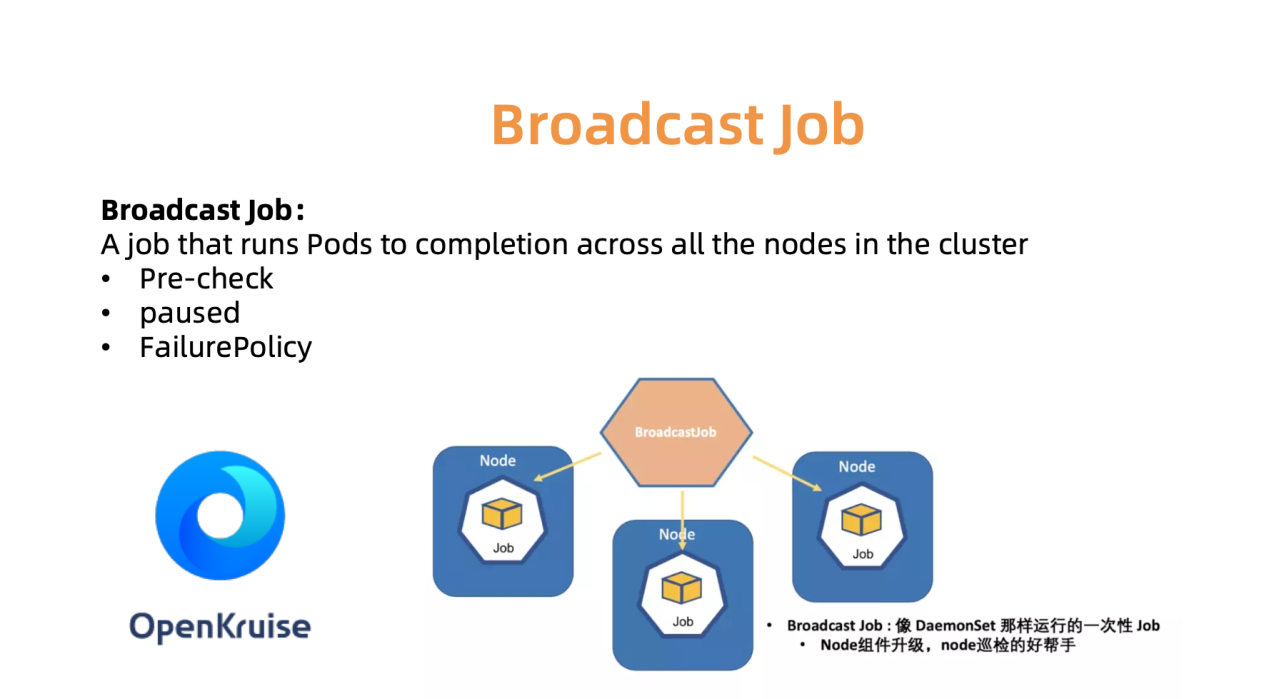

To upgrade these components fast and reliably, we support continuous deployment with grayscale, pausing and other functions. The default Kubernetes controllers do not serve us well enough for this use case. Thus, we developed a set of custom controllers for cluster components management, including both plugin and sidecar management.

For example, the BroadcastJob controller is designed for upgrading components or inspecting nodes on each worker machine. Like a DaemonSet, a BroadcastJob runs a pod on each node in the cluster. However, a DaemonSet keeps a long-running pod on each node, whereas the pods of a BroadcastJob run to completion. The BroadcastJob controller also launches pods on newly joined nodes to initialize them with the required plugin components. In June 2019, we open sourced OpenKruise, the cloud native application automation engine that we use internally.

Figure 7. OpenKruise orchestrates a BroadcastJob on each node for flexible work
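The difference from a DaemonSet can be made concrete with a toy Python model of the run-once-per-node behavior. This is not OpenKruise code and does not use its API: `ToyBroadcastJob` and its `reconcile` method are hypothetical names that only mimic the semantics described above. The task runs to completion exactly once on every node, and nodes that join later are picked up on the next reconcile.

```python
from typing import Callable, Dict, List


class ToyBroadcastJob:
    """Toy model: run a task to completion once on every node."""

    def __init__(self, task: Callable[[str], str]):
        self.task = task
        self.completed: Dict[str, str] = {}  # node name -> task result

    def reconcile(self, nodes: List[str]) -> List[str]:
        """Run the task on nodes that have not completed it yet.

        Returns the nodes on which the task was started in this round.
        """
        started = []
        for node in nodes:
            if node not in self.completed:
                # Unlike a DaemonSet pod, the task ends once it has done its work.
                self.completed[node] = self.task(node)
                started.append(node)
        return started


if __name__ == "__main__":
    job = ToyBroadcastJob(task=lambda node: f"plugin installed on {node}")
    print(job.reconcile(["node-1", "node-2"]))            # first round: both nodes
    print(job.reconcile(["node-1", "node-2"]))            # nothing left to do
    print(job.reconcile(["node-1", "node-2", "node-3"]))  # only the new node
```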

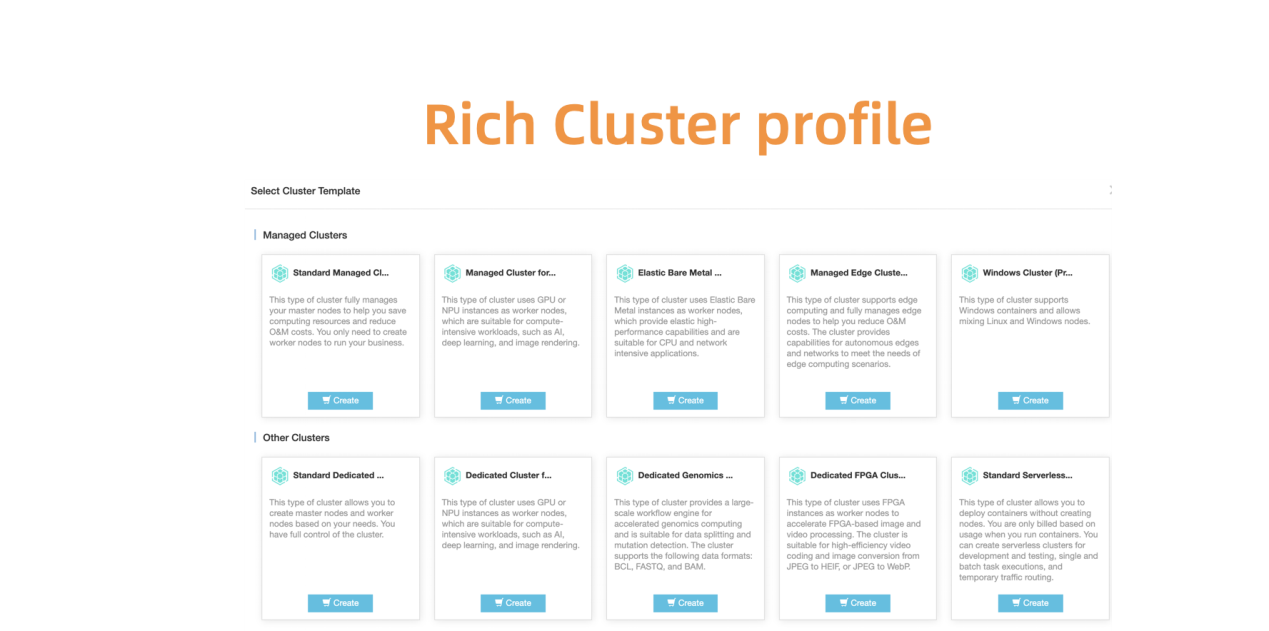

To help our customers choose the right cluster configuration, we also provide a set of predefined cluster profiles, including Serverless, Edge, Windows, and Bare Metal setups. As the landscape expands and the needs of our customers grow, we will add more profiles to simplify the tedious configuration process.

Figure 8. Rich and flexible cluster profiles for various scenarios

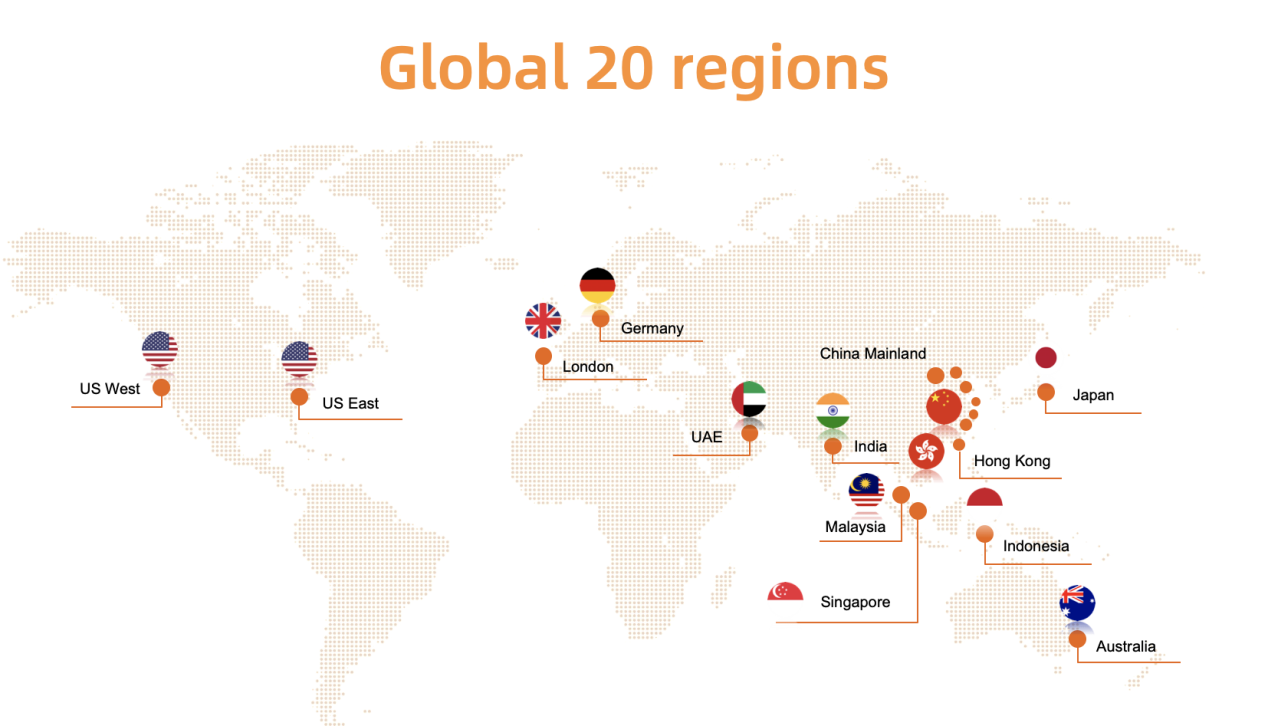

As presented in Figure 9, Alibaba Cloud Container Service has been deployed in 20 regions around the world. At this scale, one key challenge for ACK is to observe the status of running clusters easily, so that we can react promptly when a customer cluster runs into trouble. In other words, we need a solution that efficiently and safely collects real-time statistics from the customer clusters in all regions and presents the results visually.

Figure 9. Global deployment in 20 regions for Alibaba Cloud Container Service

Like many other Kubernetes monitoring solutions, we use Prometheus as the primary monitoring tool. For each meta cluster, the Prometheus agents collect the following metrics:

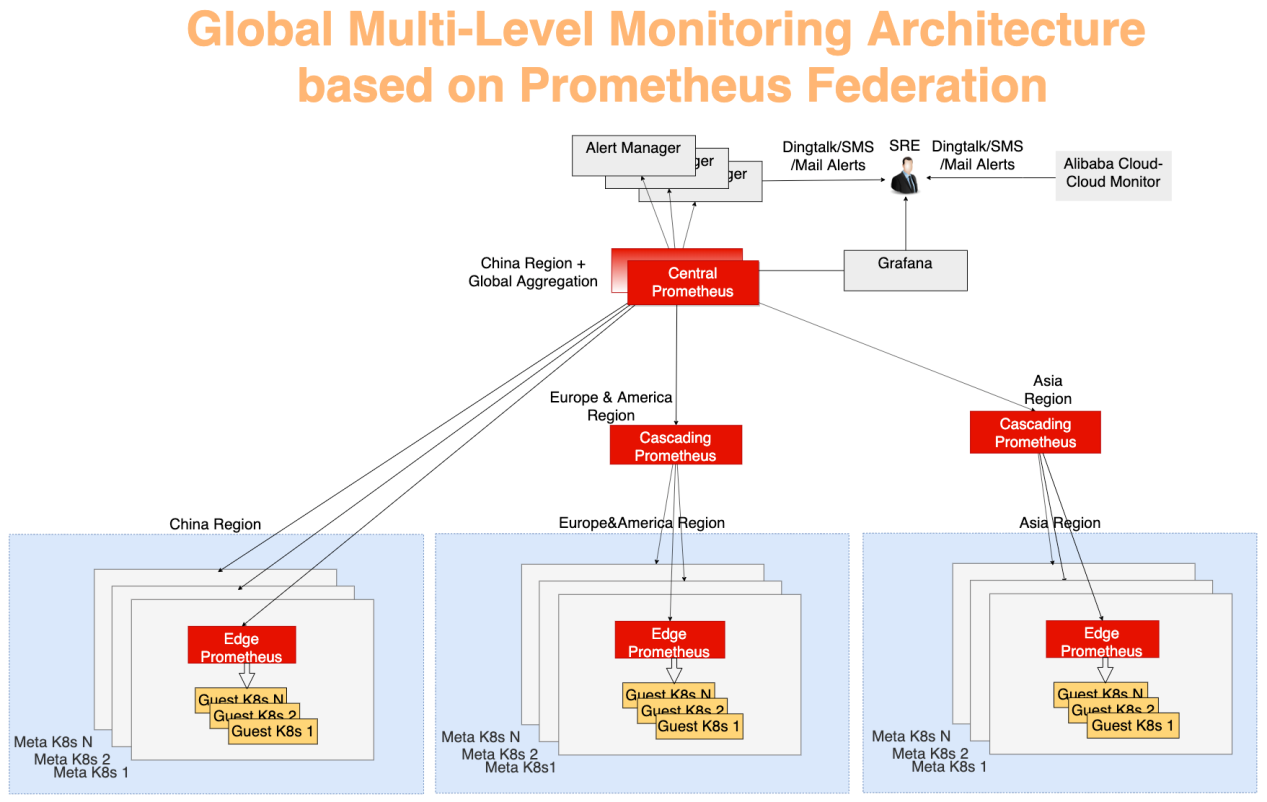

The global stats collection uses a typical multi-layer aggregation model. The monitoring data from each meta cluster is first aggregated in each region and then aggregated again on a centralized server, which provides the global view. To realize this idea, we use Prometheus federation. Each data center has a Prometheus server that collects the data center's metrics, and the central Prometheus is responsible for aggregating the monitoring data. An AlertManager connects to the central Prometheus and sends alert notifications through channels such as DingTalk, email, and SMS. Visualization is done with Grafana.

As shown in Figure 10, the monitoring system can be divided into three layers:

1. Edge Prometheus

This layer is furthest away from the central Prometheus. An edge Prometheus server in each meta cluster collects the metrics of the meta cluster and the customer clusters within the same network domain.

2. Cascading Prometheus

A cascading Prometheus server collects monitoring data from multiple regions. One exists in each larger geographic area, such as China, Asia, or Europe and America. As the number of clusters in an area grows, the area can be split into several new areas, each with its own cascading Prometheus server. This strategy lets the monitoring system expand and evolve flexibly.

3. Central Prometheus

The central Prometheus connects to all cascading Prometheus servers and performs the final data aggregation. To improve reliability, two central Prometheus instances are deployed in different AZs and connect to the same cascading Prometheus servers.

Figure 10. Global multi-layer monitoring architecture based on Prometheus federation
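The three-layer aggregation can be illustrated with a small Python sketch. It only models the data flow and is not how the data is actually moved: in a real deployment, Prometheus federation does this work, with each higher-level server scraping selected series from the `/federate` endpoint of the servers below it. The sample values, the `unhealthy_clusters` metric, and the area and cell names are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List

# Samples as an edge Prometheus in each meta cluster might report them (made-up data).
EDGE_SAMPLES = [
    {"area": "china", "region": "cn-hangzhou", "cell": "meta-1", "unhealthy_clusters": 2},
    {"area": "china", "region": "cn-hangzhou", "cell": "meta-2", "unhealthy_clusters": 0},
    {"area": "china", "region": "cn-beijing", "cell": "meta-1", "unhealthy_clusters": 1},
    {"area": "europe-america", "region": "eu-central-1", "cell": "meta-1", "unhealthy_clusters": 3},
]


def cascade_view(edge_samples: List[dict]) -> Dict[str, Dict[str, int]]:
    """Layer 2: each cascading server sums the edge data of its area per region."""
    view: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for sample in edge_samples:
        view[sample["area"]][sample["region"]] += sample["unhealthy_clusters"]
    return {area: dict(regions) for area, regions in view.items()}


def central_view(cascade: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Layer 3: the central server reduces every area to one number plus a global total."""
    totals = {area: sum(regions.values()) for area, regions in cascade.items()}
    totals["global"] = sum(totals.values())
    return totals


if __name__ == "__main__":
    cascade = cascade_view(EDGE_SAMPLES)
    print(cascade)
    print(central_view(cascade))
```

Because each layer forwards only aggregated series, the amount of data that reaches the central server grows with the number of areas and regions rather than with the number of customer clusters.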

With the development of cloud computing, Kubernetes-based cloud native technologies continue to promote the digital transformation of the industry. Alibaba Cloud Container Service for Kubernetes provides secure, stable, and high-performance Kubernetes hosting services, making it one of the best platforms for running Kubernetes on the cloud. The team behind Alibaba Cloud strongly believes in open source and the open community. We will continue to share our insights into operating and managing cloud native technologies.
