
By Zhang Yong (Cangmo), Technical Expert at Ant Financial

With its advanced design concept and excellent technical architecture, Kubernetes ranks high in the container orchestration field, and a growing number of companies are adopting it in production environments. Alibaba and Ant Financial, in particular, have deployed Kubernetes widely in production. Kubernetes greatly simplifies the deployment of containerized applications and makes it easier for developers to operate and maintain complex distributed systems. However, it is still difficult to maintain and manage production-grade, highly available Kubernetes clusters. This article introduces how Ant Financial efficiently and reliably manages large-scale Kubernetes clusters, and details the design of the core components of its cluster management system.

The Kubernetes cluster management system must make cluster lifecycle management convenient, so that you can easily create and upgrade Kubernetes clusters and manage the work nodes in them. In large-scale scenarios, the controllability of cluster changes directly affects cluster stability. Therefore, the management system must be designed to support monitoring, canary releases, and rollback. In addition, node hardware faults and component exceptions occur frequently in ultra-large clusters with tens of thousands of nodes. A large-scale cluster management system must take these exception scenarios into account so that it can recover from them automatically.

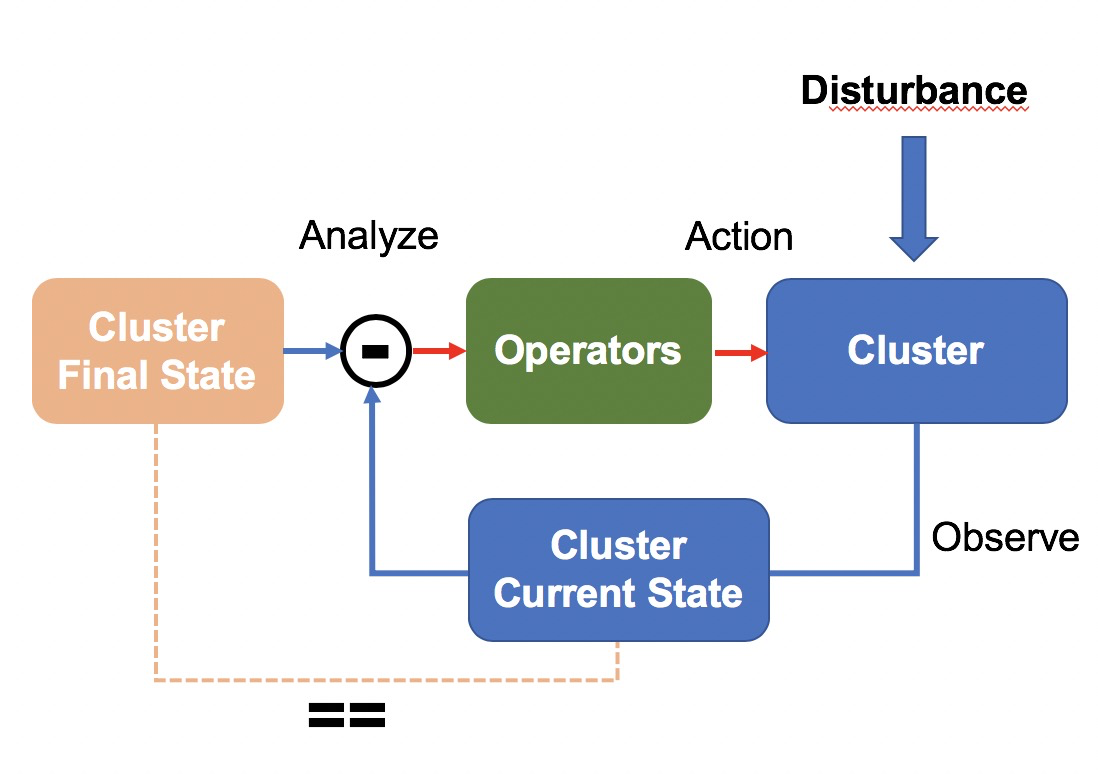

Against this background, we designed a cluster management system that is oriented to final states. The system regularly checks whether the current state of a cluster is consistent with its target state. If the two are inconsistent, operators initiate a series of operations to drive the cluster to the target state. This design borrows from the negative-feedback closed-loop control systems common in control theory. Because the system forms a closed loop, it can effectively withstand external disturbances. In our scenarios, a disturbance refers to a software or hardware fault on a node.
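The closed loop can be illustrated with a toy reconciliation loop. The sketch below is not Ant Financial's code: it assumes a hypothetical state model in which the target and observed states are both maps from component name to version, and `apply` stands in for whatever O&M action an operator would take. Each pass measures the deviation between the two states and acts only on that deviation, which is what lets the loop absorb disturbances such as a component that crashes after the cluster has converged.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical action hook: set component `name` to `version` on the real system.
ApplyFn = Callable[[str, str], None]


@dataclass
class ManagedObject:
    """Toy model: target (final) state vs. observed state, component -> version."""
    desired: Dict[str, str]
    actual: Dict[str, str] = field(default_factory=dict)


def drift(obj: ManagedObject) -> List[str]:
    """The 'error signal': components whose observed version differs from the target."""
    return sorted(k for k, v in obj.desired.items() if obj.actual.get(k) != v)


def reconcile_once(obj: ManagedObject, apply: ApplyFn) -> List[str]:
    """One pass of the feedback loop: observe, compare, correct only the deviation."""
    deviating = drift(obj)
    for name in deviating:
        apply(name, obj.desired[name])
    return deviating


def run_until_converged(obj: ManagedObject, apply: ApplyFn, max_rounds: int = 10) -> int:
    """Repeat passes until a pass finds no drift; returns the number of passes used."""
    for rounds in range(1, max_rounds + 1):
        if not reconcile_once(obj, apply):
            return rounds
    return max_rounds
```

In a real operator the loop is triggered by watch events and periodic resyncs rather than a `for` loop, and `apply` may fail, which is why each pass re-measures the drift instead of assuming the previous action worked.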

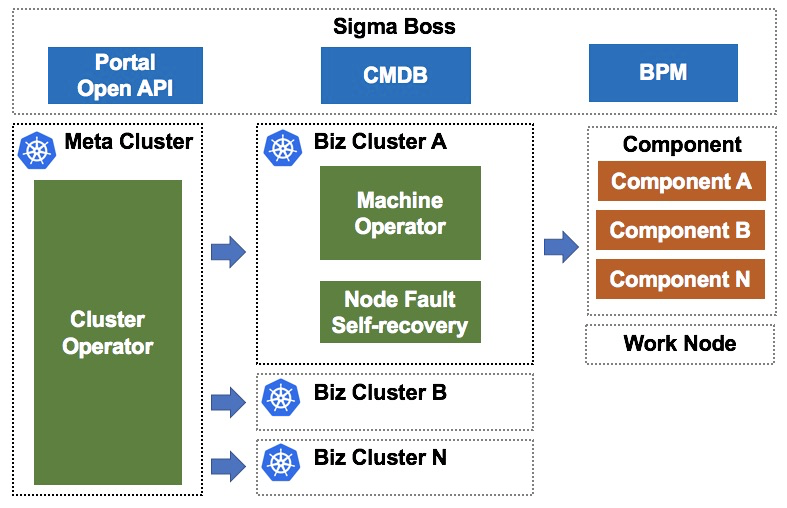

As shown in the preceding figure, a meta cluster is a high-availability Kubernetes cluster that manages master nodes in N business clusters. A business cluster is a Kubernetes cluster that serves production. Sigma Boss is a portal for cluster management, which provides a convenient interface for interacting with users and a controllable change process.

A cluster operator deployed in the meta cluster provides the capabilities of creating, deleting, and upgrading business clusters. The cluster operator is oriented to final states. When the master node or a component in a business cluster is abnormal, the cluster operator automatically isolates and recovers the abnormal master node or component, to ensure that the master node of the business cluster reaches the stable final state. This solution uses Kubernetes to manage Kubernetes, which is referred to as Kube on Kube (KOK).

In addition, a machine operator and a node fault self-recovery component are deployed in each business cluster to manage its work nodes. You can use these components to add, delete, and upgrade work nodes and to handle node faults. Building on the machine operator's ability to maintain the final state of a single node, Sigma Boss supports canary releases and rollback of clusters.

Based on Kubernetes custom resource definitions (CRDs), we defined a cluster CRD in the meta cluster to describe the final states of business clusters. Each business cluster maps to one cluster resource: creating, deleting, or updating a cluster resource creates, deletes, or upgrades the corresponding business cluster. The cluster operator watches cluster resources and drives the master components of each business cluster to the final state defined in its cluster resource.

The versions of the master components in a business cluster are centrally maintained in a ClusterPackageVersion CRD. A ClusterPackageVersion resource records information such as the images and default startup parameters of the master components, including the API server, controller manager, scheduler, and operators. Each cluster resource is associated with exactly one ClusterPackageVersion. You can release or roll back the master components of a business cluster by changing the ClusterPackageVersion referenced in its cluster resource.
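Because a cluster resource only references a ClusterPackageVersion by name, releasing and rolling back are the same operation: repointing that reference. The following minimal sketch models this with plain Python data classes; the field names (`images`, `default_args`, `package_version`) and the version names are hypothetical and do not reflect the actual CRD schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ClusterPackageVersion:
    """Hypothetical shape: image and default flags per master component."""
    name: str
    images: Dict[str, str]
    default_args: Dict[str, List[str]]


@dataclass
class Cluster:
    """One business cluster; it points at exactly one package version by name."""
    name: str
    package_version: str


def set_package_version(cluster: Cluster, target: str,
                        catalog: Dict[str, ClusterPackageVersion]) -> str:
    """Release (or roll back) by repointing the cluster; returns the previous name."""
    if target not in catalog:
        raise KeyError(f"unknown ClusterPackageVersion {target!r}")
    previous, cluster.package_version = cluster.package_version, target
    return previous


def desired_master(cluster: Cluster,
                   catalog: Dict[str, ClusterPackageVersion]) -> Dict[str, str]:
    """The master component images a cluster operator would converge to."""
    return dict(catalog[cluster.package_version].images)
```

A rollback is simply `set_package_version(cluster, previous, catalog)` with the name returned by the release call; the cluster operator then reconciles the master components toward the images of the restored version.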

To manage work nodes in a Kubernetes cluster, you need to:

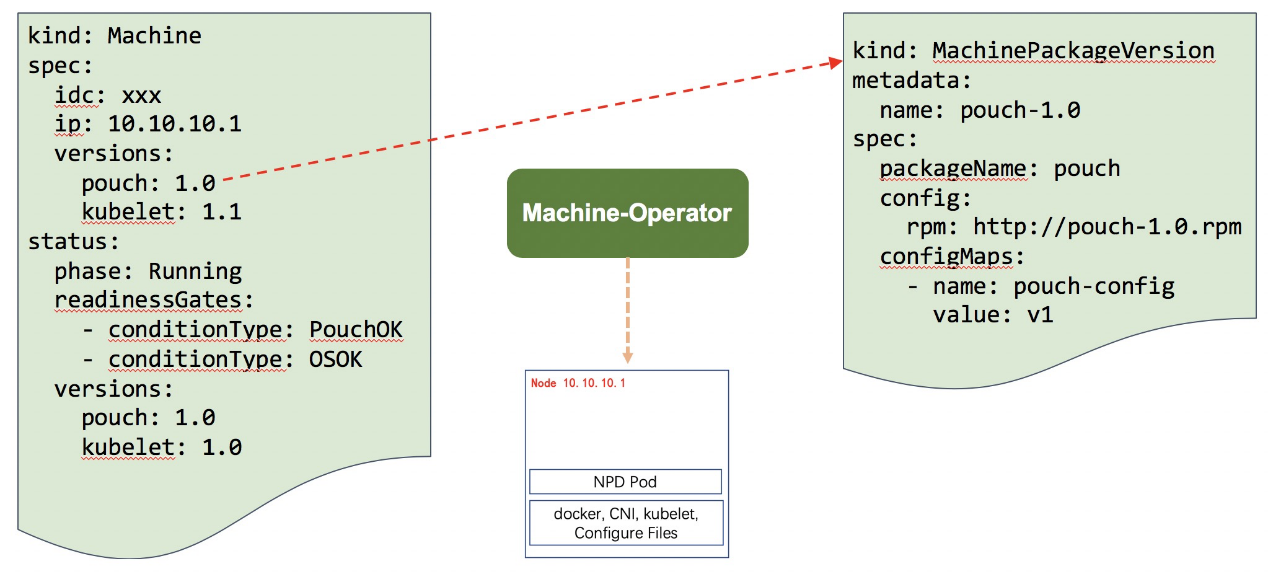

To complete the preceding management jobs, we defined a machine CRD in a business cluster to describe the final states of work nodes. Each work node is mapped to one machine resource. You can manage the work node by modifying the machine resource.

The following figure shows a machine CRD: spec describes the names and versions of the components to be installed on a node, and status records the installation and running status of each component on the current work node. The machine CRD also supports pluggable final-state management, which lets it work with other node O&M operators. This part is described in detail later.

The versions of the components on a work node are managed by the MachinePackageVersion CRD. A MachinePackageVersion maintains information such as the Red Hat Package Manager (RPM) version, configuration, and installation method of each component. One machine resource is associated with N MachinePackageVersions so that multiple components can be installed.

Based on machine resources and the MachinePackageVersion CRD, we designed and implemented a machine operator, which is a node final-state controller. The machine operator watches machine resources, parses their MachinePackageVersions, performs O&M operations on a node to drive it to the final state, and continuously maintains that state.
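The core of such a node final-state controller is a diff between the components declared in spec and those recorded in status. The sketch below assumes a simplified machine resource in which spec is a list of MachinePackageVersions and status maps each component to its installed RPM version; `run_step` is a hypothetical hook that would perform the actual installation or upgrade on the node.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (action, component, version)


@dataclass(frozen=True)
class MachinePackageVersion:
    """Hypothetical fields: component name, RPM version, installation method."""
    component: str
    rpm_version: str
    install_method: str = "rpm"


@dataclass
class Machine:
    spec: List[MachinePackageVersion]                     # desired components
    status: Dict[str, str] = field(default_factory=dict)  # component -> installed version


def plan(machine: Machine) -> List[Step]:
    """Return the steps needed to close the gap between spec and status."""
    steps: List[Step] = []
    for pkg in machine.spec:
        installed = machine.status.get(pkg.component)
        if installed is None:
            steps.append(("install", pkg.component, pkg.rpm_version))
        elif installed != pkg.rpm_version:
            steps.append(("upgrade", pkg.component, pkg.rpm_version))
    return steps


def reconcile(machine: Machine, run_step: Callable[[str, str, str], bool]) -> List[Step]:
    """Execute each planned step; record status only for steps that succeed."""
    steps = plan(machine)
    for action, component, version in steps:
        if run_step(action, component, version):
            machine.status[component] = version
    return steps
```

Recording status only after a step succeeds means a failed installation simply shows up as drift again on the next pass, so the operator keeps retrying until the node reaches its final state.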

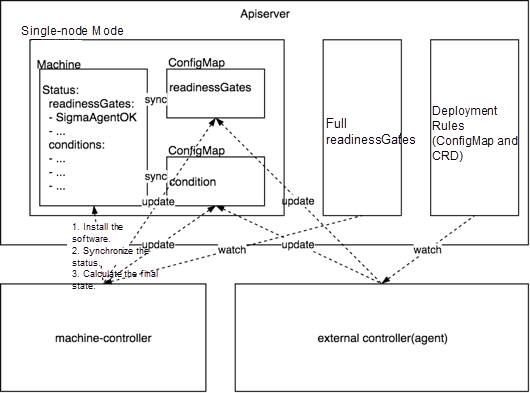

As business requirements keep changing, node management is no longer limited to installing components such as Docker and Kubelet. We also need to meet requirements such as enabling scheduling on a node only after a log collection DaemonSet has been deployed on it, and requirements of this kind keep growing. If the machine operator managed all of these final states, its coupling with other components would increase and the extensibility of the system would decrease. Therefore, we designed a mechanism for managing node final states that coordinates the machine operator with other node O&M operators. The following figure shows the design.
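One way to picture this decoupling is a set of readiness gates: the machine operator reports whether its own components are ready, each other O&M operator reports its own condition, and scheduling is enabled only when all of them agree. This is a minimal sketch under that assumption; the gate name `log-agent` and the `NodeReadiness` type are hypothetical, and the actual mechanism may differ.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class NodeReadiness:
    """Hypothetical per-node record shared by the machine operator and plug-in operators."""
    required_gates: Set[str]                                    # gates registered for this node
    gate_status: Dict[str, bool] = field(default_factory=dict)  # gate -> satisfied?
    components_ready: bool = False                              # owned by the machine operator

    def report(self, gate: str, satisfied: bool) -> None:
        """Called by another O&M operator, e.g. one watching a log collection DaemonSet."""
        self.gate_status[gate] = satisfied

    def pending(self) -> List[str]:
        """Gates that have not yet reported success."""
        return sorted(g for g in self.required_gates if not self.gate_status.get(g, False))

    def schedulable(self) -> bool:
        """Enable scheduling only when the machine operator and every plug-in agree."""
        return self.components_ready and not self.pending()
```

With this shape, adding a new requirement means registering another gate and writing the operator that reports it, without changing the machine operator itself.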

The collaborative relationship between the machine operator and other node O&M operators is as follows:

Physical machine hardware fails with a certain probability. As the number of nodes in a cluster grows, faulty nodes become common. If faulty nodes are not recovered in time, the resources on these physical machines are left idle.

To solve this problem, we designed a closed-loop self-recovery system for discovering, isolating, and rectifying faults.

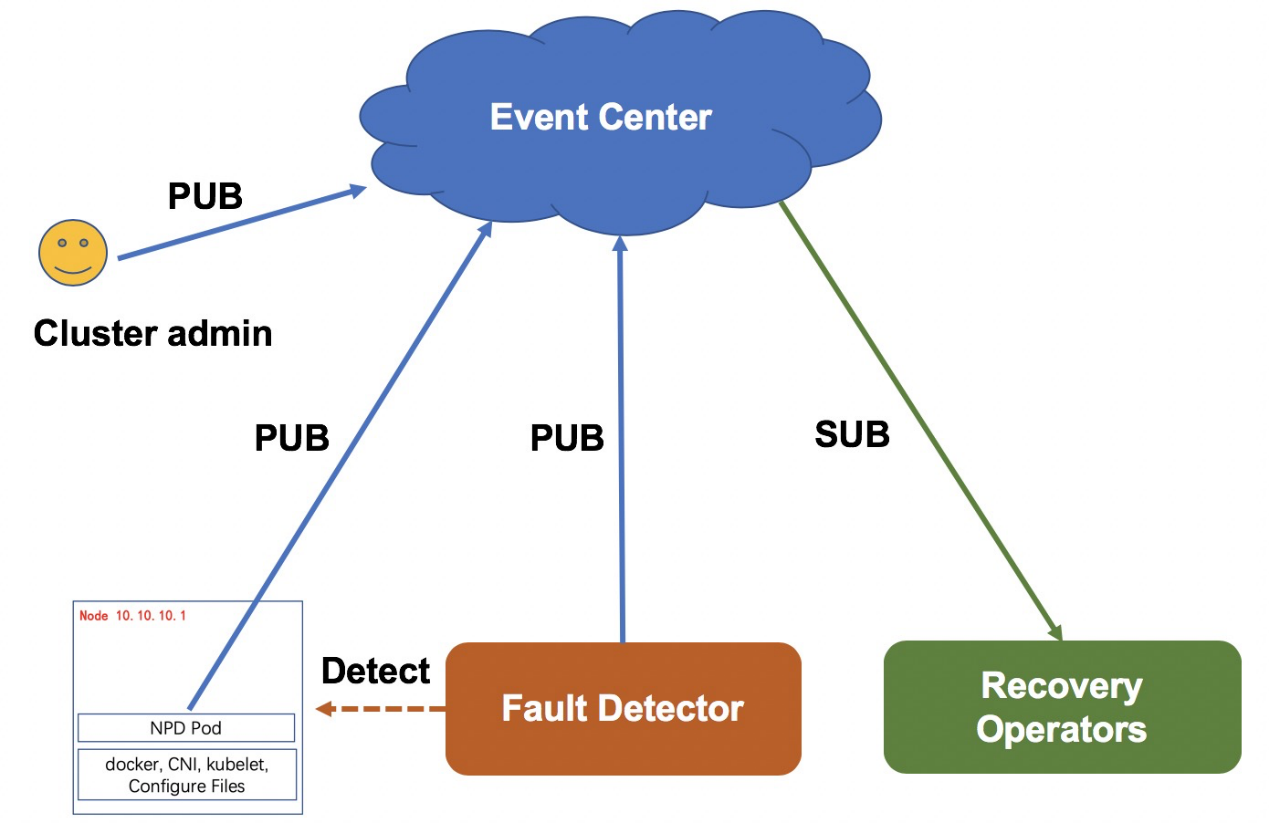

As shown in the following figure, faults are discovered in two ways: reports from an agent and automatic detection by the fault detector. Together they make fault discovery both timely and reliable: agent reports arrive faster, while the fault detector covers exceptions that the agent does not report. All fault information is stored in the event center, and components or systems that may be affected by cluster faults can obtain fault information by subscribing to events in the event center.
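The event center decouples fault producers from fault consumers. The sketch below is a minimal in-memory publish/subscribe model under that assumption: both the agent and the fault detector publish `FaultEvent` records, every event is retained, and subscribers register by fault kind (or `"*"` for all kinds). The event fields and fault kinds are illustrative only.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List

Handler = Callable[["FaultEvent"], None]


@dataclass(frozen=True)
class FaultEvent:
    node: str
    kind: str    # illustrative values, e.g. "disk" or "kubelet"
    source: str  # "agent" (fast path) or "detector" (catches unreported faults)


class EventCenter:
    """Stores every fault event and fans it out to subscribers by kind."""

    def __init__(self) -> None:
        self.log: List[FaultEvent] = []
        self.subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, kind: str, handler: Handler) -> None:
        """Register interest in one fault kind, or "*" for every kind."""
        self.subscribers[kind].append(handler)

    def publish(self, event: FaultEvent) -> None:
        """Persist the event, then notify kind-specific and wildcard subscribers."""
        self.log.append(event)
        for handler in self.subscribers[event.kind] + self.subscribers["*"]:
            handler(event)
```

Because both sources write to the same center, a consumer such as the self-recovery system does not need to know whether a fault was reported by the agent or found by the detector.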

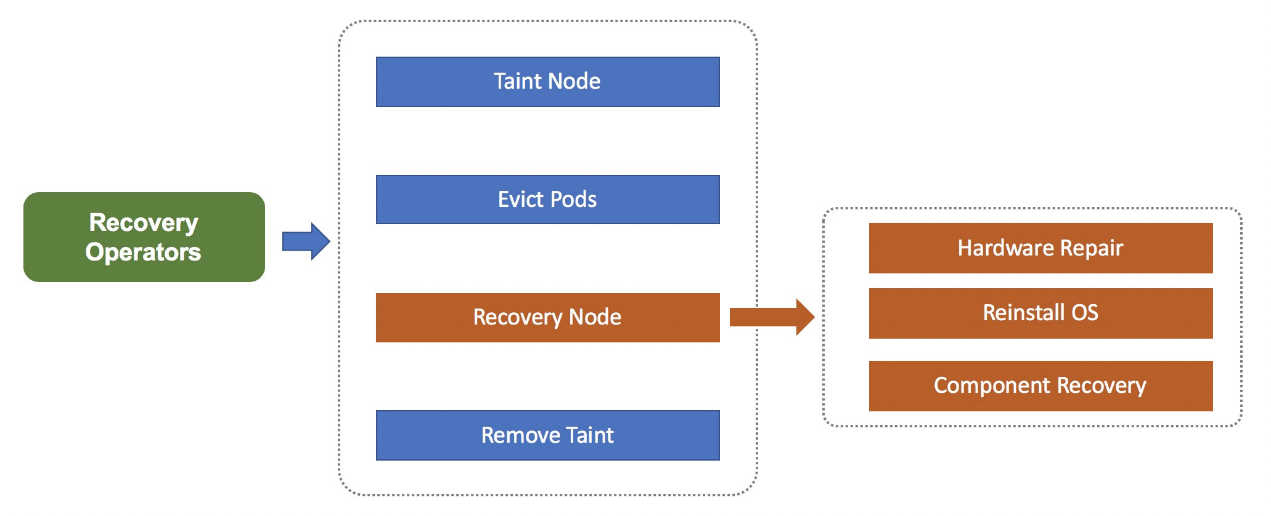

The node fault self-recovery system creates different maintenance processes for different fault types, such as a hardware maintenance process and a system re-installation process. In a maintenance process, the system first isolates the faulty node by disabling scheduling on it, and then labels the pods on the node as "to be migrated" to notify the Platform as a Service (PaaS) or MigrateController to migrate them. After these preparations are complete, the system attempts to recover the node, for example, by repairing the hardware or re-installing the operating system. If the node is recovered, the system re-enables scheduling on it. If the system cannot automatically recover a node for a long time, the node can be recovered manually.
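The maintenance process described above can be sketched as a short sequence of steps. In this sketch the label key `example.com/to-be-migrated`, the fault-type-to-action table, and the `recover` callback are hypothetical; the retry limit stands in for the unspecified "long time" after which a node is handed over for manual recovery, and pod migration by the PaaS or MigrateController is assumed to happen outside the function.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

MIGRATE_LABEL = "example.com/to-be-migrated"  # hypothetical label key

# Hypothetical mapping from fault type to recovery action.
RECOVERY_ACTIONS: Dict[str, str] = {
    "hardware": "repair-hardware",
    "system": "reinstall-os",
}


@dataclass
class Node:
    name: str
    schedulable: bool = True
    pods: Dict[str, Dict[str, str]] = field(default_factory=dict)  # pod name -> labels


def run_maintenance(node: Node, fault_type: str,
                    recover: Callable[[str, str], bool],
                    max_attempts: int = 3) -> List[str]:
    """Isolate, mark pods for migration, try to recover, then reopen or escalate."""
    trail: List[str] = []
    node.schedulable = False                # 1. isolate: stop scheduling onto the node
    trail.append("cordoned")
    for labels in node.pods.values():       # 2. signal the PaaS / MigrateController
        labels[MIGRATE_LABEL] = "true"
    trail.append("pods-marked")
    action = RECOVERY_ACTIONS[fault_type]   # 3. fault-type-specific recovery
    for _ in range(max_attempts):
        if recover(node.name, action):
            node.schedulable = True         # 4. recovered: resume scheduling
            trail.append("uncordoned")
            return trail
    trail.append("manual-recovery")         # 5. automation gave up; node stays isolated
    return trail
```

Keeping the node unschedulable when automation gives up ensures that no new pods land on it while it waits for manual recovery.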

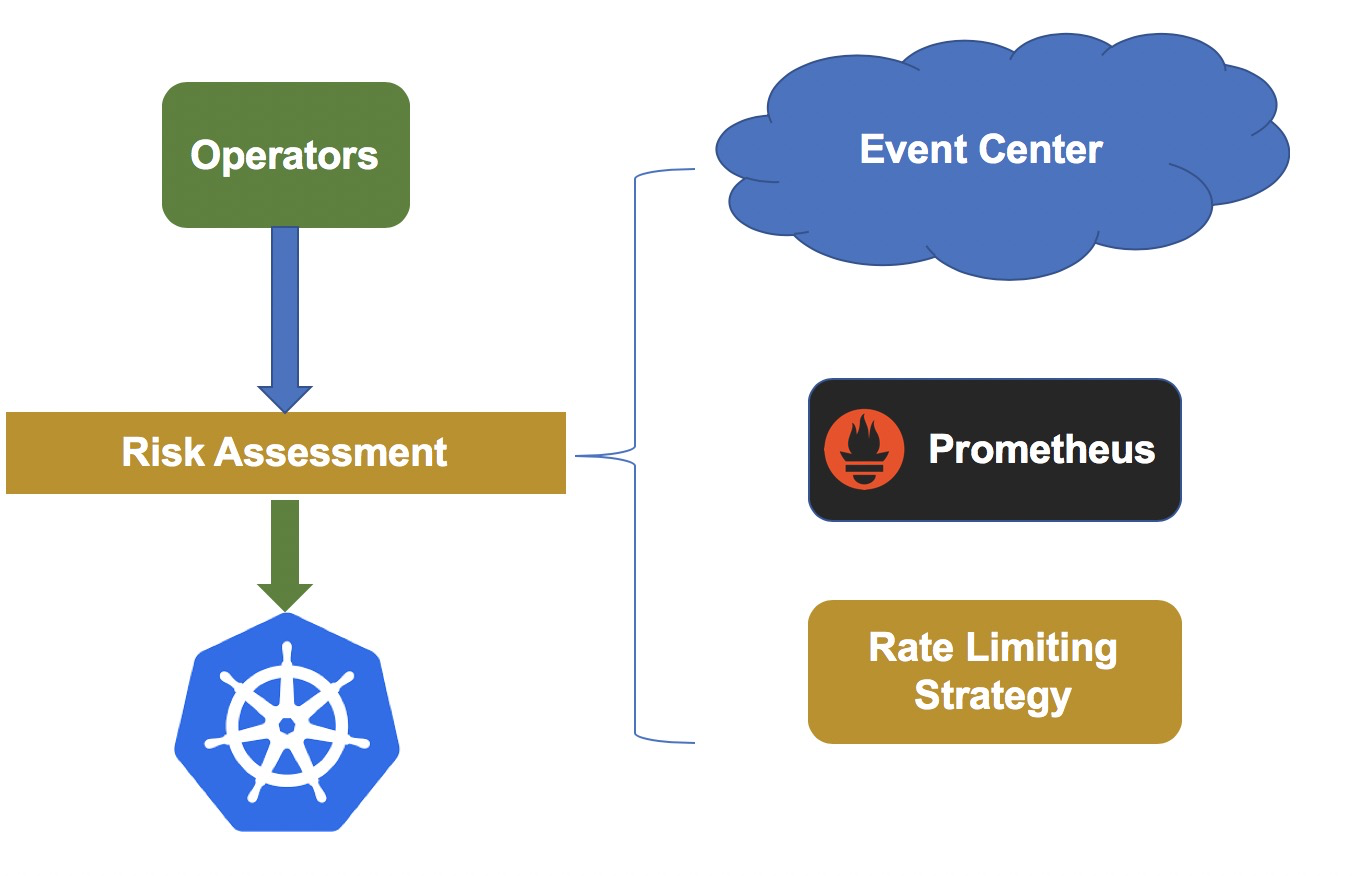

Based on the atomic capabilities provided by the machine operator, the system is designed to support canary releases and rollback of clusters. In addition, to further reduce the risk of changes, operators assess risks before initiating an actual change. The following figure shows the architecture.

High-risk change operations such as node deletion and system re-installation are recorded in a unified rate limiting center, which maintains rate limiting policies for different types of operations. If a change triggers rate limiting, the system stops the change.
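A rate limiting center of this kind can be approximated with one sliding-window counter per operation type. The policy values and operation names below are hypothetical, and the sliding-window algorithm is an assumed choice; the article does not say which limiting algorithm is used.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List


@dataclass(frozen=True)
class Policy:
    max_ops: int     # allowed operations ...
    window_s: float  # ... within this many seconds


class RateLimitCenter:
    """One sliding window of timestamps per high-risk operation type."""

    def __init__(self, policies: Dict[str, Policy]) -> None:
        self.policies = policies
        self.history: Dict[str, Deque[float]] = {op: deque() for op in policies}

    def try_record(self, op: str, now: float) -> bool:
        """Record an operation at time `now`; False means the limit was hit."""
        policy, window = self.policies[op], self.history[op]
        while window and now - window[0] >= policy.window_s:
            window.popleft()  # drop operations that fell out of the window
        if len(window) >= policy.max_ops:
            return False
        window.append(now)
        return True


def run_change(center: RateLimitCenter, op: str, targets: List[str], now: float) -> List[str]:
    """Apply `op` to targets in order and stop the change at the first rate limit hit."""
    done: List[str] = []
    for target in targets:
        if not center.try_record(op, now):
            break
        done.append(target)
    return done
```

Stopping the whole change, rather than skipping individual targets, matches the behavior described above: a change that trips the limit is treated as suspicious and halted.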

To evaluate whether a change is proceeding normally, we perform health checks on each component before and after the change. Although component health checks catch most exceptions, some exception scenarios may not be covered. To close this gap, the system obtains cluster business metrics, such as the pod creation success rate, from the event center and the fault detector during risk assessment. If a metric is abnormal, the system automatically stops the change.
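The sketch below combines the two signals in a batched (canary) rollout: a component that was healthy before a batch but unhealthy after it, or a pod creation success rate below a threshold, stops the rollout. The 99% threshold, the callbacks, and the batch structure are assumptions for illustration, not values from the article.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Verdict:
    proceed: bool
    reasons: List[str]


def assess(before: Dict[str, bool], after: Dict[str, bool],
           pod_success_rate: float, min_rate: float = 0.99) -> Verdict:
    """Combine component health checks with a business metric."""
    reasons = [f"{name} unhealthy after change"
               for name, ok in after.items()
               if before.get(name, False) and not ok]  # only regressions count
    if pod_success_rate < min_rate:
        reasons.append(f"pod creation success rate {pod_success_rate:.2%} below {min_rate:.2%}")
    return Verdict(proceed=not reasons, reasons=reasons)


def canary_rollout(batches: List[List[str]],
                   apply_batch: Callable[[List[str]], None],
                   health: Callable[[], Dict[str, bool]],
                   pod_success_rate: Callable[[], float]) -> Tuple[List[str], List[str]]:
    """Apply batches in order; stop at the first batch whose assessment fails."""
    done: List[str] = []
    for batch in batches:
        before = health()
        apply_batch(batch)
        verdict = assess(before, health(), pod_success_rate())
        if not verdict.proceed:
            return done, verdict.reasons
        done.extend(batch)
    return done, []
```

Comparing health before and after each batch keeps pre-existing failures from blocking a change, while the business metric catches problems that no single component check reports.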

This article shared the core design of the Kubernetes cluster management system currently used by Ant Financial. Its core components use operators extensively to manage final states. This final-state-oriented cluster management system passed the performance and stability tests of this year's Double Eleven preparations.

In addition to ensuring cluster stability and O&M efficiency, a complete cluster management system must also increase the overall resource utilization of clusters. In the future, we will improve the resource utilization of Ant Financial's production-grade clusters by increasing the percentage of online nodes and decreasing the percentage of idle nodes. In addition, the Resource Scheduling Group of the System Department in Ant Financial is looking for talented individuals to join our team. Work with us to solve world-class problems!

Q1: Currently, most of our applications have been deployed in Docker. How can we transform to Kubernetes? Are there any cases we can learn from?

A1: I have been working at Ant Financial for nearly five years. Ant Financial's services first ran in Xen virtual machines; they now run in Docker and are scheduled by Kubernetes, so our infrastructure has gone through an iteration almost every year. Kubernetes is an open Platform as a Service (PaaS) framework. If your applications are already deployed in Docker, they are largely "cloud-native" and can, in theory, be smoothly migrated to Kubernetes. Because Ant Financial carries a substantial legacy workload, we have enhanced Kubernetes in practice based on our service requirements to ensure that services can be migrated to it smoothly.

Q2: Will the performance deteriorate if applications are deployed in Kubernetes and Docker? For example, are we recommended to deploy jobs related to big data processing in Kubernetes?

A2: To my knowledge, Docker is a container technology, not a virtual machine, so its impact on performance is limited. Ant Financial's big data and artificial intelligence (AI) services are being migrated to Kubernetes and co-located with online applications. Big data services are not time-sensitive and can make full use of idle cluster resources. In addition, this hybrid deployment greatly reduces data center costs.

Q3: How can a Kubernetes cluster work well within a conventional O&M environment? For now, we will certainly not run Kubernetes clusters exclusively.

A3: Resources cannot be centrally scheduled if different infrastructures are used. In addition, it is very costly to maintain two O&M systems that are relatively independent. During migration, Ant Financial implemented an "Adapter" to form a "bridge" by converting conventional container creation or release instructions to Kubernetes resource modification instructions.
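The adapter idea can be sketched as a pure translation function. Everything here is an assumption for illustration: the `LegacyCommand` shape is hypothetical, a standard `apps/v1` Deployment stands in for whatever workload resources Ant Financial actually uses, and "release" is taken to mean rolling out a new image.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class LegacyCommand:
    """Hypothetical instruction from the conventional O&M system."""
    action: str      # "create" or "release"
    app: str
    image: str = ""
    replicas: int = 0


def to_kubernetes(cmd: LegacyCommand) -> Dict[str, Any]:
    """Translate a legacy instruction into a Kubernetes object or patch body."""
    labels = {"app": cmd.app}
    container = {"name": cmd.app, "image": cmd.image}
    if cmd.action == "create":
        # Full object: a Deployment whose selector matches its pod template labels.
        return {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": cmd.app},
            "spec": {
                "replicas": cmd.replicas,
                "selector": {"matchLabels": labels},
                "template": {
                    "metadata": {"labels": labels},
                    "spec": {"containers": [container]},
                },
            },
        }
    if cmd.action == "release":
        # Patch body that only changes the container image.
        return {"spec": {"template": {"spec": {"containers": [container]}}}}
    raise ValueError(f"unsupported legacy action {cmd.action!r}")
```

The release branch returns a patch body rather than a full object. Applied as a strategic merge patch, Kubernetes merges the `containers` list by container `name`, so only the image of the matching container changes and the Deployment controller rolls out the new version.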

Q4: How is a node monitored? Will a pod be migrated if the node fails? What can we do if the system does not support the automatic migration of services?

A4: Nodes are monitored at three levels: hardware, system, and component. Hardware-level monitoring data comes from an Internet data center (IDC). System-level monitoring is performed by an internal monitoring platform of Alibaba Cloud. To monitor components such as Kubelet and Pouch, we extended the Node Problem Detector (NPD) to provide an exporter interface from which the monitoring system collects data. When a node is faulty, the system automatically migrates the pods on the node. For stateful services, service providers can customize an operator to migrate their pods automatically. The system destroys pods that cannot be automatically migrated.

Q5: Do you plan to make the entire Kubernetes cluster transparent to developers in the future, so that developers write cluster deployment files as code instead of developing and deploying applications based on containers?

A5: Kubernetes provides many extension capabilities for building PaaS platforms. However, it is still quite difficult to deploy applications directly in Kubernetes clusters today. I think that deploying applications through a domain-specific language (DSL) will be the trend in the future, and that Kubernetes will become the core of these infrastructures.

Q6: Currently, we manage clusters in a kube-to-kube manner. What are the advantages of kube-on-kube over kube-to-kube? If Kubernetes clusters are deployed at a large scale, what is the performance bottleneck when the Kubernetes cluster nodes are scaled? How can I solve this problem?

A6: Currently, many continuous integration and continuous delivery (CI/CD) processes run in Kubernetes clusters. By using kube-on-kube, we can manage business clusters as common business applications. In addition to Kubelet and Pouch, many DaemonSets and pods also run in the nodes. When a large number of nodes are added, components in the nodes initiate many list and watch operations to the API server. Our optimization will focus on improving the performance of the API server and reducing the total number of list and watch operations of nodes by working with the API server.

Q7: Our company has not deployed Kubernetes clusters. I want to ask several questions: What are the benefits of Kubernetes? What existing problems can Kubernetes resolve? What are the preferred business scenarios and processes where Kubernetes can be used? Can the data of existing infrastructures be smoothly migrated to Kubernetes?

A7: In my opinion, what distinguishes Kubernetes is its design concept, which is oriented to final states rather than to O&M actions. This is helpful in complex O&M scenarios. Based on Ant Financial's upgrade experience, data of existing infrastructures can be smoothly migrated to Kubernetes.

Q8: Does the cluster operator run in a pod? Does the master node of a business cluster run in a pod? Does the machine operator run on a physical machine?

A8: All operators run in pods. The cluster operator starts the machine operator in each business cluster.

Q9: Hi! In order to cope with high-concurrency scenarios such as Double Eleven, what is your recommended size of a meta cluster and what is your recommended size of each business cluster to be managed by the meta cluster? As far as I know, cluster operators are used to list and watch resources. In large-scale high-concurrency scenarios, what improvements have you made?

A9: A cluster can manage tens of thousands of nodes. Therefore, a meta cluster can theoretically manage more than 3,000 business clusters.

Q10: If the system kernel, Docker, or Kubernetes on a node is abnormal, how can software keep the system running as normally as possible?

A10: The system performs health checks on the node. After a faulty node is automatically taken out of service, Kubernetes detects this and starts its workloads on another node.
