Continuing from the previous section, this article presents a systematic overview of the comprehensive engineering upgrade practices conducted by Alibaba Cloud's Tair KVCache team in collaboration with the Server R&D Storage hardware-software integration team on 3FS—the high-performance foundational layer for KVCache.

To meet the core requirements of AI large model inference—high throughput, low latency, and strong stability—the team has advanced deep optimizations across three key dimensions: performance tuning, productization enhancement, and cloud-native management:

● Performance Layer: By optimizing RDMA traffic load balancing and fine-tuning small I/O parameters, 4K random read IOPS have been increased by 150%. A full user-space persistence engine was integrated to reduce system resource overhead.

● Product Layer: Critical stability issues such as Mgmtd IP drift and unbalanced storage allocation were resolved. New capabilities including GDR zero-copy and multi-tenant isolation were introduced, enabling end-to-end efficient coordination between HBM cache and backend storage.

● Operations Layer: A cloud-native control plane was built based on Kubernetes Operator, supporting one-click deployment, self-healing from failures, elastic scaling, and multi-cluster isolation. Integrated with a visual monitoring dashboard, this significantly reduces operational complexity and manpower costs for AI infrastructure.

This practice establishes a reusable technical paradigm for the large-scale deployment of high-performance KVCache in enterprise-grade AI scenarios.

This series of technical articles systematically analyzes the evolution path of KVCache technology tailored for agent-style reasoning:

As an extension of Alibaba Cloud Database Tair's capabilities, Tair KVCache represents three paradigm shifts in caching:

🔹 From Redis's "caching data → reducing I/O"

🔹 To GPU KVCache's "caching computational intermediate states → reducing redundant computation"

🔹 To Tair KVCache's "scalable, intelligent attention state management → reshaping the cost model of large model inference"

This evolution marks a transformation of caching from a supporting component into a core capability of the AI infrastructure layer: it makes "state" storable, shareable, and schedulable, and establishes a scalable foundation for inference in the era of intelligent agents.

During the inference phase of large language models (LLMs), generative inference fundamentally follows an autoregressive paradigm: the model generates tokens sequentially, with each prediction dependent on all previously generated content. While this ensures semantic coherence, it introduces significant computational redundancy—particularly in the attention mechanism, where repeated computation of Key (K) and Value (V) vectors becomes a performance bottleneck.

Specifically, when generating a new token, the model must compute its Query (Q) against the K and V vectors of all historical tokens via dot-product operations to derive attention weights and aggregate contextual information. Notably, the K and V vectors of past tokens remain unchanged throughout subsequent generation steps. Recomputing these static vectors during every decoding step results in substantial unnecessary computation.

To address this, the industry widely adopts KVCache technology: upon first generating each token, its K and V vectors are cached and directly reused in later autoregressive steps, thereby bypassing redundant forward computations. This optimization dramatically reduces inference latency and significantly improves throughput, becoming one of the core techniques enabling efficient streaming generation in modern LLMs.
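To make the saving concrete, here is a minimal pure-Python sketch of single-head attention decoding with and without a cache. The `project` function is a hypothetical stand-in for the learned Q/K/V projections (a plain scaling), and the token vectors are toy values chosen for illustration. Only the counting pattern matters here.

```python
import math

def project(token_vec, scale):
    # Hypothetical stand-in for a learned linear projection.
    return [scale * x for x in token_vec]

def attend(q, keys, values):
    # Scaled dot-product attention for one query over all cached positions.
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]

def decode_without_cache(tokens):
    outputs, projections = [], 0
    for t in range(1, len(tokens) + 1):
        # K and V of every earlier token are recomputed at each step.
        keys = [project(x, 0.5) for x in tokens[:t]]
        values = [project(x, 2.0) for x in tokens[:t]]
        projections += 2 * t
        outputs.append(attend(project(tokens[t - 1], 1.0), keys, values))
    return outputs, projections

def decode_with_cache(tokens):
    outputs, projections = [], 0
    k_cache, v_cache = [], []
    for x in tokens:
        # Only the newest token's K and V are computed, then appended.
        k_cache.append(project(x, 0.5))
        v_cache.append(project(x, 2.0))
        projections += 2
        outputs.append(attend(project(x, 1.0), k_cache, v_cache))
    return outputs, projections
```

For a sequence of n tokens, the uncached loop performs n(n+1) K/V projections while the cached loop performs 2n, and both produce the same attention outputs.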

As LLM inference evolves toward long context, high concurrency, and ultra-low latency—especially in use cases like multi-turn dialogue and Retrieval-Augmented Generation (RAG)—models frequently access vast historical contexts or external knowledge bases. The following characteristics emerge in storage selection for extended KVCache:

| Feature | Technical Implication | Impact on Storage System |
|---|---|---|
| Ultra-long Context | Memory footprint per inference can reach several GB to tens of GB | Requires PB-scale scalable cache pool; DRAM-only solutions are too costly, necessitating cheaper, higher-capacity alternatives |
| High Reuse in Multi-Turn Dialogue / RAG | Historical KV of same user/session is frequently re-read (e.g., backtracking, summarization, correction) | Extremely high read/write ratio (>10:1 typical); writes are sequential appends, reads are mostly random (jumps, lookups) |
| High-Concurrency, Low-Latency SLA | End-to-end response P99 < 50ms (even <10ms) | Storage system must offer ultra-low latency (to avoid becoming bottleneck), bandwidth ≥ 20 GB/s/node |
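The "several GB to tens of GB" footprint can be sanity-checked with the usual KVCache sizing rule: two vectors (K and V) per layer and per KV head for every token. The sketch below assumes a hypothetical model shape (80 layers, 8 KV heads, head dimension 128, FP16 values); these numbers are illustrative and do not come from this article.

```python
GIB = 1024 ** 3

def kv_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value):
    # Factor 2: one K vector and one V vector per layer and KV head.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value

def kv_bytes_for_context(num_tokens, **model_shape):
    return num_tokens * kv_bytes_per_token(**model_shape)

# Hypothetical model shape, for illustration only.
HYPOTHETICAL_MODEL = dict(num_layers=80, num_kv_heads=8, head_dim=128, bytes_per_value=2)

per_token = kv_bytes_per_token(**HYPOTHETICAL_MODEL)           # 327,680 bytes (320 KiB)
ctx_8k = kv_bytes_for_context(8 * 1024, **HYPOTHETICAL_MODEL)   # 2.5 GiB
ctx_128k = kv_bytes_for_context(128 * 1024, **HYPOTHETICAL_MODEL)  # 40 GiB
```

Under these assumptions, a single 128K-token context already occupies about 40 GiB, which is why a DRAM-only pool becomes expensive quickly.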

L3-layer SSD-based KVCache storage addresses shared capacity and cost challenges. However, commonly used distributed file systems exhibit limitations. Traditional closed-source solutions like GPFS deliver strong performance but come with high licensing costs and complex maintenance. Open-source distributed file systems often target general-purpose workloads and fall short in KVCache-specific demands: Ceph, though widely adopted, fails to meet the stringent high-bandwidth, low-latency requirements in this specialized scenario; JuiceFS offers flexible architecture but suffers from performance bottlenecks due to tight coupling with backend object storage, increasing operational complexity and risks.

In contrast, 3FS, the open-source high-performance distributed file system developed by DeepSeek, provides a compelling storage foundation for AI training and inference through its high throughput, low latency, and large-capacity shared storage capabilities.

3FS (Fire-Flyer File System) is an open-source, high-performance distributed file system that leverages SSDs and RDMA networks to provide a shared storage layer, simplifying the development of distributed applications. Designed specifically for AI training and inference workloads, 3FS offers a more cost-effective alternative to DRAM-based caching while delivering high throughput and massive scalability.

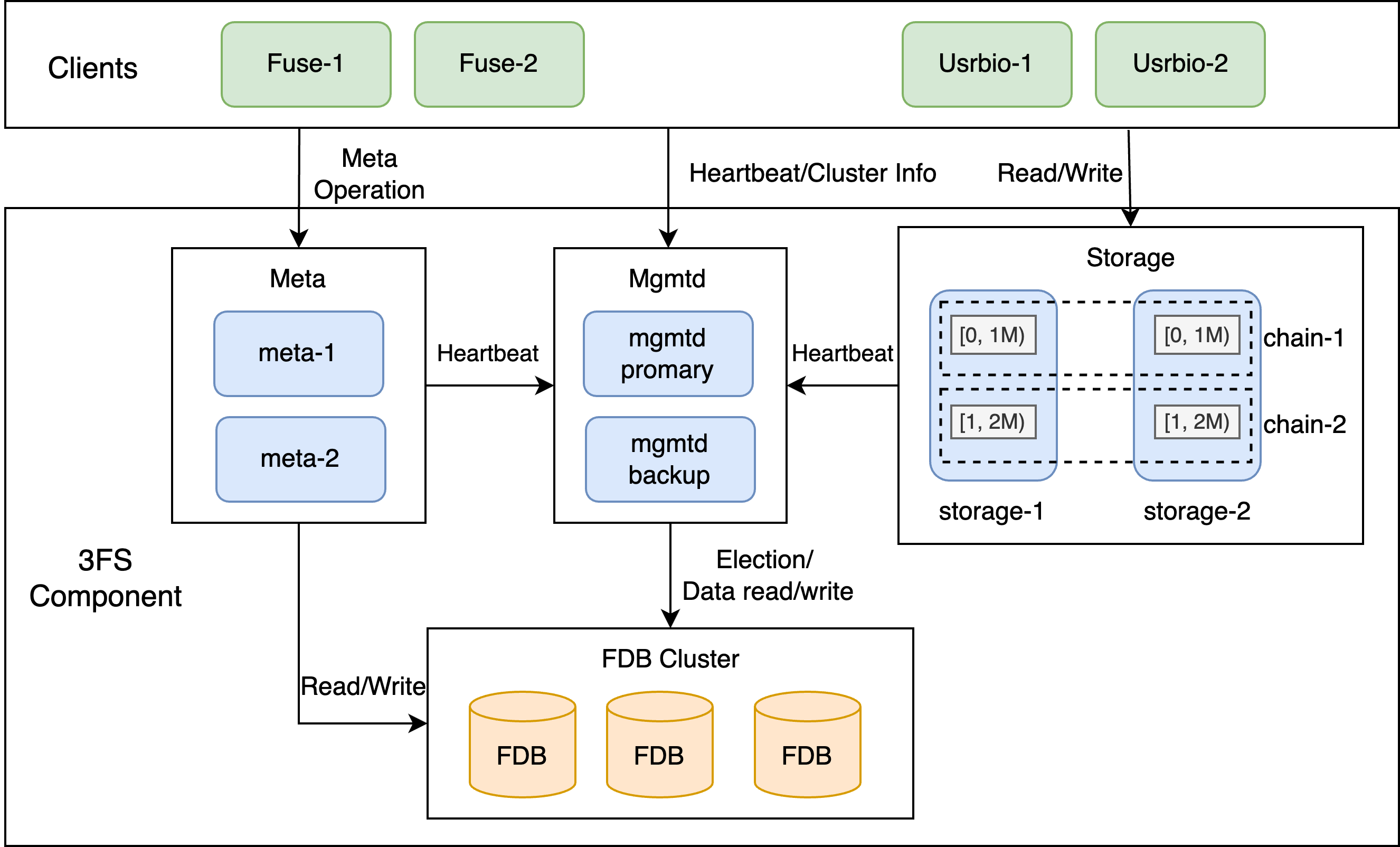

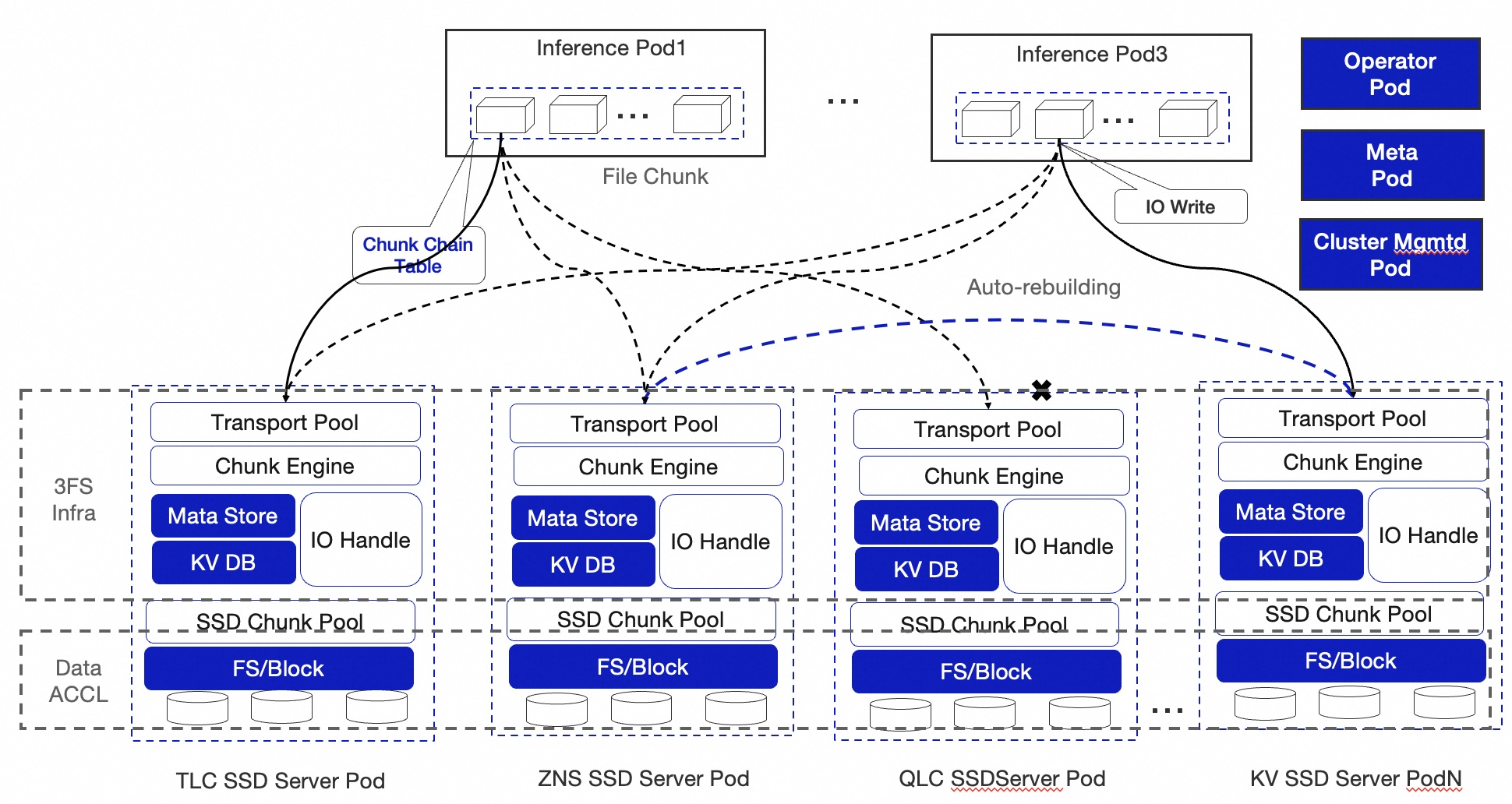

The core components of 3FS include Fuse, Meta, Mgmtd, and Storage. All components are interconnected via RDMA network, with their interaction illustrated below:

Figure 1: 3FS Architecture

(1) Mgmtd: Control service ensuring high availability via primary-backup strategy. If the primary node fails, another Mgmtd replica is elected as the new leader. Mgmtd manages cluster configuration. All Meta, Storage, and Fuse clients maintain liveness through periodic heartbeats and periodically fetch updated cluster states (e.g., topology, ChainTable).

(2) Meta: Metadata service handling operations like file open/close, implementing filesystem semantics. Stateless and backed by FoundationDB (a transactional KV store), multiple Meta instances can scale horizontally. Clients connect to any instance, and requests are forwarded based on InodeId.

(3) Storage: Manages local SSD resources using the underlying filesystem. Each SSD is abstracted into multiple logical units called Targets. Targets across different Storage nodes form a Chain. Replicas are kept consistent via Chain Replication with Apportioned Queries (CRAQ): reads are randomly routed to any Target in the Chain; writes go only to the Head Target and propagate down the chain. This "write-all, read-any" design maximizes SSD and RDMA utilization, especially beneficial for read bandwidth.

(4) Client: FUSE (Filesystem in Userspace) is a Linux kernel interface allowing userspace implementation of filesystems accessible via standard POSIX operations. 3FS uses FUSE to enable transparent file access, making it suitable for compatibility-sensitive applications.
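The read and write paths of a replication chain described in (3) can be sketched as a toy model. This is a simplification under stated assumptions: the `Chain` class and target names are hypothetical, and the version tracking that real CRAQ uses to keep reads consistent while a write is in flight is omitted.

```python
import random

class Chain:
    """Toy replication chain: writes flow head to tail, reads hit any replica."""

    def __init__(self, target_names):
        # targets[0] is the head, targets[-1] is the tail.
        self.targets = [{"name": name, "chunks": {}} for name in target_names]

    def write(self, chunk_id, data):
        # The write enters at the head and is forwarded target by target;
        # it counts as complete once the tail has applied it.
        for target in self.targets:
            target["chunks"][chunk_id] = data
        return self.targets[-1]["name"]

    def read(self, chunk_id, rng=random):
        # Any target in the chain may serve the read.
        target = rng.choice(self.targets)
        return target["name"], target["chunks"][chunk_id]
```

Because every replica can serve reads, aggregate read bandwidth grows with the number of Targets in the chain, while each write costs one hop per replica.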

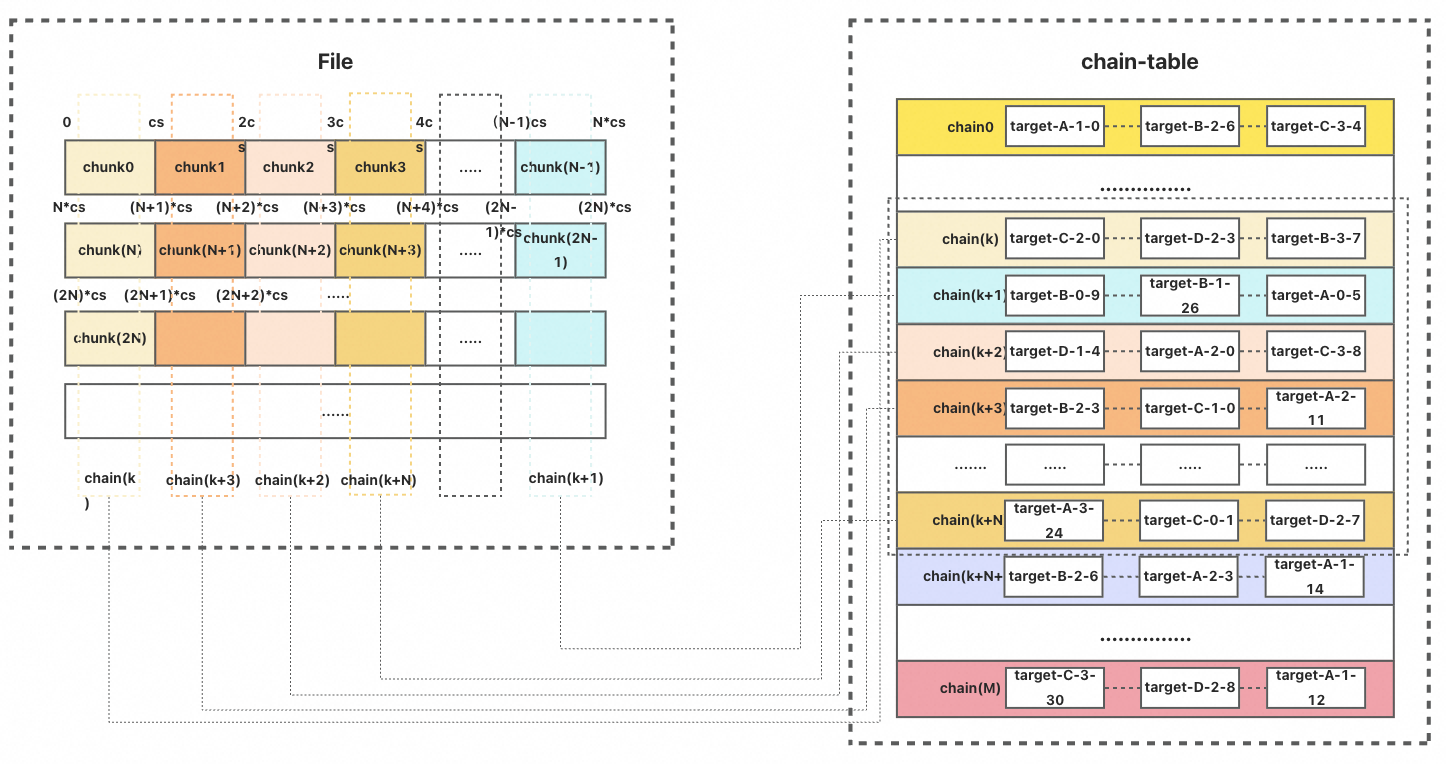

Figure 2: File Chunk Distribution

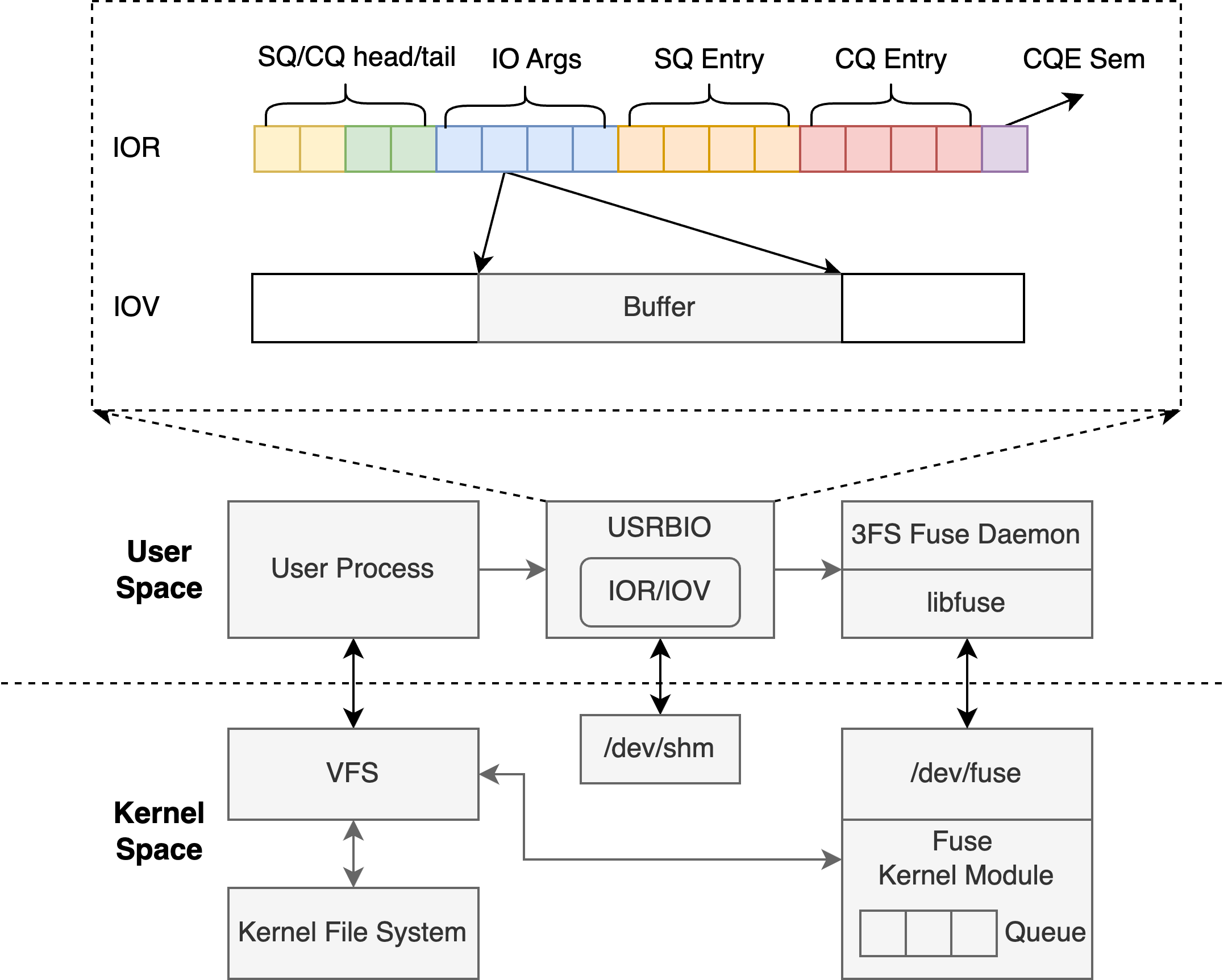

Figure 3: 3FS Client Architecture

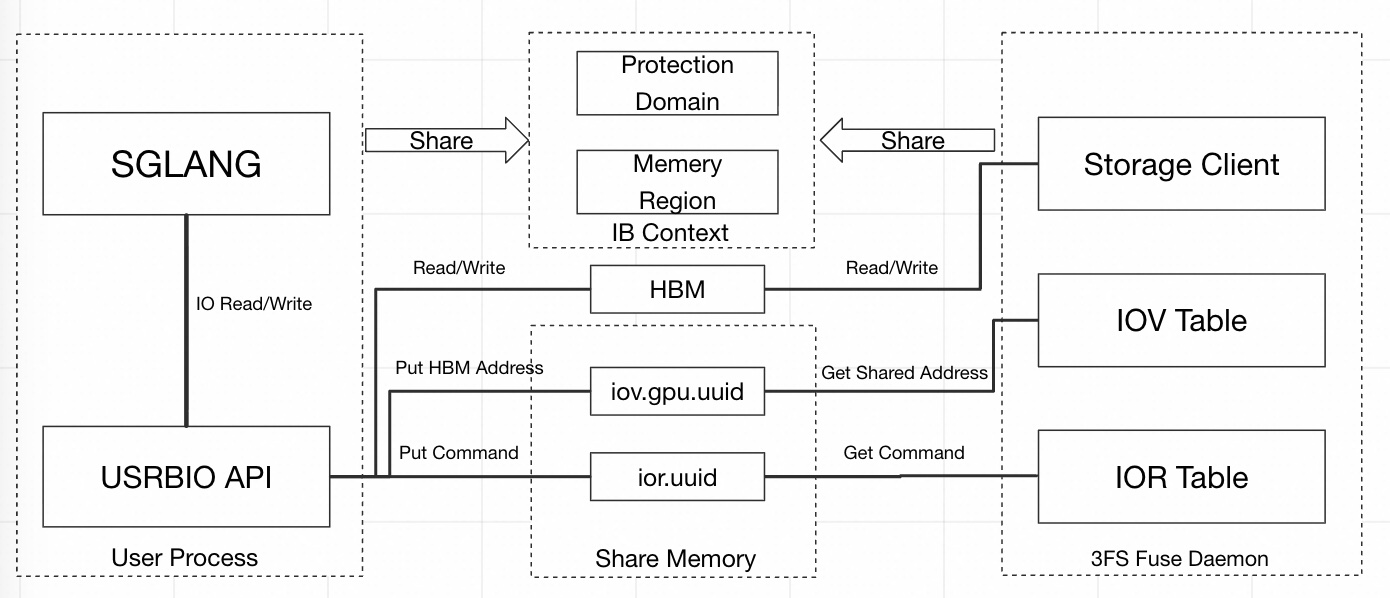

Additionally, 3FS provides a USRBIO client interface—a user-space, asynchronous, zero-copy API requiring some code adaptation. While metadata operations still rely on FUSE, I/O requests are sent directly from user processes to the FUSE daemon, eliminating syscall context switches and data copies, achieving superior performance.

As a distributed file system designed for parallel computing environments, 3FS offers distinct advantages in KVCache use cases:

● Capacity & Cost Efficiency: 3FS pools SSD resources across numerous storage nodes into a unified PB-scale storage pool, meeting the large-capacity needs of KVCache while striking an optimal balance between performance and cost.

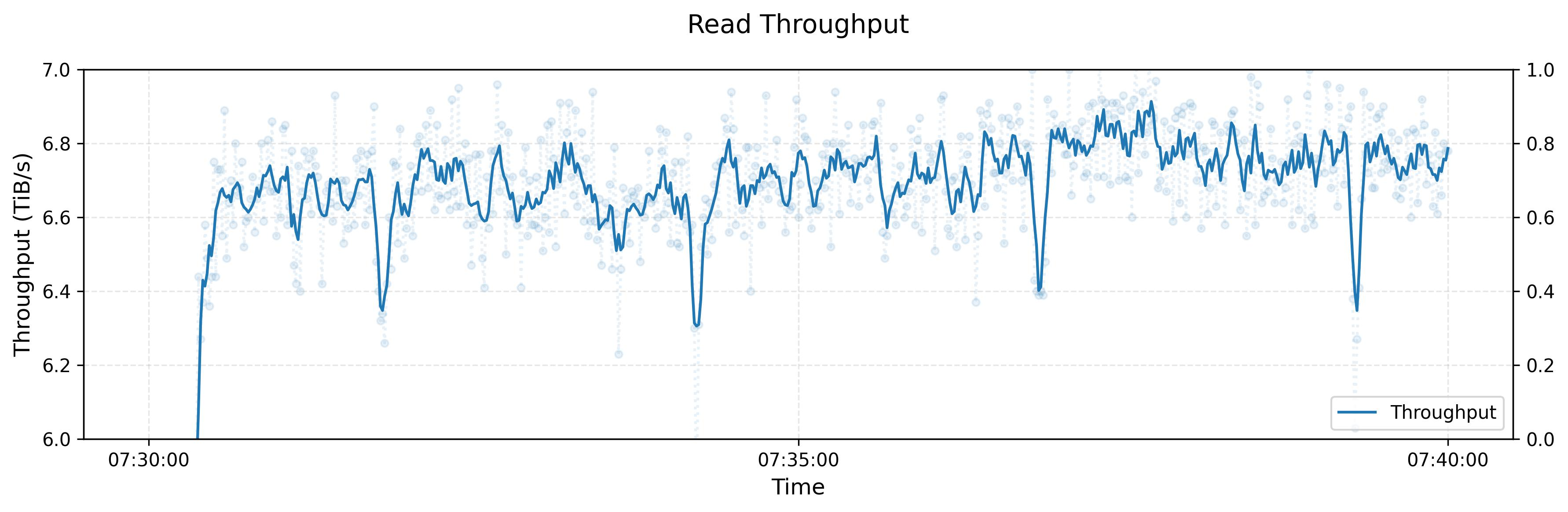

● Bandwidth & Latency: End-to-end RDMA ensures high bandwidth and low latency. Combined with USRBIO’s zero-copy mechanism, it minimizes user-kernel context switching and further reduces I/O latency. Official benchmarks show up to ~6.6 TiB/s read bandwidth in a 180-node cluster.

● Read-Optimized Design: Given KVCache’s typical read-heavy access pattern, 3FS optimizes read paths using CRAQ protocol, allowing random replica selection for reads. This enables excellent performance even under massive concurrent read loads, aligning perfectly with KVCache workload characteristics.

Figure 4: Official 3FS Performance Metrics

Despite its strengths, the open-source version of 3FS has notable shortcomings:

1. Complex Cross-Component Coordination: In cloud-native heterogeneous environments combining GPU/HBM compute units, RDMA networking, and NVMe storage, there is a lack of unified cross-layer orchestration. IP address drift causes component state inconsistency, leading to distributed silos that hinder dynamic elastic scheduling required for high-concurrency AI inference with multiple parallel models and pipeline stages.

2. Low Resource Utilization:

3. Weak Cloud-Native Operability: Deployment and lifecycle management depend on manual scripts, lacking declarative APIs and state introspection. Failure recovery requires human intervention (e.g., manual rebuild after Storage failure). Monitoring lacks visualization, complicating operations and preventing SLO-driven AIOps.

Therefore, the Alibaba Cloud Tair team and Server R&D Storage hardware-software integration team undertook systemic enhancements atop 3FS, improving its adaptability and productization to deliver L3-level KVCache capabilities. These improvements have been contributed to open-source communities such as SGLang and vLLM. The solution enables efficient global KVCache reuse, alleviates VRAM pressure, and further boosts inference efficiency and resource utilization.

Alibaba Cloud’s Server R&D Storage hardware-software integration team systematically upgraded 3FS across three dimensions: performance optimization, productization enhancement, and cloud-native management:

● Performance Breakthrough: Optimized RDMA traffic load balancing and tuned parameters for small I/O scenarios, boosting 4K random read IOPS by 150%. Introduced a full user-space persistence engine to further reduce resource consumption.

● Enhanced Product Capabilities: Resolved stability issues including Mgmtd IP drift and uneven storage allocation. Added GDR zero-copy and multi-tenant isolation to enable end-to-end efficient coordination from HBM to storage.

● Cloud-Native Management: Achieved one-click deployment, self-healing, and multi-cluster isolation via Kubernetes Operator, combined with elastic scaling and monitoring dashboards, significantly lowering the operational barrier for AI infrastructure.

Figure 5: 3FS Product Overview

We conducted local deployment validation and performance tuning of 3FS using physical storage servers. Key hardware configurations and cluster topology are summarized below:

| Cluster Topology | CPU | Memory | NIC | Disk |
|---|---|---|---|---|
| Control Node ×1 (co-located FDB/Meta/Mgmtd); Storage Nodes ×6 (3 data replicas) | Intel(R) Xeon(R) Platinum 8331C, 96 cores | 256GB | ConnectX-6 Dx, 2 × 200Gb/s | P5510 (3.84TB) × 12 |

While 3FS performs well in large-block I/O read bandwidth, total bandwidth does not scale linearly with increasing clients due to inter-client I/O interference. Analysis revealed severe RDMA traffic imbalance—some NICs utilized below 40%, others nearing 100% saturation—mainly caused by insufficient RDMA Queue Pair (QP) count. After adjusting QP-related parameters, NIC port traffic became evenly distributed, and total read bandwidth scaled linearly with client count, demonstrating strong scalability in large-scale distributed settings.

For write bandwidth bottlenecks, we increased I/O concurrency. Post-optimization, a single USRBIO client achieved 4M I/O bandwidth of 40.2 GB/s (read) and 31.4 GB/s (write), up from 29.5 GB/s and 5.312 GB/s respectively.

| Metric | Before Optimization | After Optimization |
|---|---|---|
| Read Bandwidth | 29.5 GB/s | 40.2 GB/s |
| Write Bandwidth | 5.312 GB/s | 31.4 GB/s |

Initial testing showed low IOPS for small-block I/O (4K–64K). A single Storage node delivered only ~200K 4K random read IOPS. Root cause analysis identified exhaustion of listening threads under small I/O read workloads. We tuned several Storage parameters, including the number of listener threads, the number of I/O worker threads, and the queue depth. After tuning, 4K random read IOPS improved to ~500K, a 150% increase.
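The relative gains in this section follow directly from the reported before/after figures. A short check, using only the numbers quoted above:

```python
def percent_gain(before, after):
    # Relative improvement over the baseline, in percent.
    return (after - before) / before * 100.0

measurements = {
    "4M read bandwidth (GB/s)": (29.5, 40.2),
    "4M write bandwidth (GB/s)": (5.312, 31.4),
    "4K random read IOPS (thousands)": (200, 500),
}

gains = {name: percent_gain(before, after)
         for name, (before, after) in measurements.items()}

for name, gain in gains.items():
    print(f"{name}: +{gain:.0f}%")
```

Read bandwidth improves by roughly 36%, write bandwidth by roughly 491% (about 5.9 times the baseline), and 4K random read IOPS by 150%.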

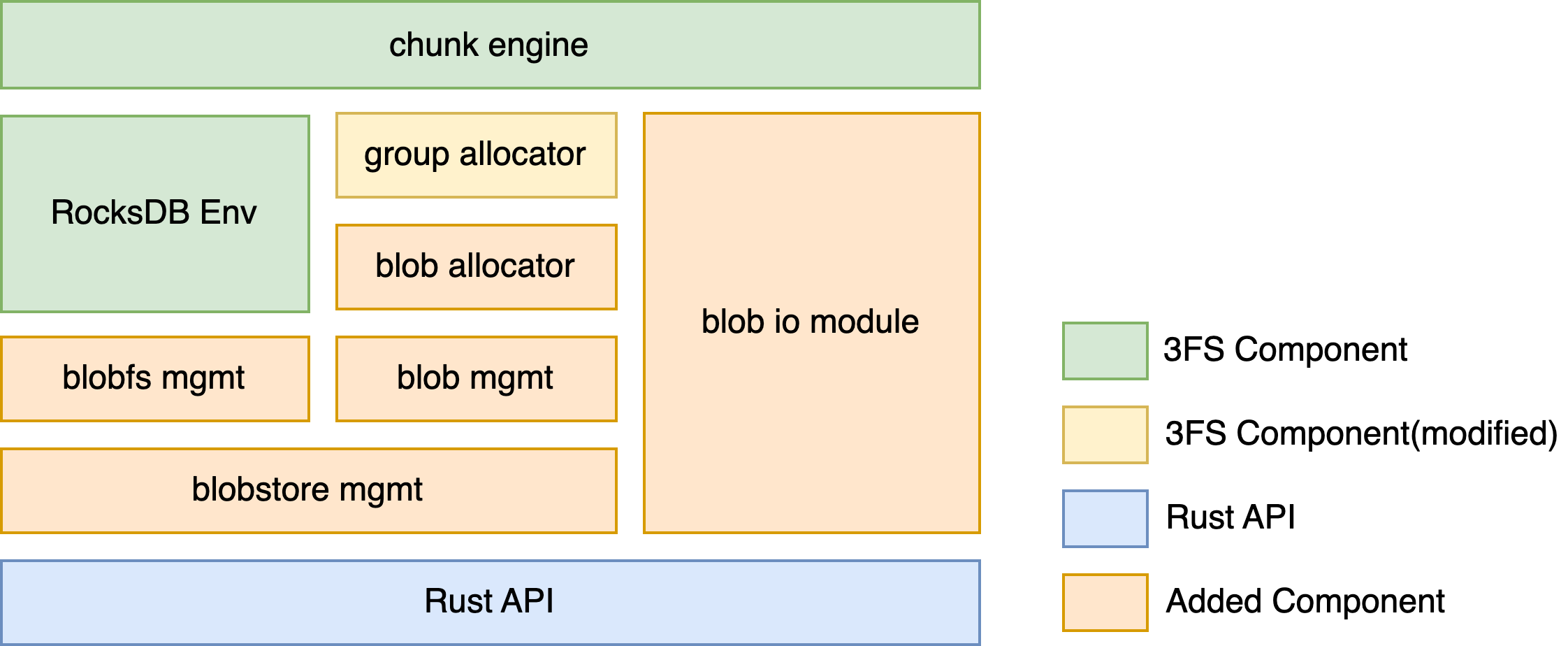

Building on this, considering block storage's inherent advantage in random small I/O over file storage, we replaced the original local filesystem with a full user-space storage engine as 3FS’s persistence backend (see figure below). Testing showed significant reduction in system resource usage: CPU utilization dropped by approximately 27%.

Figure 6: Full User-Space Storage Engine

As 3FS scales across diverse environments, it faces challenges in cluster stability, storage utilization, and performance. To overcome these, we implemented systemic enhancements across multiple dimensions:

● High-Availability Architecture Strengthening: Enabled seamless Mgmtd failover and cross-cluster fault tolerance via DNS decoupling and multi-NIC probing.

● Fine-Grained Storage Management: Refactored ChainTable generation rules and file Chain assignment strategies to eliminate capacity skew and resource waste.

● End-to-End Performance Leap: Enabled GPU Direct RDMA (GDR), removing redundant HBM-to-memory data copies.

● Security and Scalability Upgrade: Introduced multi-tenant isolation with tenant-level access control and physical data separation.

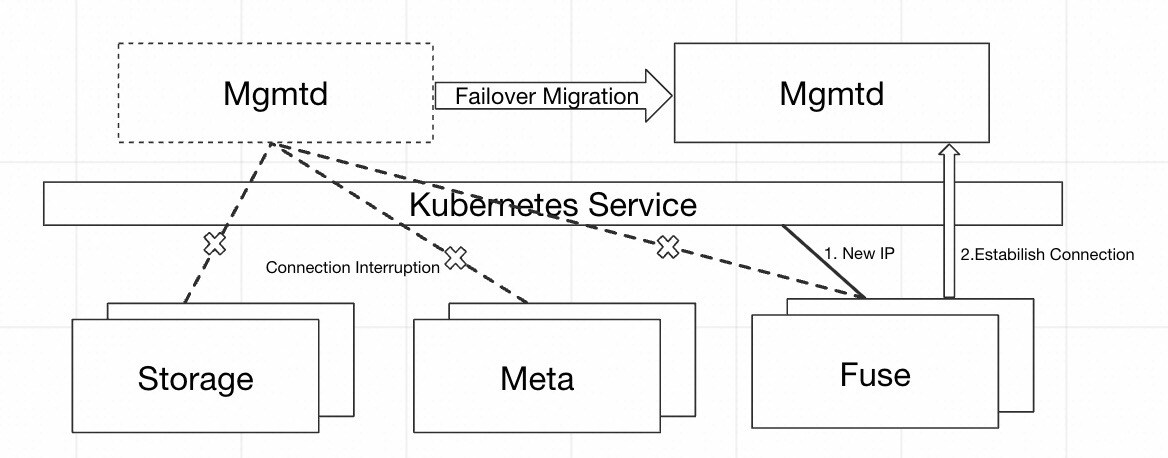

Mgmtd maintains global topology and component status. If other components cannot connect to the Mgmtd Primary, they cannot obtain updated cluster state, rendering the entire 3FS cluster unavailable.

To simplify deployment, we adopted a containerized architecture. However, during runtime, the Mgmtd Pod may restart or be rescheduled due to OOM errors, node eviction, or shutdown. When this happens, its IP address changes, causing other components to lose connection to the primary Mgmtd service and leading to cluster instability.

To solve this problem, we introduced DNS-based service discovery in the Mgmtd client, replacing hardcoded IP addresses with stable DNS names to enable highly available access. In Kubernetes, this is implemented using a Headless Service. This allows components to automatically discover and reconnect to the current primary node using a consistent DNS naming rule, even after the Mgmtd Pod has been recreated or migrated. The approach significantly improves fault tolerance and system availability.
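The idea of resolving a stable name on every reconnect, instead of caching an IP, can be sketched as follows. This is an illustrative client-side loop, not the actual Mgmtd client code: `is_primary` is a hypothetical callback standing in for whatever RPC asks a node whether it is the current primary, and a production client would add backoff between attempts.

```python
import socket

def resolve_endpoints(dns_name, port, resolver=socket.getaddrinfo):
    """Return the IPs currently behind a DNS name.

    For a Kubernetes Headless Service, these are the backing Pod IPs.
    """
    infos = resolver(dns_name, port, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})

def find_primary(dns_name, port, is_primary,
                 resolver=socket.getaddrinfo, attempts=3):
    """Re-resolve on every attempt so a recreated Pod's new IP is picked up."""
    for _ in range(attempts):
        for ip in resolve_endpoints(dns_name, port, resolver):
            if is_primary(ip, port):  # hypothetical primary check
                return ip
    return None
```

Because the name is resolved again on each attempt, a Pod that comes back with a different IP is found without any configuration change on the client side.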

Figure 7: DNS Resolution Mechanism

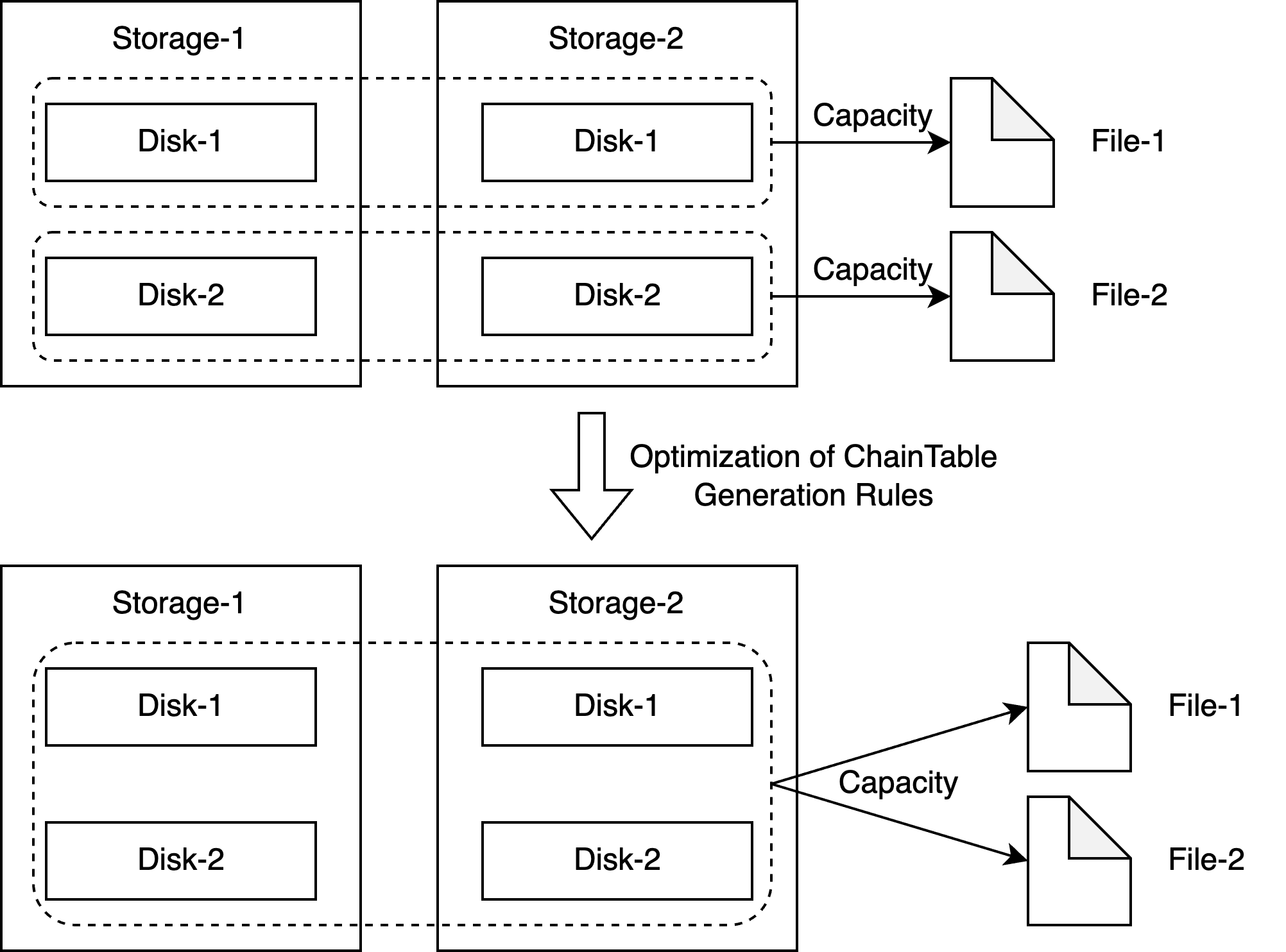

When creating files, 3FS cyclically assigns consecutive Chains from ChainTable based on stripe size to achieve "load balancing" at the Chain level. However, the default ChainTable generation rule places disks with the same index across nodes into the same Chain, and such Chains are adjacent in ChainTable. When stripe size is small and Target count is high, files may only utilize a subset of disks, limiting per-file capacity and underusing backend storage.

To resolve this, we optimized the ChainTable allocation strategy by randomly dispersing Targets across storage nodes during ChainTable creation and setting the minimum stripe size satisfying the condition. This allows each 3FS file to fully leverage backend storage space.
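The effect can be illustrated with a toy model. The sketch below is one possible reading of the description, not the actual 3FS ChainTable generator: it assumes one replica per node, models each Target only by its `(node, disk)` pair, and all function names are hypothetical.

```python
import random

def default_chain_table(num_nodes, disks_per_node, targets_per_disk):
    """Same disk index across nodes forms a chain; such chains are adjacent."""
    table = []
    for disk in range(disks_per_node):
        for _ in range(targets_per_disk):
            table.append([(node, disk) for node in range(num_nodes)])
    return table

def dispersed_chain_table(num_nodes, disks_per_node, targets_per_disk, seed=0):
    """Shuffle each node's targets so chains mix different disk indexes."""
    rng = random.Random(seed)
    per_node = []
    for node in range(num_nodes):
        targets = [(node, disk) for disk in range(disks_per_node)
                   for _ in range(targets_per_disk)]
        rng.shuffle(targets)
        per_node.append(targets)
    return [list(chain) for chain in zip(*per_node)]

def disks_touched(table, start, stripe_size):
    """Distinct (node, disk) pairs used by a file striped over consecutive chains."""
    chains = [table[(start + i) % len(table)] for i in range(stripe_size)]
    return {target for chain in chains for target in chain}

def min_full_coverage_stripe(table, total_disks):
    """Smallest stripe size for which every starting offset reaches all disks."""
    for stripe_size in range(1, len(table) + 1):
        if all(len(disks_touched(table, start, stripe_size)) == total_disks
               for start in range(len(table))):
            return stripe_size
    return None
```

With 3 nodes, 4 disks per node, and 4 Targets per disk, a file striped over 4 consecutive chains of the default table touches only 3 of the 12 disks, and the default layout needs a stripe size of 13 before every file reaches all disks. `min_full_coverage_stripe` applied to the dispersed table gives the corresponding value for a shuffled layout.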

Figure 8: Optimized ChainTable Generation Rule

During 3FS expansion, because file creation randomly selects Chain lists, pre-expansion SSDs become overused while newly added ones remain underutilized. This leads to failed writes when existing SSDs are full, despite available space on new nodes.

We revised the file distribution algorithm, adopting a storage-utilization-priority allocation strategy, achieving more balanced load distribution. Newly created files post-expansion are preferentially assigned to less-used storage nodes, ensuring normal read/write operations.
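A utilization-priority selection can be sketched as a simple sort. The data layout (`chain id -> (used, capacity)`) and the function name are assumptions made for illustration; the actual allocator may weigh additional factors.

```python
def pick_chains(chain_usage, stripe_size):
    """Pick the least-utilized chains for a new file.

    chain_usage maps chain id -> (used_bytes, capacity_bytes).
    """
    def utilization(chain_id):
        used, capacity = chain_usage[chain_id]
        return used / capacity

    # Full chains are never candidates for new files.
    candidates = [c for c, (used, cap) in chain_usage.items() if used < cap]
    if len(candidates) < stripe_size:
        raise RuntimeError("not enough chains with free space")
    # Sort by utilization; the chain id breaks ties deterministically.
    return sorted(candidates, key=lambda c: (utilization(c), c))[:stripe_size]
```

After an expansion, chains on the newly added nodes have the lowest utilization, so new files land there first and the older, fuller SSDs stop receiving writes until usage evens out.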

In multi-NIC setups, when Mgmtd Primary fails and leadership transfers, other components fail to connect to the new Primary, causing cluster unavailability.

Root cause analysis found that after failover, components attempted to probe old Primary’s multiple NICs but retried only once, falling into a loop probing the old node instead of discovering the new Primary. We enhanced retry and probing logic so components can correctly detect and connect to the new Primary, ensuring cluster availability.

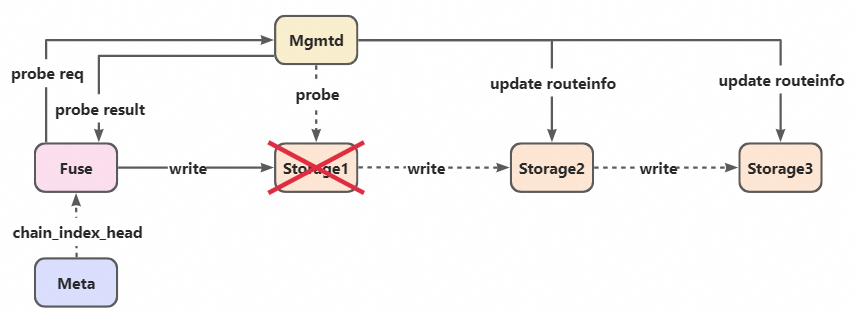

In a multi-replica setup, when a single Storage node fails, I/O drops to zero for 60 seconds before an I/O Error is returned. Writes proceed sequentially across the Targets of a Chain and succeed only after every Target has responded. If a node fails, the write is retried until the timeout expires, which surfaces as a temporary I/O Error.

Figure 9: Probing Failed Storage

Fuse detects I/O timeout and sends a probe request to Mgmtd. Mgmtd then probes the faulty Storage node. Upon confirming failure, Mgmtd updates the routing table, marking the affected Target as OFFLINE and persisting this change in FDB. Updated routing information is broadcast to all nodes, and Fuse retries I/O along the repaired path.
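The detection and retry flow can be sketched as a small simulation. The class and function names are hypothetical, the `alive_nodes` set stands in for a real network probe, the `SERVING` state name is used for illustration, and persistence to FDB and the broadcast step are reduced to a version counter.

```python
class Mgmtd:
    def __init__(self, chains, alive_nodes):
        self.chains = chains            # chain id -> list of {"node", "state"}
        self.alive_nodes = alive_nodes  # stand-in for an actual probe
        self.version = 1                # bumped whenever routing changes

    def report_timeout(self, node):
        # Probe the suspected node; leave routing untouched on a false alarm.
        if node in self.alive_nodes:
            return self.version
        changed = False
        for targets in self.chains.values():
            for target in targets:
                if target["node"] == node and target["state"] != "OFFLINE":
                    target["state"] = "OFFLINE"
                    changed = True
        if changed:
            self.version += 1
        return self.version

    def routing(self, chain_id):
        return [t["node"] for t in self.chains[chain_id] if t["state"] == "SERVING"]

def write_with_retry(mgmtd, chain_id, send, max_retries=2):
    for _ in range(max_retries + 1):
        route = mgmtd.routing(chain_id)
        if not route:
            return False
        # Writes go target by target; stop at the first one that times out.
        failed = next((node for node in route if not send(node)), None)
        if failed is None:
            return True
        mgmtd.report_timeout(failed)
    return False
```

Once the failed Target is marked OFFLINE, the next attempt uses the shortened chain and completes without waiting for the dead node.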

Figure 10: I/O Path After Recovery

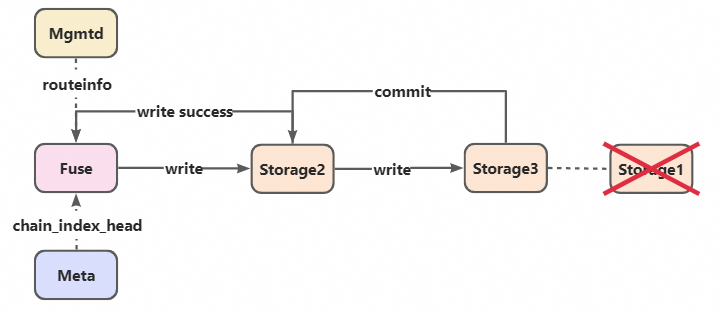

After computation, KVCache data resides in HBM. Writing to 3FS traditionally requires copying data from HBM to host memory, followed by calling USRBIO or POSIX interfaces. This HBM-to-memory copy often becomes a bottleneck, requiring pinned memory and dedicated kernels—adding extra GPU and CPU overhead.

We enabled GDR support in 3FS USRBIO to eliminate redundant memory copies and reduce CPU/GPU burden. In 3FS USRBIO, user processes and the 3FS Fuse Daemon share two memory-mapped files: iov (data buffer) and ior (command buffer). We modified iov to store HBM IPC addresses instead of raw data, enabling the Fuse Daemon to directly read/write the same physical HBM region.

Additionally, due to IBVerbs API constraints, we also shared user-side Protection Domain (PD) and Memory Region (MR) contexts with the Fuse Daemon, completing end-to-end GDR support.

Figure 11: 3FS GDR Data Interaction Design

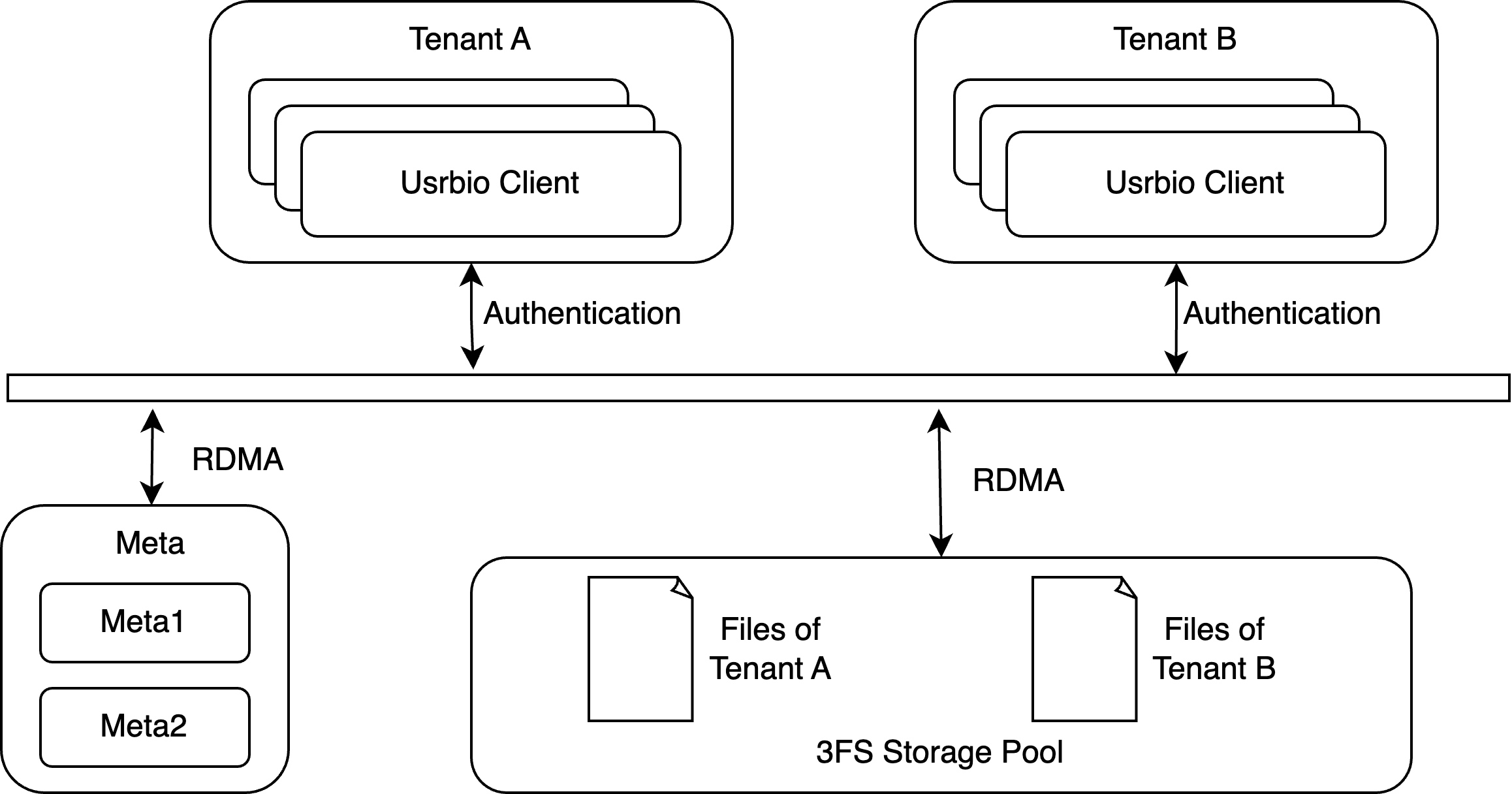

We implemented tenant permission management with access control isolation: tenants can only view, access, and modify their own files. Tenant-level authentication was added to both Meta and I/O access paths to prevent unauthorized access.

Figure 12: 3FS Multi-Tenant Isolation

Since its open-sourcing, 3FS has attracted widespread industry attention for its outstanding performance and high availability, particularly among AI startups. However, 3FS comprises multiple interdependent components, and traditional deployment involves manual configuration and coordination. Failures require heavy manual intervention, resulting in complex deployment, high maintenance cost, and unstable operation. How to help enterprises deploy, manage, and maintain 3FS efficiently became a central concern in our development.

To address this, we open-sourced kvc-3fs-operator, supporting flexible deployment across self-managed physical K8s clusters, Alibaba Cloud ACK, ASI, and more. Built on Kubernetes, the 3FS Operator delivers declarative APIs and automated operations, enabling one-click deployment and self-healing, greatly improving deployment efficiency and system stability.

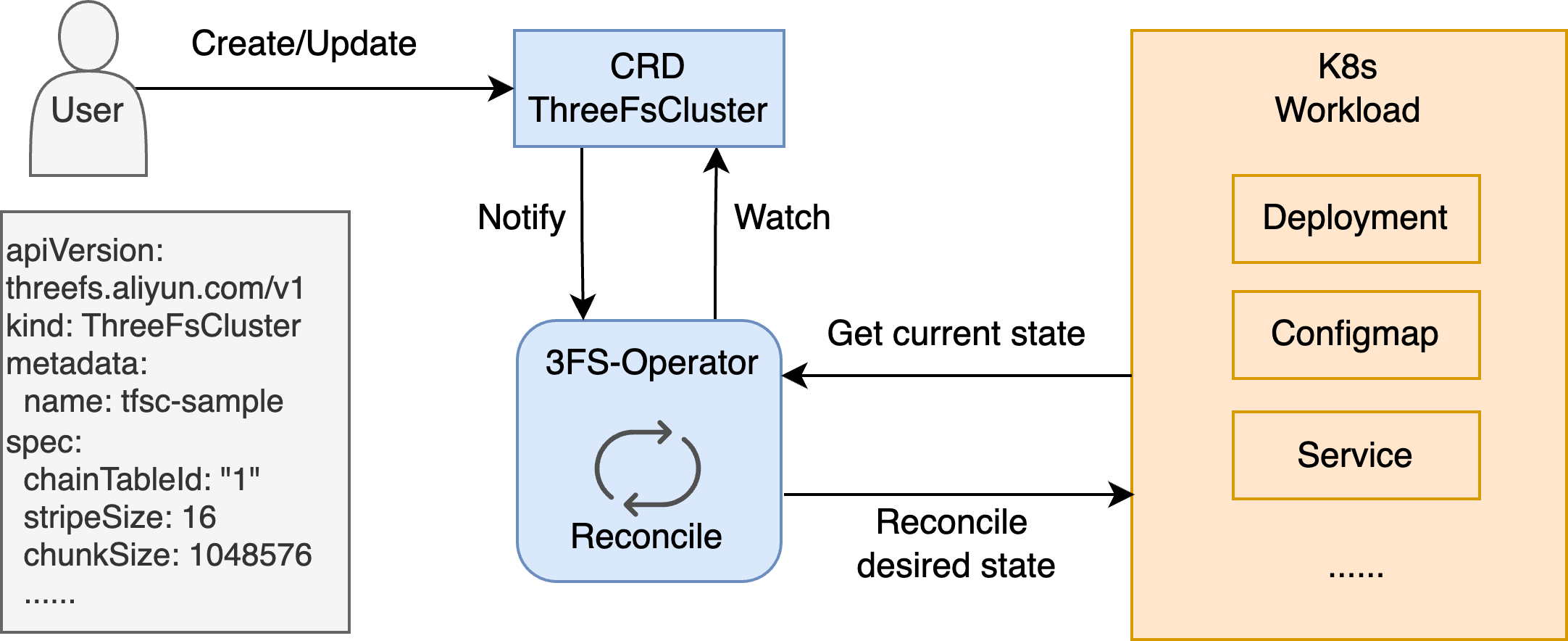

Kubernetes Operator extends Kubernetes through Custom Resource Definitions (CRDs) and Controllers, automating deployment and lifecycle management of complex systems.

In 3FS Operator, we define a CRD named ThreeFsCluster to describe 3FS cluster configuration and desired state. The Operator listens for changes to this CRD and drives a reconcile loop, continuously comparing actual vs. desired state and automatically executing actions (e.g., creating workloads, adjusting configs, handling faults) to ensure the system remains in the intended state.
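The reconcile pattern itself is small. The sketch below reduces desired and actual state to replica counts per component; the field names are hypothetical and are not the real `ThreeFsCluster` schema.

```python
def reconcile(desired, actual):
    """Compare desired vs. actual replica counts and return the actions needed."""
    actions = []
    for component, want in sorted(desired.items()):
        have = actual.get(component, 0)
        if have < want:
            actions.append(("create", component, want - have))
        elif have > want:
            actions.append(("delete", component, have - want))
    return actions

def apply_actions(actions, actual):
    """Apply actions to a copy of the actual state (stand-in for API calls)."""
    state = dict(actual)
    for verb, component, count in actions:
        delta = count if verb == "create" else -count
        state[component] = state.get(component, 0) + delta
    return state
```

A controller runs this comparison repeatedly: once the actual state matches the desired state, `reconcile` returns an empty list and nothing further happens until the next change.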

Figure 13: 3FS Operator Architecture

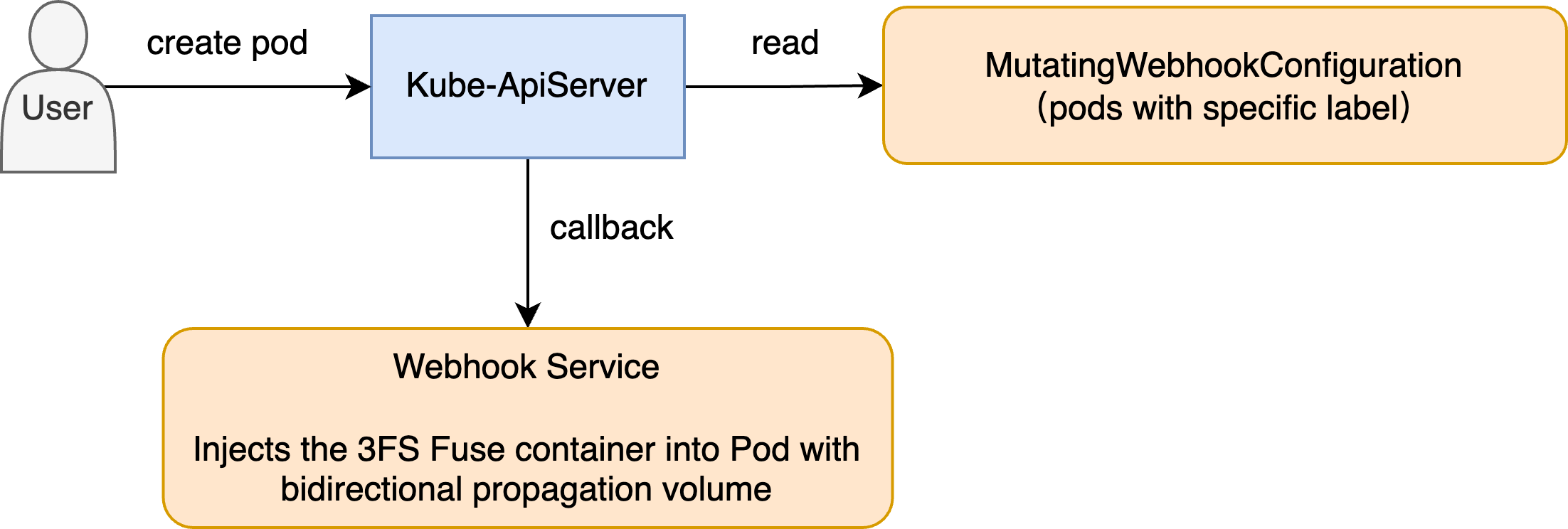

Kubernetes Webhook enables custom admission control via HTTP callbacks with the API server.

As shown below, we registered a Mutating Admission Webhook in 3FS Operator. When a user creates a Pod with specific labels, the webhook triggers and automatically injects a 3FS Fuse container as a Sidecar.

With bidirectional mount propagation volumes, the 3FS mount path inside the Sidecar is propagated to the main application container. The entire injection and mounting process is transparent. When the Pod starts, users can directly access 3FS storage in configured directories without modifying application code.
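A mutating webhook answers each admission request with an `AdmissionReview` whose response may carry a base64-encoded JSON Patch. The sketch below shows the shape of such a response for sidecar injection. The label key, image name, volume name, and mount path are hypothetical, and the patch entries that add the shared volume and mount it into the application container are omitted for brevity.

```python
import base64
import json

INJECT_LABEL = "threefs.example.com/inject-fuse"          # hypothetical label key
FUSE_IMAGE = "registry.example.com/threefs-fuse:latest"   # hypothetical image

def build_admission_response(review):
    request = review["request"]
    pod = request["object"]
    response = {"uid": request["uid"], "allowed": True}

    labels = pod.get("metadata", {}).get("labels", {})
    if labels.get(INJECT_LABEL) == "true":
        sidecar = {
            "name": "threefs-fuse",
            "image": FUSE_IMAGE,
            # Bidirectional propagation is only permitted for privileged containers.
            "securityContext": {"privileged": True},
            "volumeMounts": [{
                "name": "threefs-mount",
                "mountPath": "/mnt/3fs",
                "mountPropagation": "Bidirectional",
            }],
        }
        patch = [{"op": "add", "path": "/spec/containers/-", "value": sidecar}]
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(json.dumps(patch).encode()).decode()

    return {"apiVersion": "admission.k8s.io/v1",
            "kind": "AdmissionReview",
            "response": response}
```

Pods without the label pass through unchanged, so the webhook can be registered cluster-wide without affecting unrelated workloads.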

Figure 14: Dynamic Injection of 3FS Fuse

The 3FS Operator continuously monitors component health. When a component fails, it records the first failure time. If the failure persists beyond a user-defined threshold, the component is marked as failed, and the Operator creates a new replica to replace it, enabling automatic recovery and ensuring high availability.
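The threshold logic can be sketched as a small tracker that remembers when each component first failed. The class name is hypothetical, and time is passed in explicitly so the behavior is easy to follow.

```python
class HealthTracker:
    def __init__(self, threshold_seconds):
        self.threshold = threshold_seconds
        self.first_failure = {}  # component -> time of first observed failure

    def observe(self, component, healthy, now):
        """Record one health check; return True when a replacement is due."""
        if healthy:
            # A recovery clears the failure window.
            self.first_failure.pop(component, None)
            return False
        started = self.first_failure.setdefault(component, now)
        return now - started >= self.threshold
```

Resetting the timer on recovery means a brief blip, such as a slow heartbeat, does not trigger a replacement; only a failure that persists for the full threshold does.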

The Operator supports dynamic storage expansion. Users can define expansion increments based on workload changes. Combined with our optimized data distribution logic during file creation, data is evenly spread across newly added nodes.

By updating component image versions, rolling upgrades of the 3FS cluster are supported. The Operator replaces old images process-by-process, preserving sufficient healthy replicas at all times. This minimizes impact on cluster availability during upgrades, enhancing stability and operational efficiency.

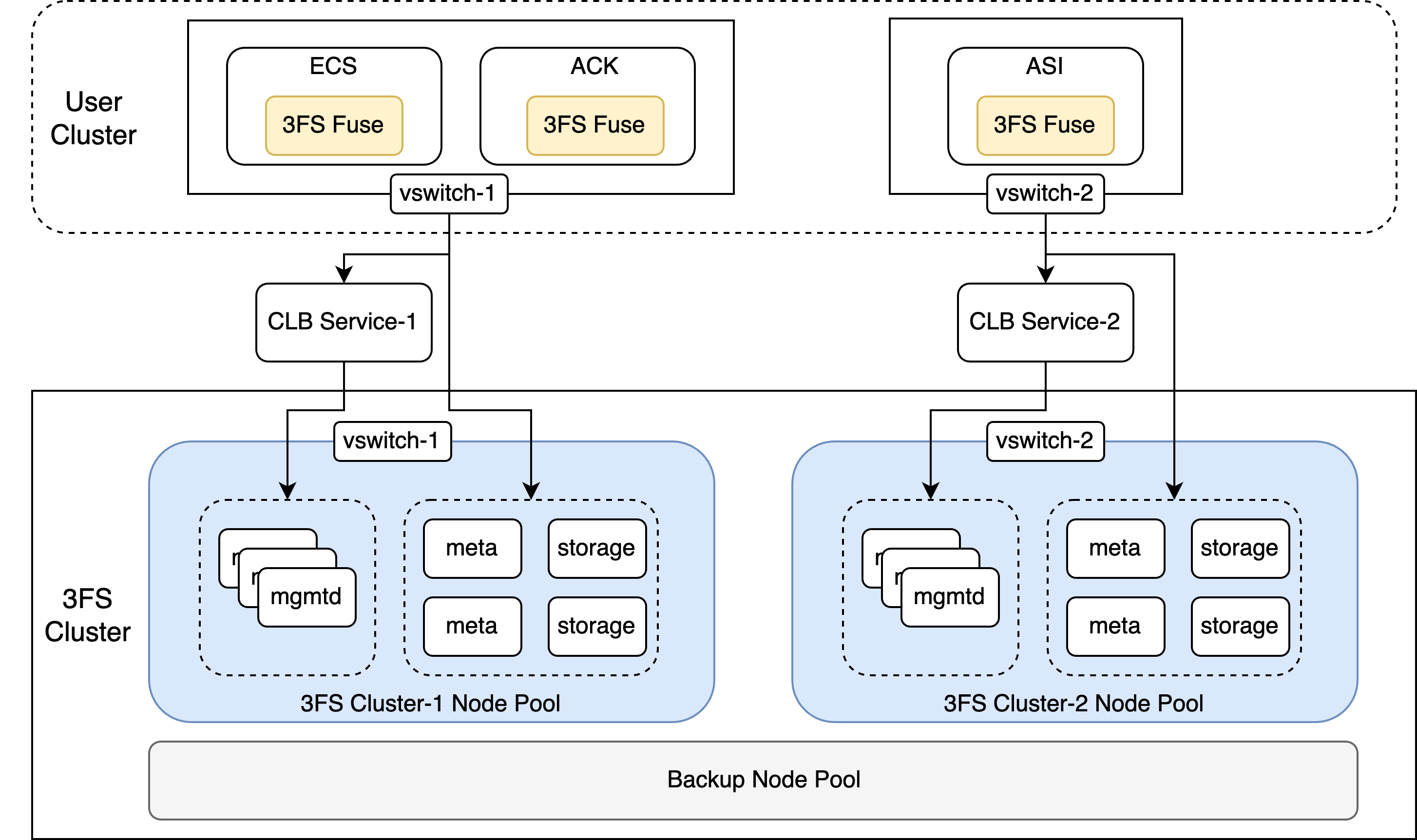

Multiple 3FS clusters can be deployed within a single Kubernetes cluster. Leveraging Alibaba Cloud network isolation features (e.g., VPC subnets, security groups), inter-cluster isolation is achieved, improving resource utilization and reducing infrastructure costs while ensuring data security and service isolation for different workloads. A secondary standby node pool is pre-provisioned to dynamically handle failover and expansion, ensuring high availability. Users can also automate 3FS client deployment via ECS, ACK, or ASI for cross-cluster data access and resource scheduling.

Figure 15: Multi-Instance 3FS Deployment

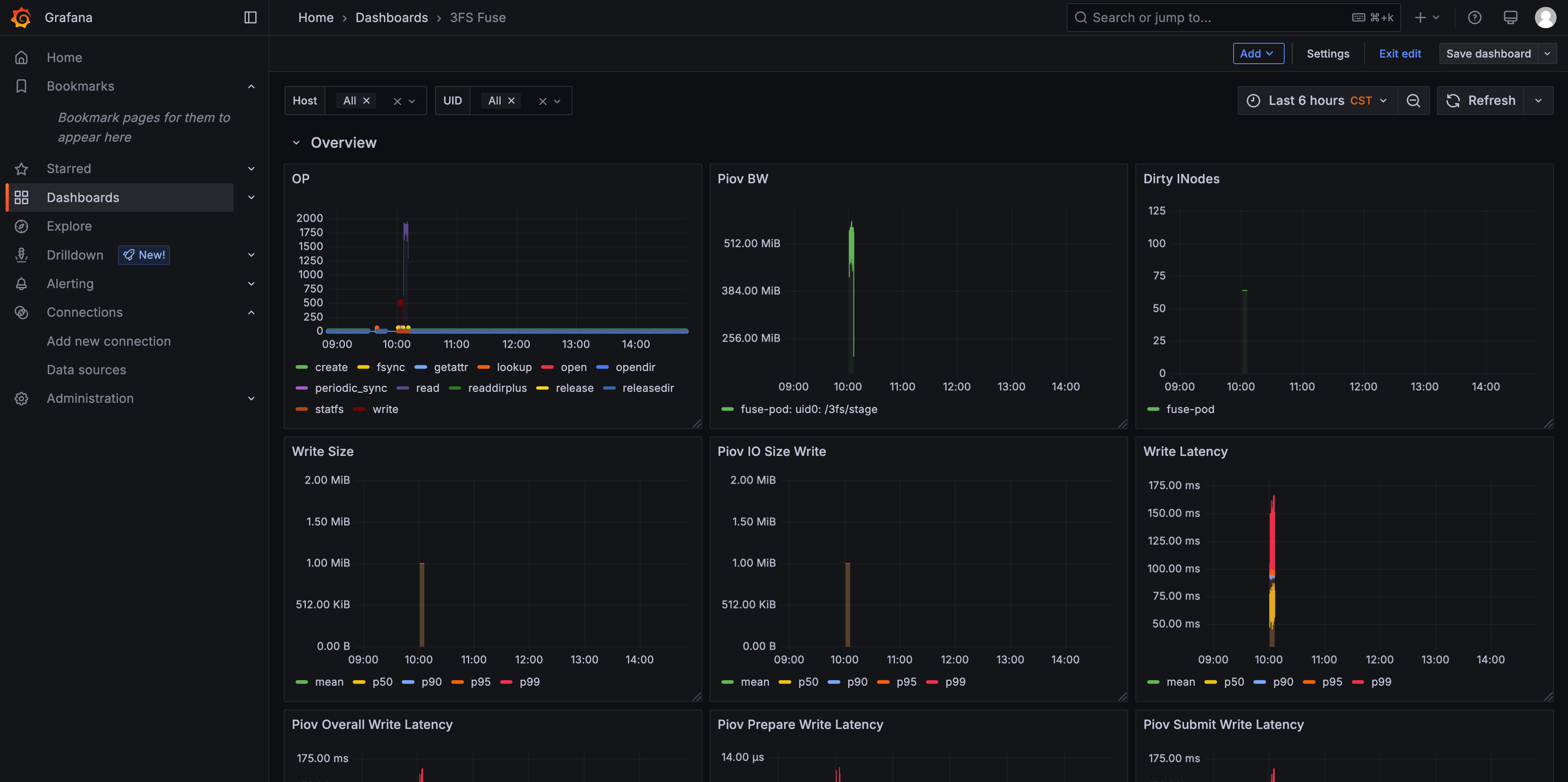

3FS uses ClickHouse as a time-series database to store collected metrics. Through Grafana’s ClickHouse plugin, we built a unified visual monitoring dashboard, centrally displaying key performance indicators of control and data path components, enabling efficient identification of performance bottlenecks via segmented I/O latency analysis.

Figure 16: 3FS Monitoring Dashboard

During early integration testing between SGLang and 3FS USRBIO, limited client concurrency (single-threaded requests) and small I/O submission granularity resulted in only ~200MB/s read/write bandwidth—far below the EGS environment’s 160Gb/s (~20GB/s) theoretical limit.

To break this bottleneck, we applied the following optimizations:

● Multi-threading: Increased client concurrency, with each thread maintaining private IOR/IOV structures to avoid contention.

● I/O Aggregation: Increased page size and I/O queue depth, leveraging batch submission to improve RDMA bandwidth utilization.

Post-optimization, SGLang achieved near-theoretical peak bandwidth of ~20GB/s, validating the feasibility and effectiveness of the 3FS USRBIO integration.
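The two optimizations can be illustrated with ordinary file I/O. This sketch is only an analogy: it uses `os.pread` on a regular file (Unix only) rather than the USRBIO API, and the per-batch file descriptor stands in for the private IOR/IOV structures each thread keeps. The function name and parameters are hypothetical.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def read_pages(path, offsets, page_size, num_threads):
    """Read fixed-size pages in parallel, one batch of offsets per worker."""

    def read_batch(batch):
        # Each worker opens its own descriptor, so no state is shared.
        fd = os.open(path, os.O_RDONLY)
        try:
            return [(offset, os.pread(fd, page_size, offset)) for offset in batch]
        finally:
            os.close(fd)

    # Split the offsets into one batch per worker (submitted as a unit).
    batches = [offsets[i::num_threads] for i in range(num_threads)]
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        results = list(pool.map(read_batch, batches))
    return dict(pair for batch in results for pair in batch)
```

A larger `page_size` reduces the number of requests per byte transferred, and more workers keep more requests in flight, which are the two levers described above.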

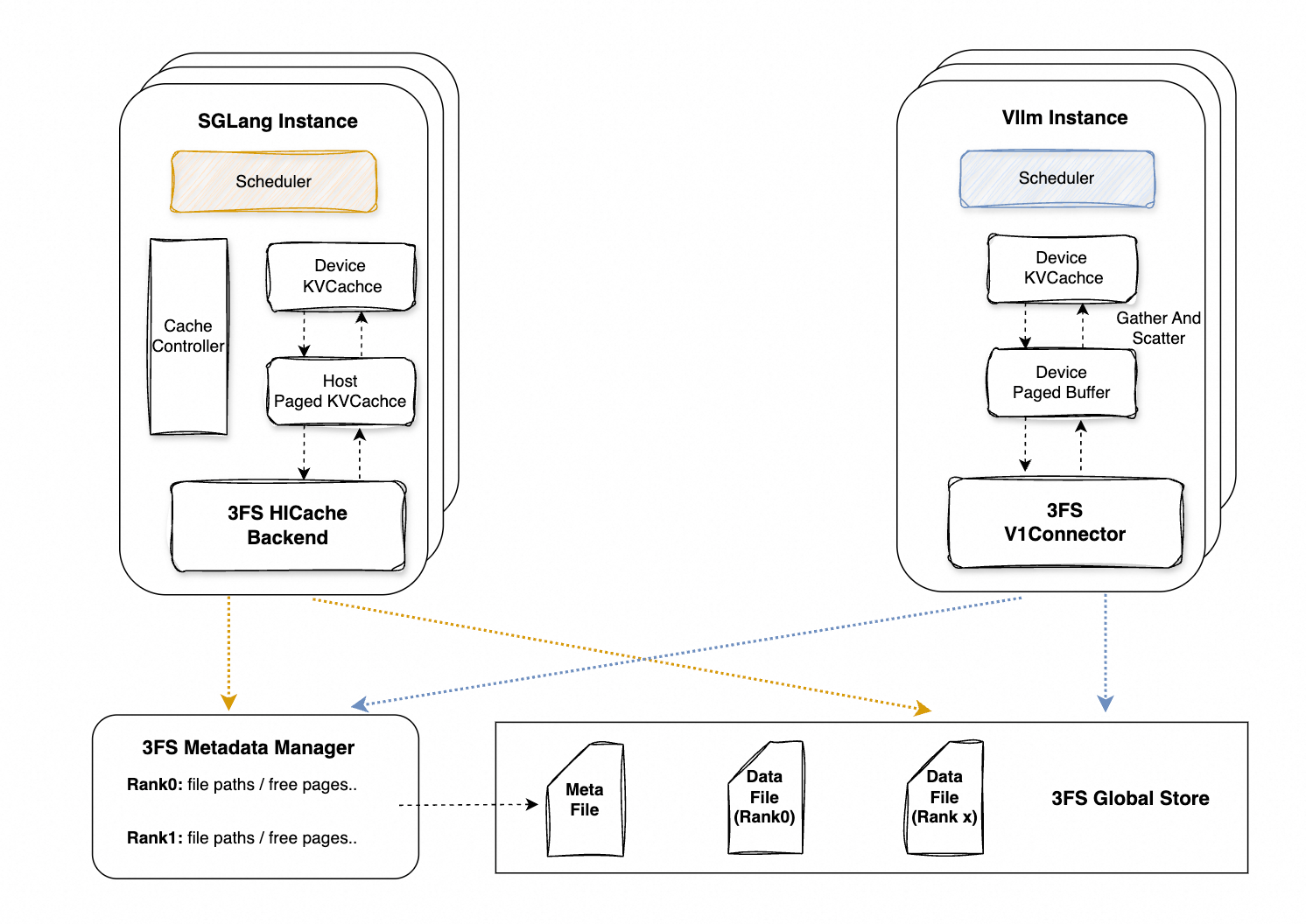

We have completed 3FS KVStore integration in both SGLang and vLLM communities. Key design elements include:

● 3FS Hicache Backend & V1 Connector: High-throughput, low-latency access to 3FS storage using the high-performance libusrbio I/O library.

● Global Metadata Manager: Provides unified metadata management for distributed filesystems, enabling efficient organization, querying, and coordination for global KVCache consistency.

● 3FS Global Storage: High-performance distributed storage engine.

Figure 17: Inference Engine Integration with 3FS

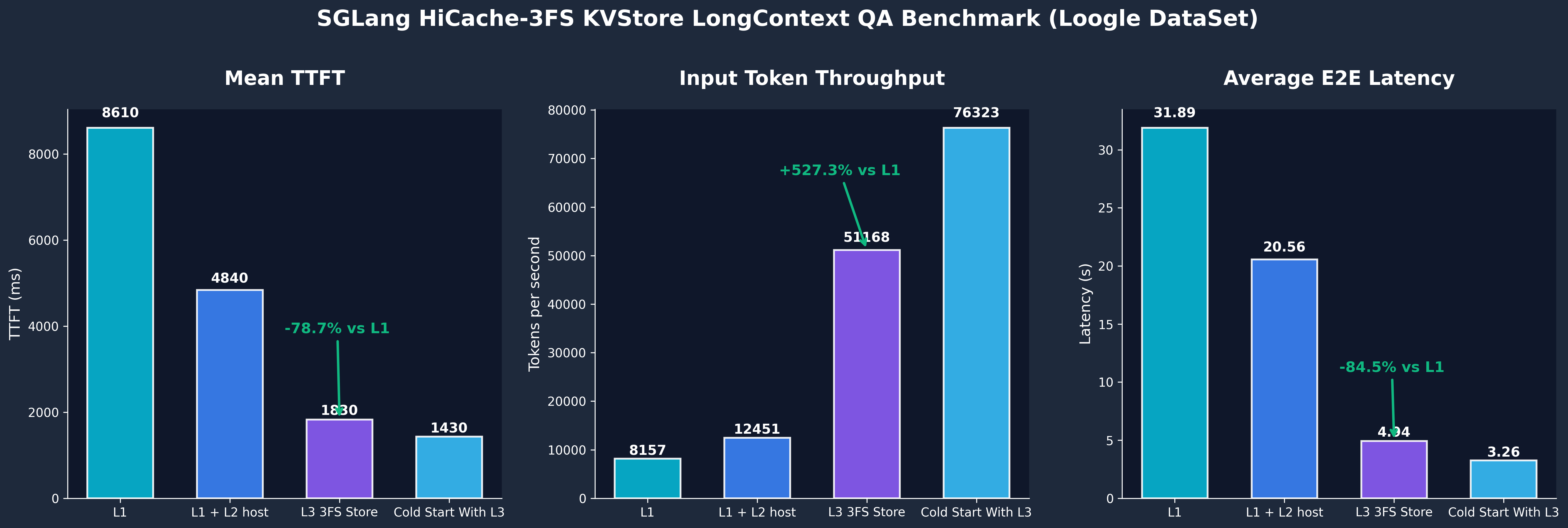

We evaluated SGLang performance on a long-context QA dataset:

● Dataset: Loogle Dataset, ~100 system prompts, 21KB prefix, 20 queries/group

● Model: DeepSeek R1, H20-3e × 8 GPUs

● Test Scenarios: L1, L1 + L2 host, L1 + L2 + L3 3FS, 3FS cold-start acceleration

● Performance Gains:

● For details: https://lmsys.org/blog/2025-09-10-sglang-hicache/

Figure 18: SGLang + 3FS Performance Data

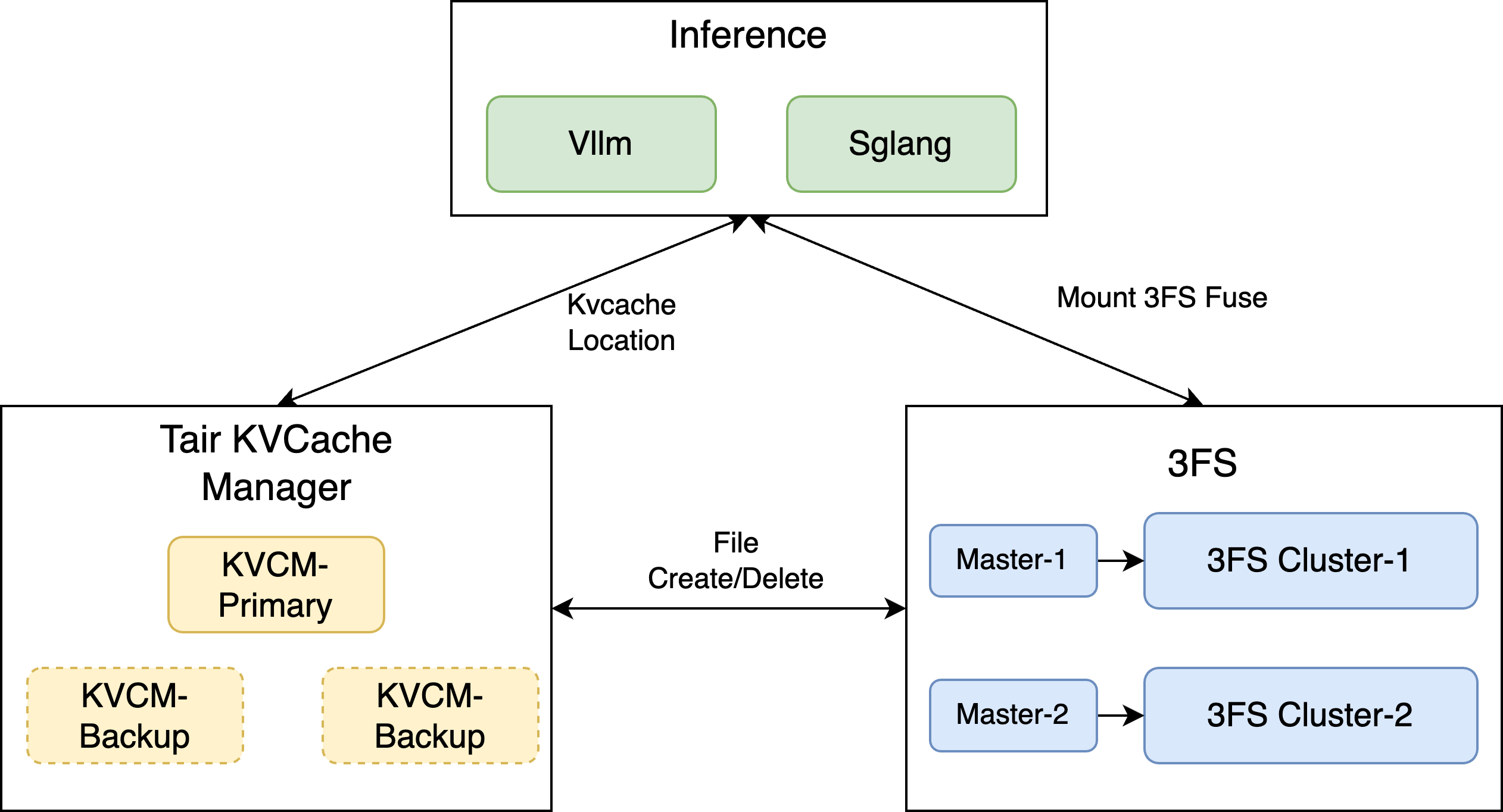

Tair KVCache Manager (KVCM) is an Alibaba-developed global external KVCache management component, providing efficient and reliable KVCache services for inference workloads.

KVCM exposes service interfaces via HTTP/gRPC, supporting integration with various storage backends including 3FS, KV stores, filesystems, memory pools, and block storage. It abstracts heterogeneous storage systems behind a unified interface layer, significantly reducing integration complexity and development cost.

KVCM plays a pivotal role in system architecture. As shown below, it manages dynamic mapping between KVCache entries and physical file locations in 3FS. Inference services query KVCM for data location and access data via mounted 3FS Fuse, eliminating direct dependency on low-level storage management.

Figure 19: KVCM Integration with 3FS

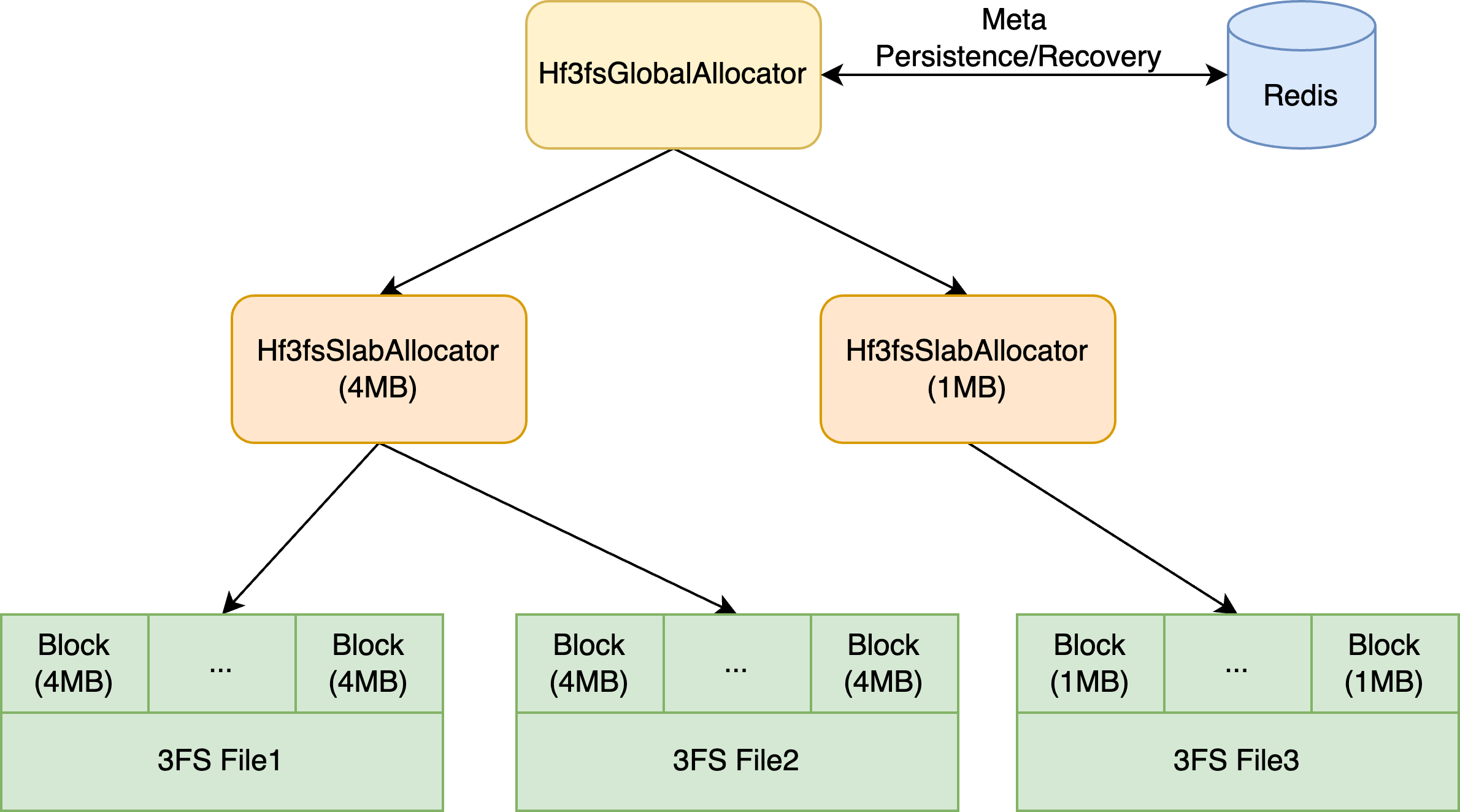

However, current KVCM-3FS integration relies on 3FS Fuse mounting and strict RDMA environment requirements, limiting deployment flexibility in cross-cluster scenarios. Additionally, traditional allocation via massive small files, while simple, incurs frequent metadata operations that overload the backend metadata service, degrading throughput and creating bottlenecks. To address these, we propose:

To enhance deployment flexibility in non-RDMA environments, we introduced a lightweight 3FS Master component in the 3FS Operator. This stateless service, deployed in multiple instances, exposes POSIX-compatible create/delete semantics via HTTP, effectively decoupling KVCM from 3FS Fuse.

To reduce metadata overhead, we adopted an approach that combines large files with a Slab allocator using configurable block sizes:

● Clients open fewer large files and cache metadata, minimizing backend metadata queries.

● The Slab allocator enables fine-grained memory management, reducing frequency of file creation/deletion and easing pressure on metadata services, thereby improving overall throughput.
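A minimal sketch of the large-file plus Slab idea follows. The class and method names are hypothetical and do not reflect the KVCM implementation; the point is that placing or evicting a cache entry only updates an in-memory index and never creates or deletes a file.

```python
class SlabAllocator:
    """Fixed-size blocks carved out of a small number of large files."""

    def __init__(self, file_ids, file_size, block_size):
        if file_size % block_size:
            raise ValueError("file_size must be a multiple of block_size")
        self.block_size = block_size
        # Every (file, offset) slot is pre-computed; no per-entry file exists.
        self.free = [(file_id, offset)
                     for file_id in file_ids
                     for offset in range(0, file_size, block_size)]
        self.free.reverse()  # pop() then hands out the lowest offsets first
        self.index = {}      # cache key -> (file_id, offset)

    def put(self, key):
        if key in self.index:
            return self.index[key]
        if not self.free:
            raise RuntimeError("no free blocks")
        self.index[key] = self.free.pop()
        return self.index[key]

    def locate(self, key):
        return self.index.get(key)

    def evict(self, key):
        # The block returns to the free list; the backing file is untouched.
        self.free.append(self.index.pop(key))
```

With two 4 MiB files and 1 MiB blocks, for example, eight entries can be placed and recycled while the metadata service only ever sees two files.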

Figure 20: KVCM 3FS Allocator Design

More design details, use cases, and configuration guides for KVCM will be covered in upcoming technical articles.

Looking ahead, 3FS will continue innovating around the high-performance storage needs of KVCache, focusing on intelligent operations, enterprise-grade multi-tenancy, native KV semantics, extreme high availability, and software-hardware co-design.

● Enhance 3FS Operator CRD Capabilities and Deployment Flexibility: Expand CRD configuration options for finer-grained resource management and intelligent adaptation to varying workloads and performance demands. Broaden Operator functionality to support diverse customer deployment scenarios.

● QoS Mechanisms: Build robust multi-tenant support with dynamic resource scheduling and performance guarantees based on tenant priority and quotas, preventing resource contention and interference—delivering enterprise-grade security and stability in cloud-native shared storage.

● Client Architecture Upgrade & Native KV Semantics: Redesign client architecture to natively support key-value operations. Provide simple, high-performance KV APIs to lower development complexity and lay a solid foundation for building high-performance KVCache systems.

● Strengthen Product Capabilities & Improve Self-Healing: Introduce mechanisms like dynamic replica migration and reconstruction to minimize business impact during failures, maximizing continuity and delivering enterprise-grade high availability.

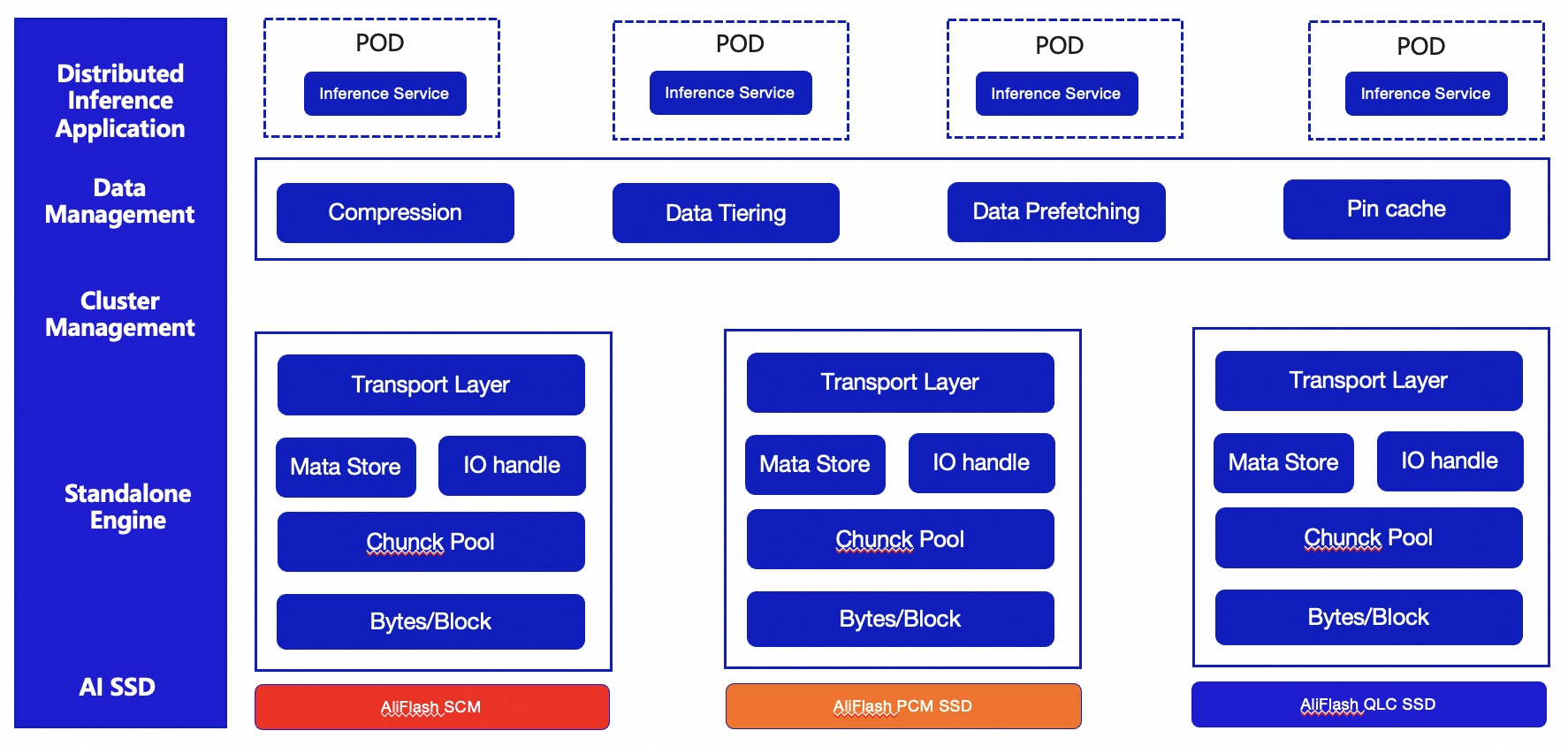

● Persistence Engine Optimization & Hardware Co-Design: Continuously optimize the persistence engine, deeply integrating with Alibaba Cloud’s proprietary hardware such as AliFlash SSD and AliSCM. Through tight software-hardware co-optimization, unlock maximum performance potential and deliver cutting-edge storage experiences.

As AI application demands grow in diversity and complexity, Alibaba Cloud’s self-developed AI SSD and Panjiu storage server platform will continue iterative optimization to precisely match dynamic AI workloads. Our goal is to build end-to-end, integrated infrastructure centered on KVCache, leveraging software-hardware co-design to establish an efficient, intelligent AI foundation.

● Storage Hardware Optimization: Align storage performance (low latency, high IOPS, high bandwidth) with GPU compute power and network bandwidth.

● Compute-Storage Co-Optimization: Enable GPU-direct storage access to reduce latency, eliminate memory wall effects, and optimize KV access patterns.

● Storage System Optimization: Design data placement strategies around KVCache workloads, integrate dedicated storage engines, and minimize filesystem overhead.

● Value-Added Capability Enhancement: Strengthen data compression, tiered eviction, data-aware task scheduling, prefetching, and hot-data pinning/unpinning.

Figure 21: End-to-End Solution for KVCache
