Internet applications have evolved from a monolithic architecture to a service-oriented architecture (SOA) and then to microservices. As microservices are continuously upgraded, stable interoperation between services becomes increasingly important because distributed systems must cope with network latency and instability. A microservices design must ensure service idempotence and control concurrency through distributed locks. The stable operation of a microservices system also depends on caching, degradation, and throttling.

Continuous business development results in an increasing number of subsystems divided by different business domains. Each business system requires components for caching, throttling, distributed locks, and idempotence. The distributed-tools component, currently not open-source, includes the basic components required by distributed systems.

The distributed-tools component provides two easy-to-use Spring Boot starters based on Tair and Redis, respectively. To use a Redis cache, add the following configuration to application.properties:

redis.extend.hostName=127.0.0.1
redis.extend.port=6379
redis.extend.password=pwdcode
redis.extend.timeout=10000
redis.idempotent.enabled=true

The following sections describe how to use caching, throttling, distributed locks, and idempotence.

Caching is widely used along the access path of an application request. A user request passes through the caches of the browser, reverse proxy, web server, application, and database. Caching can be understood as trading space for time: data is kept in memory or on disk to avoid repeating time-consuming operations, such as database queries and certain data calculations. Caching also reduces server workloads. Some queries or calculations are frequent but not individually time-consuming, yet together they may occupy a large proportion of a server's processing time and cause serious queuing that exceeds the server's processing capacity.

The distributed-tools component provides the CacheEngine interface, whose implementation varies depending on whether Tair or Redis is used. The CacheEngine interface is defined as follows.

public String get(String key);

/**
 * Gets the object stored under the specified key. Also returns null if an exception occurs.
 *
 * @param key
 * @param clz
 * @return
 */
public <T> T get(String key, Class<T> clz);

/**
 * Stores cached data, ignoring the expiration time.
 *
 * @param key
 * @param value
 * @return
 */
public <T extends Serializable> boolean put(String key, T value);

/**
 * Stores cached data.
 *
 * @param key
 * @param value
 * @param expiredTime
 * @param unit
 * @return
 */
public <T extends Serializable> boolean put(String key, T value, int expiredTime, TimeUnit unit);

/**
 * Deletes cached data by key.
 *
 * @param key
 * @return
 */
public boolean invalid(String key);

The get methods query cached data by key, the put methods store cached data, and the invalid method deletes cached data.
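To illustrate how these methods are typically combined, the following is a minimal cache-aside sketch. It does not use the real CacheEngine: InMemoryCacheEngine is a hypothetical in-memory stand-in that copies the method signatures shown above, and getOrLoad is a helper name introduced only for this example.

```java
import java.io.Serializable;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

class CacheAsideDemo {

    /** Hypothetical in-memory stand-in for CacheEngine; a real engine would call Tair or Redis. */
    static final class InMemoryCacheEngine {
        private static final class Entry {
            final Object value;
            final long expiresAtMillis; // Long.MAX_VALUE means the entry never expires

            Entry(Object value, long expiresAtMillis) {
                this.value = value;
                this.expiresAtMillis = expiresAtMillis;
            }
        }

        private final Map<String, Entry> store = new ConcurrentHashMap<>();

        public <T> T get(String key, Class<T> clz) {
            Entry entry = store.get(key);
            if (entry == null) {
                return null;
            }
            if (System.currentTimeMillis() >= entry.expiresAtMillis) {
                store.remove(key, entry); // lazily evict the expired entry
                return null;
            }
            return clz.isInstance(entry.value) ? clz.cast(entry.value) : null;
        }

        public <T extends Serializable> boolean put(String key, T value) {
            store.put(key, new Entry(value, Long.MAX_VALUE));
            return true;
        }

        public <T extends Serializable> boolean put(String key, T value, int expiredTime, TimeUnit unit) {
            store.put(key, new Entry(value, System.currentTimeMillis() + unit.toMillis(expiredTime)));
            return true;
        }

        public boolean invalid(String key) {
            return store.remove(key) != null;
        }
    }

    /** Cache-aside read: return the cached value, or load it, cache it, and return it. */
    static <T extends Serializable> T getOrLoad(InMemoryCacheEngine cache, String key, Class<T> clz,
                                                 Supplier<T> loader, int ttl, TimeUnit unit) {
        T cached = cache.get(key, clz);
        if (cached != null) {
            return cached;
        }
        T loaded = loader.get(); // the expensive operation, for example a database query
        if (loaded != null) {
            cache.put(key, loaded, ttl, unit);
        }
        return loaded;
    }

    public static void main(String[] args) {
        InMemoryCacheEngine cache = new InMemoryCacheEngine();
        Supplier<String> slowQuery = () -> "user-42-profile";
        System.out.println(getOrLoad(cache, "user:42", String.class, slowQuery, 10, TimeUnit.MINUTES));
        System.out.println(getOrLoad(cache, "user:42", String.class, slowQuery, 10, TimeUnit.MINUTES));
        cache.invalid("user:42"); // invalidate after the underlying data changes
    }
}
```

The second call to getOrLoad is served from the cache, so the loader runs only once until the entry expires or is invalidated.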

Throttling is required to ensure the high availability of distributed systems, especially in scenarios with instantaneous high concurrency, such as flash sales. The throttling mechanism protects a system by limiting the rate of concurrent requests or the number of requests within a specified time window. When the specified rate is reached, one of the following may occur: (1) denial of service, in which case an error page or a message indicating no resources appears; (2) queuing or waiting, which occurs in scenarios such as flash sales, commenting, or ordering; (3) degradation, in which case default data is returned, such as showing inventory as available on the product details page.

Common throttling algorithms include fixed window, sliding window, leaky bucket, and token bucket. Currently, the distributed-tools component only implements the fixed window algorithm based on counters. The implementation is as follows.

/**
 * Increments a counter that has the specified expiration time. Increments by 1 each time by default. Not a sliding window.
 *
 * @param key        key of the counter to increment
 * @param expireTime expiration time
 * @param unit       time unit
 * @return
 */
public long incrCount(String key, int expireTime, TimeUnit unit);

/**
 * Increments a counter that has the specified expiration time. Returns true if the count exceeds
 * the maximum value rateThreshold within the time window, and false otherwise.
 *
 * @param key           throttling key
 * @param rateThreshold throttling threshold
 * @param expireTime    fixed window duration
 * @param unit          time unit
 * @return
 */
public boolean rateLimit(final String key, final int rateThreshold, int expireTime, TimeUnit unit);

Throttling is implemented by the rateLimit method of CacheEngine. The expireTime parameter sets the duration of a fixed window, not a sliding window. The distributed-tools component also provides the RateLimitTemplate template, whose execute method applies throttling directly.
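As an illustration of the counter-based fixed window algorithm, here is a minimal single-process sketch. It is not the distributed-tools implementation, which keeps its counters in Tair or Redis. FixedWindowLimiter and its injectable clock are names assumed for this example; only the incrCount and rateLimit signatures mirror the declarations above.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.TimeUnit;
import java.util.function.LongSupplier;

class FixedWindowLimiter {

    /** Immutable snapshot of one counting window. */
    private static final class Window {
        final long expiresAtMillis;
        final long count;

        Window(long expiresAtMillis, long count) {
            this.expiresAtMillis = expiresAtMillis;
            this.count = count;
        }
    }

    private final Map<String, Window> windows = new ConcurrentHashMap<>();
    private final LongSupplier clockMillis; // injectable so that tests can control time

    FixedWindowLimiter(LongSupplier clockMillis) {
        this.clockMillis = clockMillis;
    }

    /** Increments the counter for key; the first increment opens a window of the given length. */
    long incrCount(String key, int expireTime, TimeUnit unit) {
        long now = clockMillis.getAsLong();
        long windowMillis = unit.toMillis(expireTime);
        Window updated = windows.compute(key, (k, old) ->
                (old == null || now >= old.expiresAtMillis)
                        ? new Window(now + windowMillis, 1)                // window expired: start over
                        : new Window(old.expiresAtMillis, old.count + 1)); // same window: count up
        return updated.count;
    }

    /** Returns true when the request exceeds rateThreshold within the current window. */
    boolean rateLimit(String key, int rateThreshold, int expireTime, TimeUnit unit) {
        return incrCount(key, expireTime, unit) > rateThreshold;
    }

    public static void main(String[] args) {
        FixedWindowLimiter limiter = new FixedWindowLimiter(System::currentTimeMillis);
        for (int i = 1; i <= 4; i++) {
            boolean limited = limiter.rateLimit("sku:1001", 3, 1, TimeUnit.SECONDS);
            System.out.println("request " + i + " limited=" + limited);
        }
    }
}
```

A known weakness of fixed windows is that a burst straddling a window boundary can let through up to twice the threshold in a short interval, which is what sliding window algorithms address.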

/**
 * @param limitKey       throttling key
 * @param resultSupplier callback method
 * @param rateThreshold  throttling threshold
 * @param limitTime      time range of the limit
 * @param blockDuration  blocking duration
 * @param unit           time unit
 * @param errCodeEnum    error code to use when the request is throttled
 * @return
 */
public <T> T execute(String limitKey, Supplier<T> resultSupplier, long rateThreshold, long limitTime,
        long blockDuration, TimeUnit unit, ErrCodeEnum errCodeEnum) {
    boolean blocked = tryAcquire(limitKey, rateThreshold, limitTime, blockDuration, unit);
    if (errCodeEnum != null) {
        AssertUtils.assertTrue(blocked, errCodeEnum);
    } else {
        AssertUtils.assertTrue(blocked, ExceptionEnumType.ACQUIRE_LOCK_FAIL);
    }
    return resultSupplier.get();
}

The distributed-tools component also provides the @RateLimit annotation, which is defined as follows.

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@Documented
public @interface RateLimit {

    /**
     * Throttling key
     */
    String limitKey();

    /**
     * Number of allowed accesses. The default value is MAX_VALUE.
     */
    long limitCount() default Long.MAX_VALUE;

    /**
     * Time range
     */
    long timeRange();

    /**
     * Blocking duration
     */
    long blockDuration();

    /**
     * Time unit. The default unit is seconds.
     */
    TimeUnit timeUnit() default TimeUnit.SECONDS;
}

The following code implements throttling based on the annotation:

@RateLimit(limitKey = "#key", limitCount = 5, timeRange = 2, blockDuration = 3, timeUnit = TimeUnit.MINUTES)
public String testLimit2(String key) {
    ..........
    return key;
}

You can add the preceding annotation to any method within the interception range of Spring AOP to enable throttling. In this sample, the key parameter serves as the throttling key: if more than five requests with the same key arrive within two minutes, further requests may be blocked for three minutes.
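The combination of a counting window and a blocking period can be sketched as follows. This is only one plausible reading of the limitCount, timeRange, and blockDuration semantics described above, not the actual tryAcquire logic of distributed-tools. BlockingRateLimiter, its State class, and the injectable clock are hypothetical, and the state lives in local memory rather than in Tair or Redis.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.TimeUnit;
import java.util.function.LongSupplier;

class BlockingRateLimiter {

    /** Per-key state: the current counting window and an optional blocking period. */
    private static final class State {
        long windowEndsAtMillis;  // end of the current counting window
        long count;               // requests seen in the current window
        long blockedUntilMillis;  // requests are rejected until this instant
    }

    private final Map<String, State> states = new HashMap<>();
    private final LongSupplier clockMillis; // injectable so that tests can control time

    BlockingRateLimiter(LongSupplier clockMillis) {
        this.clockMillis = clockMillis;
    }

    /** Returns true if the request may proceed, false if it is throttled. */
    synchronized boolean tryAcquire(String limitKey, long rateThreshold, long limitTime,
                                    long blockDuration, TimeUnit unit) {
        long now = clockMillis.getAsLong();
        State state = states.computeIfAbsent(limitKey, k -> new State());
        if (now < state.blockedUntilMillis) {
            return false; // still inside the blocking period
        }
        if (now >= state.windowEndsAtMillis) {
            state.windowEndsAtMillis = now + unit.toMillis(limitTime); // open a new window
            state.count = 0;
        }
        state.count++;
        if (state.count > rateThreshold) {
            state.blockedUntilMillis = now + unit.toMillis(blockDuration);
            state.windowEndsAtMillis = 0; // start a fresh window once the block ends
            return false;
        }
        return true;
    }

    public static void main(String[] args) {
        BlockingRateLimiter limiter = new BlockingRateLimiter(System::currentTimeMillis);
        for (int i = 1; i <= 6; i++) {
            boolean allowed = limiter.tryAcquire("user:42", 5, 2, 3, TimeUnit.MINUTES);
            System.out.println("request " + i + " allowed=" + allowed);
        }
    }
}
```

With the values from the sample annotation, the sixth request inside a two-minute window is rejected, and every later request for the same key is rejected until three minutes have passed.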

Within a single Java process, the synchronized keyword and ReentrantLock control concurrent access to resources in a multithreaded environment. A distributed system needs a cross-process lock, called a distributed lock, which is used more often than local locks in such systems. A distributed lock stores its lock flag in shared storage, such as Redis or Tair, rather than in memory allocated to a single process. Locks based on databases or files follow the same principle as locks on a single server: they only require a mutually exclusive flag. A distributed lock is more complex than a lock within a single-server process because the distributed system must account for network latency and instability.
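The idea of a lock flag kept in shared storage can be sketched with an in-memory map standing in for Redis or Tair. SharedLockStore and its methods are hypothetical names for this illustration. With Redis, acquisition is commonly done with SET key value NX PX milliseconds and release with an atomic compare-and-delete; this sketch imitates both locally and is not reentrant.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

class SharedLockStore {

    /** The lock flag: who holds the lock and when the flag expires. */
    private static final class Flag {
        final String owner;
        final long expiresAtMillis;

        Flag(String owner, long expiresAtMillis) {
            this.owner = owner;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    // Stand-in for storage shared by all processes, such as Redis or Tair.
    private final Map<String, Flag> flags = new ConcurrentHashMap<>();

    /** Sets the flag only if it is absent or expired; the expiry guards against crashed holders. */
    boolean tryLock(String lockKey, String owner, long ttlMillis) {
        long now = System.currentTimeMillis();
        Flag mine = new Flag(owner, now + ttlMillis);
        Flag current = flags.compute(lockKey,
                (k, old) -> (old == null || now >= old.expiresAtMillis) ? mine : old);
        return current == mine;
    }

    /** Removes the flag only if the caller still owns it, so one holder cannot release another's lock. */
    boolean unlock(String lockKey, String owner) {
        Flag current = flags.get(lockKey);
        return current != null && current.owner.equals(owner) && flags.remove(lockKey, current);
    }

    public static void main(String[] args) {
        SharedLockStore store = new SharedLockStore();
        String workerA = UUID.randomUUID().toString(); // unique token per lock holder
        String workerB = UUID.randomUUID().toString();
        System.out.println("A acquires: " + store.tryLock("order:1001", workerA, 30_000));
        System.out.println("B acquires: " + store.tryLock("order:1001", workerB, 30_000));
        System.out.println("B releases: " + store.unlock("order:1001", workerB));
        System.out.println("A releases: " + store.unlock("order:1001", workerA));
    }
}
```

The owner token and the expiration time are what distinguish this from a local mutex: the token prevents a slow holder from deleting a lock that has since passed to another process, and the expiration time bounds how long a crashed holder can block others.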

The distributed-tools component provides a distributed lock with the following features:

lock, tryLock, and tryLock(long timeOut).

The distributed lock provided by distributed-tools is easy to use. The provided lock template, DistributedLockTemplate, can be called directly through the following static method:

/**

* 分布式锁处理模板执行器

*

* @param lockKey 分布式锁key

* @param resultSupplier 分布式锁处理回调

* @param waitTime 锁等待时间

* @param unit 时间单位

* @param errCodeEnum 指定特殊错误码返回

* @return

*/

public static <T> T execute(String lockKey, Supplier<T> resultSupplier, long waitTime, TimeUnit unit,

ErrCodeEnum errCodeEnum) {

AssertUtils.assertTrue(StringUtils.isNotBlank(lockKey), ExceptionEnumType.PARAMETER_ILLEGALL);

boolean locked = false;

Lock lock = DistributedReentrantLock.newLock(lockKey);

try {

locked = waitTime > 0 ? lock.tryLock(waitTime, unit) : lock.tryLock();

} catch (InterruptedException e) {

throw new RuntimeException(String.format("lock error,lockResource:%s", lockKey), e);

}

if (errCodeEnum != null) {

AssertUtils.assertTrue(locked, errCodeEnum);

} else {

AssertUtils.assertTrue(locked, ExceptionEnumType.ACQUIRE_LOCK_FAIL);

}

try {

return resultSupplier.get();

} finally {

lock.unlock();

}

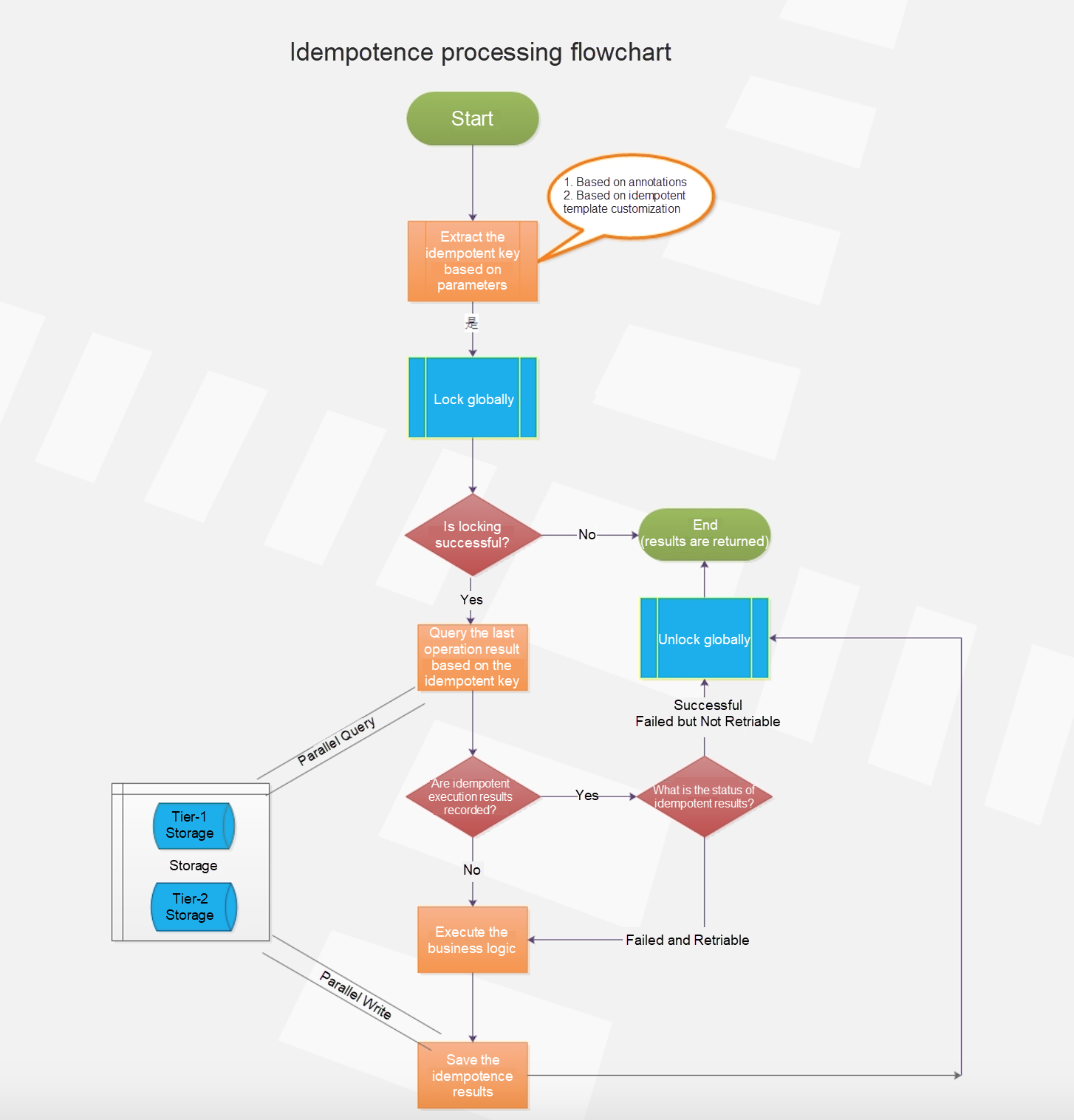

}Idempotence design is essential for distributed system design. A complex microservices system includes multiple subsystem services, each of which calls another service through remote procedure calls or RESTful APIs. Distributed systems are prone to network latency or interruption. This causes the call layer of a service to trigger a retry. Unacceptable system losses occur when calls to APIs of this type encounter exceptions and are retried. This must be avoided.

Idempotence is divided into two dimensions.

Many operations are performed in an actual system, and each operation is supposed to have the same effect or return the same result each time it is performed. Some common scenarios are as follows.

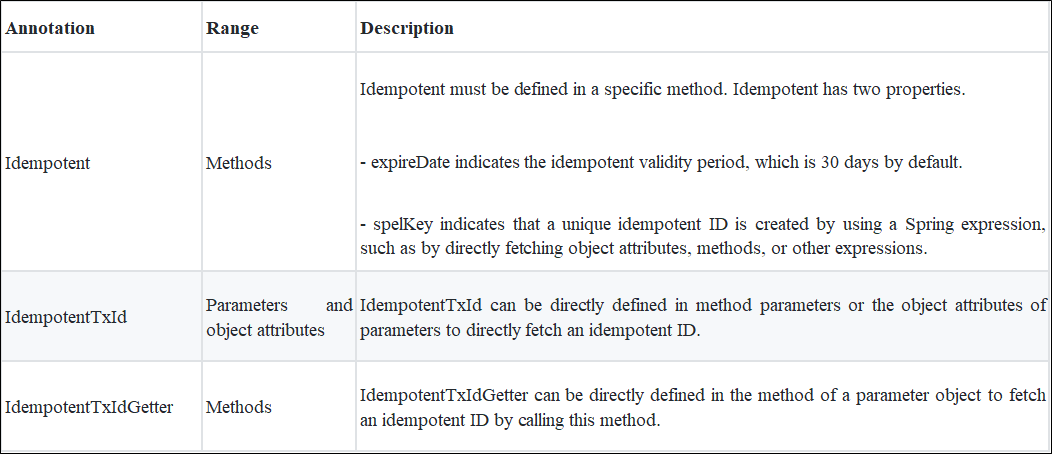

We need to understand the design of the idempotence feature provided by distributed-tools before using it.

IdempotentTxId and IdempotentTxIdGetter annotations to any method that requires idempotence. If you process services only by using an idempotent template, you must configure a unique idempotent key.

IdempotentService through Spring dependencies by customizing tier-1 and tier-2 storage. We recommend that you use Tair MDB as tier-1 storage and LDB or TableStore as tier-2 storage. Tier-1 storage ensures high performance, and tier-2 storage ensures high reliability. For a parallel query on tier-2 storage, the result of the fastest idempotent query is returned. Data is written to tier-2 storage in parallel to improve performance.

Writes and queries are isolated in tier-1 and tier-2 storage. An error in tier-1 storage has no impact on service implementation.

Next, let's discuss how to use the idempotence provided by distributed-tools. The following table lists the idempotent annotations provided by common-api.

The idempotent interceptor fetches an idempotent ID in the following order of priority:

1. Checks whether the spelKey property of the Idempotent annotation is null. If it is not null, the idempotent interceptor creates an idempotent ID based on the Spring expression defined by spelKey.

2. Checks whether the IdempotentTxId annotation is included in the parameters. If it is, the idempotent interceptor directly fetches the parameter values to create an idempotent ID.

3. Checks whether the IdempotentTxId annotation is included in the attributes of the parameter objects. If it is, the idempotent interceptor fetches the object attributes to create an idempotent ID.

4. Checks whether the IdempotentTxIdGetter annotation is defined. If it is, the idempotent interceptor creates an idempotent ID through reflection.

Sample code:

@Idempotent(spelKey = "#request.requestId", firstLevelExpireDate = 7, secondLevelExpireDate = 30)
public void execute(BizFlowRequest request) {
    ..................
}

This sample code fetches requestId from the request and uses it as the idempotent key. The validity period is 7 days in tier-1 storage and 30 days in tier-2 storage.

In addition to idempotent annotations, distributed-tools provides the IdempotentTemplate universal idempotent template. Before using this template, set tair.idempotent.enabled or redis.idempotent.enabled to true (the default value is false) and specify tier-1 storage to store idempotent results. Idempotent result storage is an optional setting.

Use IdempotentTemplate as follows.

/**
 * Idempotent template processor
 *
 * @param request                  idempotent request information
 * @param executeSupplier          callback function that performs the idempotent processing
 * @param resultPreprocessConsumer callback function for the idempotent result; can preprocess the result
 * @param ifResultNeedIdempotence  provide this parameter when the result, in addition to exceptions,
 *                                 must be used to determine whether idempotence is required
 * @return
 */
public R execute(IdempotentRequest<P> request, Supplier<R> executeSupplier,
        Consumer<IdempotentResult<P, R>> resultPreprocessConsumer, Predicate<R> ifResultNeedIdempotence) {
    ........
}

request: The assembled idempotent parameter, IdempotentRequest. You can set the request parameter and a unique idempotent ID on it.

executeSupplier: The business logic to run idempotently, such as payment or ordering logic, passed as a JDK 8 Supplier functional interface callback.

resultPreprocessConsumer: The callback for processing the returned idempotent result. If this parameter is null, the default processing is used: if the operation is successful or the returned error code does not support retry, the result is returned directly; if the returned error code supports retry, the operation is retried. If this parameter is not null, you can set ResultStatus to apply special handling to the returned idempotent result. ResultStatus indicates one of three states: (1) successful, (2) failed and retriable, and (3) failed but not retriable.
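The interplay between an idempotent ID and the three result states can be sketched as follows. This is a simplified, single-process illustration under stated assumptions, not the IdempotentTemplate implementation: IdempotentExecutor, Result, and the in-memory map are hypothetical, whereas the real template persists results in tier-1 and tier-2 storage.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

class IdempotentExecutor<R> {

    enum ResultStatus { SUCCESS, FAILED_RETRIABLE, FAILED_NOT_RETRIABLE }

    /** Outcome of one execution together with its status. */
    static final class Result<V> {
        final ResultStatus status;
        final V value;

        Result(ResultStatus status, V value) {
            this.status = status;
            this.value = value;
        }
    }

    // Stand-in for idempotent result storage, keyed by idempotent ID.
    private final Map<String, Result<R>> store = new HashMap<>();

    /**
     * Runs the action at most once per idempotent ID for final outcomes.
     * Retriable failures are not recorded, so a later retry runs the action again.
     * The method is synchronized for simplicity; across processes a distributed lock would be needed.
     */
    synchronized Result<R> execute(String idempotentId, Supplier<Result<R>> action) {
        Result<R> recorded = store.get(idempotentId);
        if (recorded != null) {
            return recorded; // duplicate call: replay the recorded result
        }
        Result<R> result = action.get();
        if (result.status != ResultStatus.FAILED_RETRIABLE) {
            store.put(idempotentId, result);
        }
        return result;
    }

    public static void main(String[] args) {
        IdempotentExecutor<String> executor = new IdempotentExecutor<>();
        Supplier<Result<String>> pay = () -> {
            System.out.println("charging the customer");
            return new Result<String>(ResultStatus.SUCCESS, "paid");
        };
        System.out.println(executor.execute("req-1", pay).value);
        System.out.println(executor.execute("req-1", pay).value); // retried call, no second charge
    }
}
```

In this sketch a retried request with the same idempotent ID receives the recorded result instead of triggering the payment again, while a failure marked as retriable leaves no record and can be attempted again.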
