By Bao Kai

As the Internet and AI develop rapidly, the need to store and efficiently search unstructured text data in modern application systems has become more urgent than ever. Full-text indexing is a key technology for text search and plays a critical role in fuzzy match, content search, and multimodal search.

Traditional relational databases such as MySQL and PostgreSQL also offer full-text indexing. However, because they typically do not adopt mainstream inverted-index technology, they commonly have the following shortcomings:

To address these limits, many systems choose to deploy a dedicated full-text search engine such as Elasticsearch or OpenSearch, and synchronize data to the engine for efficient text search. However, using an external search engine introduces several notable disadvantages:

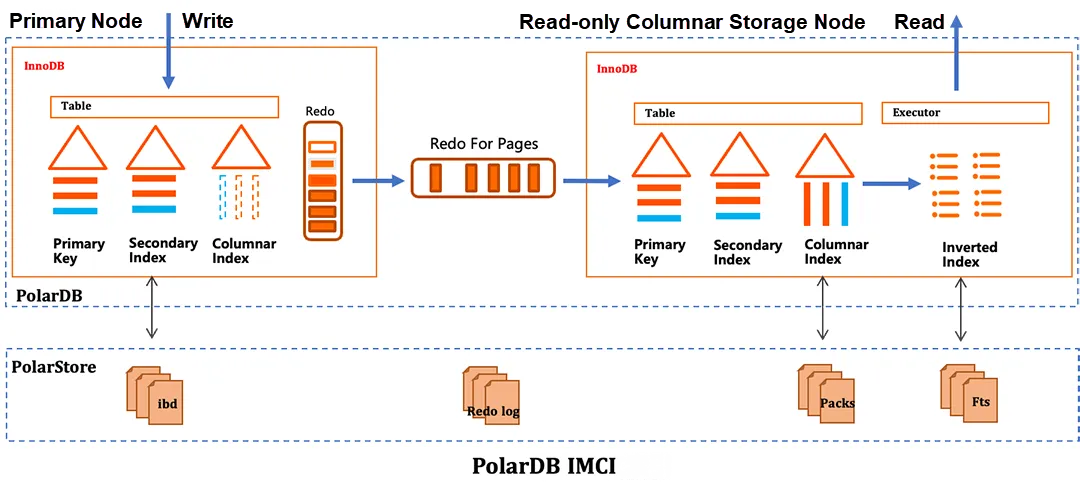

To address the preceding issues, PolarDB In-Memory Column Index (IMCI) introduces an entirely new approach: database-native columnar full-text indexing. This design significantly improves query performance in fuzzy search scenarios such as e-commerce product search, log analysis, document and knowledge base retrieval, and user behavior and profile analysis. Together with built-in vector indexes, IMCI also enables multimodal hybrid search, positioning PolarDB as a unified data platform that supports online transactions, real-time analysis, full-text search, and vector search.

The columnar inverted-index technology in PolarDB, part of the Alibaba Cloud ApsaraDB ecosystem, primarily incorporates tokenizers, the Single-Pass In-Memory Indexing (SPIMI) algorithm, the Roaring Bitmap (RBM) compression algorithm for postings lists, and a Finite State Transducer (FST)-based dictionary implementation.

PolarDB's columnar full-text indexing supports multiple tokenizers, including token, ngram, jieba, ik, and json. It also provides tools such as dbms_imci.fts_tokenize for testing tokenization effects and managing custom dictionaries.

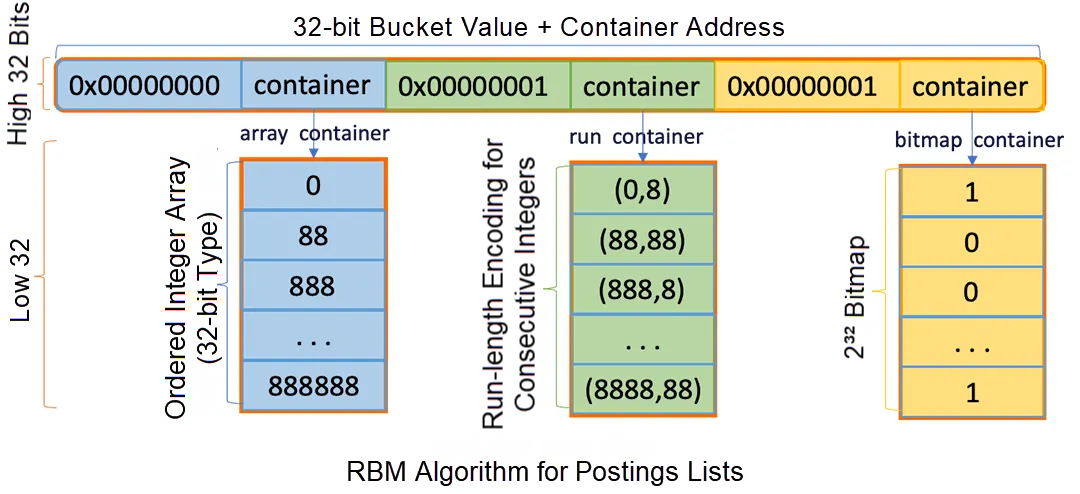

A postings list is essentially a collection of document IDs. Its core technical challenges lie in achieving extreme compression and efficient computation. In PolarDB's columnar full-text indexing, a postings list stores the set of row IDs in all columnar indexes containing a given term, along with optional term frequency and document frequency information. Each term corresponds to a postings list.

The compression of postings lists primarily relies on the Frame of Reference (FOR) algorithm and the RBM algorithm. Bitmap-based RBM is more commonly adopted. For example, it is widely used in systems such as Elasticsearch. As a high-performance compressed bitmap data structure, RBM uses different types of containers to efficiently handle both sparse and dense numeric arrays, achieving strong compression while enabling extremely fast set operations. For more information, see Better bitmap performance with Roaring Bitmaps.

The RBM implementation for PolarDB's columnar inverted-index technology is extended from CRoaring [1] and customized for different cardinalities. It supports on-disk space compaction, single instruction multiple data (SIMD) acceleration during computation, and value-range pre-filtering and iterator optimizations during query processing.

[1] https://github.com/RoaringBitmap/CRoaring

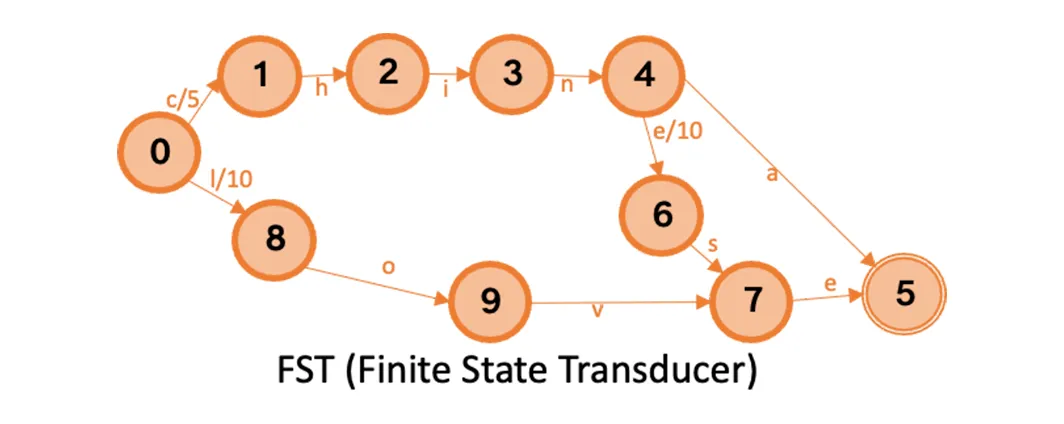

The core idea of an inverted index is to use a dictionary to quickly find the postings list corresponding to a given term. Common dictionary implementations include trie structures, B+ trees, and FSTs.

The FST algorithm provides a good balance between time and space complexity, and is widely used in systems such as Elasticsearch. For more information about the FST algorithm, see Direct Construction of Minimal Acyclic Subsequential Transducers.

PolarDB IMCI's full-text indexing uses an FST-based dictionary to record the relative offsets from terms to their postings lists. For example, given the document list "I am Chinese, and I love China", the terms China, Chinese, and love, together with their corresponding offsets (5, 10, and 15), are inserted in order to build the dictionary.

Inverted index building generally involves tokenizing documents and generating postings lists and dictionaries. Common building algorithms include the block-based Blocked Sort-Based Indexing (BSBI) algorithm and the in-memory, hash-based

SPIMI algorithm. The SPIMI algorithm uses a "Single pass + Memory partitioning + Block-level flush + Final merge" approach to efficiently build high-quality inverted indexes under limited memory. It avoids global sorting and is considered a classic and efficient indexing method in information retrieval systems. For more information, see Efficient Single-Pass Index Construction for Text Databases. PolarDB's columnar full-text indexing adopts the SPIMI algorithm to build its inverted indexes.

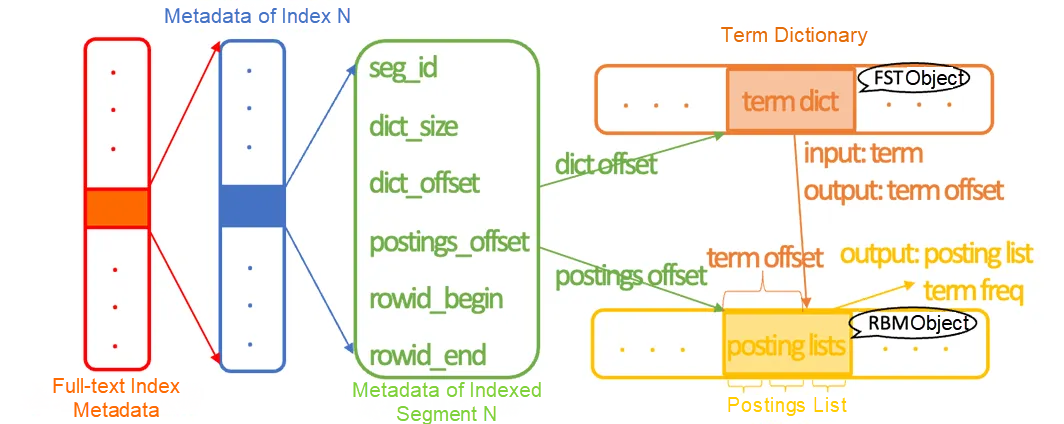

During full-text index building, PolarDB continuously scans the columnar data of a column. For each row, the text is tokenized to obtain a set of terms. Each term and its corresponding row ID are then inserted into an in-memory hash table, while memory usage is tracked. If the size of a segment exceeds a predefined threshold, the hash table is flushed to generate a dictionary and postings lists. This process repeats until all columnar data is processed. The process of building a dictionary from the hash table begins by sorting the hash table by term. All postings lists in the hash table are then written to disks, and their respective offsets are recorded. Next, each term and its corresponding offset are sequentially inserted into an FST to build the dictionary. Finally, the dictionary is compressed and flushed to disks, and the segment metadata is updated to reflect the completed block.

Thanks to its local building approach and mark-delete design, PolarDB's columnar full-text indexing avoids full index rebuilding under frequent updates, requiring only incremental building. During updates, entries are simply marked as deleted, which generates minimal overhead and does not impact write performance. In addition, background asynchronous merge operations further generate more compact inverted indexes.

PolarDB's columnar full-text indexing supports the match function, as well as accelerated LIKE and JSON operations.

Queries can be run by using two methods: the FtsTableScan operator and the match expression. The columnar optimizer estimates the filter rate based on collected statistics and selects the appropriate method. If the operator method is selected, the optimizer rewrites the match function into an FtsTableScan + Filter operator.

PolarDB's columnar inverted indexes are organized into inverted segments, each consisting of independent metadata, a dictionary, and a set of postings lists. The metadata is kept resident in memory, whereas the dictionary is cached by using the least recently used (LRU) policy. Retrieval operates at the segment level: During a query, each inverted segment is traversed, and the starting address of the dictionary is obtained from the metadata. The dictionary data is then read to construct a dictionary object, which is used to look up the given term. If the term is found, its relative postings list address is retrieved, and by adding the postings list's starting address, the complete postings list can be accessed.

ESRally is the official benchmarking and stress-testing tool provided by Elasticsearch. The details of the http_logs dataset can be found in the Elasticsearch Rally Hub [2], containing approximately 247 million rows.

[2] https://github.com/elastic/rally-tracks/blob/master/http_logs/README.md

To evaluate retrieval performance, tests were conducted by using tokens ranging from high-frequency to low-frequency. Under hot-data conditions and with single-threaded execution, PolarDB's match function demonstrated orders-of-magnitude improvements in query performance, and its performance was largely unaffected by data temperature such as hot or cold.

| Query | High-frequency HTTP (247 million lines) | Relatively high-frequency French (15 million lines) | Relatively low-frequency POST (100 thousand lines) | Low-frequency Mozilla (100 lines) |

|---|---|---|---|---|

| like | 1 minutes 21.96 seconds | 1 minutes 18.44 seconds | 1 minutes 24.59 seconds | 1 minutes 31.19 seconds |

| smid like | 25.46 seconds | 22.80 seconds | 21.98 seconds | 21.60 seconds |

| match (self-developed FTS library) | 2.43 seconds | 0.25 seconds | 0.01 seconds | 0.00 seconds |

| Doris match_any (CLucene library) | 3.49 seconds | 0.24 seconds | 0.03 seconds | 0.03 seconds |

Full-text indexing is widely used in business applications such as log analysis, product search, content search, and multimodal hybrid search.

The primary requirement of log analysis systems is to quickly find logs containing specific keywords at low costs. Current industry solutions generally fall into two categories:

PolarDB's columnar full-text indexing, combined with the cold-data archiving feature of partitioned tables, addresses these challenges effectively. Its core technology, inverted index, enables the rapid retrieval of logs containing matching keywords from massive datasets. The cold-data archiving feature allows older partitioned logs to be stored in low-cost OSS buckets without affecting query performance, because recent logs are queried in most cases.

In summary, PolarDB's columnar full-text indexing, together with hot-and-cold data separation, fully meets the demands of log analysis while delivering several-fold improvements in overall cost-effectiveness.

E-commerce platforms often need to search for products by name or description and quickly return a list of items containing the keyword, ranked by relevance. Common approaches include:

This function is simple and easy to use, but offers poor performance and does not support relevance-based ranking. PolarDB's full-text indexing supports BM25 relevance scoring.

It is suitable for small, quasi-static datasets, but struggles to handle frequent updates and high-concurrency queries. It also lacks proper Chinese tokenizers.

PolarDB's full-text indexing supports multiple mainstream Chinese tokenizers and customizable dictionaries, allowing better matching for customized product data.

Such search engines can handle basic product searches, but add architectural complexity and costs. They are limited in handling complex multi-table joins and large-scale aggregate queries.

PolarDB's built-in columnar full-text indexing simplifies the architecture and accelerates queries by using columnar indexes.

Content search is a traditional application of full-text indexing, primarily used for article keyword retrieval, customer feedback and comment analysis, and user behavior and profile analysis.

PolarDB's columnar full-text indexing provides diverse Chinese tokenizers and uses columnar concurrent execution, offering an efficient and precise solution for large-scale content search.

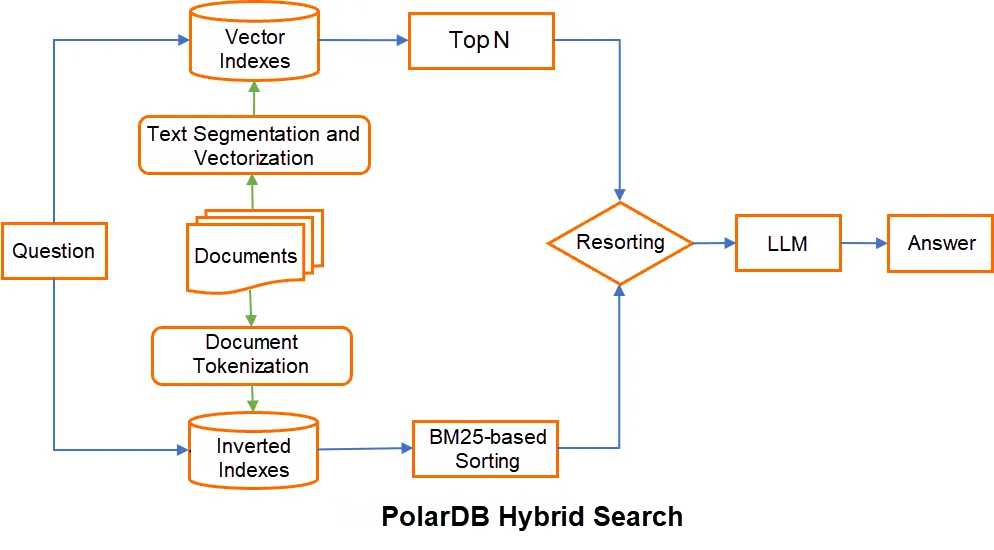

Hybrid search represents a new application of full-text indexing, combining full-text indexes and vector indexes to search documents.

Full-text indexing relies on keywords to accurately match relevant documents and rank them by relevance scores. It excels at exact match and is particularly effective when users know exactly what they are looking for.

Vector embeddings capture semantic similarity, where vectors close in the embedding space represent semantically similar content. Vector indexes use these embeddings to find documents with similar meanings, making them well-suited for natural language queries, similarity search, clustering, and retrieval-augmented generation (RAG) applications.

Hybrid search integrates full-text search and semantic search, combining the strengths of both approaches to produce a unified ranking. This enhances search accuracy and robustness and is especially valuable in RAG applications.

PolarDB's hybrid search, based on its built-in full-text indexes and vector indexes, enables precise and efficient recall in RAG systems that involve specialized knowledge domains.

PolarDB's columnar full-text indexing employs mainstream inverted-index technology along with a mark-delete design, enabling efficient text search with relevance scoring. PolarDB's built-in full-text indexing simplifies system architecture, and when combined with columnar real-time analysis, it effectively handles the high-concurrency retrieval of large text datasets under frequent updates, providing enterprises with a cost-effective platform for large-scale data search. With the rise of foundation models and new applications, PolarDB also offers hybrid search that integrates full-text indexing and vector indexing. Combined with the inductive generation capabilities of Qwen models built into PolarDB for AI, PolarDB effectively addresses the limits of large language models (LLMs) in accuracy and real-time responsiveness.

Looking ahead, PolarDB will continue to expand its capabilities across online transactions, real-time analysis, full-text indexing, vector search, and stream processing, further deepening the integration of databases, search, stream processing, and AI. This empowers enterprises to build unified, all-in-one data platforms.

[Infographic] Highlights | Database New Features in December 2025

ApsaraDB - October 24, 2025

ApsaraDB - July 9, 2025

ApsaraDB - November 18, 2025

ApsaraDB - June 7, 2022

ApsaraDB - August 7, 2023

ApsaraDB - August 8, 2023

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB