By Yan Hua

This article analyzes the high-availability (HA) architecture of PolarDB-X, the cloud-native distributed database from Alibaba Cloud. Moving from disaster recovery fundamentals and HA design principles to real-world deployment solutions, it shows how PolarDB-X achieves financial-grade disaster recovery through multi-layered redundancy and automated failover mechanisms.

The core of disaster recovery design lies in applying tiered protection strategies to different levels of risk. Based on the scope of impact, fault risks can be categorized into four levels.

| Risk level | Fault probability | Scope of impact | Typical faults |
|---|---|---|---|
| Process-level | High | A single service | OOM errors, segmentation faults, and killed processes |
| Machine-level | Medium | All services on a single machine | Kernel panics and disk failures |
| Data center-level | Low | All machines in a single data center | Power outages, fire, and fiber disconnections |
| City-level | Extremely low | All data centers in a single city | Earthquakes and floods |

In the current national standard GB/T 20988-2025, "Cybersecurity technology - Disaster recovery specifications for information systems," disaster recovery capabilities are defined in six levels.

| Level | Name | RTO | RPO |
|---|---|---|---|
| Level 1 | Basic support | Greater than or equal to two days | One to seven days |
| Level 2 | Alternate site support | Greater than or equal to 24 hours | One to seven days |
| Level 3 | Electronic transmission and partial device support | Greater than or equal to 12 hours | Several hours to one day |
| Level 4 | Electronic transmission and complete device support | Several hours to one day | Several hours |
| Level 5 | Real-time data transmission and complete device support | Several minutes to several hours | 0 |
| Level 6 | Zero data loss and remote cluster support | Several minutes | 0 |

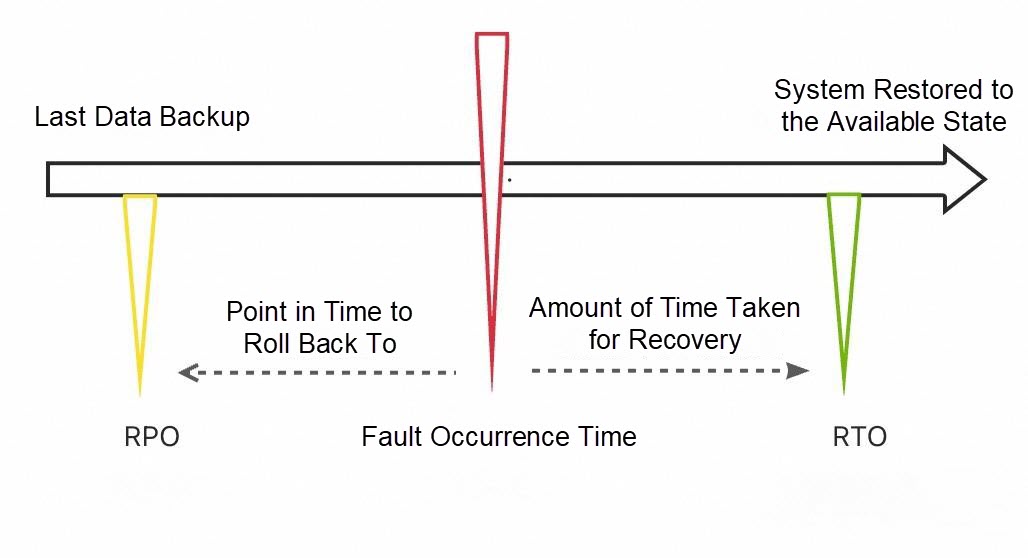

The core metrics are recovery point objective (RPO) and recovery time objective (RTO):

• RPO: the required point in time to which the system and data must be restored after a disaster.

• RTO: the maximum acceptable delay between the interruption and recovery of a service.
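To make the two metrics concrete, the following minimal sketch measures the data-loss window and the downtime of a single incident and compares them with the objectives. The function names and the sample timestamps are hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta


def measure_recovery(last_recoverable_commit: datetime,
                     failure_time: datetime,
                     service_restored: datetime) -> tuple:
    """Return (data-loss window, downtime) observed for one incident."""
    # Changes made after the last recoverable commit are lost,
    # so this gap is what the RPO bounds.
    data_loss_window = failure_time - last_recoverable_commit
    # The time between interruption and recovery is what the RTO bounds.
    downtime = service_restored - failure_time
    return data_loss_window, downtime


def meets_objectives(data_loss_window: timedelta, downtime: timedelta,
                     rpo: timedelta, rto: timedelta) -> bool:
    """True if the incident stayed within both objectives."""
    return data_loss_window <= rpo and downtime <= rto


# Hypothetical incident: nothing was lost, service was back after 6 seconds.
failure = datetime(2025, 1, 1, 12, 0, 0)
loss, down = measure_recovery(
    last_recoverable_commit=failure,
    failure_time=failure,
    service_restored=failure + timedelta(seconds=6),
)
print(loss, down)
print(meets_objectives(loss, down, rpo=timedelta(0), rto=timedelta(seconds=8)))
```

An RPO of 0 therefore means that every transaction committed before the failure must still be recoverable afterwards.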

The fundamental principle of database HA and disaster recovery is to ensure rapid data restoration and continuous service delivery under various risks, faults, or disasters by employing multi-layered data protection and redundancy mechanisms. The key technologies include three main parts:

• Periodic full backups provide the baseline for disaster recovery.

• Incremental log backups capture data changes in real time or at scheduled intervals based on transaction logs, recording physical or logical entries for each operation such as INSERT, UPDATE, or DELETE.

• Real-time replica synchronization uses primary/secondary replication protocols or distributed consensus protocols (such as Paxos or Raft) to achieve data synchronization across multiple nodes.
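The way a full backup and incremental logs combine can be sketched as follows. This is a simplified model and not the PolarDB-X backup format: the `LogEntry` type, the integer log sequence number (LSN), and the in-memory dictionary that stands in for the database are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogEntry:
    lsn: int                      # log sequence number, strictly increasing
    op: str                       # "INSERT", "UPDATE", or "DELETE"
    key: str
    value: Optional[str] = None   # unused for DELETE


def restore(backup: dict, backup_lsn: int, log: list, target_lsn: int) -> dict:
    """Rebuild the state as of target_lsn from a full backup plus later log entries."""
    if target_lsn < backup_lsn:
        raise ValueError("target precedes the backup; use an older full backup")
    state = dict(backup)          # start from the baseline, leave the backup untouched
    for entry in sorted(log, key=lambda e: e.lsn):
        if entry.lsn <= backup_lsn:
            continue              # already reflected in the full backup
        if entry.lsn > target_lsn:
            break                 # stop at the requested recovery point
        if entry.op in ("INSERT", "UPDATE"):
            state[entry.key] = entry.value
        elif entry.op == "DELETE":
            state.pop(entry.key, None)
        else:
            raise ValueError(f"unknown operation: {entry.op}")
    return state


full_backup = {"a": "1", "b": "2"}   # hypothetical full backup taken at LSN 100
log = [
    LogEntry(101, "UPDATE", "a", "10"),
    LogEntry(102, "INSERT", "c", "3"),
    LogEntry(103, "DELETE", "b"),
]
print(restore(full_backup, 100, log, target_lsn=102))
print(restore(full_backup, 100, log, target_lsn=103))
```

The full backup bounds how much log must be replayed, and the log determines how close to the failure point the data can be restored.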

| Replication type | Description | Data consistency | Failover speed |
|---|---|---|---|
| Synchronous replication | The primary node must wait for all secondary nodes to complete data persistence before it can commit a transaction. | RPO = 0 | RTO < 30 seconds |
| Asynchronous replication | The primary node does not wait for any secondary node to persist data when it commits a transaction. | RPO > 0 | Within minutes |
| Semi-synchronous replication | The primary node must wait for a number of secondary nodes to complete data persistence before it can commit a transaction. If the secondary nodes do not acknowledge in time, the mechanism degrades to asynchronous replication. | RPO ≈ 0 | RTO < 30 seconds |
| Distributed consensus protocol | The primary node must wait until a majority (more than half) of the nodes in the group, itself included, have completed data persistence before it can commit a transaction. | RPO = 0 | RTO < 8 seconds |
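The commit rules in this table differ only in how many persistence acknowledgements the primary node waits for. The sketch below expresses that difference. It assumes that a consensus majority is counted over the whole group with the primary node's own copy as one vote, as in Paxos and Raft. The names `Mode`, `required_acks`, and `can_commit` are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    SYNC = "synchronous"
    ASYNC = "asynchronous"
    SEMI_SYNC = "semi-synchronous"
    CONSENSUS = "consensus"


def required_acks(mode: Mode, secondaries: int, semi_sync_count: int = 1) -> int:
    """Number of secondary acknowledgements the primary waits for before commit."""
    if mode is Mode.SYNC:
        return secondaries                        # every secondary
    if mode is Mode.ASYNC:
        return 0                                  # none
    if mode is Mode.SEMI_SYNC:
        return min(semi_sync_count, secondaries)  # a configured number
    # Consensus: a majority of the whole group; the primary's own
    # persisted copy counts as one vote (assumption stated above).
    group_size = secondaries + 1
    majority = group_size // 2 + 1
    return majority - 1


def can_commit(mode: Mode, secondaries: int, acks_received: int,
               semi_sync_count: int = 1, timed_out: bool = False) -> bool:
    if mode is Mode.SEMI_SYNC and timed_out:
        return True                               # degraded to asynchronous
    return acks_received >= required_acks(mode, secondaries, semi_sync_count)


for mode in Mode:
    print(mode.value, required_acks(mode, secondaries=2))
```

With two secondary nodes, the consensus rule needs only one acknowledgement, so the group keeps committing when one of them is down, whereas synchronous replication stalls.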

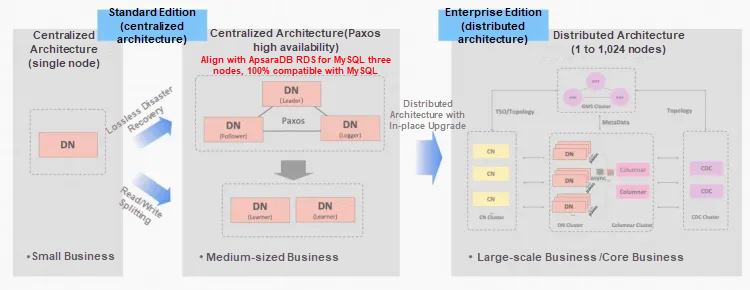

PolarDB-X integrates centralized and distributed architectures and is offered in two editions: Standard Edition (centralized-compatible) and Enterprise Edition (distributed-compatible). In this integrated design, each storage node operates independently in a centralized manner and is fully compatible with the standalone database model.

When business grows and distributed scaling becomes necessary, the centralized Standard Edition can be upgraded to the distributed Enterprise Edition. The Enterprise Edition adopts a shared-nothing, compute-storage separation architecture, delivering financial-grade HA, distributed horizontal scalability, hybrid-workload support, low-cost storage, and extreme elasticity. Each component implements its own HA mechanisms:

• Compute node (CN): provides distributed routing and computation, and uses the two-phase commit protocol (2PC) to coordinate distributed transactions. A CN also executes DDL statements in a distributed manner and maintains global indexes. CNs are stateless. Failure of any single CN process does not affect overall compute-layer availability. For production, we recommend that you deploy at least two CNs.

• Data node (DN): provides highly reliable storage services and uses multiversion concurrency control (MVCC) for distributed transactions. A DN also provides the pushdown computation feature. DNs use the distributed consensus protocol Paxos to achieve high availability. Failure of any single DN does not compromise overall DN availability. Based on Paxos requirements, a production DN cluster contains at least three nodes, typically deployed as two full-featured replicas plus one logger replica.

• Global meta service (GMS): provides distributed metadata and a global timestamp distributor named Timestamp Oracle (TSO), and maintains meta information such as tables, schemas, and statistics. GMS also maintains security information such as accounts and permissions. A GMS is effectively implemented as an independent DN cluster, with its HA ensured by the same DN-level HA mechanisms.

• Change data capture (CDC) node: provides a primary/secondary replication protocol that is compatible with the protocols and data formats of MySQL binary logging, and uses this protocol to exchange data. CDC nodes are stateful, with their states persisted in GMS. For production, we recommend that you deploy at least two CDC nodes. If the primary CDC node fails, the secondary CDC node automatically promotes itself to primary. If both the primary and secondary CDC nodes fail, a CDC node can be rebuilt from the HA metadata stored in GMS.

• Columnar node: builds columnar indexes on top of Object Storage Service (OSS) and provides real-time updates and snapshot-consistent columnar queries. Columnar nodes are stateful, with their states persisted in both GMS and OSS. OSS is a highly available distributed file system built on PANGU. In production, we recommend that you deploy at least two columnar nodes in a primary/secondary configuration. If the primary columnar node fails, the secondary columnar node automatically promotes itself to primary. If both primary and secondary columnar nodes fail, a columnar node can be rebuilt from HA metadata stored in GMS and persisted objects in OSS.
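The production recommendations in this list can be collected into a simple check. The sketch below is illustrative only: the plan format (component name mapped to node count) and the function name are hypothetical, and the minimum of three for GMS is an assumption derived from the statement that GMS is implemented as a DN cluster.

```python
# Minimum node counts taken from the component list above.
MIN_NODES = {
    "cn": 2,        # stateless; at least two recommended
    "dn": 3,        # nodes per DN cluster (Paxos group)
    "gms": 3,       # assumed: follows the DN rule, since GMS is a DN cluster
    "cdc": 2,       # primary plus secondary
    "columnar": 2,  # primary plus secondary
}


def check_plan(plan: dict) -> list:
    """Return a list of problems for a plan that maps component name to node count.

    Components that are absent from the plan are treated as not deployed
    and are not checked (an assumption of this sketch).
    """
    problems = []
    for component, count in plan.items():
        minimum = MIN_NODES.get(component)
        if minimum is None:
            problems.append(f"{component}: unknown component")
        elif count < minimum:
            problems.append(
                f"{component}: {count} node(s), at least {minimum} recommended"
            )
    return problems


plan = {"cn": 2, "dn": 3, "gms": 3, "cdc": 1}
for problem in check_plan(plan):
    print(problem)
```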

In summary, the cluster-level HA of PolarDB-X fundamentally relies on the HA capabilities of DNs, which can deliver an RPO of 0 and an RTO of less than 8 seconds.

| Issue type | Synchronous replication | Asynchronous replication | Semi-synchronous replication | Distributed consensus Paxos protocol |
|---|---|---|---|---|
| Data consistency | RPO = 0 | RPO > 0, with inevitable data loss | RPO ≈ 0, with possible data loss | RPO = 0 |
| Failover duration | Automatic failover within seconds | Depending on manual verification, modification, and switchover | Depending on manual verification, modification, and switchover | Automatic failover within seconds |
| Network partition tolerance | Unavailable | With data loss | Degraded to asynchronous replication, with data loss | Available if the majority of nodes are alive |
| Performance | Low | High | Medium | Medium-high |

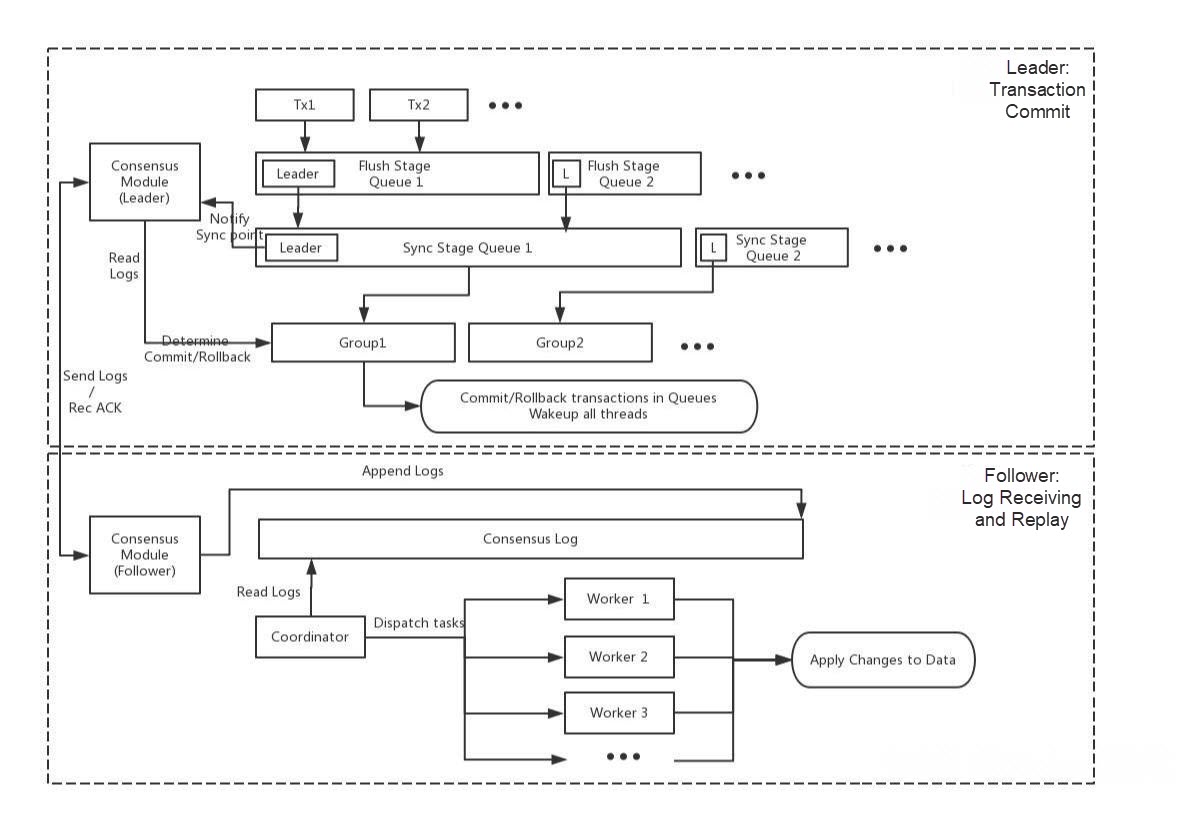

To address the limits of traditional primary/secondary replication, PolarDB-X implements a Paxos-based distributed consensus solution and extends it with its own enhanced design, XPaxos, which covers the following areas:

• Paxos optimizations with strong leadership

• Deep integration of XPaxos with the logger module

• Performance-oriented enhancements

HA deployment for PolarDB-X DNs must be carefully designed around disaster recovery objectives (RPO/RTO), while considering the risk levels (process/machine/data center/city) of business scenarios. The following sections describe practical deployment solutions tailored to different disaster recovery requirements.

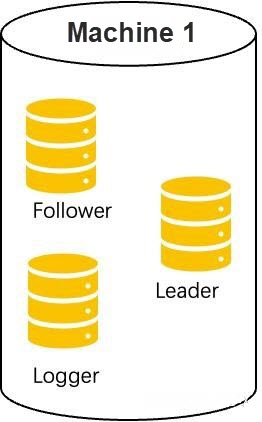

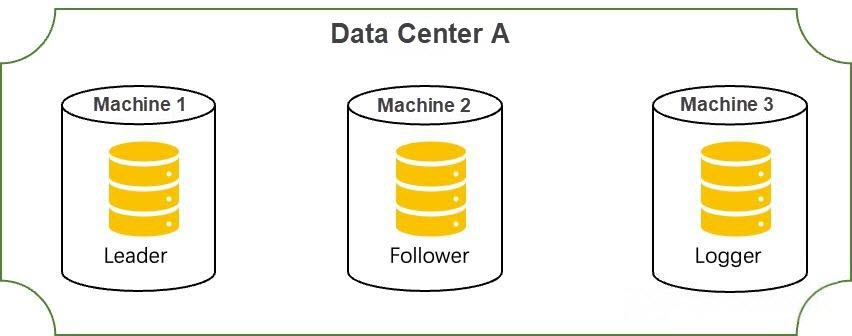

Scenarios: used to mitigate the high-frequency risk of single-process crashes, meeting the most basic single-machine HA requirements.

Limits and suggestions: unable to meet machine-level, data center-level, or city-level HA disaster recovery requirements. Recommended only for testing environments.

Deployment method:

• Topology: Deploy three processes (two full-featured replicas + one logger replica) on a single machine.

• Fault recovery:

• Key configuration points:

Scenarios: used to mitigate high-frequency risks such as single-process crashes or machine hardware failures, meeting process-level and machine-level HA requirements.

Limits and suggestions: unable to meet data center-level or city-level HA disaster recovery requirements. Recommended only for non-critical business workloads.

Deployment method:

• Topology: Deploy three nodes (two full-featured replicas + one logger replica) on different machines in the same data center.

• Fault recovery:

• Key configuration points:

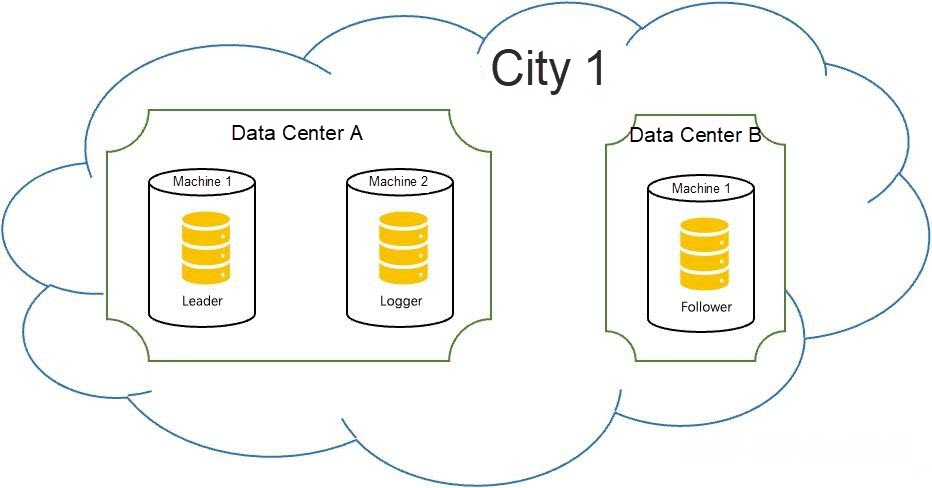

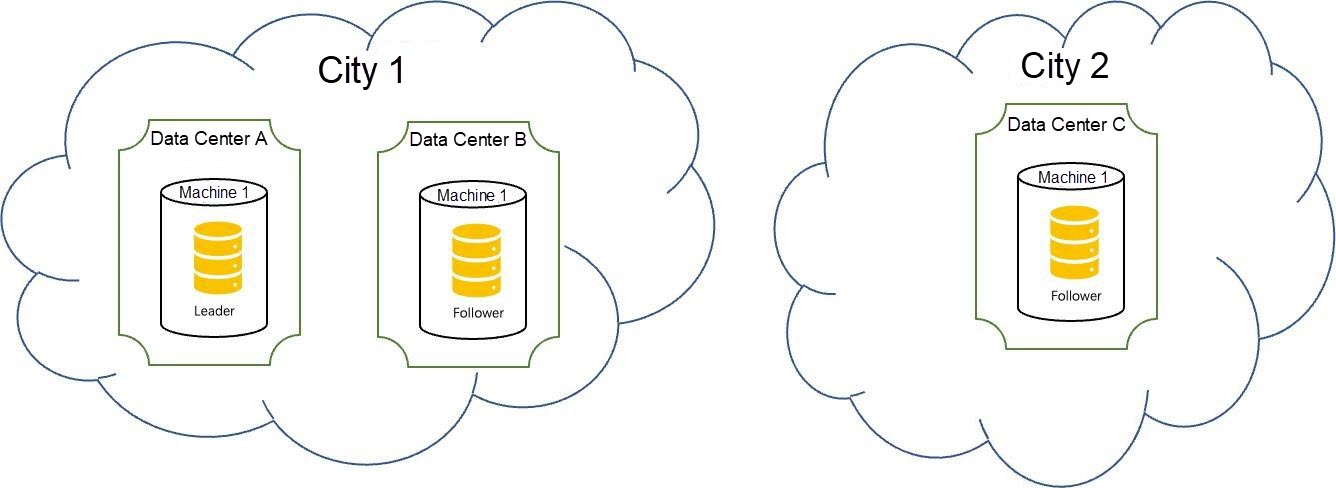

Scenarios: used to mitigate infrastructure failures such as data center power outages or fiber disconnections and ensure business continuity within the same city, meeting process-level, machine-level, and data center-level HA requirements.

Limits and suggestions: unable to meet city-level HA disaster recovery requirements. Data center-level failover has an approximately 50% probability of requiring manual or operational intervention and cannot be fully automated. Recommended for standard business workloads.
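The figure of approximately 50% follows from majority arithmetic. With three nodes split across two data centers, losing the data center that holds two nodes leaves one survivor, which is not a majority, while losing the other data center leaves two. The minimal sketch below reproduces this reasoning. It assumes that each data center is equally likely to fail and that failover is automatic exactly when the survivors still form a majority. The function name and the placement format are hypothetical.

```python
from collections import Counter


def survives_site_loss(placement: dict) -> dict:
    """Map each site to True if the group keeps a majority when that site is lost.

    placement maps a replica name to the site (data center) that hosts it.
    """
    total = len(placement)
    majority = total // 2 + 1
    per_site = Counter(placement.values())
    return {site: total - lost >= majority for site, lost in per_site.items()}


# Three nodes in two data centers: leader and logger in A, follower in B.
placement = {"leader": "A", "logger": "A", "follower": "B"}
result = survives_site_loss(placement)
print(result)

# Assumption: both data centers are equally likely to fail.
automatic = sum(result.values()) / len(result)
print(f"automatic failover in {automatic:.0%} of single data center failures")
```

The same function returns `False` for both data centers when four nodes are split two and two, because a majority of four is three. With one node in each of three data centers, it returns `True` for all of them.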

Deployment method:

• Topology: Deploy three nodes (two full-featured replicas + one logger replica) in two data centers (A and B) in the same city, where the leader and logger replicas are in Data Center A and the follower replica is in Data Center B.

• Fault recovery:

• Key configuration points:

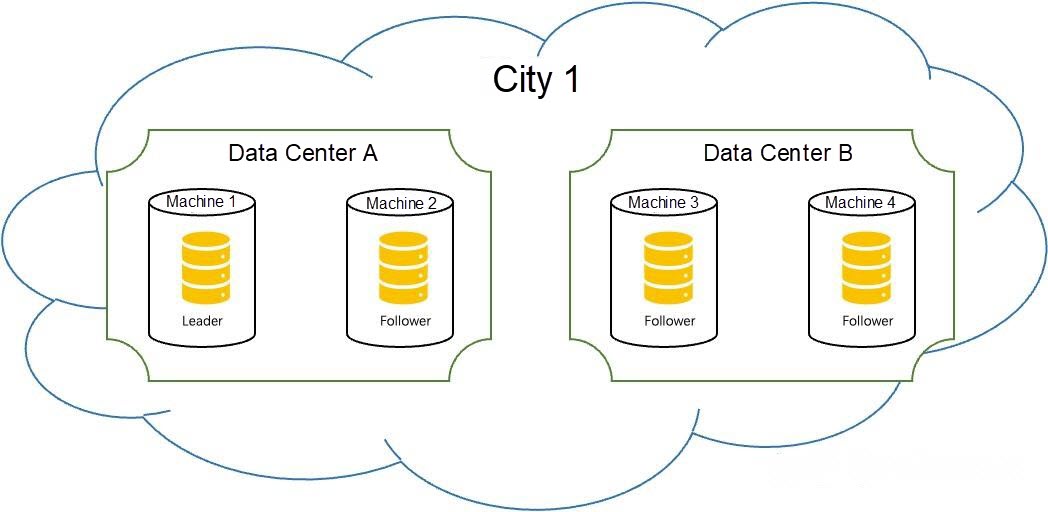

Scenarios: used to mitigate infrastructure failures such as data center power outages or fiber disconnections and ensure business continuity within the same city, fully meeting process-level, machine-level, and data center-level HA requirements.

Limits and suggestions: unable to meet city-level HA disaster recovery requirements. Data center-level failover requires manual or operational intervention and cannot be fully automated. Recommended for standard business workloads.

Deployment method:

• Topology: Deploy four nodes (four full-featured replicas) in two data centers (A and B) in the same city, where the leader and one follower replica are in Data Center A and the other two follower replicas are in Data Center B.

• Fault recovery:

• Key configuration points:

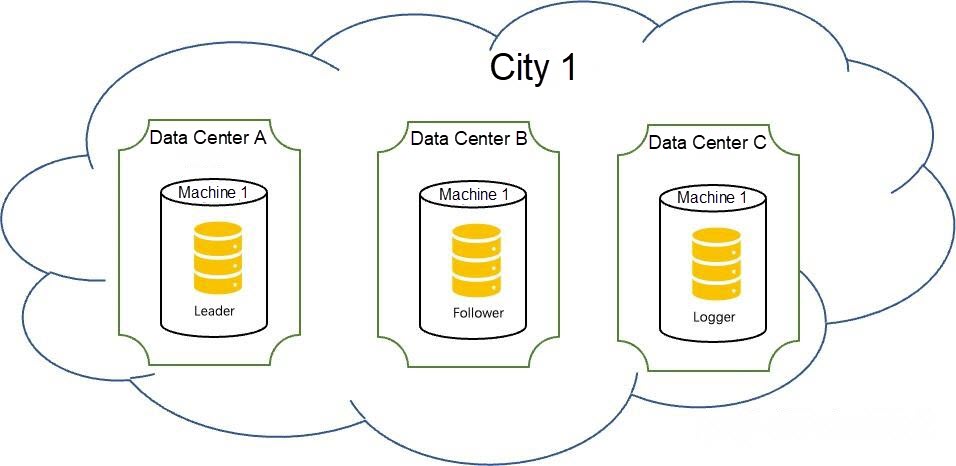

Scenarios: used to mitigate infrastructure failures such as data center power outages or fiber disconnections and ensure business continuity within the same city, meeting process-level, machine-level, and data center-level HA requirements.

Limits and suggestions: unable to meet city-level HA disaster recovery requirements. Recommended for core business workloads.

Deployment method:

• Topology: Deploy three nodes (two full-featured replicas + one logger replica) in three data centers in the same city, with one node in each data center.

• Fault recovery:

• Key configuration points:

Scenarios: used to mitigate city-level disasters such as earthquakes or floods, enabling geo-disaster recovery and rapid restoration.

Limits and suggestions: only partially meets city-level HA disaster recovery requirements. City-level failover has an approximately 50% probability of requiring manual or operational intervention and cannot be fully automated. Recommended for key business workloads.

Deployment method:

• Topology: Deploy three nodes (three full-featured replicas) in three data centers across two cities, with one node in each data center. Deploy the leader node in the city with two data centers.

• Fault recovery:

• Key configuration points:

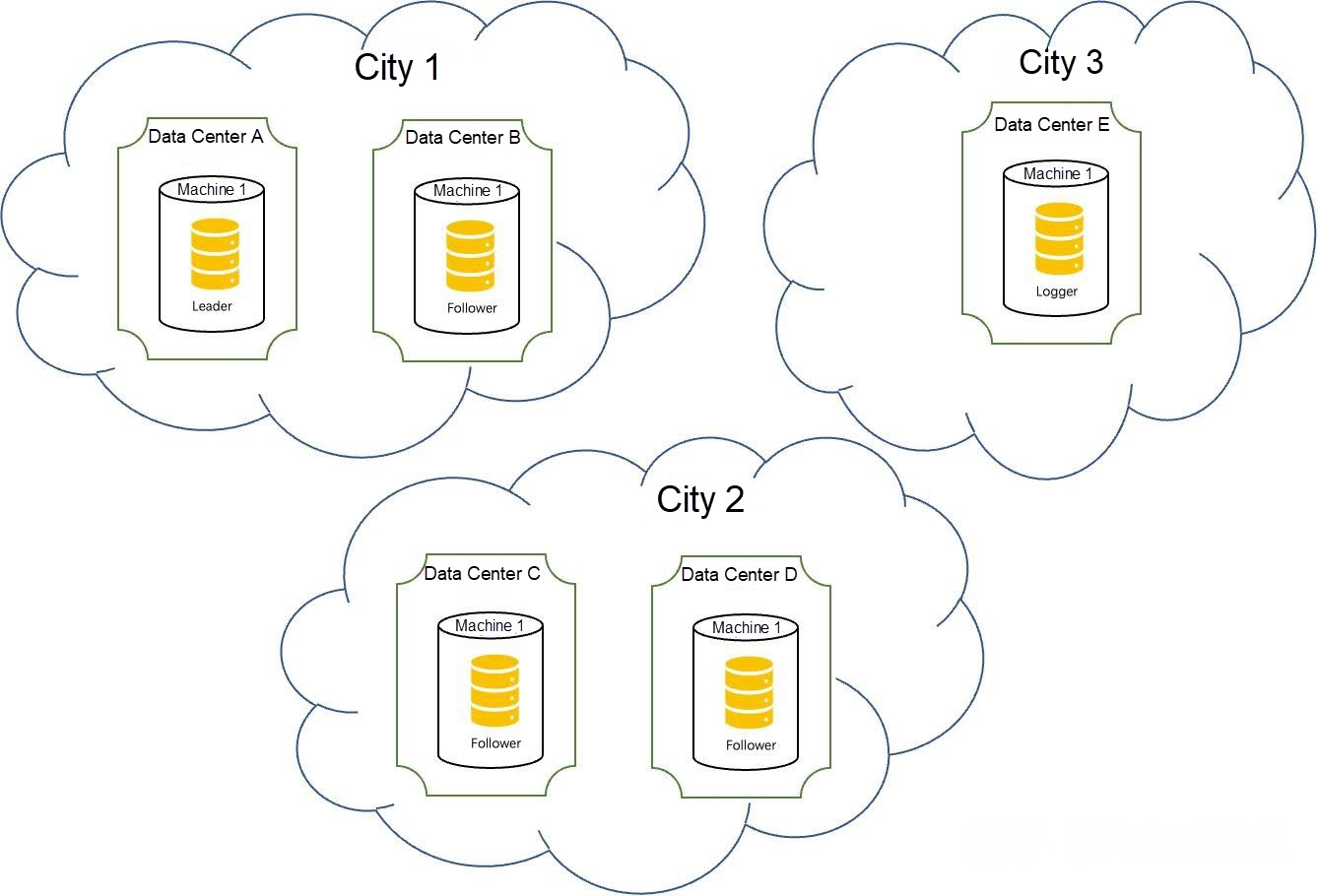

Scenarios: used to mitigate city-level disasters such as earthquakes or floods, enabling geo-disaster recovery and rapid restoration.

Suggestions: recommended for financial-grade or other key business workloads.

Deployment method:

• Topology: Deploy five nodes (four full-featured replicas + one logger replica) in five data centers across three cities, with one node in each data center. Deploy a leader node and a follower node in City 1, two follower nodes in City 2, and a logger node in City 3.

• Fault recovery:

• Key configuration points:
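For the five-node topology above, the effect of losing an entire city can be checked in the same spirit. The sketch below is hedged: the `Replica` type and the function name are hypothetical, and the rule that only a full-featured replica can take over as leader is an assumption of this sketch, not a statement from the product documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    name: str
    city: str
    full_featured: bool   # False for a logger replica


def city_failover(replicas: list) -> dict:
    """For each city, report whether the survivors can elect a leader on their own."""
    majority = len(replicas) // 2 + 1
    outcome = {}
    for city in sorted({r.city for r in replicas}):
        alive = [r for r in replicas if r.city != city]
        has_quorum = len(alive) >= majority
        # Assumption: only a full-featured replica can serve as leader.
        has_candidate = any(r.full_featured for r in alive)
        outcome[city] = has_quorum and has_candidate
    return outcome


five_nodes = [
    Replica("leader", "City 1", True),
    Replica("follower-1", "City 1", True),
    Replica("follower-2", "City 2", True),
    Replica("follower-3", "City 2", True),
    Replica("logger", "City 3", False),
]
print(city_failover(five_nodes))
```

Under these assumptions, the loss of any single city leaves at least three of the five nodes, including a full-featured replica. For comparison, three nodes across two cities lose their majority whenever the city with two data centers fails.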

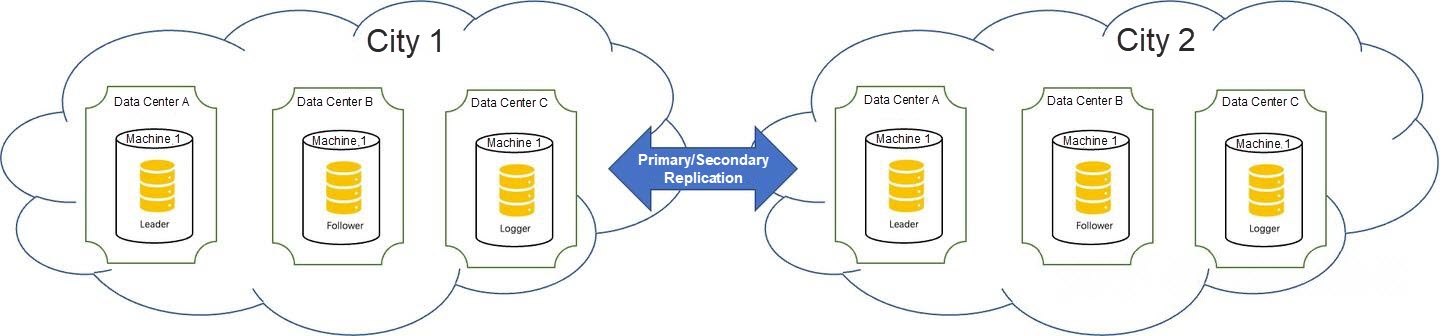

Scenarios: Two independent clusters form a global database network (GDN), meeting requirements for multicloud deployment and active geo-redundancy.

Limits and suggestions: The two independent clusters use a semi-synchronous community primary/secondary replication scheme, so the RPO is not 0. Recommended for standard business workloads.

Deployment method:

• Topology:

• Fault recovery:

When a PolarDB-X DN is deployed as the primary cluster:

When a PolarDB-X DN is deployed as the secondary cluster:

• Key configuration points:

High availability is no longer optional. It is the baseline for business survival. PolarDB-X fundamentally reshapes the disaster recovery paradigm for distributed databases: from backup-and-restore to real-time self-healing, from manual failover to automated, weight-based leader election, and from protecting against process-level crashes to city-level disasters. With its zero-data-loss guarantee, second-level failover, and cost-efficiency, PolarDB-X establishes a new financial-grade disaster recovery standard for the cloud era.
