Che Yang

According to a Gartner survey of global CIOs, artificial intelligence (AI) will become the disruptive force reshaping the business models of all organizations in 2019. Computing power and cost are two critical factors for AI. Cloud-native technology, represented by Docker and Kubernetes, enables a new model for AI: GPUs can be placed in a unified resource pool for scheduling and management. This improves GPU utilization and eliminates the cost of manual management. Kubernetes, a leading container orchestration platform, can schedule Nvidia GPUs in container clusters, mainly by assigning one GPU to one container. This provides good isolation and ensures that an application using a GPU is not affected by other applications, which suits the training of deep learning models. However, in model development and model prediction scenarios, a single container often does not fully use a whole GPU, so a lot of GPU resources are still wasted. In these scenarios, we want to share a GPU among multiple containers in the cluster.

GPU sharing in cluster scheduling lets more model development and prediction services share GPUs, which improves Nvidia GPU utilization in the cluster. This requires dividing GPU resources, which can be done along two dimensions: GPU memory and CUDA kernel threads. In general, cluster-level GPU sharing involves two things:

1. Scheduling

2. Isolation

This article focuses on scheduling. For isolation, the current solution requires users to limit their applications with specific parameters (for example, TensorFlow's per_process_gpu_memory_fraction). Options based on Nvidia MPS will be available in the future, and other GPU isolation solutions are also planned.

For fine-grained scheduling within a GPU card, the Kubernetes community does not have a good solution at present. The reason is that Kubernetes defines extended resources, such as GPUs, as integer quantities that can only be added and subtracted, which cannot express the allocation of complex resources. For example, if you want Pod A to occupy half of a GPU card, the current Kubernetes architecture cannot record and retrieve such a partial allocation. In other words, sharing GPUs across multiple cards requires a vector resource (the amount allocated on each card), while an Extended Resource describes only a scalar resource (a single total per node).

Therefore, we have designed an out-of-tree Share GPU Scheduling Solution that relies on existing Kubernetes mechanisms: the Extended Resource definition, the Scheduler Extender mechanism, and the Device Plugin mechanism.

The advantage of this Share GPU scheduling extension is that it relies on Kubernetes extension and plugin mechanisms, so it does not modify core components such as the API Server, the Scheduler, the Controller Manager, and the Kubelet. Users can therefore apply the solution to different Kubernetes versions without rebasing code or rebuilding the Kubernetes binaries.

1. Clarify the problem and simplify the design. The first step covers only scheduling and deployment; run-time memory control is implemented afterward.

Many customers clearly need to schedule multiple AI applications onto the same GPU. They accept controlling memory size at the application level, for example by using gpu_options.per_process_gpu_memory_fraction to limit an application's memory usage. The first problem to solve is therefore a simplified one: use GPU memory as the unit of scheduling, and pass the allocated memory size to the container as a parameter.

2. No intrusive modification

This design does not modify the following core parts of Kubernetes: the Extended Resource design, the Scheduler implementation, the Device Plugin mechanism, and the related Kubelet design. Instead, it reuses the Extended Resource to describe requests for shared resources. The advantage is a portable solution that users can run on native Kubernetes.

3. Memory-based scheduling and card-based scheduling can coexist in a cluster, but they are mutually exclusive within a single node: each node allocates resources either by the number of cards or by memory.
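Principle 1 leaves memory control to the application. As a minimal sketch of what that can look like, the Python code below converts an allocated memory size into a value for TensorFlow's per_process_gpu_memory_fraction. It assumes the container receives its allocation and the total memory of its card, both in MiB, through two environment variables; the names ALIYUN_COM_GPU_MEM_CONTAINER and ALIYUN_COM_GPU_MEM_DEV are assumptions for illustration, so check which variables your device plugin actually sets.

```python
import os


def memory_fraction(env=None):
    """Return the share of one GPU card's memory this container may use.

    Reads two hypothetical environment variables, both in MiB:
      ALIYUN_COM_GPU_MEM_CONTAINER: memory allocated to this container
      ALIYUN_COM_GPU_MEM_DEV:       total memory of the assigned GPU card
    """
    env = os.environ if env is None else env
    allocated = int(env["ALIYUN_COM_GPU_MEM_CONTAINER"])
    total = int(env["ALIYUN_COM_GPU_MEM_DEV"])
    if not 0 < allocated <= total:
        raise ValueError(f"allocation of {allocated} MiB does not fit a {total} MiB card")
    return allocated / total


if __name__ == "__main__":
    # Example values: a 1024 MiB request on a card reported as 16276 MiB.
    fraction = memory_fraction({
        "ALIYUN_COM_GPU_MEM_CONTAINER": "1024",
        "ALIYUN_COM_GPU_MEM_DEV": "16276",
    })
    print(f"per_process_gpu_memory_fraction = {fraction:.4f}")
    # With TensorFlow, the value could then be passed to, for example:
    #   tf.compat.v1.GPUOptions(per_process_gpu_memory_fraction=fraction)
```

The limit is enforced only by the application's cooperation, which is why the article treats isolation as a separate problem from scheduling.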

1. The Kubernetes Extended Resource definition is still used, but the unit of measurement changes from one GPU card to one MiB of GPU memory. For example, if a node has a single GPU card with 16 GiB of memory, its corresponding resource is 16276 MiB.

2. Users need shared GPUs mainly for model development and model prediction. Therefore, a single request cannot exceed one card: the upper limit of resources a user can request is the memory of a single card.
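Point 2 amounts to a simple admission rule. The hypothetical helper below (not part of the project) accepts a gpu-mem request only if it is positive and no larger than the memory of the largest card on a node.

```python
def fits_single_card(request_mib, card_memory_mib):
    """Check a gpu-mem request (MiB) against the single-card upper limit.

    card_memory_mib lists the total memory of each card on a node, in MiB.
    """
    if request_mib <= 0:
        return False
    # A request may use at most one card, so compare against the largest card.
    return request_mib <= max(card_memory_mib, default=0)


if __name__ == "__main__":
    node = [16276, 16276]  # two 16 GiB cards, each reported as 16276 MiB
    print(fits_single_card(8138, node))   # True: half a card
    print(fits_single_card(16276, node))  # True: exactly one card
    print(fits_single_card(20000, node))  # False: more than one card
```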

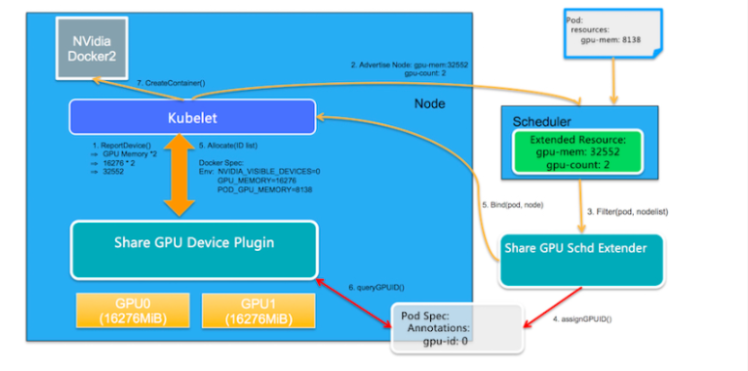

First, we define two new Extended Resources: gpu-mem, which corresponds to GPU memory, and gpu-count, which corresponds to the number of GPU cards. Together, these two scalar resources describe the vector resource, and combining them provides the mechanism that supports GPU sharing. The basic architecture works as follows:

1. Resource reporting

The GPU Share Device Plugin uses the NVML library to query the number of GPU cards and the memory of each card. Through ListAndWatch(), it reports the total GPU memory on the node (the sum of the memory of all cards) to Kubelet as an Extended Resource, and Kubelet then reports it to the Kubernetes API Server. For example, if a node has two GPU cards with 16276 MiB each, the node's gpu-mem resource is 16276 * 2 = 32552 MiB. The number of GPU cards on the node, 2, is also reported as another Extended Resource.
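The reporting arithmetic can be sketched as follows. This is a simplified illustration, assuming the per-card memory values (in MiB) have already been queried, for example through NVML. The resource name aliyun.com/gpu-count is an assumption modeled on the aliyun.com/gpu-mem name used in the Deployment example later in this article, and the real plugin's reporting path may differ.

```python
def extended_resources(card_memory_mib):
    """Build the two Extended Resources a node advertises for shared GPUs.

    card_memory_mib: total memory of each GPU card in MiB (e.g. from an NVML query).
    """
    return {
        # Total memory across all cards; the unit of scheduling is 1 MiB.
        "aliyun.com/gpu-mem": sum(card_memory_mib),
        # Number of cards (this resource name is an assumption).
        "aliyun.com/gpu-count": len(card_memory_mib),
    }


if __name__ == "__main__":
    print(extended_resources([16276, 16276]))
    # {'aliyun.com/gpu-mem': 32552, 'aliyun.com/gpu-count': 2}
```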

2. Extended scheduling

When the GPU Share Scheduler Extender allocates gpu-mem to a Pod, it records the allocation in the Pod's annotations. During filtering, it uses this information to determine whether each GPU card has enough available gpu-mem.

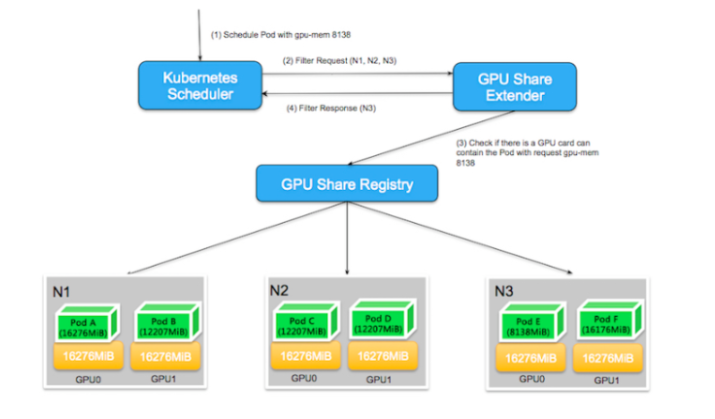
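To make the annotation-based bookkeeping concrete, the sketch below rebuilds each card's free memory from the annotations of the Pods already bound to a node. It assumes each such Pod carries ALIYUN_COM_GPU_MEM_IDX (card index) and ALIYUN_COM_GPU_MEM_POD (requested MiB), the annotation keys described in step 2.2; the helper name is hypothetical.

```python
IDX = "ALIYUN_COM_GPU_MEM_IDX"      # index of the card assigned to the Pod
POD_MEM = "ALIYUN_COM_GPU_MEM_POD"  # gpu-mem requested by the Pod, in MiB


def free_per_card(card_memory_mib, pod_annotations):
    """Return the free memory (MiB) of each card on one node.

    card_memory_mib: total memory of each card, indexed by card ID.
    pod_annotations: one annotation dict per Pod already bound to the node.
    Pods without both annotations are ignored in this sketch.
    """
    free = list(card_memory_mib)
    for ann in pod_annotations:
        if IDX in ann and POD_MEM in ann:
            # Annotation values are strings, so convert before subtracting.
            free[int(ann[IDX])] -= int(ann[POD_MEM])
    return free


if __name__ == "__main__":
    # Node N2 from the filtering example in step 2.1: 4069 MiB free per card,
    # i.e. 12207 MiB already used on each card.
    pods = [{IDX: "0", POD_MEM: "12207"}, {IDX: "1", POD_MEM: "12207"}]
    print(free_per_card([16276, 16276], pods))  # [4069, 4069]
```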

2.1. After running all of its own filters, the default Kubernetes scheduler calls the Filter method of the GPU Share Scheduler Extender over HTTP. This extra step is needed because, when accounting for Extended Resources, the default scheduler can only determine whether a node's total free resources meet the demand; it cannot determine whether the demand can be met on a single card. Therefore, the GPU Share Scheduler Extender checks whether a single card has enough available resources.

Take the following figure as an example. In a Kubernetes cluster of 3 nodes, each with 2 GPU cards, a user requests gpu-mem = 8138. The default scheduler scans all nodes and finds that the remaining resources of N1 are 16276 * 2 - 16276 - 12207 = 4069 MiB. This does not meet the demand, so N1 is filtered out.

The remaining resources of N2 and N3 are both 8138 MiB, which satisfies the default scheduler's node-level check. The default scheduler then delegates secondary filtering to the GPU Share Scheduler Extender, which determines whether a single card meets the request. N2 has 8138 MiB available in total, but each of GPU0 and GPU1 has only 4069 MiB available, so no single card can satisfy the 8138 MiB request. N3 also has 8138 MiB available in total, but all of it is on GPU0, so N3 meets the single-card requirement. The extender's secondary filtering therefore achieves precise, card-level filtering.

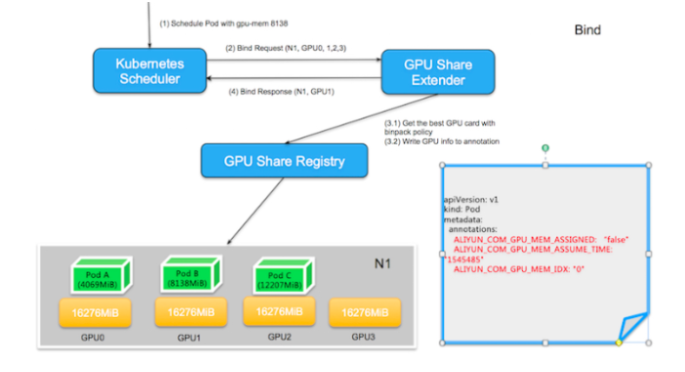
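The two-stage filtering in the N1/N2/N3 example can be sketched in a few lines. The functions below are illustrative, not the extender's actual code. The per-card free-memory lists are derived from the numbers in the example; which of N1's cards holds the 4069 free MiB, and the 0 MiB free on N3's GPU1, are assumptions consistent with the text.

```python
def default_filter(free_per_card, request_mib):
    """Stage 1: the node-wide check the default scheduler can perform."""
    return sum(free_per_card) >= request_mib


def extender_filter(free_per_card, request_mib):
    """Stage 2: the extender's check that one card can hold the whole request."""
    return any(free >= request_mib for free in free_per_card)


def schedulable_nodes(nodes, request_mib):
    """Return, in input order, the nodes that pass both stages."""
    return [
        name for name, free in nodes.items()
        if default_filter(free, request_mib) and extender_filter(free, request_mib)
    ]


if __name__ == "__main__":
    nodes = {
        "N1": [0, 4069],     # 4069 MiB free in total: fails stage 1
        "N2": [4069, 4069],  # 8138 MiB in total, but split across cards: fails stage 2
        "N3": [8138, 0],     # all 8138 free MiB on GPU0: passes
    }
    print(schedulable_nodes(nodes, 8138))  # ['N3']
```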

2.2. When the scheduler finds a node that meets the condition, it delegates binding the Pod to the node to the bind method of the GPU Share Scheduler Extender. The Extender performs two operations:

First, it finds the optimal GPU card on the node according to the binpack rule: among the cards whose free memory satisfies the request, it prefers the card with the least remaining memory. It saves the card ID as ALIYUN_COM_GPU_MEM_IDX in the Pod's annotations. It also saves the GPU memory requested by the Pod as ALIYUN_COM_GPU_MEM_POD and the assumption time as ALIYUN_COM_GPU_MEM_ASSUME_TIME in the annotations, and then binds the Pod to the selected node. Note: The Pod annotation ALIYUN_COM_GPU_MEM_ASSIGNED is also saved and initialized to "false". This means that the Pod has been assigned to a GPU card during scheduling but has not yet actually been created on the node. ALIYUN_COM_GPU_MEM_ASSUME_TIME records the time of that assignment.

Second, if no GPU card on the selected node meets the condition, the Extender does not bind the Pod and exits without reporting an error. The default scheduler reschedules the Pod after the assume timeout expires.

As shown in the following figure, when the GPU Share Scheduler Extender binds a Pod requesting gpu-mem 8138 to the selected node N1, it first compares the available resources of the node's GPUs: GPU0 (12207), GPU1 (8138), GPU2 (4069), and GPU3 (16276). GPU2 is discarded because its remaining resources do not meet the request. Of the other 3 GPUs, GPU1 has the least remaining memory while still meeting the request, so GPU1 is selected.

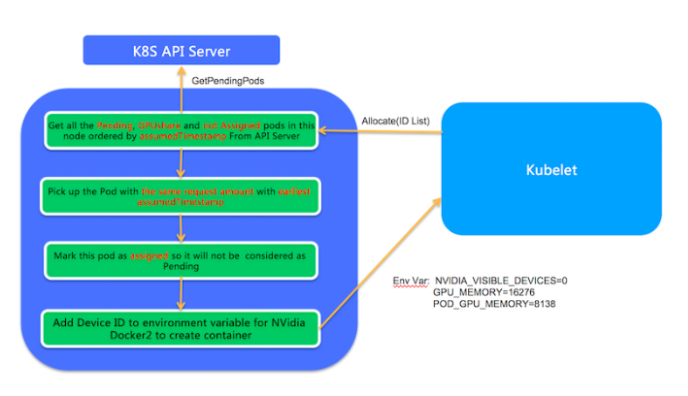
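The binpack choice and the annotations written at bind time can be sketched as follows. This is a minimal illustration: the annotation keys come from step 2.2, but the helper names are hypothetical, and encoding the assume time as nanoseconds since the epoch is an assumption.

```python
import time


def pick_card_binpack(free_per_card, request_mib):
    """Return the index of the card with the least free memory that still fits.

    Ties go to the lower card index. Returns None if no single card fits.
    """
    fitting = [(free, idx) for idx, free in enumerate(free_per_card) if free >= request_mib]
    return min(fitting)[1] if fitting else None


def bind_annotations(card_idx, request_mib, now_ns=None):
    """Annotations written before binding (keys from the article; time format assumed)."""
    now_ns = time.time_ns() if now_ns is None else now_ns
    return {
        "ALIYUN_COM_GPU_MEM_IDX": str(card_idx),
        "ALIYUN_COM_GPU_MEM_POD": str(request_mib),
        "ALIYUN_COM_GPU_MEM_ASSUME_TIME": str(now_ns),
        "ALIYUN_COM_GPU_MEM_ASSIGNED": "false",  # not yet created on the node
    }


if __name__ == "__main__":
    free = [12207, 8138, 4069, 16276]  # GPU0..GPU3 on N1 in the binding example
    idx = pick_card_binpack(free, 8138)
    print(idx)  # 1
    print(bind_annotations(idx, 8138))
```

Choosing the tightest fit keeps larger free blocks intact on other cards, so a later, bigger request is more likely to find a single card that can hold it.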

3. Run on the node

When Kubelet receives the event that the Pod has been bound to the node, it creates the actual Pod on the node. In this process, Kubelet calls the Allocate method of the GPU Share Device Plugin, passing the gpu-mem requested by the Pod as the parameter. The Allocate method then runs the Pod according to the scheduling decision of the GPU Share Scheduler Extender.

Specifically, the Allocate method first lists all GPU Share Pods on the node whose ALIYUN_COM_GPU_MEM_ASSIGNED annotation is false. Among them, it selects the Pod whose ALIYUN_COM_GPU_MEM_POD annotation matches the amount in the Allocate request; if multiple Pods match, it selects the one with the earliest ALIYUN_COM_GPU_MEM_ASSUME_TIME. Finally, it sets ALIYUN_COM_GPU_MEM_ASSIGNED in the Pod annotation to true, converts the GPU information in the Pod annotation into environment variables, and returns them to Kubelet so that Kubelet can actually create the Pod.
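The Allocate-side matching described above can be sketched as a pure function over in-memory Pod records. This is a simplified model, not the plugin's code: a real device plugin would read the Pods from the API server and write the ASSIGNED annotation back through it, and the environment variable mapping (including the use of NVIDIA_VISIBLE_DEVICES to expose the chosen card) is an assumption.

```python
from dataclasses import dataclass, field

ASSIGNED = "ALIYUN_COM_GPU_MEM_ASSIGNED"
POD_MEM = "ALIYUN_COM_GPU_MEM_POD"
ASSUME_TIME = "ALIYUN_COM_GPU_MEM_ASSUME_TIME"
IDX = "ALIYUN_COM_GPU_MEM_IDX"


@dataclass
class PodRecord:
    """In-memory stand-in for a Pod and its annotations (hypothetical)."""
    name: str
    annotations: dict = field(default_factory=dict)


def allocate(pods, request_mib):
    """Match an Allocate call for request_mib MiB to one bound-but-unassigned Pod.

    Returns (pod, env), or (None, {}) if no Pod matches.
    """
    candidates = [
        p for p in pods
        if p.annotations.get(ASSIGNED) == "false"
        and p.annotations.get(POD_MEM) == str(request_mib)
        and ASSUME_TIME in p.annotations
        and IDX in p.annotations
    ]
    if not candidates:
        return None, {}
    # Several Pods may request the same amount: pick the earliest assumed one.
    pod = min(candidates, key=lambda p: int(p.annotations[ASSUME_TIME]))
    # A real plugin would persist this change through the API server.
    pod.annotations[ASSIGNED] = "true"
    # Hypothetical mapping of annotations to container environment variables.
    env = {
        "NVIDIA_VISIBLE_DEVICES": pod.annotations[IDX],
        IDX: pod.annotations[IDX],
        POD_MEM: pod.annotations[POD_MEM],
    }
    return pod, env
```

Matching on the requested amount plus the earliest assume time is what lets the plugin pair an Allocate call with the right Pod even though the call itself carries only a memory amount.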

The project is open source and available on GitHub.

See the Deployment documentation.

1. First, create an application that uses aliyun.com/gpu-mem.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: binpack-1
  labels:
    app: binpack-1
spec:
  replicas: 1
  selector: # define how the deployment finds the pods it manages
    matchLabels:
      app: binpack-1
  template: # define the pods specifications
    metadata:
      labels:
        app: binpack-1
    spec:
      containers:
      - name: binpack-1
        image: cheyang/gpu-player:v2
        resources:
          limits:
            # MiB
            aliyun.com/gpu-mem: 1024
```

See Usage documentation.

See How to build.
