In today's data-driven era, processing and analyzing multimodal data (text, image, audio, video, and other data types) is becoming increasingly important. Integrating multimodal data ETL with machine learning (ML) makes it possible to build and optimize AI pipelines more efficiently, enabling a seamless transition from data to intelligent decisions. This article describes the fully managed Ray service provided by Alibaba Cloud AnalyticDB for MySQL, a cloud-native data warehouse. The service unlocks the potential of AI pipelines in data warehouses and seamlessly integrates multimodal data ETL with ML.

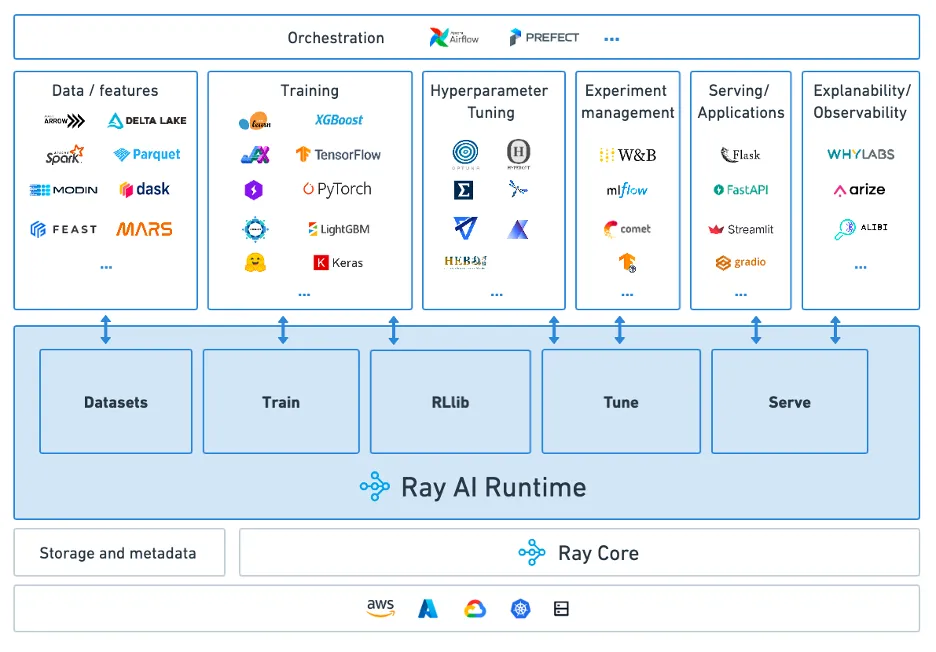

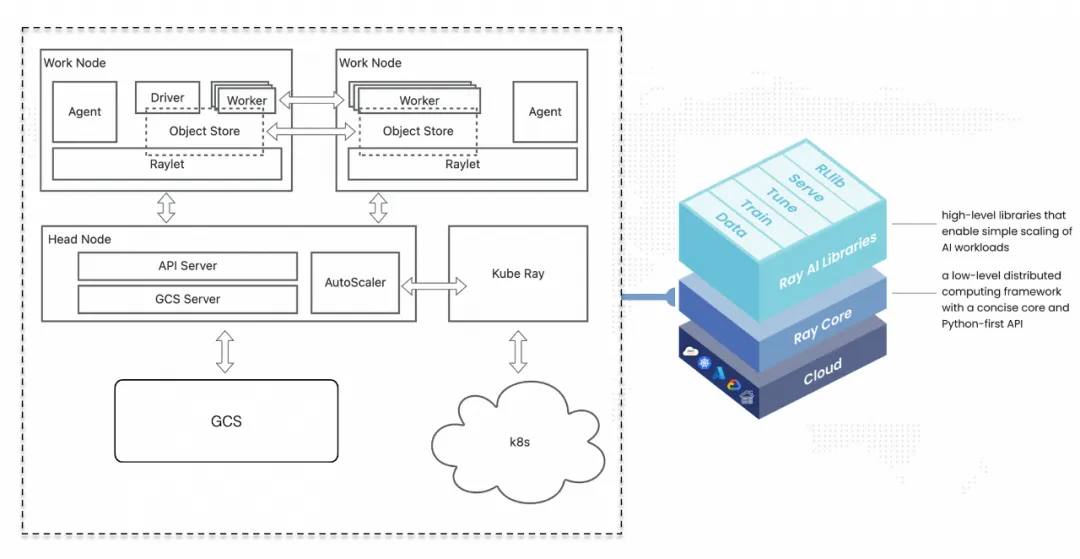

Open-source Ray is a distributed computing framework designed specifically for AI and high-performance computing. It originated at UC Berkeley's RISELab, the successor to the AMPLab that produced Apache Spark. Ray abstracts distributed scheduling behind a concise API: with only a few lines of code, you can scale a stand-alone task to a thousand-node cluster and schedule remote resources as if you were calling a local function. Built-in libraries such as Ray Tune, Ray Train, and Ray Serve are compatible with the TensorFlow and PyTorch ecosystems and support scenarios such as reinforcement learning and big data processing. An active open-source community and backing from companies such as Anyscale make it a strong choice for building AI applications quickly.
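To make the "remote call that looks like a local call" idea concrete, here is a minimal sketch using Ray's core task API (`ray.init`, `ray.remote`, `ray.get`). The names `square` and `square_all_on_ray` are illustrative and do not come from the article; Ray is imported lazily so that the plain function remains usable on a machine without Ray installed.

```python
from typing import Iterable, List


def square(x: int) -> int:
    """Ordinary Python function; nothing Ray-specific here."""
    return x * x


def square_all_on_ray(values: Iterable[int]) -> List[int]:
    """Run `square` as parallel Ray tasks (hypothetical helper)."""
    import ray  # lazy import: only needed when actually running on Ray

    # Connect to Ray, starting a local instance if none is found.
    # ignore_reinit_error makes repeated calls harmless.
    ray.init(ignore_reinit_error=True)

    # Wrap the plain function as a remote function.
    remote_square = ray.remote(square)

    # .remote() returns immediately with an ObjectRef (a future).
    refs = [remote_square.remote(v) for v in values]

    # ray.get blocks until every task finishes; results keep input order.
    return ray.get(refs)
```

With Ray installed, `square_all_on_ray(range(4))` dispatches four tasks and collects their results in input order. The same code applies whether Ray runs on a laptop or is attached to a larger cluster.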

Core Highlights of Ray:

● A Unified Framework for All Distributed Computing Scenarios

● Dynamic Resource Scheduling & Efficient Execution: Enables fine-grained, elastic resource scheduling, allocating CPU, GPU, memory, and custom resources on demand. It supports efficient data exchange through formats like Apache Arrow and TensorFlow Datasets to accelerate data processing.

● Multi-Cloud and Large-Scale Scalability: Supports containerized deployment via Kubernetes, Docker Swarm, and others, allowing seamless use of multi-cloud resources. It is ideal for EB-scale data processing and handling models with hundreds of billions of parameters.
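The on-demand allocation of CPU and GPU described above is expressed in open-source Ray as per-task resource requests. The sketch below assumes a two-stage pipeline with made-up stage names (`decode`, `embed`) and illustrative resource numbers; only `ray.remote(...)` with `num_cpus` / `num_gpus`, `.remote()`, and `ray.get` are Ray APIs.

```python
from typing import Dict, List

# Hypothetical per-stage resource requests; the numbers are illustrative only.
STAGE_RESOURCES: Dict[str, Dict[str, float]] = {
    "decode": {"num_cpus": 1},
    # A fractional GPU request lets two such tasks share one device.
    "embed": {"num_cpus": 1, "num_gpus": 0.5},
}


def decode(record: str) -> List[str]:
    """CPU-only stage: normalize and tokenize a text record."""
    return record.lower().split()


def embed(tokens: List[str]) -> List[int]:
    """Stand-in for a GPU model call; here it just returns token lengths."""
    return [len(t) for t in tokens]


def run_on_ray(records: List[str]) -> List[List[int]]:
    """Chain both stages as Ray tasks (needs a cluster with at least one GPU)."""
    import ray  # lazy import so the stage functions work without Ray

    ray.init(ignore_reinit_error=True)

    # ray.remote(**options) returns a decorator that attaches the requests.
    decode_remote = ray.remote(**STAGE_RESOURCES["decode"])(decode)
    embed_remote = ray.remote(**STAGE_RESOURCES["embed"])(embed)

    token_refs = [decode_remote.remote(r) for r in records]
    # Passing ObjectRefs as arguments chains the tasks without first
    # pulling intermediate results back to the driver.
    return ray.get([embed_remote.remote(ref) for ref in token_refs])
```

Ray places each task only on a node that can satisfy its request, so an `embed` task stays pending if no GPU is available. Custom resources can be requested in the same way through the `resources={...}` option.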

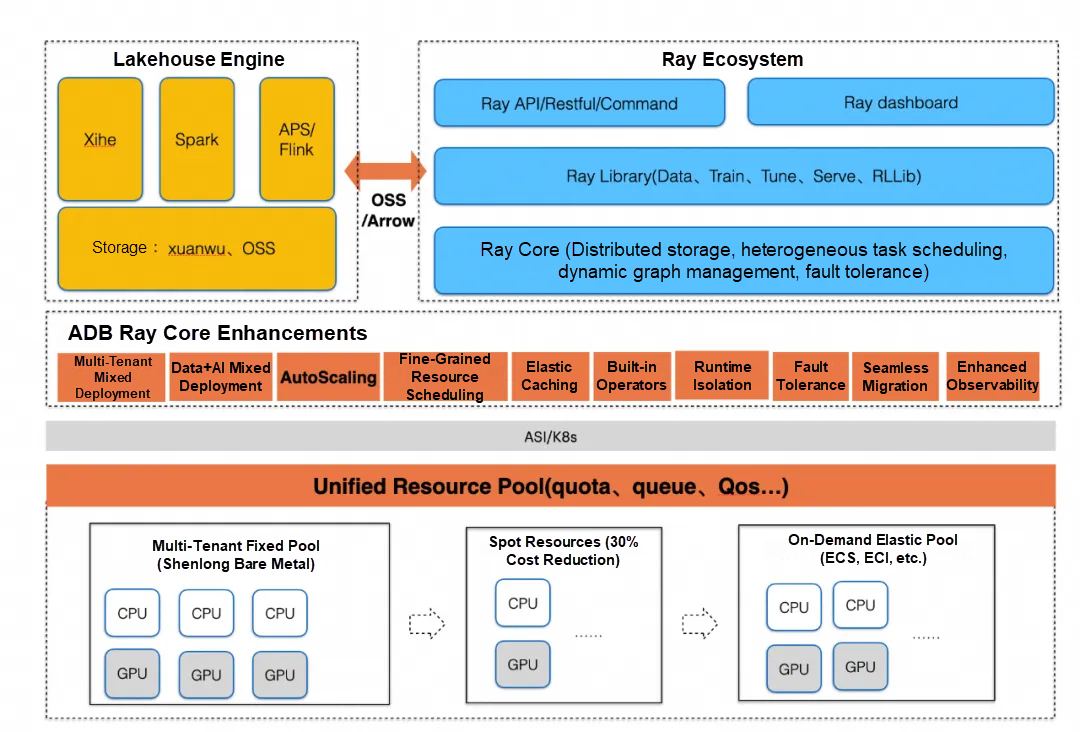

Open-source Ray gives developers a highly flexible distributed computing framework. In production, however, enterprises often face challenges such as distributed job optimization, fine-grained resource scheduling, cluster O&M, stability, and high availability. This is where AnalyticDB Ray comes in.

AnalyticDB Ray is the fully managed Ray service launched by AnalyticDB for MySQL, built on the rich ecosystem of open-source Ray. It has been refined in scenarios such as multimodal processing, embodied intelligence, search and recommendation, and financial risk control, with full-stack enhancements to the Ray kernel and service capabilities. Developers can focus on their applications without worrying about cluster O&M, while benefiting from the cost-performance optimizations in the AnalyticDB Ray core. It also integrates seamlessly with the AnalyticDB lakehouse platform to build an integrated Data+AI architecture, accelerating the enterprise-scale adoption of AI.

Overview of AnalyticDB Ray's Core Enhancements Compared to Open-Source Ray:

|

|

AnalyticDB Ray features |

|

|

Ease of use |

Automatically create a RayCluster |

The console provides a one-click, GUI-based deployment capability¹. Users can create a RayCluster by simply creating an AI resource group and configuring the resource specifications for the Head and Worker nodes. |

|

Built-in LLM toolchain |

Includes built-in tools for one-click distillation, fine-tuning, inference, and evaluation of LLMs for reinforcement learning. |

|

|

Built-in embodied AI toolchain |

As a resource scheduling foundation for the Python ecosystem, AnalyticDB Ray supports frameworks like Cosmos, NeMo Curator, and GROOT N1 for data simulation, synthesis, and model fine-tuning. |

|

|

Ecosystem integration |

lance |

Integrates with Lance for storing and processing multimodal data. |

|

llama-factory |

Supports distributed fine-tuning via llama-factory-on-ray. |

|

|

spark |

Supports hybrid resource deployment of Spark on Ray via Ray DP. |

|

|

Cost-effectiveness |

Multi-tenant/job resource isolation |

Resolves resource isolation and sharing issues between tenants and jobs through vClusters and shared resource groups. |

|

Deep Data + AI Integration |

AnalyticDB natively supports PB-scale data storage and analysis. Combined with Ray, it connects the entire pipeline from data processing and multi-source feature engineering to model inference. It also improves resource utilization by allowing Ray, AnalyticDB real-time analytics, and Spark workloads to share resources. |

|

|

AutoScaling |

Automatically scales GPU/CPU resources up or down based on workload. It also supports low-cost Spot instances. |

|

|

Elastic caching |

Elastically provisions caching service resources based on the data volume and bandwidth requirements of Ray's read/write operations. |

|

|

Fine-grained resource scheduling |

Automatically schedules tasks based on node resource utilization and adds isolation mechanisms for GPU multi-tenant overselling, along with affinity/anti-affinity scheduling policies between tasks. |

|

|

Stability & HA |

Seamless migration and self-healing |

Supports seamless rolling upgrades for clusters and automatic recovery from node failures. |

|

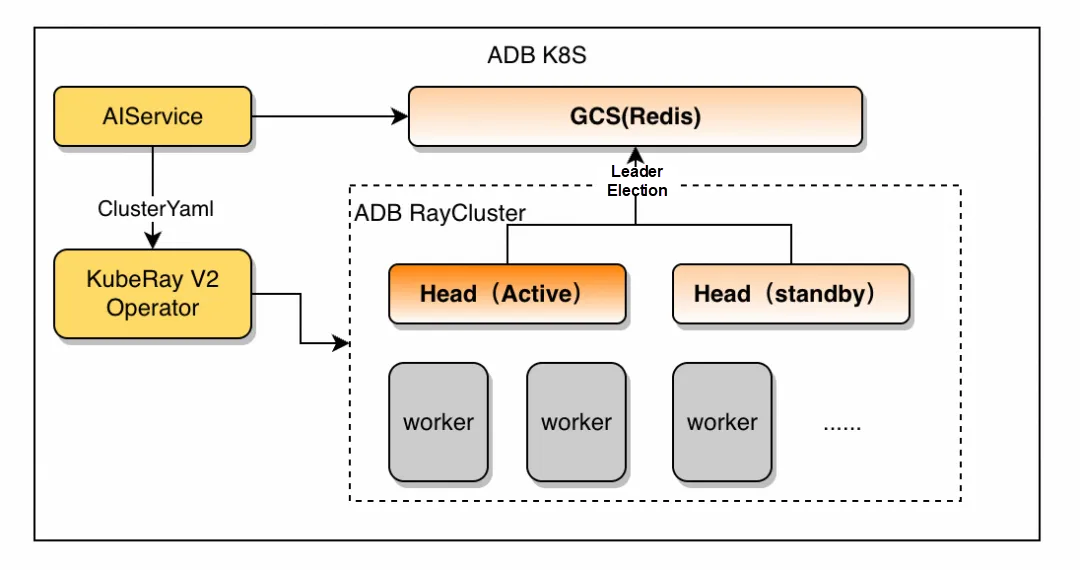

High availability |

Supports primary/standby Head nodes for high availability. |

|

|

Observability |

Monitoring kanban |

Provides persistent task dashboards and unified observability management across multiple clusters. |

[1] https://www.alibabacloud.com/help/en/analyticdb/analyticdb-for-mysql/user-guide/managed-ray-service

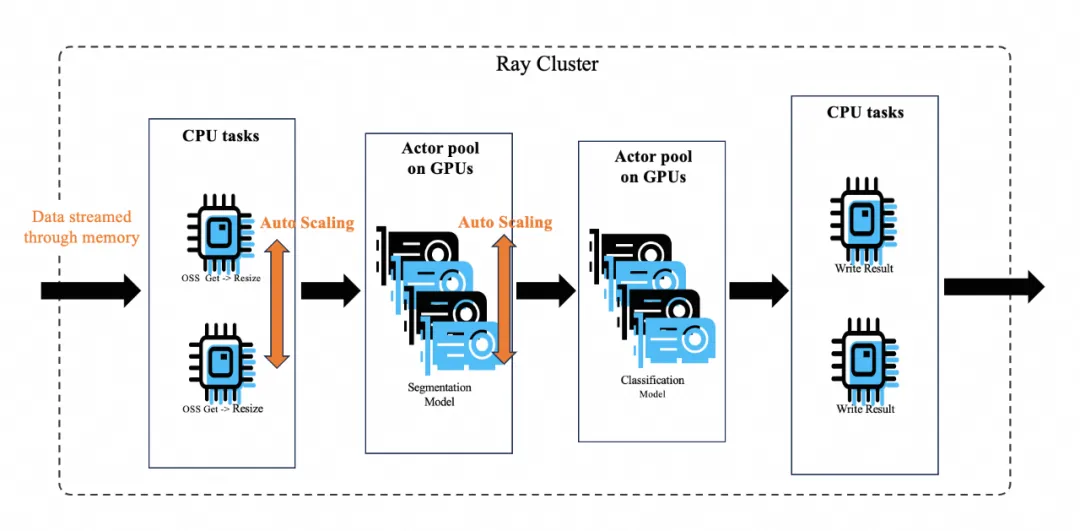

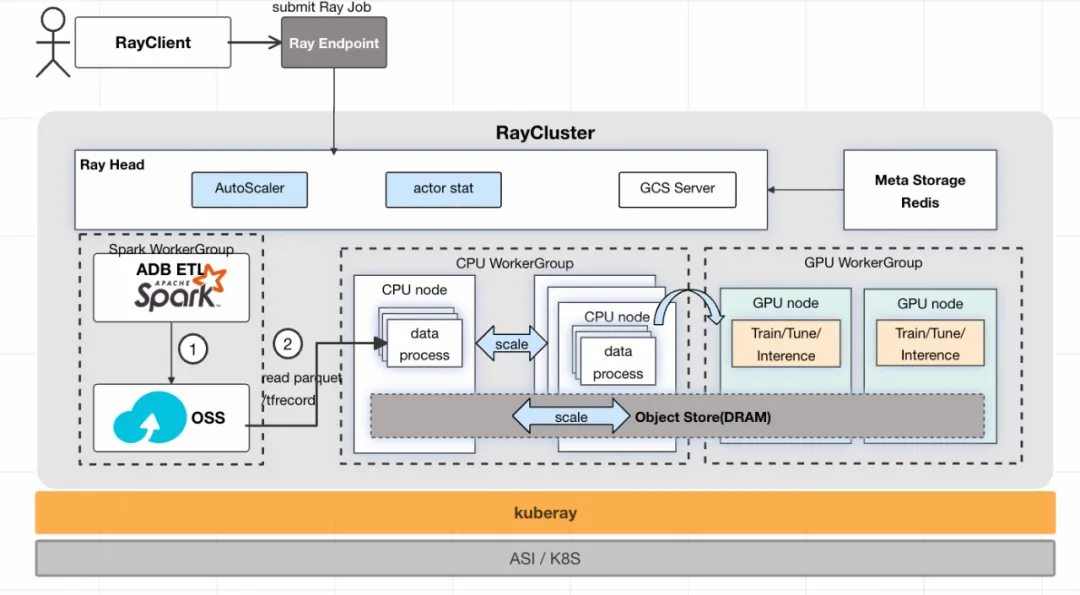

● Streaming Computation: Uses a streaming computation model in which intermediate data is kept in the Ray object store, avoiding the intermediate disk writes that batch mode incurs.

● Heterogeneous Auto-scaling: For data processing that requires both CPU and GPU resources, it independently and automatically scales CPU and GPU resources, maximizing the utilization of scarce GPU resources.

● Head Node HA: Fails over within 5 seconds to keep inference services, high-quality tasks, and multi-tenant clusters stable.

● Metadata: The metadata store supports hot standby and cross-region disaster recovery.

● Reinforcement Learning Observability: A visual monitoring dashboard provides real-time tracking of task status. For reinforcement learning scenarios, it supports Actor/Task-level topology analysis, improving problem diagnosis efficiency by 80%.
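The heterogeneous auto-scaling point above can be illustrated with a toy model in which the CPU pool and the GPU pool are sized independently from their own backlogs. This is a sketch under stated assumptions, not AnalyticDB Ray's actual policy: `PoolState`, `desired_workers`, and `plan` are hypothetical names, and the sizing rule (backlog divided by per-worker capacity, clamped to limits) is chosen only for clarity.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class PoolState:
    """Snapshot of one worker pool (hypothetical structure)."""
    pending_tasks: int     # tasks currently waiting for this resource type
    tasks_per_worker: int  # tasks one worker can process concurrently
    min_workers: int       # floor, e.g. 0 to allow scale-to-zero
    max_workers: int       # ceiling, e.g. a quota or budget limit


def desired_workers(pool: PoolState) -> int:
    """Workers needed to absorb the backlog, clamped to the pool limits."""
    needed = math.ceil(pool.pending_tasks / pool.tasks_per_worker)
    return max(pool.min_workers, min(pool.max_workers, needed))


def plan(cpu: PoolState, gpu: PoolState) -> Dict[str, int]:
    """Size each pool from its own backlog only, so a CPU-heavy
    preprocessing burst never forces extra GPU workers to start."""
    return {
        "cpu_workers": desired_workers(cpu),
        "gpu_workers": desired_workers(gpu),
    }
```

For example, a backlog of 100 CPU tasks at 8 tasks per worker yields 13 CPU workers, while a GPU pool with no pending work and `min_workers=0` is planned at zero. Keeping the two decisions separate is what prevents scarce GPU capacity from sitting idle behind CPU-bound stages.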

Scenario: Predicting Click-Through Rates (CTR) for ad recommendations to identify target audiences. Offline batch inference is run at night, and the prediction results are delivered to the business team's AnalyticDB data warehouse tables.

Solutions:

Benefits:

Scenario: Prepare data for training large language models.

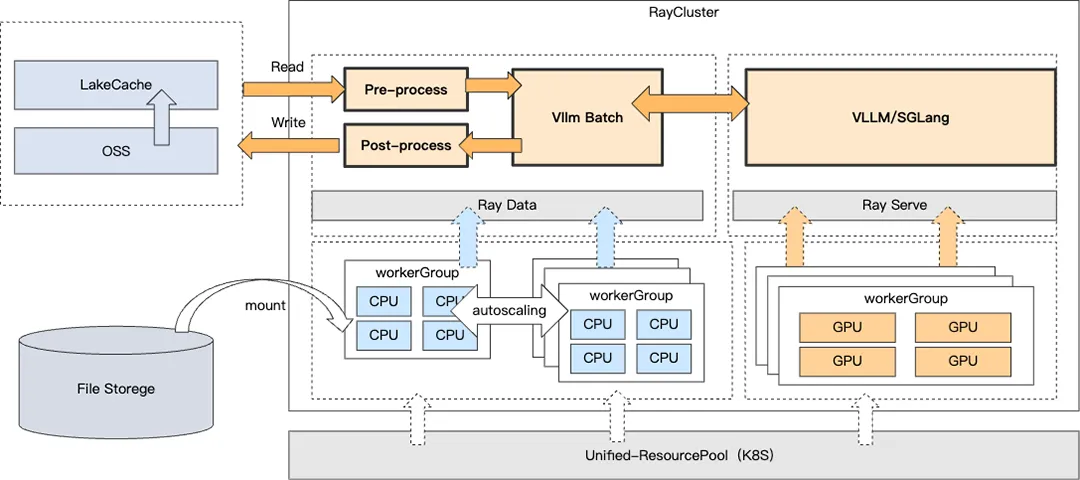

Scenario: Use Ray Data with vLLM/SGLang to deploy models such as Qwen and DeepSeek for data distillation. The distilled data is then used to train the large models.
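A minimal sketch of this pattern with open-source Ray Data and vLLM might look as follows. The teacher model ID, prompt template, column names, and the helper `distill` are assumptions made for illustration; the sketch also assumes a recent Ray release in which `map_batches` accepts `concurrency`, and a cluster with GPUs available. Ray and vLLM are imported lazily so the prompt helper can be used on its own.

```python
from typing import Any, Dict, List

# Assumed teacher model for illustration; substitute the model you deploy.
TEACHER_MODEL = "Qwen/Qwen2.5-7B-Instruct"


def build_prompt(question: str) -> str:
    """Hypothetical prompt template for generating teacher answers."""
    return f"Answer step by step.\n\nQuestion: {question}\nAnswer:"


class TeacherModel:
    """Callable class for Ray Data: each actor loads one vLLM engine
    once and reuses it for every batch it receives."""

    def __init__(self) -> None:
        from vllm import LLM, SamplingParams  # lazy: requires a GPU node

        self.llm = LLM(model=TEACHER_MODEL)
        self.params = SamplingParams(temperature=0.7, max_tokens=512)

    def __call__(self, batch: Dict[str, Any]) -> Dict[str, List[str]]:
        questions = [str(q) for q in batch["question"]]
        outputs = self.llm.generate(
            [build_prompt(q) for q in questions], self.params
        )
        # Keep the first completion of each request as the distilled answer.
        answers = [o.outputs[0].text for o in outputs]
        return {"question": questions, "answer": answers}


def distill(questions: List[str], output_path: str, num_replicas: int = 1) -> None:
    """Generate teacher answers in parallel and write them as Parquet."""
    import ray  # lazy import

    ds = ray.data.from_items([{"question": q} for q in questions])
    ds = ds.map_batches(
        TeacherModel,
        concurrency=num_replicas,  # number of model replicas (actors)
        num_gpus=1,                # one GPU per replica
        batch_size=32,
    )
    ds.write_parquet(output_path)
```

Using a class rather than a function for `map_batches` matters here: model weights are loaded once per actor instead of once per batch, which is usually the dominant cost in LLM batch inference.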

Benefits:

Scenario: Creating personalized, interactive multimodal experiences.

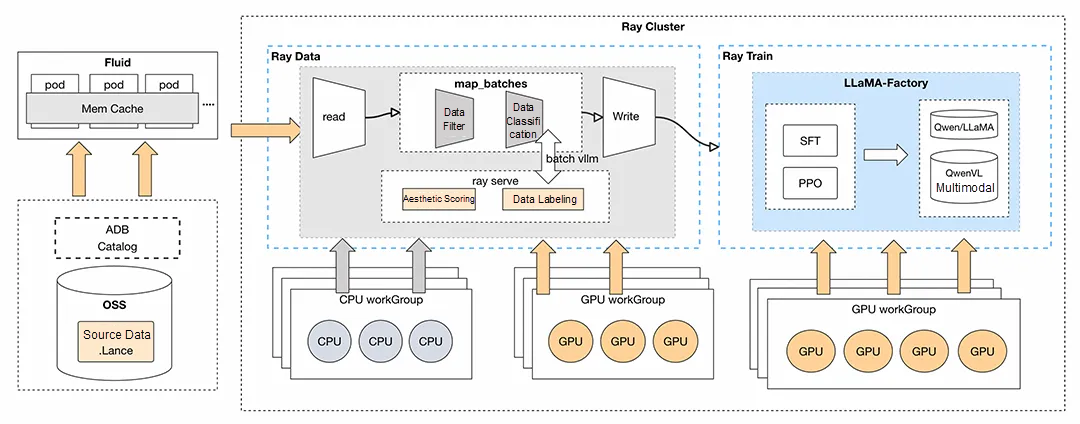

Solution: With AnalyticDB Ray at the core, integrate Lance and use Ray Data to strengthen distributed processing and structuring of image-text data. In addition, integrate LLaMA-Factory to provide distributed fine-tuning of the Qwen-VL multimodal model on Ray.

Benefits:

Official documentation: https://www.alibabacloud.com/help/en/analyticdb/analyticdb-for-mysql/user-guide/managed-ray-service
