This series of articles explores some of the common problems enterprise customers encounter when using Kubernetes. This second article in the series addresses the problem of legacy applications that cannot identify container resource restrictions in Docker and Kubernetes environments.

Linux uses cgroups to implement container resource restrictions, but the host's procfs /proc directory is still mounted by default in the container. This directory includes meminfo, cpuinfo, stat, uptime, and other resource information. Some monitoring tools, such as "free" and "top", and legacy applications still acquire resource configuration and usage information from these files. When they run in a container, they read the host's resource status, which leads to errors and inconveniences.

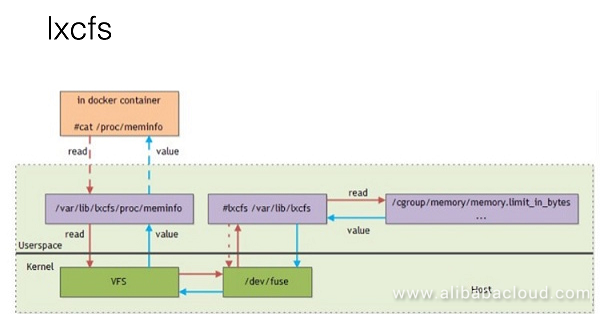
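The mismatch can be made concrete with a short sketch. It assumes a cgroup v1 host, where the memory limit is exposed at /sys/fs/cgroup/memory/memory.limit_in_bytes (cgroup v2 hosts use a different layout). The helper names are illustrative and are not part of any tool mentioned in this article.

```python
from pathlib import Path
from typing import Tuple

# Assumption: cgroup v1 location of the container's memory limit.
CGROUP_V1_LIMIT = Path("/sys/fs/cgroup/memory/memory.limit_in_bytes")


def meminfo_total_kb(meminfo_text: str) -> int:
    """Return the MemTotal value (in kB) from /proc/meminfo-style text."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1])
    raise ValueError("MemTotal not found")


def visible_vs_actual_kb(meminfo_text: str, cgroup_limit_bytes: int) -> Tuple[int, int]:
    """Return (what legacy tools see, what the container may actually use) in kB."""
    seen = meminfo_total_kb(meminfo_text)
    # An unlimited cgroup reports a very large value, so cap it at the host total.
    actual = min(seen, cgroup_limit_bytes // 1024)
    return seen, actual


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    # Only attempt the comparison where both files are present.
    if meminfo.exists() and CGROUP_V1_LIMIT.exists():
        seen, actual = visible_vs_actual_kb(
            meminfo.read_text(), int(CGROUP_V1_LIMIT.read_text())
        )
        print(f"/proc/meminfo reports {seen} kB, cgroup allows {actual} kB")
```

Run inside a memory-limited container without LXCFS, the two numbers would be expected to differ: the first reflects the host, the second the container's limit.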

A common solution proposed in the community is to use LXCFS to provide resource visibility in the container. LXCFS is an open source FUSE (Filesystem in Userspace) file system designed to support LXC containers. It also works with Docker containers.

LXCFS uses a FUSE file system to provide the following procfs files in the container:

/proc/cpuinfo

/proc/diskstats

/proc/meminfo

/proc/stat

/proc/swaps

/proc/uptime

Schematic of LXCFS:

For example, the host's /var/lib/lxcfs/proc/meminfo file is mounted at /proc/meminfo in the Docker container. Then, when processes in the container read that file, the LXCFS FUSE implementation reads the correct memory limit from the container's cgroup and returns it. In this manner, applications obtain the correct resource constraint settings.
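As a rough illustration of how such a file could be derived from cgroup data, the sketch below recomputes MemTotal and MemFree from a cgroup limit and a usage figure. It is a simplified model and not the actual LXCFS code, which is written in C and adjusts more fields. The function name and its parameters are hypothetical.

```python
def render_container_meminfo(host_meminfo: str, limit_bytes: int, usage_bytes: int) -> str:
    """Rewrite MemTotal/MemFree in meminfo text to reflect a cgroup memory limit."""
    host_total_kb = None
    for line in host_meminfo.splitlines():
        if line.startswith("MemTotal:"):
            host_total_kb = int(line.split()[1])
            break
    if host_total_kb is None:
        raise ValueError("MemTotal not found")

    # A container can never see more memory than the host has.
    total_kb = min(host_total_kb, limit_bytes // 1024)
    free_kb = max(total_kb - usage_bytes // 1024, 0)

    overrides = {"MemTotal": total_kb, "MemFree": free_kb}
    out = []
    for line in host_meminfo.splitlines():
        key = line.split(":", 1)[0]
        if key in overrides:
            # Keep the "Key:   value kB" layout that tools such as free parse.
            line = f"{key + ':':<16}{overrides[key]:>8} kB"
        out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # Made-up host values, used only to exercise the function.
    host = (
        "MemTotal:       16266204 kB\n"
        "MemFree:         8123456 kB\n"
        "Buffers:          204800 kB\n"
    )
    print(render_container_meminfo(host, 256 * 1024 * 1024, 708 * 1024), end="")
```

With a 256 MB limit and 708 kB in use, the sketch yields a MemTotal of 262144 kB and a MemFree of 261436 kB, the same figures that free reports in the Docker test later in this article.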

Here, we use CentOS 7.4 as the testing environment and have already enabled support for the FUSE module. Because Docker for Mac, Minikube, and other development environments use highly tailored operating systems, they do not support FUSE and therefore cannot run LXCFS for testing.

Install the LXCFS RPM package

    wget https://copr-be.cloud.fedoraproject.org/results/ganto/lxd/epel-7-x86_64/00486278-lxcfs/lxcfs-2.0.5-3.el7.centos.x86_64.rpm
    yum install lxcfs-2.0.5-3.el7.centos.x86_64.rpm

Start LXCFS

    lxcfs /var/lib/lxcfs &

Test

    $ docker run -it -m 256m \
        -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
        -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
        -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
        -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
        -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
        -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
        ubuntu:16.04 /bin/bash

    root@f4a2a01e61cd:/# free
                  total        used        free      shared  buff/cache   available
    Mem:         262144         708      261436        2364           0      261436
    Swap:             0           0           0

We can see that the total memory is 256 MB, so the configuration has taken effect.

Some users have asked how they can use LXCFS in a Kubernetes cluster environment. To answer their question, we will provide an example to be used for reference.

First, we must install and start LXCFS on the cluster nodes. Here, we take the Kubernetes approach and run the LXCFS FUSE file system in containers managed by a DaemonSet.

All the sample code used in this article can be obtained from the following GitHub address:

    git clone https://github.com/denverdino/lxcfs-initializer
    cd lxcfs-initializer

The manifest file is as follows:

    apiVersion: apps/v1beta2
    kind: DaemonSet
    metadata:
      name: lxcfs
      labels:
        app: lxcfs
    spec:
      selector:
        matchLabels:
          app: lxcfs
      template:
        metadata:
          labels:
            app: lxcfs
        spec:
          # Share the host PID namespace with the LXCFS container
          hostPID: true
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          containers:
          - name: lxcfs
            image: registry.cn-hangzhou.aliyuncs.com/denverdino/lxcfs:2.0.8
            imagePullPolicy: Always
            # Run in privileged mode
            securityContext:
              privileged: true
            volumeMounts:
            - name: rootfs
              mountPath: /host
          volumes:
          # Expose the host root file system to the container at /host
          - name: rootfs
            hostPath:
              path: /

NOTE: Because the LXCFS FUSE must share the system PID namespace and requires privileged mode, we have configured the relevant container startup parameters.

Using the following command, we can automatically install and deploy LXCFS on all cluster nodes. Easy, right? :-)

    kubectl create -f lxcfs-daemonset.yaml

So how do we use LXCFS in Kubernetes? As in the Docker example above, we can add volume and volumeMounts definitions for the files under /proc to the pod definition. However, because this makes the Kubernetes application deployment file more complicated, is there a way to have the system mount the relevant files automatically?
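To make the manual approach concrete, the sketch below generates the volumes and volumeMounts entries for the six LXCFS-backed files as a pod spec fragment. The lxcfs-proc-* volume names follow the kubectl describe output shown later in this article. The function name is hypothetical, and the fragment still has to be merged into a real manifest by hand.

```python
import json

LXCFS_ROOT = "/var/lib/lxcfs/proc"
PROC_FILES = ["cpuinfo", "diskstats", "meminfo", "stat", "swaps", "uptime"]


def lxcfs_pod_fragment(files=PROC_FILES):
    """Build hostPath volumes and matching volumeMounts for LXCFS-backed /proc files."""
    volumes = [
        {"name": f"lxcfs-proc-{name}", "hostPath": {"path": f"{LXCFS_ROOT}/{name}"}}
        for name in files
    ]
    mounts = [
        {"name": f"lxcfs-proc-{name}", "mountPath": f"/proc/{name}"}
        for name in files
    ]
    return {"volumes": volumes, "volumeMounts": mounts}


if __name__ == "__main__":
    # JSON output keeps the sketch free of third-party YAML libraries.
    print(json.dumps(lxcfs_pod_fragment(), indent=2))
```

In a pod template, the volumes list belongs under the pod spec and the volumeMounts list under each container. Repeating twelve such entries in every manifest is the overhead that motivates automating the step.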

Kubernetes provides an Initializer extension that can be used for interception and injection during resource creation. This gives us an elegant method by which to automatically mount LXCFS files.

Note: Alibaba Cloud Kubernetes clusters provide Initializer support by default. For testing on self-built clusters, see the instructions for enabling the function in this document.

The manifest file is as follows:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: lxcfs-initializer-default
      namespace: default
    rules:
    - apiGroups: ["*"]
      resources: ["deployments"]
      verbs: ["initialize", "patch", "watch", "list"]
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: lxcfs-initializer-service-account
      namespace: default
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: lxcfs-initializer-role-binding
    subjects:
    - kind: ServiceAccount
      name: lxcfs-initializer-service-account
      namespace: default
    roleRef:
      kind: ClusterRole
      name: lxcfs-initializer-default
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      initializers:
        pending: []
      labels:
        app: lxcfs-initializer
      name: lxcfs-initializer
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: lxcfs-initializer
          name: lxcfs-initializer
        spec:
          serviceAccountName: lxcfs-initializer-service-account
          containers:
            - name: lxcfs-initializer
              image: registry.cn-hangzhou.aliyuncs.com/denverdino/lxcfs-initializer:0.0.2
              imagePullPolicy: Always
              args:
                - "-annotation=initializer.kubernetes.io/lxcfs"
                - "-require-annotation=true"
    ---
    apiVersion: admissionregistration.k8s.io/v1alpha1
    kind: InitializerConfiguration
    metadata:
      name: lxcfs.initializer
    initializers:
      - name: lxcfs.initializer.kubernetes.io
        rules:
          - apiGroups:
              - "*"
            apiVersions:
              - "*"
            resources:
              - deployments

Note: This describes the deployment of a typical Initializer. First, we create the service account lxcfs-initializer-service-account and authorize it to query and modify "deployments" resources. Then, we deploy an Initializer named "lxcfs-initializer", which uses this service account to start a container that handles the creation of "deployments" resources. If a deployment contains an annotation that sets initializer.kubernetes.io/lxcfs to true, the LXCFS files are mounted into the containers of that application.
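The decision the Initializer makes for each new deployment can be sketched as follows. This is a simplified model operating on a plain dictionary and not the code from the lxcfs-initializer repository. The function name is hypothetical, and the handling of the pending list and of the require-annotation flag reflects an assumption about how Kubernetes initializers of this kind typically behave.

```python
import copy

ANNOTATION = "initializer.kubernetes.io/lxcfs"
INITIALIZER_NAME = "lxcfs.initializer.kubernetes.io"
PROC_FILES = ["cpuinfo", "diskstats", "meminfo", "stat", "swaps", "uptime"]


def initialize_deployment(deployment: dict, require_annotation: bool = True) -> dict:
    """Return a copy of the deployment as an LXCFS initializer might leave it."""
    result = copy.deepcopy(deployment)
    metadata = result.setdefault("metadata", {})

    # Only act when this initializer is first in the pending list.
    pending = metadata.get("initializers", {}).get("pending", [])
    if not pending or pending[0].get("name") != INITIALIZER_NAME:
        return result

    annotations = metadata.get("annotations") or {}
    if annotations.get(ANNOTATION) == "true" or not require_annotation:
        pod_spec = result["spec"]["template"]["spec"]
        volumes = pod_spec.setdefault("volumes", [])
        for name in PROC_FILES:
            volumes.append({"name": f"lxcfs-proc-{name}",
                            "hostPath": {"path": f"/var/lib/lxcfs/proc/{name}"}})
        # Every container in the pod gets the same set of mounts.
        for container in pod_spec.get("containers", []):
            mounts = container.setdefault("volumeMounts", [])
            for name in PROC_FILES:
                mounts.append({"name": f"lxcfs-proc-{name}",
                               "mountPath": f"/proc/{name}"})

    # Remove this initializer from the pending list, whether or not it injected anything.
    if len(pending) == 1:
        metadata.pop("initializers")
    else:
        pending.pop(0)
    return result
```

A real Initializer would watch the API server for uninitialized deployments and send the result back as a patch, which is why the ClusterRole above grants the initialize, patch, watch, and list verbs.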

Run the following command to deploy the Initializer. Once deployment is complete, it is ready to use:

    kubectl apply -f lxcfs-initializer.yaml

Next, we will deploy a simple Apache application and allocate 256 MB of memory to it. In addition, we declare the following annotation: "initializer.kubernetes.io/lxcfs": "true".

The manifest file is as follows:

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      annotations:
        "initializer.kubernetes.io/lxcfs": "true"
      labels:
        app: web
      name: web
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: web
          name: web
        spec:
          containers:
            - name: web
              image: httpd:2
              imagePullPolicy: Always
              resources:
                requests:
                  memory: "256Mi"
                  cpu: "500m"
                limits:
                  memory: "256Mi"
                  cpu: "500m"

We can use the following method to deploy and test the application:

    $ kubectl create -f web.yaml
    deployment "web" created

    $ kubectl get pod
    NAME                   READY     STATUS    RESTARTS   AGE
    web-7f6bc6797c-rb9sk   1/1       Running   0          32s

    $ kubectl exec web-7f6bc6797c-rb9sk free
                 total       used       free     shared    buffers     cached
    Mem:        262144       2876     259268       2292          0        304
    -/+ buffers/cache:       2572     259572
    Swap:            0          0          0

We can see that the total memory returned by the free command is the container resource capacity we set.

We can check the pod configuration to see that the procfs files have been mounted correctly.

    $ kubectl describe pod web-7f6bc6797c-rb9sk
    ...
        Mounts:
          /proc/cpuinfo from lxcfs-proc-cpuinfo (rw)
          /proc/diskstats from lxcfs-proc-diskstats (rw)
          /proc/meminfo from lxcfs-proc-meminfo (rw)
          /proc/stat from lxcfs-proc-stat (rw)
    ...

In Kubernetes, we can also use PodPreset to implement a similar function. However, we will not describe this here due to limited space.

This article showed how to use LXCFS to provide container resource visibility, allowing legacy systems to better identify resource restrictions when running in containers.

In this article, we also showed how to use containers and a DaemonSet to deploy LXCFS FUSE. This approach not only greatly simplifies deployment, but also takes advantage of Kubernetes' own container management capabilities: it supports the automatic recovery of failed LXCFS processes and ensures consistent node deployment when the cluster is scaled. This technique also works for other similar monitors and system extensions.

In addition, we showed how to use the Kubernetes Initializer extension to automatically mount LXCFS files. This entire process is transparent to application deployment staff, greatly simplifying O&M tasks. Using similar methods, we can flexibly tailor application deployment activities to meet special business needs.

Alibaba Cloud Kubernetes Service is the first such service with certified Kubernetes consistency. It simplifies Kubernetes cluster lifecycle management and provides built-in integration for Alibaba Cloud products. In addition, the service further optimizes the Kubernetes developer experience, allowing users to focus on the value of cloud applications and further innovations.
