PouchContainer now serves most of the business units (BUs) of Alibaba Group and Ant Financial Services Group (Ant Financial), including trading and middleware, B2B/CBU/ICBU, and ads search database, as well as companies that Alibaba has acquired or invested in, such as Youku, AutoNavi, and UC Browser. Trading and e-commerce platforms contribute the most to Alibaba's volume. The 2018 Double 11 Global Shopping Festival saw a record-breaking gross merchandise volume (GMV), and one factor behind this success was that all applications ran on PouchContainer. Millions of container instances have been created in total. Applications that use PouchContainer cover a wide variety of scenarios. In terms of operating modes, there are standard online apps and special scenarios such as shopping carts, advertising, and test environments, each of which uses PouchContainer differently and has different requirements for it. In terms of programming languages, there are applications written in Java, C/C++, Node.js, Golang, and others. In terms of technology stacks, applications cover e-commerce, database, stream computing, big data, and private cloud scenarios. PouchContainer supports each of these scenarios and satisfies their different requirements for container features.

The PouchContainer technology has evolved along with Alibaba's technology architecture, which has undergone an evolution from a centralized single application to a distributed microservice architecture.

Taobao was originally a monolithic application that contained every stage of a trading link, including products, users, and orders. As features accumulated, the monolithic application became more and more difficult to maintain. To improve research and development (R&D) efficiency, in 2008 we started to gradually split this monolithic application into multiple distributed applications, including applications for products, transactions, and users, as well as frontend and backend applications. We connected these applications through the remote call framework High-speed Service Framework (HSF), the Taobao Distributed Data Layer (TDDL), and the distributed message middleware Notify. Each of these services ran multiple instances, could be developed and evolved independently, and could be split further. In this way, a large distributed service cluster gradually took shape. As the monolithic application gave way to many lightweight, single-function service-oriented applications, the total number of application instances increased, but the system resources required by each instance decreased. Prompted by this change, we began to use Xen, Kernel-based Virtual Machine (KVM), and other virtualization technologies instead of deploying each instance directly on a physical machine. After using virtual machine (VM) technology for some time, we found that the overall utilization of physical machines was still very low. At that time, a 24-core physical machine could be virtualized into only four 4-core VMs. Besides the considerable overhead of virtualization, each application instance could not use up the resources of the VM allocated to it. That is when we started to look for a more lightweight, process-level resource segmentation method that could replace VMs.
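The utilization problem described above can be made concrete with a little arithmetic. The following is a minimal sketch that uses the figures from the text (a 24-core host and four 4-core VMs). The function names and the average usage value of 0.5 are hypothetical and chosen only for illustration; they are not measurements from Alibaba.

```python
def allocated_fraction(host_cores: int, vm_count: int, cores_per_vm: int) -> float:
    """Fraction of a host's cores that are handed out to application VMs."""
    allocated = vm_count * cores_per_vm
    if allocated > host_cores:
        raise ValueError("VMs request more cores than the host has")
    return allocated / host_cores


def effective_utilization(host_cores: int, vm_count: int,
                          cores_per_vm: int, avg_vm_usage: float) -> float:
    """Host utilization when each VM uses only part of its allocation."""
    return allocated_fraction(host_cores, vm_count, cores_per_vm) * avg_vm_usage


# Figures from the text: a 24-core host split into four 4-core VMs.
print(f"{allocated_fraction(24, 4, 4):.0%} of host cores reach application VMs")

# Hypothetical assumption: each instance uses half of its VM on average.
print(f"{effective_utilization(24, 4, 4, 0.5):.0%} of host cores do useful work")
```

Under these assumptions, a third of the host is never offered to applications, and whatever the instances leave idle inside their VMs lowers the figure further.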

At that time, Alibaba had already developed a large internal operations and maintenance (O&M) system. The construction, deployment, and distribution of applications, as well as the runtime monitoring and alerting systems, all relied on the assumption that each application instance ran on an independent machine. This assumption had gradually shaped every R&D and O&M phase, including system design and O&M habits. We could not rebuild the cluster, stop the existing business, and resume it in a new cluster based on a new O&M model. That would have been unacceptable to both business and O&M: we could not afford to stop the R&D of e-commerce transactions for several months or suspend the system for several days. Therefore, we first had to guarantee that the new resource usage method was compatible with the original assumption. After carefully analyzing what this assumption implied, we derived the following requirements for each application instance:

First, each application instance must have a unique IP address and support SSH logon. Second, each application instance must have an independent file system dedicated to it. This implicit requirement has to be met because the existing code and configurations inevitably contain many hardcoded paths. Third, whether through tools or code, each application instance must see and access only the resources allocated to it. For example, an application instance allocated four CPUs and 8 GB of memory must be able to monitor the usage of exactly these resources and report alarms when conditions are met. In summary, these requirements meant that the new resource usage method had to provide the same user experience as physical machines or VMs. We could then smoothly migrate applications that originally ran on VMs to the new architecture without making major changes to the existing application and O&M systems.

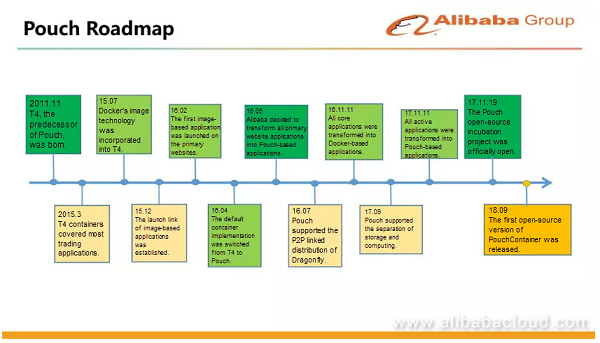

To meet the preceding requirements, Duo Long, one of our master engineers, first devised a method of manually hacking system calls, and later we used the glibc base library and other methods to isolate resources. For example, to assign a unique IP address and support SSH logon, we used a virtual network interface card (vNIC) and started an sshd process in each container. To meet the resource isolation and visibility requirements, we used kernel features such as cgroups and namespaces. Later, we noticed that the open-source Linux Containers (LXC) project was working in the same field, but in a more general and elegant way than manual hacking. Therefore, we integrated LXC into our architecture. We applied a custom resource visibility isolation patch to the kernel so that each user instance could see only the CPU and memory resources allocated to it. We also applied a directory-based disk space isolation patch to the kernel. In this way, we obtained our first-generation container product. We named this product T4, meaning the fourth generation of Taobao technology, Taobao 4.0. In 2011, we launched the T4 container technology in gray release mode. Compared to VMs, T4 eliminates the overhead of the hypervisor layer. It is more flexible in resource segmentation and allocation, and can support resource overselling at different levels. These features supported the explosive growth of business and freed us from adding physical machines in proportion to business growth. In addition, because T4 was fully compatible with the way R&D and O&M personnel had used physical machines and VMs, most applications could be switched over to T4 transparently without affecting business. Because of these features, T4 gradually took over the online applications of trading and e-commerce platforms during the next few years.

In 2015, Docker became popular. As programmers, we are familiar with a famous formula: Programs = Data structures + Algorithms. For programs that are delivered and used as a software product, we can use a similar formula: Software = Files (fileset) + Processes (process group).

From a static point of view, the construction, distribution, and deployment of software are ultimately based on a fileset with a hierarchy of dependencies. From a dynamic point of view, the fileset, including binary files and configuration files, is loaded by the operating system (OS) into memory for execution. From this view, software can be regarded as a group of interacting processes. Our previous T4 container was essentially similar to Docker from the process (process group) or runtime perspective. For example, they both use cgroups, namespaces, Linux Bridge, and other technologies. T4 also has its own unique features, such as directory-based disk space isolation, resource visibility isolation, and compatibility with earlier kernel versions. During our evolution from the earliest physical machines to VMs, and now to containers, the kernel has gone through a long upgrade cycle with slow iterations. In 2015, all existing machines used Linux kernel 2.6.32, with which T4 was compatible. However, Docker handles files (filesets) better and more systematically. T4 creates only a thin image that provides a basic operating and configuration environment shared within a business domain, and does not incorporate each specific application into the image. In contrast, Docker packages the entire dependency stack of each application into an image. So in 2015, we introduced Docker's image technology to improve our containers.

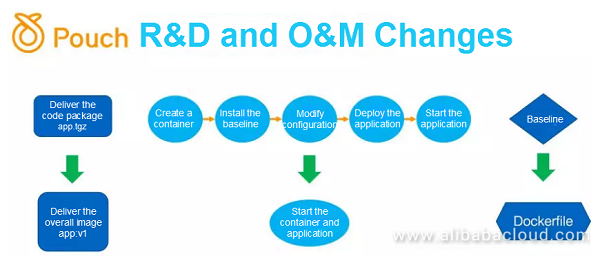

The integration of Docker's image technology greatly affected the original T4-based R&D and O&M system. First of all, the delivery method changed. Previously, we built a code package for an application and submitted it to our deployment and release system. The system created an empty container from a thin business template and started it. It then installed the dependent RPM packages in the container one by one according to a configuration list specified by the application, set the configuration items, and decompressed the application code package to start the application. Internally, we called an application's dependent software and configuration list its baseline. After the image technology was introduced, we packaged an application's code package together with all the second-party and third-party software it depends on into an image, which is described by a Dockerfile. We used to maintain an application's dependent environment through its baseline. Now, we define this environment in each application's own Dockerfile. The entire R&D, construction, distribution, and O&M process is greatly simplified.
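To illustrate the move from a baseline to a Dockerfile, here is a minimal sketch that renders a Dockerfile from a baseline-like description. The `Baseline` class, its fields, the `yum` install command, and paths such as `/home/admin/app/` are hypothetical; the article does not describe the real baseline format or the internal tooling. Only the Dockerfile instructions themselves (`FROM`, `RUN`, `ENV`, `ADD`, `CMD`) are standard.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Baseline:
    """Hypothetical model of a baseline: dependent software plus configuration."""
    base_image: str
    packages: list[str] = field(default_factory=list)
    env: dict[str, str] = field(default_factory=dict)
    code_package: str = "app.tgz"
    start_command: list[str] = field(
        default_factory=lambda: ["/home/admin/start.sh"])


def render_dockerfile(baseline: Baseline) -> str:
    """Turn the baseline into Dockerfile text, one instruction per line."""
    lines = [f"FROM {baseline.base_image}"]
    if baseline.packages:
        # Assumes an RPM-based base image with yum available.
        lines.append("RUN yum install -y " + " ".join(baseline.packages))
    for key, value in sorted(baseline.env.items()):
        lines.append(f'ENV {key}="{value}"')
    # ADD unpacks a local tar archive into the target directory.
    lines.append(f"ADD {baseline.code_package} /home/admin/app/")
    # Exec-form CMD is a JSON array, so json.dumps produces valid syntax.
    lines.append("CMD " + json.dumps(baseline.start_command))
    return "\n".join(lines) + "\n"


print(render_dockerfile(Baseline(
    base_image="example/base:1.0",      # hypothetical image name
    packages=["openssl", "libaio"],
    env={"APP_ENV": "production"},
)))
```

The point of the sketch is that everything the deployment system previously assembled step by step inside an empty container becomes a single, versioned text file that travels with the application.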

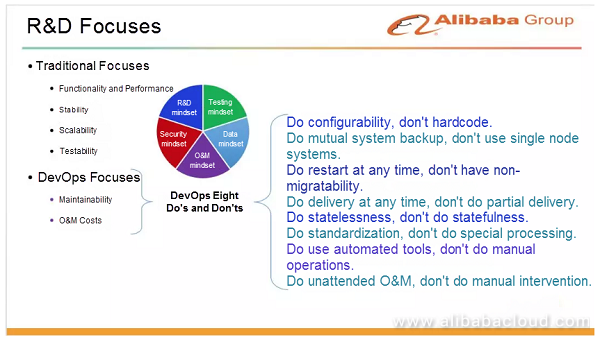

After these changes were made, the responsibilities and boundaries between R&D and O&M also changed. In the past, R&D personnel needed to focus only on aspects such as functionality, performance, stability, scalability, and testability. Now that the image technology has been introduced, R&D personnel have to write a Dockerfile for each application. They must understand the dependencies and operating environment that an application needs in order to run. These used to be the responsibilities of O&M personnel. Once R&D personnel have sorted out and started to maintain an application's dependent environment, they can tell whether the application's dependencies are reasonable and whether they can be optimized. In addition, R&D personnel need to pay more attention to the maintainability and O&M costs of each application, such as whether an application is stateful or stateless, because the O&M costs of stateful applications are relatively high. This shift in responsibilities motivates R&D personnel to develop full-stack capabilities. With O&M problems taken into consideration, they gain a deeper understanding of how to design a better system. In this respect, the introduction of Docker also raises new requirements for R&D personnel. Facing the new era and new O&M model, we sum up the capability requirements for R&D personnel as several principles, as shown in the following figure.

To build better systems, we ask R&D personnel to consider ultimate maintainability from the first day they start to build a system. For example, they must consider whether parameters are configurable and whether the system can be restarted at any time. Hardware failures occur on machines every day, and we cannot handle all of them manually, so we must rely on automatic processing. During automatic processing, a failure may affect only some instances on a machine and leave the others intact, yet all instances need to be processed. An example is when the business on a faulty physical machine has to be completely migrated to another machine before the faulty machine is repaired. So, regardless of whether the business in a container is running normally, the container must be capable of being restarted and migrated at any time. In the past, we delivered only part of what an application needed. Now, we need to define a complete operating environment for an application and standardize operations as far as possible. For example, if the startup path has been written in an application's Dockerfile, no special handling should be added on top of it. Otherwise, we cannot implement unified scheduling or O&M, and unified business migration cannot be achieved even through machine relocation. Our goal was to develop automated tools and systems to move away from the original labor-intensive, ad hoc O&M mode. That is, we modeled the fixed troubleshooting processes of the earlier manual O&M phase and extracted mechanisms from them to support automatic failure handling and recovery. Our ultimate goal is to achieve unattended O&M. All of these are new requirements raised for R&D and O&M after we introduced the image technology and drove the evolution towards unattended O&M.
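The requirement that every container on a faulty machine can be migrated, whether or not it is healthy, can be sketched as a simple planning step. The following is an illustrative greedy placement only; the function name, the container and host names, and the CPU-only capacity model are all hypothetical and do not describe Alibaba's scheduling system.

```python
def plan_evacuation(containers: dict[str, int],
                    free_cpus: dict[str, int]) -> dict[str, str]:
    """Assign every container on a faulty host to a target host.

    containers: container name -> CPUs it needs (healthy or not, all move).
    free_cpus:  candidate host -> spare CPUs.
    """
    remaining = dict(free_cpus)
    plan: dict[str, str] = {}
    # Place the largest containers first so they are not left without room.
    for name, cpus in sorted(containers.items(), key=lambda kv: (-kv[1], kv[0])):
        # Pick the host with the most spare CPUs; ties go to the first name.
        target = max(sorted(remaining), key=lambda h: remaining[h], default=None)
        if target is None or remaining[target] < cpus:
            raise RuntimeError(f"no host can fit container {name!r}")
        plan[name] = target
        remaining[target] -= cpus
    return plan


plan = plan_evacuation(
    containers={"cart": 4, "search": 2, "ads": 2},
    free_cpus={"host-b": 4, "host-c": 6},
)
print(plan)  # {'cart': 'host-c', 'ads': 'host-b', 'search': 'host-b'}
```

A plan like this only works if applications follow the principles above: a container that cannot be restarted at any time, or that needs special startup handling outside its Dockerfile, cannot be moved automatically.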

The preceding figure shows the roadmap of PouchContainer. T4 was launched in 2011 and covered most trading applications by March 2015. Then, we introduced Docker's image technology and did a lot of compatibility work. For example, we converted the original lightweight template of T4 into a corresponding base image, which remained compatible with many previous O&M habits and tools, such as account push, security policy, and system check. In early 2016, we launched our first image-based application. In May 2016, Alibaba decided to implement containerization for all applications on Alibaba.com. Before the image technology was introduced, Alibaba had a team of 100 to 200 people who were responsible for the deployment, O&M, and stability control of each application. Later, this team was disbanded. All the team members were transferred to the development & operations (DevOps) department to build tools and O&M platforms and solve O&M problems through code. The heaviest burden on the former full-time O&M personnel was changing the online environment. After receiving a change request from R&D personnel, O&M personnel performed the online operations. Meanwhile, R&D personnel had no idea which basic software the code runtime environment depended on. After the image technology was introduced, R&D personnel needed to write each application's Dockerfile themselves, and O&M personnel could hand over environment change tasks to R&D personnel through the Dockerfile mechanism. The boundary between O&M and R&D is clearly defined by the Dockerfile. R&D personnel are responsible for defining the environment on which application code depends in the Dockerfile, whereas O&M personnel guarantee that no exception occurs during the construction and distribution of an application. On November 11, 2016, we completed the image-based and PouchContainer-based transformation of core trading applications. On November 11, 2017, we transformed all trading applications into image-based applications. Then, on November 19, 2017, we announced that PouchContainer was officially open sourced.

Through extensive practices inside Alibaba, our internal PouchContainer version had proven its support for a variety of business scenarios, various technology stacks, and different operating modes. We had accumulated plenty of experience. Initially, the experience was tightly coupled with Alibaba's internal environment. Take our network model as an example. We embedded it into Alibaba's internal network management platform. Independent internal systems were dedicated to specific tasks including IP address assignment. For example, a unified software defined network (SDN)-based network management system was used to manage when to enable an IP address and when to deliver routes. There were also similar internal storage systems and some instruction push systems used for O&M purposes. Because of the tight coupling with internal systems, the internal PouchContainer version could not be directly open sourced. Finally, we chose to incubate a new external project from scratch, and move internal features one by one to this project. During this process, we also reconstructed our internal version and developed plug-ins to decouple internal dependencies. In this way, the brand-new project could run properly in an external environment, and the internal version could also run properly by using plug-ins coupled with the internal environment. Our ultimate goal is to develop an open-source version that applies to both the internal and external environments.

The differences between PouchContainer and other containers lie mainly in isolation, image distribution optimization, rich container mode, large-scale application, and kernel compatibility. Traditional containers isolate resources based on namespaces and cgroups. To meet resource visibility requirements, for several years we applied a patch to the kernel. With this patch, statistics on the memory usage, CPU usage, and other metrics of a container were associated with the container's cgroup and namespaces, so that the resources a container could use and had used were reported specifically for that container. After we introduced LXCFS, a FUSE file system from the community designed mainly for LXC containers, this year, we no longer rely on this kernel patch. To limit disk space, we also applied a patch to earlier kernels to isolate disk space by file directory and limit the rootfs of each container. On kernels later than version 4.9, we use the Overlay2 file system in place of this patch to provide the same function. We are also working on a hypervisor-based container solution to improve the isolation and security of containers. We have integrated runV into PouchContainer to serve some multi-tenancy scenarios.
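The idea behind resource visibility can be sketched in a few lines: the memory total shown inside a container should be its cgroup limit, not the host's total. This is a conceptual illustration only, not how LXCFS or the kernel patch is implemented. The function names are hypothetical; the inputs mirror the text format of `/proc/meminfo` and of the cgroup memory limit files (`memory.limit_in_bytes` in cgroup v1, `memory.max` in cgroup v2).

```python
def parse_mem_total_kib(meminfo_text: str) -> int:
    """Extract MemTotal (in KiB) from /proc/meminfo-style text."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1])
    raise ValueError("MemTotal not found")


def visible_memory_bytes(cgroup_limit_text: str, host_total_bytes: int) -> int:
    """Memory total a container should see, given its cgroup limit."""
    raw = cgroup_limit_text.strip()
    if raw == "max":
        # cgroup v2 writes "max" when no limit is set.
        return host_total_bytes
    # cgroup v1 reports a very large number when unlimited, so cap at the host.
    return min(int(raw), host_total_bytes)


host_meminfo = "MemTotal:       131072000 kB\nMemFree:        90000000 kB\n"
host_total = parse_mem_total_kib(host_meminfo) * 1024

# A container limited to 8 GiB should see 8 GiB, not the host's memory.
print(visible_memory_bytes("8589934592\n", host_total) // 1024**3, "GiB")
```

Monitoring tools inside the container that read the adjusted value can then compute usage percentages and alarm thresholds against the container's own allocation.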

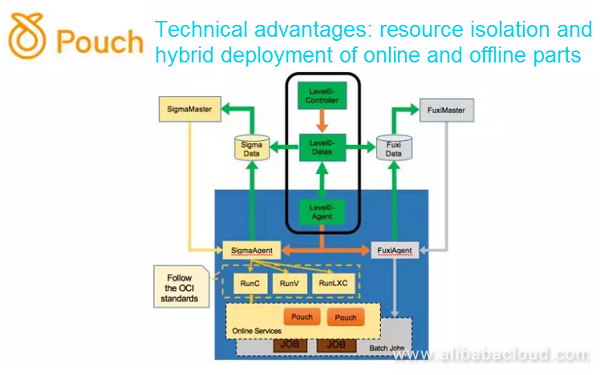

Alibaba has implemented an internal mechanism to run both online business and offline tasks on the same machine without significant interference. The core technique is to use PouchContainer to isolate the resources used by different workloads based on priority, so that online business gets preferential use of resources such as the CPU, memory, CPU cache, disk, and network.
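The priority rule can be illustrated with a toy allocation function: online business is satisfied first, and offline tasks receive only what is left. This is a hypothetical sketch of the policy for a single resource; the real isolation relies on kernel-level mechanisms that the article does not detail.

```python
def allocate_cpu(total_cpus: int, online_demand: int,
                 offline_demand: int) -> tuple[int, int]:
    """Split CPUs so that online business always takes priority."""
    online = min(online_demand, total_cpus)
    # Offline tasks may use only what online business leaves unused.
    offline = min(offline_demand, total_cpus - online)
    return online, offline


# Quiet period: online needs little, offline tasks soak up the idle CPUs.
print(allocate_cpu(32, 10, 40))  # (10, 22)

# Peak period: online demand grows and offline tasks are squeezed.
print(allocate_cpu(32, 30, 40))  # (30, 2)
```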

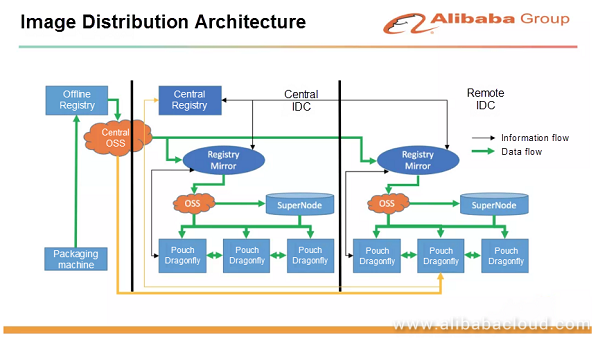

The preceding figure shows the image distribution design of PouchContainer. At Alibaba, we have many large core applications with instances distributed over tens of thousands of physical machines. When a new version is released, if tens of thousands of machines pull images at the same time, no central registry can withstand such a heavy workload. Therefore, we have designed a two-level architecture for image distribution. We build a mirror in each region and use P2P distribution technology to pull images within the same region. We internally named this product Dragonfly, which has already been open sourced. With this technology, servers that need to pull images cooperate and pull file fragments from one another. This directly relieves the service pressure and network pressure on the central registry. We are also exploring better image distribution solutions, such as remote images. By separating storage from computing, we can mount images from a remote disk and either skip image distribution or make it asynchronous. This solution is now running in our internal environment in gray release mode.
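The effect of P2P distribution on the upstream source can be shown with an idealized model. In this sketch, the first machine in a region that needs a piece fetches it from the regional mirror, and every later machine fetches it from a peer. The function names and numbers are hypothetical, and the model ignores bandwidth, concurrency, and failures; it is not Dragonfly's actual scheduling logic.

```python
def direct_pull_requests(machines: int, pieces: int) -> int:
    """Every machine fetches every piece from the same central source."""
    return machines * pieces


def simulate_region(machines: int, pieces: int) -> dict[str, int]:
    """Count who serves each piece when machines share pieces with peers."""
    holders = [set() for _ in range(pieces)]  # machines holding each piece
    served = {"mirror": 0, "peers": 0}
    for machine in range(machines):
        for piece in range(pieces):
            if holders[piece]:
                served["peers"] += 1   # some peer already has this piece
            else:
                served["mirror"] += 1  # first request goes to the mirror
            holders[piece].add(machine)
    return served


print(direct_pull_requests(1000, 50))  # 50000
print(simulate_region(1000, 50))       # {'mirror': 50, 'peers': 49950}
```

In this idealized case, the load on the mirror depends only on the image size, not on the number of machines pulling it, which is why the approach scales to releases that touch tens of thousands of machines.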

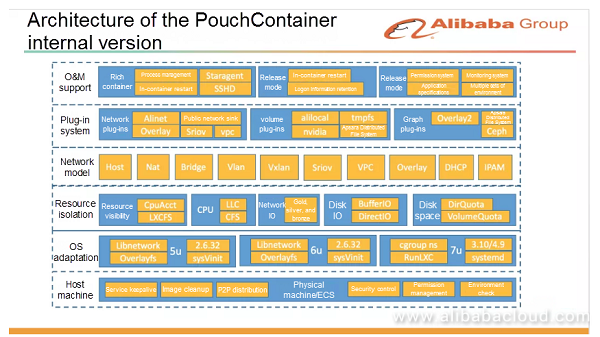

The preceding figure shows the architecture of the PouchContainer internal version. At the bottom, the host machine layer, we do management and O&M work to ensure a healthy underlying environment for running containers. This layer provides features such as image cleanup, security control, and permission management. For OS adaptation, we ensure that PouchContainer works on kernels as old as Linux 2.6.32, including the adaptation of process management in containers. Resource isolation has been discussed earlier. For the network model, we mainly use Bridge, but also support various other scenarios, for which we have developed many plug-ins. Since PouchContainer was open sourced, we have gradually standardized these plug-ins to make them compatible with the community's container network interface (CNI) standards. At the top layer, we provide support for rich container mode. Each container can start components closely related to the internal O&M tools and O&M system. This layer also includes some optimization of the release mode. As shown in this figure, the architecture of our internal version is complex and depends on many other internal systems. As a result, the internal version cannot run directly in an external environment or be open sourced directly.

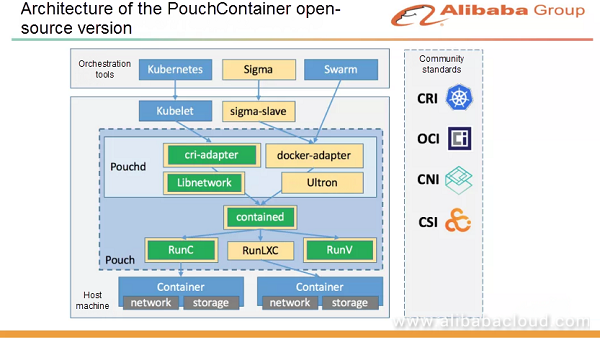

Therefore, we have chosen to establish a new open-source version to ease our work. We have introduced containerd to support different runtime implementations, including runlxc, which we developed by wrapping LXC. runlxc supports kernels as old as Linux 2.6.32. The open-source version of PouchContainer is compatible with all Docker interfaces and also supports the container runtime interface (CRI) protocol, meaning that it supports the two mainstream cluster management systems. We enhance the network based on Libnetwork to solve problems exposed in different scenarios, improve stability, and optimize various details for large-scale deployment. In terms of storage, we support a variety of storage modes, such as multiple disks, memory disks, and remote disks. PouchContainer can be seamlessly integrated with upper-layer orchestration tools, including Kubelet and Swarm. Our internal Sigma scheduling system is compatible with different versions of the Docker protocol and CRI protocol.

Recently, we have released the open-source GA version of PouchContainer. Thanks to the support of the container community, we were able to release the GA version of PouchContainer in such a short period of time. Behind more than 2,300 commits, over 80 community developers have made great contributions, including contributors from first-tier Internet companies and star container startup companies in China.

Prior to the release of the PouchContainer open-source GA version, this open-source container engine technology had been extensively verified in Alibaba's data centers. Now that the GA version is released, we believe that it can serve the industry with a series of outstanding features. As an out-of-the-box system software technology, it can help service providers in the industry take the lead in advancing the transformation to the cloud native architecture.

To learn more about Alibaba PouchContainer, visit github.com/alibaba/pouch

For more information about contributing to PouchContainer, see github.com/alibaba/pouch/blob/master/CONTRIBUTING.md
