In semantic indexing, the embedding process is the key factor that determines the semantic recall rate. Throughout the entire semantic indexing process, embedding also represents a core cost component. The cost of embedding 1 GB of data can reach several hundred CNY, while the speed is limited to about 100 KB/s. In comparison, the costs of index construction and storage are negligible. The inference efficiency of embedding models on GPUs directly determines the speed and total cost of building a semantic index.

For knowledge base scenarios, such costs may be acceptable, since knowledge bases are relatively static and infrequently updated. However, Simple Log Service (SLS) handles streaming data: new data is generated continuously, which puts significant pressure on both performance and cost. At a few hundred CNY per gigabyte and a throughput of only about 100 KB/s, this approach is unsustainable for production workloads.
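To make these figures concrete, the short calculation below works out what a throughput of about 100 KB/s implies. It is only a back-of-the-envelope sketch: decimal units (1 KB = 1,000 bytes) are an assumption, and the helper names are illustrative.

```python
# Back-of-the-envelope numbers derived from the "about 100 KB/s" figure above.
# Assumption: decimal units (1 KB = 1,000 bytes, 1 GB = 1,000,000,000 bytes).
KB = 1000
GB = 1000 ** 3
SECONDS_PER_DAY = 86_400

throughput_bytes_per_s = 100 * KB


def seconds_to_embed(num_bytes: int, bytes_per_s: float) -> float:
    """Time needed to embed num_bytes at a fixed throughput."""
    return num_bytes / bytes_per_s


def daily_capacity_gb(bytes_per_s: float) -> float:
    """Data volume one embedding stream can process in 24 hours."""
    return bytes_per_s * SECONDS_PER_DAY / GB


seconds_per_gb = seconds_to_embed(GB, throughput_bytes_per_s)
print(f"{seconds_per_gb / 3600:.2f} hours per GB")
print(f"{daily_capacity_gb(throughput_bytes_per_s):.2f} GB per day")
```

At this rate a single embedding stream needs close to three hours per gigabyte and handles under 9 GB per day, which is small compared with a continuously growing log stream.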

To improve performance and cost efficiency for large-scale applications, we conducted systematic optimizations targeting the inference bottlenecks of the embedding service. Through in-depth analysis, solution selection, and customized improvements, we achieved a 16× increase in throughput while significantly reducing resource costs per request.

To achieve optimal cost-efficiency of the embedding service, we need to address the following key challenges:

1. Inference framework:

2. Maximizing GPU utilization: This is the core of cost reduction. GPU resources are expensive, so leaving them underutilized is wasteful. This is quite different from how programs were run in the CPU era.

3. Priority-based scheduling:

4. Bottlenecks in the E2E pipeline:

We eventually implemented the following optimization solution.

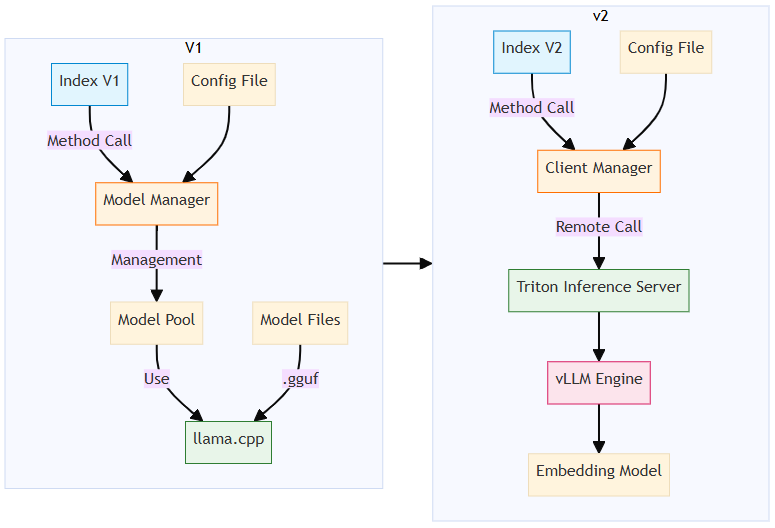

● We initially chose llama.cpp mainly for its high-performance C++ implementation, CPU friendliness (some of our tasks run on CPU nodes), and ease of integration. However, recent tests showed that on the same hardware, the throughput of vLLM or SGLang was twice that of llama.cpp, while the average GPU utilization was 60% lower. We believe the key difference lies in vLLM's Continuous Batching mechanism and its highly optimized CUDA kernels.

● We eventually separated the embedding module as an independent service and deployed it on Elastic Algorithm Service (EAS) of Platform for AI (PAI). This way, both vector construction and query operations obtain embeddings through remote calls. Although this introduces network overhead and additional O&M costs, it delivers a significant basic performance boost and lays a solid foundation for subsequent optimization.

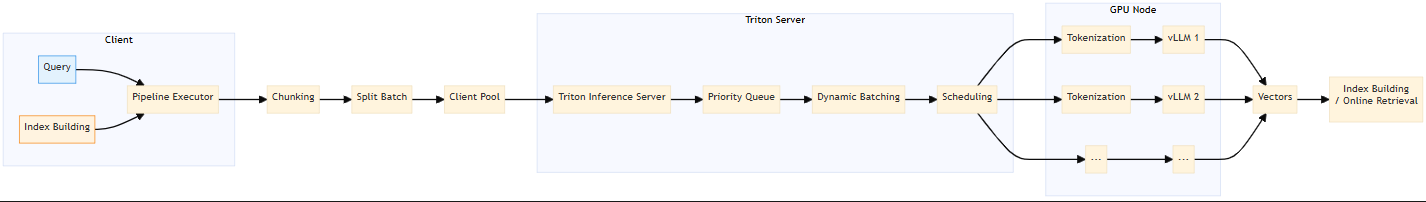

● To improve GPU utilization, we needed to deploy multiple model replicas on a single A10 GPU. After evaluating several solutions, we chose Triton Inference Server as the service framework. It lets us easily control the number of model replicas on a single GPU and use its scheduling and dynamic batching capabilities to route requests to different replicas. In addition, we decided to bypass the vLLM HTTP server and invoke the vLLM core library (LLMEngine) directly in Triton's Python Backend, which removes some overhead.

● We observed that with multiple vLLM replicas, the tokenization stage became a new performance bottleneck after the GPU throughput was improved. Our tests also showed that the tokenization throughput of llama.cpp was six times higher than that of vLLM. Therefore, we decoupled the tokenization and inference stages. We used llama.cpp for high-performance tokenization, and then passed token IDs to vLLM for inference. This effectively bypassed the tokenization bottleneck of vLLM and further improved the E2E throughput. After we implemented this optimization, we noticed that Snowflake published an article describing a similar approach, indicating that this is a common issue. We are also actively working with the vLLM community to help address this problem.

● Triton Inference Server has built-in priority queuing and dynamic batching mechanisms, which align well with the requirements of the embedding service. Embedding requests issued during query operations are assigned a higher priority to reduce query latency. In addition, we use dynamic batching to group incoming requests into batches, which improves overall throughput.
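The interaction between priority queuing and batching can be illustrated with a small, framework-independent sketch. This is not Triton's implementation or API. The class `PriorityBatcher`, the priority constants, and the "lower number is served first" convention are all assumptions made for illustration, and the sketch omits the queue-delay timer that a real dynamic batcher uses to wait for more requests.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List

# Hypothetical priority levels: a lower number is served first.
QUERY_PRIORITY = 1   # embedding requests issued at query time
INDEX_PRIORITY = 2   # embedding requests issued during index construction


@dataclass(order=True)
class Request:
    priority: int
    seq: int                          # arrival order, breaks ties (FIFO)
    text: str = field(compare=False)  # payload is not part of the ordering


class PriorityBatcher:
    """Toy batcher: drains the highest-priority requests into one batch."""

    def __init__(self, max_batch_size: int):
        self.max_batch_size = max_batch_size
        self._heap = []
        self._counter = itertools.count()

    def submit(self, text: str, priority: int) -> None:
        heapq.heappush(self._heap, Request(priority, next(self._counter), text))

    def next_batch(self) -> List[str]:
        batch = []
        while self._heap and len(batch) < self.max_batch_size:
            batch.append(heapq.heappop(self._heap).text)
        return batch


batcher = PriorityBatcher(max_batch_size=3)
for i in range(4):
    batcher.submit(f"log-chunk-{i}", INDEX_PRIORITY)
batcher.submit("user query", QUERY_PRIORITY)  # arrives last, served first

first = batcher.next_batch()
second = batcher.next_batch()
print(first)   # ['user query', 'log-chunk-0', 'log-chunk-1']
print(second)  # ['log-chunk-2', 'log-chunk-3']
```

Even though the query request arrives after four indexing requests, it lands in the first batch, while the indexing requests still fill the remaining batch slots so that GPU work stays batched.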

After addressing the performance bottlenecks of embedding, it was also necessary to refactor the overall semantic indexing architecture. The system needed to switch to calling remote embedding services and enable full asynchronization and parallelization across the data reading, chunking, embedding request, and result processing/storage steps.

In the previous architecture, the embedded llama.cpp engine was invoked directly for embedding. In the new architecture, embedding is performed through remote calls.

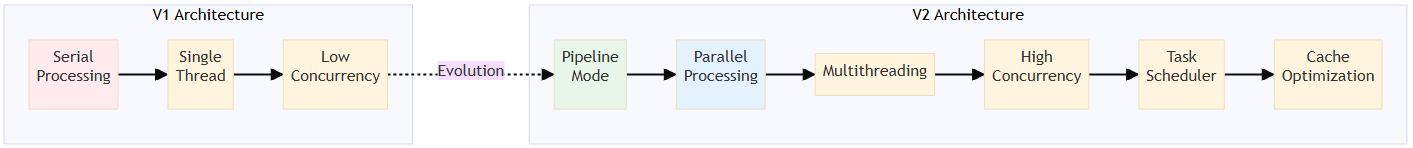

In the old architecture, data parsing, chunking, and embedding ran as a fully sequential process, which prevented the GPU-based embedding service from reaching full load. Therefore, we designed a new architecture that implements full asynchronization and parallelization, efficiently utilizing network I/O, CPU, and GPU resources.

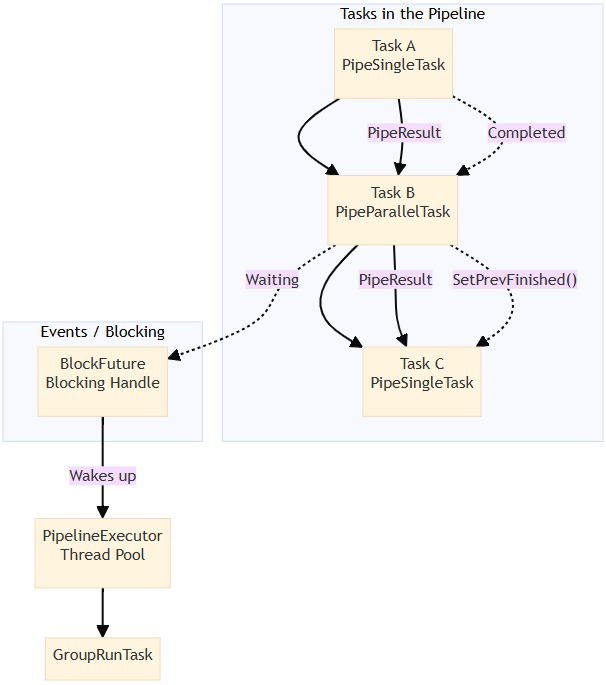

We divided the semantic index construction process into multiple tasks and built them into a directed acyclic graph (DAG) for execution. Different tasks can run asynchronously and in parallel, and within a single task, parallel execution is supported. Overall process:

DeserializeDataTask → ChunkingTask (parallel) → GenerateBatchTask → EmbeddingTask (parallel) → CollectEmbeddingResultTask → BuildIndexTask → SerializeTask → FinishTask

To efficiently execute pipeline tasks, we also implemented a data- and event-driven scheduling framework.
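As a rough illustration of how such a pipeline keeps stages busy concurrently, the sketch below chains a chunking stage, a batching stage, and several parallel embedding workers with `asyncio` queues. It is a minimal sketch under stated assumptions, not the scheduling framework described above: `fake_remote_embed` is a stand-in for the remote embedding call, and the stage functions, queue sizes, and chunk size are hypothetical.

```python
import asyncio


def chunk_text(doc: str, size: int = 8) -> list:
    """Split a document into fixed-size character chunks (toy chunker)."""
    return [doc[i:i + size] for i in range(0, len(doc), size)]


async def fake_remote_embed(batch: list) -> list:
    """Stand-in for the remote embedding service (hypothetical)."""
    await asyncio.sleep(0.01)  # simulated network and GPU latency
    return [[float(len(chunk))] for chunk in batch]


async def chunking_stage(docs, chunk_q):
    for doc in docs:
        for chunk in chunk_text(doc):
            await chunk_q.put(chunk)
    await chunk_q.put(None)  # end-of-stream marker


async def batching_stage(chunk_q, batch_q, batch_size, n_workers):
    batch = []
    while (chunk := await chunk_q.get()) is not None:
        batch.append(chunk)
        if len(batch) == batch_size:
            await batch_q.put(batch)
            batch = []
    if batch:
        await batch_q.put(batch)  # flush the final partial batch
    for _ in range(n_workers):
        await batch_q.put(None)   # one end marker per embedding worker


async def embedding_worker(batch_q, results):
    while (batch := await batch_q.get()) is not None:
        vectors = await fake_remote_embed(batch)
        results.extend(zip(batch, vectors))


async def build_index(docs, batch_size=4, n_workers=3):
    chunk_q = asyncio.Queue(maxsize=16)  # bounded queues give backpressure
    batch_q = asyncio.Queue(maxsize=4)
    results = []
    await asyncio.gather(
        chunking_stage(docs, chunk_q),
        batching_stage(chunk_q, batch_q, batch_size, n_workers),
        *(embedding_worker(batch_q, results) for _ in range(n_workers)),
    )
    return results


docs = ["error: disk full on node-17", "user login succeeded from 10.0.0.8"]
index = asyncio.run(build_index(docs))
print(len(index), "chunks embedded")
```

Because each stage only waits on its own queue, several embedding requests can be in flight while chunking and batching continue, which is the property a sequential parse, chunk, embed loop lacks.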

With these code changes in place, the new architecture supports high-performance semantic index construction.

After the full pipeline transformation, tests showed the following results:

You are welcome to use this service. For more information, see the usage guide.
