By Nanlong Yu

In the current wave of AI driven by large models, vectors play a crucial role in everything from building RAG applications to implementing long-term memory for Agents. A typical vector data flow involves two core stages:

1. Ingestion: Unstructured data, such as text, is converted into vectors by an embedding model and stored in a vector index.

2. Retrieval: A user's query is also converted into a vector, which is then used to retrieve relevant information from the index.
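The two stages above can be sketched in a few lines of Python. This is only an illustration: `toy_embed` is a hypothetical stand-in for a real embedding model (a hashed bag of words), and `ToyVectorStore` is a brute-force list rather than a real vector index.

```python
import math
import re
from collections import Counter


def toy_embed(text: str, dim: int = 16) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        # Deterministic bucket choice (Python's built-in hash() is salted per process).
        vec[sum(ord(c) for c in token) % dim] += count
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


class ToyVectorStore:
    """Brute-force store: keeps (document, vector) pairs in a list."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, list[float]]] = []

    def ingest(self, doc: str) -> None:
        # Stage 1: convert the text into a vector and store both.
        self.rows.append((doc, toy_embed(doc)))

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        # Stage 2: embed the query and rank stored documents by distance.
        q = toy_embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine_distance(row[1], q))
        return [doc for doc, _ in ranked[:k]]
```

In a real deployment, the embedding call and the index lookup usually live in separate systems, which is the source of the fragmentation discussed next.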

However, in real-world AI application development, developers often face a fragmented technology stack. Vector indexes, embedding models, and business databases are typically three separate systems. This separation introduces numerous "hidden costs":

1. Development Hurdles: Developers must select and integrate different software and cloud services, writing a large amount of glue code that increases complexity.

2. Operational Hurdles: Business data and vector data must be synchronized manually or through ETL tools. This not only increases the O&M burden but also leads to data latency, affecting the real-time performance of AI applications.

3. Usability Hurdles: Different vector databases and services have inconsistent APIs and lack a unified standard. When performing hybrid queries involving both vectors and scalars (such as product prices or tenant IDs), capabilities are often limited, leading to high learning and implementation costs.

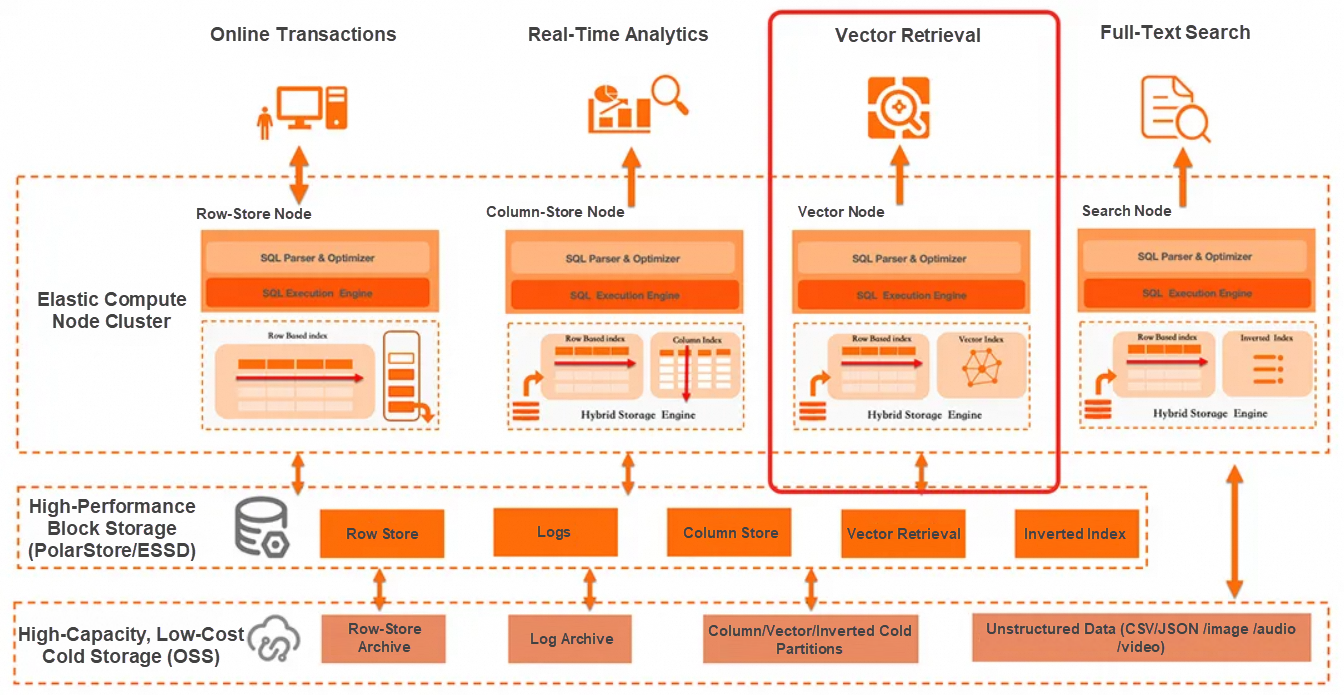

PolarDB's Multi-Modal Hybrid Retrieval Architecture

To solve these problems, PolarDB IMCI (In-Memory Column Index) proposes a new approach: integrating vector indexing and embedding capabilities into the database kernel to build a multi-modal hybrid retrieval architecture that gives developers integrated, full-lifecycle vector management.

Vector Lifecycle Management Process

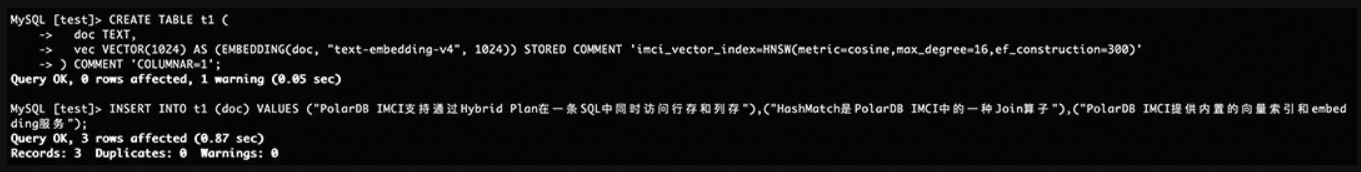

Before diving into the technical details, let's use a simple example to see the changes that PolarDB brings. Assume we want to build a technical Q&A bot for PolarDB IMCI, and our knowledge base contains the following three documents:

• "PolarDB IMCI supports accessing both row-store and column-store data in a single SQL statement by using Hybrid Plans."

• "HashMatch is a join operator in PolarDB IMCI."

• "PolarDB IMCI provides built-in vector indexing and embedding services."

In the past, this required coordinating multiple systems. Now you only need a few lines of SQL.

-- Create a table where the vec column is automatically generated from the doc column by using the EMBEDDING expression.

-- At the same time, declare an HNSW vector index by using the COMMENT syntax.

CREATE TABLE t1 (

doc TEXT,

vec VECTOR(1024) AS (EMBEDDING(doc, "text-embedding-v4", 1024)) STORED COMMENT 'imci_vector_index=HNSW(metric=cosine,max_degree=16,ef_construction=300)'

) COMMENT 'COLUMNAR=1';

-- Insert the raw text data. The database automatically handles vector generation and index construction.

INSERT INTO t1 (doc) VALUES ("PolarDB IMCI can use Hybrid Plan to access both row store and column store in an SQL statement"), ("HashMatch is a join operator in PolarDB IMCI"), ("PolarDB IMCI provides built-in vector indexes and embedding services");

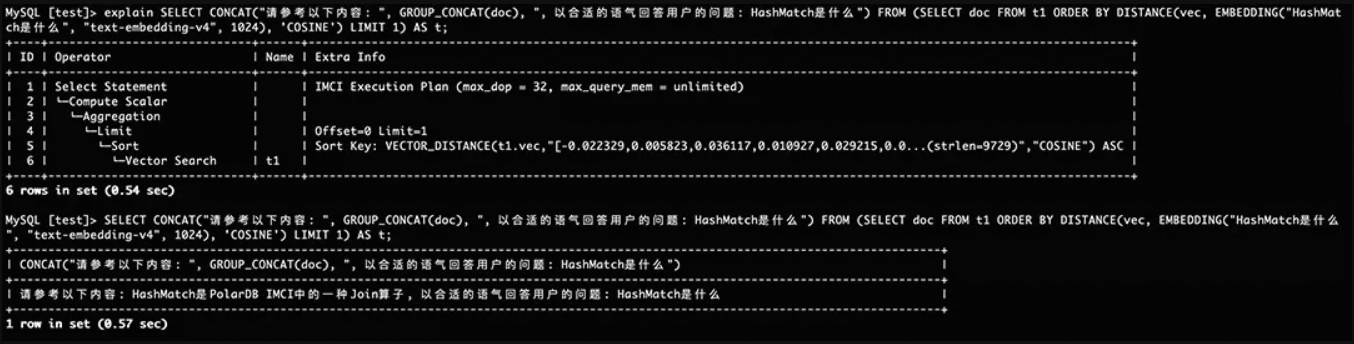

When a user asks, "What is HashMatch?", you can retrieve information from the knowledge base and generate a prompt as follows:

SELECT CONCAT("Please refer to the following: ", GROUP_CONCAT(doc), " and answer the user's question in the appropriate tone: What is HashMatch?") FROM (SELECT doc FROM t1 ORDER BY DISTANCE(vec, EMBEDDING("What is HashMatch?", "text-embedding-v4", 1024), 'COSINE') LIMIT 1) AS t;

This example demonstrates the advantages of PolarDB:

• The EMBEDDING expression is seamlessly integrated with the vector index. By using materialized generated columns, text vectorization requires no application-level intervention.

• The EMBEDDING and DISTANCE expressions are as easy to use as standard SQL functions, resulting in a minimal learning curve.

Next, we will take a deep dive into the underlying technical design.

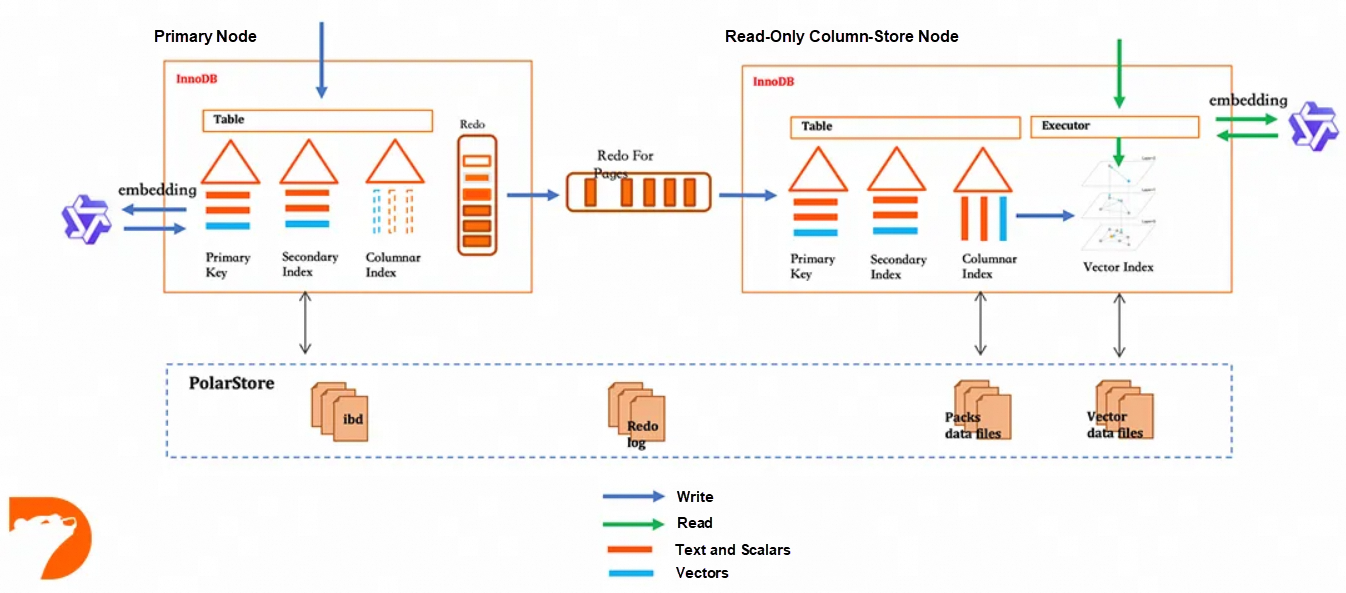

We have deeply integrated vector capabilities into PolarDB IMCI, an architectural decision that provides unique technical advantages. Instead of "reinventing the wheel," we've built our vector index "on the shoulders of giants."

We implement the HNSW vector index as a secondary index on the columnar store. It stores the mapping from each vector to its data row ID (RowID). This design offers several benefits:

• Leverage Mature Capabilities: Vector indexes typically lack mature, enterprise-grade features such as transactions or backup and recovery (Checkpoint/Recover). Integrating with columnar storage lets the vector index reuse these capabilities directly. For example, data visibility checks can directly use the columnar store's delete bitmap, which natively supports transactions.

• Unified Data Management: The vector index is responsible only for vector retrieval, while scalar data (such as tenant IDs, timestamps, and categories) is stored in the columnar store. During a query, RowIDs can be used to quickly link the two.

• High-Performance Scalar Filtering: During a hybrid retrieval of "vector + scalar" data, the system can fully leverage the columnar engine's powerful I/O pruning (reading only required columns) and vectorized executor to achieve highly efficient scalar filtering.
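The division of labor described in these three points can be illustrated with a small Python sketch. All names here (`ToyColumnStore`, `ToyVectorSecondaryIndex`, `hybrid_lookup`) are hypothetical, a Python set stands in for the delete bitmap, and a brute-force scan stands in for HNSW; it is not PolarDB's implementation.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ToyColumnStore:
    # Column name -> list of values; the list position plays the role of the RowID.
    columns: dict[str, list]
    # Stand-in for the delete bitmap: RowIDs that are no longer visible.
    deleted: set[int] = field(default_factory=set)

    def is_visible(self, row_id: int) -> bool:
        return row_id not in self.deleted

    def fetch(self, row_id: int, names: list[str]) -> dict:
        # Read only the requested columns for this row.
        return {name: self.columns[name][row_id] for name in names}


class ToyVectorSecondaryIndex:
    """Holds only (RowID, vector) pairs; scalar data stays in the column store."""

    def __init__(self) -> None:
        self.entries: list[tuple[int, list[float]]] = []

    def add(self, row_id: int, vector: list[float]) -> None:
        self.entries.append((row_id, vector))

    def nearest(self, query: list[float], k: int) -> list[int]:
        # Brute-force Euclidean ranking as a stand-in for an HNSW traversal.
        ranked = sorted(self.entries, key=lambda e: math.dist(e[1], query))
        return [row_id for row_id, _ in ranked[:k]]


def hybrid_lookup(store, index, query, k, names):
    """Return the k nearest visible rows, with scalar columns joined by RowID."""
    hits = []
    for row_id in index.nearest(query, len(index.entries)):
        if store.is_visible(row_id):  # visibility comes from the column store
            hits.append(store.fetch(row_id, names))
            if len(hits) == k:
                break
    return hits
```

Because the index never stores scalar values or visibility state itself, deleting a row only requires marking it in the column store; the index entry becomes a hole to be cleaned up later.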

Building a vector index is resource-intensive. To avoid impacting the physical replication of columnar storage, we use an asynchronous build process. However, this introduces new challenges:

To this end, we adopted the design principles of the LSM-Tree and broke down the asynchronous build task into two sub-tasks:

• Incremental Data Sync (similar to a Flush): This task dynamically monitors the latency of index building.

• Baseline Index Merge (similar to a Compaction): This task merges small indexes and cleans up holes left by deleted data.
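As a rough illustration of this LSM-style split, the sketch below keeps unindexed rows in a pending buffer, turns them into small index segments when the backlog grows, and merges segments while dropping deleted rows. The class `ToyLsmVectorIndex`, the `flush_threshold` parameter, and the use of the backlog size as the "latency" signal are all assumptions made for the example.

```python
class ToyLsmVectorIndex:
    def __init__(self, flush_threshold: int = 2) -> None:
        self.pending: list[tuple[int, list[float]]] = []  # written but not yet indexed
        self.segments: list[tuple] = []                   # small immutable indexes
        self.deleted: set[int] = set()                    # RowIDs deleted by users
        self.flush_threshold = flush_threshold

    def insert(self, row_id: int, vector: list[float]) -> None:
        self.pending.append((row_id, vector))

    def delete(self, row_id: int) -> None:
        self.deleted.add(row_id)

    def lag(self) -> int:
        """Backlog of rows not yet covered by any index segment."""
        return len(self.pending)

    def flush(self) -> None:
        """Incremental sync: build a small segment from the pending rows."""
        if self.pending:
            self.segments.append(tuple(self.pending))
            self.pending = []

    def compact(self) -> None:
        """Baseline merge: combine segments and drop holes left by deletes."""
        merged = [
            entry
            for segment in self.segments
            for entry in segment
            if entry[0] not in self.deleted
        ]
        self.segments = [tuple(merged)] if merged else []

    def background_step(self) -> None:
        """One iteration of the asynchronous builder."""
        if self.lag() >= self.flush_threshold:
            self.flush()
        if len(self.segments) > 1:
            self.compact()
```

Keeping flush cheap and compaction separate lets the builder catch up quickly on new writes while deferring the more expensive merge work.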

PolarDB IMCI supports both exact and approximate search. Exact search is done via a simple brute-force scan, so our focus is on high-performance approximate nearest neighbor (ANN) search.

• Optimizer: Intelligent Path Selection for Hybrid Retrieval

In hybrid retrieval scenarios, the order of filtering scalars and retrieving vectors has a significant impact on performance.

When a scalar condition (such as price < 100) can filter out a large amount of data, scalar filtering is performed first (Pre-filter). Brute-force vector computation on the small result set is then very fast.

The PolarDB optimizer dynamically selects a Pre-filter or Post-filter execution plan based on statistics. In the future, we will also introduce execution feedback and adaptive execution to make decisions more intelligent.
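A minimal sketch of such a plan choice is shown below. It assumes that Post-filter means "search vectors first, then apply the scalar predicate", and it uses a made-up `brute_force_limit` threshold and `overfetch` factor; the real optimizer's cost model is not described in this article.

```python
import math


def choose_plan(estimated_selectivity: float, table_rows: int,
                brute_force_limit: int = 10_000) -> str:
    """Pick pre-filter when the predicate is expected to leave few rows."""
    expected_matches = estimated_selectivity * table_rows
    return "pre-filter" if expected_matches <= brute_force_limit else "post-filter"


def pre_filter_search(rows, predicate, query, k):
    """Filter scalars first, then rank the survivors by exact distance."""
    candidates = [r for r in rows if predicate(r)]
    return sorted(candidates, key=lambda r: math.dist(r["vec"], query))[:k]


def post_filter_search(rows, predicate, query, k, overfetch=4):
    """Take the nearest k * overfetch rows first, then apply the predicate.

    The sort is a stand-in for an approximate index lookup.
    """
    nearest = sorted(rows, key=lambda r: math.dist(r["vec"], query))[: k * overfetch]
    return [r for r in nearest if predicate(r)][:k]
```

Note that `post_filter_search` can return fewer than `k` rows when the predicate rejects most of the nearest candidates, which is one reason the choice of plan matters.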

• Executor: Transaction-Level, Real-Time Retrieval

To ensure both data visibility (transactions) and data freshness (real-time), the executor uses a two-phase recall policy:

Visibility is judged by using the column store's delete bitmap, which naturally supports transaction isolation.

Additionally, through Sideways Information Passing, if an upstream Filter operator filters out some of the retrieved vector results, the executor can dynamically retrieve more candidates from the vector index to ensure the quantity and quality of the final result set.
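The idea of pulling more candidates when a filter discards some of them can be sketched as a simple refill loop. Here `ranked_row_ids` is a hypothetical list standing in for the order in which the vector index would yield candidates, and `batch_size` is an arbitrary illustrative value.

```python
def top_k_with_refill(ranked_row_ids, passes_filter, k, batch_size=4):
    """Keep requesting batches of candidates until k rows survive the filter."""
    results = []
    offset = 0
    while len(results) < k and offset < len(ranked_row_ids):
        # Ask the (simulated) vector index for the next batch of candidates.
        batch = ranked_row_ids[offset:offset + batch_size]
        offset += len(batch)
        # The upstream filter decides which candidates survive.
        results.extend(row_id for row_id in batch if passes_filter(row_id))
    return results[:k]
```

The loop stops as soon as enough rows pass the filter, so selective filters cost extra index lookups only when they are actually needed.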

Embedding model technology is evolving rapidly. We believe that the core of a database is data management and computation, not the models themselves. Therefore, PolarDB IMCI adopts a more open and flexible approach:

• API calls to external embedding services (such as Alibaba Cloud's Bailian) are encapsulated as built-in SQL expressions.

• Users can call EMBEDDING directly in SQL, as simply as calling AVG or SUM.

This design allows users to always have access to state-of-the-art (SOTA) models while remaining compatible with the simple SQL ecosystem, perfectly integrating the AI and database worlds.
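To make the "external service wrapped as a SQL expression" idea concrete, here is an analogy using SQLite's user-defined functions from Python's standard library. It is not PolarDB code: `fake_embedding_service` is a hypothetical local stand-in for a remote embedding API, and the vector is returned as JSON text because SQLite has no vector type.

```python
import json
import sqlite3


def fake_embedding_service(text: str, model: str, dim: int) -> list[float]:
    """Hypothetical stand-in for a remote embedding API call."""
    vec = [0.0] * dim
    for i, byte in enumerate(text.encode("utf-8")):
        vec[i % dim] += byte / 255.0
    return vec


def embedding_udf(text, model, dim):
    # SQL functions return scalars, so the vector is serialized as JSON text.
    return json.dumps(fake_embedding_service(text, model, int(dim)))


conn = sqlite3.connect(":memory:")
# Register the wrapper so it can be called like any built-in SQL function.
conn.create_function("EMBEDDING", 3, embedding_udf)

row = conn.execute(
    "SELECT EMBEDDING(?, ?, ?)", ("HashMatch", "text-embedding-v4", 4)
).fetchone()
vector = json.loads(row[0])
```

Swapping the model then only changes the function's arguments, not the application code around the query.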

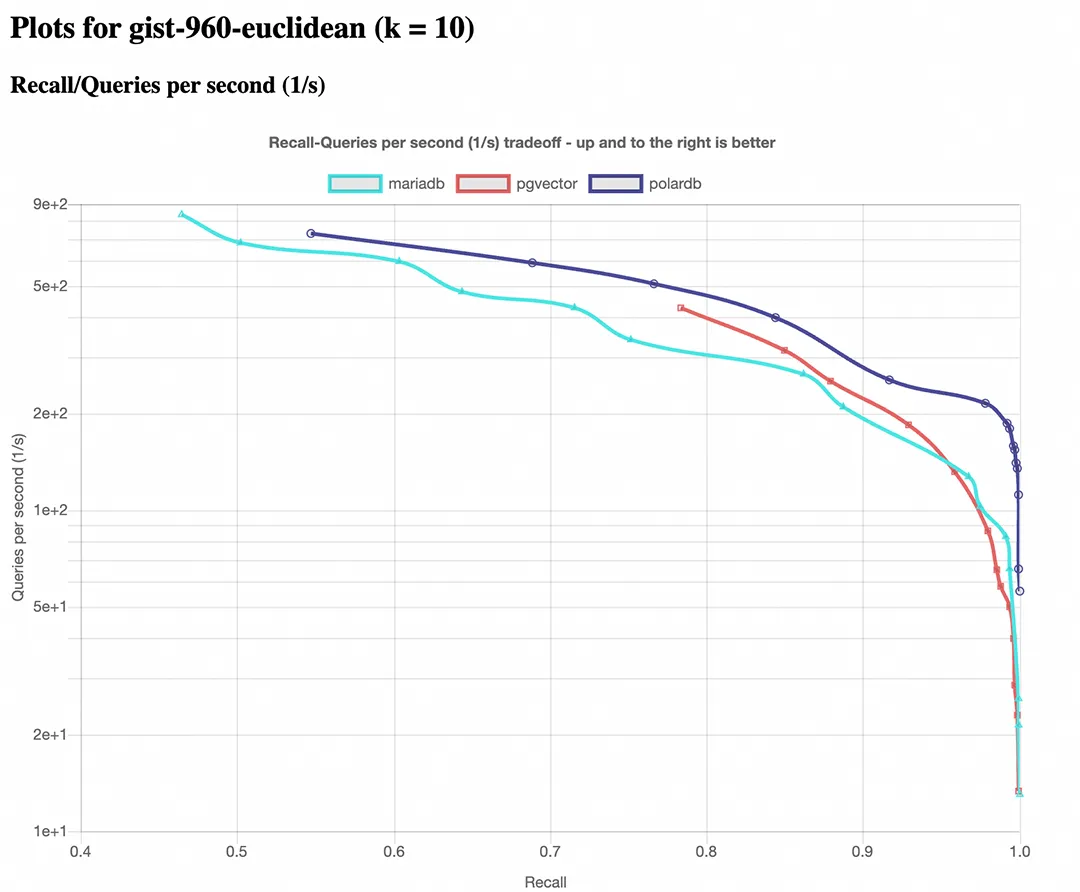

We compared the performance of PolarDB IMCI with open-source PGVector and MariaDB on the public GIST-960 dataset. Under the same hardware specifications (see Appendix), the test results show that at various recall rates, the vector retrieval performance (QPS) of PolarDB IMCI is 2 to 3 times higher than the other two products.
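For readers who want to reproduce this kind of comparison, recall and QPS are commonly computed along the following lines. This is a generic sketch, not the harness used for the results above; `recall_at_k` and `measure_qps` are hypothetical helper names.

```python
import time


def recall_at_k(approx_ids, exact_ids, k):
    """Fraction of the true top-k neighbors that the approximate search returned.

    approx_ids and exact_ids are lists of per-query result lists.
    """
    hits = sum(
        len(set(approx[:k]) & set(exact[:k]))
        for approx, exact in zip(approx_ids, exact_ids)
    )
    return hits / (k * len(exact_ids))


def measure_qps(search_fn, queries):
    """Single-threaded queries per second for a search callable."""
    start = time.perf_counter()
    for query in queries:
        search_fn(query)
    elapsed = time.perf_counter() - start
    return len(queries) / elapsed if elapsed > 0 else float("inf")
```

Comparing systems at the same recall level matters because index parameters trade accuracy for speed, so raw QPS numbers alone are not comparable.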

By integrating vector retrieval and embedding capabilities into the database kernel, PolarDB IMCI fundamentally solves the pain points of fragmented tech stacks, data silos, and complex O&M in traditional AI application development. It not only provides a high-performance, transactional, and real-time vector database but, more importantly, unifies everything under the familiar SQL language. This dramatically lowers the barrier to developing and maintaining AI applications. We believe this "all-in-one" design will become the new paradigm for the convergence of AI and databases, providing a solid and efficient data foundation for developers building the next generation of intelligent applications.

The tests were performed on an Intel(R) Xeon(R) Platinum 8357C CPU @ 2.70GHz. PolarDB was configured with 128 GB of memory. The parameters for open-source PGVector and MariaDB are as follows:

1. MariaDB:

innodb_buffer_pool_size = 256G

mhnsw_max_cache_size = 128G

2. Open source PGVector:

shared_buffers = 128GB

work_mem = 1GB

maintenance_work_mem = 128GB

effective_cache_size = 128GB
