By Zhang Lei, CNCF TOC member

Even though cloud-native has been around for some time, many people still have questions about what it actually is.

Look across the many cloud-native open-source technologies and products and you will find that cloud-native is not a precisely defined thing. There is no single definition; cloud-native is a process of continuous evolution. So rather than trying to pin down its nature, it is more useful to think of cloud-native as a vision.

So what does this vision contain?

In the coming cloud era, software and applications will be born and grow on the cloud. Cloud computing can help that software reduce costs, improve efficiency, and deliver maximum business value. This is what cloud-native aims to do. Cloud-native is therefore not a specific technology, a specific method, or a specific research project.

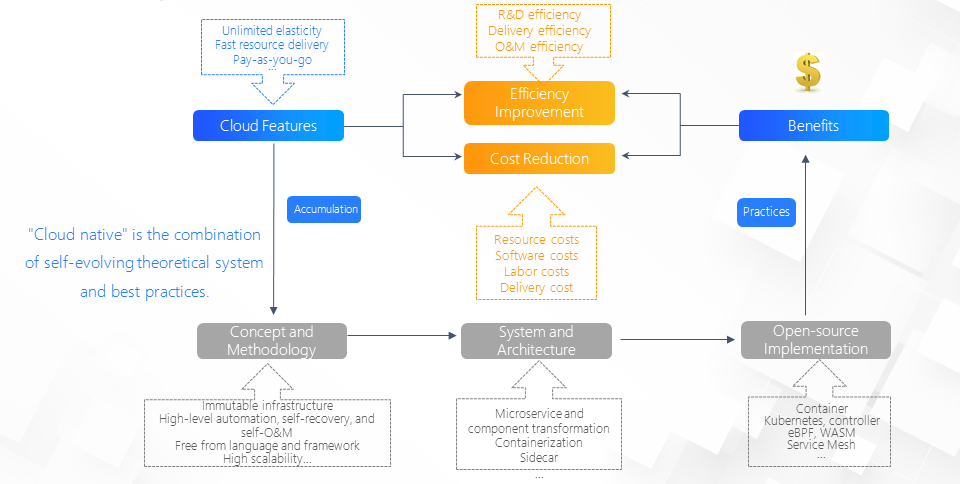

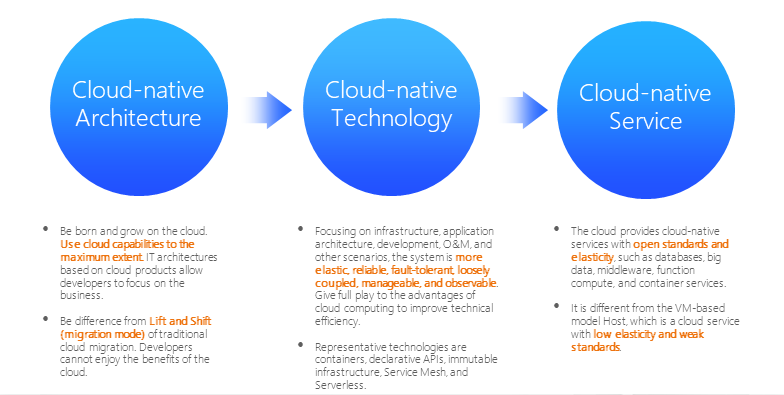

The following figure illustrates how cloud-native evolves and develops:

Cloud-native emphasizes the use of cloud features, so its core methodology and concepts focus on how software and applications can take advantage of them. What are these features? The cloud offers practically unlimited scale, cloud resources can be delivered quickly, and cloud usage is billed on a pay-as-you-go basis. These are the essential features of the cloud.

Based on these features, cloud-native provides a set of basic methodologies and concepts. One you may have heard of is immutable infrastructure. Suppose the carrier of an application deployed on the cloud is immutable, so it can be removed or replaced at any time. Updating the application then becomes very easy: to upgrade it, you remove the old instance and launch a new one, instead of changing configuration items or code in place. Immutable infrastructure is therefore a typical methodology built on the cloud's ability to deliver resources quickly.
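
To make the replace-rather-than-modify idea concrete, here is a minimal Go sketch. The `Fleet` and `Instance` types and the image names are hypothetical stand-ins for whatever carrier (for example, a container) actually runs the application; the only point illustrated is that an upgrade launches new instances and discards the old ones instead of editing them in place.

```go
package main

import "fmt"

// Instance is a hypothetical model of an application carrier (e.g. a container).
// Its fields are never modified after creation: an upgrade creates a new Instance.
type Instance struct {
	ID    int
	Image string // hypothetical image name, e.g. "shop:v1"
}

// Fleet holds the currently running instances.
type Fleet struct {
	nextID    int
	instances []Instance
}

// Launch starts a new instance from the given image.
func (f *Fleet) Launch(image string) Instance {
	f.nextID++
	inst := Instance{ID: f.nextID, Image: image}
	f.instances = append(f.instances, inst)
	return inst
}

// Upgrade replaces every running instance with a fresh one built from newImage.
// Old instances are discarded, never patched in place.
func (f *Fleet) Upgrade(newImage string) {
	replacements := make([]Instance, 0, len(f.instances))
	for range f.instances {
		f.nextID++
		replacements = append(replacements, Instance{ID: f.nextID, Image: newImage})
	}
	// Swap in the new set; the old instances are simply dropped.
	f.instances = replacements
}

func main() {
	f := &Fleet{}
	f.Launch("shop:v1")
	f.Launch("shop:v1")
	f.Upgrade("shop:v2")
	for _, inst := range f.instances {
		fmt.Println(inst.ID, inst.Image) // prints "3 shop:v2", then "4 shop:v2"
	}
}
```

New IDs after the upgrade show that nothing was mutated: the running set is entirely new.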

Cloud-native also emphasizes a high degree of automation so that applications can maintain and heal themselves. Developers want software to make better use of cloud features, and the cloud offers a wide range of O&M capabilities. These capabilities should not be an afterthought applied once an application is finished. Because the cloud can expose many of them directly to the application layer, they need to be taken into account while the application or software is being developed. Designing this way is how we build cloud-native applications.

Cloud-native is also independent of languages and frameworks, which is another obvious feature. The cloud is an infrastructure capability, so it should not be locked to a particular language or framework. The goal is for any software anywhere to be able to use the cloud's capabilities, without tying the cloud to a specific place or language.

These are the important concepts that cloud-native brings up in the context of the cloud. In practice, they map onto a series of systems and architectural ideas. For example, immutable infrastructure says you can remove the old instance of an application and replace it with a new one. How is that implemented? Through container technology. Containers provide container images: an image packages the application together with the environment it runs in, and it can be replaced with a new version at any time. This makes containers a good implementation of immutable infrastructure.

Could some other technology implement immutable infrastructure even better in the future? Quite possibly, and such a technology would also be cloud-native; if it implemented immutable infrastructure better, it would become core to cloud-native. Similarly, the Sidecar architecture that cloud-native emphasizes today uses Sidecar containers to bring middleware capabilities to business containers, so the business code does not have to be customized for or integrated with the middleware. This puts the language- and framework-independence principle into practice: middleware capabilities no longer need to be embedded in the business code as language-specific libraries or frameworks. The combination of Sidecar and containers implements this approach.

These technologies and architectures are continually derived from the cloud-native methodology, and in the cloud-native ecosystem they usually take the form of open-source projects. For example, the container technology mentioned above is embodied in projects such as Docker, while the Sidecar and self-maintenance concepts are ultimately implemented for you by Kubernetes.

Another example is Service Mesh, which has recently become popular. Essentially, it uses Sidecars to build middleware capabilities that are independent of languages. eBPF and WASM likewise put some of the ideas and architectures behind the cloud-native system into practice. These projects meet user needs in open-source form: users can adopt them to practice the cloud-native idea and achieve the two essential effects of the cloud mentioned earlier, reducing costs and improving efficiency.

In general, the cloud-native approach forms a complete closed loop. First, keep exploring how cloud features can help users improve efficiency and reduce costs. Next, distill those methods and ideas into cloud-native concepts and methodology, and implement them through corresponding architectures and open-source projects. Finally, let users adopt these technologies to unlock the essential benefits of cloud computing.

Therefore, cloud-native does not have a specific definition. Instead, it is a constantly evolving body of theory combined with best practices.

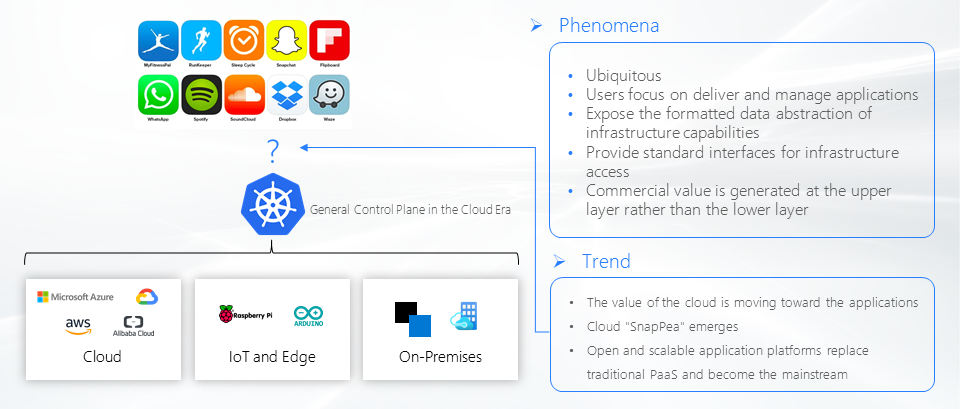

Today, cloud-native is largely built around containers and Kubernetes, and these projects matter because they help us practice its essential concepts, such as immutable infrastructure and automation. Kubernetes is now considered a general-purpose control plane for the cloud era, and some people even regard it as an operating system, meaning that all of your operations on the cloud can be performed through Kubernetes.

Its role may be closer to that of Android. Like Android, Kubernetes is now deployed almost everywhere: in the cloud, on end devices, and at the edge. Android, in the same way, can be found in cars, TVs, and even air conditioners.

What is the essential purpose of using Kubernetes? Software delivery and management. If you use Kubernetes, you have deployed something on it, such as an AI service or Taobao, and you then use Kubernetes to manage that software. Upward, Kubernetes exposes a set of standardized abstractions, such as Deployment, Service, and Ingress, for delivering and managing applications. Downward, it provides a set of standard interfaces; for example, through CNI it can connect to the Alibaba Cloud network or to proprietary network plug-ins. Kubernetes is therefore essentially a middle layer, or control plane: a large amount of infrastructure connects to it, Kubernetes exposes that infrastructure as the capabilities applications need, and those capabilities are then used to manage the applications.

Follow this trend further and the resemblance to Android becomes striking. Technically, Android is open source, yet users pay for many of the applications in the app store. Android's value lies in how it abstracts and encapsulates a mobile phone's capabilities into APIs that a wide range of applications can use. The value of Kubernetes today lies in exactly the same place.

In the future, Kubernetes will appear in many more places. More importantly, it will provide a complete set of capabilities covering the full lifecycle of application R&D, O&M, and delivery. At the same time, many other projects in the Kubernetes ecosystem will help you solve the problem of delivering software on Kubernetes. Traditional PaaS will disappear, because Kubernetes will take over all of its capabilities, and more open and scalable PaaS will emerge in its place. These new platforms will let you deliver and manage software more simply, much as SnapPea does on Android. We call this trend Android-based Kubernetes.

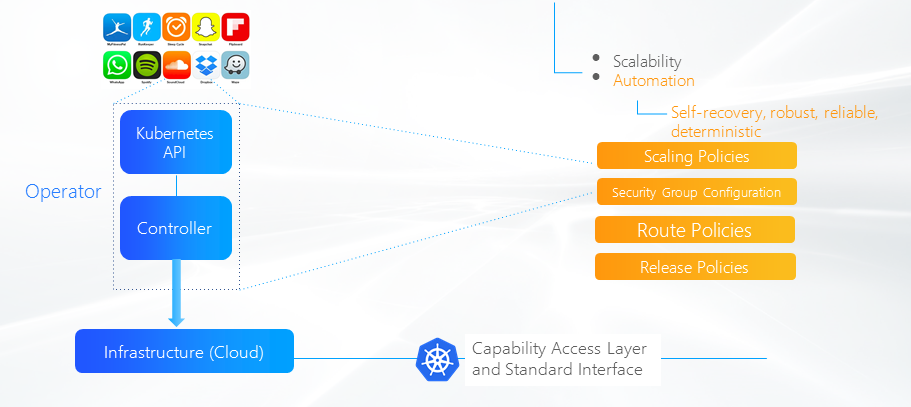

In today's cloud-native ecosystem, applications and their capabilities are evolving toward automation. We call this trend Operator-based Kubernetes.

The Operator is a core concept in Kubernetes. It means that any application, and the capabilities it requires, can be defined as a Kubernetes API (a custom resource). A controller then works to make the actual state match that definition, using cloud capabilities and accessing the underlying infrastructure as needed. A direct result of this Operator-based transformation is that applications become highly automated, gaining self-recovery, robustness, reliability, and predictable operations. Kubernetes provides these features, so users and application owners no longer have to handle them themselves.
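
The controller mechanism can be illustrated with a toy reconcile loop. This is a minimal Go sketch under loose assumptions: `DesiredState`, `Cluster`, and `Reconcile` are hypothetical names, the "cluster" is just an in-memory map, and a real Operator would talk to the Kubernetes API (for example through client-go) and react to watch events rather than being called by hand. What it does show is the core idea: compare the declared state with the actual state and act until they match, which is how failures get repaired without a human stepping in.

```go
package main

import "fmt"

// DesiredState is the declared spec, loosely analogous to a custom resource's spec.
// Hypothetical type for illustration only.
type DesiredState struct {
	Replicas int
}

// Cluster is a toy in-memory stand-in for real infrastructure.
type Cluster struct {
	running map[string]bool // names of running pods
	nextID  int
}

// NewCluster returns an empty cluster.
func NewCluster() *Cluster {
	return &Cluster{running: map[string]bool{}}
}

// Reconcile compares desired and actual state and takes only the actions needed
// to converge. It returns the number of actions taken, so calling it again on an
// already converged cluster returns 0.
func Reconcile(desired DesiredState, c *Cluster) int {
	actions := 0
	// Too few pods: create new ones. This also covers recovery after a crash.
	for len(c.running) < desired.Replicas {
		c.nextID++
		c.running[fmt.Sprintf("pod-%d", c.nextID)] = true
		actions++
	}
	// Too many pods: delete the surplus (deleting during a map range is safe in Go).
	for name := range c.running {
		if len(c.running) <= desired.Replicas {
			break
		}
		delete(c.running, name)
		actions++
	}
	return actions
}

func main() {
	c := NewCluster()
	want := DesiredState{Replicas: 3}
	fmt.Println("initial actions:", Reconcile(want, c)) // prints "initial actions: 3"
	delete(c.running, "pod-2")                           // simulate a crashed pod
	fmt.Println("recovery actions:", Reconcile(want, c)) // prints "recovery actions: 1"
	fmt.Println("running:", len(c.running))              // prints "running: 3"
}
```

Because `Reconcile` only looks at the difference between desired and actual state, the same function handles first deployment, crash recovery, and scaling.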

This is another trend we can see today alongside Android-based Kubernetes: applications, and the capabilities businesses require, will keep evolving toward automation. This is in line with the cloud-native idea, because the stronger your application's automation and self-recovery capabilities, the more closely it can connect with the cloud, and the lower the cost and time spent on manual operations. We can then focus on connecting automation capabilities with the cloud, so that the cloud solves these problems for us.

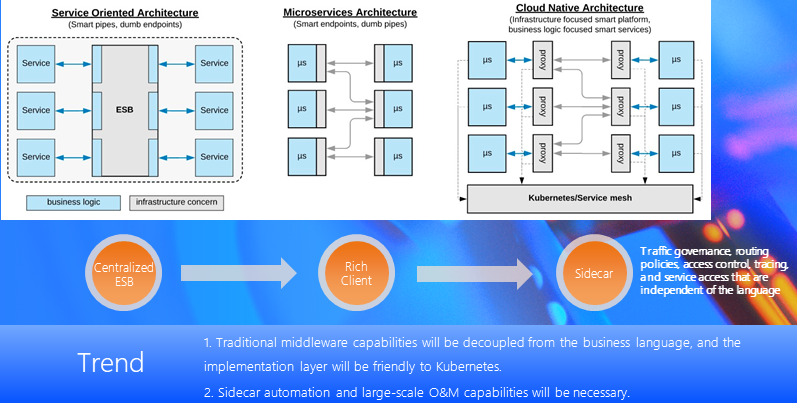

Another trend is the simplification of the middleware capabilities that applications require. Over the past few years, previously centralized middleware has evolved into a microservice architecture.

In essence, microservice architecture breaks up previously centralized middleware and moves it into the business code, which must import and use it, usually through a fairly rich client or library. This is the typical way middleware is consumed in the microservice era. As cloud-native becomes more popular, however, another mechanism has appeared: the Sidecar.

Increasingly, middleware is consumed through Sidecars, so applications no longer need to import another library or adopt a specific framework to get many capabilities; they may not even be aware of them. For example, to split traffic, you no longer need to add a library to the application. Instead, you leave the whole task to the cloud or the infrastructure.

Between the application and the cloud sits a companion container, the Sidecar, which acts as a proxy for the application's inbound and outbound traffic. Through this proxy, the cloud can easily regulate and split traffic. This is the principle behind Service Mesh.
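
The traffic-splitting decision a Sidecar proxy makes can be sketched as weighted selection. In this minimal Go sketch, `Backend`, `Pick`, the `reviews-v1`/`reviews-v2` names, and the 90/10 weights are illustrative assumptions, not the configuration format of any particular Service Mesh; a real proxy would also forward the request to the chosen backend, which is omitted here.

```go
package main

import (
	"fmt"
	"math/rand"
)

// Backend is one version of a service that the sidecar proxy can route to.
type Backend struct {
	Name   string
	Weight int // relative share of traffic
}

// totalWeight sums the weights of all backends.
func totalWeight(backends []Backend) int {
	sum := 0
	for _, b := range backends {
		sum += b.Weight
	}
	return sum
}

// Pick maps r, a number in [0, totalWeight), onto a backend so that each backend
// receives a share of requests proportional to its weight. Taking r as a parameter
// keeps the function deterministic and easy to test.
func Pick(backends []Backend, r int) string {
	for _, b := range backends {
		if r < b.Weight {
			return b.Name
		}
		r -= b.Weight
	}
	return "" // r was >= totalWeight
}

func main() {
	// Hypothetical split: 90% of traffic to v1, 10% to a canary v2.
	routes := []Backend{
		{Name: "reviews-v1", Weight: 90},
		{Name: "reviews-v2", Weight: 10},
	}
	rng := rand.New(rand.NewSource(1))
	total := totalWeight(routes)
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		counts[Pick(routes, rng.Intn(total))]++
	}
	fmt.Println(counts) // counts come out close to a 90/10 split
}
```

Because the weights live in the proxy rather than in the application, changing the split is a configuration change on the infrastructure side; the application code stays the same.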

This way of simplifying middleware is becoming a trend. Middleware becomes independent of the business and of the programming language, no longer depends on a framework, and is implemented in close integration with the Kubernetes container system. As reliance on Sidecars grows, Sidecar management capabilities are also gradually improving. We can summarize this as the further simplification of application middleware capabilities.

In addition, the continuous development of the cloud-native ecosystem is drawing a large number of cloud services closer to it, with revolutionary effects.

For example, Alibaba Cloud's cloud-native database is built on core cloud-native ideas such as practically unlimited elasticity and high scalability. It introduces a new database architecture that scales out easily, so the database can cope with extremely high traffic and massive data processing demands, meeting the requirements of today's modern Internet applications.

Another example is Alibaba Cloud's infrastructure. It delivers very high resource utilization, reduces the performance lost to multiple layers of virtualization, and makes containers elastic and easy to operate, deploy, and manage. Security containers with stronger security boundaries keep containers isolated from each other, while the containers still get physical-level network, storage, and compute performance, which is very important. This is another typical example of cloud computing services built around the cloud-native idea for applications to use.

Amazon Cloud Technology makes it easier and more direct to adapt chips to containerized applications. A container may run only a single, highly independent or modular process, so chip cores can be matched to such services. This makes full use of the infrastructure's capabilities while keeping interference between cores to a minimum. In this way, chips are adapted to containerized microservice applications.

Amazon Cloud Technology also recently launched a deployment engine for cloud-native applications that can deploy any cloud service or container service consistently. It is a typical product that uses cloud capabilities to improve the efficiency of application management, delivery, and O&M.

Looking at these products and open-source projects, we may wonder whether they are cloud-native. The test is simple: check whether the product helps applications use cloud computing to reduce costs and improve efficiency, and whether it releases maximum business value in doing so. That, rather than whether the product is a container, determines whether it is cloud-native.

Alibaba's infrastructure has completed its cloud-native transformation on a complete set of technologies such as Kubernetes and containers. Looking back on that transformation, we can see that cloud-native has brought some very important changes to Alibaba.

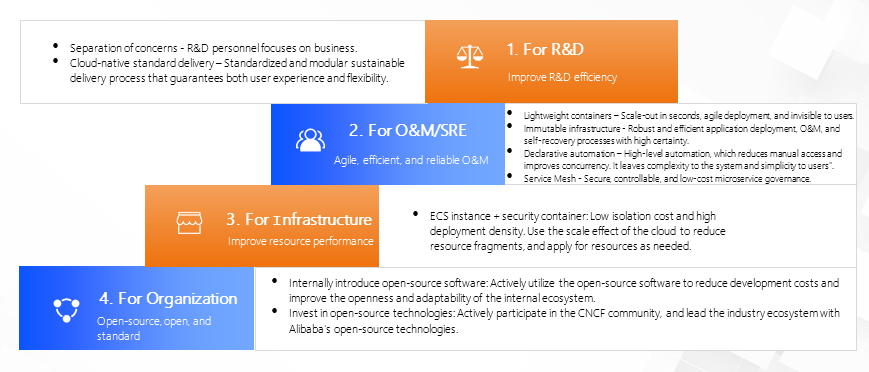

First, the cloud-native concepts described above have helped Alibaba achieve separation of concerns, letting R&D focus more on the business. We have also defined specifications for cloud-native standardized delivery, enabling sustainable delivery in a standardized and modular way. This balances user experience with flexibility and improves business R&D efficiency, allowing developers to focus fully on the business without having to deal with complex infrastructure. This is the biggest value cloud-native brings to business R&D.

For business O&M and SRE teams, the agile O&M and efficient operations ideas of the cloud-native system and their technical implementations, including lightweight, immutable containers and infrastructure, highly automated applications, and automated O&M methods, make software O&M extremely simple and efficient. Compared with traditional methods, this container-based, automated approach significantly raises the automation level of O&M operations: it reduces manual intervention and increases the concurrency of operations, leaving complexity to the system and simplicity to the users. This is Alibaba's cloud-native system today.

Today's containerized applications, such as Taobao, can also be scaled and upgraded quickly and efficiently, and in the cloud-native era the mobile app you use remains available throughout those upgrades.

On the infrastructure side, Alibaba's ECS bare metal servers and security containers help improve resource utilization in today's data centers, which we call resource efficiency improvement. In particular, security containers can be deployed at high density, taking advantage of scale and reducing resource fragmentation, and workloads of different shapes can be placed to fill the remaining resource fragments. ECS bare metal servers ensure that business workloads still run with extremely high efficiency, without interfering with each other. These are important changes that cloud-native infrastructure brings to today's cloud-native environments. The introduction and development of cloud-native technologies have brought great changes even to Alibaba: its technology stacks are now standardized and open and integrate seamlessly with the ecosystem, which reduces R&D costs and improves the reliability and R&D efficiency of the entire system.

Moreover, because its infrastructure is standardized, Alibaba's technologies are rapidly entering the open-source community. Today, Alibaba has more open-source projects in CNCF than any other vendor or organization. A key reason is that Alibaba's technologies integrate seamlessly with the ecosystem, so Alibaba can participate actively in the broader open-source ecosystem and contribute open-source technologies, leading and influencing the development of the entire industry. These are the major changes we have seen since Alibaba adopted cloud-native.

Reviewing the idea of cloud-native discussed in this article, we can see that it is a process of continuous evolution from architecture to technology to product. At the architecture level, cloud-native lets software take full advantage of the cloud's capabilities, because the software is born and grows on the cloud. It differs from traditional approaches in that it lets developers enjoy these benefits and modernizes software and applications.

A series of technologies, both open-source and proprietary, is built on this architecture and idea, and the logic behind them is highly consistent. Cloud-native technologies make systems more reliable, more scalable, and more fault-tolerant across infrastructure, application architecture, development, operation, and delivery. Components are loosely coupled for easier management, and better observability helps applications fully adopt the capabilities of the cloud. Maximizing the potential of the cloud depends on these cloud-native ideas and technologies, and ideas and architectures such as containers and immutable infrastructure are efficient means of implementing cloud-native.

Based on these methods, there are various products powered by cloud-native ideas, including cloud-native databases, cloud-native service products, middleware, Function Compute, and containers. They help the people who develop, operate, and deliver applications on the cloud get the most value from it, and they are all very different from traditional cloud computing services.

Therefore, the cloud will become increasingly service-oriented and SaaS-oriented in the future, and the infrastructure layer will receive less attention. Users will care mainly about whether their use of the cloud maximizes business value.

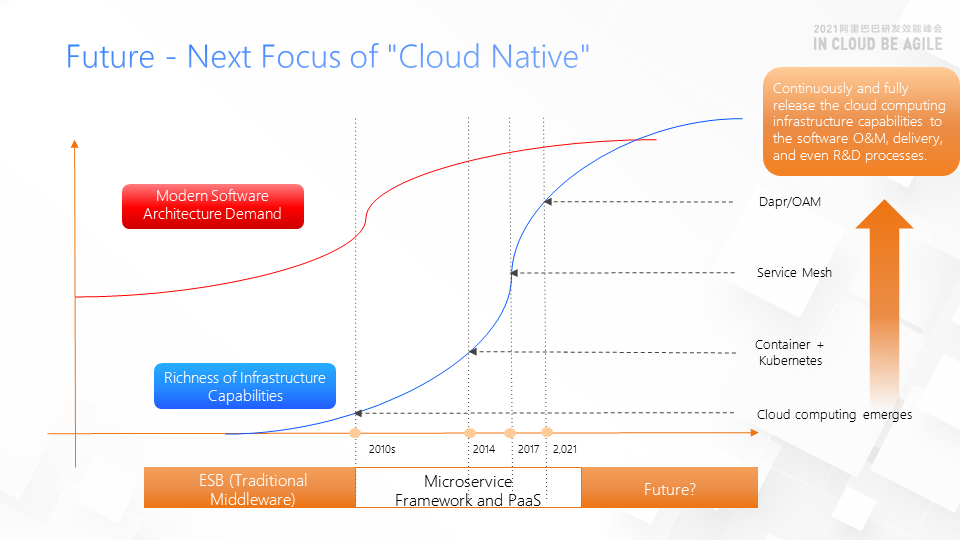

One very important point underlies this entire trend: cloud capabilities keep getting richer. In the past, software architecture needed a lot of traditional middleware, such as microservice frameworks or PaaS services, to help manage software, because the capabilities of the cloud or infrastructure were insufficient. For example, if you wanted blue-green releases, many clouds did not offer that capability for a long time, so you had to rely on some middleware or framework. Things have changed. The cloud can now provide the management capabilities applications require, and cloud capabilities meet nearly all the requirements of most software architectures. You therefore no longer need an additional layer, whether traditional middleware, a microservice framework, or PaaS, to bridge the gap between software demands and infrastructure.
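
Blue-green release, mentioned above as a capability the cloud can now provide, can be sketched in a few lines. This minimal Go sketch assumes two environments named `blue` and `green` and a hypothetical `Router` type; it is not the API of any particular cloud. A new version is deployed to the idle environment, and traffic switches over only after a health check passes.

```go
package main

import (
	"errors"
	"fmt"
)

// Router sends all traffic to whichever environment is currently live.
// Hypothetical type for illustration; not a real cloud API.
type Router struct {
	live, idle string            // environment names, e.g. "blue" and "green"
	versions   map[string]string // environment -> deployed version
}

// Deploy installs a version on the idle environment only, so live traffic is unaffected.
func (r *Router) Deploy(version string) {
	r.versions[r.idle] = version
}

// Promote switches all traffic to the idle environment if it passes the health check.
// The previous live environment is left untouched, so a rollback is just another Promote.
func (r *Router) Promote(healthy func(env string) bool) error {
	if !healthy(r.idle) {
		return errors.New("idle environment is unhealthy; traffic not switched")
	}
	r.live, r.idle = r.idle, r.live
	return nil
}

// Serve reports which version is handling requests right now.
func (r *Router) Serve() string {
	return r.versions[r.live]
}

func main() {
	r := &Router{live: "blue", idle: "green", versions: map[string]string{"blue": "v1"}}
	r.Deploy("v2")
	fmt.Println(r.Serve()) // prints "v1": green has v2 but is not live yet
	alwaysHealthy := func(string) bool { return true }
	if err := r.Promote(alwaysHealthy); err != nil {
		fmt.Println(err)
	}
	fmt.Println(r.Serve()) // prints "v2"
	_ = r.Promote(alwaysHealthy) // roll back by switching again
	fmt.Println(r.Serve())       // prints "v1"
}
```

Keeping the old environment intact is the design choice that makes rollback instant: no redeployment is needed, only another switch.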

As this gap narrows, various cloud-native technologies emerge. A cloud-native technology is no longer about improving some particular capability; it is about delivering cloud capabilities to applications more simply and efficiently. Containers, Kubernetes, and Service Mesh each help applications make better use of cloud services at different stages. Through Kubernetes, for example, applications can use the infrastructure capabilities behind the cloud, such as cloud storage, networking, and compute. Service Mesh, through completely non-intrusive Sidecars, lets microservices use the cloud's traffic control capability for governance.

In the future, cloud computing, together with the cloud-native focus behind it, will continue to fully release the infrastructure capabilities of the cloud, covering software R&D, delivery, and the entire lifecycle. This is an important point: cloud capabilities will keep becoming more powerful, and with this trend we can see cloud-native gradually leading the way in the cloud computing ecosystem.
