By Xiaofei Chen

In the article entitled, How Does the IMCI of PolarDB for MySQL Achieve Ultimate TopK Query Performance?, we described how PolarDB In Memory Column Index (IMCI) uses statistics to prune at runtime to improve the query performance of TopK algorithms. This article describes the query pruning technology of PolarDB IMCI.

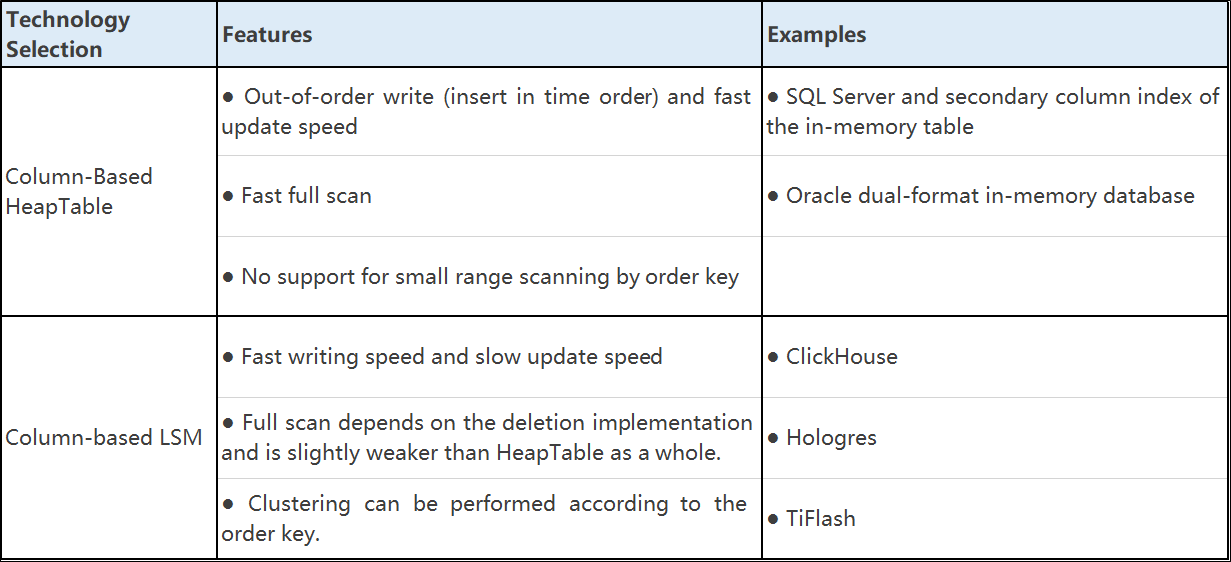

In the HTAP scenario, PolarDB IMCI selects column-based HeapTable as the underlying main storage architecture to support real-time updates. SQL Server and Oracle column index also use this storage architecture. However, although the HeapTable architecture is fast to update, it does not perform well in small-scale scans. In many cases, a full table scan is required. SQL Server mainly reduces the amount of scanned data through minmax, partitions, and clustered indexes to minimize the problem of full table scanning. Oracle is a full-memory column index, which mainly reduces full table scanning through minmax and metadata dictionaries. In contrast, PolarDB IMCI belongs to the column-store table mode, meaning data can be stored, and more diversified methods are implemented to optimize full table data scanning.

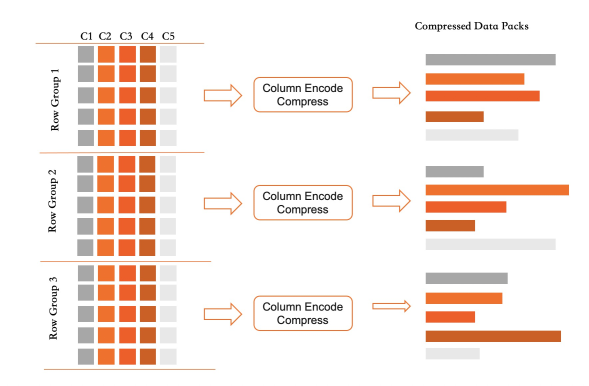

Records are organized by Row Group in PolarDB IMCI. Each Row Group contains 64K rows. The column index of each column is stored in an unordered and append-write format. Therefore, IMCI cannot filter out data that does not meet the requirements as accurately as the normal ordered index of InnoDB. When reading a datapack, we need to load it from the disk into memory and decompress it. Then, traverse all the records in the datapack and use the filter condition to filter out the records that meet the conditions. For large tables, these scan tasks are costly and cause a certain degree of pollution to the LRU cache, resulting in longer overall query latency and a significant reduction in QPS.

PolarDB IMCI introduces query pruning technology to cope with the high cost of large table scans. This technology can filter out datapacks that do not need to be accessed in advance when filtering data, thus reducing data page views and improving query efficiency. The specific implementation is to cut out data that does not meet the conditions before the query by accessing partition information and statistical information and combining them with specific filter conditions. As such, the number of scans for storage can be reduced, thereby reducing data transmission and computing consumption. This technique applies to single-table data queries and multi-table join queries. It can significantly improve the query performance of PolarDB IMCI.

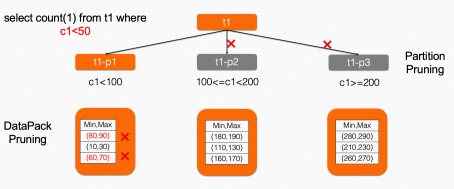

The partition pruning of IMCI refers to a technology that filters out partitions that do not need to be queried according to the conditions of the partition key during the query, reducing the amount of data queried and improving query efficiency. IMCI supports the following partition types: Range, List, and Hash. Range and List divide a data table into several ranges or lists, and Hash hashes data to different partitions. When partition pruning, we need to use a query statement that meets the partition conditions and use the partition key as the query condition.

For example, there is an order table that is divided into 12 partitions based on the order date. We can use the following query statement to query the orders of a certain day:

SELECT * FROM orders WHERE order_date = '2022-01-01';When IMCI queries, it finds the partitions that meet the conditions based on the partition key order_date of the order table and queries only the data of this partition. This reduces the amount of data to query and improves query efficiency. IMCI supports the derivation of the equivalence relation of JOIN columns to fully perform partition pruning. For example, the partition keys of R and S are all a, and the query select count(1) from R,S where R.a = S.a and R.a > 10 can be used to derive S.a > 10 from R.a = S.a and R.a > 10, which can be used for partition pruning of S.

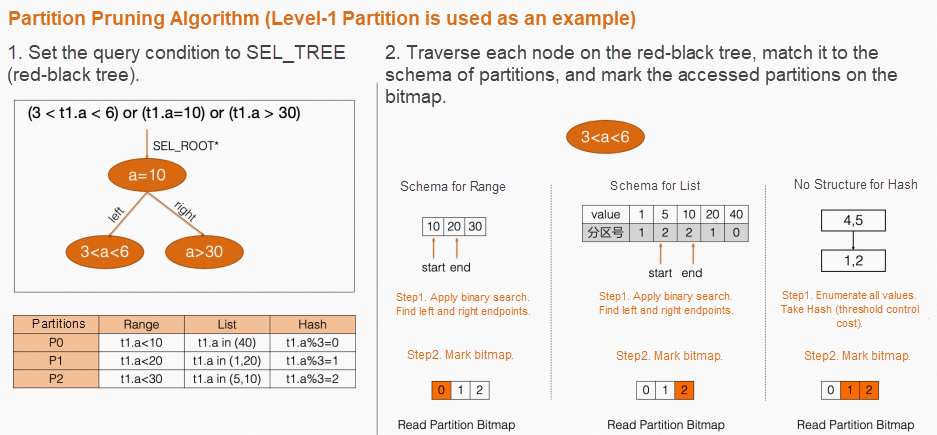

The following figure describes the pruning algorithms for different partition types. For Range, the demarcation points of different partitions are stored in an array orderly, so the binary search method is directly used. For List, the list values of all partitions and corresponding partition IDs are formed into tuple, which is stored orderly by value, and the hit partition is also found according to the binary search method. For Hash, enumerate possible values for hash and compute, which partitions may fall in. It can only be used for integer fields and requires a small number of enumerations.

In actual use, we need to select an appropriate partition type and partition key based on the specific data volume and query requirements to achieve optimal query performance.

IMCI uses statistics on datapacks to skip unnecessary packs. This is similar to the skipping index in ClickHouse and the knowledge grid in InfoBright. In IMCI, this kind of skipping is called pruner (rough index). Pruner can help IMCI optimize query performance. Its basic principle is to use statistics and filter conditions to prune during queries to determine whether a pack needs to be scanned. Since the statistics information occupies less memory, it can be resident in memory. If pruning is successful, we can reduce the number of I/O operations and conditional judgments to improve query performance.

For a datapack, there are three possible results after it is filtered by pruner: accept (AC), reject (RE), and partial accept (PA). The accepted datapack does not need to filter each record, which reduces computing overhead. The rejected datapack is irrelevant to this query and does not need to be loaded into the memory. I/O and computing overhead are reduced, and LRU Cache pollution is avoided. The partially accepted datapack needs to scan each record to filter out eligible records.

The pruner of IMCI has two types (minmax and bloom filter), which are suitable for different scenarios. IMCI is optimized for nullable columns. During queries, the pruner can skip datapacks that contain null values to improve query speed. In addition, IMCI supports bitmap indexes at the data block level. When the pruner cannot filter, bitmap indexes are used to avoid scanning datapacks, thus speeding up queries.

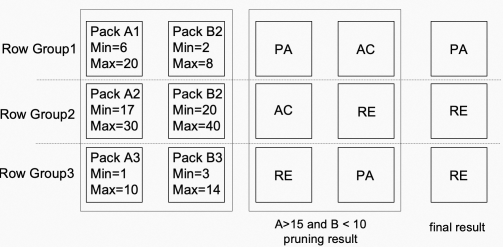

Minmax index is an enhanced index technique for large datasets. It indexes data sets by storing the minimum and maximum values of each data block to provide fast and efficient data retrieval. The minmax index is suitable for continuous numeric data in a dataset, such as timestamps or real values. It splits a data set into blocks, computes the minimum and maximum values for each block, and stores them in the index. When performing data queries, the minmax index can quickly locate data blocks based on the minimum and maximum values of the query range, reducing access to irrelevant data. In the following figure, columns A and B contain three datapacks. If we use the conditional A>15 and B<10 and minmax indexes, Row Group2 and Row Group3 can be skipped, and we only need to access Row Group1. This reduces the scan cost by 2/3.

The advantage of minmax index is that it can process large data sets in a fast time. It can reduce the amount of data that must be scanned to process a query because it only needs to process blocks of data relevant to the query scope. In addition, the minmax index helps reduce the space required to store indexes because it only needs to store the minimum and maximum values of each block rather than the indexes of all data.

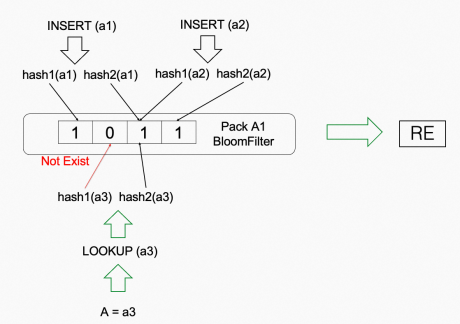

Bloom filter is a commonly used probabilistic data structure used to determine whether an element belongs to a set. It uses an array of bits and a set of hash functions to store and search for elements. When an element is added to the filter, the hash function maps the element to several positions in the bit array and sets the corresponding bit to 1. When checking whether an element is in the filter, the hash function is applied to the element again. If all corresponding bits are 1, the element may be in the set. However, if any one of the corresponding bits is 0, the element is not in the set. Bloom filter is a space-efficient representation that can quickly determine that an element is not in the collection, but it can produce false positives. It may indicate that an element that is not in the collection is in the collection.

The advantages of bloom filters are high efficiency, high space efficiency, strong scalability, and controllable misjudgment rate. These advantages make the bloom filter a useful data structure for dealing with the existence of elements in large-scale data sets.

Bloom filter and minmax can be used in combination. IMCI determines whether to skip a data block based on the combined results of the two.

Due to the special processing logic of null values, database indexes generally do not support columns with null values. Different databases process nullable columns differently. For MySQL, it builds secondary indexes on columns that contain null values and can only be used for IS Null. For ClickHouse, it needs to create additional files to store null tags for nullable columns and does not support indexes. Therefore, ClickHouse has poor query performance on nullable columns.

PolarDB IMCI is optimized for nullable columns. This reduces the impact of null values on query performance. In PolarDB scenarios, columns that contain null values are frequently met. If we ask users to use default values to fill null values, we need to spend much time changing the schema of the table through DDL. We may also need to change the SQL statements of existing services. PolarDB IMCI supports building minmax and bloom filter indexes for columns with null values. It supports predicates IS Null/IS NOT Null and queries with other predicates, such as >, <, and =.

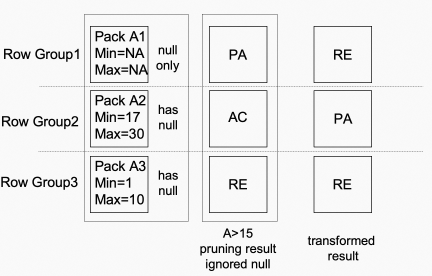

If a pack contains a null value, this value is skipped when the pruner is built. For example, if the pack contains [1, 2, 3, null], min=1, and max=3. During querying, the query is processed based on the logic that no null values are included, and the result is converted based on whether the processing result contains a null value. As shown in the figure above, Pack A1 only has null, while Pack A2 and A3 both contain partial null. At this time, the condition A>15 obtains the result of [PA, AC, and RE] without considering null (since there is no minmax in A1, it cannot be filtered) and then converts the result into [RE, PA, and RE] according to the case that each Pack contains null values. Finally, two of the datapacks can be pruned, improving query performance.

The runtime filter is a query optimization technique dynamically generated during query execution. During querying, runtime filters can filter out unnecessary data based on scanned data values or other information, thereby reducing the amount of data to be queried and improving query performance. Common runtime filters include Bloom Filter and minmax Filter. They can be used in a variety of query scenarios, such as join, group by, and order by.

For example, PolarDB IMCI optimizes TopK (order by + limit). We can use the Self-sharpening Input Filter built by the Topk operator and the minmax index on data blocks at the storage layer to perform pruning to accelerate TopK performance. Please see How Does the IMCI of PolarDB for MySQL Achieve Ultimate TopK Query Performance? for more information about the implementation principle.

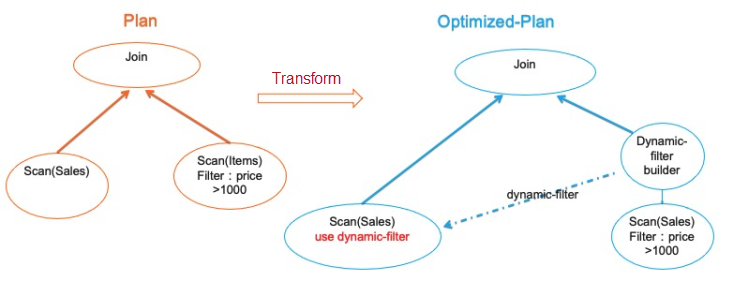

In addition, Runtime Filter can be used to accelerate hash joins. SELECT * FROM sales JOIN items ON sales.item_id = items.id WHERE items.price > 1000. The sales table is a fact table, and the items are a dimension table, which is small. The number of returned records can be further reduced using the condition price > 1000. When executing join, a filter can be established according to the item_id result set that meets the condition. For example, if min and max that meet the condition are 1,100, filter expressions of id > 1 and id < 100 or id in(id1,id2,id3 ...) can be generated. Runtime Filter is passed to the left table. When the left table scans records for probes, we can use the filter to filter records that do not meet the conditions in advance to reduce the number of probes. For the string type, we can create a bloom filter for the result set of the right table to filter in advance. The bloom filter has a cost and is not suitable for scenarios with large result sets. Furthermore, if there is a pruner on the left table column, we can filter according to the filter to reduce data block scanning. In MPP scenarios, Runtime Filter can play a role, which can effectively reduce the amount of shuffle data.

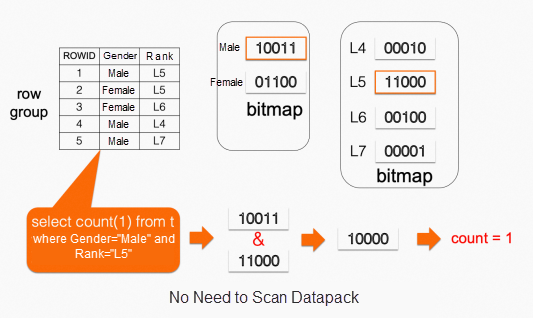

Bitmap indexes are used in row-based stores. For example, Oracle provides a bitmap index, which is more suitable for scenarios with less cardinality of columns. Specifically, the position information of a row is stored for each column value, and each position information only needs one bit to identify whether the value exists or not. When executing query filtering, we only need to obtain the bitmap information based on the column values to locate the position of the rows, which is especially suitable for predicates (such as AND/OR of multiple column combinations(. Generally speaking, the B+tree index is more suitable for high-carryover columns, which is convenient for fast filtering and positioning. Bitmap index is complementary to B+tree. B+tree is suitable for scenarios with fixed search patterns and is not friendly to predicate OR. Bitmap indexes can have good results in this scenario too.

Another advantage of the bitmap index over B+tree is that storage space is small for low cardinalities. As shown in the following figure, a bitmap index is created for the gender column and rank of the table. There are only five rows of data in the table, so the bitmap corresponding to one column value only needs five bits. Compared with traditional B+tree, bitmap index requires little storage space, and the specific size is related to the cardinality and total number of rows. For the global bitmap index, due to the characteristics of the bitmap index, it is necessary to maintain the bitmap index and lock the entire table to modify any row. Therefore, the cost is high, and it is suitable for scenarios where more reads and fewer writes are required.

PolarDB IMCI currently supports a bitmap index at the datapack granularity. We can use a bitmap index to return the row numbers of the required data, which can effectively reduce access to datapacks.

PolarDB IMCI supports multiple query pruning technologies that are complementary to each other. Therefore, they can be used in combination. Users need to choose a method based on data characteristics and query scenarios. IMCI query pruning techniques all require data to have certain distribution characteristics. The stronger the locality, the better the pruning effect. However, real-world scenarios may not be intuitive. At this time, careful design is required.

select count(*) from t where xxx).All query pruning technologies of IMCI have certain limitations. In actual scenarios, multiple technologies need to be combined to improve its query pruning capabilities.

1) Reduce Pack Size: For minmax and bloom filters, a smaller pack means a finer-grained index and usually has a better pruning effect. IMCI supports resizing the column index pack of a table. However, decreasing the pack size may increase memory usage.

2) Sort Data: If data distribution is random, we can use the sort key feature of PolarDB IMCI. The data has a good locality, which is conducive to statistical pruning.

3) Disable Pruner: Like any other index, statistical pruning may not be effective, but there are certain computing and memory overheads. There are certain I/O overheads for bloom filters. Then, we can disable the pruner when querying.

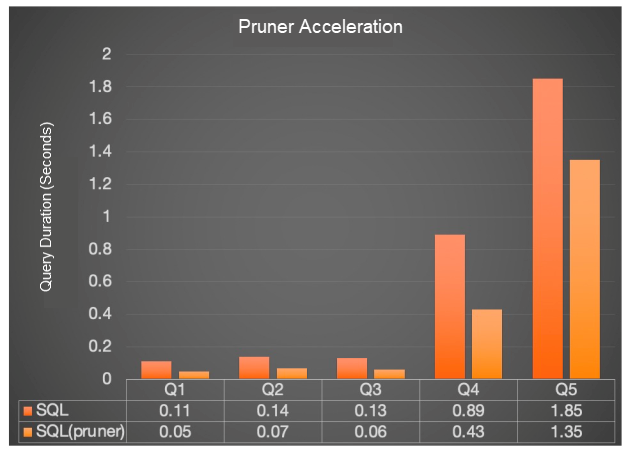

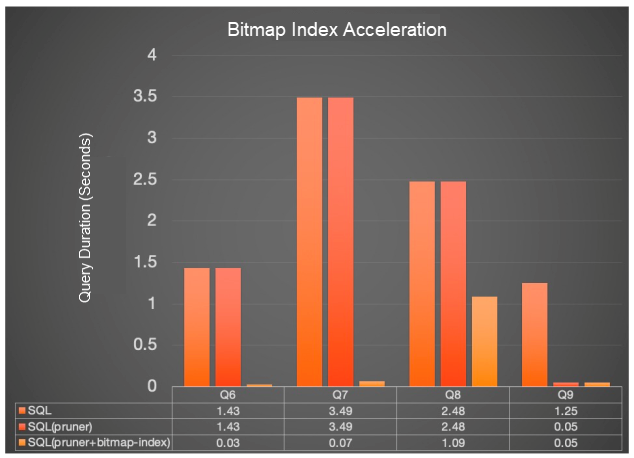

This section tests the performance of the pruner and bitmap index in PolarDB HTAP. The pruner mainly covers minmax and bloom filters. The test dataset uses 100 GB of TPCH data, and several typical query scenarios are tested, including point query and range query. The data types mainly involve numeric and string types.

The effectiveness of lightweight indexes depends on data distribution and query types. In order to reflect the effect of lightweight indexes, several artificially constructed SQL statements based on the Scan operator are used to compare the acceleration effects before and after pruner and bitmap-index are used. The test environment uses an all-memory scenario, and the concurrency is set to 1. In scenarios with I/O, the acceleration effect of indexes is more obvious.

Q1:select count(*) from partsupp where ps_suppkey = 41164;

Q2:select count(*) from partsupp where ps_suppkey in (41164,58321);

Q3:select count(*) from partsupp where ps_suppkey between 40000 and 50000;

Q4:select count(*) from orders where o_clerk = 'Clerk#000068170';

Q5:select count(*) from orders where o_clerk in ('Clerk#000068170', 'Clerk#000087784');

Q6:select count(*) from customer where c_mktsegment = 'AUTOMOBILE';

Q7:select count(*) from customer where c_mktsegment in ('AUTOMOBILE','FURNITURE','BUILDING');

Q8:select count(*) from customer where c_mktsegment = 'AUTOMOBILE' or c_phone = '18-338-906-3675';

Q9:select count(*) from customer where c_mktsegment = 'AUTOMOBILE' and c_phone = '18-338-906-3675';Q1 to Q5 verify the acceleration effect of the pruner. The ps_suppkey columns and the o_clerk column of the partsupp table and the orders table can be accelerated by minmax index and the bloom filter index, respectively. The acceleration ratio is proportional to the number of filtered blocks. Q6 to Q9 verify the acceleration effect of the bitmap index. The c_mktsegment column values of customers are evenly distributed in each data block. Therefore, the pruner cannot accelerate the execution of this SQL statement. The execution efficiency of this SQL statement can only be improved using both the pruner and bitmap index.

Based on the existing work, the index acceleration technology can be developed further.

For the bitmap index, we can consider combining it with data encoding to use common encoding techniques (such as dictionary and RLE) to implement compressed data-based lookups.

In addition, we can enrich the types of indexes, such as function indexes for expression operations and inverted indexes for text searches.

Xiaofei Chen (Alibaba Cloud ApsaraDB - PolarDB Team) is engaged in the research and development of PolarDB IMCI.

[1] https://clickhouse.com/docs/en/optimize/skipping-indexes

[2] https://clickhouse.com/docs/en/sql-reference/data-types/Nullable#storage-features

[3] https://dev.mysql.com/doc/refman/8.0/en/is-Null-optimization.html

[4] https://zhuanlan.zhihu.com/p/604725889

[5] https://www.researchgate.net/publication/221213121_Data_warehouse_technology_by_infobright

[6] http://mysql.taobao.org/monthly/2022/01/03/

[8] Oracle Database In-Memory on Active Data Guard: Real-time Analytics on a Standby Database

[9] SQL server column store indexes

[10] Small Materialized Aggregates: A Light Weight Index Structure for Data Warehousing

[11] An Analytic Data Warehouse for Ad-hoc Queries

[12] SuRF: Practical Range Query Filtering with Fast Succinct Tries

About Database Kernel | What Happens to Distributed Databases When Two Machines Are Hung Up?

[Infographic] Highlights | Database New Feature in July 2023

ApsaraDB - November 21, 2023

ApsaraDB - March 26, 2024

ApsaraDB - August 7, 2023

ApsaraDB - January 4, 2024

ApsaraDB - August 4, 2023

ApsaraDB - June 7, 2022

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB