Being voted as the most popular database in the Stack Overflow Developer Survey and named as database management system of the year by DB-Engines in 2023, Postgres continues to deliver existing enhancements and innovative features to developers.

PostgreSQL 17 has officially been released, bringing with it a host of new and innovative features which are set to revolutionize the developer experience. At Alibaba Cloud, we are proud to announce that Postgres 17 is now available on ApsaraDB RDS, allowing developers to harness the power of the latest advancements in the world's most popular database.

In this blog post, we will delve into the most exciting highlights of Postgres 17 and explore how the new release of ApsaraDB RDS for PostgreSQL 17 can benefit developers and enterprises alike.

According to the release notes of Postgres 17, some of the standout features include:

In this article, we’re going to take a closer look at three key features geared towards performance improvements from a developer perspective:

• Adaptive Radix Tree for Faster Vacuuming

• Enhanced Optimizer

• Streaming I/O for ANALYZE operations

• SQL/JSON: The One Liked By Numerous Developers

Additionally, we’ll explore an innovative feature that is sure to capture the attention of every developer. Join us as we discover the groundbreaking advancements that are set to elevate the PostgreSQL experience to new heights.

First, let's see how the new Vacuum works and what it will affect.

In Postgres, when rows are deleted or updated, the space they occupied is not immediately reclaimed. This is to improve the performance of concurrent transactions and to avoid immediate disk I/O. However, this can lead to wasted disk space and decreased performance as the database becomes fragmented. Vacuum is used to reclaim storage space occupied by deleted or outdated rows (dead tuples) and to reorganize the physical structure of the database.

Vacuum also helps to prevent transaction ID wraparound, a situation where the database transaction ID counter exceeds its maximum value, leading to database corruption and downtime. Therefore, regular use of Vacuum is essential to maintain the health and efficiency of a PostgreSQL database.

Vacuum process, such as sorting and optimizing data storage, can be very memory intensive. Traditionally, to prevent vacuum operations from consuming too many resources and impacting the database performance, there was a 1GB limitation for the autovacuum function in versions before Postgres 17.

On one hand, this can limit the memory used by vacuum to improve memory efficiency. On the other hand, this could affect performance by introducing a lot of overhead for indexes on the tables, which can often happen when a large number of rows (100 million rows for example) are deleted or updated. Previously, in versions before Postgres 17, Postgres used a simple array that stored the IDs of dead tuples, and the autovacuum was easier to run from working memory (up to 1GB) when there was too much dead tuple information to remember. When the dead tuple information became too much, it had to go over all of the indexes and scan the table. As is well known, the full table scan can be very slow for big tables, leading to performance issues due to the 1GB limitation.

In short, there are 3 main improvements which make Vacuum faster in Postgres 17. They are:

• New Data Structure: efficient adaptive radix tree replaces old array

• Dynamic Memory Allocation: no 1GB limit

• Faster Tuple Identifier (TID) Lookup: speedier Tuple ID searches.

Postgres 17 allows vacuum to store more tuple references, as well as surpassing the 1GB limitation of memory by using adaptive radix trees, to provide an improved dead tuple storage for autovacuum.

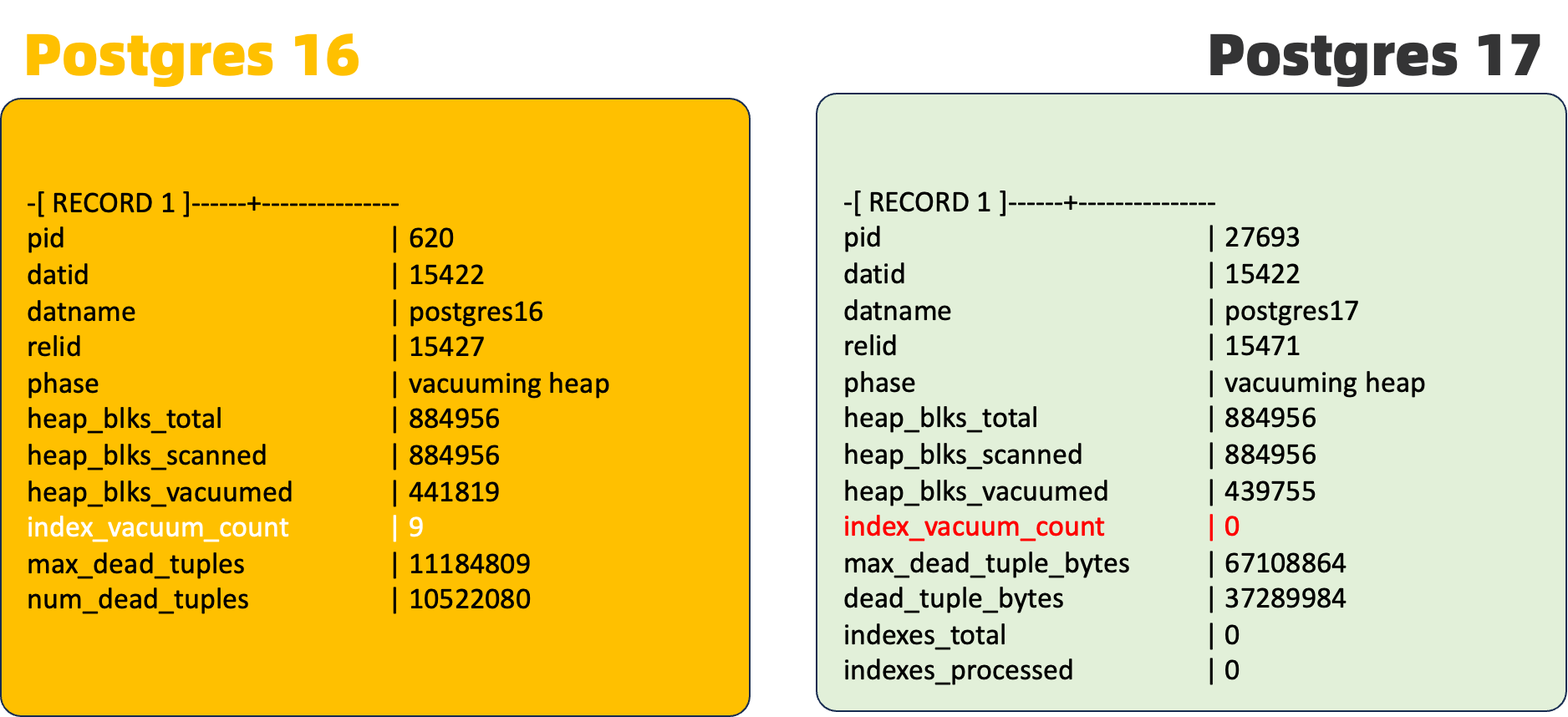

It significantly reduces autovacuum memory consumption and avoids the need for multiple index vacuum phases. Below is a practical comparison which sets out the differences in Postgres 17.

Both time and memory consumption can be significantly reduced; the bigger the data, the better the results reflecting the reduction. In one of our tests, we achieved 20x memory reduction with 20% more efficiency improvement.

But there’s even more to get excited about with the new optimizer.

There are several improvements in optimizer in Postgres 17 but, for today, we just want to introduce one which can be used most frequently.

Postgres 17 removes IS NOT NULL restrictions from queries on NOT NULL columns and eliminate scans on NOT NULL columns if IS NULL is specified. Additionally, it allows correlated IN subqueries to be transformed into joins. These can significantly make SQL queries faster for less table scans. To better understand the benefits, check the execution plan for the two examples below.

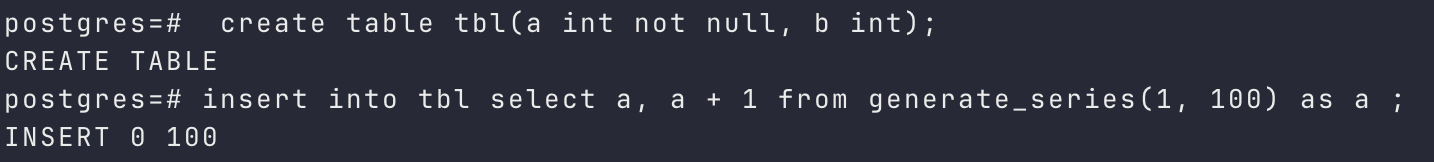

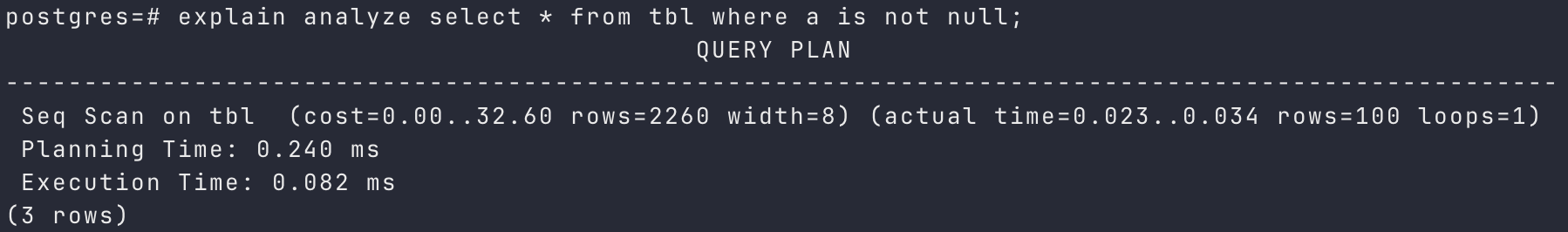

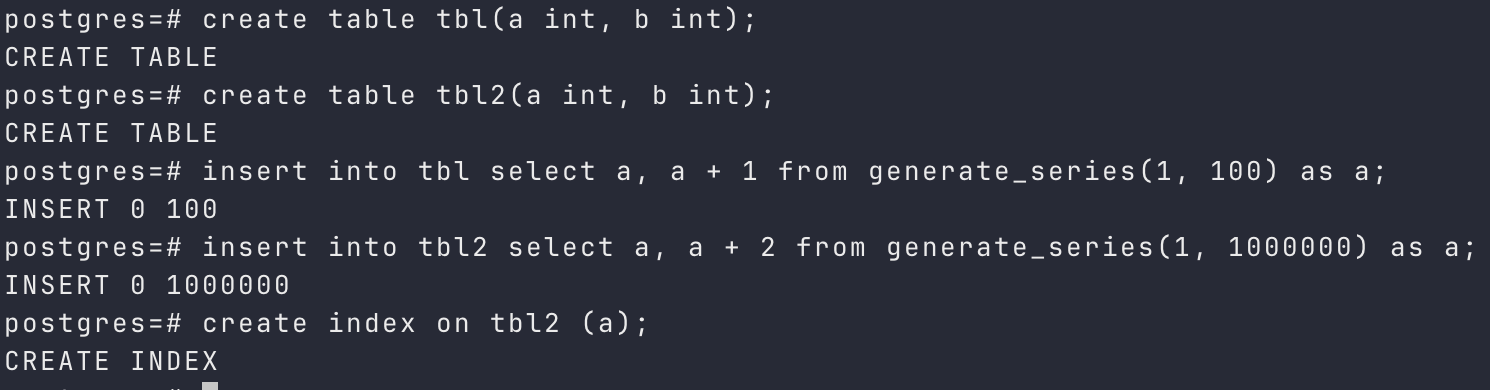

Example 1:

Compare the query execution plan between two versions over the following table.

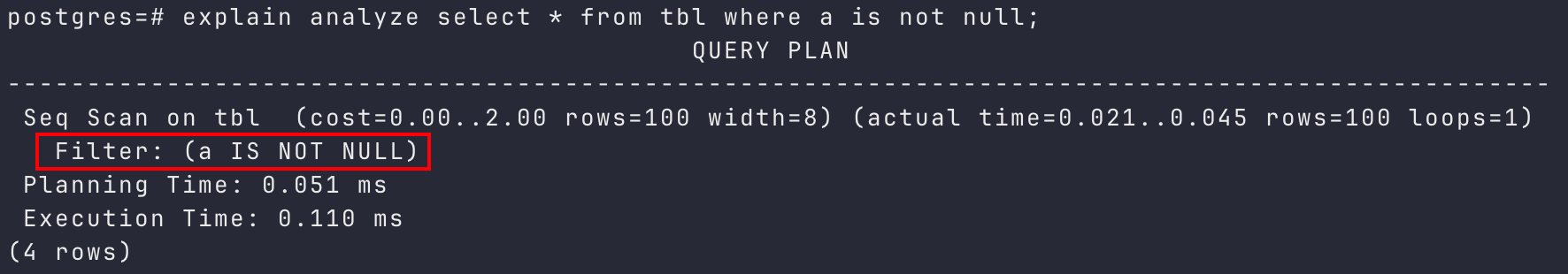

Query Execution Plan in Postgres 17: no filter and the execution time is less.

Query Execution Plan in Postgres 16: has a filter and costs more time than in Postgres 17.

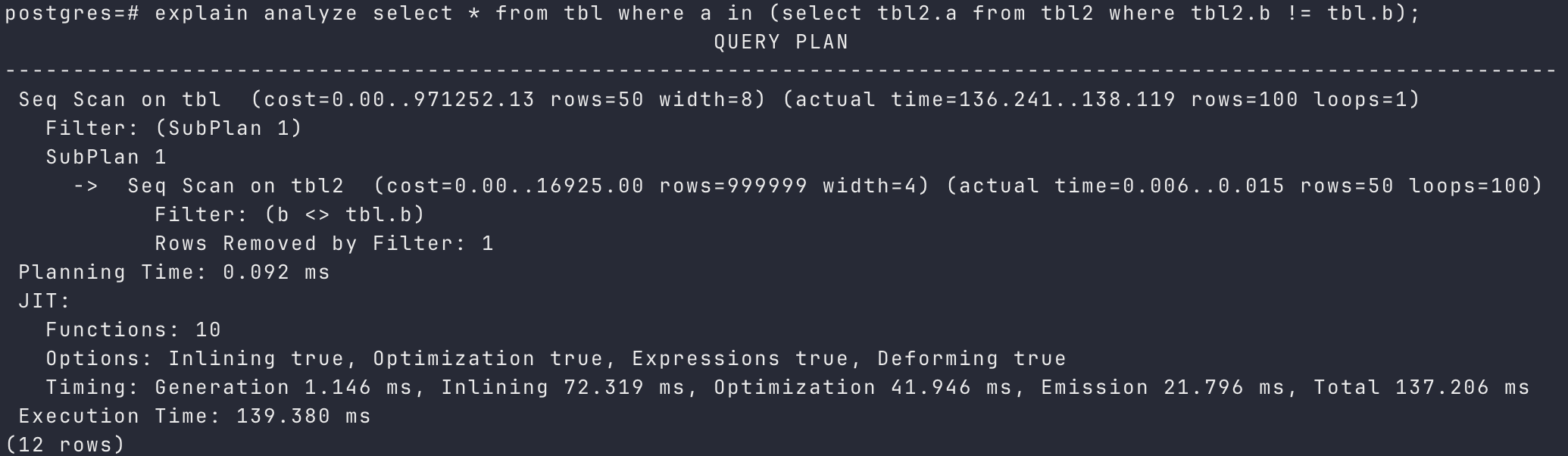

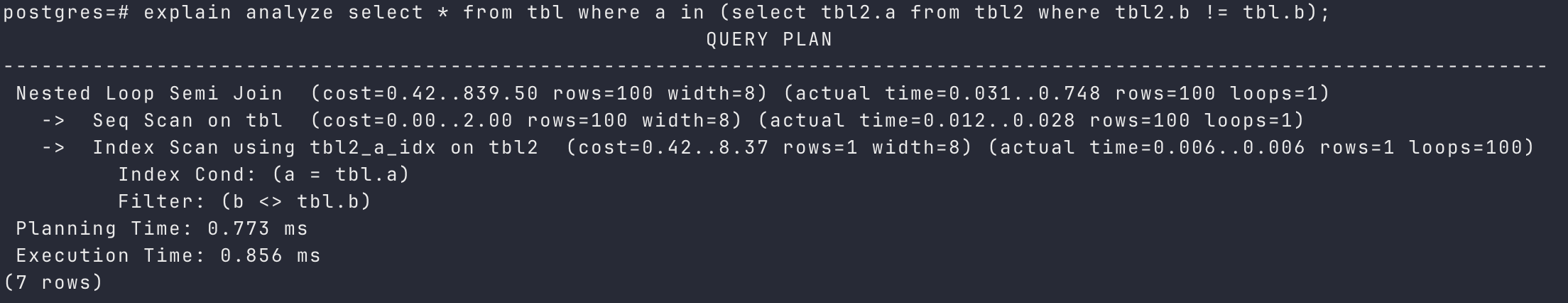

Example 2:

In this example, it’s easier to see the performance improvements on Postgres 17 by automatically transforming in subquery clause to joins.

Query execution plan in Postgres 16: the execution time is 139.380 ms.

Query execution plan in Postgres 17: the execution time is only 0.856 ms. This is 163X faster than that in Postgres 16.

The term "in" typically refers to a subquery that checks for the existence of a certain value within a specified set of data. This approach can be slower because it requires a full scan of the data set to check for the existence of the value.

On the other hand, "join" merges two sets of data based on a common field, resulting in a faster and more efficient operation when compared to the "in" subquery.

In general, the subquery using "in" has to compare each value in a set with the result set, which can make it much slower compared to using a "join" operation to merge two sets of data.

Then, turning to another performance improvement on Postgres 17, the streaming I/O for sequential reads, the most vital update on this feature is that it uses the new API to process larger reads. More specifically, the default size of read syscalls is changed from the old 8kB to 128kB reads when reading enough data.

By increasing the default size of read syscalls, the database may be able to retrieve larger chunks of data from a disk in a single operation, reducing the number of system calls required to fulfill a read request. This can lead to lower overhead and improved throughput, particularly for workloads that involve frequent reads of large amounts of data, such as a large number of small reads.

As modern storage devices continue to increase in speed and capacity, optimizing read operations to retrieve larger chunks of data at a time will also take advantage of these advancements and improve overall database performance. Particularly, Streaming I/O for sequential reads benefits ANALYZE by efficient data reading via prefetching.

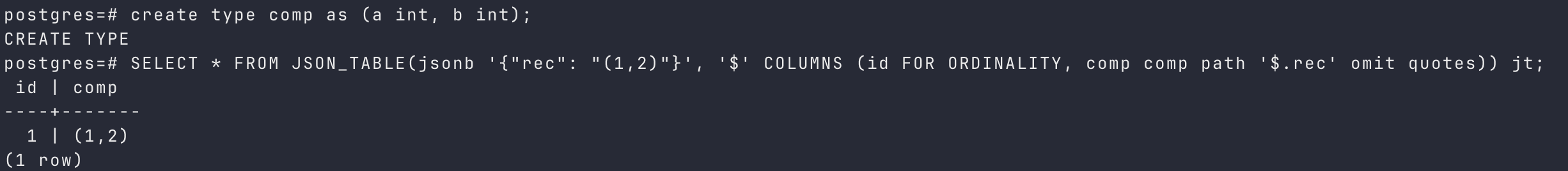

In addition to the performance enhancements, Postgres 17 also offers a new feature that many developers will find attractive: SQL/JSON improvement. Let's take a quick look at this with a practical example before moving on to the next topic.

JSON (JavaScript Object Notation) is a popular, widely used data interchange format for transmitting data between a server and a client. Many modern web applications and APIs rely on JSON for data exchange, and it has become the de facto standard for representing and manipulating data.

Having strong support for JSON in programming languages, frameworks, and libraries is important because it allows developers to easily parse, manipulate, and generate JSON data. It also enables interoperability between different systems and platforms, making it easier to integrate and exchange data between various components of a system.

Additionally, support for JSON is important because it enables developers to work with data in a way that is concise, human-readable, and easy to understand. This makes JSON a preferred choice for representing and storing structured data, especially in web development and API design.

The key updates on JSON data are listed below:

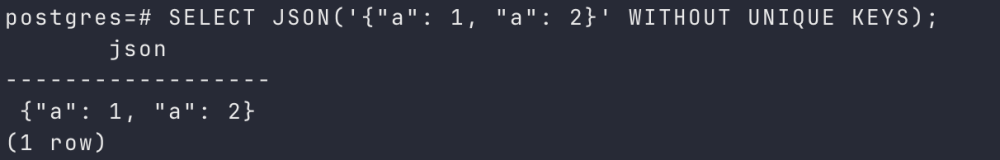

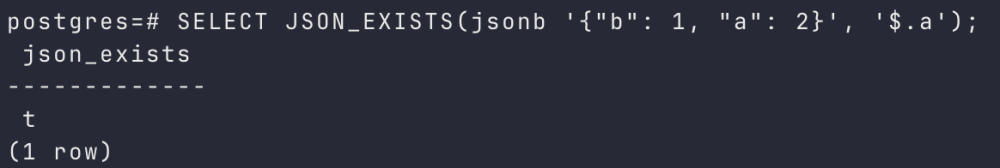

Here are some examples to illustrate how these developer-friendly features can be effectively utilized to maximize their impact.

• eg.JSON_TABLE()

• eg. JSON(), JSON_SCALAR() and JSON_SERIALIZE()

• eg. JSON_EXISTS(), JSON_QUERY() and JSON_VALUE()

The release of the PG17 has been an exciting event. Now, let's have a look at what else we can expect for Postgres 17 on the cloud.

In addition to amazing innovative features on Postgres 17, ApsaraDB RDS for PostgreSQL 17 also provides the following enterprise features.

• Rich plugins including GanosBase, a proprietary time-spatial extension which is faster than PostGIS

• Diverse security features including SQL auditing, SSL/TLS, transparent data encryption, cloud disk encryption, fully-encrypted database, and more

• Intelligent database management and monitoring such as SQL throttling and slow SQL analyze

• Easy and smooth major version upgrade in just one click (check out the details here.)

Why wait? Upgrade now or give it a spin with our 30-day free trial.

Core Technology of PolarDB-X Storage Engine | Optimization of Large-Scale Transactions

GanosBase H3 Geospatial Grid Capabilities and Best Practices

ApsaraDB - November 29, 2024

digoal - September 28, 2022

ApsaraDB - July 13, 2023

digoal - January 30, 2022

Farruh - June 30, 2025

ApsaraDB - July 12, 2023

ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB supports multiple storage engines, including MySQL InnoDB to meet different user requirements.

Learn MoreMore Posts by ApsaraDB

5199201999494666 October 21, 2024 at 7:37 am

rds pg too fast ~~~