By Bin Wang, Lei Zhu and Mingwei Shi

Thanks to the development of the Internet, there is a new carrier for knowledge dissemination. The number of students using online learning platforms is increasing every year, with more students obtaining and using learning resources online. Educational technology companies occupy a relatively unique position: they act as educators, innovators, and practitioners of new technologies.

As a high-tech online education enterprise, Hangzhou Mistong has been committed to Internet + Education to enable more students to enjoy high-quality education and promote their all-around growth. While gathering high-quality educational resources throughout the country, Mistong focuses on teaching efficiency and advanced technology and promotes the wide application of that technology in intelligent school education and personalized learning.

Online teaching has become routine, and the demand for teachers to review homework online is soaring. To reduce teachers' workload and improve teaching efficiency, Mistong developed a Serverless-based learning note evaluation system that improves elasticity efficiency and significantly reduces costs.

Mistong's business covers more than 20 provinces across the country. Since its establishment more than ten years ago, it has been gathering high-quality educational resources from all over the country and researching and applying advanced technology to intelligent school education and personalized learning. Amid education informatization 2.0, Mistong is committed to promoting the close integration of online and offline education. It takes schools as the core scenario and works with them to build Internet learning spaces that provide learning solutions for schools and students and improve teaching efficiency.

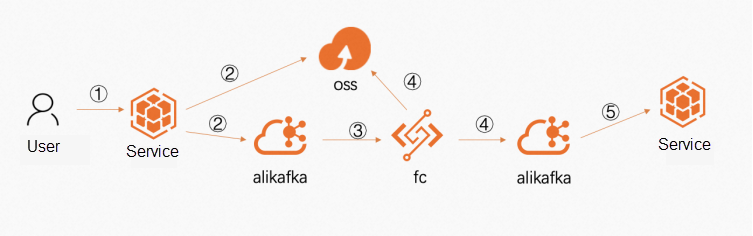

After students take photos of their finished homework and upload them to the homework marking system, the backend system performs the following actions:

After step 3, the system uses Kafka connectors to trigger Function Compute (FC) to process the data. As the computing platform for the business, FC carries all processing logic and automatically identifies homework completion through image recognition and data classification algorithms.
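The push-style processing described above can be sketched roughly as follows. This is an illustrative outline only, not Mistong's code: the `HomeworkMessage` shape, the `handleBatch` function, and the `Recognizer` callback are hypothetical names, and the real payload delivered by the Kafka connector is not described in the article. The recognizer is kept synchronous for brevity.

```typescript
// Hypothetical message shape; the actual connector payload is not shown in the article.
interface HomeworkMessage {
  studentId: string;
  ossPath: string; // location of the uploaded photo in OSS
}

interface EvaluationResult {
  studentId: string;
  ossPath: string;
  completed: boolean;
}

// Stand-in for the image recognition and classification step (assumed, simplified).
type Recognizer = (ossPath: string) => boolean;

// Handles one batch pushed by the trigger. The function never subscribes to a
// Kafka topic itself; it only processes the data it is handed.
function handleBatch(rawEvent: string, recognize: Recognizer): EvaluationResult[] {
  const messages = JSON.parse(rawEvent) as HomeworkMessage[];
  return messages.map((m) => ({
    studentId: m.studentId,
    ossPath: m.ossPath,
    completed: recognize(m.ossPath),
  }));
}
```

Because the function receives its input as an argument, the recognition logic can be tested locally with a stub recognizer before it is deployed.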

The business traffic is stable most of the year but peaks during winter and summer vacations. An average of more than 1 million homework pictures were processed every day during the 2022 summer vacation, with peak traffic reaching the 10,000 level.

The picture processing program was originally deployed in Kubernetes (K8s). The program subscribed to Kafka topics to obtain data paths and then fetched the data from OSS for processing. This part involved handling concurrent data and had the following two main problems.

Mistong's architecture group searched for a new architecture to better meet the real-time requirements and, after comparing various cloud products, chose Alibaba Cloud FC.

The new FC-based solution solved the problems of the old architecture. At the same time, the iteration speed and O&M efficiency have improved, and costs have been slashed. The comparison between the new and old solutions is listed below:

| Items | Solution 1: Deploy the Consumer Program on Kubernetes | Solution 2: Kafka Connector + FC |
| --- | --- | --- |
| Elasticity Efficiency | Kubernetes provides CPU- and memory-based elasticity policies, whose elasticity efficiency is unsatisfactory. In addition, because of the Kafka consumption model, consumer concurrency is capped at the number of partitions. | FC triggers deliver data in real time, and the FC processing program does not need to subscribe to Kafka topics. This avoids the concurrency limitation of the consumption model and significantly improves elasticity and concurrent processing efficiency. |
| Resource Cost | Service-Level Deployment: Resource granularity is coarse, and elasticity mainly depends on a self-built scheduling system, so some resources are wasted. | Function-Level Deployment: A single function occupies few resources, and backend services are scaled in real time based on requests, so resource utilization is high. |
| Iteration | Service-Level Release: Frequent updates require internal coordination and must avoid daytime business periods. | Function-Level Update: A seamless runtime upgrade is provided by adding an alias to the existing version. Team members can upgrade functions at any time. |
| O&M Efficiency | Kubernetes O&M, log collection, and monitoring report construction are labor-intensive and require continuous learning. | FC integrates a log collection and monitoring system, which can be checked in the console in real time. |

The comparison above shows that FC is well suited to the Mistong learning note evaluation system. It cuts resource costs and improves development and O&M efficiency while solving the pain point of elasticity.
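The partition limit mentioned in the comparison follows from how Kafka consumer groups work: within one group, each partition is assigned to at most one consumer, so adding consumers beyond the partition count adds no parallelism. The small sketch below makes that arithmetic explicit. The function names and the example numbers are illustrative assumptions, not figures from Mistong's deployment.

```typescript
// Number of consumers in one consumer group that can actually receive data:
// each partition is assigned to at most one consumer in the group.
function effectiveConsumers(partitions: number, consumerPods: number): number {
  return Math.min(partitions, consumerPods);
}

// Consumers that are assigned no partition and therefore sit idle.
function idleConsumers(partitions: number, consumerPods: number): number {
  return Math.max(0, consumerPods - partitions);
}
```

For example, with a hypothetical topic of 12 partitions, scaling the Kubernetes consumer deployment out to 30 pods gives `effectiveConsumers(12, 30) === 12` and `idleConsumers(12, 30) === 18`: the extra 18 pods consume resources without processing any messages.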

The following problems were encountered during the implementation of the technical architecture:

Java Cold Start: The first problem lay in the language. The original backend program used a Java microservice framework, and the service exposed multiple interfaces. In the beginning, the entire service was deployed to FC. Because of the startup characteristics of Java programs and the large number of modules and data loaded by the service framework, the cold start time was relatively long. The response requirements of the business interface could not be met when a cold start was triggered.

Mistong developers made two iterations to address this problem. First, they refined the code granularity and deployed only the actual processing code on the FC platform. Second, they replaced the Java code with TypeScript, because the developers were familiar with TypeScript and Node.js starts quickly. The elasticity efficiency of the function was significantly improved through these two iterations, and a single request can be completed within 50ms in the case of a cold start.

Resource Utilization: Because the function logic is finely divided, a single request requires little CPU and memory. To improve utilization, the developers chose FC's single-instance multi-concurrency capability, which lets one instance handle several requests at the same time. A good balance between concurrency and resources was found through PTS stress testing, and the resource utilization rate is as high as 70%.
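The effect of handling several requests per instance can be illustrated with a simple calculation. This is a rough sketch of the reasoning, assuming that the number of instances is the in-flight request count divided by the per-instance concurrency limit, rounded up. The function name and the example numbers are hypothetical and are not taken from Mistong's configuration.

```typescript
// Instances required to serve a given number of simultaneous requests when
// each instance accepts up to perInstanceConcurrency requests at once.
function instancesNeeded(inFlightRequests: number, perInstanceConcurrency: number): number {
  if (perInstanceConcurrency < 1) {
    throw new RangeError("perInstanceConcurrency must be at least 1");
  }
  return Math.ceil(inFlightRequests / perInstanceConcurrency);
}
```

With 1,000 simultaneous requests, one request per instance requires `instancesNeeded(1000, 1) === 1000` instances, while an assumed limit of 20 requests per instance requires only `instancesNeeded(1000, 20) === 50`. The appropriate limit depends on how much CPU and memory each request needs, which is why it was tuned through stress testing.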

After solving these two problems, the overall development process was smoother. The project achieved good results after it launched. The performance exceeded expectations in some small aspects. The main surprises were fast execution time and high elasticity efficiency.

Fast Execution Time: When the service was deployed in Kubernetes, the response time of a single request was about 100-200ms during the peak period. After the service was deployed in FC, the request processing time could be maintained at about 50ms during the peak period, which was much better than expected. The main reason is that FC running resources are relatively independent: each instance handles requests up to a fixed concurrency limit, and the excess is carried by new instances. Therefore, when a peak burst of requests arrives, there is no resource contention.

High Elasticity Efficiency: Before the architecture design, we were worried about FC cold starts because a cold start involves the initialization of software and hardware resources. However, judging by the operating performance, this concern proved unnecessary. FC runs on ECS bare metal servers, and each machine has a very high configuration. A single machine can be split into many running instances, and FC is optimized for image pulling and keeps instances on hot standby, so instances start very quickly. Coupled with the fast startup of Node.js, FC can still respond to a request within 100ms even when a cold start occurs, which is friendly to real-time business.

After the service interface was launched to FC, the accumulation problem during the peak period was solved. What's more, the built-in monitoring and log service of FC can better assist in troubleshooting when problems occur. Most importantly, through the real-time elasticity of FC, it is no longer necessary to plan resources and deploy redundant services in advance, which reduces resource costs to a certain extent.

Through this project, the use of FC has been further promoted within Mistong. Interfaces with bursty traffic and high resource requirements have been separated from the original services and placed on the FC platform. The internal system has completed a Serverless architecture upgrade.

During use, Mistong Architecture Team also put forward the following shortcomings of FC:

In the future, Mistong will work with the Alibaba Cloud FC Team to strive for better integration, user experience, and technology depth and explore the implementation of Serverless in actual business. The aim is to serve education with science and technology and change education with the Internet to make high-quality education available for all Chinese people.

FC is a fully managed, event-driven computing service. FC users only need to focus on writing and uploading code or images without procuring and managing infrastructure resources (such as servers). FC allocates computing resources, runs tasks elastically and reliably, and provides features (such as log query, performance monitoring, and alerting). FC includes functional components (such as services, functions, runtime environments, triggers, layers, and application centers).

The underlying FC layer uses Alibaba Cloud infrastructure (such as ECS bare metal servers, network communication, storage, and security components) to build secure, reliable, and high-performance services. A proprietary system is used for auto scaling, load balancing, traffic control, tenant isolation, and disaster recovery to ensure FC computing density, elasticity efficiency, billing accuracy, and other core strengths. The FC usage process is listed below:

The overall call link is listed below:

Process Details:
