By Qingjie Zhao (Alibaba Cloud Function Compute), Xueqing Lin (Alibaba Cloud Function Compute), Lingling Du (Amap), and Bicheng Wang (Amap)

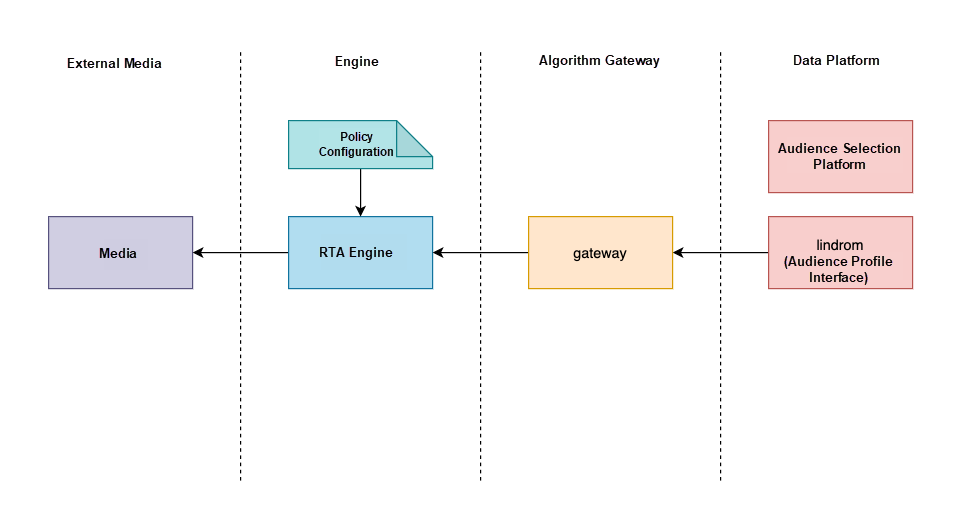

RTA is a real-time advertising program interface that enables real-time advertising optimization by utilizing the data and model capabilities of both media and advertisers. It is not just an interface technology but also a delivery capability that is focused on strategy.

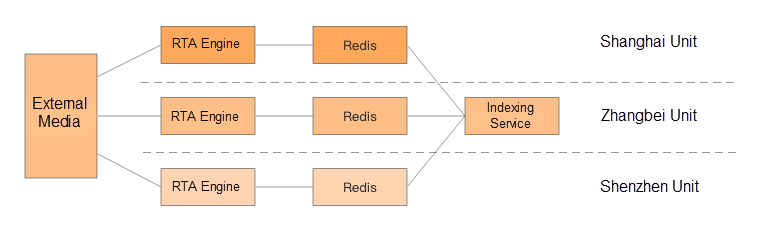

Advertising media call Amap's RTA interface to ask whether an advertisement should be placed. The RTA service queries Amap's crowd information and returns a placement decision, which makes media advertising more accurate.

The original system used a large number of servers and had a long dependency chain. Whenever the system was scaled out, every dependent service had to be scaled out as well, which consumed a considerable amount of resources.

The crowd hit function is essentially the problem of determining whether an element is in a set. The industry commonly uses Bloom filters to solve this problem. A Bloom filter combines hash algorithms with a bitmap, so queries are fast and the space required is small. Redis Extended Edition provides a built-in Bloom filter (bf) feature. Because the read path is written in Go and the write path in Java, running the Bloom filter on the Redis server rather than in each client keeps the data consistent across languages.
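To make the idea concrete, here is a minimal in-process Bloom filter sketch in Go. It is only an illustration of the data structure, not the Redis server implementation: the type and function names, the choice of FNV hashes, and the double-hashing scheme are all assumptions made for this example.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// BloomFilter is a fixed-size bitmap probed by k hash positions per item.
// Illustrative only; names and hashing scheme are assumptions.
type BloomFilter struct {
	bits []uint64 // bitmap, 64 bits per word
	m    uint64   // total number of bits
	k    uint64   // number of probes per item
}

// NewBloomFilter allocates a filter with m bits and k probes per item.
func NewBloomFilter(m, k uint64) *BloomFilter {
	return &BloomFilter{bits: make([]uint64, (m+63)/64), m: m, k: k}
}

// baseHashes returns two base hashes (FNV-1a and FNV-1) used for double hashing.
func baseHashes(item string) (uint64, uint64) {
	a := fnv.New64a()
	a.Write([]byte(item))
	b := fnv.New64()
	b.Write([]byte(item))
	return a.Sum64(), b.Sum64() | 1 // force h2 odd so it is never zero
}

// Add sets the k bit positions derived from the item.
func (f *BloomFilter) Add(item string) {
	h1, h2 := baseHashes(item)
	for i := uint64(0); i < f.k; i++ {
		pos := (h1 + i*h2) % f.m
		f.bits[pos/64] |= uint64(1) << (pos % 64)
	}
}

// MayContain returns false if the item was definitely never added,
// and true if it was added or if there is a false positive.
func (f *BloomFilter) MayContain(item string) bool {
	h1, h2 := baseHashes(item)
	for i := uint64(0); i < f.k; i++ {
		pos := (h1 + i*h2) % f.m
		if f.bits[pos/64]&(uint64(1)<<(pos%64)) == 0 {
			return false
		}
	}
	return true
}

func main() {
	crowd := NewBloomFilter(1<<20, 4) // sizes chosen arbitrarily for the demo
	crowd.Add("device-a")
	fmt.Println(crowd.MayContain("device-a")) // an added item is always reported as present
}
```

A Bloom filter never produces false negatives, but it can produce false positives, so a "hit" means "probably in the crowd". The false positive rate is tuned through the bitmap size and the number of probes.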

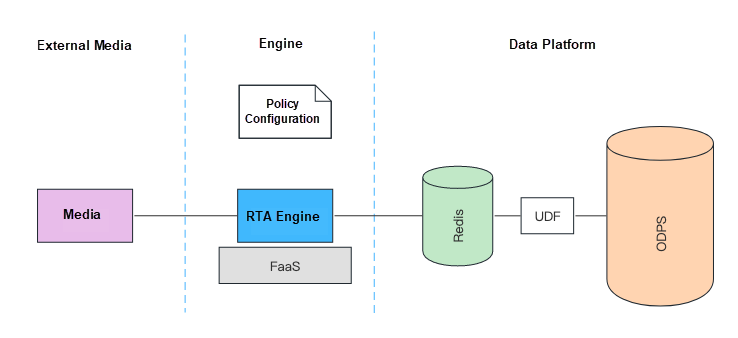

Redis is used to implement the crowd hit function, eliminating the need for an algorithm gateway and saving resources on the data middle platform.

The audience selection platform currently has four types of data updates: online, real-time, offline single-time, and offline periodic.

Currently, the audience selection strategy is based on offline crowds. Online and real-time updates may be used in the future, but the real-time requirements are low for three reasons: the crowds delineated by RTA advertisements are large, the proportion of a crowd that changes in real time is small, and the media side caches results. If fresher data is needed, consider hourly offline updates (the actual freshness depends on the UDF update frequency and trigger method). Periodic offline updates of Redis can also be considered.

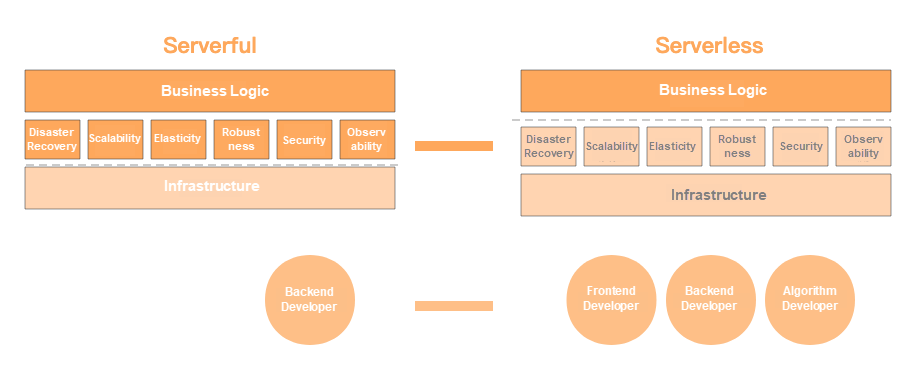

By separating the interface between applications and platforms, Serverless allows businesses to focus on their core business logic. It enables businesses to quickly develop and deploy stable, secure, elastic, and scalable distributed applications.

In the new technology selection, the engine service needs to access Redis, which raises the question of how to make a system serverless with high-frequency storage access.

Serverless is commonly considered to be FaaS + BaaS. FaaS (Function as a Service) generally refers to various backend microservices, while BaaS (Backend as a Service) refers to backend services that are not suitable for FaaS, such as storage services.

A serverless system architecture has high requirements for cloud storage. Cloud storage needs to be able to achieve automatic scaling capabilities that are equal to or close to those of applications in terms of scalability, latency, and IOPS.

Alibaba Cloud provides ApsaraDB for Redis Enterprise Edition, which offers a variety of instance types supporting a maximum bandwidth of 2 GB and 60 million QPS. The architecture and specifications of an instance can be adjusted to meet different performance and capacity requirements, and scaling is imperceptible to the business. This satisfies the storage requirements of the engine service after it goes serverless.

FaaS is the most common technology for serverless backend microservices.

The RTA advertising delivery system provides services for external media, handles large traffic, and has strict latency requirements. It is a typical high-performance scenario: the timeout set by the client is generally very short, and once it is reached, the interface call fails.

After adopting a serverless architecture, request traffic is first sent to the Alibaba Cloud Function Compute system and then forwarded to a function instance for processing. In this scenario, Function Compute must keep both the average and the P99 of its system time (the request processing time excluding the execution time of the function itself) at a very low level in order to meet the request success rate SLA under multi-tenancy and high traffic.

In the new architecture, after the middle platform generates a crowd, it calls Redis commands such as BF.INSERT to build the Bloom filter for that crowd. After the engine obtains a device ID, it uses the BF.EXISTS command to determine whether the device is in the corresponding crowd.
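The two commands can be sketched as follows. This Go example only builds the command arguments and serializes them in the Redis wire format (RESP); it does not open a connection, so any Redis client could be substituted. The key name `crowd:42`, the capacity and error-rate values, and the helper function names are assumptions for illustration, and the `CAPACITY`, `ERROR`, and `ITEMS` options follow the commonly documented BF.INSERT syntax, which should be checked against the Redis edition in use.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// bfInsertArgs builds a BF.INSERT command that creates the filter if it
// does not exist (using CAPACITY and ERROR) and adds the given device IDs.
// Hypothetical helper; option names assume the usual BF.INSERT syntax.
func bfInsertArgs(key string, capacity int, errorRate float64, items ...string) []string {
	args := []string{
		"BF.INSERT", key,
		"CAPACITY", strconv.Itoa(capacity),
		"ERROR", strconv.FormatFloat(errorRate, 'g', -1, 64),
		"ITEMS",
	}
	return append(args, items...)
}

// bfExistsArgs builds a BF.EXISTS command for a single device ID.
func bfExistsArgs(key, deviceID string) []string {
	return []string{"BF.EXISTS", key, deviceID}
}

// encodeRESP serializes a command as a RESP array of bulk strings,
// which is the format Redis clients send over the wire.
func encodeRESP(args []string) string {
	var b strings.Builder
	fmt.Fprintf(&b, "*%d\r\n", len(args))
	for _, a := range args {
		fmt.Fprintf(&b, "$%d\r\n%s\r\n", len(a), a)
	}
	return b.String()
}

func main() {
	key := "crowd:42" // hypothetical key naming scheme: one filter per crowd

	// Write path (middle platform): populate the crowd's filter.
	fmt.Printf("%q\n", encodeRESP(bfInsertArgs(key, 1000000, 0.001, "device-a", "device-b")))

	// Read path (engine): check one device against the crowd.
	fmt.Printf("%q\n", encodeRESP(bfExistsArgs(key, "device-a")))
}
```

Because both the Java writer and the Go reader send only commands like these, the hashing and bitmap layout live entirely on the Redis server, which is what keeps the two sides consistent.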

Features:

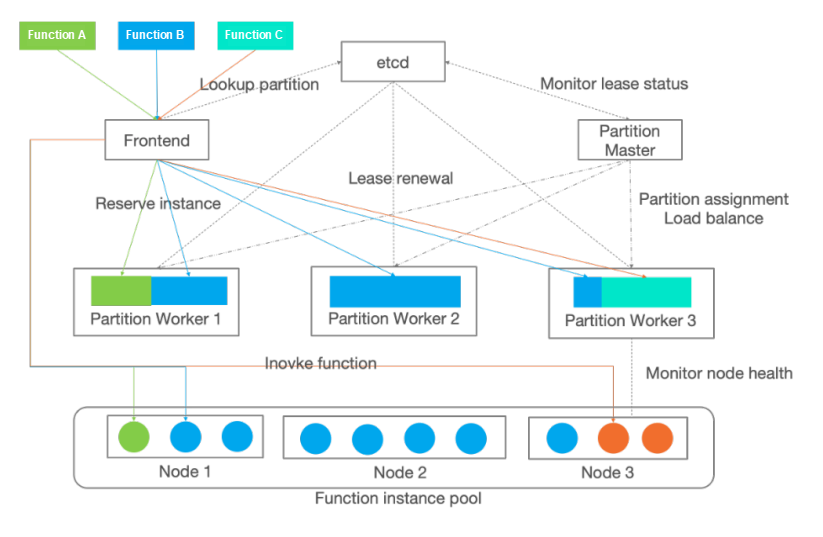

Earlier, we mentioned that the RTA advertising system has high performance requirements because of its large traffic and strict latency limits. Alibaba Cloud Function Compute is a multi-tenant system that supports not only the RTA advertising function but also many other business functions. This places high demands on the request scheduling of Function Compute.

Let's see how Function Compute was able to meet these demands.

To achieve real-time elasticity, when a function request reaches a Function Compute front-end machine, the front-end machine finds the scheduling node (Partitionworker) responsible for the request and forwards the request to it. If an instance is available, the scheduling node selects one according to the load balancing policy and returns it to the front-end machine; if not, it creates one in real time and returns it. Instance creation can take hundreds of milliseconds.
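The "reuse an instance if one is available, otherwise create one" decision can be sketched in Go as below. This is a simplified model for illustration, not Function Compute's scheduler: the `Scheduler` and `Instance` types, the per-instance concurrency limit, and the least-loaded selection policy are all assumptions, and the sketch is single-threaded.

```go
package main

import "fmt"

// Instance models one function instance and its in-flight request count.
type Instance struct {
	ID       int
	InFlight int
}

// Scheduler is a hypothetical, single-threaded model of a scheduling node.
type Scheduler struct {
	instances     []*Instance
	maxConcurrent int // assumed per-instance concurrency limit
	nextID        int
}

// Acquire returns an instance for one request. It prefers the least-loaded
// instance that still has capacity (an assumed load balancing policy) and
// creates a new instance, reported as a cold start, when none is available.
func (s *Scheduler) Acquire() (inst *Instance, coldStart bool) {
	var best *Instance
	for _, in := range s.instances {
		if in.InFlight < s.maxConcurrent && (best == nil || in.InFlight < best.InFlight) {
			best = in
		}
	}
	if best == nil {
		s.nextID++
		best = &Instance{ID: s.nextID}
		s.instances = append(s.instances, best)
		coldStart = true
	}
	best.InFlight++
	return best, coldStart
}

// Release marks one request on the instance as finished.
func (s *Scheduler) Release(in *Instance) {
	if in.InFlight > 0 {
		in.InFlight--
	}
}

func main() {
	s := &Scheduler{maxConcurrent: 1}
	first, cold := s.Acquire()
	fmt.Println("instance", first.ID, "cold start:", cold)
	s.Release(first)
	again, cold := s.Acquire()
	fmt.Println("instance", again.ID, "cold start:", cold)
}
```

In this model only the cold-start branch pays the instance creation cost, which is why keeping enough warm instances matters for a latency-sensitive workload such as RTA.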

As we can see, a request needs to go through the front-end machine and scheduling node before being forwarded to a specific function instance. This means that the system time consumption of request processing includes the processing time of the front-end machine, scheduling node, communication time between the front-end machine and scheduling node, and communication time between the front-end machine and function instance.

Over the past year, we have made many optimizations to the front-end machines and scheduling system of Function Compute so that the system can handle large traffic. The system time for request processing must stay below 2 ms on average and below 10 ms at P99.
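As a worked illustration of these two targets, the following Go sketch computes the average and the P99 of a set of system-time samples and checks them against the 2 ms and 10 ms limits stated above. The function names and the nearest-rank percentile method are choices made for this example; production monitoring systems may compute percentiles differently.

```go
package main

import (
	"fmt"
	"sort"
)

// meanMs returns the arithmetic mean of the samples (in milliseconds).
func meanMs(samples []float64) float64 {
	if len(samples) == 0 {
		return 0
	}
	sum := 0.0
	for _, s := range samples {
		sum += s
	}
	return sum / float64(len(samples))
}

// percentileMs returns the p-th percentile (p in 1..100) using the
// nearest-rank method: the value at rank ceil(p*n/100) in sorted order.
func percentileMs(samples []float64, p int) float64 {
	if len(samples) == 0 {
		return 0
	}
	sorted := append([]float64(nil), samples...) // copy so the input is not reordered
	sort.Float64s(sorted)
	rank := (p*len(sorted) + 99) / 100 // integer ceil(p*n/100)
	if rank < 1 {
		rank = 1
	}
	return sorted[rank-1]
}

// withinBudget checks the targets from the text: mean < 2 ms and P99 < 10 ms.
func withinBudget(samples []float64) bool {
	return meanMs(samples) < 2.0 && percentileMs(samples, 99) < 10.0
}

func main() {
	// Made-up samples for the demo, in milliseconds.
	samples := []float64{0.8, 1.1, 0.9, 1.4, 7.5, 1.0, 1.2, 0.7, 1.3, 1.1}
	fmt.Printf("mean=%.2f ms p99=%.2f ms ok=%v\n",
		meanMs(samples), percentileMs(samples, 99), withinBudget(samples))
}
```

The two metrics catch different problems: a low average can hide a slow tail, and for clients with short timeouts it is the tail that turns into failed calls.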

In serverless scenarios, businesses no longer need to manage resources as the platform is responsible for resource management and scheduling. As business traffic increases, the platform needs to quickly and reliably deliver the necessary computing resources, and when traffic decreases, the platform must automatically release idle resources.

To ensure the reliable delivery of resources for functions, including the Amap RTA advertising function, Alibaba Cloud Function Compute has continuously optimized resource management implementation.

Initially, Function Compute instances were delivered as Docker containers, which carried security risks such as container escape. To ensure security, each host could only run functions for a single tenant. However, Function Compute has a large number of long-tail functions whose instance specifications are often very small (for example, 128 MB of memory and 0.1 cores), so single-tenant hosts limited how far resource utilization could be improved.

To address this issue, Alibaba Cloud Function Compute worked with relevant teams to upgrade the resource base to ECS Bare Metal + Secure Container. This upgrade improved virtualization efficiency and security assurance by using hardware integration technology and secure containers, enabling multi-tenant high-density hybrid deployment and greatly improving resource utilization.

In Kubernetes clusters, the pod creation throughput can hardly meet the requirement of creating tens of thousands of instances per minute in serverless scenarios. Therefore, Function Compute worked with the relevant teams to further subdivide the computing resources inside pods. Function Compute directly controls the containers in each pod, achieving high-density deployment and high-frequency creation.

In serverless scenarios, resource delivery needs to be accelerated to the level of seconds or even milliseconds. To resolve the conflict between the time-consuming startup of Kubernetes infrastructure and the extreme elasticity demanded of Function Compute (FC), FC uses technologies such as pod pooling, image acceleration, image preheating, and computing instance recycling to ensure extremely fast resource delivery.
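The pooling and recycling ideas can be illustrated with a small warm-pool sketch in Go. It is a generic model, assuming a fixed-capacity pool of pre-created pods backed by a buffered channel; the `Pod` and `Pool` types are hypothetical and do not reflect how FC implements its pools.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Pod stands in for a pre-created unit of compute. Hypothetical type.
type Pod struct {
	ID int64
}

// Pool keeps a bounded set of idle, already-started pods.
type Pool struct {
	idle   chan *Pod
	nextID int64
}

// NewPool pre-creates `size` pods so later requests can skip the slow path.
func NewPool(size int) *Pool {
	p := &Pool{idle: make(chan *Pod, size)}
	for i := 0; i < size; i++ {
		p.idle <- p.create()
	}
	return p
}

// create models the slow path (in reality, scheduling and starting a pod).
func (p *Pool) create() *Pod {
	return &Pod{ID: atomic.AddInt64(&p.nextID, 1)}
}

// Get returns a warm pod when one is idle; otherwise it falls back to
// creating a pod on demand and reports warm=false.
func (p *Pool) Get() (pod *Pod, warm bool) {
	select {
	case pod = <-p.idle:
		return pod, true
	default:
		return p.create(), false
	}
}

// Put recycles a pod into the pool. It returns false when the pool is
// already full, in which case the caller would release the pod instead.
func (p *Pool) Put(pod *Pod) bool {
	select {
	case p.idle <- pod:
		return true
	default:
		return false
	}
}

func main() {
	pool := NewPool(2)
	for i := 0; i < 3; i++ {
		pod, warm := pool.Get()
		fmt.Println("pod", pod.ID, "warm:", warm)
	}
}
```

The trade-off is the usual one for pooling: a larger pool absorbs bigger bursts without paying creation latency, at the cost of holding idle resources.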

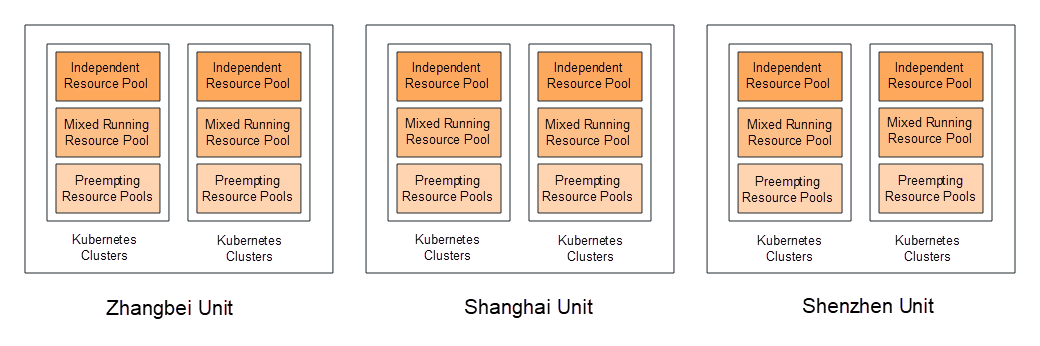

To achieve high availability, Alibaba Cloud Function Compute distributes FC resources across multiple Kubernetes clusters in each region, ensuring automatic switching to a normal cluster in case of any problem. Each cluster has exclusive, hybrid, and preemptible resource pool types, and Function Compute performs unified scheduling based on business characteristics, further reducing costs.

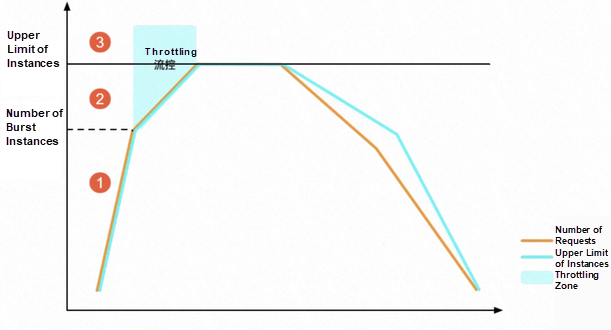

In terms of the total amount of resources delivered, Alibaba Cloud Function Compute has delivered tens of thousands of instances for a single function. With dynamic resource pool supplementation, a single FC function can theoretically be given far more instances than that. In terms of delivery speed, Function Compute can achieve instance creation in 100 milliseconds. During traffic spikes, FC controls the resource delivery speed along the following two dimensions:

These parameters are adjustable. The figure below shows the flow control behavior of FC in scenarios of rapidly increasing call numbers.

The system is deployed in three units so that external media can access the nearest one, which reduces network latency.

After upgrading the system architecture, thousands of machine resources were saved, and full serverless was implemented. Call links were shortened, and the system became more elastic, robust, and easy to maintain. As a result, good business outcomes were achieved.
