By Chengzhong Yang

In-Memory Column Index (IMCI) is the HTAP solution of PolarDB for MySQL. It transparently improves real-time analysis in PolarDB for MySQL by accelerating the execution of complex queries. For more information about PolarDB HTAP and IMCI, see 400x Faster HTAP Real-time Data Analysis with PolarDB [1].

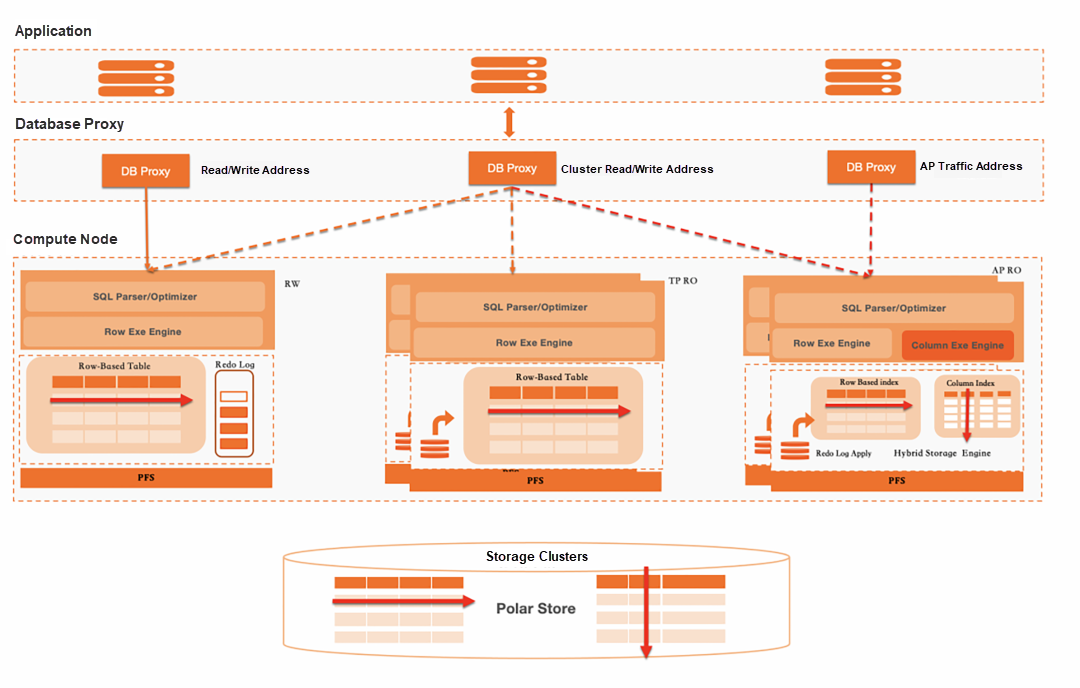

Figure 1: PolarDB HTAP architecture

HTAP efficiently handles transaction (TP) requests and analysis (AP) requests simultaneously within a system, bringing both convenience and resource scheduling challenges. Load in the HTAP system typically follows a time-specific schedule, with TP and AP loads occurring at different periods. This can result in wasted AP resources during TP request processing, and vice versa. Therefore, the HTAP system should be able to detect load changes and adjust resource allocation accordingly to optimize cost efficiency.

The newly released PolarDB for MySQL Serverless offers several core benefits, such as high availability, scalability, zero operations and maintenance (O&M), and uninterrupted business continuity. It dynamically adjusts the resource specifications of a database cluster based on business load. This simplifies resource evaluation and O&M: specifications are automatically reduced to save costs under low load and increased to handle high-load traffic and keep the business stable [2]. Building on PolarDB for MySQL Serverless, IMCI Serverless further optimizes the elasticity of column store nodes in AP scenarios, improving cost efficiency, ease of use, and user experience. To enable IMCI Serverless, see the User Guide [4]. The following sections describe the specific optimizations from the perspective of the core benefits of IMCI Serverless.

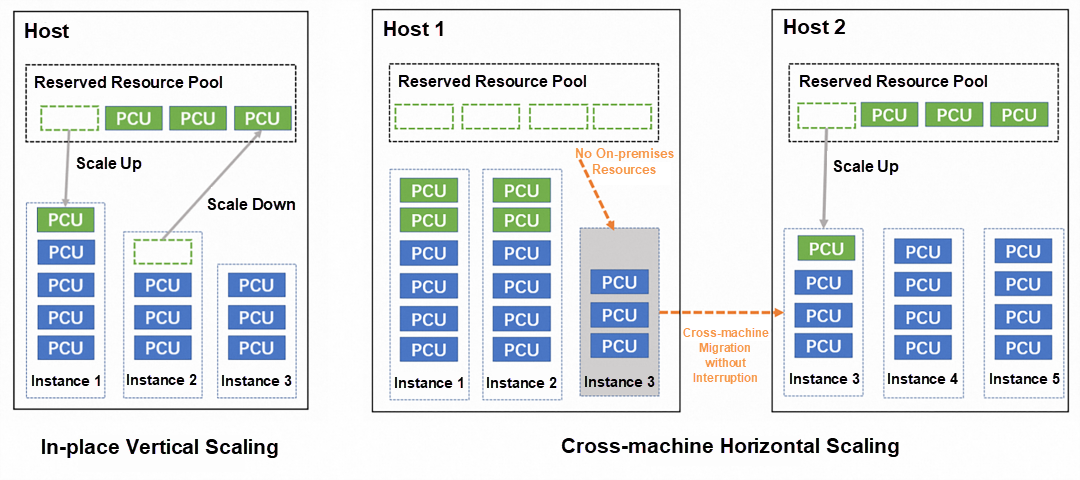

As the load increases, the pressure on column store node resources also grows. When the pressure exceeds the warning threshold, the column store node requests more resources from its host, which is known as in-place vertical scaling. In most cases, local resources are sufficient to meet elasticity requirements, so scaling completes within seconds. However, if local resources are insufficient, a new column store node must be built on another machine with ample resources, which is known as cross-machine horizontal scaling. The challenge here is to keep business operations uninterrupted. The new node must cold-start quickly, load data, and begin serving requests without the performance drop caused by a cold state, such as a burst of cache misses. Consequently, data migration and state migration must both be handled. The figure below illustrates the processes of in-place and cross-machine scaling. In the figure, a PCU (PolarDB Capacity Unit) is the basic unit of PolarDB elastic resources. One PCU is equivalent to the standard service capacity of an instance with 1 core and 2 GB of memory.

Figure 2: Process of in-place and cross-machine scaling on instances
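The choice between the two scaling modes can be sketched in a few lines of Python. This is only an illustration: the `Host` type, the `plan_scale_up` function, and the rule that a cross-machine move needs a host with room for the full target size are assumptions made for the example, not the PolarDB implementation. Only the PCU ratio (1 core and 2 GB per PCU) comes from the text.

```python
from dataclasses import dataclass
from typing import List, Tuple

CORES_PER_PCU = 1      # from the text: one PCU is 1 core ...
MEMORY_GB_PER_PCU = 2  # ... and 2 GB of memory


@dataclass
class Host:
    """Hypothetical view of a physical machine."""
    name: str
    free_pcu: int  # PCUs not yet allocated on this host


def pcu_to_resources(pcu: int) -> Tuple[int, int]:
    """Convert a PCU count to (cores, memory in GB)."""
    return pcu * CORES_PER_PCU, pcu * MEMORY_GB_PER_PCU


def plan_scale_up(current_pcu: int, target_pcu: int,
                  local: Host, others: List[Host]) -> Tuple[str, str]:
    """Return (mode, host name) for growing a node to target_pcu."""
    extra = target_pcu - current_pcu
    if local.free_pcu >= extra:
        # The local host can supply the difference: vertical scaling.
        return "in-place", local.name
    for host in others:
        # Assumption: a new node needs room for the whole target size.
        if host.free_pcu >= target_pcu:
            return "cross-machine", host.name
    return "unavailable", ""
```

For example, `pcu_to_resources(8)` gives 8 cores and 16 GB, and a node growing from 8 to 16 PCUs on a host with only 4 free PCUs would be planned as a cross-machine move.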

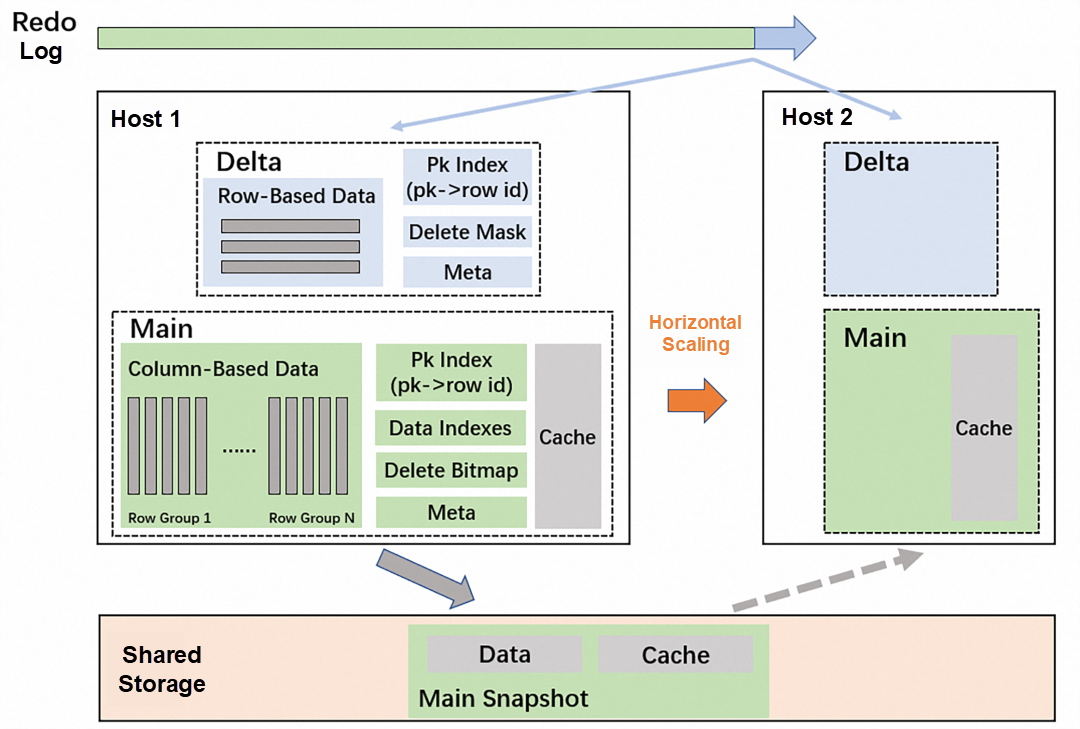

IMCI stores data using a delta-main architecture, which enables smooth data migration under the PolarDB compute-storage separation architecture. The delta file contains incremental data and is periodically merged into the main file, which is persistently stored in remote shared storage. Therefore, when a new node starts, only a small amount of delta data needs to be restored from the redo log to load the complete data and provide services. This migration process can be completed within seconds.

Figure 3: IMCI cross-machine scaling
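The delta-main recovery idea can be shown with a small Python sketch. It assumes a simplified model that is not taken from the IMCI source: the main file is a dictionary of rows persisted up to some log sequence number (`main_lsn`), and the redo log is an LSN-ordered list of hypothetical `(lsn, row_id, value)` records where a value of `None` means delete.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical redo record: (lsn, row_id, value); value None means delete.
RedoRecord = Tuple[int, int, Optional[str]]


def recover(main: Dict[int, str], main_lsn: int,
            redo_log: List[RedoRecord]) -> Dict[int, str]:
    """Rebuild the full data set on a new node.

    The main data is read from shared storage as-is; only redo records
    newer than the last merge (lsn > main_lsn) are replayed as the delta.
    """
    data = dict(main)  # main data is already persisted, nothing to replay
    for lsn, row_id, value in redo_log:
        if lsn <= main_lsn:
            continue  # already merged into the main file
        if value is None:
            data.pop(row_id, None)
        else:
            data[row_id] = value
    return data
```

Because the replayed portion covers only the changes since the last merge, the work done at startup stays small regardless of the total data volume.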

To optimize state migration, IMCI storage preheats the cache. The main file follows a copy-on-write update model, and because main data is immutable, preheating is straightforward. Before the switchover, IMCI dumps the list of hot data from the old node. The new node loads this hot data and starts serving requests only after preheating completes. This approach avoids the performance fluctuations caused by a large number of cache misses. Through fast startup and cache preheating, IMCI can scale resources in a timely manner without business interruption.
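A minimal sketch of this preheating flow follows, assuming a toy `NodeCache` class in which shared storage is a dictionary of immutable pages. The class and its method names are illustrative and do not correspond to IMCI internals.

```python
from collections import Counter
from typing import Dict, List


class NodeCache:
    """Toy page cache in front of shared, immutable main data."""

    def __init__(self, storage: Dict[int, bytes]) -> None:
        self.storage = storage          # shared storage: page_id -> page
        self.pages: Dict[int, bytes] = {}
        self.access_count: Counter = Counter()
        self.misses = 0

    def read(self, page_id: int) -> bytes:
        if page_id not in self.pages:
            self.misses += 1            # cold read goes to shared storage
            self.pages[page_id] = self.storage[page_id]
        self.access_count[page_id] += 1
        return self.pages[page_id]

    def dump_hot_pages(self, limit: int) -> List[int]:
        """Old node: list the most frequently accessed page ids."""
        return [pid for pid, _ in self.access_count.most_common(limit)]

    def preheat(self, page_ids: List[int]) -> None:
        """New node: load hot pages before it starts serving."""
        for pid in page_ids:
            self.pages[pid] = self.storage[pid]
```

Since the pages are immutable, the old node only needs to hand over page identifiers; the new node can fetch the contents from shared storage itself without worrying that they changed in the meantime.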

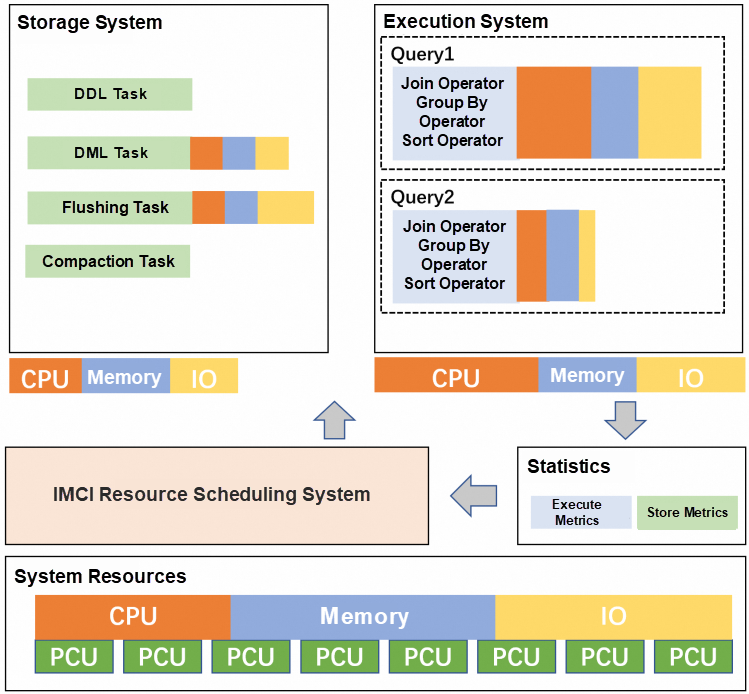

After successfully scaling the resources, IMCI strives to make the most out of the newly allocated resources by allocating them where they are most needed to enhance performance. To achieve this, IMCI has implemented an internal resource scheduling system. This system evaluates the bottleneck of the ongoing workload and allocates all available computing and I/O resources of the column store nodes to each module as required. The goal is to maximize resource utilization and improve system throughput.

A typical scheduling scenario occurs in an HTAP system where TP and AP requests arrive at different times of day. For instance, during the daytime, when transactions are writing data, IMCI reclaims the computing and I/O resources used for queries and allocates them to the write module. During the nighttime, when analysis requests are executed, IMCI reclaims the resources used for writing and allocates them to the query module, so that the available resources go where the load actually is.

Figure 4: IMCI resource scheduling system

Besides scheduling resources between the write and query scenarios, IMCI also performs finer-grained scheduling within each of them. For example, when writing data, IMCI distinguishes between bulk-loading and batch-update scenarios. Depending on the scenario, the system adjusts the allocation of resources such as the write cache, the row-column mapping cache, replay threads, and flush threads to better fit the write load. When serving queries, IMCI sets the resources available to each query based on its complexity, the current concurrency, and the queue length to maximize resource utilization and improve query performance.
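The idea of shifting resources toward the busier module can be sketched as a proportional split with a guaranteed minimum per module. The function below is an assumption made for illustration: the `demand` scores, the `floor` parameter, and the largest-remainder rounding are not described in the source.

```python
from typing import Dict


def allocate(total: int, demand: Dict[str, float], floor: int = 1) -> Dict[str, int]:
    """Split `total` worker threads across modules in proportion to demand.

    Every module keeps at least `floor` threads so that it can still
    make progress when the load shifts back to it.
    """
    modules = list(demand)
    if total < floor * len(modules):
        raise ValueError("not enough threads to satisfy the floor")
    spare = total - floor * len(modules)
    total_demand = sum(demand.values())
    if total_demand == 0:
        shares = {m: spare / len(modules) for m in modules}
    else:
        shares = {m: spare * demand[m] / total_demand for m in modules}
    alloc = {m: floor + int(shares[m]) for m in modules}
    # Hand out threads lost to rounding, largest fractional share first.
    leftover = total - sum(alloc.values())
    by_fraction = sorted(modules, key=lambda m: shares[m] - int(shares[m]),
                         reverse=True)
    for m in by_fraction[:leftover]:
        alloc[m] += 1
    return alloc
```

With 16 threads, a write-heavy daytime demand of `{"write": 9, "query": 1}` yields 14 threads for writes and 2 for queries, and the split reverses when the demand reverses at night.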

The IMCI resource scheduling system is the core feature of IMCI Serverless. Its scheduling rules continue to be refined so that resources are allocated more sensibly and fewer resources achieve more. In the future, the scheduling system will be integrated with the resources of the row-oriented storage engine to better support row-column hybrid execution, evolve toward multi-machine parallel task scheduling, and explore more accurate scheduling policies from the perspective of overall cluster resources.

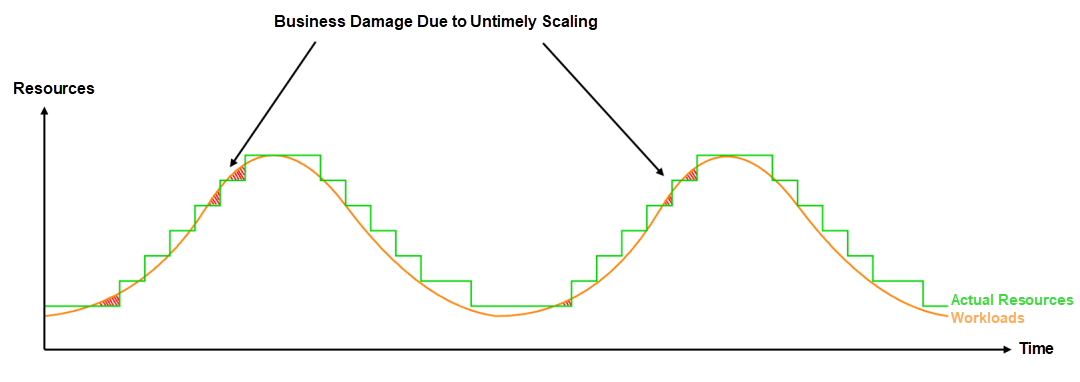

After the traffic ends, reclaiming system resources reduces costs. However, an improper reclamation policy may cause business jitter. For example, suppose query requests arrive intermittently over a period of time. If resources are reclaimed just before the next batch of queries arrives, performance jitters intermittently because of cache misses, as shown in the following figure.

Figure 5: Business damage under fixed scaling policy

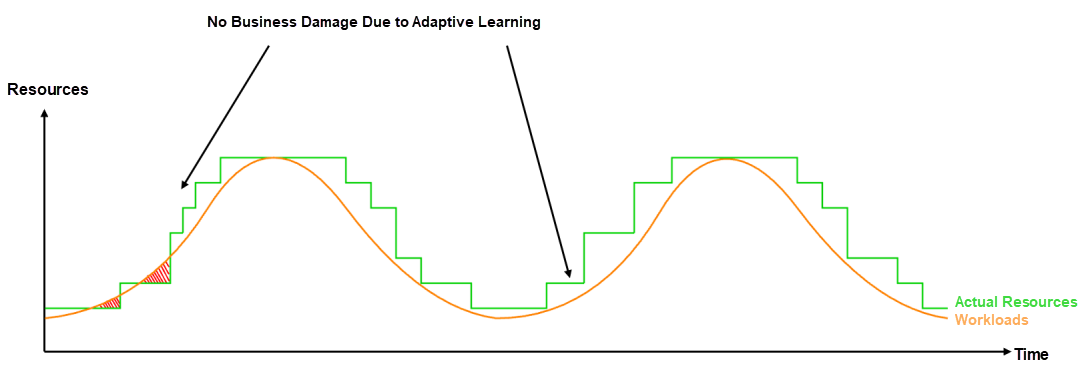

IMCI Serverless provides anti-jitter protection at the kernel level. The resource scheduling system adaptively corrects changes to resource specifications based on recent cache hit statistics. In addition, when reclaiming resources, IMCI takes a cautious approach: it starts with small steps and gradually enlarges the granularity of reclaimed resources to reduce possible jitter.

Figure 6: No business damage under dynamic scaling policy
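One way to picture this cautious reclamation is the sketch below. It is a hypothetical policy written for illustration only: the hit-rate threshold, the doubling of the step size, and the rule of restoring the last reclaimed amount when the hit rate drops are all assumptions, not documented IMCI behavior.

```python
class CautiousReclaimer:
    """Reclaim PCUs in growing steps, backing off when the cache suffers."""

    def __init__(self, min_pcu: int, hit_rate_floor: float = 0.95,
                 initial_step: int = 1, max_step: int = 8) -> None:
        self.min_pcu = min_pcu
        self.hit_rate_floor = hit_rate_floor  # assumed jitter signal
        self.initial_step = initial_step
        self.max_step = max_step
        self.step = initial_step
        self.last_reclaimed = 0

    def next_size(self, current_pcu: int, recent_hit_rate: float) -> int:
        """Return the node size to use for the next interval."""
        if recent_hit_rate < self.hit_rate_floor:
            # The last reclaim hurt the cache: give it back, start over.
            restored = current_pcu + self.last_reclaimed
            self.last_reclaimed = 0
            self.step = self.initial_step
            return restored
        reclaim = max(0, min(self.step, current_pcu - self.min_pcu))
        self.last_reclaimed = reclaim
        # Enlarge the granularity only after a step that caused no harm.
        self.step = min(self.step * 2, self.max_step)
        return current_pcu - reclaim
```

Starting from 16 PCUs with healthy hit rates, the node shrinks to 15 and then 13. If the hit rate then falls below the threshold, the last 2 PCUs are returned and reclamation restarts with a step of 1.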

IMCI Serverless aims to relieve customers of specification evaluation and O&M. Customers should not need to evaluate which specifications to purchase, worry about which tables need columnar indexes, or work out how to tune parameters for the column store to get the best results. To this end, IMCI has made the following refinements:

● Optimize the lower limit of the specifications to minimize user costs during idle hours: All IMCI data-related components can be scaled down to their minimum. After the scaling of metadata-related components is optimized, IMCI Serverless will charge based on minimum specifications during idle hours.

● Automatically adjust the kernel parameter configuration and provide the best practice out of the box: The IMCI resource scheduling system adaptively adjusts the parameter configuration of each component according to the load bottleneck, for example, the storage cache ratio of each module, the cache eviction algorithm, or the materialization strategy used during execution. This reduces the burden of learning, testing, and O&M for users.

● Optimize index recommendation: IMCI provides index recommendations based on query requests. It can already recommend columnar indexes based on SQL queries. In the future, it will also recommend features such as automatic partitioning, sort keys, filtering indexes, and stream computing virtual columns to actively "push" the most suitable features to customers.

IMCI Serverless will continue to iterate on ease of use. Its kernel will adapt to user loads so that customers benefit from best practices without having to track each release of IMCI features.

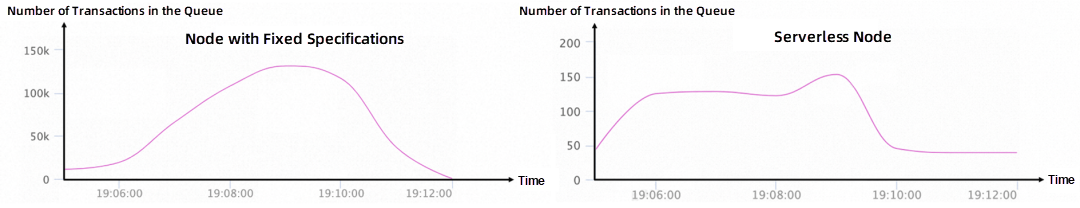

In a simple SysBench write-only scenario, we compared the replication latency of a fixed-specification column store node and a serverless column store node under load. The results highlight the advantages of the serverless column store node in terms of cost and performance. During the test, the SysBench update traffic was directed to the PolarDB primary nodes. The column store nodes replayed the redo logs from the primary nodes and synchronized the data to IMCI. We purchased two primary nodes with 16 vCPUs and 64 GB of memory each and added two column store nodes: a fixed-specification node with 8 vCPUs and 32 GB of memory, and a serverless node.

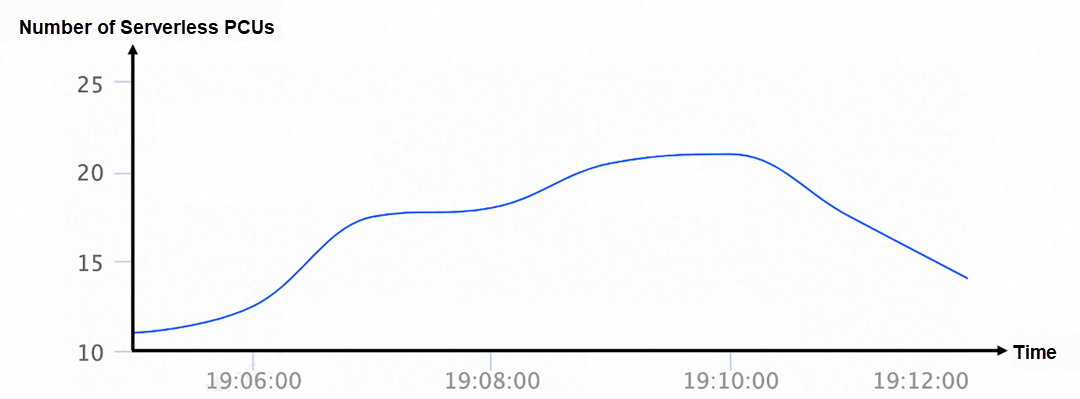

After preheating, we increased the traffic load and then reduced it after some time. The difference between the fixed-specification column store node and the serverless column store node became apparent. As the results show, the fixed-specification column store node had only half the resources of the primary node, so it replayed transactions more slowly than the primary node wrote them. Transactions accumulated during replay, and the backlog peaked at 130,000 before the traffic was decreased, resulting in high replication latency. The serverless column store node, on the other hand, started with 8 PCUs (8 cores and 16 GB) before the traffic was increased. Although it had less memory than the fixed-specification column store node, it adapted to the load and gradually scaled up its resources. No transactions accumulated during this period, so subsequent queries were not delayed. After the traffic was decreased, the resources of the serverless column store node were gradually released.

Figure 7: Transaction accumulation in column store nodes

Figure 8: Elasticity of serverless node resources
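The reason a backlog forms on the fixed node but not on the elastic one can be illustrated with a toy queue model. All numbers below are made up for the illustration and are not measurements from the test; the `simulate_backlog` function and the two capacity policies are likewise assumptions.

```python
from typing import Callable, List


def simulate_backlog(arrivals: List[int],
                     capacity_for: Callable[[int, int], int]) -> List[int]:
    """Track unreplayed transactions per tick.

    capacity_for(arriving, backlog) returns how many transactions the
    column store node can replay in the current tick.
    """
    backlog = 0
    history = []
    for arriving in arrivals:
        capacity = capacity_for(arriving, backlog)
        backlog = max(0, backlog + arriving - capacity)
        history.append(backlog)
    return history


def fixed_node(arriving: int, backlog: int) -> int:
    return 100  # hypothetical constant replay capacity


def elastic_node(arriving: int, backlog: int) -> int:
    # Idealized elasticity: capacity follows the load, between 50 and 400.
    return min(max(50, arriving + backlog), 400)


# Toy traffic: low, then a burst, then low again (transactions per tick).
arrivals = [80, 80, 200, 200, 200, 80, 80]
fixed_history = simulate_backlog(arrivals, fixed_node)
elastic_history = simulate_backlog(arrivals, elastic_node)
```

In this model the fixed node falls behind for as long as arrivals exceed its capacity and needs time after the burst to drain the backlog, while the elastic node keeps the backlog at zero as long as the load stays under its upper limit.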

The stress test comparison shows that serverless column store nodes use fewer resources during off-peak hours and scale elastically during peak hours so that the business is not affected. This lowers costs, improves efficiency, and relieves users of the O&M burden of evaluating traffic.

The evolution goal of IMCI Serverless is to enhance the range and sensitivity of resource elasticity without causing any impact on business operations.

● Timely resource scale-up: In the current resource allocation strategy, a load monitoring program on the host drives resource changes in the kernel, which can sometimes result in delayed or inadequate allocation. The kernel, by contrast, senses the load accurately. Therefore, IMCI is moving to a kernel-driven mode in which the kernel itself requests resources, so that all required resources are reported promptly and completely.

● Faster cross-machine horizontal scaling with less impact during the switchover window: IMCI combines the cloud-native PolarDB three-layer decoupling technology [5] to cache hot data in distributed memory nodes. This eliminates the need for state migration and preheating, further accelerating cross-machine scaling.

● Scaling in advance to ensure zero business impact: When traffic bursts and the local resource pool is insufficient, temporary cross-machine resource scheduling becomes necessary. IMCI supports user-defined scheduled elasticity, which lets users define resource specifications in advance and reduces the need for temporary scheduling to a certain extent. Additionally, IMCI plans to predict future traffic based on historical data to enable early scheduling.

● Multi-machine elasticity and resource isolation: Complex analysis SQL statements often require parallel execution on multiple machines for acceleration. In the future, IMCI Serverless will sense the load and allocate multiple nodes temporarily to process complex SQL statements, optimizing for typical OLAP workloads. Considering that processing complex SQL statements may consume significant computing resources, IMCI will divide resource groups through the proxy to ensure resource isolation. This way, multi-machine execution will not cross resource groups. For example, the resource group for offline analysis can execute tasks with multiple nodes in the group without impacting the resources of the online analysis group.

● A lower floor for resource scale-down, helping users save costs: IMCI will continue to reduce the resident resources of storage components. The ultimate goal is that an instance incurs no charges when there is no traffic.
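The resource-group isolation mentioned in the multi-machine item above can be sketched as follows. The group names, node names, and the `pick_nodes` function are hypothetical; the sketch only shows the stated property that multi-machine execution never crosses resource groups.

```python
from typing import Dict, List


def pick_nodes(groups: Dict[str, List[str]], group: str,
               parallelism: int) -> List[str]:
    """Choose nodes for a query, restricted to its own resource group."""
    if group not in groups:
        raise KeyError(f"unknown resource group: {group}")
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    # Never borrow nodes from another group, even if this one is small.
    return groups[group][:parallelism]


# Hypothetical cluster layout as a proxy might see it.
groups = {
    "offline": ["imci-1", "imci-2", "imci-3"],
    "online": ["imci-4"],
}
offline_nodes = pick_nodes(groups, "offline", parallelism=8)
```

Here an offline analysis query asking for a parallelism of 8 is capped at the three nodes of its own group, and the node serving online analysis is left untouched.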

[1] 400x Faster HTAP Real-time Data Analysis with PolarDB

[2] Serverless

[3] What is Failover with Hot Standby and How it Works

[4] Enable Serverless on a Read-only Column Store Node
