By Muwu, Senior Technical Expert of Alibaba Cloud Intelligence

The load of a business is not constant, but fluctuates over time. Traditional methods of constructing applications typically allocate excessive resources to ensure business availability, resulting in low resource utilization. With the increasing popularity of container technology, applications can enhance resource utilization through auto scaling or application colocation. However, due to the complexity of resource management, it is challenging to strike a balance between business availability and resource utilization.

The emergence of the serverless platform shifts the responsibility of resource management from the user to the platform. This shift allows users to concentrate on business development, while the platform leverages its resource scale and load diversity to focus on improving resource utilization. The serverless platform can significantly enhance resource utilization, leading to cost reduction and efficiency improvement.

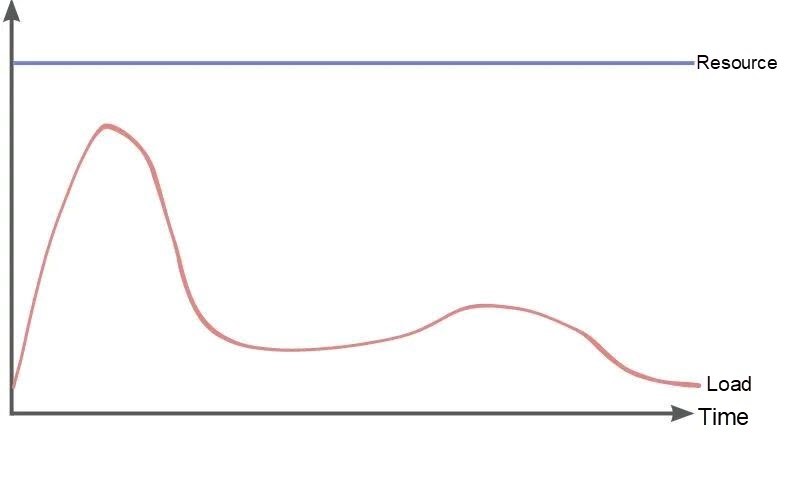

The workload of a business changes dynamically, and the elasticity of resources often lags behind the workload changes, resulting in low resource utilization. To simplify the complexity of deployment and operations and maintenance (O&M), it is common to specify a fixed number of instances when deploying an application. The following figure illustrates the changes in resources and workloads.

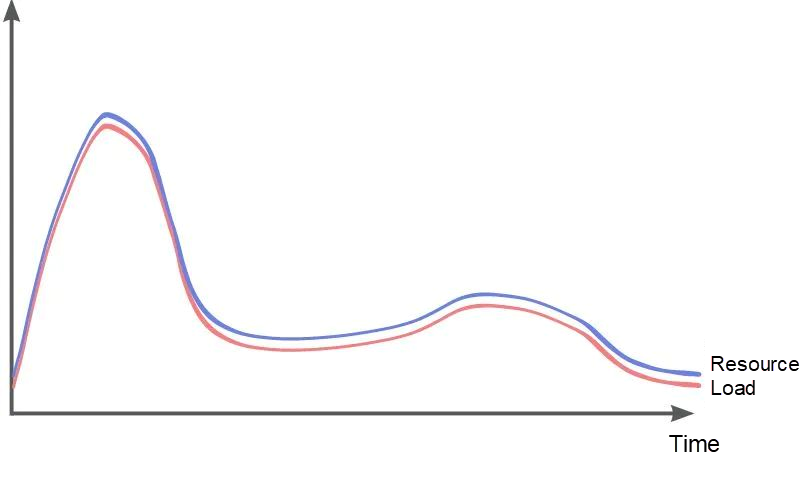

As shown, a significant amount of resources is wasted for long periods, and average daily resource utilization is below 30%. Resource utilization directly affects costs: doubling resource utilization halves the cost. Ideally, resources perfectly match the workload, as shown in the following figure.

However, achieving such a perfect match is difficult in practice for two reasons:

Therefore, actual resource allocation falls between the two scenarios above. Business developers can use various methods to improve resource utilization and bring it closer to 100%. Next, let's explore some common ways to improve resource utilization.

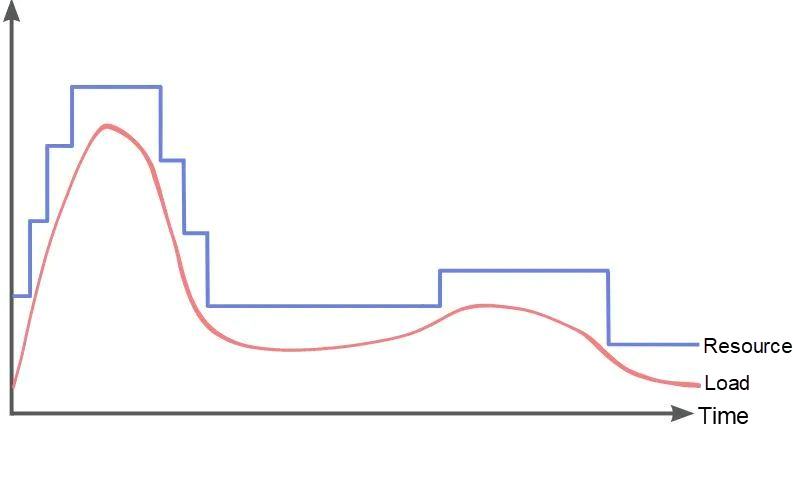

Auto scaling is commonly used to improve resource utilization in containerized applications. A typical method is the HPA (Horizontal Pod Autoscaler) policy [1] in Kubernetes, which sets a threshold for CPU utilization. When the CPU utilization of containers exceeds the threshold, containers are automatically added. When it falls below the threshold, containers are automatically removed. The following figure illustrates how business load and resources change after HPA is enabled.
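The HPA documentation [1] describes the core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when that ratio is within a tolerance of 1.0, which is 0.1 by default. The sketch below models only this rule for a single CPU metric. Minimum and maximum replica bounds, stabilization windows, and readiness handling are left out, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_cpu_pct: int,
                     target_cpu_pct: int, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule for one CPU metric."""
    ratio = current_cpu_pct / target_cpu_pct
    # Do nothing when usage is already close to the target (default tolerance 0.1)
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Scale proportionally to how far usage is from the target, rounding up
    return math.ceil(current_replicas * ratio)


print(desired_replicas(4, 90, 60))  # 4 pods at 90% CPU, 60% target -> 6
print(desired_replicas(4, 30, 60))  # 4 pods at 30% CPU, 60% target -> 2
print(desired_replicas(4, 63, 60))  # within tolerance -> stays at 4
```

Percentages are passed as integers so that the ratios (1.5 and 0.5) are exact and `ceil` does not round up a floating-point artifact.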

As shown, before new resources are created, existing resources need to have some margin to accommodate the increase in load. So, what would the utilization be in the case of the aforementioned step-shaped resource change? Let's analyze it quantitatively.

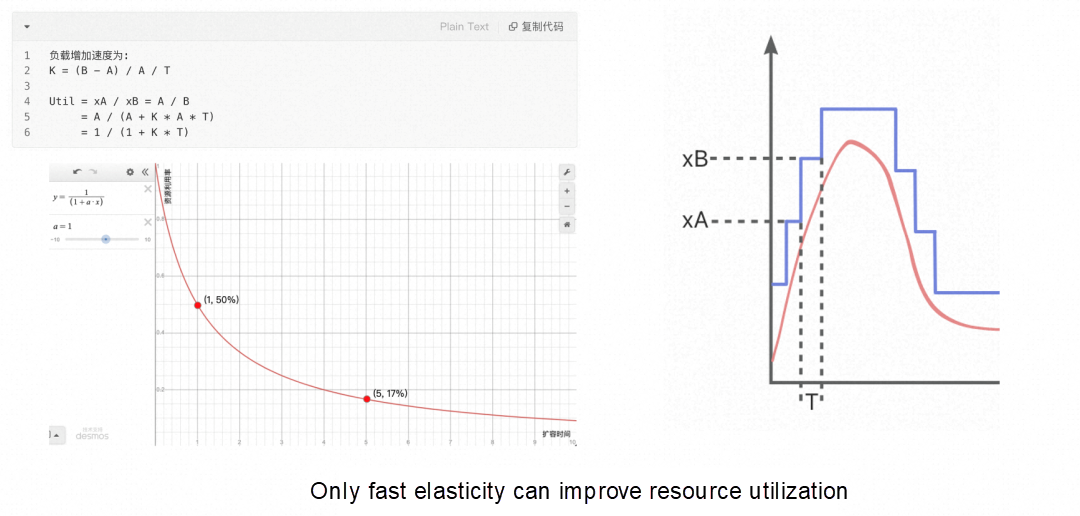

As you can see, the amount of resources that must be reserved depends on how fast the load increases and how long scale-out takes. Assume the load increases from A to B during the scale-out time T, so the required resources grow from xA to xB. To handle the load before new resources are ready, xB resources must already be available while the load is still A. Resource utilization is therefore a function of the load growth slope and the scale-out time. For a given load growth ratio K, resource utilization (Util) decreases as the scale-out time (T) increases: the shorter the scale-out time, the higher the resource utilization.
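This relationship can be written as a formula if we assume two things. First, the load grows linearly at a rate K, expressed as a fraction of A per unit time. Second, the required resources are proportional to the load, at x units per unit of load. The formula below is a reconstruction consistent with the worked examples that follow. It is not necessarily the author's exact derivation.

```latex
B = A\,(1 + K T)
\qquad\Longrightarrow\qquad
\mathrm{Util} = \frac{xA}{xB} = \frac{A}{B} = \frac{1}{1 + K T}
```

With K = 100% per minute (K = 1 per minute), T = 1 minute gives 1/2 = 50%, and T = 5 minutes gives 1/6 ≈ 17%.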

For example, when the load increases by 100% per minute, the relationship between resource utilization and scale-out time is as follows:

• With a scale-out time of 1 minute, the resource utilization is 50%.

• With a scale-out time of 5 minutes, the resource utilization is 17%.
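The two data points above can be reproduced numerically. This sketch uses the same assumption as before: linear load growth of K (as a fraction of the starting load) per minute during the scale-out time. The function name is hypothetical.

```python
def utilization(growth_per_min: float, scale_out_min: float) -> float:
    """Utilization when capacity must already cover the load reached after scale-out.

    Assumes load grows linearly by growth_per_min (1.0 == 100%/min) of its
    starting value A during the scale-out time, so B = A * (1 + K * T).
    """
    return 1.0 / (1.0 + growth_per_min * scale_out_min)


for t in (1, 5):
    print(f"T = {t} min -> Util = {utilization(1.0, t):.0%}")
```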

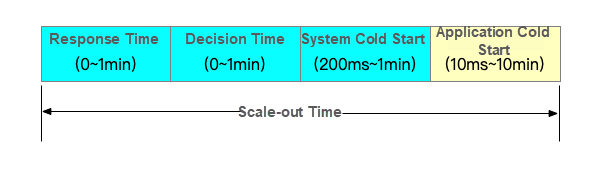

Shortening the scale-out time is key to improving resource utilization. The entire scale-out time, from when the load begins to increase to when the new container is created, can be divided into four parts as shown in the following figure.

1. Response Time

a) Metric collection time: For example, to collect CPU metrics, you need to obtain the average CPU utilization over a period.

b) Decision time: For example, the collected CPU metric must exceed the threshold for N consecutive samples before scale-out is triggered.

2. Start Time

a) System cold start: The time for the system to prepare the machine and the container environment.

b) Application cold start: The initialization time of the application after the container is started, such as JVM startup, middleware initialization, and data loading.
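The four parts add up to the total scale-out time. The following is a minimal sketch using a hypothetical `ScaleOutTime` class. The numbers are illustrative placeholders, not measurements: a 15 s metric window, a decision that needs three consecutive 15 s samples, a 30 s container start, and a 20 s application warm-up.

```python
from dataclasses import dataclass


@dataclass
class ScaleOutTime:
    """Components of scale-out latency, in seconds (hypothetical field names)."""
    metric_collection: float
    decision: float
    system_cold_start: float
    app_cold_start: float

    @property
    def response(self) -> float:
        # Time until the platform decides to scale out
        return self.metric_collection + self.decision

    @property
    def start(self) -> float:
        # Time to bring a new instance to a ready state
        return self.system_cold_start + self.app_cold_start

    @property
    def total(self) -> float:
        return self.response + self.start


t = ScaleOutTime(metric_collection=15, decision=45, system_cold_start=30, app_cold_start=20)
print(t.response, t.start, t.total)  # 60 50 110
```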

How can we shorten the scale-out time? The following table compares the optimizations in Kubernetes and Function Compute [2] at each stage.

| Time | Kubernetes | Function Compute |
| --- | --- | --- |
| Metric collection time | 15s | 0 (concurrency is calculated in real time based on requests) |
| Decision time | 0 (the default stabilization window [3] for scale-out in Kubernetes is 0) | 0 (concurrency is calculated in real time based on requests) |
| System cold start | ~30s for images (management + scheduling + container startup) | Code package: 200ms; image: 3s (container pooling, code package and image acceleration) |
| Application cold start | 10ms ~ 10min | 10ms ~ 10min |

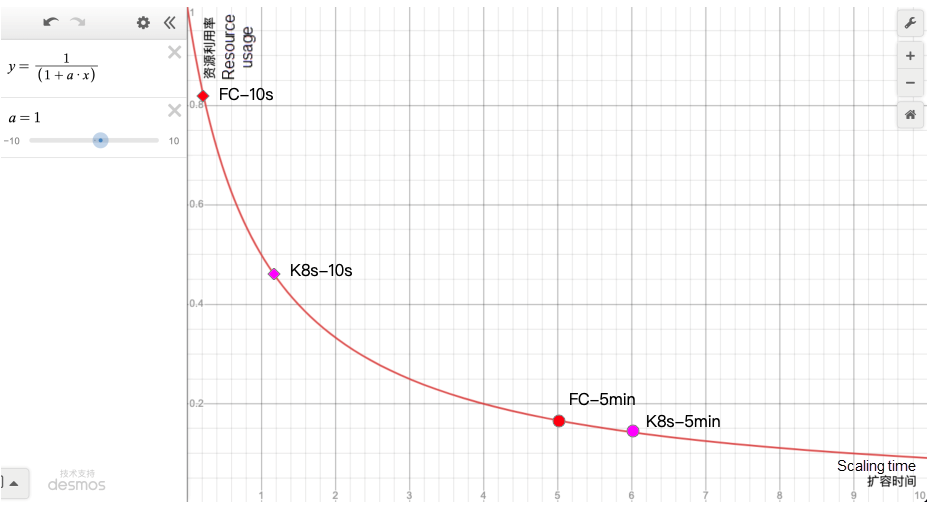

Function Compute shortens the response time to 0 through request-level scheduling. It reduces the system cold start time to as little as 200ms through code package and image acceleration. For the same application cold start time, the scale-out time of Function Compute is nearly 1 minute shorter than that of Kubernetes (about 45 seconds, based on the figures in the table above). When the application cold start is fast (10s), Function Compute achieves significantly higher resource utilization than Kubernetes. When the application cold start is slow (1min), the utilization gap between Kubernetes and Function Compute narrows, as shown in the following figure.
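This comparison can be approximated by combining the table with the utilization formula Util = 1 / (1 + K·T). The sketch makes three assumptions. The load grows by 100% per minute, as in the earlier example. Platform overhead is the sum of the table's first three rows. Function Compute uses the 200ms code-package cold start.

```python
def utilization(growth_per_sec: float, scale_out_sec: float) -> float:
    # Util = 1 / (1 + K * T), assuming linear load growth during scale-out
    return 1.0 / (1.0 + growth_per_sec * scale_out_sec)


K = 1.0 / 60  # load grows by 100% per minute

# Metric collection + decision + system cold start, in seconds, from the table
PLATFORM_OVERHEAD = {"Kubernetes": 15 + 0 + 30, "Function Compute": 0 + 0 + 0.2}


def compare(app_cold_start_sec: float) -> dict:
    # Utilization per platform for a given application cold start time
    return {name: utilization(K, overhead + app_cold_start_sec)
            for name, overhead in PLATFORM_OVERHEAD.items()}


for app_cold_start in (10, 60):
    utils = compare(app_cold_start)
    gap = utils["Function Compute"] - utils["Kubernetes"]
    print(f"app cold start {app_cold_start}s: "
          + ", ".join(f"{name} {u:.0%}" for name, u in utils.items())
          + f", gap {gap:.0%}")
```

Under these assumptions, the gap shrinks from about 33 percentage points at a 10s application cold start to about 14 at 60s. This matches the trend described above.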

The optimization of cold start time can improve resource utilization in Function Compute scenarios. However, because the application cold start is related to the specific application logic, it is difficult to optimize generally. Some possible optimization directions are:

To sum up the problems of HPA:

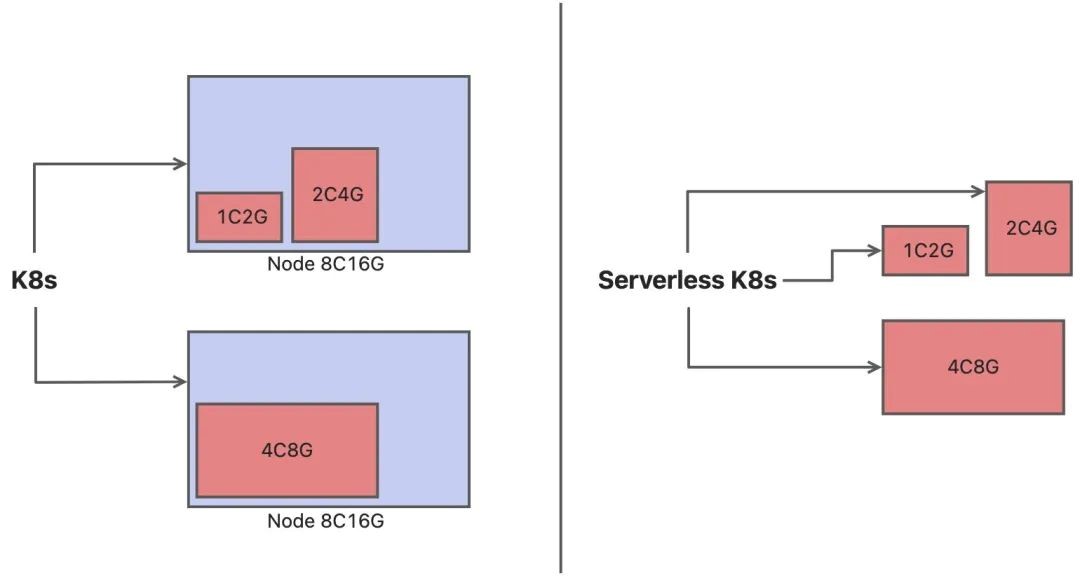

Another way to improve resource utilization for containerized applications is through colocation and overselling. There are two usage modes for container clusters.

In the classic Kubernetes mode, the auto scaling of containers does not effectively improve resource utilization. Even if containers are deleted, the nodes still remain. The auto scaling of nodes is less flexible compared to containers. Therefore, colocation and overselling are common practices to enhance resource utilization.

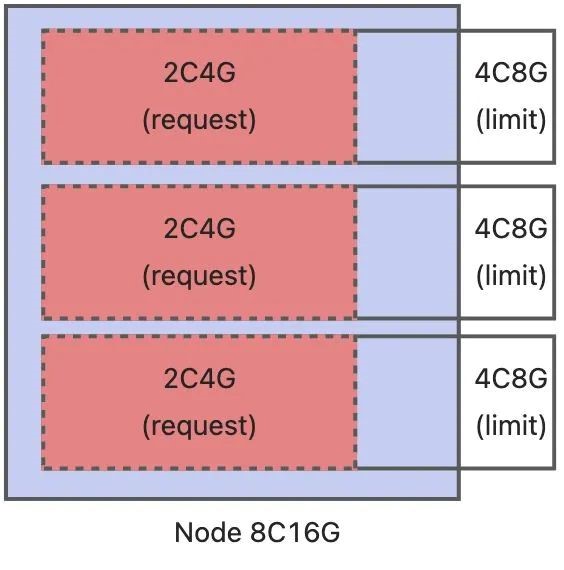

In Kubernetes, overselling can be achieved by setting a container's resources.requests [4] lower than its resources.limits. Kubernetes schedules containers based on their requests and caps their resource usage at their limits.
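A minimal sketch of this idea, using hypothetical `Container`, `fits`, and `oversell_ratio` helpers. The scheduler admits containers based on their requests, while the sum of their limits can exceed the node's CPU. Real Kubernetes scheduling also considers memory, other resources, and pods already on the node. This sketch models only CPU.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    cpu_request: float  # cores reserved at scheduling time (resources.requests.cpu)
    cpu_limit: float    # hard cap on usage at runtime (resources.limits.cpu)


def fits(node_cpu: float, containers: list[Container]) -> bool:
    # Scheduling only compares the sum of requests with the node's CPU
    return sum(c.cpu_request for c in containers) <= node_cpu


def oversell_ratio(node_cpu: float, containers: list[Container]) -> float:
    # A value above 1.0 means the limits together exceed what the node can supply
    return sum(c.cpu_limit for c in containers) / node_cpu


pods = [Container("web", 1, 2), Container("worker", 1, 3), Container("cron", 0.5, 2)]
print(fits(4, pods), oversell_ratio(4, pods))
```

Here 2.5 requested cores fit on a 4-core node, but the limits add up to 7 cores (a ratio of 1.75). If all three containers hit their limits at once, they compete for CPU.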

Note that the sum of the limits of all containers on a node may exceed the node's capacity. This relies on the assumption that containers will not all reach their limits at the same time. If this assumption does not hold, resource competition can occur, resulting in performance degradation or even out-of-memory (OOM) errors. Because container resource usage is dynamic, there is always some probability of resource competition, which makes it challenging to achieve optimal results through colocation and overselling. Factors that affect the effectiveness of colocation and overselling include the size of the resource pool, the diversity of the workload, performance stability, and the overload migration strategy.

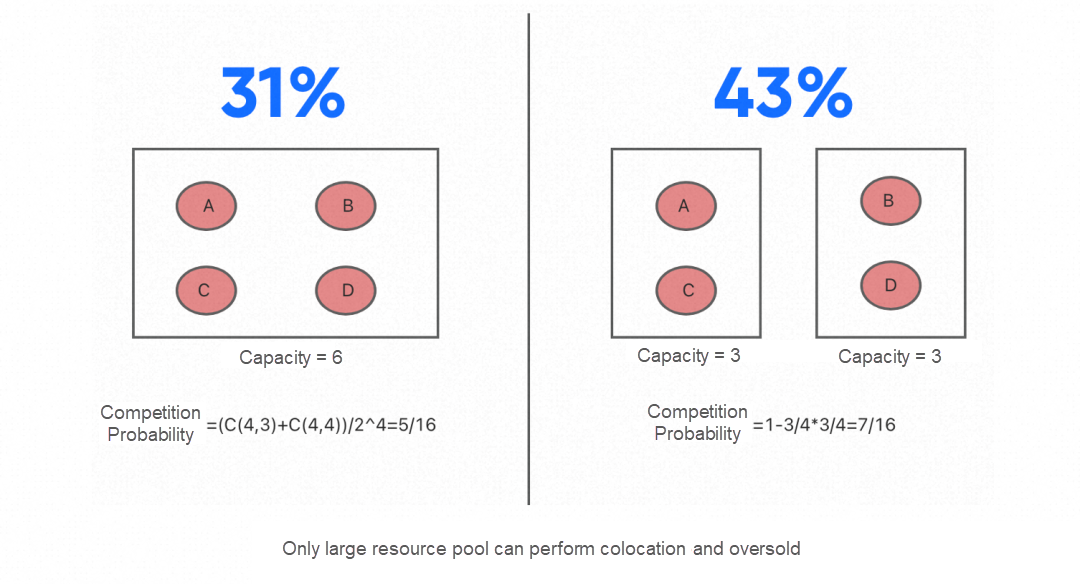

The size of the resource pool is the first factor to consider. The larger the resource pool, the lower the probability of resource competition. Let's quantitatively analyze the probability of competition. Suppose we have 4 applications, where each application has a 50% probability of using 1 resource and a 50% probability of using 2 resources. Let's compare the probability of competition by placing them in one large resource pool versus two small resource pools.
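The comparison can be checked by enumerating all equally likely usage combinations. Pool capacities are not stated in the text, so this sketch assumes 1.5 units per application. That means one pool of 6 units for all four applications, versus two pools of 3 units with two applications each. These capacities and the helper name are assumptions for illustration.

```python
from fractions import Fraction
from itertools import product

USAGE_OPTIONS = (1, 2)  # each application uses 1 or 2 units, each with probability 1/2


def competition_probability(apps_per_pool: int, capacity: int, pools: int) -> Fraction:
    """Probability that at least one pool's total usage exceeds its capacity."""
    outcomes = list(product(USAGE_OPTIONS, repeat=apps_per_pool * pools))
    contended = 0
    for usage in outcomes:
        # Split the combined usage vector into consecutive per-pool slices
        for p in range(pools):
            pool_usage = usage[p * apps_per_pool:(p + 1) * apps_per_pool]
            if sum(pool_usage) > capacity:
                contended += 1
                break
    return Fraction(contended, len(outcomes))


large = competition_probability(apps_per_pool=4, capacity=6, pools=1)
small = competition_probability(apps_per_pool=2, capacity=3, pools=2)
print(large, small)  # 5/16 7/16
```

Under these assumptions, the single large pool competes 31.25% of the time and the two small pools 43.75% of the time, even though total capacity is the same.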

As the analysis shows, the probability of competition in a large resource pool is lower than in small resource pools. An individual business usually has a small load and therefore a small resource pool, so colocation within it is more likely to cause resource competition.

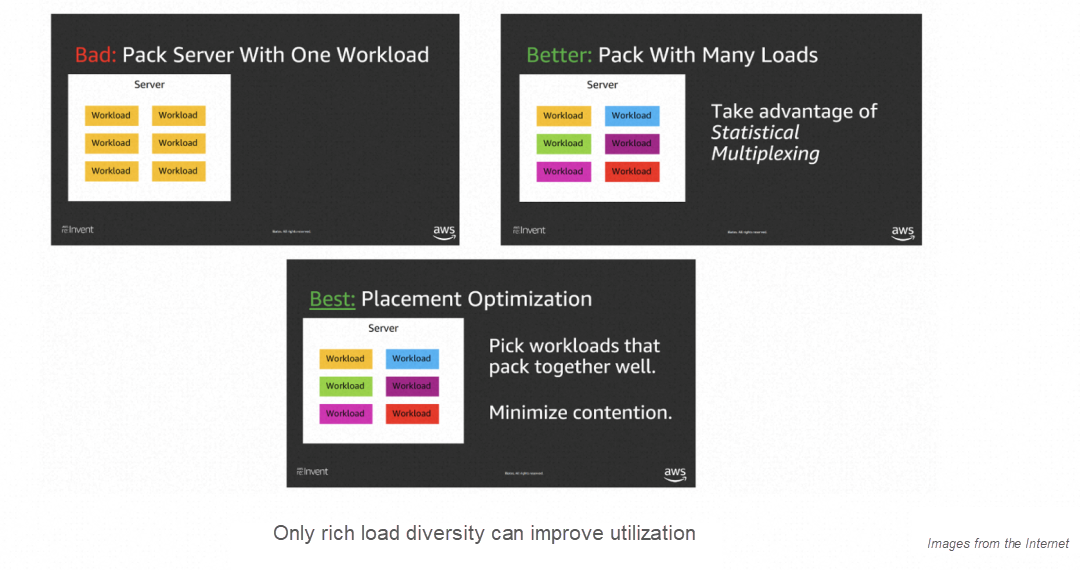

The second factor is the diversity of the workload. The more diverse and complementary the workload, the better the effectiveness of colocation, leading to higher resource utilization. This diversity includes variations in resource requirements, such as CPU-intensive or I/O-intensive workloads, as well as fluctuations in time, such as morning peaks or evening peaks.
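A small numeric illustration of complementary time-of-day peaks. The load series below are hypothetical. The point is that a shared pool only needs to cover the peak of the combined load, which is lower than the sum of the individual peaks.

```python
# Hypothetical load per time slot (night, morning, evening, late night), in resource units
morning_app = [2, 8, 3, 2]
evening_app = [2, 3, 8, 2]


def utilization(load, capacity):
    # Average load divided by provisioned capacity
    return sum(load) / len(load) / capacity


combined = [a + b for a, b in zip(morning_app, evening_app)]
separate_capacity = max(morning_app) + max(evening_app)  # each app sized for its own peak
shared_capacity = max(combined)                          # one pool sized for the combined peak

print(separate_capacity, shared_capacity)  # 16 11
print(f"{utilization(combined, separate_capacity):.0%} vs "
      f"{utilization(combined, shared_capacity):.0%}")
```

If both applications peaked in the same slot, the combined peak would equal the sum of peaks and colocation would bring no gain.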

For specific business applications, if the load lacks diversity, it is difficult to further improve resource utilization.

The final factor is overload migration. When a node is overloaded, some containers must be migrated to other nodes. This migration must be smooth and must not affect the business. In Kubernetes, the scheduler is not aware of application request traffic. Therefore, during overload migration, the application layer must cooperate with mechanisms such as health checks and bringing instances online and offline. If the migration is not timely, requests fail and service quality suffers.

To sum up, the problems with colocation are:

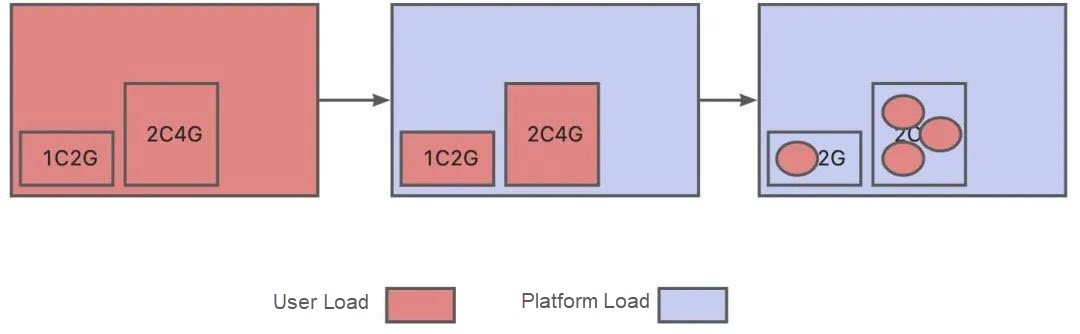

Auto scaling, colocation, and overselling are effective ways to improve resource utilization. However, because of their inherent complexity, business developers must invest considerable effort to achieve good results, and such infrastructure work is not their core competency. Business developers have to go to such lengths to improve resource utilization because they own the machines. Can business developers be freed from machine O&M? Serverless provides a product form that shifts the responsibility of resource management from the user to the platform. As a result, business developers pay only for business requests and can focus on business innovation without worrying about resource utilization.

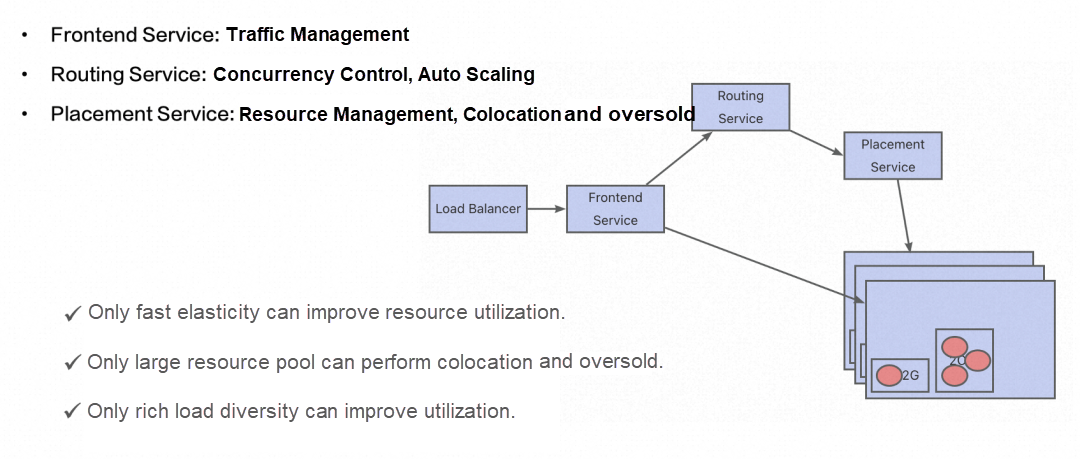

Serverless does not mean there are no servers. Instead, the management, O&M, and utilization of servers are centralized on the platform side. This improves resource utilization by leveraging the platform's resource scale and load diversity. The following figure shows the internal architecture of a Serverless system, where traffic management, auto scaling, and colocation and overselling on the system side improve cluster resource utilization.

From the user side, you can use the capabilities provided by the Serverless platform to improve resource utilization on the business side.

a) Request scheduling [5]: Billing is based on request execution time, and idle time is free, so the billed time utilization of instances is 100%.

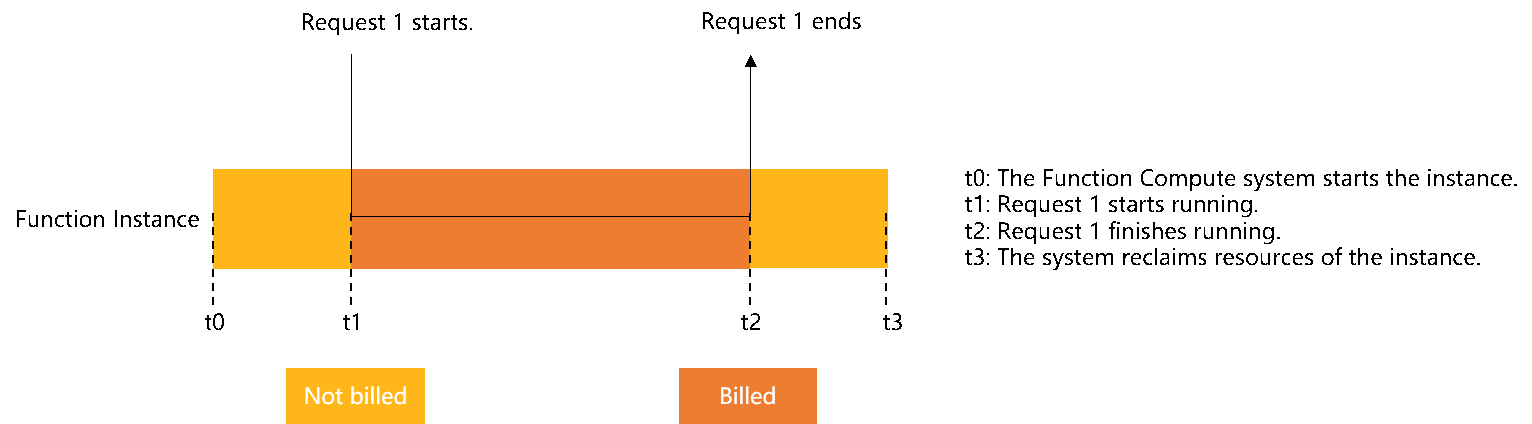

When an on-demand instance processes a single request, the billed execution duration starts from the time when the request arrives at the instance to the time when the request is completely executed.

If an on-demand instance processes multiple requests concurrently, the billed execution duration starts when the first request arrives at the instance and ends when the last request completes. Because concurrent requests share the instance's resources, resource costs are reduced.
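The effect of concurrency on billed time can be sketched by merging request intervals. The assumption here is that billing covers every moment when at least one request is running, which is the union of the request intervals. For a single overlapping batch, this equals the span from the first arrival to the last completion described above. The helper name and request timings are hypothetical.

```python
def billed_seconds(requests):
    """Billed time for one instance, given (arrival, completion) pairs in seconds.

    Assumption: billing covers the union of the intervals during which
    at least one request is running on the instance.
    """
    total = 0.0
    current_start = current_end = None
    for start, end in sorted(requests):
        if current_end is None or start > current_end:
            # Gap before this request: close out the previous busy period
            if current_end is not None:
                total += current_end - current_start
            current_start, current_end = start, end
        else:
            # Overlapping request: extend the current busy period
            current_end = max(current_end, end)
    if current_end is not None:
        total += current_end - current_start
    return total


reqs = [(0, 10), (2, 8), (5, 12)]
print(billed_seconds(reqs))                     # 12.0: one instance, concurrent requests
print(sum(end - start for start, end in reqs))  # 23: one instance per request
```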

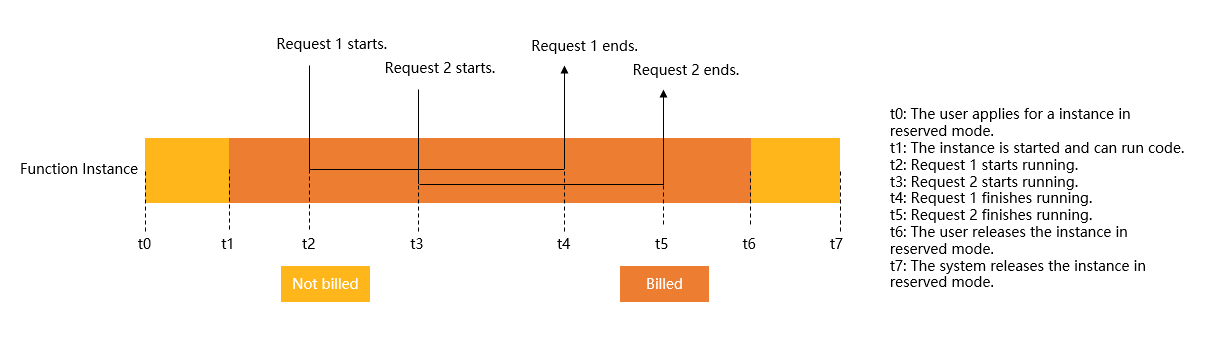

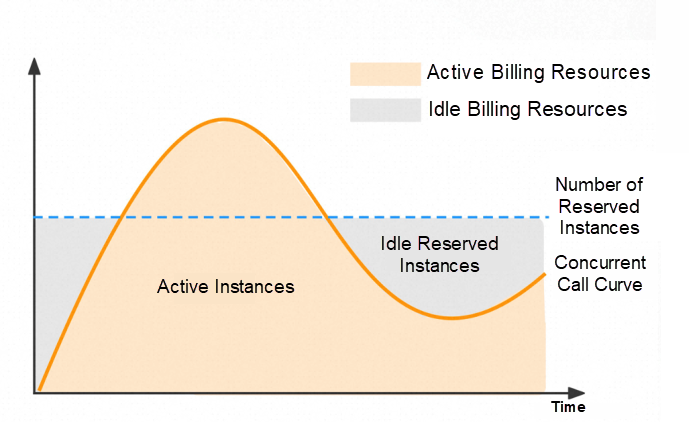

b) Idle billing [6]: To mitigate slow application cold starts, reserved instances support idle billing. When an instance is not processing any requests, it is billed at one tenth of the active-instance rate.
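A rough cost comparison for a reserved instance that is busy only part of the day. Only the 1/10 idle-to-active price ratio comes from the text. The unit price, the 6-hour busy period, and the baseline that charges every second at the active rate are hypothetical.

```python
ACTIVE_PRICE = 1.0              # hypothetical price per active second
IDLE_PRICE = ACTIVE_PRICE / 10  # idle seconds cost one tenth of active seconds


def reserved_cost(active_seconds, idle_seconds):
    # Reserved instance with idle billing: full price while busy, 1/10 while idle
    return active_seconds * ACTIVE_PRICE + idle_seconds * IDLE_PRICE


def always_on_cost(active_seconds, idle_seconds):
    # Hypothetical baseline without idle billing: every second at the active rate
    return (active_seconds + idle_seconds) * ACTIVE_PRICE


active, idle = 6 * 3600, 18 * 3600  # busy 6 hours, idle 18 hours per day
ratio = reserved_cost(active, idle) / always_on_cost(active, idle)
print(f"{ratio:.1%}")
```

In this scenario, the reserved instance costs about a third of the always-on baseline, while staying warm so that requests avoid the application cold start.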

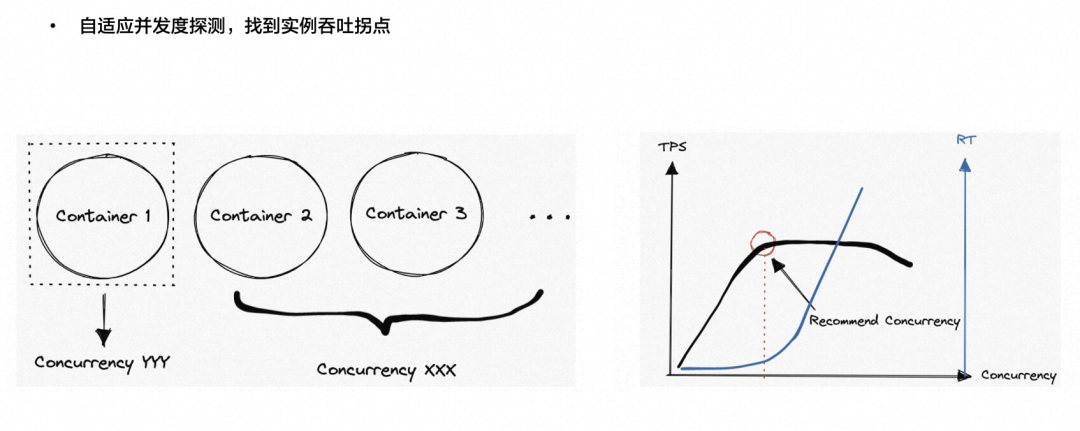

c) Dynamic concurrency: To address the problem of CPU thresholds being set too low, the system dynamically determines the optimal concurrency based on actual traffic by searching for the inflection point of throughput.
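One simple way to search for a throughput inflection point is to raise concurrency until the relative throughput gain drops below a threshold. This is a hypothetical heuristic for illustration, not Function Compute's actual algorithm. The measurements and the `min_gain` threshold are made up.

```python
def pick_concurrency(throughput_by_concurrency, min_gain=0.05):
    """Return the concurrency after which throughput stops growing meaningfully.

    throughput_by_concurrency[i] is the measured requests/s at concurrency i + 1.
    min_gain is the relative gain below which the curve is treated as flat.
    """
    for i in range(1, len(throughput_by_concurrency)):
        prev, cur = throughput_by_concurrency[i - 1], throughput_by_concurrency[i]
        if (cur - prev) / prev < min_gain:
            # prev was measured at concurrency i, the inflection point
            return i
    # Throughput kept growing across all samples: use the highest tested value
    return len(throughput_by_concurrency)


# Hypothetical measurements at concurrency 1..6: throughput saturates around 4
samples = [100, 190, 270, 330, 340, 338]
print(pick_concurrency(samples))
```

Compared with a fixed CPU threshold, a throughput-based search adapts to how each workload actually saturates. In practice the measurements would need smoothing, because real traffic is noisy.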

Because workloads change dynamically, evaluating resource capacity and improving utilization are challenges for business developers. Improving resource utilization through auto scaling, colocation, and overselling can be complex and may not yield ideal results. The Serverless platform shifts the responsibility of resource management from the user to the platform, allowing business developers to focus on core business development. Meanwhile, the platform leverages its resource scale and diverse workloads to improve resource utilization, creating a win-win situation.

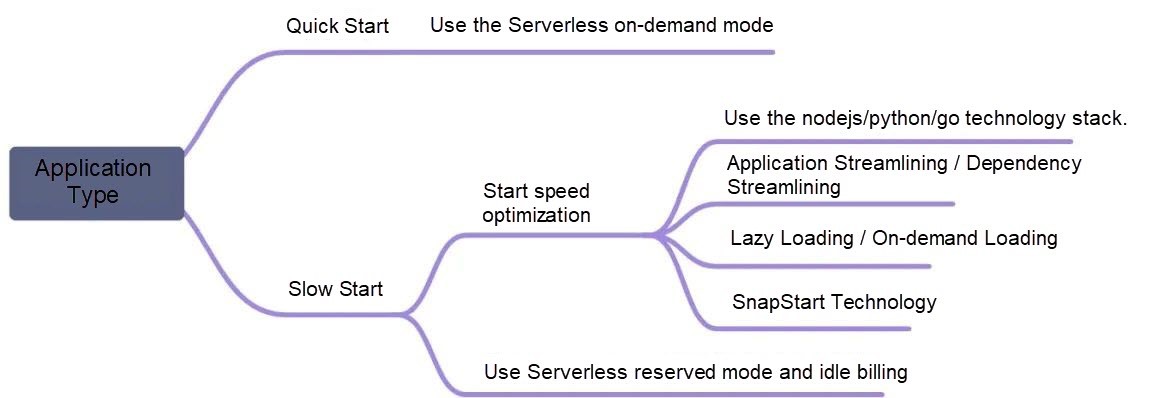

For business developers, different selection paths can be adopted based on the characteristics of their applications:

[1] HPA Policy

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/

[2] Function Compute

https://www.alibabacloud.com/product/function-compute

[3] Stabilization Window

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#default-behavior

[4] resource.request

https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

[5] Request Scheduling

https://www.alibabacloud.com/help/en/function-compute/latest/instance-types-and-instance-modes#p-kwb-hz6-6e3

[6] Idle Mode

https://www.alibabacloud.com/help/en/function-compute/latest/auto-scaling#section-sw6-nzj-rbs
