Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

In the previous article, we mentioned that distributed systems often have centralized roles. As the cluster grows, these centralized roles may become performance bottlenecks that prevent the system from scaling out further.

We then addressed the performance problems of these centralized masters with methods such as Federation.

This raises a question: besides the masters, can the slaves/workers also have performance problems, and is there room to improve their performance?

Look again at the HDFS and MapReduce we mentioned before. Architecturally, once the masters (NameNode and ResourceManager) can be scaled out, the slaves/workers (DataNode and NodeManager) can also scale out steadily. The architecture shows no obvious performance bottlenecks.

Since the architectural view is too coarse-grained, let's examine the execution process to see whether the individual, fine-grained steps have performance problems.

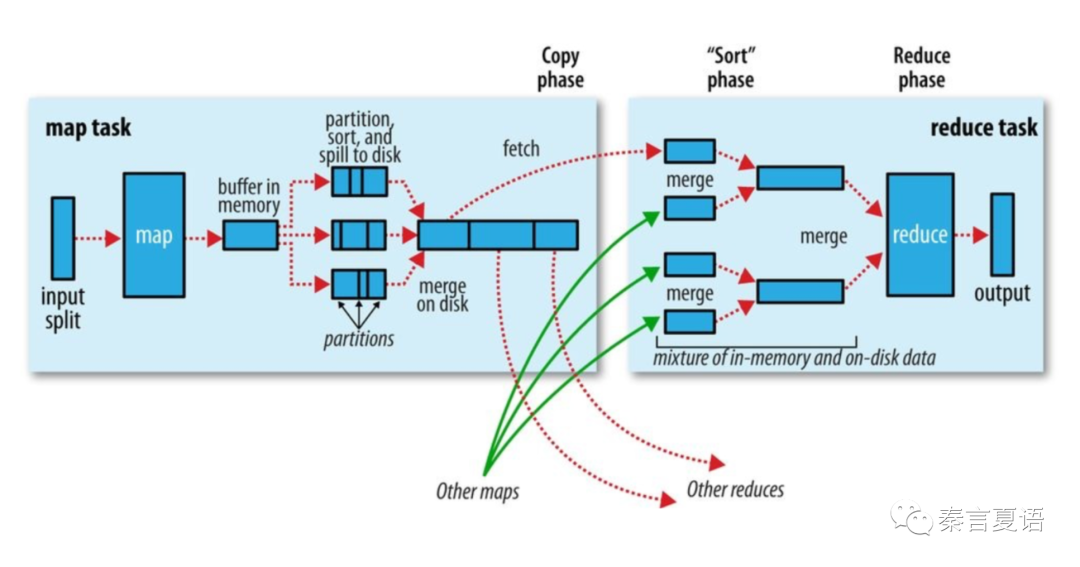

The following figure shows the execution process of MapReduce:

In traditional web and database fields, extensive troubleshooting and analysis have shown that performance bottlenecks mostly occur in I/O. So we need to focus on the I/O operations in the MapReduce execution process.

The following steps are related to I/O:

Most of a job's execution time is spent on these I/O operations, especially disk I/O, which significantly drags down performance.

MapReduce provides many ways to optimize performance, such as merging and splitting the map input, using combiners, and compressing map output.

However, no matter how much you optimize, a large number of I/O operations remain, especially disk I/O operations.

The programming model of MapReduce is relatively simple and rigid. Even slightly more complex processing logic requires multiple MapReduce jobs to run in sequence. For example, WordCount can count the occurrences of each word, but finding the most frequent word requires another MapReduce job.

As a result, the business logic is split into multiple MapReduce jobs that form a workflow. Each job takes the previous job's output as its input, and its own output becomes the input of the next job. This inevitably adds a large amount of I/O, further slowing performance.
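To make that cost concrete, here is a minimal Python sketch of the two-job workflow described above. It is an illustration, not Hadoop code: job 1 is a WordCount that writes its result to a file, and job 2 reads that file back to find the most frequent word. The local temporary file stands in for HDFS, and the function names are hypothetical.

```python
import os
import tempfile
from collections import Counter


def wordcount_job(lines, out_path):
    """Job 1: count words and materialize the result to disk (stands in for HDFS)."""
    counts = Counter(word for line in lines for word in line.split())
    with open(out_path, "w", encoding="utf-8", newline="\n") as f:
        for word, n in counts.items():
            f.write(f"{word}\t{n}\n")
    return os.path.getsize(out_path)  # bytes this job wrote between the two jobs


def most_frequent_job(in_path):
    """Job 2: read job 1's output back from disk and pick the top word."""
    best_word, best_n = None, -1
    with open(in_path, encoding="utf-8") as f:
        for row in f:
            word, n = row.rstrip("\n").split("\t")
            if int(n) > best_n:
                best_word, best_n = word, int(n)
    return best_word, best_n


def run_workflow(lines):
    """Chain the two jobs; the intermediate file is the extra I/O the text describes."""
    with tempfile.TemporaryDirectory() as tmp:
        intermediate = os.path.join(tmp, "wordcount.tsv")
        written = wordcount_job(lines, intermediate)  # disk write by job 1
        result = most_frequent_job(intermediate)      # disk read by job 2
        return result, written


if __name__ == "__main__":
    (word, n), io_bytes = run_workflow(["a b a", "b a c"])
    print(word, n, io_bytes)
```

Every extra job in a longer workflow adds another write and read of this kind, and in a real cluster each HDFS write is also replicated over the network.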

As a result, the idea of solving these problems without MapReduce gradually took hold.

How can we reduce the performance problems caused by disk I/O? Keep data in memory! Caches in traditional architectures have already given us plenty of experience with this approach.

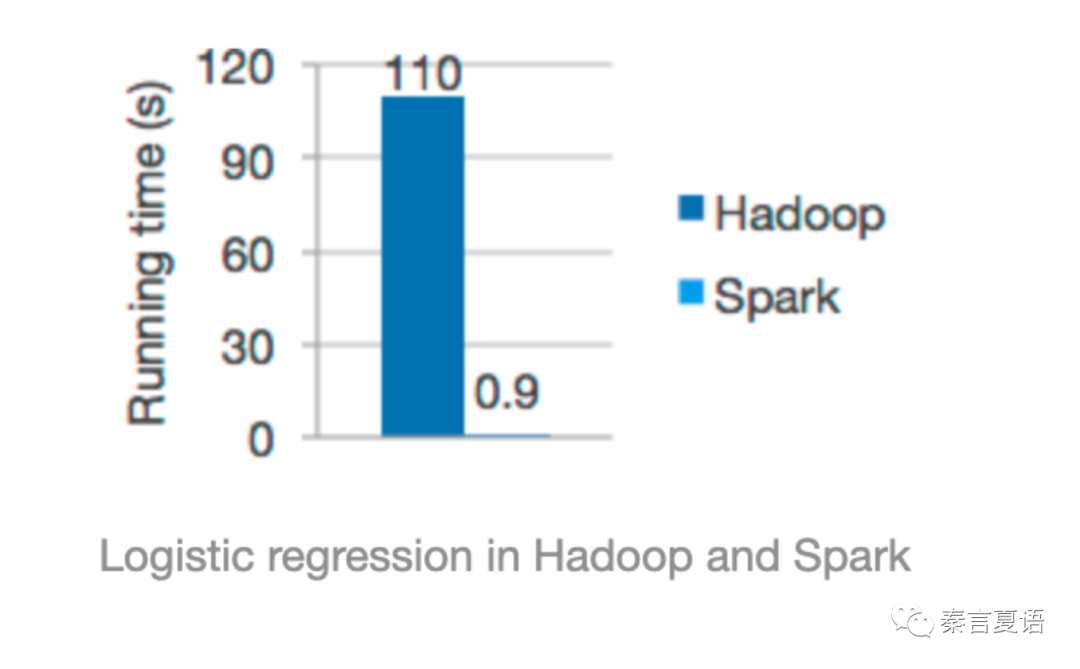

Apache Spark launched under the banner of memory-based computing and quickly earned a strong reputation and wide adoption.

The performance comparison graph above is still displayed on the Spark homepage today.

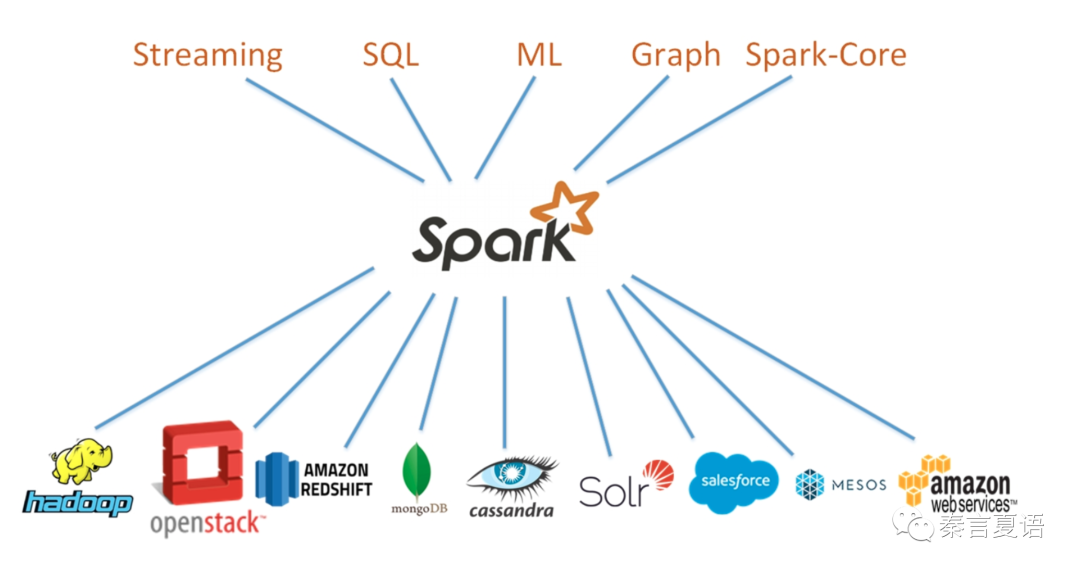

Spark is a very ambitious framework, and it has been steadily realizing those ambitions. Spark aims to be a unified, memory-based computing framework that supports a variety of typical application scenarios and connects to a variety of storage systems.

However, Spark is not the topic of this article, so I will not introduce it systematically. Let's focus on performance. Spark has put great effort into performance optimization and improved many things; for now, let's focus on two key points at the architecture and design level, caching and pipelining:

The first key point is the cache. Spark directly caches data in memory.

The core of Spark is Resilient Distributed Dataset (RDD). All operations revolve around this memory-based distributed data structure.

Memory cannot hold very much data, and even if it could, keeping everything in memory would not be cost-effective. Therefore, Spark supports the following StorageLevels:

Among these levels, the serialized ones reduce memory overhead. Remember to use Kryo instead of Java's native serialization library for better performance. The cost of serialization is extra CPU time, but that overhead is negligible compared with the I/O savings.

You can put an entire RDD into the cache by simply calling `rdd.cache()`.

As you can see, Spark's cache is coarse-grained: unlike a general-purpose cache such as Redis, it does not offer fine-grained operations on specific data structures.


Another difference from caches such as Redis is that Spark's cache should not be used indiscriminately: caching all data would not be cost-effective.

Therefore, Spark cache is generally used in the following two scenarios:

What these two scenarios have in common is that the same data is accessed multiple times, so the performance gain justifies the cost. This is also an important criterion for deciding whether to cache data.
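Why repeated access matters can be sketched with a tiny lazy dataset in Python. `LazyDataset` is a hypothetical stand-in for an RDD, not Spark's API: without `cache()`, every action re-evaluates the whole chain of transformations; after `cache()`, the first action materializes the records in memory and later actions reuse them.

```python
class LazyDataset:
    """Hypothetical RDD stand-in: a source plus a chain of lazy transformations."""

    def __init__(self, source, transforms=()):
        self._source = source              # callable that produces the raw records
        self._transforms = list(transforms)
        self._cache_enabled = False
        self._cached = None
        self.compute_count = 0             # how many times the chain was evaluated

    def map(self, fn):
        # Transformations are lazy: they only extend the chain.
        return LazyDataset(self._source, self._transforms + [fn])

    def cache(self):
        # Like Spark, caching is lazy: data is kept after the first computation.
        self._cache_enabled = True
        return self

    def _compute(self):
        if self._cached is not None:
            return self._cached
        self.compute_count += 1
        records = list(self._source())
        for fn in self._transforms:
            records = [fn(r) for r in records]
        if self._cache_enabled:
            self._cached = records
        return records

    # Actions trigger computation.
    def count(self):
        return len(self._compute())

    def total(self):
        return sum(self._compute())


if __name__ == "__main__":
    plain = LazyDataset(lambda: range(5)).map(lambda x: x * x)
    plain.count(), plain.total()
    cached = LazyDataset(lambda: range(5)).map(lambda x: x * x).cache()
    cached.count(), cached.total()
    print(plain.compute_count, cached.compute_count)
```

With two actions, the uncached dataset is computed twice and the cached one once; if the data were only used once, caching would just cost memory without any gain.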

Now, let’s look at pipelining.

Spark's flexible programming model handles complex business logic better, so there is no need to chain jobs together as in MapReduce.

Spark splits an application into stages, using shuffle operations as the boundaries between them. Within each stage, data is processed as a pipeline.
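The stage-splitting rule can be illustrated with a small Python sketch. The operation names and the set of shuffle operations are simplified assumptions; real Spark derives stages from the RDD dependency graph (narrow versus wide dependencies), not from a flat list of operation names.

```python
# Operations assumed to require a shuffle (redistributing data across partitions).
SHUFFLE_OPS = {"groupByKey", "reduceByKey", "join", "repartition", "sortByKey"}


def split_into_stages(ops):
    """Cut a linear chain of operations into stages at each shuffle boundary.

    A shuffle operation starts a new stage: the operations before it form the
    previous stage, and it begins the next one with the shuffled data.
    """
    stages, current = [], []
    for op in ops:
        if op in SHUFFLE_OPS and current:
            stages.append(current)
            current = []
        current.append(op)
    if current:
        stages.append(current)
    return stages


if __name__ == "__main__":
    print(split_into_stages(["textFile", "flatMap", "map", "reduceByKey", "map"]))
```

For the WordCount-like chain above, this yields two stages: `textFile`, `flatMap`, and `map` are pipelined together, and `reduceByKey` starts the second stage.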

Take `ds.map().filter().map()` as an example: each row of `ds` goes through the map, filter, and map steps in succession before the result is written to memory or disk. MapReduce, by contrast, would run this as two jobs and write the data to disk after each one.
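The difference can be sketched in Python with generators. This is an analogy, not Spark internals: the pipelined version pushes each record through map, filter, and map before touching the next record, while the stage-by-stage version stores the full output of every step before starting the next, the in-memory analogue of MapReduce writing each job's output to disk. The `TRACE` list and the helper functions are hypothetical instrumentation.

```python
TRACE = []  # records the order in which each step touches a record


def add_one(x):
    TRACE.append(f"map1({x})")
    return x + 1


def is_even(x):
    TRACE.append(f"filter({x})")
    return x % 2 == 0


def times_ten(x):
    TRACE.append(f"map2({x})")
    return x * 10


def pipelined(records, f, pred, g):
    """Spark-style: each record flows through map -> filter -> map before the next one."""
    mapped = (f(r) for r in records)
    kept = (r for r in mapped if pred(r))
    return (g(r) for r in kept)


def materialized(records, f, pred, g):
    """MapReduce-style: finish each step and store its full output before the next.

    Also returns how many intermediate records were stored between steps.
    """
    step1 = [f(r) for r in records]
    step2 = [r for r in step1 if pred(r)]
    step3 = [g(r) for r in step2]
    return step3, len(step1) + len(step2)


if __name__ == "__main__":
    print(list(pipelined([1, 2, 3], add_one, is_even, times_ten)), TRACE)
    TRACE.clear()
    print(materialized([1, 2, 3], add_one, is_even, times_ten), TRACE)
```

With input `[1, 2, 3]`, both versions return `[20, 40]`. The pipelined trace interleaves `map1`, `filter`, and `map2` record by record, whereas the materialized version runs all `map1` calls, then all `filter` calls, and stores 5 intermediate records along the way.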

Therefore, with the two technologies of cache and pipelining, Spark can significantly reduce I/O operations and bring about a significant performance improvement.

However, Spark's cache is application-level: it cannot be shared across applications, let alone used by non-Spark programs. This undoubtedly limits its power. The only way around this is to push caching down to the file system level.

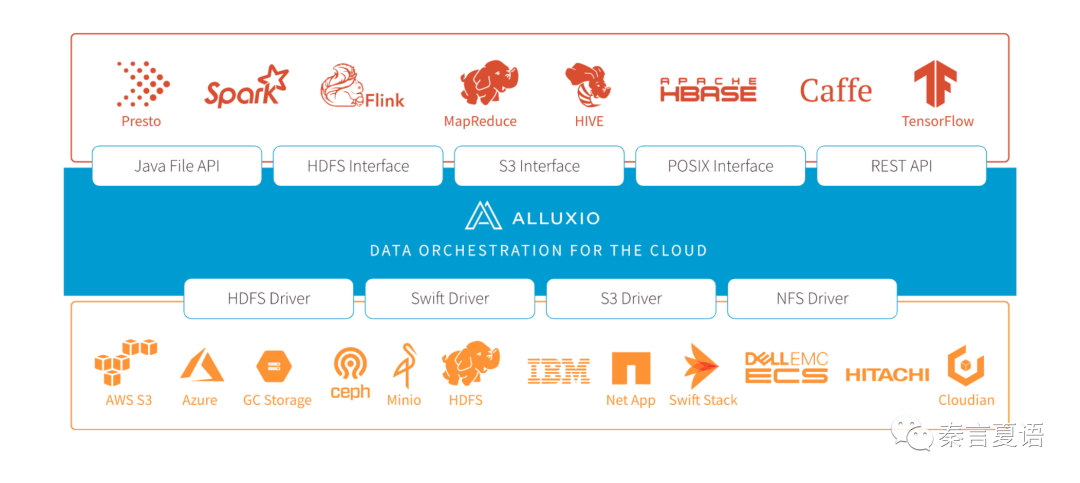

Then came Alluxio (formerly known as Tachyon). As a sibling of Spark from AMPLab, Alluxio has the same ambition as Spark.

Alluxio is intended to be a unified memory-based storage system that supports various distributed computing frameworks and connects to a variety of storage backends.

I won't introduce Alluxio in detail here, but will focus on the parts related to the topic of this article.

Since Alluxio aims to be a bridge and proxy, the first thing it needs is a unified abstraction.

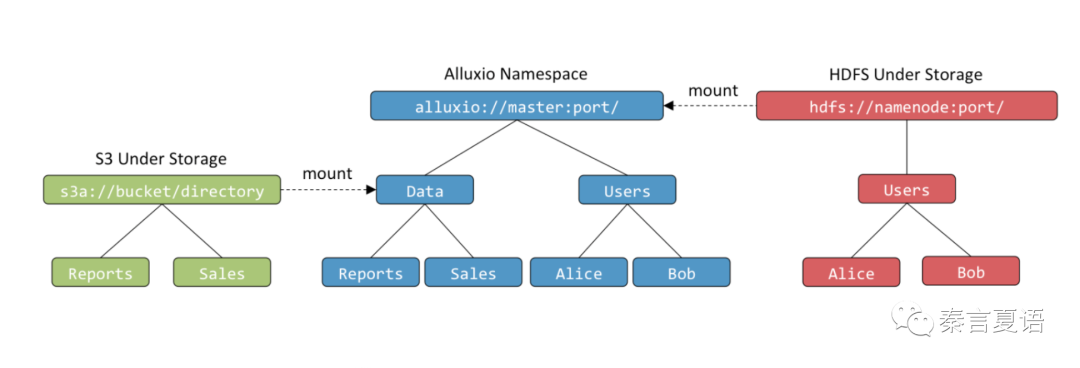

Alluxio provides a unified namespace.

It looks very similar to the HDFS Federation discussed in the previous article, because both connect different storage backends by mounting them onto directories. In Alluxio, these backends are called Under File Storage (UFS).

A client accesses data through a path such as `alluxio://master:port/A/B`. The corresponding backend path may be `hdfs://namenode:port/A/B` or `s3a://A/B`, but the client neither knows nor needs to know which. Alluxio handles the diversity and complexity of the backend storage systems.
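Resolving an Alluxio path to its UFS location is essentially a longest-prefix match over a mount table. The Python sketch below assumes a hypothetical mount table (`namenode:8020` and `bucket` are placeholders) and is not Alluxio's actual implementation.

```python
import posixpath
from urllib.parse import urlparse

# Hypothetical mount table: Alluxio path prefix -> UFS URI prefix.
MOUNT_TABLE = {
    "/": "hdfs://namenode:8020/",
    "/s3": "s3a://bucket/",
}


def resolve(alluxio_path, mounts=MOUNT_TABLE):
    """Map an Alluxio path or URI to a UFS URI using the longest matching mount point."""
    if alluxio_path.startswith("alluxio://"):
        alluxio_path = urlparse(alluxio_path).path  # drop scheme and master address
    path = posixpath.normpath(alluxio_path)
    best = None
    for mount_point in mounts:
        # Match whole path components only, so "/s3x" does not match "/s3".
        if mount_point == "/" or path == mount_point or path.startswith(mount_point + "/"):
            if best is None or len(mount_point) > len(best):
                best = mount_point
    if best is None:
        raise ValueError(f"no mount point for {alluxio_path}")
    relative = path[len(best):].lstrip("/")
    return mounts[best].rstrip("/") + "/" + relative


if __name__ == "__main__":
    print(resolve("alluxio://master:19998/A/B"))
    print(resolve("/s3/A/B"))
```

The client only ever sees the Alluxio path; swapping the backend behind a mount point changes the table, not the client code.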

The unified proxy layer that Alluxio provides only addresses complexity. Since Alluxio is memory-based, it should also deliver performance advantages.

In this respect, Alluxio acts like a cache. A small amount of hot data is stored on its workers to provide high performance, while the large amount of cold data remains in UFS, and Alluxio passes requests for that data through to UFS.

To save costs, Alluxio provides a heterogeneous, multi-tier cache. It is somewhat similar to the HDFS tiered storage discussed earlier, but with more cache-related operations.

Alluxio provides three types of storage media: memory (MEM), solid-state drives (SSD), and hard disk drives (HDD).

For example, the following configuration sets up two tiers on each worker and assigns a storage medium, path, and quota to each directory:

```properties
# configure 2 tiers in Alluxio
alluxio.worker.tieredstore.levels=2
# the first (top) tier to be a memory tier
alluxio.worker.tieredstore.level0.alias=MEM
# define `/mnt/ramdisk` to be the file path to the first tier
alluxio.worker.tieredstore.level0.dirs.path=/mnt/ramdisk
# define MEM to be the medium type of the ramdisk directory
alluxio.worker.tieredstore.level0.dirs.mediumtype=MEM
# set the quota for the ramdisk to be `100GB`
alluxio.worker.tieredstore.level0.dirs.quota=100GB
# set the ratio of high watermark on top layer to be 90%
alluxio.worker.tieredstore.level0.watermark.high.ratio=0.9
# set the ratio of low watermark on top layer to be 70%
alluxio.worker.tieredstore.level0.watermark.low.ratio=0.7
# configure the second tier to be a hard disk tier
alluxio.worker.tieredstore.level1.alias=HDD
# configure 3 separate file paths for the second tier
alluxio.worker.tieredstore.level1.dirs.path=/mnt/hdd1,/mnt/hdd2,/mnt/hdd3
# define HDD to be the medium type of the second tier
alluxio.worker.tieredstore.level1.dirs.mediumtype=HDD,HDD,HDD
# define the quota for each of the 3 file paths of the second tier
alluxio.worker.tieredstore.level1.dirs.quota=2TB,5TB,500GB
# set the ratio of high watermark on the second layer to be 90%
alluxio.worker.tieredstore.level1.watermark.high.ratio=0.9
# set the ratio of low watermark on the second layer to be 70%
alluxio.worker.tieredstore.level1.watermark.low.ratio=0.7
```

Since it is a cache, it must evict data. Alluxio assumes that storage performance decreases from level 0 downward and uses this ordering as the basis for cache eviction.
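A simplified model of watermark-based eviction across tiers is sketched below in Python. It assumes LRU ordering, that eviction starts when a tier's usage exceeds its high watermark and stops once usage falls to the low watermark, and that a block evicted from one tier is moved down to the next tier (and leaves Alluxio once it falls off the last tier). The class names and sizes are hypothetical; the 90% and 70% defaults mirror the watermark ratios in the configuration above, expressed as integer percentages to avoid floating-point edge cases. Real Alluxio workers apply configurable policies and reserve space asynchronously.

```python
from collections import OrderedDict


class Tier:
    def __init__(self, name, capacity, high_pct=90, low_pct=70):
        self.name = name
        self.capacity = capacity
        self.high_pct = high_pct  # start evicting above this usage percentage
        self.low_pct = low_pct    # stop evicting at or below this usage percentage
        self.blocks = OrderedDict()  # block_id -> size, least recently used first

    def used(self):
        return sum(self.blocks.values())


class TieredStore:
    def __init__(self, tiers):
        self.tiers = tiers  # ordered from fastest (level 0) to slowest

    def put(self, block_id, size, level=0):
        tier = self.tiers[level]
        tier.blocks[block_id] = size
        tier.blocks.move_to_end(block_id)
        if tier.used() * 100 > tier.high_pct * tier.capacity:
            self._evict(level)

    def get(self, block_id):
        for tier in self.tiers:
            if block_id in tier.blocks:
                tier.blocks.move_to_end(block_id)  # mark as recently used
                return tier.name
        return None  # not cached: the caller would read from UFS

    def _evict(self, level):
        tier = self.tiers[level]
        while tier.blocks and tier.used() * 100 > tier.low_pct * tier.capacity:
            victim, size = tier.blocks.popitem(last=False)  # least recently used
            if level + 1 < len(self.tiers):
                self.put(victim, size, level + 1)  # demote to the next, slower tier
            # otherwise the block leaves Alluxio entirely


if __name__ == "__main__":
    mem, hdd = Tier("MEM", 100), Tier("HDD", 1000)
    store = TieredStore([mem, hdd])
    for block in "abc":
        store.put(block, 30)
    store.get("a")       # "a" becomes the most recently used block
    store.put("d", 30)   # MEM exceeds 90%, so LRU blocks move to HDD
    print(list(mem.blocks), list(hdd.blocks))
```

Here MEM ends up holding `a` and `d`, while `b` and `c` are demoted to HDD: evicting down to the low watermark rather than just below the high one leaves headroom, so eviction does not run on every single write.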

The following cache eviction policies are supported:

In addition, after so many previous articles on data consistency, you may be paying closer attention to it by now. How can we ensure data consistency between Alluxio and UFS?

It depends on the situation.

If data is written directly to UFS, bypassing Alluxio, inconsistency is almost inevitable, and Alluxio cannot detect it automatically. Even if it tried, it could not do so in a timely manner.

Alluxio provides the `checkConsistency` command to check the consistency of specified directories. When it finds inconsistencies, it reports them, and the administrator decides whether to synchronize the directory manually. Alternatively, you can add the `-r` option to repair them automatically.
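Conceptually, a consistency check compares Alluxio's metadata with what actually exists in UFS. The Python sketch below models both sides as hypothetical dictionaries mapping paths to file lengths. It does not reproduce `checkConsistency`'s real logic or output; the `repair` flag only mimics the idea behind `-r`, under the assumption that every file is persisted and UFS is treated as the source of truth.

```python
def check_consistency(alluxio_meta, ufs_listing, repair=False):
    """Return the sorted paths whose metadata differs between Alluxio and UFS.

    Both arguments map path -> file length (a simplification; a real check
    would also compare modification times and other attributes).
    """
    paths = set(alluxio_meta) | set(ufs_listing)
    inconsistent = sorted(p for p in paths if alluxio_meta.get(p) != ufs_listing.get(p))
    if repair:
        for p in inconsistent:
            if p in ufs_listing:
                alluxio_meta[p] = ufs_listing[p]  # reload metadata from UFS
            else:
                alluxio_meta.pop(p, None)         # file no longer exists in UFS
    return inconsistent


if __name__ == "__main__":
    alluxio = {"/A/x": 10, "/A/y": 20, "/A/z": 5}
    ufs = {"/A/x": 10, "/A/y": 25, "/A/w": 7}  # /A/y rewritten, /A/w added, /A/z deleted
    print(check_consistency(dict(alluxio), ufs))
```

A periodic check like this can only catch drift after the fact, which is why the text treats it as a fallback rather than a guarantee.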

However, this approach is not safe after all: the likelihood of data inconsistency remains high.

Drawing on how consistency is ensured with general-purpose caches such as Redis, Alluxio offers best-practice advice.

For the first piece of advice in particular, Alluxio provides several read and write policies that offer different persistence, consistency, and performance guarantees.

Alluxio provides the following read policies:

Strictly speaking, apart from Cache Skip, the client has no choice: Alluxio automatically chooses the appropriate path based on the metadata.
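The read path can be sketched as a fall-through lookup: the local worker first, then other workers, then UFS, with Cache Skip bypassing the cache entirely. This Python model is only an illustration; the dictionaries standing in for workers and UFS, and the `cache_skip` parameter, are hypothetical, and copying remotely hit or missed blocks to the local worker is an assumption about the default caching behavior.

```python
def read_block(block_id, local, remotes, ufs, cache_skip=False):
    """Return (data, path_taken).

    `local` and each entry of `remotes` are dicts acting as worker caches;
    `ufs` is a dict acting as the under storage.
    """
    if cache_skip:
        return ufs[block_id], "cache skip"   # the client's one real choice: bypass the cache
    if block_id in local:
        return local[block_id], "local cache hit"
    for worker in remotes:
        if block_id in worker:
            data = worker[block_id]
            local[block_id] = data           # assumption: keep a copy on the local worker
            return data, "remote cache hit"
    data = ufs[block_id]
    local[block_id] = data                   # cache the block on the local worker
    return data, "cache miss"


if __name__ == "__main__":
    ufs = {"b1": b"x", "b2": b"y"}
    local, remote = {}, {"b2": b"y"}
    for block in ("b1", "b1", "b2"):
        print(block, read_block(block, local, [remote], ufs)[1])
```

Reading `b1` twice shows a cache miss followed by a local hit, and `b2` is served from the remote worker; the client never chooses among these paths itself.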

Due to space limitations, we will only look at the GIF of Remote Cache Hit:

Alluxio provides the following write policies:

Must Cache and Async Through offer weak persistence guarantees and may lose data, so Alluxio provides a replication mechanism that writes data to multiple workers.
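The write policies differ in where data lands before a write returns. The Python sketch below models them with in-memory dictionaries. The policy names follow Alluxio's write types (`MUST_CACHE`, `CACHE_THROUGH`, `THROUGH`, `ASYNC_THROUGH`), but everything else is a hypothetical simplification, including the `replicas` parameter and the explicit `persist_pending()` call that stands in for background persistence.

```python
from enum import Enum


class WriteType(Enum):
    MUST_CACHE = "must_cache"        # workers only; lost if those workers fail
    CACHE_THROUGH = "cache_through"  # workers and UFS, synchronously
    THROUGH = "through"              # UFS only, not cached
    ASYNC_THROUGH = "async_through"  # workers now, UFS later


class Cluster:
    def __init__(self, num_workers):
        self.workers = [dict() for _ in range(num_workers)]
        self.ufs = {}
        self.pending = []  # (path, data) waiting for asynchronous persistence

    def write(self, path, data, write_type, replicas=1):
        if write_type in (WriteType.MUST_CACHE, WriteType.CACHE_THROUGH, WriteType.ASYNC_THROUGH):
            for worker in self.workers[:replicas]:  # replicate across several workers
                worker[path] = data
        if write_type in (WriteType.CACHE_THROUGH, WriteType.THROUGH):
            self.ufs[path] = data
        elif write_type is WriteType.ASYNC_THROUGH:
            self.pending.append((path, data))

    def persist_pending(self):
        """Stand-in for the background job that persists ASYNC_THROUGH data to UFS."""
        for path, data in self.pending:
            self.ufs[path] = data
        self.pending.clear()

    def fail_worker(self, index):
        self.workers[index].clear()  # simulate losing a worker's cached data

    def readable(self, path):
        return path in self.ufs or any(path in w for w in self.workers)


if __name__ == "__main__":
    cluster = Cluster(3)
    cluster.write("/a", b"1", WriteType.MUST_CACHE)
    cluster.write("/b", b"2", WriteType.MUST_CACHE, replicas=2)
    cluster.fail_worker(0)
    print(cluster.readable("/a"), cluster.readable("/b"))
```

A single-copy `MUST_CACHE` write is lost when its worker fails, while the two-replica write survives; the same reasoning covers `ASYNC_THROUGH` data during the window before it is persisted.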

Due to space limitations, we will only look at the GIF of Async Through:

From the read and write processes above, it is easy to see that Alluxio's performance improvements rest on two insights:

For the first, a multi-tier cache is necessary, and the share of each tier can be adjusted to fit your budget.

For the second, Alluxio recommends deploying its workers together with the compute nodes. The performance advantage is even greater when UFS is in a remote data center.

Spark has alleviated performance problems at the computing level by reducing I/O operations, especially disk I/O, and Alluxio has significantly improved storage performance, so MapReduce's performance shortcomings seem to have been addressed well.

However, these two memory-based solutions are relatively coarse-grained. Because memory is limited, they cannot solve every performance problem.

Moreover, architecture-level improvements like these overlook details of the execution process, and much optimization work remains to be done in those details.

In the next article, we will discuss the Shuffle operation, one of the main culprits of slowing down performance.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!
