Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

As I mentioned at the beginning of the series, one of the two core problems distributed systems have to solve is that programs run too slowly.

I have written nearly ten articles on how to make distributed programs run faster, covering the fine-tuning of shuffle and join as well as systematic optimization through RBO and CBO (rule-based and cost-based optimization). I also introduced HBase, which is closely tied to online databases, so that these hard-won gains are not lost at the final step: reading the data.

However, is the meaning of running fast limited to this?

There are two metrics for measuring a program's performance:

Throughput measures a program's processing capacity from a macroscopic perspective: the amount of data it can process per unit of time. Latency measures, from a microscopic perspective, the time the program takes to process a single piece of data.

A program may achieve throughput far beyond the expected megabits per second and yet have an average latency of ten seconds, several times the expected value. It would be inappropriate to call such a program high-performance or fast.
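To make the two metrics concrete, here is a minimal Python sketch that computes both from per-record timestamps. The `Record` type and both workloads are hypothetical: 1,000 records arrive every 10 ms, and in one run the program holds every result until t = 10 s, while in the other it finishes each record 1 ms after arrival.

```python
from dataclasses import dataclass


@dataclass
class Record:
    arrival: float     # seconds: when the record entered the system
    completion: float  # seconds: when its result was output


def throughput(records: list[Record]) -> float:
    """Records completed per second over the whole observed time span."""
    start = min(r.arrival for r in records)
    end = max(r.completion for r in records)
    return len(records) / (end - start)


def average_latency(records: list[Record]) -> float:
    """Mean time from arrival to completion, per record."""
    return sum(r.completion - r.arrival for r in records) / len(records)


# Hypothetical workloads: 1,000 records arriving every 10 ms.
held = [Record(i * 0.01, 10.0) for i in range(1000)]                    # results held until t = 10 s
immediate = [Record(i * 0.01, i * 0.01 + 0.001) for i in range(1000)]  # done 1 ms after arrival

for name, recs in (("held", held), ("immediate", immediate)):
    print(f"{name}: {throughput(recs):.1f} records/s, "
          f"avg latency {average_latency(recs):.4f} s")
```

Both runs show roughly 100 records per second, yet the average latency is about 5 seconds in the first and about 1 ms in the second, which is why throughput alone cannot tell us how fast a program is.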

We have spent a lot of time talking about improving the throughput of programs. In this article, let's take a look at the efforts made by distributed systems in terms of latency.

As mentioned above, latency measures performance from a microscopic perspective. Shifting the viewpoint from the processing program to the data itself, latency is the time a piece of data takes from entering the system to finishing processing.

We talked about MapReduce, Hive, and Spark earlier. All of them process files, which can be regarded as collections of many records (strictly speaking, data does not always come as discrete records, but we ignore that here for ease of discussion).

The very process of collecting data into files drags down latency: a record that arrives at the start of an hour must wait until the hourly file is complete before any processing can begin.

Therefore, to reduce latency, we must change the way data is ingested so that it can be processed sooner.

For example, if we want to count the number of tweets on Twitter, instead of writing a file every hour and processing it with Spark, we should pull tweets in real time and feed them to the processing program immediately.

Following this idea, a program should not wait for other data to be ready before processing. Each piece of data should be processed as soon as possible, ideally one record at a time, and the results should be published to the outside world right away.

As such, from data readiness to data processing to result output, the entire chain is as real-time as possible. Thus, the overall latency can be reduced.

This processing mode is called real-time data processing. Viewed from a macro perspective, the data flows continuously like a river, so it is also called streaming data processing, or stream computing.

Writing a stream computing program is simple. All you need is a loop that continuously pulls data from the source, processes it immediately, and outputs the result immediately. In any mainstream language, this takes a few dozen lines of code at most.
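The loop described above can be sketched in a few lines of Python, using the tweet-counting example. `tweet_source` is a hypothetical stand-in for a real feed; a production program would read from a message queue or an API instead, and this single-process loop deliberately ignores the distributed concerns discussed below.

```python
import itertools
from typing import Callable, Iterable, Iterator


def tweet_source() -> Iterator[str]:
    """Hypothetical unbounded source standing in for a live tweet feed."""
    for n in itertools.count():
        yield f"tweet #{n}"


def run_stream(source: Iterable[str], emit: Callable[[int, str], None]) -> int:
    """Pull records one at a time, process each immediately, and output immediately."""
    count = 0
    for tweet in source:    # pull: take the next record as soon as it is available
        count += 1          # process: update the running tweet count
        emit(count, tweet)  # output: publish the fresh result right away
    return count


# The real source never ends; cap it at 5 records so this demo terminates.
run_stream(itertools.islice(tweet_source(), 5),
           lambda count, tweet: print(f"count={count} after {tweet}"))
```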

However, in a distributed setting, you will inevitably run into various problems (such as performance, availability, stability, and consistency), many of which we discussed in previous articles.

There is no need to fall into the same pits again; we can simply follow the path MapReduce paved.

Then came Apache Storm.

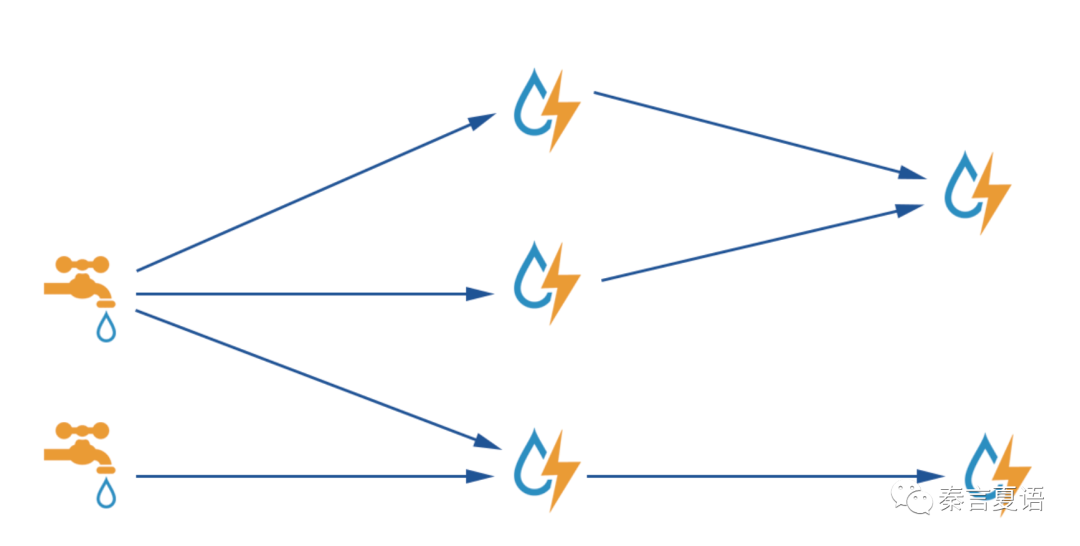

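The figure above is a schematic diagram of a typical Storm program, which Storm calls a Topology; it is the equivalent of an application (App) in MapReduce. Let's take the classic WordCount as an example: a spout reads sentences from the data source and emits them as tuples, one bolt splits each sentence into words, and a second bolt counts the occurrences of each word.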
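To make the WordCount dataflow concrete, here is a single-process Python sketch of the topology's shape: a spout emitting sentences, a bolt splitting them into words, and a bolt keeping running counts. This is not Storm's API (real topologies are usually written in Java against Storm's spout and bolt interfaces and run in parallel across workers); all function and class names here are hypothetical.

```python
from collections import Counter
from typing import Iterator


def sentence_spout(sentences: list[str]) -> Iterator[str]:
    """Spout: reads from a data source and emits one tuple (a sentence) at a time."""
    yield from sentences


def split_bolt(sentences: Iterator[str]) -> Iterator[str]:
    """Bolt 1: splits each incoming sentence into words and emits each word."""
    for sentence in sentences:
        yield from sentence.lower().split()


class CountBolt:
    """Bolt 2: keeps a running count per word and emits the updated count."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def process(self, words: Iterator[str]) -> Iterator[tuple[str, int]]:
        for word in words:
            self.counts[word] += 1
            yield word, self.counts[word]


# Wire the hypothetical "topology": spout -> split bolt -> count bolt.
counter = CountBolt()
topology = counter.process(split_bolt(sentence_spout(
    ["the cow jumped over the moon", "the man went to the store"])))
for word, count in topology:
    print(word, count)
```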

Like MapReduce, Storm provides an imperative API, which allows us to implement sophisticated operations.

However, as with MapReduce, this comes with low development efficiency, and Storm cannot benefit from the kind of performance optimizations that Hive brought to MapReduce.

Storm keeps evolving and has begun to offer experimental SQL support, but so far it remains immature.

Another point worth noting is data consistency, which we've spent considerable time discussing previously.

Storm provides an optional acknowledgment (ACK) mechanism to ensure that every message is processed. Without acking, Storm offers an at-most-once guarantee; with acking enabled, it offers at-least-once.
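The difference between the two guarantees shows up when a failure hits at the wrong moment. The toy Python simulation below is a sketch under simplifying assumptions, not Storm's actual acker (which tracks tuple trees and replays on timeout): without replay, a message lost before reaching the sink is never counted; with replay, a message whose ack was lost after the sink had already applied it is counted twice. The `deliver` function is hypothetical.

```python
def deliver(messages: list[str], crash_at: int, crash_after_write: bool, replay: bool) -> int:
    """Return how many times the downstream sink counted a message.

    crash_at:          index of the message whose first attempt fails.
    crash_after_write: if True, the sink applied the message before the ack was lost;
                       if False, the failure happened before the sink saw it.
    replay:            True models at-least-once (re-send un-acked messages);
                       False models at-most-once (fire and forget).
    """
    total = 0               # state held by the downstream sink
    crash_used = False
    for i, _msg in enumerate(messages):
        while True:
            if i == crash_at and not crash_used:
                crash_used = True
                if crash_after_write:
                    total += 1  # the write landed, but the ack never reaches the source
                if replay:
                    continue    # at-least-once: the source times out and re-sends
                break           # at-most-once: the source never re-sends
            total += 1          # normal path: write, then ack
            break
    return total


messages = [f"m{i}" for i in range(5)]
print("at-most-once, lost before write:", deliver(messages, 2, crash_after_write=False, replay=False))
print("at-least-once, ack lost after write:", deliver(messages, 2, crash_after_write=True, replay=True))
```

Neither result equals the true count of 5, which is exactly the gap that exactly-once is meant to close.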

This is not good enough because everyone wants exactly-once (I have previewed this topic many times, and we will discuss it later).

As a result, Storm introduced Trident, a higher-level abstraction built on top of its core. Trident was designed with more than this in mind, and the improvements and regressions it brought go beyond this as well, but I won't go into them here.

The main reason I won't go into detail is that Storm, as a veteran of distributed stream computing (much like MapReduce), has fulfilled its historical mission well. Although it continues to evolve, it has gradually given way to its successors for various reasons.

In the previous article about Spark, I mentioned that Spark is a very ambitious project. For a general-purpose computing framework, stream computing is an important field it cannot afford to miss.

The most intuitive approach is to start from Spark's core abstraction, the RDD, and extend it to ingest streaming data, so that streaming can enjoy all the benefits of RDDs.

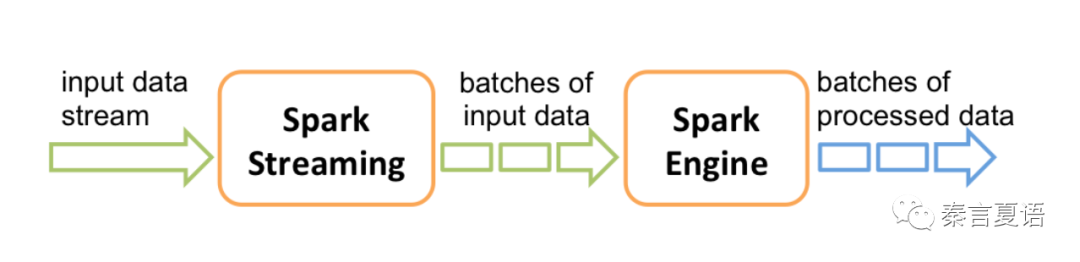

Spark put forward the concept of DStream (Discretized Stream). As shown in the figure above, a DStream is essentially a sequence of micro-batches, each of which is a small RDD.
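The idea of discretizing a stream can be sketched in plain Python: chop a timestamped stream into fixed-interval micro-batches, then run the same batch function on each one. In Spark Streaming the interval corresponds to the batch duration given to `StreamingContext`, and each batch would be an RDD; here plain lists stand in for RDDs, and `discretize` and `word_count` are hypothetical helpers.

```python
from collections import Counter


def discretize(events: list[tuple[float, str]], interval: float) -> list[list[str]]:
    """Split (timestamp, value) events into consecutive micro-batches of `interval` seconds.

    Assumes timestamps start at 0; an interval with no events yields an empty batch.
    """
    if not events:
        return []
    n_batches = int(max(t for t, _ in events) // interval) + 1
    batches: list[list[str]] = [[] for _ in range(n_batches)]
    for t, value in events:
        batches[int(t // interval)].append(value)
    return batches


def word_count(lines: list[str]) -> Counter:
    """An ordinary batch computation, applied unchanged to every micro-batch."""
    return Counter(word for line in lines for word in line.split())


events = [(0.2, "a b"), (0.7, "b"), (1.1, "a"), (3.5, "c a")]
batches = discretize(events, interval=1.0)
results = [word_count(batch) for batch in batches]
for i, result in enumerate(results):
    print(f"batch {i}: {dict(result)}")
```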

DStream naturally inherits the performance and availability benefits of RDDs. Its latency depends on the batch size: the smaller the batch, the closer it gets to true real-time processing (though it can never actually reach it, as I will discuss in subsequent articles).

On the other hand, in batch processing Spark has evolved from RDD to DataFrame/Dataset, which significantly improved both development efficiency and performance. It is natural to bring these benefits to the stream scenario as well.

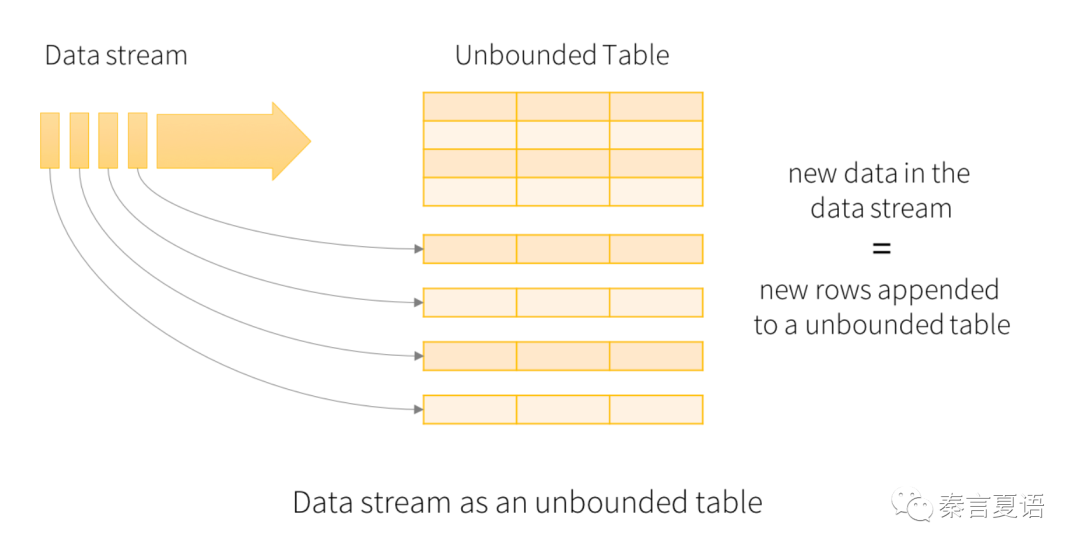

Then, there was Spark Structured Streaming.

From the name and the figure above, it is easy to see that in moving from Spark Streaming to Spark Structured Streaming, DStream is essentially given structure and turned into a logical table. The main difference between this table and the tables we are familiar with is that it keeps growing as new data is appended incrementally.

With this key abstraction, we can manipulate streaming data with relational operators (and even SQL) and enjoy the performance optimization brought by the Spark SQL engine.
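The model of a table that keeps growing can be illustrated with Python's built-in `sqlite3`: newly arrived rows are appended, and the same SQL query is evaluated again over everything seen so far. This only shows the semantics. Spark does not rescan the whole table on each trigger; it keeps intermediate state and updates results incrementally. The table and query here are made up for illustration.

```python
import sqlite3

# An in-memory table standing in for the unbounded input table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE words (word TEXT)")

# One relational query, defined once, like a query over a streaming table.
QUERY = "SELECT word, COUNT(*) FROM words GROUP BY word ORDER BY word"


def append_and_query(new_words: list[str]) -> list[tuple[str, int]]:
    """Append newly arrived rows, then evaluate the same query over all rows so far."""
    conn.executemany("INSERT INTO words (word) VALUES (?)", [(w,) for w in new_words])
    return conn.execute(QUERY).fetchall()


first = append_and_query(["spark", "flink"])
second = append_and_query(["flink", "storm"])
print(first)
print(second)
```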

Everything works the same way as in batch processing, which is precisely the benefit of building stream computing on top of Spark's existing batch engine.

However, blessings and curses often come together. Spark's approach of building streams on top of batches has one major defect: its latency is not low enough.

No matter how small the batch is, a micro-batch is still a batch: each message has to wait for the other messages in the same batch to arrive before they are processed together. Nor can the batch simply be made tiny: when batches become very small, Spark's batch-oriented shuffle and checkpoint implementations make it difficult to guarantee stable low latency.
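A back-of-the-envelope sketch shows why micro-batching puts a floor under latency. Assuming messages arrive evenly and each micro-batch is processed only when its interval closes (with processing time itself ignored), a message waits on average about half the batch interval before processing can even start. The arrival pattern and intervals below are hypothetical.

```python
import math


def microbatch_waits(arrivals: list[float], interval: float) -> list[float]:
    """Seconds each message waits for its micro-batch to close.

    Batch k covers [k * interval, (k + 1) * interval) and is processed at its end.
    """
    return [(math.floor(t / interval) + 1) * interval - t for t in arrivals]


# Hypothetical load: one message per millisecond for 10 seconds.
arrivals = [i / 1000 for i in range(10_000)]

for interval in (1.0, 0.1, 0.01):
    waits = microbatch_waits(arrivals, interval)
    print(f"interval {interval:>5} s -> average wait {sum(waits) / len(waits):.4f} s")
```

Shrinking the interval lowers this wait, but every batch also pays fixed costs for scheduling, shuffle, and checkpointing, which is what makes very small batches unstable.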

As a result, Spark introduced a new concept and architecture called continuous processing. In essence, it is a continuously running execution mode, intended to provide millisecond-level latency and significantly reduce the roughly 100-millisecond latency of micro-batch mode. However, this architecture cannot leverage Spark's existing core capabilities, the changes it requires are too costly, and its relationship with the batch architecture is hard to reconcile. Therefore, after several years, it is still experimental and rarely used in production.

In addition, in terms of data consistency, the batch-based architecture makes it natural for Spark Streaming to support exactly-once semantics.
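One reason batches make exactly-once natural is that each batch has a stable ID. If the sink records which batch IDs it has already applied, replaying a batch after a failure becomes harmless. The Python sketch below uses a hypothetical `IdempotentSink`; in Structured Streaming, `foreachBatch` passes a `batchId` that can be used in a similar way, and a real sink would store the ID atomically together with the data.

```python
class IdempotentSink:
    """Hypothetical sink that remembers the highest batch ID it has applied."""

    def __init__(self) -> None:
        self.last_committed = -1
        self.total = 0

    def commit(self, batch_id: int, records: list[int]) -> bool:
        """Apply a batch once; return False if it is a replay that was already applied."""
        if batch_id <= self.last_committed:
            return False                # replayed batch: already applied, skip it
        self.total += sum(records)      # a real sink would write data and ID atomically
        self.last_committed = batch_id
        return True


batches = {0: [1, 2], 1: [3], 2: [4, 5]}
sink = IdempotentSink()
for bid in (0, 1):
    sink.commit(bid, batches[bid])

# Simulated failure: the engine restarts from batch 1, whose commit already landed.
applied = [sink.commit(bid, batches[bid]) for bid in (1, 2)]
print(applied, sink.total)
```

Batch 1 is skipped on replay, so the total reflects each record exactly once.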

Overall, it is hard to say that Spark's expansion into stream computing has been a clear success. Still, it is worth trying in scenarios with less demanding latency requirements.

Although Storm is a native streaming engine, it has evolved slowly; Spark Streaming is built on batch processing and has inherent defects. Both proved vulnerable to the fierce offensive of Apache Flink.

It can be said that Flink made full use of its late-comer advantage: it absorbed the experience of Storm and Spark Streaming and avoided their mistakes. As a result, it has become the de facto standard for stream computing.

Due to space limitations, we will not go into details here, but will briefly discuss from three aspects why Flink has become the king of stream computing.

1. Flink is a true stream computing engine.

Flink carries neither Spark's rich batch-processing legacy nor its historical baggage. Flink was created to provide large-scale stream computing, so it directly adopted a native streaming design similar to Storm's. This gives it excellent processing latency that meets the requirements of most application scenarios.

2. Flink quickly added support for the relational model.

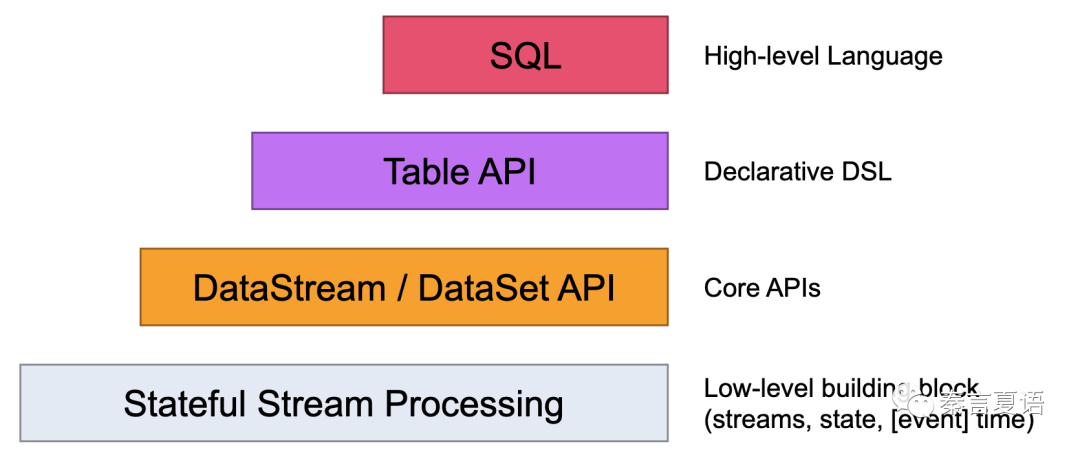

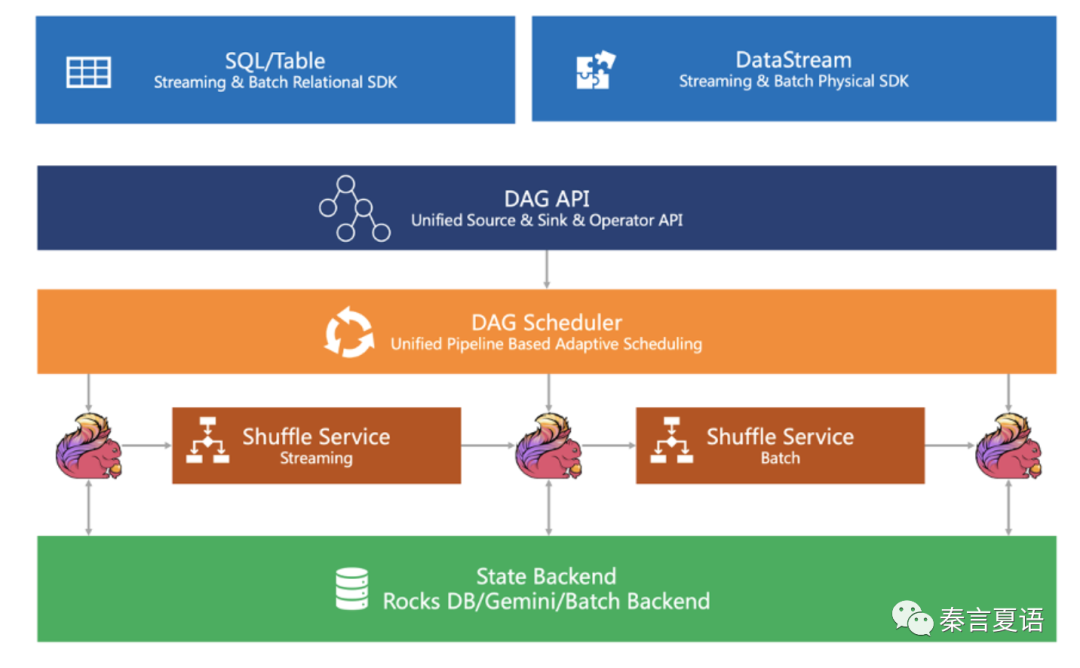

The figure above shows the different levels of APIs that Flink provides. Flink proposed and implemented its Table API and SQL faster than Storm did, which quickly lowered the cost of learning and using Flink and significantly expanded its application scenarios.

3. Flink has advanced concepts and implementations in stream-batch integration.

For a long time, batch processing and stream computing evolved along separate paths, until Spark made an ambitious attempt to unify them. However, Spark's achievements in stream computing never matched those in batch processing. As a result, users still have to pay twice to handle the same scenario in both stream and batch form.

From the beginning of its design, Flink has integrated stream and batch processing and has been realizing this original vision step by step. This also makes it the most promising and most closely watched project in general-purpose batch processing and stream computing.
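The core of stream-batch integration is treating a batch as a bounded stream, so that one pipeline definition serves both. Here is a toy Python sketch of that idea (not Flink's API; `word_counts` and `endless_lines` are hypothetical): the same generator consumes a finite list, where only the final result matters, and an infinite source, where results are read as they are produced.

```python
from collections import Counter
from itertools import count, islice
from typing import Iterable, Iterator


def word_counts(lines: Iterable[str]) -> Iterator[dict[str, int]]:
    """One pipeline definition: emit an updated count snapshot after every record."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.split())
        yield dict(counts)


# Batch: a bounded input; we keep only the final snapshot.
*_, batch_result = word_counts(["a b", "b c"])
print("batch:", batch_result)


def endless_lines() -> Iterator[str]:
    """Hypothetical unbounded source that never ends."""
    for i in count():
        yield "tick" if i % 2 else "tock"


# Stream: an unbounded input; we consume snapshots as they arrive (first 3 here).
stream_results = list(islice(word_counts(endless_lines()), 3))
for snapshot in stream_results:
    print("stream:", snapshot)
```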

The advantages of Flink are not limited to these three points, and we will discuss others as needed. For example, Flink also provides a reliable exactly-once guarantee, which is critical for data consistency.
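The core idea behind this kind of exactly-once state guarantee can be sketched in a few lines: periodically snapshot the source position and the operator state together, and on failure restore both and replay from that position. The Python below is a single-operator toy under that assumption, with a hypothetical `run_with_checkpoints`; Flink itself takes distributed snapshots using checkpoint barriers and relies on a replayable source, and end-to-end exactly-once additionally requires transactional or idempotent sinks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Checkpoint:
    offset: int  # next position to read in the replayable source
    state: int   # operator state (a running sum) consistent with that offset


def run_with_checkpoints(source: list[int], interval: int, fail_at: Optional[int]) -> int:
    """Sum `source`, snapshotting (offset, state) together every `interval` records.

    If `fail_at` is set, one crash is simulated right after that record is processed;
    the job then restores the last checkpoint and replays from its offset.
    """
    checkpoint = Checkpoint(offset=0, state=0)
    offset, state = 0, 0
    crashed = False
    while offset < len(source):
        state += source[offset]  # process one record
        offset += 1
        if fail_at is not None and not crashed and offset - 1 == fail_at:
            crashed = True
            offset, state = checkpoint.offset, checkpoint.state  # restore both together
            continue
        if offset % interval == 0:
            checkpoint = Checkpoint(offset, state)
    return state


print(run_with_checkpoints(list(range(1, 11)), interval=3, fail_at=7))
```

Because position and state are always restored as a pair, the replayed records are neither lost nor double-counted, and the result matches `sum(range(1, 11))`.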

In short, when you need to do large-scale real-time computing, Flink is the first option to consider.

That's all for this article. Besides throughput, we have finally addressed the other performance issue, latency, and now cover scenarios ranging from batch processing to stream computing.

In almost any scenario, people want to see data sooner and see its effects sooner, so stream computing is a natural pursuit.

However, implementing stream computing raises many problems that batch processing does not face. Until these problems are solved and stream and batch are fully unified, the two will coexist for a long time. Fortunately, the road ahead is clear.

In the subsequent articles, we will look at the problems faced by stream computing and the ins and outs of the integration of stream and batch.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!
